Unity Blend Modes

Many of you have probably heard of blend modes, which are available in most popular image and video editing programs. There they are an important content-creation tool and have long been an integral part of the workflow.

And what about games?

Suppose you need to use Color Dodge blending for a particle system, or the UI artist made beautiful graphics for the game interface, but some of its elements use some kind of Soft Light. Or maybe you needed to subject a three-dimensional object to Divide-mixing to get the effect straight from Lynch's films?

In this article we will consider the principle of operation of popular mixing modes and try to recreate their effect on the Unity game engine as accurately as possible.

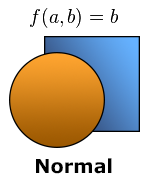

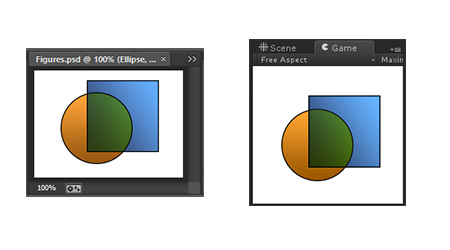

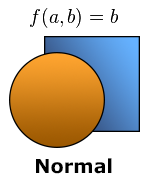

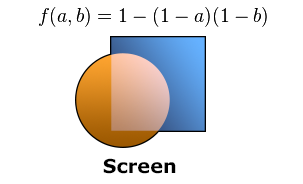

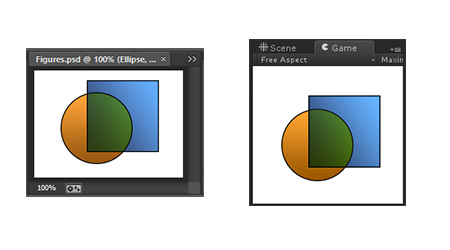

First, let's figure out what exactly we need to do. Take for example two graphic elements and arrange them so that one overlaps the other:

In the normal (Normal) blending mode, the color of each pixel in the lower layer ( a ) is completely replaced by the color of the pixel in the layer that “overlaps” it ( b ). Everything is trivial here: in this way the majority of graphic objects in games are “mixed”.

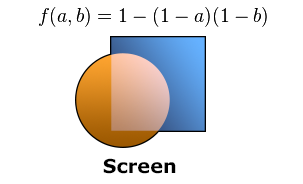

In Screen mode, the pixel colors of both layers are inverted, multiplied, and then inverted again. We implement this algorithm in Cg :

Please note that in the alpha component of the resulting color ( ra ) we pass the alpha value of the top layer ( ba ) to maintain the ability to independently control the transparency level of the material.

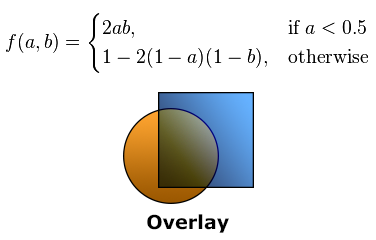

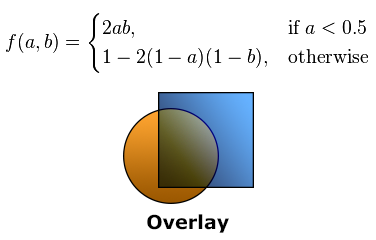

The Overlay algorithm works conditionally: for "dark" sections, colors are multiplied, and for "light" sections, an analog of the Screen mode is used.

The Darken blending mode compares the values of each of the three color channels for two layers and leaves the one that is “darker”.

Most of the remaining modes operate according to similar schemes. If you are interested, an implementation of another 18 mixing algorithms on Cg can be found here: gist.github.com/Elringus/d21c8b0f87616ede9014

So, our general problem can be formulated as follows: for each pixel of the material of the object ( b ) find a pixel that located “below it” ( a ) and, using the selected algorithm, “mix” them.

Having received all the necessary mixing algorithms, it may seem that the matter is small: you just need to get a - the color of the pixels that are located "below" our object. However, it was this stage that turned out to be the most problematic in practical implementation.

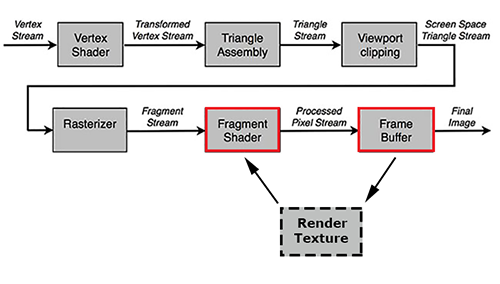

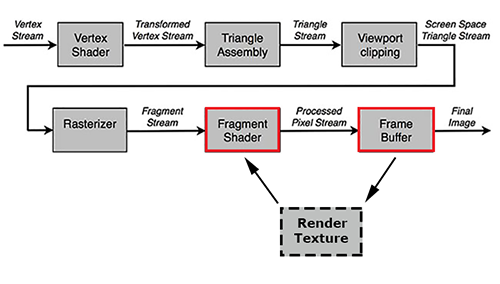

The fact is that it is impossible to access the contents of the frame buffer, in which the same “back layer” is located, during the execution of the fragment shader due to the logic of the rendering pipeline:

Final The image (final image) is formed after the execution of the fragment shader; accordingly, we cannot directly obtain it during the execution of the Cg program. So, you need to look for workarounds.

In fact, the need for data on the final image as part of a fragment shader arises quite often. The implementation of most post-effects (post processing effects), for example, is unthinkable without access to the “final picture”. For such cases, there is a so-called render to texture: data from the frame buffer is copied to a special texture, from which it is then read the next time the fragment shader is executed:

In Unity, there are several ways to work with the render texture. In our case, the most appropriate is the use of GabPass - a special type of "pass" (pass), which captures the contents of the screen into the texture where the object will be drawn. Just what we need!

Create a simple shader for UI-graphics, add GrabPass to it and return the result of color mixing using the Darken algorithm from the fragment function:

To evaluate the result, we take the same textures that we used in the graphics editor during the demonstration of the blending modes:

As you can see in the illustration, the results of rendering UI graphics on Unity and the document in Photoshop are identical.

We could stop here if it were not for one “but”: rendering into a texture is a rather time-consuming operation. Even on a mid-range PC, using more than 100 of these operations at the same time leads to a noticeable reduction in frame rate. The situation is aggravated by the fact that the speed of GrabPass is inversely dependent on the resolution of the display. Imagine what will be the performance if you perform a similar procedure on any iPad with an ultra-high resolution display? In my case, even a pair of UI objects with “unconventional” mixing in an empty scene led to FPS falling below 20.

One optimization suggests itself: why not use a single GrabPass? The original image within the frame remains unchanged, which means you can “take” it once and then use it for all subsequent mixing operations.

Unity provides us with a convenient way to implement our plan. It is enough to pass a line with the name of the variable to the GrabPass construct in which we want to store the “general” render texture:

Now, any material instance using this shader will receive information from the general texture renderer and will not execute the expensive GrabPass if it has already been executed by one of the instances. Thus, we get the opportunity to use a large number of mixing operations at a time without serious performance problems.

Unfortunately, this solution has one significant drawback: since different objects use the same information about the image of the “back layer”, this same layer becomes identical for them. That is, such objects "do not see" each other and do not take this information into account when mixing.

The problem becomes apparent if two objects that use blending are “superimposed” on top of each other:

In addition, even one GrabPass can be too "expensive" for most mobile devices, which means that you need to look for alternative approaches.

Since using GrabPass in any form is too costly, let's try to do without it. One of the options: try to change the blending mode, which is executed after the fragment shader (as part of the Unity visualization pipeline):

This stage is mainly used for processing translucent objects and the possibilities for modifying it are very limited - you can’t insert Cg instructions there. It is possible only with the help of a set of key phrases to configure how the color obtained from the fragment shader should (and should at all) interact with the color that is "behind" it.

The operation is defined by the following construction:

The logic is that the source color (obtained from the fragment shader) is multiplied by the value that the first operand returns ( SrcFactor ), the target color (the color of the "back" layer) is multiplied by the second operand ( DstFactor ) and the resulting values are added. The list of operands, in turn, is rather limited: you can operate with units, zeros, source and target colors, as well as the results of their inversion.

The optional BlendOp command expands the capabilities somewhat. It allows replacing the addition of the result of two operands by subtraction, taking a minimum or maximum.

With a little imagination, I was able to implement the following mixing algorithms:

We modify our shader for mixing UI graphics in Darken mode to use BlendOp:

For demonstration, we will use the same textures:

The problem is obvious: due to the fact that we use the blending stage “for our needs”, alpha blending is nowhere to be done and the transparency of objects is simply ignored. On the other hand, opaque objects mix correctly and without loss in performance. So, if you need to use one of the modes that can be recreated using the Blend construction and the object does not have transparent areas, this is perhaps the best option.

I mentioned earlier that it is not possible to access the framebuffer from the fragment shader. In fact this is not true.

In 2013, the EXT_shader_framebuffer_fetch function was added to the OpenGL ES 2.0 specification , which allows access to the frame buffer data from the fragment shader. And a few months ago, in Unity 4.6.3 release, support for this function from Cg was announced.

We modify our shader to use Framebuffer Fetch:

Perfect. It would seem that what else is needed? No unnecessary operations, maximum performance, you can implement any mixing logic ... Only here is the illustration above - this is a fragment of a screenshot taken from iPad Air. But, for example, in the Unity editor, our shader will simply refuse to work.

The problem is that support for the OpenGL ES specification is fully implemented only on iOS devices. On other platforms (even if their graphics subsystem uses the OpenGL ES API), this function may not work, so you can’t rely on cross-platform functionality.

We examined four implementations of blending modes on the Unity game engine:

We could not find a single universal and cross-platform solution, however, a combination of the presented options will allow us to use “mixing” in most cases.

In conclusion, I want to offer a video demonstrating some blending modes applied to particle effects, GUI elements, three-dimensional objects, and sprites in Unity:

Also, I’m risking (AppEngine - you can stand it, I know!) To publish a link to the WebGL assembly, where you can interactively experiment with different blending modes .

Thanks for attention!

And what about games?

Suppose you need Color Dodge blending for a particle system, or a UI artist has drawn beautiful graphics for the game interface, but some of the elements rely on Soft Light. Or perhaps you need to apply Divide blending to a three-dimensional object to get an effect straight out of a Lynch film?

In this article we will look at how popular blend modes work and try to recreate their effect in the Unity game engine as accurately as possible.

Mixing Algorithms

First, let's figure out what exactly we need to do. Take, for example, two graphic elements and arrange them so that one overlaps the other:

In Normal blending mode, the color of each pixel in the lower layer (a) is completely replaced by the color of the pixel in the layer that overlaps it (b). This is trivial: it is how most graphic objects in games are "blended".

In Screen mode, the pixel colors of both layers are inverted, multiplied, and then the result is inverted again. Let's implement this algorithm in Cg:

    fixed4 Screen (fixed4 a, fixed4 b)
    {
        fixed4 r = 1.0 - (1.0 - a) * (1.0 - b);
        r.a = b.a;
        return r;
    }

Note that we pass the alpha value of the top layer (b.a) to the alpha component of the resulting color (r.a), so that the transparency of the material can still be controlled independently.

The Overlay algorithm works conditionally: in "dark" areas the colors are multiplied, and in "light" areas an analog of Screen mode is used.

    fixed4 Overlay (fixed4 a, fixed4 b)
    {
        fixed4 r = a < .5 ? 2.0 * a * b : 1.0 - 2.0 * (1.0 - a) * (1.0 - b);
        r.a = b.a;
        return r;
    }

The Darken blending mode compares the values of each of the three color channels for two layers and leaves the one that is “darker”.

    fixed4 Darken (fixed4 a, fixed4 b)
    {
        fixed4 r = min(a, b);
        r.a = b.a;
        return r;
    }

Most of the remaining modes follow similar schemes. If you are interested, Cg implementations of another 18 blending algorithms can be found here: gist.github.com/Elringus/d21c8b0f87616ede9014
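As a rough illustration of how similar the remaining modes are, here is a minimal sketch of a few of them in the same style as the functions above. These are the commonly cited formulas, not code taken from the linked gist, so treat the exact definitions as assumptions to check against your reference editor. The functions are meant to be placed inside a CGPROGRAM block.

```cg
// a is the color of the lower layer, b is the color of the upper layer.

// Multiply: channel-wise product of the two layers.
fixed4 Multiply (fixed4 a, fixed4 b)
{
    fixed4 r = a * b;
    r.a = b.a;
    return r;
}

// Lighten: the counterpart of Darken, keeps the brighter value per channel.
fixed4 Lighten (fixed4 a, fixed4 b)
{
    fixed4 r = max(a, b);
    r.a = b.a;
    return r;
}

// Linear Dodge (Add): sum of the layers, clamped to the [0, 1] range.
fixed4 LinearDodge (fixed4 a, fixed4 b)
{
    fixed4 r = saturate(a + b);
    r.a = b.a;
    return r;
}

// Linear Burn: sum of the layers minus one, clamped to the [0, 1] range.
fixed4 LinearBurn (fixed4 a, fixed4 b)
{
    fixed4 r = saturate(a + b - 1.0);
    r.a = b.a;
    return r;
}

// Difference: absolute per-channel difference between the layers.
fixed4 Difference (fixed4 a, fixed4 b)
{
    fixed4 r = abs(a - b);
    r.a = b.a;
    return r;
}
```

Each function keeps the alpha of the upper layer, the same convention the article uses for Screen, Overlay and Darken.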

So, the general problem can be stated as follows: for each pixel of the object's material (b), find the pixel located "below" it (a) and blend the two using the selected algorithm.

Implementation Using GrabPass

With all the necessary blending algorithms in hand, it may seem that little remains to be done: we just need to obtain a, the color of the pixels located "below" our object. However, this step turned out to be the most problematic in practice.

The fact is that the contents of the frame buffer, which holds this "back layer", cannot be accessed while the fragment shader is running, because of how the rendering pipeline works:

The final image is formed after the fragment shader has executed, so we cannot read it directly from within the Cg program. We therefore need to look for workarounds.

In fact, a fragment shader needs data about the final image quite often. Most post-processing effects, for example, are unthinkable without access to the "final picture". For such cases there is so-called render to texture: data from the frame buffer is copied into a special texture, which is then read the next time the fragment shader runs:

Unity offers several ways to work with render textures. In our case the most suitable is GrabPass, a special type of pass that captures the contents of the screen, at the location where the object is about to be drawn, into a texture. Just what we need!

Create a simple shader for UI graphics, add a GrabPass to it, and return the result of blending the colors with the Darken algorithm from the fragment function:

GrabDarken.shader

    Shader "Custom/GrabDarken"
    {
        Properties
        {
            _MainTex ("Sprite Texture", 2D) = "white" {}
            _Color ("Tint", Color) = (1,1,1,1)
        }

        SubShader
        {
            Tags
            {
                "Queue" = "Transparent"
                "RenderType" = "Transparent"
            }

            Blend SrcAlpha OneMinusSrcAlpha

            GrabPass { }

            Pass
            {
                CGPROGRAM
                #include "UnityCG.cginc"

                #pragma vertex ComputeVertex
                #pragma fragment ComputeFragment

                sampler2D _MainTex;
                sampler2D _GrabTexture;
                fixed4 _Color;

                struct VertexInput
                {
                    float4 vertex : POSITION;
                    float4 color : COLOR;
                    float2 texcoord : TEXCOORD0;
                };

                struct VertexOutput
                {
                    float4 vertex : SV_POSITION;
                    fixed4 color : COLOR;
                    half2 texcoord : TEXCOORD0;
                    float4 screenPos : TEXCOORD1;
                };

                VertexOutput ComputeVertex (VertexInput vertexInput)
                {
                    VertexOutput vertexOutput;

                    vertexOutput.vertex = mul(UNITY_MATRIX_MVP, vertexInput.vertex);
                    vertexOutput.screenPos = vertexOutput.vertex;
                    vertexOutput.texcoord = vertexInput.texcoord;
                    vertexOutput.color = vertexInput.color * _Color;

                    return vertexOutput;
                }

                fixed4 Darken (fixed4 a, fixed4 b)
                {
                    fixed4 r = min(a, b);
                    r.a = b.a;
                    return r;
                }

                fixed4 ComputeFragment (VertexOutput vertexOutput) : SV_Target
                {
                    half4 color = tex2D(_MainTex, vertexOutput.texcoord) * vertexOutput.color;

                    // Find the coordinates of the render-texture pixel
                    // that lies "under" the current pixel of the object.
                    float2 grabTexcoord = vertexOutput.screenPos.xy / vertexOutput.screenPos.w;
                    grabTexcoord.x = (grabTexcoord.x + 1.0) * .5;
                    grabTexcoord.y = (grabTexcoord.y + 1.0) * .5;

                    // Depending on the platform, the V axis
                    // of the texture coordinate system may be flipped.
                    #if UNITY_UV_STARTS_AT_TOP
                    grabTexcoord.y = 1.0 - grabTexcoord.y;
                    #endif

                    fixed4 grabColor = tex2D(_GrabTexture, grabTexcoord);

                    return Darken(grabColor, color);
                }
                ENDCG
            }
        }

        Fallback "UI/Default"
    }
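The manual remapping from clip space to texture coordinates in the fragment function can also be delegated to helpers from UnityCG.cginc. The sketch below is a variant of the shader above that uses ComputeGrabScreenPos and tex2Dproj instead. The shader name Custom/GrabDarkenHelper and the grabPos field are made up for this example, and the output has not been compared against the original on every platform, so treat it as a starting point rather than a drop-in replacement.

```cg
Shader "Custom/GrabDarkenHelper" // hypothetical name, not from the article
{
    Properties
    {
        _MainTex ("Sprite Texture", 2D) = "white" {}
        _Color ("Tint", Color) = (1,1,1,1)
    }

    SubShader
    {
        Tags { "Queue" = "Transparent" "RenderType" = "Transparent" }

        Blend SrcAlpha OneMinusSrcAlpha

        GrabPass { }

        Pass
        {
            CGPROGRAM
            #include "UnityCG.cginc"

            #pragma vertex ComputeVertex
            #pragma fragment ComputeFragment

            sampler2D _MainTex;
            sampler2D _GrabTexture;
            fixed4 _Color;

            struct VertexInput
            {
                float4 vertex : POSITION;
                float4 color : COLOR;
                float2 texcoord : TEXCOORD0;
            };

            struct VertexOutput
            {
                float4 vertex : SV_POSITION;
                fixed4 color : COLOR;
                half2 texcoord : TEXCOORD0;
                float4 grabPos : TEXCOORD1; // hypothetical field name
            };

            VertexOutput ComputeVertex (VertexInput vertexInput)
            {
                VertexOutput vertexOutput;
                vertexOutput.vertex = mul(UNITY_MATRIX_MVP, vertexInput.vertex);
                // Remaps the clip-space position to grab-texture coordinates
                // (still undivided by w) and accounts for a flipped V axis.
                vertexOutput.grabPos = ComputeGrabScreenPos(vertexOutput.vertex);
                vertexOutput.texcoord = vertexInput.texcoord;
                vertexOutput.color = vertexInput.color * _Color;
                return vertexOutput;
            }

            fixed4 Darken (fixed4 a, fixed4 b)
            {
                fixed4 r = min(a, b);
                r.a = b.a;
                return r;
            }

            fixed4 ComputeFragment (VertexOutput vertexOutput) : SV_Target
            {
                half4 color = tex2D(_MainTex, vertexOutput.texcoord) * vertexOutput.color;
                // tex2Dproj performs the perspective divide before sampling.
                fixed4 grabColor = tex2Dproj(_GrabTexture, UNITY_PROJ_COORD(vertexOutput.grabPos));
                return Darken(grabColor, color);
            }
            ENDCG
        }
    }

    Fallback "UI/Default"
}
```

The blending logic is unchanged; only the coordinate computation moves from the fragment function into the vertex function and the built-in helper.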

To evaluate the result, we take the same textures that we used in the graphics editor when demonstrating the blend modes:

As the illustration shows, the UI graphics rendered in Unity and the document in Photoshop look identical.

We could stop here if it were not for one "but": rendering to a texture is a rather expensive operation. Even on a mid-range PC, more than 100 such operations at once noticeably reduce the frame rate. To make matters worse, GrabPass gets slower as the display resolution grows. Imagine the performance of such a procedure on an iPad with an ultra-high-resolution display. In my case, even a couple of UI objects with "unconventional" blending in an empty scene dropped the frame rate below 20 FPS.

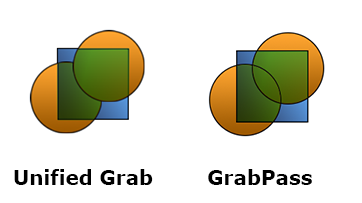

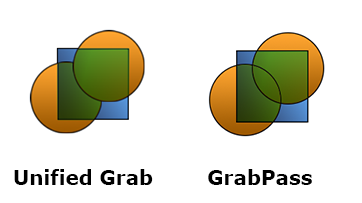

Implementation with Unified Grab

One optimization suggests itself: why not use a single GrabPass? The original image within the frame remains unchanged, which means you can “take” it once and then use it for all subsequent mixing operations.

Unity provides a convenient way to do this. It is enough to pass the GrabPass construct a string with the name of the variable in which we want to store the "shared" render texture:

    GrabPass { "_SharedGrabTexture" }

Now every material instance that uses this shader reads from the shared render texture and does not run the expensive GrabPass if one of the other instances has already done so. This lets us use a large number of blending operations at once without serious performance problems.
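For the shader to actually read the shared texture, the sampler has to be declared under the same name that was passed to GrabPass. Here is a minimal sketch of how the pieces might fit together, this time with Screen blending. The shader name Custom/SharedGrabScreen and the grabPos field are invented for the example, and ComputeGrabScreenPos and tex2Dproj from UnityCG.cginc are used in place of the manual coordinate remapping.

```cg
Shader "Custom/SharedGrabScreen" // hypothetical name, not from the article
{
    Properties
    {
        _MainTex ("Sprite Texture", 2D) = "white" {}
        _Color ("Tint", Color) = (1,1,1,1)
    }

    SubShader
    {
        Tags { "Queue" = "Transparent" "RenderType" = "Transparent" }

        Blend SrcAlpha OneMinusSrcAlpha

        // Named grab: executed once per frame and shared by all objects
        // whose shaders request a grab texture with this name.
        GrabPass { "_SharedGrabTexture" }

        Pass
        {
            CGPROGRAM
            #include "UnityCG.cginc"

            #pragma vertex ComputeVertex
            #pragma fragment ComputeFragment

            sampler2D _MainTex;
            sampler2D _SharedGrabTexture; // must match the name given to GrabPass
            fixed4 _Color;

            struct VertexInput
            {
                float4 vertex : POSITION;
                float4 color : COLOR;
                float2 texcoord : TEXCOORD0;
            };

            struct VertexOutput
            {
                float4 vertex : SV_POSITION;
                fixed4 color : COLOR;
                half2 texcoord : TEXCOORD0;
                float4 grabPos : TEXCOORD1; // hypothetical field name
            };

            VertexOutput ComputeVertex (VertexInput vertexInput)
            {
                VertexOutput vertexOutput;
                vertexOutput.vertex = mul(UNITY_MATRIX_MVP, vertexInput.vertex);
                // Helper from UnityCG.cginc: clip space to grab-texture space.
                vertexOutput.grabPos = ComputeGrabScreenPos(vertexOutput.vertex);
                vertexOutput.texcoord = vertexInput.texcoord;
                vertexOutput.color = vertexInput.color * _Color;
                return vertexOutput;
            }

            fixed4 Screen (fixed4 a, fixed4 b)
            {
                fixed4 r = 1.0 - (1.0 - a) * (1.0 - b);
                r.a = b.a;
                return r;
            }

            fixed4 ComputeFragment (VertexOutput vertexOutput) : SV_Target
            {
                half4 color = tex2D(_MainTex, vertexOutput.texcoord) * vertexOutput.color;
                fixed4 grabColor = tex2Dproj(_SharedGrabTexture, UNITY_PROJ_COORD(vertexOutput.grabPos));
                return Screen(grabColor, color);
            }
            ENDCG
        }
    }

    Fallback "UI/Default"
}
```

If several shaders with different blending functions should share one grab, they would all need to use the same texture name in both the GrabPass and the sampler declaration.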

Unfortunately, this solution has a significant drawback: since the objects all use the same image of the "back layer", that layer is identical for all of them. In other words, such objects "do not see" each other and are not taken into account when one is blended over another.

The problem becomes apparent when two objects that use blending are placed on top of each other:

In addition, even a single GrabPass can be too expensive for most mobile devices, which means we need to look for alternative approaches.

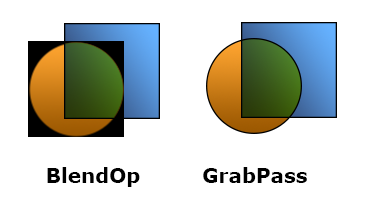

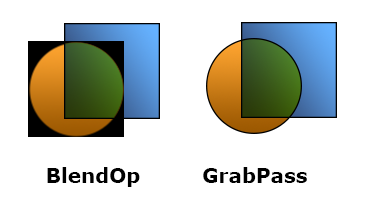

Implementation with BlendOp

Since GrabPass in any form is too costly, let's try to do without it. One option is to change the blending stage, which runs after the fragment shader (as part of Unity's rendering pipeline):

This stage is mainly used for rendering translucent objects, and the options for modifying it are very limited: you cannot insert Cg instructions there. All you can do is use a set of keywords to configure how (and whether at all) the color produced by the fragment shader interacts with the color "behind" it.

The operation is defined by the following construct:

    Blend SrcFactor DstFactor

The logic is as follows: the source color (produced by the fragment shader) is multiplied by the first factor (SrcFactor), the destination color (the color of the "back" layer) is multiplied by the second factor (DstFactor), and the two products are added. The list of factors is rather limited: ones, zeros, the source and destination colors and alpha values, and their inversions.

The optional BlendOp command expands the possibilities somewhat. Instead of adding the two products, it lets you subtract them or take their minimum or maximum.
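To make the arithmetic concrete, the blending stage can be imagined as the following Cg functions. This is only a mental model: the real stage is fixed-function hardware configured by the Blend and BlendOp commands, and the function names here are invented for the illustration.

```cg
// src: color returned by the fragment shader.
// dst: color already stored in the frame buffer.
// srcFactor, dstFactor: values selected by "Blend SrcFactor DstFactor".
// saturate() models the clamping of a typical 8-bit render target
// (an assumption; floating-point targets are not clamped).

// BlendOp Add (the default): the two products are added.
fixed4 BlendOpAdd (fixed4 src, fixed4 dst, fixed4 srcFactor, fixed4 dstFactor)
{
    return saturate(src * srcFactor + dst * dstFactor);
}

// BlendOp Sub: the destination product is subtracted from the source product.
fixed4 BlendOpSub (fixed4 src, fixed4 dst, fixed4 srcFactor, fixed4 dstFactor)
{
    return saturate(src * srcFactor - dst * dstFactor);
}

// BlendOp RevSub: the source product is subtracted from the destination product.
fixed4 BlendOpRevSub (fixed4 src, fixed4 dst, fixed4 srcFactor, fixed4 dstFactor)
{
    return saturate(dst * dstFactor - src * srcFactor);
}

// BlendOp Min, shown for "Blend One One" only, where both colors
// enter the comparison unchanged.
fixed4 BlendOpMin (fixed4 src, fixed4 dst)
{
    return min(src, dst);
}

// BlendOp Max, shown for "Blend One One" only.
fixed4 BlendOpMax (fixed4 src, fixed4 dst)
{
    return max(src, dst);
}
```

In this model, classic alpha blending (Blend SrcAlpha OneMinusSrcAlpha) corresponds to BlendOpAdd(src, dst, src.aaaa, 1.0 - src.aaaa), and BlendOp Min with Blend One One gives min(src, dst), which is exactly the Darken formula.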

With a little imagination, I was able to implement the following mixing algorithms:

- Darken: BlendOp Min with Blend One One

- Lighten: BlendOp Max with Blend One One

- Linear Burn: BlendOp RevSub with Blend One One

- Linear Dodge: Blend One One

- Multiply: Blend DstColor OneMinusSrcAlpha

We modify our shader for mixing UI graphics in Darken mode to use BlendOp:

BlendOpDarken.shader

    Shader "Custom/BlendOpDarken"
    {
        Properties
        {
            _MainTex ("Sprite Texture", 2D) = "white" {}
            _Color ("Tint", Color) = (1,1,1,1)
        }

        SubShader
        {
            Tags
            {
                "Queue" = "Transparent"
                "RenderType" = "Transparent"
            }

            BlendOp Min
            Blend One One

            Pass
            {
                CGPROGRAM
                #include "UnityCG.cginc"

                #pragma vertex ComputeVertex
                #pragma fragment ComputeFragment

                sampler2D _MainTex;
                fixed4 _Color;

                struct VertexInput
                {
                    float4 vertex : POSITION;
                    float4 color : COLOR;
                    float2 texcoord : TEXCOORD0;
                };

                struct VertexOutput
                {
                    float4 vertex : SV_POSITION;
                    fixed4 color : COLOR;
                    half2 texcoord : TEXCOORD0;
                };

                VertexOutput ComputeVertex (VertexInput vertexInput)
                {
                    VertexOutput vertexOutput;

                    vertexOutput.vertex = mul(UNITY_MATRIX_MVP, vertexInput.vertex);
                    vertexOutput.texcoord = vertexInput.texcoord;
                    vertexOutput.color = vertexInput.color * _Color;

                    return vertexOutput;
                }

                fixed4 ComputeFragment (VertexOutput vertexOutput) : SV_Target
                {
                    return tex2D(_MainTex, vertexOutput.texcoord) * vertexOutput.color;
                }
                ENDCG
            }
        }

        Fallback "UI/Default"
    }

For the demonstration we use the same textures:

The problem is obvious: because we have taken over the blending stage for our own needs, there is nowhere left to perform alpha blending, and the transparency of objects is simply ignored. On the other hand, opaque objects blend correctly and at no performance cost. So, if the mode you need can be recreated with the Blend construct and the object has no transparent areas, this is perhaps the best option.

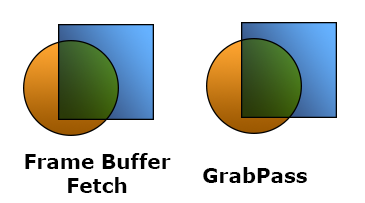

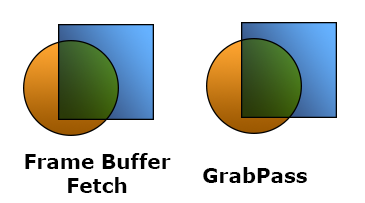

Implementation with Framebuffer Fetch

Earlier I said that the frame buffer cannot be accessed from the fragment shader. Strictly speaking, that is not true.

In 2013 the EXT_shader_framebuffer_fetch extension for OpenGL ES 2.0 appeared, which allows frame buffer data to be read from the fragment shader. And a few months ago, the Unity 4.6.3 release announced support for this feature in Cg.

We modify our shader to use Framebuffer Fetch:

FrameBufferFetchDarken.shader

    Shader "Custom/FrameBufferFetchDarken"
    {
        Properties
        {
            _MainTex ("Sprite Texture", 2D) = "white" {}
            _Color ("Tint", Color) = (1,1,1,1)
        }

        SubShader
        {
            Tags
            {
                "Queue" = "Transparent"
                "RenderType" = "Transparent"
            }

            Blend SrcAlpha OneMinusSrcAlpha

            Pass
            {
                CGPROGRAM
                #include "UnityCG.cginc"

                #pragma vertex ComputeVertex
                #pragma fragment ComputeFragment

                sampler2D _MainTex;
                fixed4 _Color;

                struct VertexInput
                {
                    float4 vertex : POSITION;
                    float4 color : COLOR;
                    float2 texcoord : TEXCOORD0;
                };

                struct VertexOutput
                {
                    float4 vertex : SV_POSITION;
                    fixed4 color : COLOR;
                    half2 texcoord : TEXCOORD0;
                };

                VertexOutput ComputeVertex (VertexInput vertexInput)
                {
                    VertexOutput vertexOutput;

                    vertexOutput.vertex = mul(UNITY_MATRIX_MVP, vertexInput.vertex);
                    vertexOutput.texcoord = vertexInput.texcoord;
                    vertexOutput.color = vertexInput.color * _Color;

                    return vertexOutput;
                }

                fixed4 Darken (fixed4 a, fixed4 b)
                {
                    fixed4 r = min(a, b);
                    r.a = b.a;
                    return r;
                }

                fixed4 ComputeFragment (VertexOutput vertexOutput
                    #ifdef UNITY_FRAMEBUFFER_FETCH_AVAILABLE
                    , inout fixed4 fetchColor : COLOR0
                    #endif
                    ) : SV_Target
                {
                    half4 color = tex2D(_MainTex, vertexOutput.texcoord) * vertexOutput.color;

                    #ifdef UNITY_FRAMEBUFFER_FETCH_AVAILABLE
                    fixed4 grabColor = fetchColor;
                    #else
                    fixed4 grabColor = fixed4(1, 1, 1, 1);
                    #endif

                    return Darken(grabColor, color);
                }
                ENDCG
            }
        }

        Fallback "UI/Default"
    }

Perfect. What more could one want? No unnecessary operations, maximum performance, and any blending logic can be implemented... Except that the illustration above is a fragment of a screenshot taken on an iPad Air. In the Unity editor, for example, our shader simply refuses to work.

The problem is that this OpenGL ES extension is fully supported only on iOS devices. On other platforms (even if their graphics subsystem uses the OpenGL ES API) the feature may not work, so cross-platform support cannot be relied on.

Conclusion

We examined four implementations of blend modes in the Unity game engine:

- GrabPass is the most resource-intensive, but reproduces all blend modes most faithfully;

- Unified Grab is an optimization of GrabPass; it performs much better when several blending operations run at once, but rules out blending such objects with each other;

- BlendOp is as fast as it gets, but allows only a limited number of modes and does not support translucent materials;

- Framebuffer Fetch is just as fast and reproduces all modes correctly, but can only be used on devices running iOS.

We could not find a single universal, cross-platform solution; however, combining the options presented here makes blending possible in most cases.

In conclusion, here is a video demonstrating some blend modes applied to particle effects, GUI elements, three-dimensional objects, and sprites in Unity:

I will also take the risk (AppEngine, you can handle it, I know!) of publishing a link to the WebGL build, where you can experiment interactively with different blend modes.

Thanks for your attention!