Under the hood of the Hakeslet Educational Project

Hello, Habr!

In a previous article, I talked about the new version of the Hexlet educational project . In the vote, you decided that the next article will be about the technical implementation of the platform.

Let me remind you, Huxlet

Is a platform for creating hands-on programming lessons in a true development environment. By a true development environment, we mean a full machine connected to the network. This important detail distinguishes Hakeslet from other educational projects (for example, Codecademy or CodeSchool) - we do not have simulators, all for real. This allows you to train and learn not only programming, but also working with databases, servers, networks, frameworks and so on. In general, if it runs on a Unix machine, it can be trained on a Hexlet. At the same time, realizing it or not, users use Test-Driven Development (TDD), because their decisions are checked by unit tests.

In this post I will talk about the architecture of the Hexlet platform and the tools that we use. About how to create practically lessons on this platform - in the next article.

Almost the entire backend is written in Rails. Everything runs on Amazon Web Services (AWS). Initially, we tried not to get attached to the AWS infrastructure much, but gradually began to use more of their services. In RDS (Relational Database Service), PostgreSQL (main base) and Redis are spinning. Thanks to it, we can not worry about backups, replication, updating - everything works automatically. Also in RDS we use automatic failover - multiAZ. In the event of a fall of the main machine, the synchronous replica automatically rises in another availability zone, and within a couple of minutes the DNS record is mapped to the new IP address.

SQS (Simple Queue Service) for queuing. All mail is sent through SES, domains live in Route53. Amazon Simple Notification Service (SNS) sends message delivery status messages to our SQS queue. Pictures and files are stored in S3. Recently, we have been using Cloudfront, a CDN from Amazon.

The cornerstone of the entire platform is Docker .

Each service works in our container. One container = one service. Most container images are pre-made images from tutum.co . We store the repository for our application in the Docker Registry. For staging, the image is collected automatically when committing to the Dockerfile. The code itself is stored on Github. For production, we collect images through Ansible on a separate server. The build takes a considerable time, 20-60 minutes depending on the conditions, therefore the option “quick fix production” is not possible. But it turned out this is not a problem, on the contrary - disciplines. When something goes wrong when deploying to production, we just kill one container and the previous one. Our database grows only horizontally (which is typical for projects with, khm-khm, good architecture), which allows us to use the base with different versions of the code and not get conflicts. So rollback is almost always a simple replacement for a version of code.

At first, for deploying we used Capistrano, but in the end we abandoned it in favor of Ansible. A bit unusual, but Ansible just delivers configs to a remote server and runs upstart, which in turn already updates the images. In this scheme, we do not need to install anything special on the server; for Ansible, we only need ssh access. Tags are used for versioning (v64, v65 and so on), and deployment to staging always uses the latest version of the code.

By the way, we love Ansible so much that we made a practical course on it - “Ansible: Introduction” .

A big plus - locally in the development we use almost identical Ansible playbooks, as for production. So the infrastructure runs locally as much as possible, which minimizes errors such as “but it worked on the LAN”. As a result, the deployment process looks like this: a new commit in Dockerfile in github -> launch a new build in docker registry -> launch Ansible playbook -> updated configs on the server -> launch upstart -> get new images.

We also use the Amazonian balancer, and in the case of the habraeffect, we can raise additional cars in 10-20 minutes. An important condition of such a scheme is that the final web servers do not store stateless state, they do not store any data. This allows you to quickly scale.

We love Amazon too, about Route53 (domain management / DNS) and about the balancer, we have lessons in the "Distributed Systems" cycle .

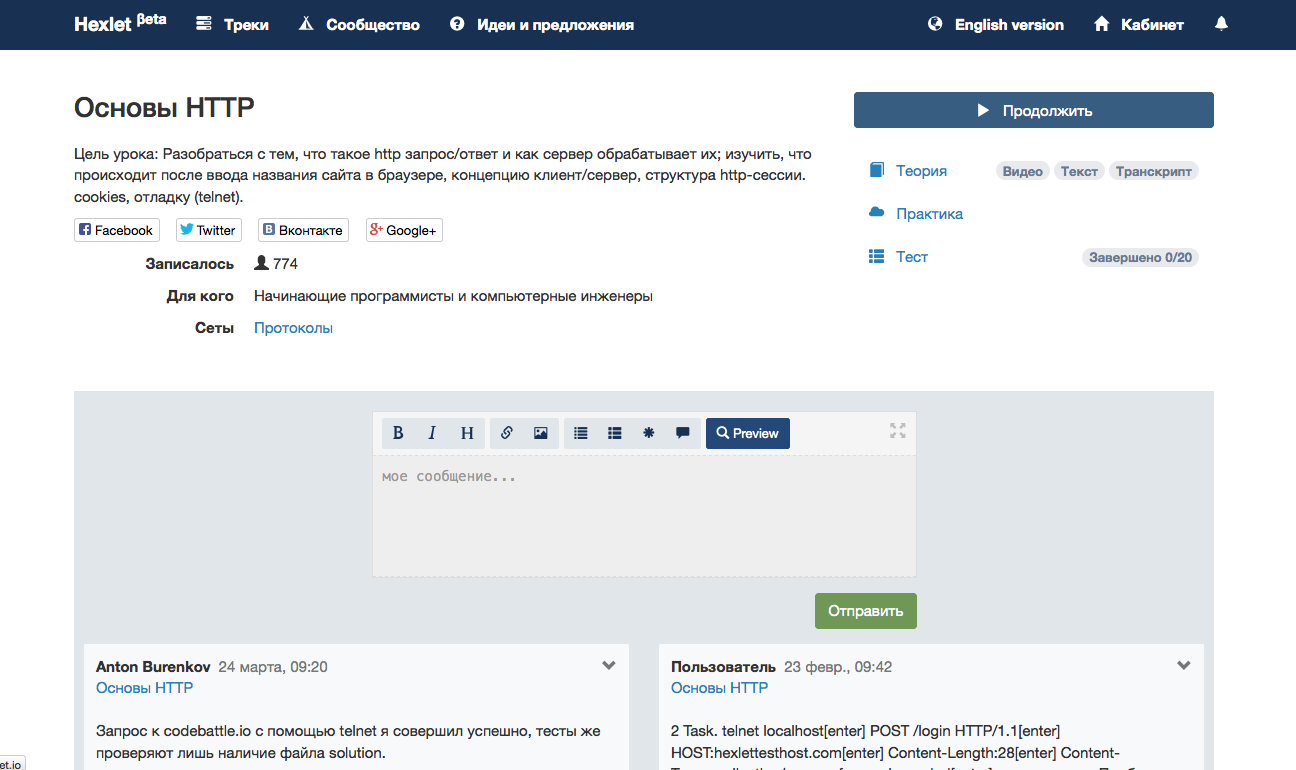

Page of a popular lesson.

The essence of the project is to allow people to learn in a real environment. To do this, we raise a container for each user in which he performs a practical exercise. These containers are raised on a special “eval” server. It has only Docker and can be accessed only from Shoryuken , asynchronously.

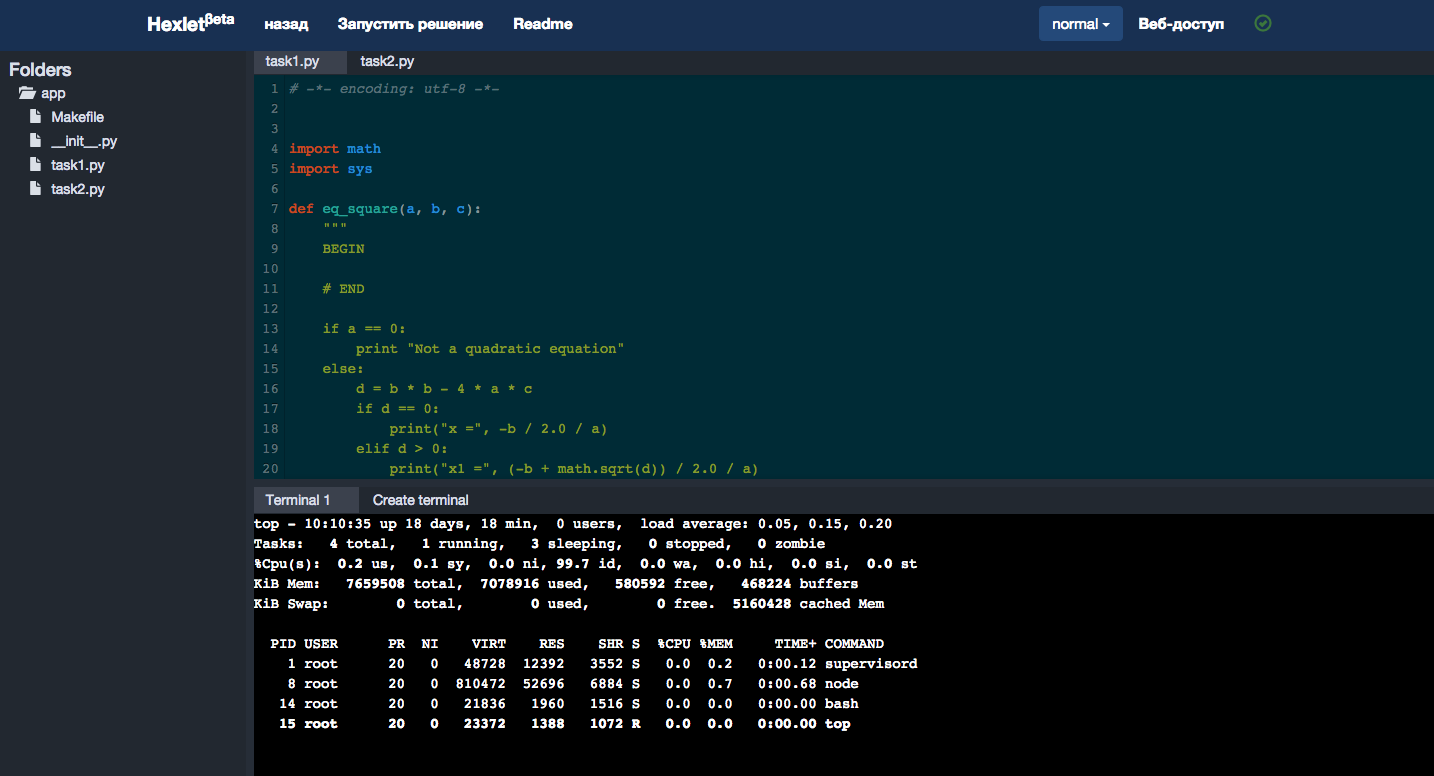

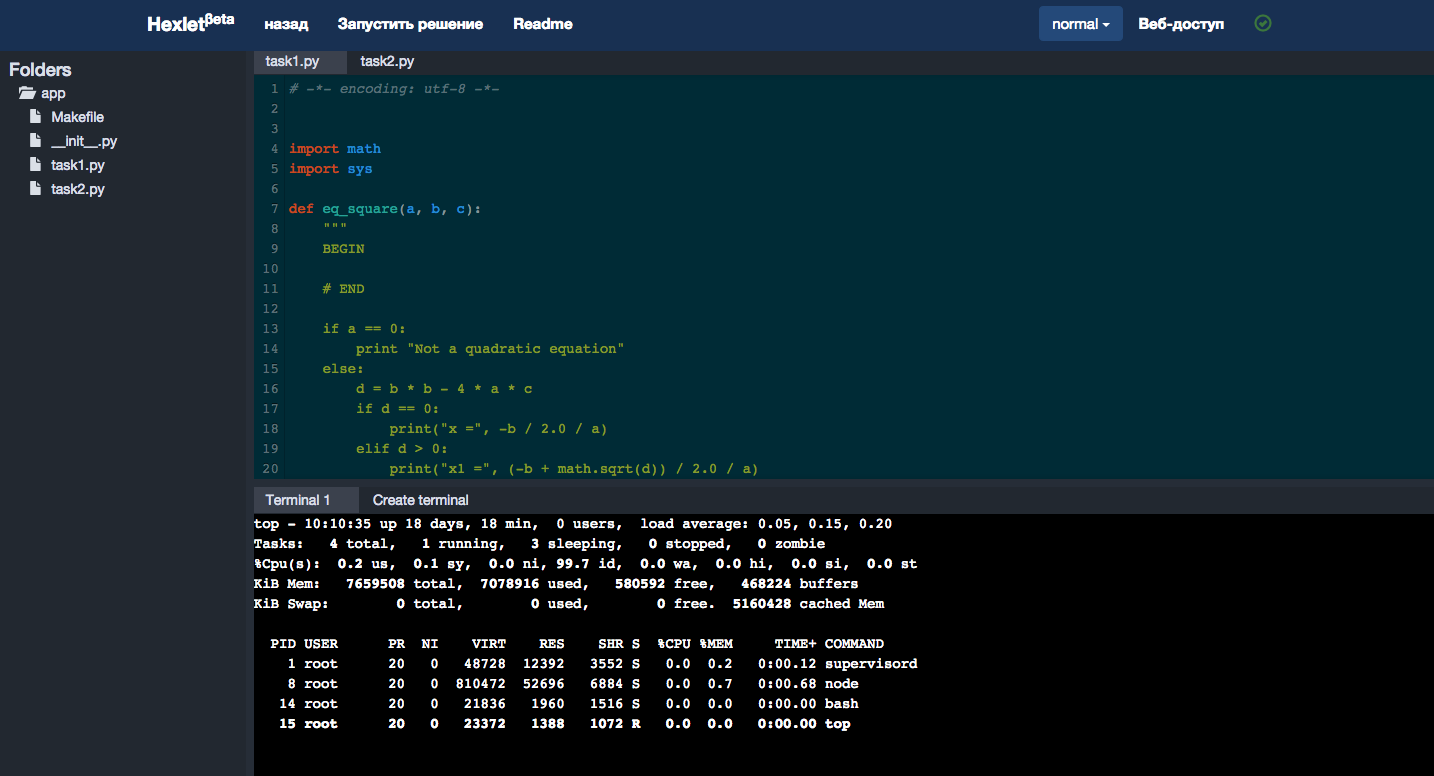

In the first Hexlet prototypes, the system required users to work on practical tasks on their computers, but now all the work is done in the browser, nothing needs to be downloaded and installed. To do this, we needed a browser development environment that would allow users to edit files and run programs. There are many cloud-based IDEs, and we, like any self-respecting startup, wanted to make the most of ready-made solutions. We found a cool IDE with a bunch of functions (even with integration with Git), but then we estimated the cost of maintaining someone else's code (full of invented bicycles) and decided to write your own simple IDE. Here, another new technology saved us - ReactJS and the Flux concept. A couple of weeks ago , a new version of the Hexlet IDE was released with a bunch of functional and visual improvements.

New version of Hexlet IDE in action.

In the first weeks, we sent all the metrics (both system ones, such as the load on the machines, and business metrics, such as registrations and payments) to our InfluxDB database, and rendered the graphs in Grafana. But now we are switching to third-party services, for example, Datadog . He can integrate with AWS, configure alerts when incidents occur.

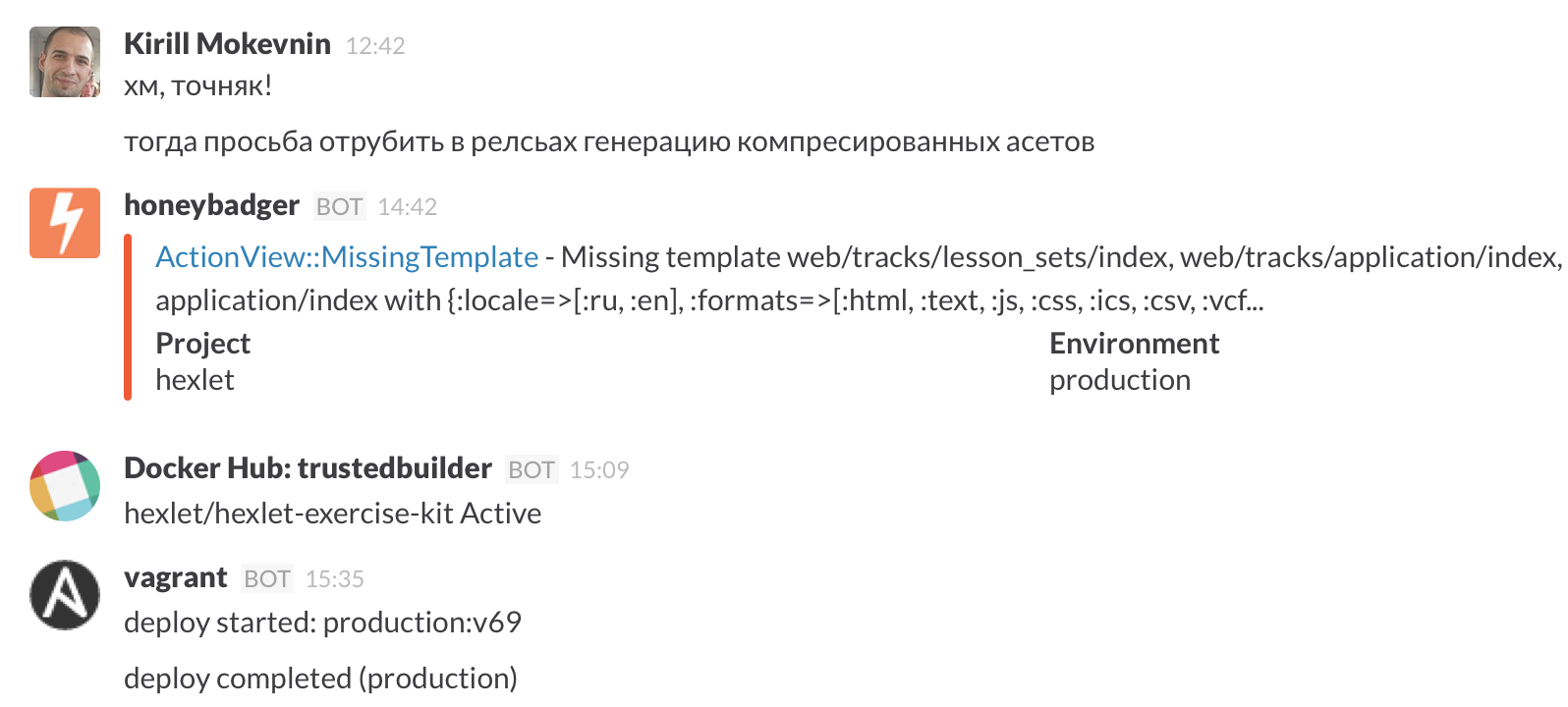

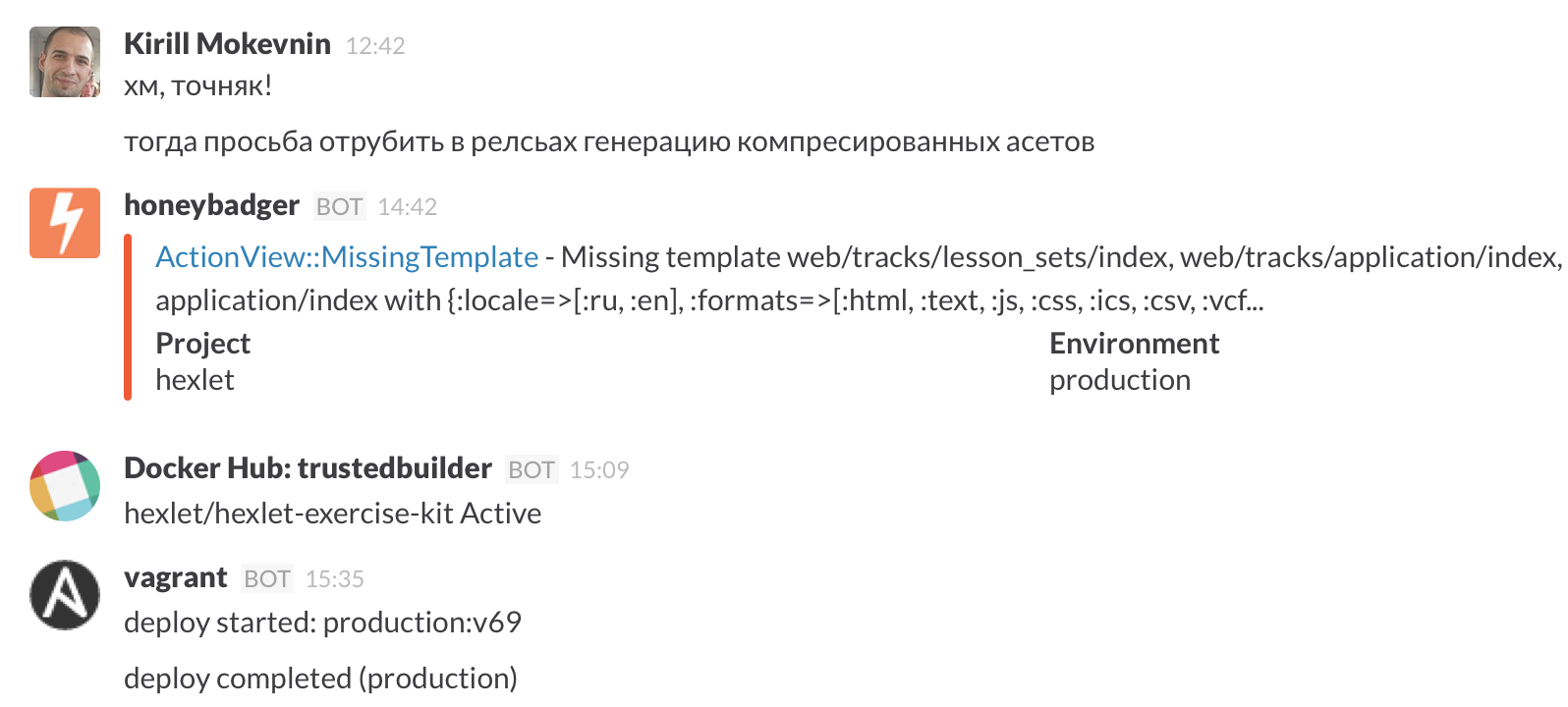

The entire Huxlet team sits in Slack , there in the special #operations chat we see everything that happens on the project: deployments, errors, builds, etc.

Our team creates lessons on its own and also invites authors from among professional developers. Any person or company can become the authors of the lessons, both public and for internal use, for example, for training within their development department or for workshops.

If you are interested, write to info@hexlet.io and join our group for authors on Facebook .

Thanks!

Is a platform for creating hands-on programming lessons in a true development environment. By a true development environment, we mean a full machine connected to the network. This important detail distinguishes Hakeslet from other educational projects (for example, Codecademy or CodeSchool) - we do not have simulators, all for real. This allows you to train and learn not only programming, but also working with databases, servers, networks, frameworks and so on. In general, if it runs on a Unix machine, it can be trained on a Hexlet. At the same time, realizing it or not, users use Test-Driven Development (TDD), because their decisions are checked by unit tests.

In this post I will talk about the architecture of the Hexlet platform and the tools that we use. About how to create practically lessons on this platform - in the next article.

Almost the entire backend is written in Rails. Everything runs on Amazon Web Services (AWS). Initially, we tried not to get attached to the AWS infrastructure much, but gradually began to use more of their services. In RDS (Relational Database Service), PostgreSQL (main base) and Redis are spinning. Thanks to it, we can not worry about backups, replication, updating - everything works automatically. Also in RDS we use automatic failover - multiAZ. In the event of a fall of the main machine, the synchronous replica automatically rises in another availability zone, and within a couple of minutes the DNS record is mapped to the new IP address.

SQS (Simple Queue Service) for queuing. All mail is sent through SES, domains live in Route53. Amazon Simple Notification Service (SNS) sends message delivery status messages to our SQS queue. Pictures and files are stored in S3. Recently, we have been using Cloudfront, a CDN from Amazon.

The cornerstone of the entire platform is Docker .

Each service works in our container. One container = one service. Most container images are pre-made images from tutum.co . We store the repository for our application in the Docker Registry. For staging, the image is collected automatically when committing to the Dockerfile. The code itself is stored on Github. For production, we collect images through Ansible on a separate server. The build takes a considerable time, 20-60 minutes depending on the conditions, therefore the option “quick fix production” is not possible. But it turned out this is not a problem, on the contrary - disciplines. When something goes wrong when deploying to production, we just kill one container and the previous one. Our database grows only horizontally (which is typical for projects with, khm-khm, good architecture), which allows us to use the base with different versions of the code and not get conflicts. So rollback is almost always a simple replacement for a version of code.

At first, for deploying we used Capistrano, but in the end we abandoned it in favor of Ansible. A bit unusual, but Ansible just delivers configs to a remote server and runs upstart, which in turn already updates the images. In this scheme, we do not need to install anything special on the server; for Ansible, we only need ssh access. Tags are used for versioning (v64, v65 and so on), and deployment to staging always uses the latest version of the code.

By the way, we love Ansible so much that we made a practical course on it - “Ansible: Introduction” .

A big plus - locally in the development we use almost identical Ansible playbooks, as for production. So the infrastructure runs locally as much as possible, which minimizes errors such as “but it worked on the LAN”. As a result, the deployment process looks like this: a new commit in Dockerfile in github -> launch a new build in docker registry -> launch Ansible playbook -> updated configs on the server -> launch upstart -> get new images.

We also use the Amazonian balancer, and in the case of the habraeffect, we can raise additional cars in 10-20 minutes. An important condition of such a scheme is that the final web servers do not store stateless state, they do not store any data. This allows you to quickly scale.

We love Amazon too, about Route53 (domain management / DNS) and about the balancer, we have lessons in the "Distributed Systems" cycle .

Page of a popular lesson.

The essence of the project is to allow people to learn in a real environment. To do this, we raise a container for each user in which he performs a practical exercise. These containers are raised on a special “eval” server. It has only Docker and can be accessed only from Shoryuken , asynchronously.

In the first Hexlet prototypes, the system required users to work on practical tasks on their computers, but now all the work is done in the browser, nothing needs to be downloaded and installed. To do this, we needed a browser development environment that would allow users to edit files and run programs. There are many cloud-based IDEs, and we, like any self-respecting startup, wanted to make the most of ready-made solutions. We found a cool IDE with a bunch of functions (even with integration with Git), but then we estimated the cost of maintaining someone else's code (full of invented bicycles) and decided to write your own simple IDE. Here, another new technology saved us - ReactJS and the Flux concept. A couple of weeks ago , a new version of the Hexlet IDE was released with a bunch of functional and visual improvements.

New version of Hexlet IDE in action.

In the first weeks, we sent all the metrics (both system ones, such as the load on the machines, and business metrics, such as registrations and payments) to our InfluxDB database, and rendered the graphs in Grafana. But now we are switching to third-party services, for example, Datadog . He can integrate with AWS, configure alerts when incidents occur.

The entire Huxlet team sits in Slack , there in the special #operations chat we see everything that happens on the project: deployments, errors, builds, etc.

Our team creates lessons on its own and also invites authors from among professional developers. Any person or company can become the authors of the lessons, both public and for internal use, for example, for training within their development department or for workshops.

If you are interested, write to info@hexlet.io and join our group for authors on Facebook .

Thanks!