Resizing images in the browser. Everything is very bad

If you have ever had to resize images in a browser, you probably know that it is very simple. Every modern browser has the canvas element (`<canvas>`), and you can draw an image onto it at any size you need.

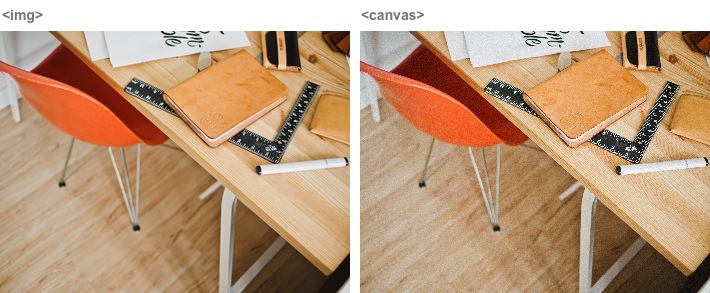

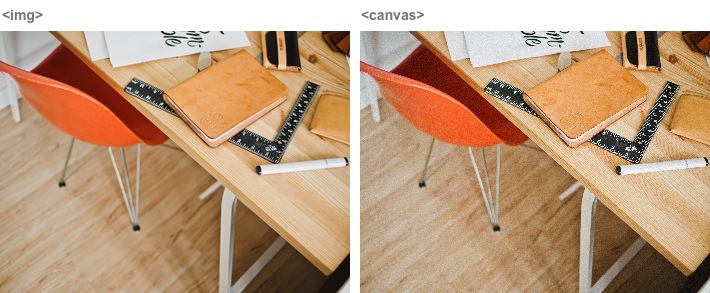


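```javascript
function resize(img, w, h) {
  // Create a canvas of the target size and let the browser scale the image onto it
  var canvas = document.createElement('canvas');
  canvas.width = w;
  canvas.height = h;
  canvas.getContext('2d').drawImage(img, 0, 0, w, h);
  return canvas;
}
```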

The canvas can then be saved as a JPEG and, for example, sent to a server. The article could end here, but first let's look at the result. If you put such a canvas next to an ordinary `<img>` element showing the same picture, the difference becomes visible.

For some reason, all modern browsers, desktop and mobile alike, draw on canvas using the cheap method of affine transformations. I have already described the differences between image resizing methods in a separate article, so here is just a reminder of how affine transformations work: to compute each pixel of the final image, 4 pixels of the source are interpolated. This means that when an image is reduced by more than a factor of 2, "holes" appear in the source image: pixels that are not taken into account in the final image at all. These ignored pixels are what ruin the quality.

Of course, a picture in this form cannot be shown to decent people. Not surprisingly, the quality of canvas resizing is a frequent question on Stack Overflow. The most common advice is to reduce the image in several steps. Indeed, if a strong reduction does not capture all the pixels, why not reduce the image slightly, and then again and again until we reach the desired size, as in this example.
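To make the stepwise approach concrete, here is a small planning sketch. The names `halvingSteps` and `lastStepRatio` are hypothetical, it handles downscaling only, and it computes widths rather than pixels; in the browser, each planned width would correspond to one `drawImage` call onto a smaller canvas.

```javascript
// Hypothetical helper: widths produced by "halve while possible, then finish".
// Assumes downscaling only (fromWidth > toWidth).
function halvingSteps(fromWidth, toWidth) {
  var steps = [fromWidth];
  var current = fromWidth;
  // Keep halving while the halved width is not smaller than the target
  while (current / 2 >= toWidth) {
    current = Math.round(current / 2);
    steps.push(current);
  }
  // Finish with one last, possibly tiny, step to the exact target width
  if (current !== toWidth) {
    steps.push(toWidth);
  }
  return steps;
}

// Ratio of the final step: 2 behaves like supersampling, values near 1 blur the result
function lastStepRatio(steps) {
  return steps[steps.length - 2] / steps[steps.length - 1];
}
```

For 4000 → 1000 the plan is two clean halvings, while for 4000 → 999 it ends with a 1000 → 999 step, a reduction by a single pixel.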

This method undoubtedly gives a much better result, because every pixel of the original image is taken into account in the final one. The question is how exactly they are taken into account, and that depends on the step size and on the initial and final sizes. For example, with a step of exactly 2, these reductions are equivalent to supersampling. The last step, however, is a matter of luck. If you are very lucky, the last step will also be 2. If you are unlucky, the last step will have to shrink the image by a single pixel, and the picture will come out "soapy", that is, blurry. Compare: the sizes differ by only one pixel, yet the difference is huge (source, 4 MB):

But maybe we should try a completely different approach? We have a canvas from which pixels can be read, and we have a very fast JavaScript engine that can easily handle resizing. That means we can implement any resizing method ourselves without relying on browser support, for example supersampling or convolution.
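As an illustration, here is one possible pixel-level resizer: a simplified supersampling (box filter) sketch written for this note, not the author's implementation. It uses the same `(dataIn, dataOut)` shape as the `resizePixels` call in the article, assumes downscaling only, and works on ImageData-like `{ width, height, data }` objects, so it also runs outside the browser.

```javascript
// Hypothetical resizePixels: simplified supersampling (box filter) downscaler.
// src/dst are ImageData-like: { width, height, data: Uint8ClampedArray (RGBA) }.
// Each output pixel averages every source pixel in its source rectangle,
// so no source pixel is skipped. Assumes dst is not larger than src.
function resizePixels(src, dst) {
  for (var y = 0; y < dst.height; y++) {
    // Integer math keeps the rectangles exact and covering the whole source
    var y0 = Math.floor(y * src.height / dst.height);
    var y1 = Math.max(y0 + 1, Math.floor((y + 1) * src.height / dst.height));
    for (var x = 0; x < dst.width; x++) {
      var x0 = Math.floor(x * src.width / dst.width);
      var x1 = Math.max(x0 + 1, Math.floor((x + 1) * src.width / dst.width));
      var r = 0, g = 0, b = 0, a = 0, count = 0;
      for (var sy = y0; sy < y1; sy++) {
        for (var sx = x0; sx < x1; sx++) {
          var i = (sy * src.width + sx) * 4;
          r += src.data[i];
          g += src.data[i + 1];
          b += src.data[i + 2];
          a += src.data[i + 3];
          count++;
        }
      }
      var o = (y * dst.width + x) * 4;
      // Uint8ClampedArray rounds and clamps the averages to 0..255
      dst.data[o] = r / count;
      dst.data[o + 1] = g / count;
      dst.data[o + 2] = b / count;
      dst.data[o + 3] = a / count;
    }
  }
}
```

The plain average is the simplest form of supersampling; a production version would also weight partially covered pixels at non-integer ratios.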

All that remains is to draw the full-size picture onto a canvas and read its pixels. Ideally, it would look like this (I will leave the implementation of `resizePixels` behind the scenes):

```javascript
function resizeImage(image, width, height) {
  // Draw the full-size source image onto an input canvas
  var cIn = document.createElement('canvas');
  cIn.width = image.width;
  cIn.height = image.height;
  var ctxIn = cIn.getContext('2d');
  ctxIn.drawImage(image, 0, 0);

  // Read the source pixels and allocate the destination buffer
  var dataIn = ctxIn.getImageData(0, 0, image.width, image.height);
  var dataOut = ctxIn.createImageData(width, height);

  // Resize in JavaScript (implementation not shown)
  resizePixels(dataIn, dataOut);

  // Put the result onto an output canvas of the target size
  var cOut = document.createElement('canvas');
  cOut.width = width;
  cOut.height = height;
  cOut.getContext('2d').putImageData(dataOut, 0, 0);
  return cOut;
}
```

At first glance, this is trite and boring. Thankfully, browser developers won't let us get bored. Of course, this code does work in some cases, but the catch lies in an unexpected place.

Let's talk about why you might need to resize on the client. My task was to reduce the size of the selected photos before sending them to the server, saving the user's traffic. This matters most on mobile devices with a slow connection and metered traffic. And which photos are most often uploaded from such devices? The ones taken with their own cameras. The iPhone camera, for example, has a resolution of 8 megapixels, but it can also shoot 25-megapixel panoramas (even larger on the iPhone 6). Android and Windows phones have even higher camera resolutions. And here we run into the limitations of these mobile devices: unfortunately, iOS does not let you create a canvas larger than 5 megapixels.

Apple is understandable: they have to keep their resource-limited devices running normally. In fact, in the function above the whole picture occupies memory three times! First, the buffer associated with the Image object, where the image is decoded; second, the canvas pixels; and third, the typed array in ImageData. An 8-megapixel picture needs 8 × 3 × 4 = 96 megabytes of memory, and a 25-megapixel one needs 300.
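The estimate above is simple arithmetic; as a sketch, with a hypothetical function name and decimal megabytes (1 megapixel × 4 bytes = 4 MB), it looks like this:

```javascript
// Back-of-the-envelope memory estimate for the naive resizeImage():
// the picture is held three times (decoded Image buffer, canvas pixels,
// ImageData typed array), each at 4 bytes (RGBA) per pixel.
// Megapixels in, megabytes out (decimal units).
function naiveResizeMemoryMB(megapixels) {
  var copies = 3;
  var bytesPerPixel = 4;
  return megapixels * copies * bytesPerPixel;
}
```

For example, `naiveResizeMemoryMB(8)` gives 96 and `naiveResizeMemoryMB(25)` gives 300, matching the figures above.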

But during testing I ran into problems not only on iOS. Chrome on the Mac would sometimes draw several small images instead of one large one, and on Windows it simply produced a blank white image.

But if you cannot get all the pixels at once, can you get them in parts? You can draw the picture onto the canvas in strips whose width equals the width of the original image and whose height is much smaller: first the first 5 megapixels, then the next 5, then whatever remains. Or even 2 megapixels at a time, which reduces memory usage further. Fortunately, unlike two-pass convolution resizing, supersampling is a single-pass method. That is, you can not only read the image in portions but also send one portion at a time for processing. Memory is then needed only for the Image element, a canvas (for example, 2 megapixels) and a typed array of the same size. So for an 8-megapixel picture we get (8 + 2 + 2) × 4 = 48 megabytes, half as much.
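Splitting the picture into strips is mostly bookkeeping. The sketch below, with the hypothetical name `planStrips`, only computes strip positions under a pixel budget; the browser part (drawing each strip and reading it back) is described in the comments as an assumption about how the strips would be used.

```javascript
// Hypothetical helper: split an image into full-width horizontal strips so that
// no strip exceeds a pixel budget (for example 2 megapixels). In the browser, each
// strip could be drawn onto a width x strip.height canvas with the nine-argument
// drawImage(img, 0, strip.y, width, strip.height, 0, 0, width, strip.height),
// read with getImageData and passed to a single-pass (supersampling) resizer.
function planStrips(width, height, maxPixels) {
  // At least one row per strip, even for extremely wide images
  var stripHeight = Math.max(1, Math.floor(maxPixels / width));
  var strips = [];
  for (var y = 0; y < height; y += stripHeight) {
    strips.push({ y: y, height: Math.min(stripHeight, height - y) });
  }
  return strips;
}
```

For the 10,800 × 2,332 panorama and a 2,000,000-pixel budget, this yields 13 strips of 185 rows (the last one 112 rows), each under the budget.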

I implemented the approach described above and measured the run time of each part. You can test it yourself here. Here is what I got for a 10,800 × 2,332 picture (an iPhone panorama):

| Operation, ms | Safari 8 | Chrome 40 | Firefox 35 | IE 11 |
|---|---|---|---|---|
| Image load | 24 | 27 | 28 | 76 |
| Draw to canvas | 1 | 348 | 278 | 387 |
| Get image data | 304 | 299 | 165 | 320 |
| JS resize | 233 | 135 | 138 | 414 |
| Put data back | 1 | 1 | 3 | 5 |
| Get image blob | 10 | 16 | 21 | 19 |
| Total | 576 | 833 | 641 | 1,243 |

This is a very interesting table, so let's look at it in detail. The great news is that the resizing itself in JavaScript is not a bottleneck. Yes, in Safari it is 1.7 times slower than in Chrome and Firefox, and in IE 3 times slower, but in every browser loading the picture and reading the data still take longer.

The second remarkable point is that no browser has decoded the picture by the time the `image.onload` event fires. Decoding is postponed until it is really needed: displaying the image on screen or drawing it onto a canvas. In Safari, the image is not decoded even when it is drawn onto the canvas, because the canvas is not shown on screen either; it is decoded only when pixels are read back from the canvas.

The table shows the total time for drawing and reading data, although these operations are actually performed for every 2 megapixels, and the script at the link above reports the time of each iteration separately. Those per-iteration numbers show that although the total data-reading time in Safari, Chrome and IE is roughly the same, in Safari almost all of it is spent on the first call, in which the picture is decoded, whereas in Chrome and IE every call takes the same time, which points to a general slowdown in reading data. The same goes for Firefox, but to a lesser extent.

So far, this approach looks promising. Let's test it on mobile devices. I had an iPhone 4s (i4s), an iPhone 5 (i5) and a Meizu MX4 Pro (A) at hand, and I asked Oleg Korsunsky to test on Windows Phone; his phone turned out to be an HTC 8X (W).

| Operation, ms | Safari i4s | Safari i5 | Chrome i4s | Chrome A (run 1) | Chrome A (run 2) | Firefox A | IE W |
|---|---|---|---|---|---|---|---|
| Image load | 517 | 137 | 650 | 267 | 220 | 81 | 437 |
| Draw to canvas | 2,706 | 959 | 2,725 | 1,108 | 6,954 | 1,007 | 1,019 |
| Get image data | 678 | 250 | 734 | 373 | 543 | 406 | 1,783 |
| JS resize | 2,939 | 1,110 | 96,320 | 491 | 458 | 418 | 2,299 |
| Put data back | 9 | 5 | 315 | 6 | 4 | 14 | 24 |
| Get image blob | 98 | 46 | 187 | 37 | 41 | 80 | 33 |
| Total | 6,995 | 2,524 | 101,002 | 2,314 | 8,242 | 2,041 | 5,700 |

The first thing that catches the eye is Chrome's "outstanding" result on iOS. Until recently, all third-party browsers on iOS could only use the version of the engine without JIT compilation. iOS 8 made JIT available, but Chrome has not yet had time to adapt.

Another oddity is the two results for Chrome on Android: radically different in drawing time and almost identical in everything else. This is not a mistake in the table; Chrome really can behave differently. I have already said that browsers decode images lazily, whenever they consider it necessary. Likewise, nothing prevents the browser from freeing the memory occupied by the decoded picture whenever it decides the picture is no longer needed. Naturally, when the picture is needed again for the next draw onto the canvas, it has to be decoded again. In this case, the picture was decoded 7 times, which is clearly visible in the drawing times of the individual chunks (recall that the table shows only the total). Under such conditions, decoding time becomes unpredictable.

Alas, those are not all the problems. I must admit that I have been pulling the wool over your eyes about Internet Explorer: it limits each side of a canvas to 4096 pixels, and the part of the picture beyond this limit simply becomes transparent black pixels. While the limit on the maximum canvas area is easy to bypass by cutting the picture horizontally, which also saves memory, bypassing the width limit requires either seriously reworking the resize function or gluing adjacent pieces into strips, which only increases memory consumption.

At this point, I decided to give up on this approach. There was a completely crazy option: not only resize but also decode the JPEG on the client. Cons: JPEG only, and Chrome's already bad time on iOS would get even worse. Pros: predictability in Chrome on Android, no size limits, and less memory needed (no endless copying to the canvas and back). I did not dare to take this route, although a JPEG decoder in pure JavaScript does exist.

Part 2. Back to the beginning

Remember how at the very beginning successive halving gave a good result in the best case and a soapy, blurry one in the worst? What if we try to get rid of the worst case without changing the approach too much? Recall that the blur appears when the last step has to reduce the picture only a little. What if the last step is done first: reduce the picture by some arbitrary factor first, and only then strictly by 2 at each step? Along the way, keep in mind that the first step must produce at most 5 megapixels in area and at most 4096 pixels on either side. The code is still clearly simpler than manual resizing.
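The size planning for this "arbitrary step first, exact halvings after" scheme can be sketched as follows. The name `planSteps` is hypothetical, the limits are passed in as parameters rather than detected, and the sketch assumes downscaling with a target size that itself fits the limits.

```javascript
// Hypothetical planner: one arbitrary-ratio first step, then exact halvings.
// Returns the canvas sizes to draw, from the first intermediate size to the target.
// Assumptions: downscaling only, and the target itself fits maxArea/maxSide.
function planSteps(srcW, srcH, dstW, dstH, maxArea, maxSide) {
  // Largest power of two such that target * scale is still smaller than the source
  var scale = 1;
  while (dstW * scale * 2 < srcW && dstH * scale * 2 < srcH) {
    scale *= 2;
  }
  // Shrink the first intermediate size until it fits the canvas limits
  while (scale > 1) {
    var w = dstW * scale, h = dstH * scale;
    if (w * h <= maxArea && w <= maxSide && h <= maxSide) break;
    scale /= 2;
  }
  // First step: source -> sizes[0] (arbitrary ratio); then each size halves
  var sizes = [];
  for (; scale >= 1; scale /= 2) {
    sizes.push({ width: dstW * scale, height: dstH * scale });
  }
  return sizes;
}
```

For example, reducing 3264 × 2448 to 800 × 600 with a 5,000,000-pixel and 4096-pixel limit gives 1600 × 1200 and then 800 × 600: 3200 × 2400 would have been closer to the source but exceeds the area limit.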

On the left, the image is reduced in 4 steps, on the right in 5, and there is almost no difference. Almost a victory. Unfortunately, the difference between two and three steps (not to mention between one and two) is still quite visible:

Still, the blur is much weaker than it was at the very beginning. I would even say that the image on the right (obtained in 3 steps) looks a little nicer than the one on the left, which is too sharp.

One could keep tuning the resize, trying to reduce the number of steps while bringing the average step ratio closer to two; the main thing is to stop in time. Browser restrictions will not let you do anything fundamentally better. Let's move on to the next topic.

Part 3. Many photos in a row

Resizing is a relatively long operation. If you go at it head-on and resize all the pictures one after another, the browser will freeze for a long time and become unresponsive to the user. It is best to call `setTimeout` after each resize step. But this raises another problem: if all the pictures start resizing at the same time, they will all need memory at the same time. This can be avoided with a queue: for example, start resizing the next image when the previous one finishes. I preferred a more general solution, where the queue is formed inside the resize function rather than outside it. This guarantees that two pictures are never resized simultaneously, even if resize is called from different places at the same time.

Here is a complete example: everything from the second part, plus the queue and timeouts before long operations. I added a spinner to the page, and now it is clear that if the browser stalls, it does not stall for long. Time to test on mobile devices!
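The queue-inside-the-function idea can be sketched with Promises; this is an assumption for illustration, and the widget's actual code may use a different mechanism. `createQueue` and `pause` are hypothetical names: every `enqueue` call waits for all previously queued jobs, and `pause` yields to the event loop via `setTimeout`.

```javascript
// Hypothetical serial queue: jobs run strictly one after another, even when
// enqueue() is called from several places at once. A job returns a value or a Promise.
function createQueue() {
  var tail = Promise.resolve();
  return function enqueue(job) {
    var result = tail.then(function () { return job(); });
    // Keep the chain going even if a job fails; the caller still sees the error via `result`
    tail = result.catch(function () {});
    return result;
  };
}

// Yield to the event loop so the page can repaint between long steps
function pause() {
  return new Promise(function (resolve) {
    setTimeout(resolve, 0);
  });
}
```

Inside a resize function, the whole body would be passed to `enqueue`, and each long step (drawing, reading pixels, resizing a strip) would be preceded by `pause()`. Because the queue lives inside the function, callers do not need to coordinate with each other.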

Here I want to make a digression about mobile Safari 8 (I have no data on other versions). In it, selecting pictures in a file input freezes the browser for a couple of seconds. This is either because Safari creates a copy of the photo with stripped EXIF, or because it generates the small preview shown right inside the input. For one photo this is tolerable, you could even say unnoticeable, but with multiple selection it can turn into hell (depending on the number of selected photos). And all this time the page does not know that photos have been selected, or even that the file selection dialog is open.

Rolling up my sleeves, I opened the page on the iPhone and selected 20 photos. After thinking for a while, Safari happily reported: "A problem occurred with this webpage so it was reloaded." The second attempt gave the same result. Here I envy you, dear readers: for you the next paragraph will fly by in a minute, whereas for me it was a night of pain and suffering.

So, Safari crashes. It cannot be debugged with the developer tools: they show nothing about memory consumption. Full of hope, I opened the page in the iOS Simulator, and it did not crash. I looked in Activity Monitor: the memory grows with each picture and is never freed. Well, at least something. I started experimenting. So that you understand what an experiment in the simulator looks like: a memory leak is impossible to see with one picture and hard to see with 4-5; it is best to take 20. You cannot drag them in or select them with Shift, so you have to click 20 times. After selecting them, you look at the task manager and guess whether a 50-megabyte drop in memory consumption is a random fluctuation or a sign that you did something right.

In short, after a lot of trial and error I came to a simple but very important conclusion: you need to free everything, as early as possible and by any means available, and allocate as late as possible. You cannot rely entirely on garbage collection. If you create a canvas, shrink it at the end (set it to 1 × 1 pixel). If you create an image, unload it at the end by assigning `src = "about:blank"`; just removing it from the DOM is not enough. If a file is opened via `URL.createObjectURL`, release it immediately via `URL.revokeObjectURL`.

After a serious code rework, an old iPhone with 512 MB of memory began to handle 50 photos and more. Chrome and Opera on Android also began to behave much better: they handled an unprecedented 160 photos of 20 megapixels each, slowly but without crashing. Desktop browsers benefited too: IE, Chrome and Safari began to use a stable 200 megabytes per tab at most while working. Unfortunately, this did not help Firefox: it kept consuming about a gigabyte on 25 test pictures. Nothing can be said about mobile Firefox and Dolphin on Android, since they do not allow selecting several files.
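The release rules above fit into a few tiny helpers. This is a sketch with hypothetical names; it uses only the standard `width`, `height` and `src` properties and the real `URL.revokeObjectURL`, so any canvas-like or img-like object works.

```javascript
// Hypothetical cleanup helpers implementing the release rules above.
function releaseCanvas(canvas) {
  // Shrink the canvas to 1 x 1 so its large pixel buffer is no longer needed
  canvas.width = 1;
  canvas.height = 1;
}

function releaseImage(img) {
  // Removing the element from the DOM is not enough; replace its source
  img.src = 'about:blank';
}

function releaseObjectUrl(url) {
  // An object URL keeps its Blob alive until it is revoked
  if (url) {
    URL.revokeObjectURL(url);
  }
}
```

Following the rule "free as early as possible", `releaseObjectUrl` would be called right after the image has loaded, and `releaseCanvas` and `releaseImage` as soon as the resized blob has been produced.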

Part 4. Something like a conclusion

As you can see, resizing pictures on the client is damn exciting and painful. The result is a kind of Frankenstein's monster: the disgusting native resize is applied repeatedly to get at least some semblance of quality, while working around the hidden limits of various platforms. And there are still many particular combinations of original and final sizes where the picture comes out too blurry or too sharp.

Browsers devour resources like crazy, nothing gets freed, and magic doesn't work. In this sense, things are worse than in compiled languages, where you have to free resources explicitly: in JS, first, it is not obvious what needs to be released, and second, releasing it is far from always possible. Nevertheless, restraining the appetites of at least most browsers is quite realistic.

Work with EXIF was left behind the scenes. Almost all smartphones and cameras read the image from the sensor in the same orientation and record the actual orientation in EXIF, so it is important to send this information to the server along with the thumbnail. Fortunately, the JPEG format is quite simple, and in my project I simply transfer the EXIF section from the source file to the final one without even parsing it.
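The article does not show this step, so here is a sketch under stated assumptions: both files are well-formed JPEGs given as `Uint8Array`s, and `findExifSegment` and `copyExif` are hypothetical names. It walks the marker segments up to SOS, takes the APP1 segment whose payload starts with "Exif", and splices it into the resized file right after its SOI marker. Placing it before the JFIF APP0 segment is a simplification, and since the EXIF is not parsed, fields such as the original pixel dimensions are copied unchanged.

```javascript
// Hypothetical sketch: find the EXIF (APP1) segment of a JPEG without parsing it.
// Assumes a well-formed file: SOI, then marker segments with 2-byte big-endian lengths.
function findExifSegment(jpeg) {
  var offset = 2; // skip SOI (FF D8)
  while (offset + 4 <= jpeg.length && jpeg[offset] === 0xff) {
    var marker = jpeg[offset + 1];
    if (marker === 0xda) break; // SOS: compressed image data follows, no more headers
    // Segment length includes its own two length bytes but not the marker
    var length = (jpeg[offset + 2] << 8) | jpeg[offset + 3];
    var isExif = marker === 0xe1 && String.fromCharCode(
      jpeg[offset + 4], jpeg[offset + 5], jpeg[offset + 6], jpeg[offset + 7]) === 'Exif';
    if (isExif) return jpeg.subarray(offset, offset + 2 + length);
    offset += 2 + length;
  }
  return null;
}

// Copy the original EXIF segment into the resized JPEG, right after its SOI marker
function copyExif(original, resized) {
  var exif = findExifSegment(original);
  if (!exif) return resized;
  var out = new Uint8Array(resized.length + exif.length);
  out.set(resized.subarray(0, 2), 0);            // SOI
  out.set(exif, 2);                              // original APP1/EXIF segment
  out.set(resized.subarray(2), 2 + exif.length); // rest of the resized file
  return out;
}
```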

I learned and measured all of this while writing resize-before-upload for the Uploadcare widget. The code in this article follows the logic of the narrative and lacks a lot in terms of error handling and browser support. So if you want to use it yourself, it is better to look at the widget's source code.

By the way, here are a few more numbers: with this technique, 80 photos from an iPhone 5, reduced to 800 × 600, upload over 3G in less than 2 minutes. The same original photos would take 26 minutes to upload. So it was worth it.