Writing fast and economical JavaScript code

A JS engine like Google’s V8 (Chrome, Node) is designed for fast execution of large applications. If you care about memory efficiency and speed during development, you need to know something about what goes on inside the browser's JS engine.

Whatever it is - V8, SpiderMonkey (Firefox), Carakan (Opera), Chakra (IE) or something else, knowledge of internal processes will help you optimize the performance of your applications. But I do not urge you to optimize the engine for one browser or engine - do not do this.

Ask yourself:

- can something be made more efficient in my code?

- What kind of optimization do popular JS engines do?

- that the engine cannot compensate, and can garbage collection clean up everything as I expect from it?

There are many pitfalls associated with efficient memory usage and performance, and in the article we will study some approaches that have proven themselves well in tests.

Although it is possible to develop large applications without a proper understanding of the JS engine, any car owner will tell you that he has looked at least once under the hood of a car. Since I like the Chrome browser, I’ll talk about its JavaScript engine. V8 consists of several main parts.

- The main compiler that processes JS and issues machine code before it is executed, instead of executing bytecode or simply interpreting it. This code is usually not very optimized.

- V8 transforms objects into an object model . In JS, objects are implemented as associative arrays, but in V8 they are represented by hidden classes , which are an internal type system for optimized searches.

-a run-time profiler that monitors the operation of the system and defines “hot” functions (code that takes a long time to execute)

- an optimizing compiler that recompiles and optimizes hot code, and deals with other optimizations like inlining

- V8 supports deoptimization when the optimizing compiler rolls back, if he discovers that he has made some too optimistic assumptions when parsing the code

- garbage collection . The idea of her work is as important as the idea of optimization.

This is a form of memory management. The collector tries to return the memory occupied by objects that are no longer in use. In a language that supports garbage collection, objects that are still referenced are not cleaned up.

You can almost always not delete object references manually. Just placing the variables where they are needed (ideally, the more local the better - inside the functions that use them, and not in the external scope), you can achieve normal operation.

JS cannot force garbage collection to work. This does not need to be done, because this process is controlled at runtime, and he knows better when and what to clean.

In some online disputes over returning memory in JS, the delete keyword appears. Although it was originally intended to remove keys, some developers believe that it can be used to force the removal of links. Avoid using delete. In the example below, delete ox does more harm than good, as it changes the hidden class of o and makes it a slow object.

You will certainly find references to delete in many popular JS libraries, as it makes sense. The main thing that needs to be learned is that you do not need to change the structure of "hot" objects during program execution. JS engines can recognize such “hot” objects and try to optimize them. This will be easier to do if the structure of the object does not change much, and delete just leads to such changes.

There is a misunderstanding about how null works. Setting the object reference to null does not nullify the object. Writing ox = null is better than using delete, but that doesn't make sense.

If this link was the last object reference, then the garbage collector will pick it up. If this was not the last link, you can get to it, and the collector will not pick it up.

One more note: global variables are not tidied up by the garbage collector while the page is running. No matter how long it has been open, variables from the scope of the global object will exist.

Global variables are cleared when you reload the page, go to another page, close a bookmark, or exit the browser. Variables from the scope of the function are cleared when the scope disappears - when the function exits, and there are no more references to them.

For garbage collection to work as early as possible and to collect as many objects as possible, do not hold on to objects that you do not need. Usually this happens automatically, but here's what you need to remember:

- A good alternative to manually deleting links is to use variables with the correct scope. Instead of setting the global variable to null, use a local variable for the function, which disappears when the scope disappears. The code gets cleaner and fewer worries.

- Make sure that you remove event handlers when they are no longer needed, especially before deleting the DOM elements to which they are attached.

- When using a local data cache, be sure to clear it or use the aging mechanism to not store large unnecessary chunks of data.

Now let's turn to the functions. As we already said, garbage collection frees up used blocks of memory (objects) that cannot be reached anymore. To illustrate, a few examples.

Upon returning from foo, the object pointed to by bar will be cleared by the garbage collector, since nothing is already referencing it.

Compare with:

Now we have a reference to the object, which remains until the code that called the function assigns something else to b (or until b goes out of scope).

When you encounter a function that returns an internal function, the internal one has access to the scope outside it, even after the external one finishes working. This is a closure - an expression that can work with variables from the selected context. For instance:

The garbage collector cannot tidy up the created functional object, since there is still access to it, for example, via sumA (n). Here is another example. Can we access largeStr?

var a = function () {

var largeStr = new Array (1000000) .join ('x');

return function () {

return largeStr;

};

} ();

Yes - through a (), therefore it is also not eliminated by the collector. How about this:

We no longer have access to it, so it can be cleaned.

One of the worst places to leak is a loop, or in a pair of setTimeout () / setInterval (), although this problem is quite common. Consider an example:

If we do

to start the timer, ““ Time is running out! ”will be displayed every second. If we do:

the timer will continue to work anyway. myObj cannot be cleaned up because the closure passed to setTimeout continues to exist. In turn, it stores links to myObj through myRef. This is the same as if we passed the closure to any other function, leaving links to it.

Remember that links inside setTimeout / setInterval calls, such as functions, must be executed and completed before they can be cleared.

It is important not to optimize the code prematurely. You can get carried away with micro-tests, which say that N is faster than M in V8, but the real contribution of these things to the finished module can be much less than you think.

Let's say we need a module that:

- reads data from a local source that has numeric id;

- draws a tablet with this data;

- Adds event handlers for clicks on cells.

Questions immediately appear. How to store data? How to draw a label effectively and embed it in the DOM? How to handle events in an optimal way?

The first and naive approach is to store each piece of data in an object that can be grouped into an array. You can use jQuery to traverse data and draw a table, and then add it to the DOM. Finally, you can use event binding to add click-through behavior.

Here's how you should NOT do:

Cheap and cheerful.

However, in this example, we pass only by id, by numerical properties, which could be represented more simply as an array. In addition, the direct use of DocumentFragment and native DOM methods is more optimal than using jQuery to create a table, and of course, it will be much faster to process events through the parent element.

jQuery “behind the scenes” directly uses DocumentFragment, but in our example, the code calls append () in a loop, and each of the calls does not know about the others, so the code may not be optimized. It may not be scary, but it is better to check it through tests.

By adding the following changes we will speed up the script.

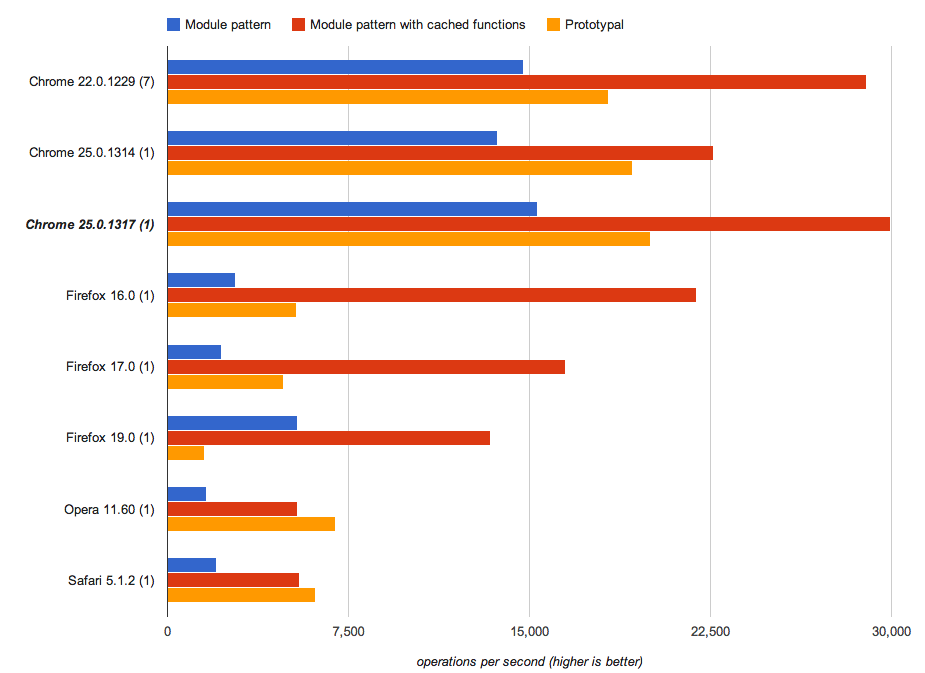

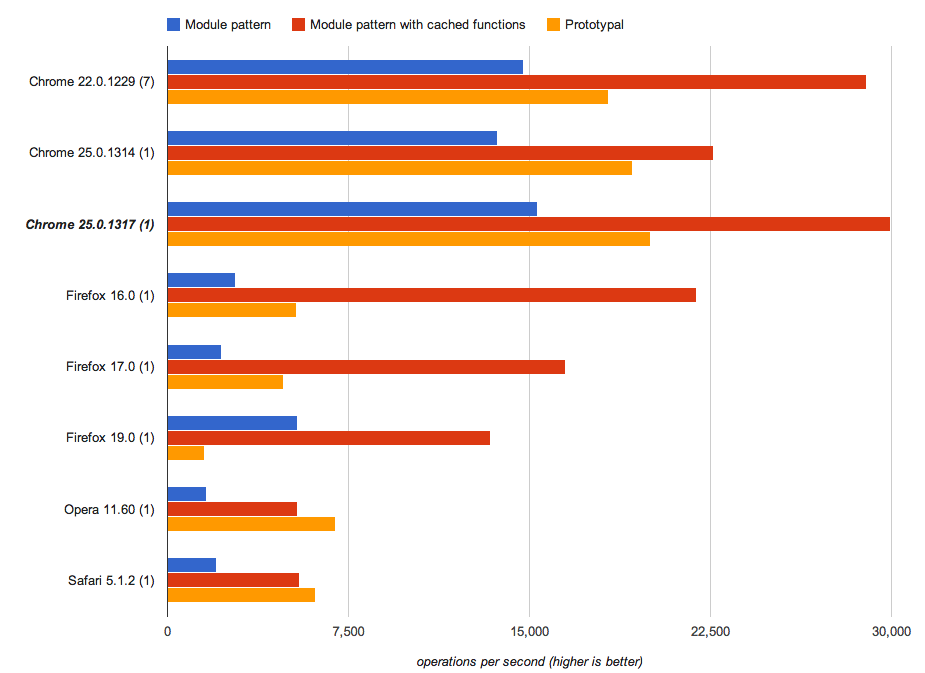

Let's look at other ways to improve performance. You could read somewhere that the prototype model is more optimal than the module model. Or that the frameworks for working with templates are highly optimized. Sometimes this is true, but they are mostly useful because the code becomes more readable. And you also need to do precompilation. Let's check these statements:

It turns out that in this case, the advantages in speed are negligible. These things are not used because of speed, but because of readability, inheritance model and maintainability.

More difficult problems are drawing pictures on canvas and working with pixels. Always check what speed tests do before using them. It is possible that their checks and limitations will be so artificial that they will not be useful to you in the world of real applications. All optimization is better to test in the whole ready-made code.

We will not give absolutely all the advice, but dwell on the most needed.

- Some models interfere with optimization, for example, a bunch of try-catch. Details about which functions may or may not be optimized can be obtained from the d8 utility using the command --trace-opt file.js

- try to keep your functions monomorphic, i.e. so that variables (including properties, arrays, and function parameters) always contain only objects from the same hidden class. For example, do not do this:

- do not boot from uninitialized or deleted elements

- do not write huge functions, because they are harder to optimize

- use an array to store a bunch of numbers or a list of objects of the same type

- if semantics require an object with properties (of different types), use an object. It is quite memory efficient, and quite fast.

- for elements with integer indices, the iteration will be faster than for the properties of the object

- Object properties are complicated: they can be created through setters and can differ in enumerability and writability. Array elements cannot be configured this way; they either exist or they do not. From the engine's point of view this helps optimize performance, especially if the array contains numbers. For example, when working with vectors, use an array instead of an object with properties x, y, z.
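
As a minimal sketch of the vector advice, the snippet below stores 3D vectors as plain arrays of numbers. The helper name addVectors and the sample values are made up for illustration.

```javascript
// Hypothetical helper: adds two 3-component vectors stored as plain arrays.
function addVectors(a, b) {
  return [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
}

var position = [1, 2, 3];
var velocity = [0.5, 0, -1];
var next = addVectors(position, velocity);
console.log(next); // [ 1.5, 2, 2 ]
```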

There is one major difference between arrays and objects in JS: the length property. If you keep track of that value yourself, objects will be about as fast as arrays.

Create objects through a constructor. Then all of the objects share one hidden class. This is also slightly faster than Object.create().
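
A small sketch of what this can look like, assuming a hypothetical Point type: the constructor assigns the same properties in the same order for every instance, which is the kind of stable layout the hidden-class advice is aiming for.

```javascript
// Hypothetical Point type: every instance gets x and y, in that order.
function Point(x, y) {
  this.x = x;
  this.y = y;
}

var p1 = new Point(1, 2);
var p2 = new Point(3, 4);

// Both objects expose the same keys in the same order.
var sameLayout = Object.keys(p1).join(',') === Object.keys(p2).join(',');
console.log(sameLayout); // true
```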

There are no restrictions on the number of different object types or on their complexity (within reason: long prototype chains hurt, and objects with only a few properties get a special representation that is slightly faster than the one used for larger objects). For hot objects, try to keep inheritance chains short and the number of properties small.

Copying objects is a common problem. Be careful when copying large things, as this is usually slow. for..in loops are an especially bad choice for this, because they are slow in every engine.

When you really need to copy an object quickly, use an array or a dedicated function that copies each property directly. This is the fastest way.
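
A dedicated copy function of this kind could look like the sketch below. The property names foo and bar and the constructor name Clone are placeholders; a real version would list the properties of the object being copied.

```javascript
// Hypothetical copy constructor for objects known to have `foo` and `bar`.
function Clone(original) {
  this.foo = original.foo;
  this.bar = original.bar;
}

var original = { foo: 1, bar: 'two' };
var copy = new Clone(original);
console.log(copy.foo, copy.bar); // 1 two
```

Note that this is a shallow copy: nested objects would still be shared between the original and the copy.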

Caching functions when you use the module pattern can improve performance. The variants of the pattern that you have probably seen most likely run more slowly, because they create new copies of the member functions every time.

Here is a test for prototype performance versus modules:
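
The variants such a test compares might look like the following sketch. The names Klass1, Klass2, Klass3 and cachedFoo are illustrative; the point is only how many copies of foo each pattern creates.

```javascript
// Prototype pattern: the method lives once on the prototype.
function Klass1() {}
Klass1.prototype.foo = function () { return 'foo'; };

// Module pattern: every call creates a fresh copy of foo.
function Klass2() {
  var foo = function () { return 'foo'; };
  return { foo: foo };
}

// Module pattern with a cached function: all instances share one function.
var cachedFoo = function () { return 'foo'; };
function Klass3() {
  return { foo: cachedFoo };
}

console.log(new Klass1().foo === new Klass1().foo); // true
console.log(Klass2().foo === Klass2().foo);         // false
console.log(Klass3().foo === Klass3().foo);         // true
```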

If you do not need a class, do not create one. Here is an example of how you can improve performance by getting rid of class overhead: jsperf.com/prototypal-performance/54.

Do not delete array elements. If holes form in the array, V8 switches to dictionary mode for it, which makes the script even slower.

Array literals are useful because they give V8 a hint about the types and number of elements in the array. They are suitable for small and medium arrays.

Do not mix different types in the same array (var arr = [1, "1", undefined, true, "true"]).

Testing the performance of mixed types

It can be seen from the test that the array of integers works the fastest.

In sparse arrays, access to elements is slower: V8 does not allocate memory for all elements if only a few are used. It manages such an array with a dictionary, which saves memory but costs speed.

Testing Sparse Arrays

Avoid holey arrays, which result from deleting elements or from assigning a[x] = foo where x > a.length. Deleting even a single element slows down work with the array.
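
To make the two sources of holes concrete, here is a small sketch with made-up arrays. It only shows how holes appear and one way to avoid them (splice); it does not measure speed.

```javascript
var holey = [1, 2, 3];
delete holey[1];            // leaves a hole at index 1
console.log(1 in holey);    // false
console.log(holey.length);  // 3

var sparse = [];
sparse[1000] = 'x';         // indices 0..999 are holes
console.log(sparse.length); // 1001

// If an element has to go away, splice keeps the array dense.
var dense = [1, 2, 3];
dense.splice(1, 1);
console.log(dense);         // [ 1, 3 ]
```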

Holey array test

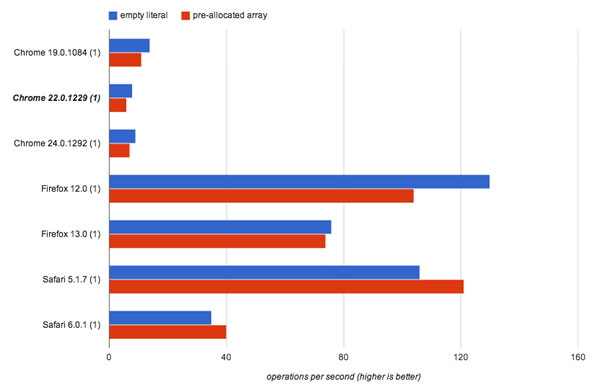

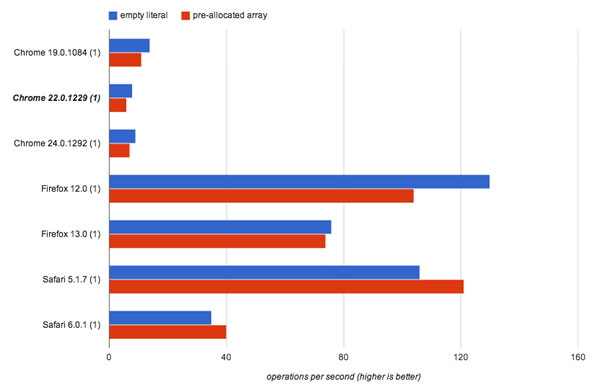

Do not pre-allocate large arrays (more than 64K elements); grow them as you go. Nitro (Safari) handles pre-allocated arrays better, but in other engines (V8, SpiderMonkey) not pre-allocating is more efficient.

Pre-allocated array test

For web applications, speed is what matters. Users do not like to wait, so it is critical to try to squeeze all the possible speed out of the script. This is a rather difficult task, and here are our recommendations for its implementation:

- measure (find bottlenecks)

- understand (find what the problem is)

- fix

A common way to measure speed is to time the code and compare the results. One comparison model was proposed by the jsPerf team and is used by SunSpider and Kraken:

The code is placed in a loop and executed several times, then the start time is subtracted from the end time.
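
The loop-and-subtract approach described here can be sketched as follows. The function snippet and the iteration count are placeholders, and this is only an illustration of the idea, not the code of any particular benchmark suite.

```javascript
// Hypothetical snippet under test.
function snippet() {
  return Math.sqrt(12345);
}

var iterations = 1000;
var start = new Date();
while (iterations--) {
  snippet();
}
var totalTime = new Date() - start; // elapsed milliseconds
console.log('Total time: ' + totalTime + ' ms');
```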

But this approach is too simple, especially for testing across different browsers or environments. Even garbage collection can affect the results. Keep this in mind even when using window.performance.

For a serious dive into code testing, I recommend reading JavaScript Benchmarking.

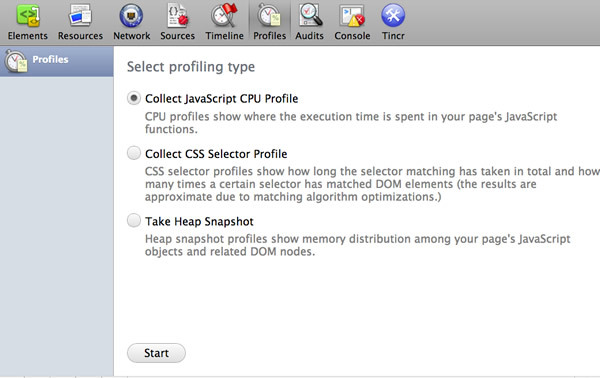

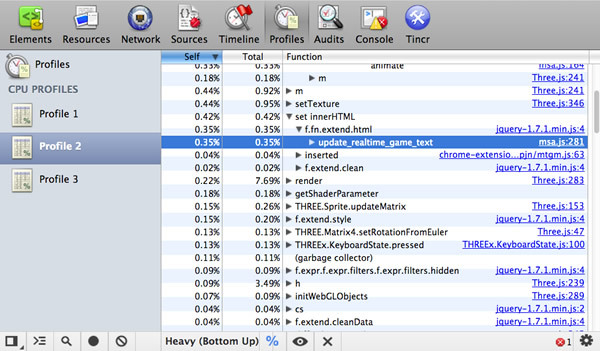

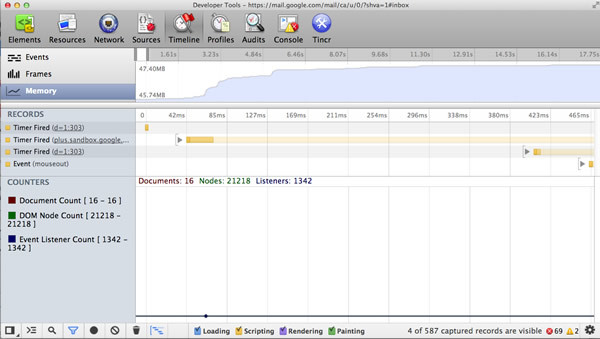

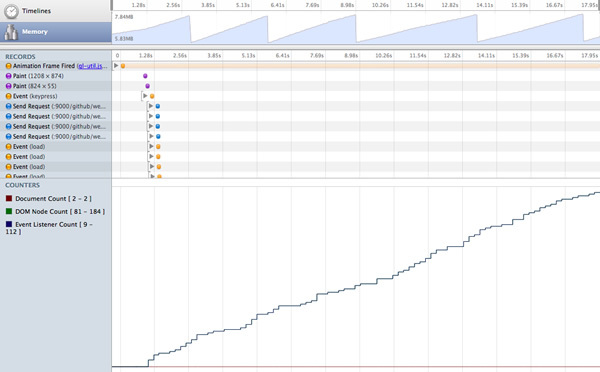

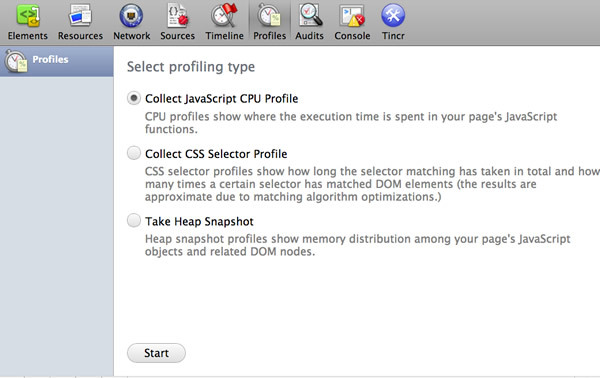

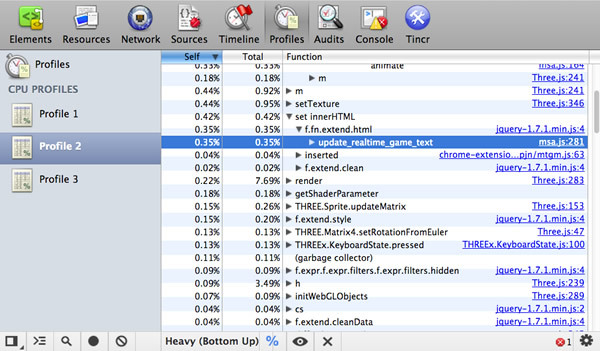

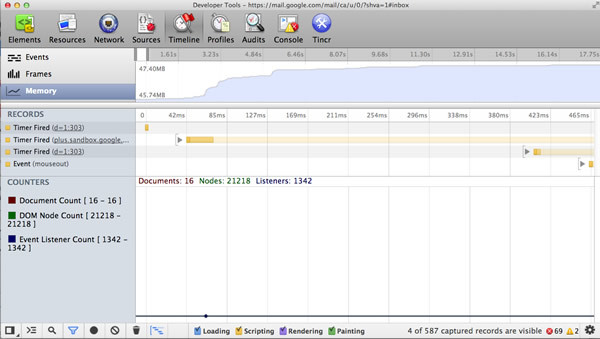

Chrome Developer Tools supports profiling. It can be used to find out which functions consume the most time and optimize them.

Profiling begins by establishing a baseline for the speed of your code; the Timeline is used for this. It shows how long our code took to run. The Profiles tab describes in more detail what is happening in the application. The JavaScript CPU profile shows how much CPU time the code took, the CSS selector profile shows how much time was spent processing selectors, and heap snapshots show memory usage.

Using these tools, you can isolate, tweak, and reprofile the code, measuring how the execution of the program changes.

Good profiling instructions are here: JavaScript Profiling With The Chrome Developer Tools.

Ideally, profiling should not be affected by installed extensions and programs, so run Chrome with the --user-data-dir <empty directory> option.

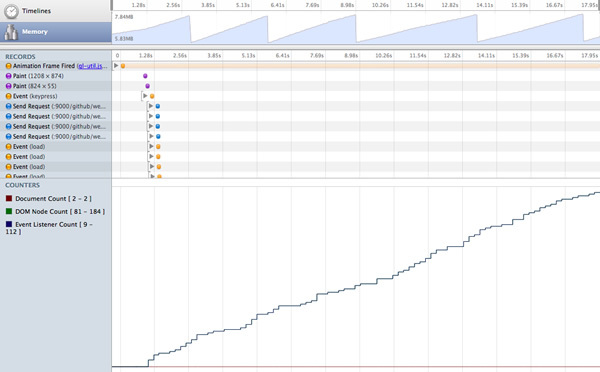

At Google, Chrome Developer Tools are actively used by projects like Gmail to detect and fix leaks.

Some of the metrics our teams pay attention to are private memory usage, JS heap size, number of DOM nodes, storage clearing, event handler count, and garbage collection. Those familiar with event-driven architectures may be interested to know that the most common problems we encountered were a listen() without a matching unlisten() (Closure) and a missing dispose() for objects that create event handlers.
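
The listen/unlisten pairing can be illustrated with the standard EventTarget API instead of Closure's own functions. The ClickCounter type below is hypothetical; it registers a handler in its constructor and undoes that registration in dispose().

```javascript
// Hypothetical widget that registers a handler and can undo the registration.
function ClickCounter(target) {
  var self = this;
  this.count = 0;
  this.target = target;
  this.onClick = function () { self.count++; };
  target.addEventListener('click', this.onClick);
}

// dispose() removes the handler so the target no longer references the widget.
ClickCounter.prototype.dispose = function () {
  this.target.removeEventListener('click', this.onClick);
  this.target = null;
};

var target = new EventTarget();
var counter = new ClickCounter(target);
target.dispatchEvent(new Event('click'));
counter.dispose();
target.dispatchEvent(new Event('click')); // no longer counted
console.log(counter.count); // 1
```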

There is a wonderful presentation of the 3 Snapshot technique that helps you find leaks through DevTools.

The point of the technique is that you record several actions in your application, start garbage collection, check if the number of DOM nodes returns to the expected value, and then analyze three snapshots of the heap to determine if there are leaks.

Memory management matters in modern single-page applications (built with frameworks such as AngularJS, Backbone, or Ember), because the page is never reloaded. Memory leaks can therefore show up quickly. This is a big trap for such applications, because memory is limited and the applications run for a long time (email clients, social networks). With great power comes great responsibility.

In Backbone, make sure you get rid of old views and references through dispose(). This function was added recently; it removes all handlers added in the view's events object, as well as any collection listeners where the view is passed as the third argument (the callback context). dispose() is also called by the view's remove() function, which takes care of most simple memory cleanup. In Ember, clean up observers when they detect that an element has been removed from the view.

Advice from Derick Bailey:

In this article, Derick describes many memory errors when working with Backbone.js, and also offers solutions to these problems.

There is also a great tutorial on debugging leaks in Node.

Reflows (layout recalculations) block the page for the user, so you need to figure out how to reduce reflow time. Methods that trigger a reflow should be batched in one place and used sparingly. Touch the live DOM as little as possible. To do this, use DocumentFragment, a way to isolate part of a document tree. Instead of constantly adding nodes to the DOM, we can build everything we need in a fragment and then perform a single insert into the DOM.

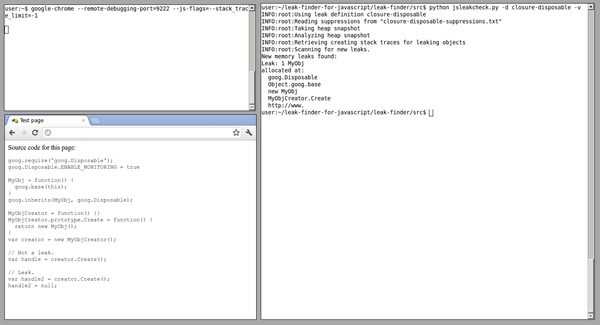

Let's make a function that adds 20 divs to an element. Simply appending each div one by one will cause 20 reflows.

Instead, you can create a DocumentFragment, add the divs to it, and then add it to the DOM through appendChild. All the children of the fragment are then added to the page in a single reflow.
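
A sketch of the fragment-based version is shown below. The function name addDivs and its two parameters are assumptions made for illustration; passing the document in explicitly just keeps the function free of globals.

```javascript
// `doc` is a Document and `element` is the container node (illustrative names).
function addDivs(doc, element) {
  var fragment = doc.createDocumentFragment();
  for (var i = 0; i < 20; i++) {
    var div = doc.createElement('div');
    div.textContent = 'Item ' + i;
    fragment.appendChild(div);   // built off-document
  }
  element.appendChild(fragment); // a single insertion into the live DOM
}

// Usage in a browser:
// addDivs(document, document.body);
```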

For more information, see Make the Web Faster, JavaScript Memory Optimization, and Finding Memory Leaks.

To help with leak detection, a utility was developed for the Chrome Developer Tools that works through the remote protocol, takes heap snapshots, and finds out which objects are causing the leak.

I recommend reading a post on this topic or visiting the project page.

Optimization Tracking:

More details:

trace-opt: logs the names of optimized functions and shows where the optimizer skipped code it could not handle;

trace-deopt: logs the code that had to be deoptimized while running;

trace-gc: logs every garbage collection.

Optimized functions are marked with an asterisk (*), and non-optimized ones with a tilde (~).

For the juicy details about the flags and V8 internals, read Vyacheslav Egorov's post.

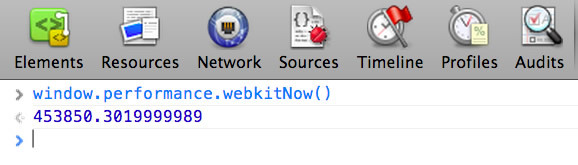

High Resolution Time (HRT) is a JS interface for accessing a timer with sub-millisecond resolution that is not affected by changes to the user's system clock. It is useful for writing performance tests.

It is available in Chrome (stable) as window.performance.webkitNow(), and in Chrome Canary without the prefix as window.performance.now(). Paul Irish wrote about this in detail in his post on HTML5Rocks.
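
A minimal timing sketch, assuming the environment exposes the unprefixed performance.now() (as current browsers and recent Node versions do). The workload function is made up for illustration.

```javascript
// Hypothetical workload to time.
function workload() {
  var total = 0;
  for (var i = 0; i < 100000; i++) {
    total += i;
  }
  return total;
}

var t0 = performance.now();
var result = workload();
var t1 = performance.now();
var elapsed = t1 - t0; // fractional milliseconds
console.log('workload took ' + elapsed.toFixed(3) + ' ms');
```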

If we need to measure an application's performance on the web, the Navigation Timing API will help. It provides accurate and detailed measurements taken while the page loads. It is available through window.performance.timing, which can be used directly in the console.

You can learn a lot from this data. For example: network latency is responseEnd - fetchStart; the time the page took to load after it was received from the server is loadEventEnd - responseEnd; and the time from the start of navigation to the end of page load is loadEventEnd - navigationStart.
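
The three differences above can be wrapped in a small helper. The function name summarizeTiming and the sample numbers are made up; in a browser you would pass window.performance.timing instead of the sample object.

```javascript
// `timing` is expected to look like window.performance.timing.
function summarizeTiming(timing) {
  return {
    networkLatency: timing.responseEnd - timing.fetchStart,
    loadAfterResponse: timing.loadEventEnd - timing.responseEnd,
    totalNavigation: timing.loadEventEnd - timing.navigationStart
  };
}

// Made-up sample values in milliseconds, for illustration only.
var sample = { navigationStart: 1000, fetchStart: 1010, responseEnd: 1200, loadEventEnd: 1900 };
var summary = summarizeTiming(sample);
console.log(summary);
// { networkLatency: 190, loadAfterResponse: 700, totalNavigation: 900 }
```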

For details, see Measuring Page Load Speed With Navigation Timing.

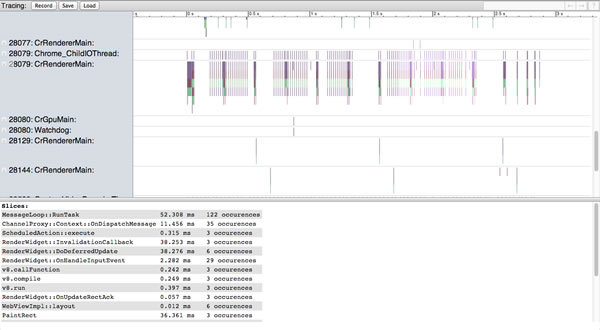

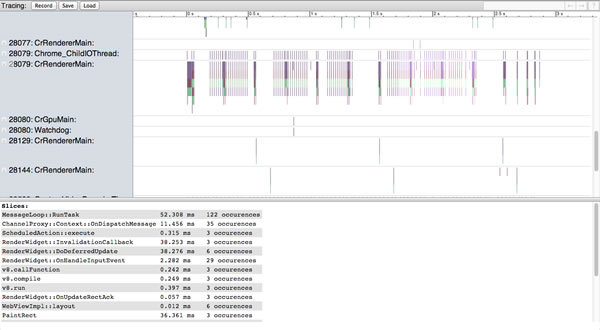

about:tracing in Chrome shows intimate details about browser performance, recording all of its activity in every thread, tab, and process.

Here you can see all the details needed to profile a script and adjust your JS execution to optimize loading.

There is a good article on using about:tracing to profile WebGL games.

about:memory in Chrome is also useful: it shows how much memory each tab uses, which can help you find leaks.

In the amazing and mysterious world of JS engines, there are many pitfalls related to speed. There is no universal recipe for improving performance. By combining different optimization techniques and testing applications in a real environment, you can see how your application needs to be optimized. Understanding how engines process and optimize your code can help you tune your applications. Measure, understand, fix, and repeat.

Do not forget about optimization, but do not pursue micro-optimization at the expense of convenience. Think about which optimizations matter for the application and which it can do without.

Keep in mind that as JS engines get faster, the next bottleneck is the DOM. Reflows and repaints also need to be minimized: touch the DOM only when absolutely necessary. Do not forget about the network either. HTTP requests should be minimized and cached, especially for mobile applications.

Whichever engine it is, V8, SpiderMonkey (Firefox), Carakan (Opera), Chakra (IE) or something else, knowing its internals will help you optimize the performance of your applications. I am not urging you to optimize for a single browser or engine, however; do not do that.

Ask yourself:

- Can something in my code be made more efficient?

- What kinds of optimization do popular JS engines perform?

- What can the engine not compensate for, and can garbage collection clean up everything I expect it to?

There are many pitfalls related to efficient memory usage and performance, and in this article we will look at some approaches that have proven themselves in tests.

And how does JS work in V8?

Although it is possible to develop large applications without a proper understanding of the JS engine, any car owner will tell you that they have looked under the hood at least once. Since I like the Chrome browser, I will talk about its JavaScript engine. V8 consists of several main parts.

- A base compiler that processes JS and generates machine code before it is executed, instead of executing bytecode or simply interpreting it. This code is usually not highly optimized.

- V8 represents objects in an object model. In JS, objects are implemented as associative arrays, but in V8 they are represented by hidden classes, an internal type system for optimized lookups.

- A run-time profiler that monitors the running system and identifies "hot" functions (code that takes a long time to execute).

- An optimizing compiler that recompiles and optimizes hot code and performs other optimizations such as inlining.

- V8 supports deoptimization: the optimizing compiler bails out if it discovers that it made overly optimistic assumptions about the code.

- Garbage collection. Understanding how it works is as important as understanding optimization.

Garbage collection

This is a form of memory management. The collector tries to return the memory occupied by objects that are no longer in use. In a language that supports garbage collection, objects that are still referenced are not cleaned up.

You almost never need to remove object references manually. Simply by placing variables where they are needed (ideally as local as possible: inside the functions that use them rather than in an outer scope), things should just work.

You cannot force garbage collection from JS. Nor should you need to: the process is controlled by the runtime, which knows best when and what to clean up.

Errors with deleting object references

In some online debates about reclaiming memory in JS, the delete keyword comes up. Although it was originally intended for removing keys, some developers believe it can be used to force the removal of references. Avoid using delete. In the example below, delete o.x does more harm than good, because it changes the hidden class of o and turns it into a slow object.

    var o = { x: 1 };
    delete o.x; // true
    o.x; // undefined

You will certainly find delete used in many popular JS libraries, since it does have a purpose in the language. The main takeaway is that you should not change the structure of "hot" objects at run time. JS engines can detect such "hot" objects and try to optimize them. This is easier when the structure of the object does not change much, and delete causes exactly such changes.

There is also a misunderstanding about how null works. Setting an object reference to null does not nullify the object. Writing o.x = null is better than using delete, but it is probably not even necessary.

    var o = { x: 1 };
    o = null;
    o; // null
    o.x // TypeError

If this reference was the last reference to the object, the garbage collector will collect the object. If it was not the last reference, the object is still reachable and the collector will not collect it.

One more note: global variables are not cleaned up by the garbage collector while the page is alive. No matter how long the page has been open, variables in the scope of the global object will persist.

    var myGlobalNamespace = {};

Global variables are cleaned up when you reload the page, navigate to another page, close the tab, or exit the browser. Function-scoped variables are cleaned up when the scope goes away: when the function exits and there are no more references to them.

Simple rules

For garbage collection to run as early as possible and collect as many objects as possible, do not hold on to objects you no longer need. This usually happens automatically, but here is what to keep in mind:

- A good alternative to removing references manually is to use variables with the right scope. Instead of setting a global variable to null, use a function-local variable, which disappears along with its scope. The code gets cleaner and there is less to worry about.

- Make sure that you remove event handlers when they are no longer needed, especially before deleting the DOM elements they are attached to.

- When using a local data cache, be sure to clear it or use an aging mechanism so that it does not hold on to large chunks of unneeded data.
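
One possible aging mechanism for a cache is a simple size limit that evicts the oldest entry. The BoundedCache type below is a hypothetical sketch built on the standard Map, which iterates its keys in insertion order.

```javascript
// Hypothetical bounded cache: evicts the oldest entry once maxEntries is reached.
function BoundedCache(maxEntries) {
  this.maxEntries = maxEntries;
  this.entries = new Map(); // Map iterates keys in insertion order
}

BoundedCache.prototype.set = function (key, value) {
  if (!this.entries.has(key) && this.entries.size >= this.maxEntries) {
    var oldestKey = this.entries.keys().next().value;
    this.entries.delete(oldestKey); // drop the reference so it can be collected
  }
  this.entries.set(key, value);
};

BoundedCache.prototype.get = function (key) {
  return this.entries.get(key);
};

var cache = new BoundedCache(2);
cache.set('a', 1);
cache.set('b', 2);
cache.set('c', 3); // evicts 'a'
console.log(cache.get('a')); // undefined
console.log(cache.get('c')); // 3
```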

Functions

Now let's turn to functions. As we already said, garbage collection frees blocks of memory (objects) that can no longer be reached. Here are a few examples to illustrate.

    function foo() {
        var bar = new LargeObject();
        bar.someCall();
    }

When foo returns, the object that bar points to will be cleaned up by the garbage collector, since nothing references it any longer.

Compare with:

    function foo() {
        var bar = new LargeObject();
        bar.someCall();
        return bar;
    }

    // somewhere else in the code
    var b = foo();

Now we have a reference to the object, which remains until the code that called the function assigns something else to b (or until b goes out of scope).

Closures

When a function returns an inner function, the inner function has access to the outer scope even after the outer function has finished. This is a closure: an expression that can work with variables bound in a specific context. For instance:

    function sum(x) {
        function sumIt(y) {
            return x + y;
        }
        return sumIt;
    }

    // Usage
    var sumA = sum(4);
    var sumB = sumA(3);
    console.log(sumB); // Logs 7

The garbage collector cannot clean up the created function object, since it is still reachable, for example via sumA(n). Here is another example. Can we access largeStr?

    var a = function () {
        var largeStr = new Array(1000000).join('x');
        return function () {
            return largeStr;
        };
    }();

Yes, through a(), so it is not collected either. How about this one:

    var a = function () {
        var smallStr = 'x';
        var largeStr = new Array(1000000).join('x');
        return function (n) {
            return smallStr;
        };
    }();

We no longer have access to largeStr, so it can be cleaned up.

Timers

One of the worst places to leak is in a loop or in setTimeout()/setInterval(), yet this problem is quite common. Consider an example:

    var myObj = {
        callMeMaybe: function () {
            var myRef = this;
            var val = setTimeout(function () {
                console.log('Time is running out!');
                myRef.callMeMaybe();
            }, 1000);
        }
    };

If we do

    myObj.callMeMaybe();

to start the timer, "Time is running out!" will be displayed every second. If we then do:

    myObj = null;

the timer will keep running anyway. myObj cannot be cleaned up because the closure passed to setTimeout continues to exist. In turn, it holds a reference to myObj through myRef. This is the same as if we had passed the closure to any other function and kept references to it.

Remember that references inside setTimeout/setInterval calls, such as functions, must execute and complete before they can be garbage collected.
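
One way to let such an object go is to keep the timer id and cancel the timer explicitly. The ticker object below is a hypothetical variant of the example above, not part of the original code.

```javascript
// Hypothetical variant of myObj that remembers its timer id.
var ticker = {
  timerId: null,
  ticks: 0,
  start: function () {
    var self = this;
    this.timerId = setTimeout(function tick() {
      self.ticks++;
      self.timerId = setTimeout(tick, 1000);
    }, 1000);
  },
  stop: function () {
    clearTimeout(this.timerId); // cancels the pending callback
    this.timerId = null;
  }
};

ticker.start();
ticker.stop(); // without this, the pending timer would keep `ticker` reachable
```

After stop(), setting the variable to null leaves no timer closure pointing at the object.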

Watch out for performance traps

It is important not to optimize code prematurely. It is easy to get carried away with micro-benchmarks that say N is faster than M in V8, but the real contribution of such things to the finished module can be much smaller than you think.

Let's say we need a module that:

- reads data from a local source that has numeric ids;

- draws a table with this data;

- adds event handlers for clicks on cells.

Questions arise immediately. How should the data be stored? How can the table be drawn efficiently and inserted into the DOM? What is the best way to handle events?

The first, naive approach is to store each piece of data in an object and group the objects into an array. You can use jQuery to iterate over the data and draw the table, and then add it to the DOM. Finally, you can use event binding to add the click behavior.

Here is how NOT to do it:

    var moduleA = function () {
        return {
            data: dataArrayObject,
            init: function () {
                this.addTable();
                this.addEvents();
            },
            addTable: function () {
                for (var i = 0; i < rows; i++) {
                    $tr = $('<tr></tr>');
                    for (var j = 0; j < this.data.length; j++) {
                        $tr.append('<td>' + this.data[j]['id'] + '</td>');
                    }
                    $tr.appendTo($tbody);
                }
            },
            addEvents: function () {
                $('table td').on('click', function () {
                    $(this).toggleClass('active');
                });
            }
        };
    }();

Cheap and cheerful.

However, in this example we only iterate over ids, numeric properties that could be represented more simply as a plain array. In addition, using DocumentFragment and native DOM methods directly is more efficient than using jQuery to build the table, and of course it will be much faster to handle events through the parent element.

jQuery uses DocumentFragment behind the scenes, but in our example the code calls append() in a loop, and each call knows nothing about the others, so the code may not be optimized. That may not be a big deal, but it is better to verify it with a benchmark.

With the following changes we speed up the script.

    var moduleD = function () {
        return {
            data: dataArray,
            init: function () {
                this.addTable();
                this.addEvents();
            },
            addTable: function () {
                var td, tr;
                var frag = document.createDocumentFragment();
                var frag2 = document.createDocumentFragment();
                for (var i = 0; i < rows; i++) {
                    tr = document.createElement('tr');
                    for (var j = 0; j < this.data.length; j++) {
                        td = document.createElement('td');
                        td.appendChild(document.createTextNode(this.data[j]));
                        frag2.appendChild(td);
                    }
                    tr.appendChild(frag2);
                    frag.appendChild(tr);
                }
                tbody.appendChild(frag);
            },
            addEvents: function () {
                $('table').on('click', 'td', function () {
                    $(this).toggleClass('active');
                });
            }
        };
    }();

Let's look at other ways to improve performance. You may have read somewhere that the prototype pattern is more efficient than the module pattern, or that templating frameworks are highly optimized. Sometimes this is true, but they are mostly useful because they make the code more readable. Also, precompile your templates. Let's check these claims:

moduleG = function () {};

moduleG.prototype.data = dataArray;

moduleG.prototype.init = function () {

this.addTable();

this.addEvents();

};

moduleG.prototype.addTable = function () {

var template = _.template($('#template').text());

var html = template({'data' : this.data});

$tbody.append(html);

};

moduleG.prototype.addEvents = function () {

$('table').on('click', 'td', function () {

$(this).toggleClass('active');

});

};

var modG = new moduleG();

It turns out that in this case the speed gains are negligible. These patterns are worth using not for speed but for readability, the inheritance model, and maintainability.

Harder problems include drawing images on canvas and working with pixels. Always check what a benchmark actually measures before relying on it. Its checks and constraints may be so artificial that the results are of no use in real applications. It is better to test every optimization in the context of the complete code.

Optimization Tips for V8

We will not give absolutely all the advice, but dwell on the most needed.

- Some patterns prevent optimization, for example a try-catch block. To find out which functions can or cannot be optimized, run the d8 shell with the --trace-opt flag: d8 --trace-opt file.js

- try to keep your functions monomorphic, i.e. make sure that variables (including properties, arrays, and function parameters) always contain objects of the same hidden class. For example, do not do this:

function add(x, y) {

return x+y;

}

add(1, 2);

add('a','b');

add(my_custom_object, undefined);

- do not load from uninitialized or deleted elements

- do not write huge functions, because they are harder to optimize
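The monomorphism advice above can be sketched as follows. This is only an illustration of the pattern, not a benchmark, and the names `addNumbers` and `concatStrings` are made up for the example. Instead of one `add` that sees numbers, strings, and objects, each helper is only ever called with one kind of value.

```javascript
// Hypothetical helper: only ever called with numbers.
function addNumbers(x, y) {
  return x + y;
}

// Hypothetical helper: only ever called with strings.
function concatStrings(a, b) {
  return a + b;
}

var sum = addNumbers(1, 2);
var text = concatStrings('a', 'b');
```

Whether a given engine actually optimizes these calls depends on the engine and version, so it is worth confirming with a trace or a test.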

Objects or arrays?

- use an array to store a bunch of numbers or a list of objects of the same type

- if semantics require an object with properties (of different types), use an object. It is quite memory efficient, and quite fast.

- for elements with integer indices, the iteration will be faster than for the properties of the object

- object properties are complicated: they can be created with setters and with different enumerability and writability. Array elements cannot be customized this way: they either exist or they do not. From the engine's point of view, this helps optimize performance, especially if the array contains numbers. For example, when working with vectors, use an array instead of an object with properties x, y, z.

There is one major difference between arrays and objects in JS - the length property. If you track this parameter yourself, then the objects will be about as fast as the arrays.
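As an illustration of the vector advice above, here is a small sketch that stores 3D vectors as plain numeric arrays. The helper names `dot` and `scale` are hypothetical and exist only for this example.

```javascript
// A 3D vector is stored as a three-element array of numbers: [x, y, z].
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Returns a new vector; the input array is left untouched.
function scale(v, k) {
  return [v[0] * k, v[1] * k, v[2] * k];
}

var v1 = [1, 2, 3];
var v2 = [4, 5, 6];
var d = dot(v1, v2);        // 1*4 + 2*5 + 3*6 = 32
var doubled = scale(v1, 2); // [2, 4, 6]
```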

Object Tips

Create objects with a constructor function. Then all the objects will share one hidden class. This is also slightly faster than Object.create().

There are no restrictions on the number of different object types or on their complexity, within reasonable limits: long prototype chains are harmful, and objects with few properties are represented by the engine in a slightly different and slightly faster way than large ones. For hot objects, keep inheritance chains short and the number of properties small.
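A minimal sketch of the constructor advice, assuming a made-up `User` type: every property is assigned in the constructor, always in the same order, so that instances have the same layout. Whether the engine really keeps a single hidden class is an internal detail; the code only shows the pattern.

```javascript
// Hypothetical constructor: all properties are assigned up front,
// always in the same order, even when a value is not known yet.
function User(name, age) {
  this.name = name;
  this.age = age;
  this.email = null; // placeholder instead of adding the property later
}

var alice = new User('Alice', 30);
var bob = new User('Bob', 25);

// Filling in a property that already exists does not add a new one.
alice.email = 'alice@example.com';

// Both instances still expose the same properties in the same order.
var sameKeys = Object.keys(alice).join() === Object.keys(bob).join();
```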

Cloning Objects

This is a common problem. Be careful when copying large objects, because it is usually slow. Using for..in loops for this is especially bad, since they are slow in every engine.

When you really need to copy an object quickly, use an array or a dedicated function that copies each property directly. This will be the fastest way:

function clone(original) {

this.foo = original.foo;

this.bar = original.bar;

}

var copy = new clone(original);

Function Caching in the Modular Model

This technique can improve performance. Variations of the example below that you have probably seen are most likely slower, because they create the member functions every time.

Here is a test for prototype performance versus modules:

// Prototype pattern

Klass1 = function () {}

Klass1.prototype.foo = function () {

log('foo');

}

Klass1.prototype.bar = function () {

log('bar');

}

// Module pattern

Klass2 = function () {

var foo = function () {

log('foo');

},

bar = function () {

log('bar');

};

return {

foo: foo,

bar: bar

}

}

// Module pattern with cached functions

var FooFunction = function () {

log('foo');

};

var BarFunction = function () {

log('bar');

};

Klass3 = function () {

return {

foo: FooFunction,

bar: BarFunction

}

}

// Iteration tests

// Prototypes

var i = 1000,

objs = [];

while (i--) {

var o = new Klass1()

objs.push(new Klass1());

o.bar;

o.foo;

}

// Modules

var i = 1000,

objs = [];

while (i--) {

var o = Klass2()

objs.push(Klass2());

o.bar;

o.foo;

}

// Modules with cached functions

var i = 1000,

objs = [];

while (i--) {

var o = Klass3()

objs.push(Klass3());

o.bar;

o.foo;

}

// See the test for details

If you do not need a class, do not create one. Here is an example of how performance can be improved by getting rid of class overhead: jsperf.com/prototypal-performance/54.

Array Usage Tips

Do not delete elements. If gaps form in the array, V8 switches to dictionary mode for that array, which makes the script slower.

Array literals

They are useful because they give V8 a hint about the types and number of elements in the array. Suitable for small and medium-sized arrays.

// V8 knows that you want a 4-element array of numbers:

var a = [1, 2, 3, 4];

// Don't do this:

a = []; // V8 knows nothing about the array, just like Jon Snow

for(var i = 1; i <= 4; i++) {

a.push(i);

}

Single or mixed types

Do not mix different types in the same array (var arr = [1, "1", undefined, true, "true"]).

Testing the performance of mixed types

It can be seen from the test that the array of integers works the fastest.

Sparse Arrays

Access to elements in such arrays is slower: V8 does not allocate memory for every element when only a few are used. It manages such an array with a dictionary, which saves memory but costs speed.

Testing Sparse Arrays

Holey Arrays

Avoid holey arrays, which result from deleting elements or from assigning a[x] = foo where x > a.length. Removing even a single element slows down work with the array.

Holey array test
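To make the idea of a hole concrete, the sketch below shows the two ways of creating one that are mentioned above, and one way to remove an element without leaving a gap. It only demonstrates the language-level behavior; how much slower a holey array is depends on the engine.

```javascript
// delete leaves a hole: the length stays the same, but index 1 no longer exists.
var holey = [1, 2, 3, 4];
delete holey[1];
var hasHole = !(1 in holey);    // true
var holeyLength = holey.length; // still 4

// splice removes the element and shifts the rest, so no hole is left.
var spliced = [1, 2, 3, 4];
spliced.splice(1, 1);           // spliced is now [1, 3, 4]

// Writing past the end creates holes between the old end and the new index.
var gappy = [1, 2];
gappy[5] = 6;
var gapCount = 0;
for (var i = 0; i < gappy.length; i++) {
  if (!(i in gappy)) {
    gapCount++;                 // counts the missing indices 2, 3 and 4
  }
}
```

Note that splice has its own cost, because it shifts the remaining elements, so for large arrays it is worth measuring both approaches.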

Array pre-filling or on-the-fly filling

Do not pre-allocate large arrays (more than 64K elements); let them grow as you fill them. Nitro (Safari) handles pre-allocated arrays better, but other engines (V8, SpiderMonkey) behave differently.

Prefilled Array Test

// Empty array

var arr = [];

for (var i = 0; i < 1000000; i++) {

arr[i] = i;

}

// Pre-allocated array

var arr = new Array(1000000);

for (var i = 0; i < 1000000; i++) {

arr[i] = i;

}

Application optimization

For web applications, speed is what matters. Users do not like to wait, so it is critical to try to squeeze all the possible speed out of the script. This is a rather difficult task, and here are our recommendations for its implementation:

- measure (find bottlenecks)

- understand (find what the problem is)

- fix

Speed tests (benchmarks)

A common principle for measuring speed is to measure the running time and compare. One comparison model was proposed by the jsPerf team and is used by SunSpider and Kraken:

var totalTime,

start = new Date,

iterations = 1000;

while (iterations--) {

// The code under test goes here

}

// totalTime → the number of milliseconds

// it took to run the code 1000 times

totalTime = new Date - start;

The code is placed in a loop and executed several times, then the start time is subtracted from the end time.

But this is too simple an approach - especially to test work in different browsers or environments. Even garbage collection can affect performance. This should be remembered even when using window.performance.
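A slightly more careful variant of the timing loop above can be sketched like this. It is a rough illustration, not a replacement for a real benchmarking library: the helper name `measure` and its parameters are made up, and taking the median of several runs is just one simple way to reduce the influence of a garbage collection pause in any single run.

```javascript
// Use the high-resolution timer when available, otherwise fall back to Date.now().
var now = (typeof performance !== 'undefined' && typeof performance.now === 'function')
  ? function () { return performance.now(); }
  : function () { return Date.now(); };

// Hypothetical helper: runs `fn` `iterations` times, repeats that `runs` times,
// and returns the median duration of a run in milliseconds.
function measure(fn, iterations, runs) {
  var times = [];
  for (var r = 0; r < runs; r++) {
    var start = now();
    for (var i = 0; i < iterations; i++) {
      fn();
    }
    times.push(now() - start);
  }
  times.sort(function (a, b) { return a - b; });
  return times[Math.floor(times.length / 2)];
}

// Example: time filling a small array.
var medianMs = measure(function () {
  var a = [];
  for (var i = 0; i < 100; i++) {
    a.push(i);
  }
}, 1000, 5);
```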

For a serious dive into code testing, I recommend reading JavaScript Benchmarking.

Profiling

Chrome Developer Tools supports profiling. It can be used to find out which functions consume the most time and optimize them.

Profiling begins with establishing a baseline for the speed of your code, and the Timeline is used for this. It shows how long our code took to run. The Profiles tab describes in more detail what is happening in the application. The JavaScript CPU profile shows how much CPU time the code took, the CSS selector profile shows how much time was spent processing selectors, and heap snapshots show memory usage.

Using these tools, you can isolate, tweak, and reprofile the code, measuring how the execution of the program changes.

Good profiling instructions are here: JavaScript Profiling With The Chrome Developer Tools.

Ideally, profiling should not be affected by installed extensions and programs, so run Chrome with the --user-data-dir <empty directory> option.

Avoiding memory leaks - the technique of three memory snapshots

At Google, the Chrome Developer Tools are actively used by teams such as Gmail to detect and fix leaks.

Some of the statistics our teams pay attention to are private memory usage, JS heap size, DOM node counts, storage clearing, event listener counts, and garbage collection. Those familiar with event-driven architectures may be interested to know that the most common problems we ran into were listen() calls without a matching unlisten() (Closure) and missing dispose() calls for objects that create event listeners.
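The listen()/unlisten() pairing can be illustrated with a small self-contained sketch. The `Emitter` and `Widget` types below are hypothetical stand-ins written for this example, not the Closure Library API; the point is only that an object which subscribes to events keeps a reference to its handler so that dispose() can remove it again.

```javascript
// Minimal hypothetical event source, standing in for a DOM node or library object.
function Emitter() {
  this.handlers = [];
}
Emitter.prototype.listen = function (fn) {
  this.handlers.push(fn);
};
Emitter.prototype.unlisten = function (fn) {
  var index = this.handlers.indexOf(fn);
  if (index !== -1) {
    this.handlers.splice(index, 1);
  }
};

// A component that remembers what it subscribed to, so dispose() can undo it.
function Widget(source) {
  var self = this;
  this.source = source;
  this.clicks = 0;
  this.onEvent = function () { self.clicks++; };
  source.listen(this.onEvent);
}
Widget.prototype.dispose = function () {
  // Without this call the source would keep the handler, and through its
  // closure the whole widget, alive.
  this.source.unlisten(this.onEvent);
  this.source = null; // drop our own reference to the source as well
};

var source = new Emitter();
var widget = new Widget(source);
var before = source.handlers.length; // 1
widget.dispose();
var after = source.handlers.length;  // 0
```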

There is a wonderful presentation of the 3 Snapshot technique that helps you find leaks through DevTools.

The point of the technique is that you record several actions in your application, start garbage collection, check if the number of DOM nodes returns to the expected value, and then analyze three snapshots of the heap to determine if there are leaks.

Memory management in single-page applications

In modern single-page applications (built with frameworks such as AngularJS, Backbone, or Ember), memory management is important because the page is never reloaded. Memory leaks can therefore show up quickly. This is a big trap for such applications, because memory is limited and the applications run for a long time (email clients, social networks). With great power comes great responsibility.

In Backbone, make sure you get rid of old views and references through dispose(). This function was added recently; it removes all handlers added to the view's events object, as well as any collection or model listeners where the view is passed as the third argument (the callback context). dispose() is also called by the view's remove() function, which takes care of most basic memory cleanup needs. Ember cleans up observers when it detects that an element has been removed from view.

Advice from Derick Bailey:

Understand how events work in terms of references, and otherwise follow the standard rules for managing memory, and everything will be fine. If you load data into a Backbone collection full of User objects and want that collection cleaned up so that it no longer uses memory, you must remove all references to the collection and to each object in it. Once all references are removed, everything will be cleaned up.

In this article, Derick describes many memory pitfalls in Backbone.js and offers solutions to them.

There is also another great tutorial on debugging leaks in Node.

Minimizing recalculation of element positions and sizes (reflows) when updating the page

Such recalculations block the page for the user, so you need to find ways to reduce the time they take. Group the methods that trigger a reflow in one place and call them rarely. Touch the DOM directly as little as possible. To do this, use DocumentFragment, a way to hold part of a document tree separately from the page. Instead of constantly adding nodes to the DOM, we can build everything we need in a fragment and then perform a single insert into the DOM.

Let's write a function that adds 20 divs to an element. Appending each div directly will cause 20 reflows.

function addDivs(element) {

var div;

for (var i = 0; i < 20; i ++) {

div = document.createElement('div');

div.innerHTML = 'Heya!';

element.appendChild(div);

}

}

Instead, you can use a DocumentFragment, add the divs to it, and then add it to the DOM through appendChild. All the children of the fragment are then added to the page in a single recalculation (reflow).

function addDivs(element) {

var div;

// Creates a new empty DocumentFragment.

var fragment = document.createDocumentFragment();

for (var i = 0; i < 20; i ++) {

div = document.createElement('div');

div.innerHTML = 'Heya!';

fragment.appendChild(div);

}

element.appendChild(fragment);

}

For more information, see Make the Web Faster, JavaScript Memory Optimization, and Finding Memory Leaks.

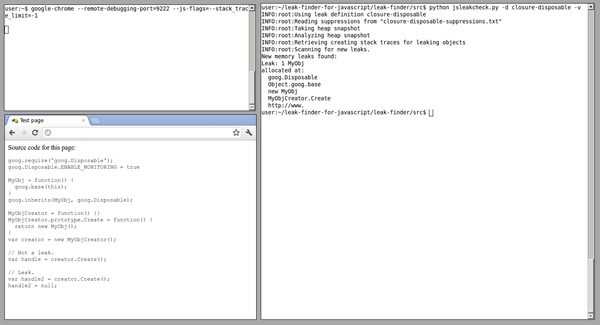

JavaScript memory leak detector

To help with leak detection, a utility was developed that works with the Chrome Developer Tools through the remote inspection protocol, takes heap snapshots, and finds out which objects are causing the leak.

I recommend reading the post on this topic or visiting the project page.

V8 flags for debugging optimizations and garbage collection

Optimization Tracking:

chrome.exe --js-flags="--trace-opt --trace-deopt"

More details:

trace-opt: logs the names of optimized functions and shows the code the optimizer skipped because it could not handle it;

trace-deopt: logs the code that had to be deoptimized at run time;

trace-gc: logs every stage of garbage collection.

Optimized functions are marked with an asterisk (*), and non-optimized ones with a tilde (~).

For the juicy details about the flags and the internals of V8, read Vyacheslav Egorov's post.

High Resolution Time and Navigation Timing API

High Resolution Time (HRT) is a JS interface that gives access to a timer with sub-millisecond resolution that does not depend on changes to the user's system clock. It is useful for writing performance tests.

It is available in Chrome (stable) as window.performance.webkitNow(), and in Chrome Canary without the prefix as window.performance.now(). Paul Irish wrote about this in detail in his post on HTML5Rocks.

If we need to measure an application's performance on the web, the Navigation Timing API will help. It provides accurate and detailed measurements taken while the page loads. It is available through window.performance.timing, which can be used directly in the console.

From this data you can learn a lot of useful things. For example: the network latency, responseEnd - fetchStart; the time it took to load the page after it was received from the server, loadEventEnd - responseEnd; and the time between the start of navigation and the end of page load, loadEventEnd - navigationStart.
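The three durations listed above can be computed with a few subtractions. The sketch below is only an illustration: `pageLoadMetrics` and its result field names are made up, and the sample object contains invented numbers so the arithmetic can be followed. In a browser you would pass window.performance.timing instead.

```javascript
// Derives the three durations described above from a timing object.
// All values are timestamps in milliseconds, so the results are in milliseconds too.
function pageLoadMetrics(timing) {
  return {
    networkLatency: timing.responseEnd - timing.fetchStart,
    loadAfterResponse: timing.loadEventEnd - timing.responseEnd,
    totalLoadTime: timing.loadEventEnd - timing.navigationStart
  };
}

// Made-up sample values, only to show the arithmetic.
var sample = {
  navigationStart: 1000,
  fetchStart: 1010,
  responseEnd: 1200,
  loadEventEnd: 1900
};

var metrics = pageLoadMetrics(sample);
// metrics.networkLatency    is 1200 - 1010 = 190
// metrics.loadAfterResponse is 1900 - 1200 = 700
// metrics.totalLoadTime     is 1900 - 1000 = 900
```

Keep in mind that loadEventEnd is 0 until the load event has finished, so on a real page the values should be read after loading is complete.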

For details, see Measuring Page Load Speed With Navigation Timing .

about:memory and about:tracing

about:tracing in Chrome shows intimate details about the browser's performance, recording all of its activity in every thread, tab, and process.

Here you can see all the details needed to profile your script and adjust JS execution or optimize asset loading.

There is a good article on using about:tracing to profile WebGL games.

about:memory in Chrome is also useful: it shows how much memory each tab uses, which can help in finding leaks.

Conclusion

In the amazing and mysterious world of JS engines, there are many pitfalls associated with speed. There is no universal recipe for improving performance. By combining different optimization techniques and testing applications in a real environment, you can see how you need to optimize your application. Understanding how engines process and optimize your code can help you tweak your applications. Measure, understand, correct and repeat.

Do not forget about optimization, but do not pursue micro-optimization at the cost of convenience. Think about which optimizations matter for the application and which it can do without.

Keep in mind that as JS engines get faster, the next bottleneck is the DOM. Recalculation and redrawing also need to be minimized, so touch the DOM only when absolutely necessary. Do not forget about the network. HTTP requests also need to be minimized and cached, especially for mobile applications.