Fastware

Andrei Alexandrescu is a real living legend. This is a person who has made a significant contribution to the history of modern programming languages and techniques of generalized and metaprogramming. How many copies were broken in the discussions of "Modern Design in C ++" and "Coding Standards 101" (written with the Exceptional C ++ Emblem Satter), and other books and articles . Being a co-author of D , he had the opportunity not only to theorize, but also to make the dream a reality - and, characteristically, he embodied it .

Now you are holding his reportfrom the 2018 Piter DotNext conference, which describes modern optimization technologies. What does it have .NET? This is a fundamental report from a person who has been optimizing all his life. If performance is important to you, you need to watch it (or read this article). Welcome under the cut!

I would like to discuss a few benchmarking topics with you. First, let's repeat some basic things. Amdal's Law- part of the classic computer science, it is mainly used in parallel computing, but it works in any complex system. If we want to improve the performance of a certain system, then we must begin where the main problems of this system are concentrated. The law itself is obvious: if a component is 20% of the system, then the maximum improvement in system performance, which can be achieved by optimizing the work of only this component, is 20%. I too often have to meet with people (our readers, of course, do not belong to them) doing things like optimizing command-line parsing. These operations take the first 10 microseconds of the work of your program, and people analyze their algorithmic complexity and are terrified if the time turns out to be quadratic.

As you probably know, before starting the optimization, it is necessary to profile the application, select hot spots in it. It should be said about the law of Ladma(This is not a real last name, but Amdal, read backwards). You need to focus your efforts on the component that leads to the most time consuming. It needs to be taken out of the application, carry out the necessary work, return it back and test it again. The reason for doing this is that, very often, a 20% performance improvement is the result of ten improvements of 2% each. And within the framework of a large system, it is impossible to measure such a small improvement. To do this, the component must be tested in a test suite. Improving the performance of one of the main components of the system by 20% can mean an improvement of 5% for the system as a whole, and for some areas this is an excellent result. Don't forget that optimizations can have a variety of global effects,

A mistake that, I am sure, our readers do not allow, but which in general is quite common: people measure the speed of the debug build. This should never be done. This is similar to getting upset because of the low speed of the snail at the races: it is not intended for such a competition, it has other goals in life. Another error, somewhat less obvious: people first measure the basic indicators of the system, and immediately after that perform benchmarking. But after collecting basic indicators, many resources are heated. For example, open files are buffered and remain in memory (at least under Linux). Thus, the second test will pass faster only because it is launched after the first. This happens even with calls to malloc. After these calls, the system does not return to its original state even if memory free calls are made. The internal configuration, caching, and capabilities used by the memory allocator allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end. allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end. allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end.

For example, in order to completely return the system to its original state, in the case of files, they need to be opened on a separate disk, which after the end of the test must be unmounted (as I understand it, this can be done under Windows). The operation is not easy, but in most cases necessary.

Continuing to talk about errors in optimization, I had to deal with such cases when the cost of printf is included in the test results. There are also procedural errors when changing more than one thing before each measurement, which violates the most basic principles of a scientific experiment, since it turns out that it is not clear what effect of changes you are measuring. Another serious mistake is when some rare cases are optimized, which leads to pessimization in other situations.

Here is an example from Stack Overflow. The author often sorts already sorted data and is surprised, because the `is_sorted 'function is obviously much faster than` sort. Why then in `sort the first line is not` if is_sorted return? You are optimizing an extremely rare case, a completely sorted data, and everyone else who has at least one non-sorted element will have to bear the costs for this optimization. So do not.

I think I don’t have to prove for a long time that today's competing architectures are extremely complex: dynamic frequency changes, interruption by other processes, virtualization, etc. Therefore, to get the same time when measuring is almost impossible, your indicators will always tremble. Therefore, do not rely on things that seem obvious. Say, it may seem obvious to us that a smaller number of instructions means a faster code, and this is not always true. It may also appear that using stored data will always be faster than re-performing calculations, so if you cache the results, you will be fine. As in the previous case, it cannot be unequivocally asserted, just as the opposite cannot be said unconditionally - it all depends on the context. Obviously you should have only one thing: everything needs to be measured.

There are a number of fairly reliable practices, the discussion of which can push you into interesting thoughts. You need to start with the fact that mathematics will not let you down. It makes it possible to show that systems of different speeds can be equivalent. Mathematics gives the rules to show the equivalence of certain things and to identify certain properties, and at the same time she is not biased, she does not care what things are interesting and which are not. Many people think that optimization is based on knowledge of machine code and work with bits, but in fact there is a lot of math in it, because you prove that a faster system is equivalent to a slower one.

Another general rule is that computers like to have everything boring. Do you need to multiply two vectors, with a billion elements each? This is an ideal task for a computer, all the equipment in it is specially sharpened for this kind of tasks. I don’t want to do this to analyze this data, build a regular expression based on it. Computers don't like things like branching, dependencies, indirect calls, in short - they don't like smart code, they like boring code. Computers do not like indirect recording - a difficult problem that people involved in iron, have been fighting for a long time and can not solve.

Another rule is that one should give preference to the least powerful operations, in other words, to prefer addition to multiplication, and multiplication to elevation. Again, math is useful here.

Finally, the last rule - the smaller, the more beautiful. Small sizes allow computers to best realize their advantages, as they prefer that data, and especially instructions, be close to each other. The results of several measurements of the speed of the application will always differ, you will have some distribution of results. Usually we just take the average of these several results. But the problem is that due to the specifics of computers, the average will involve a lot of noise. When Bill Gates rides a bus, on average, every bus passenger turns out to be a billionaire. It sounds great, but it is a poor consolation for a homeless person traveling on the same bus. A similar situation occurs with interruptions: the multiplication operation takes nanoseconds, but when you take many measurements of such operations, one of them will inevitably have an interruption of two milliseconds in duration. The difference is in three orders, and yet, the developers do not always take this circumstance into account.

So, I repeat: the noise in computers is always additive; It may seem insignificant to people, but it is essential for microbenchmarking, and the arithmetic average will include a lot of noise. Instead of the average, you need an indicator that will measure only the time that you can influence in any way. If we approach this question from the point of view of mathematics, we will see that we need to find a value that will correspond to the greatest number of measurements we have made. In other words, we need a mod. This immediately brings us to the problem: what will happen if we take quicksort fashion? If the algorithm is probabilistic or if the data is random, then there will almost never be a mod. The density of values will be almost the same throughout the spectrum. In this case, we simply discard the 5% of the largest measurements and then take the average value - or maximum, in the latter case we will have a ceiling that will not be exceeded in 95% of cases. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. When solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. When solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative.

As I hope it became clear, measurement is not an easy problem. Let's look at some examples and start by trying to replace branching with arithmetic. We are talking about cases when we need an if statement, but at the same time its too frequent use is undesirable. Instead, we will integrate the result of the branch as the value 0/1. The code will look linear, the computer will just need to go through it from beginning to end, without thinking about exactly what step to take next.

Let's try to solve the following problem: move the minima of each quartile of the array to the first quartile. In other words, the array should be divided into four parts and the minimum value of each part should be placed at the beginning of the array.

The above is the basic version of the code. By the way, I can proudly announce that I translated these examples into C #, and they are successfully compiled. The code itself is quite simple: `m is assigned the index of the smallest of the two values located on the indices` i and `j, and then the same assignment is repeated two more times, depending on the other two indices. Finally, the value at the index `m changes places in the array with the value at the index` i. As you can see, we go around the array with the help of four inductive variables.

The problem of testing such an algorithm will be interesting and not obvious. We will need to test it not on a single set of data, but on data that might arise in various cases. For example, on data that looks like pipes of an organ: first increase, then decrease; on random data with a uniform distribution; on a random set of zeros and ones - from just random data, the difference here is that there will be many duplicate values; on already sorted data; finally, on the data obtained by real measurements of some physical phenomenon. This will be a serious approach to measuring the speed of an algorithm, and it is generally accepted among people who study algorithms.

Let's try to improve the code that we have just met.

As the first optimization, we will try to avoid excessive repetition of operations, for this we take out several division operations from the cycle - dividing `n into 2 and 4 and dividing 3 *` n into 4. But, having carried out this optimization, we find out that the calculations were not for We are the main problem: the code will not become faster, although it will be more compact. At best, we will achieve an improvement of half a percent.

The second change we make to the code will be to reduce dependencies. In the previous version of the algorithm, the assignment of `m value` k or `l depends on the value assigned to` m line above. To reduce the number of dependencies `m, we separately calculate` m0 and `m1, and then compare them. When I performed this optimization, I hoped for a significant improvement in the speed of the algorithm, but in the end it turned out to be zero. But, in my opinion, it is important to keep the number of dependencies minimal, so I saved the code.

Let us now try to reduce the number of inductive variables from four to one, and the remaining three will be calculated arithmetically, since they are in constant relation to each other. It is quite simple: instead of `k, we will have` i + q, instead of two other variables - `i + 2 * q and` i + 3 * q. I also had high hopes for this optimization, but, like the previous one, it did not give any results in time. This again proves the importance of measurement: without them, I could boast that I had significantly improved the performance of the algorithm, and I would have very substantial arguments.

As a fourth attempt, we restructure the cycle in order to eliminate multiplication by 3. This will give us an improvement of 3%. The result is still not impressive. Next, try to get rid of ternary operators.

For this, I would like to introduce you to a new function - `static int optional (bool flag, int value). It converts the input boolean value to Int32, multiplies by -1, and passes it to the bitwise AND operator along with the second input value. If the input flag was false, then in int32 it will be 0, and after all conversions on the output, we still get 0. If the input flag was true, in int32 it will be 1, when multiplied by -1, we get FFFFFFFF, which is after the bit "And" with any number will give this second number. Please note that there is no if statement anywhere, a code without branches, for a computer it is boring (although it just seems convoluted to us).

We will replace the ternary operators with this optional function, we will integrate it inside the calculation. Apply it twice, and in the third case, leave a question mark. Thus, instead of four checks in this cycle, I will have only one.

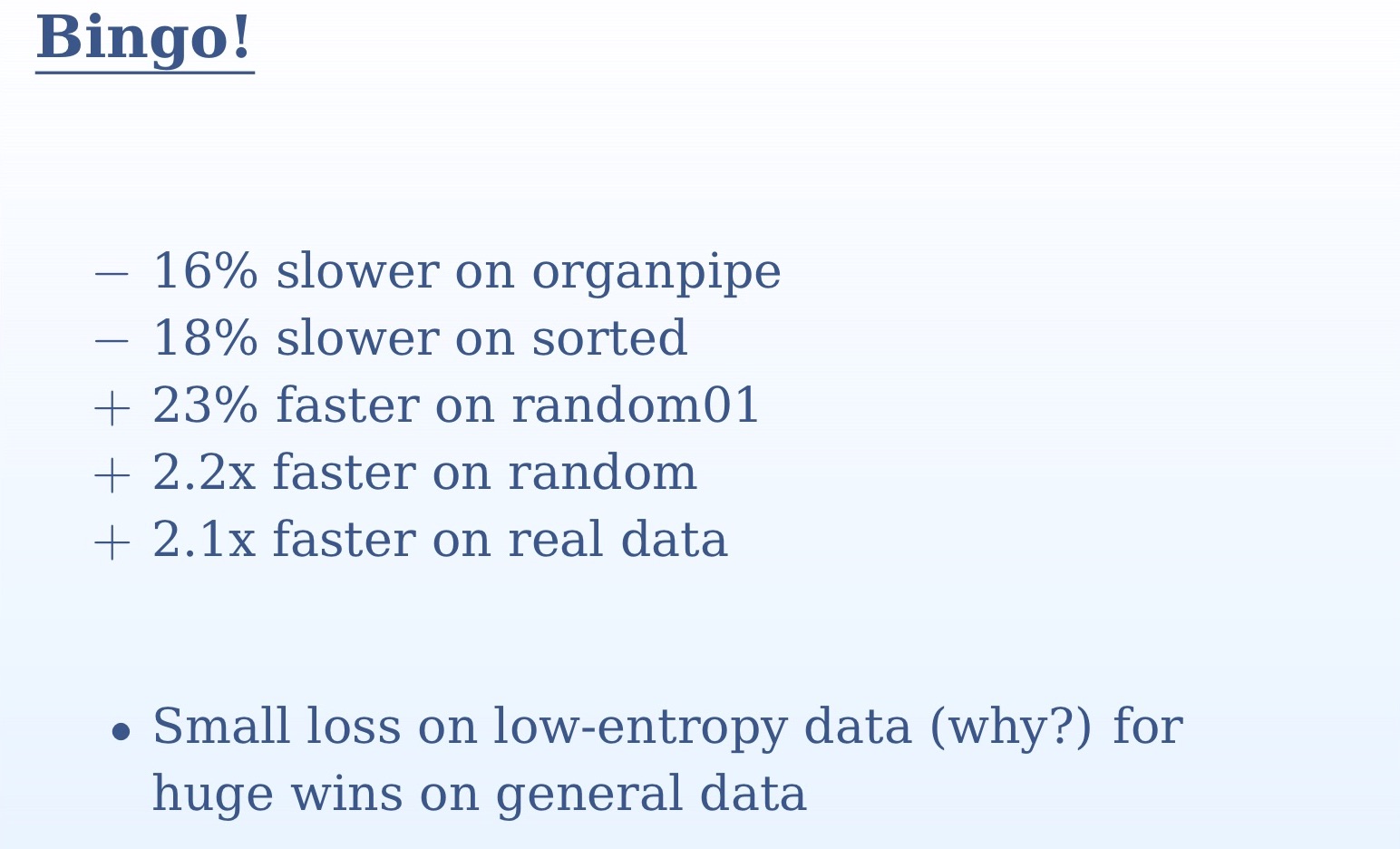

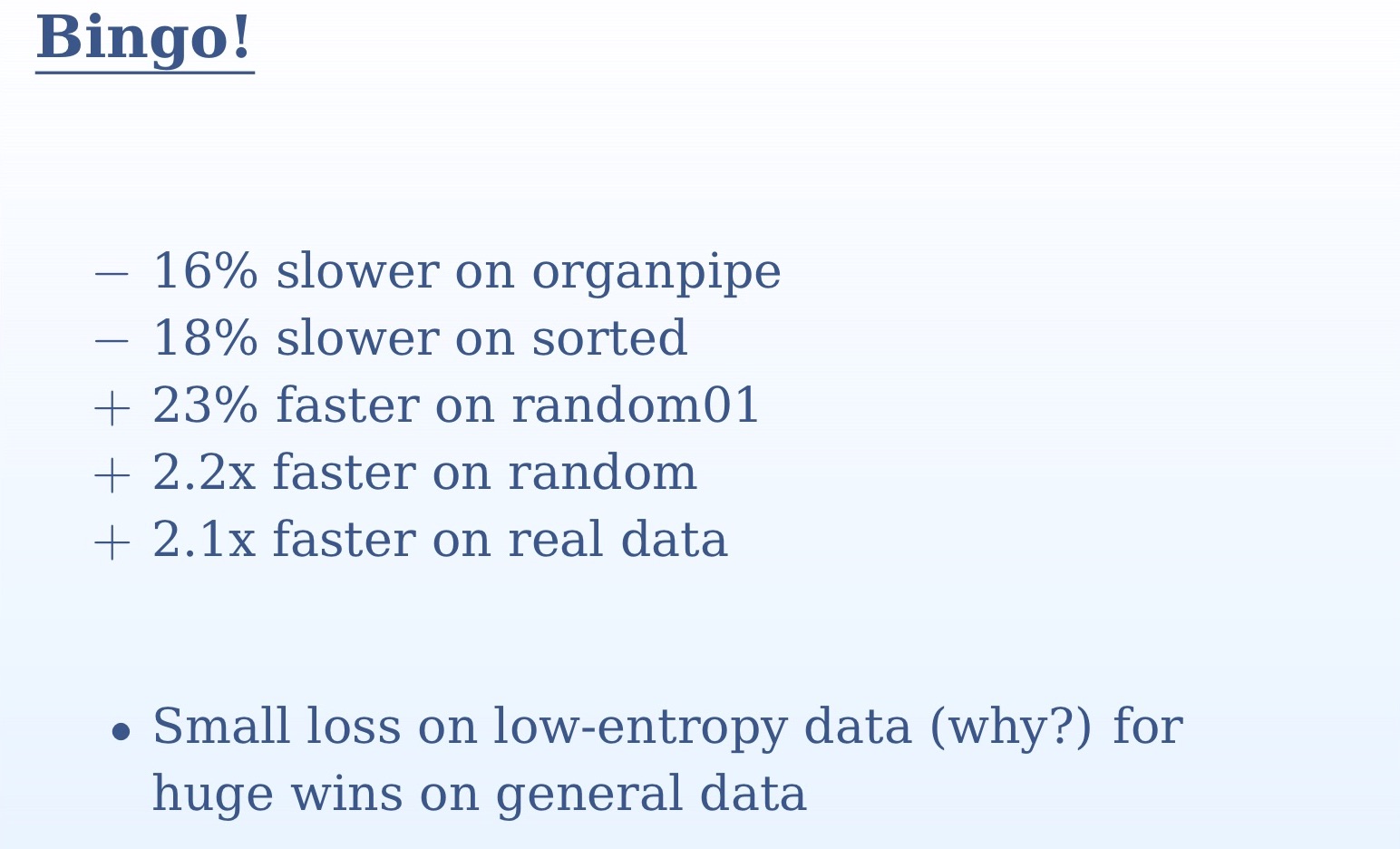

From the measurement results that you see on the slide, it is clear how important it was to check the algorithm on several different data sets. On one set, we would not understand anything. On random and real data, we have more than twice the acceleration, on the pipes of the organ and sorted data, we have a slight slowdown. This is due to the fact that in the case of the sorted data for the predictor of transitions there will be no surprises, it will predict with 100% accuracy. In the case of organ pipes, we will have one misprediction in the middle of the data set — again, very high accuracy. In contrast, with random data, the difference between our two approaches will be huge. We have replaced all unpredictable checks with simple logic. Here we return again to the simple truth: computers are designed for computing, as the name implies (computer - computing). Branching, displaying pictures on the screen - they all do much worse. It is much easier to execute the bit "AND" for them than to pass an if statement.

Having finally achieved a positive result from optimization, we will try to replace the last ternary operator with our `optional. Function. This time the speed gain will be small. To understand why this happens, you need to look at the generated code. In the previous version of the code, where the question mark was still present, the compiler has already found a way to execute the code without branching. And when he gets to the ternary operator, he could already predict it. Replacing this last piece with `optional will give some worse code. Therefore, I repeat, it is important to measure each time.

Another function I would like to recommend to you is the ifelse without branches, which you now see on the screen. True, I was not able to achieve with it the performance improvements in our example. If 0 is passed as a flag, the first line will be 0; in the second, we subtract 1 from 0 in Int32 and get FFFFFFFF, after which this value is passed to the bit "AND" along with the argument of the `v2 function, which will give us this argument unchanged; Finally, the first and second lines are transmitted to the bit "OR", which, again, will give us `v2. If the flag is 1, then the first line will be `v1; in the second, we subtract 1 from 1 and get 0, with the result that the entire line will be 0, and 0 and `v1 in the bit" OR "will give` v1.

I hope that such a function `ifelse without branching will interest the people involved in the backend - for now, for some reason, modern compilers do not use this approach. Having such functions, you can reorganize the algorithms so that compilers understand them for you, because you are smarter and more creative than your compiler.

Let's change the topic of our conversation a bit and move on to the intersection of large sets. So far, we have been talking about individual operators, but now we will create new algorithms, so we will need to distract from the details and open our consciousness to a larger perspective. I assume that you are familiar with the algorithms for merge sort, multiplying two vectors and searching for common elements of two sorted vectors. Two sorted sets are traversed, and when equal elements are found in them, this is considered a match. If one of the two matched elements turns out to be smaller, it is shifted. This algorithm is fairly simple, but very common - most likely the most used in the world. It is used in all queries of several words, each such query is the intersection of two sets. This algorithm

Take a look at the basic implementation of this algorithm. If both input sets are empty, then obviously we return 0. Next, we start an infinite loop, in which, if a match is met, we increase the result by 1 and check if the loop needs to be completed. Instead of an infinite loop, you could use the for operator and specify the end condition of the loop in it. But that would mean extra work. In the implementation that you see on the slide, in the first branch we have `if (a1 [i1] <a2 [i2]), after which there is an increase in` i1 by 1, and all we need to do is to check `i1. Similarly, in the second branch we only need to check `i2. Both values need to be checked only in the third branch. If this check were at the beginning of the cycle, then we would do the extra work.

We will try to improve this implementation. At the moment, its algorithmic complexity is linear, with dependence on two input arguments. In machine learning, quite often you have to find the intersection of sets that are very different from each other in size or in statistics. For example, you have a long input vector and a short vector of features (feature vector) against which you are checking. In our code there can be a million entries in `a1, and in` a2 - a thousand. In this case, we are not ready to go a million steps to complete this algorithm. The greatest load here will be on the following line of code: `if (++ i1 == a1.length) break. Immediately before this, a comparison occurs, and then in this line - the increment of the value; this is essentially a linear search. We iterate over a long vector in search of short elements.

Let's try to improve this algorithm. Binary search is better than linear search, so let's turn to it. Its advantage is that it gives the index of the largest of the smaller elements.

The code above is the implementation of our binary search algorithm. It is not very effective. The worst situation is when the binary search fails every time. It will occur in some fairly important scenarios - for example, with identical elements of the set. Here the binary search will be slower than the classic linear search. How to make the algorithm work equally well on identical and different data? Check all the data will be too expensive for resources. I’ll make a reservation that this is not about completely identical data, but about very similar, with similar statistics, dimensions may also differ. You can check the following several items. The obvious solution is to reduce the search area. When we perform a binary search, then finding a certain element, we are no longer interested in elements less than it, since the second vector is also sorted. Thus, we can reduce our search area every time, discarding all the elements less from the found element.

Here is the implementation of this approach. You see that we perform a binary search every time in a part of the original array starting with `i2 and ending with` a2.length. Since ʻI2 will increase with each search, the search area will be reduced.

The next optimization that I would like to implement here is connected with the Galloping Search algorithm. In essence, this is a binary search with a different step. In the case of a binary search, we start every time from the middle - but let's think about it, when we look for the name in the phone book, do we not open it in the middle itself? If the person’s last name begins, say, in “B”, we will open the book closer to the beginning. This principle is implemented in a galloping search: we begin to crawl data in an ascending direction with a step that increases exponentially after each check: first 1, then 2, then 4. This gives us a good algorithmic complexity. If the step grew linearly, the complexity would be quadratic. When we "skip" the desired element, we perform the usual binary search on the remaining segment, which will be small and will not significantly affect the execution time of the algorithm. Thus, we combine all the advantages of both approaches. The implementation of this algorithm:

We now discuss the scaling, that is, we try to find an intersection with more than two sets. For each search of several words, we will need to find the intersection of several sets. To do this, we can, for example, compare the first two sets, then their intersection with the third, and so on. But this is not the optimal solution. We need to take the first elements of all the sets and find the smallest of them, which will then need to be moved. We need a data structure that would allow us to find the smallest of many elements and would have constant complexity. Such a data structure is a bunch. But it will be a strange heap, it will not be based on a physical array. She will be imaginary, we will organize in her only the first elements of our sets. Having found the smallest item in the heap,

The work on the topics that we are discussing now has, in practice, a rather amateur form. In practice, we will often have several sets, not just two, and quite a lot of work has been written on this topic. The classic algorithm here is SVS, in which we group the sets, take the two smallest ones and choose the shortest one as a candidate. Under the link you can find a good overview on this topic. The problems associated with the intersection of sets, the scalar product of sparse vectors, sorting by merging, any form of comparison with the image over time turn out to be more and more interesting. The algorithm that I showed you proved to be very useful. Thank you for your attention.

Now you are holding his reportfrom the 2018 Piter DotNext conference, which describes modern optimization technologies. What does it have .NET? This is a fundamental report from a person who has been optimizing all his life. If performance is important to you, you need to watch it (or read this article). Welcome under the cut!

The art of benchmarking

I would like to discuss a few benchmarking topics with you. First, let's repeat some basic things. Amdal's Law- part of the classic computer science, it is mainly used in parallel computing, but it works in any complex system. If we want to improve the performance of a certain system, then we must begin where the main problems of this system are concentrated. The law itself is obvious: if a component is 20% of the system, then the maximum improvement in system performance, which can be achieved by optimizing the work of only this component, is 20%. I too often have to meet with people (our readers, of course, do not belong to them) doing things like optimizing command-line parsing. These operations take the first 10 microseconds of the work of your program, and people analyze their algorithmic complexity and are terrified if the time turns out to be quadratic.

As you probably know, before starting the optimization, it is necessary to profile the application, select hot spots in it. It should be said about the law of Ladma(This is not a real last name, but Amdal, read backwards). You need to focus your efforts on the component that leads to the most time consuming. It needs to be taken out of the application, carry out the necessary work, return it back and test it again. The reason for doing this is that, very often, a 20% performance improvement is the result of ten improvements of 2% each. And within the framework of a large system, it is impossible to measure such a small improvement. To do this, the component must be tested in a test suite. Improving the performance of one of the main components of the system by 20% can mean an improvement of 5% for the system as a whole, and for some areas this is an excellent result. Don't forget that optimizations can have a variety of global effects,

A mistake that, I am sure, our readers do not allow, but which in general is quite common: people measure the speed of the debug build. This should never be done. This is similar to getting upset because of the low speed of the snail at the races: it is not intended for such a competition, it has other goals in life. Another error, somewhat less obvious: people first measure the basic indicators of the system, and immediately after that perform benchmarking. But after collecting basic indicators, many resources are heated. For example, open files are buffered and remain in memory (at least under Linux). Thus, the second test will pass faster only because it is launched after the first. This happens even with calls to malloc. After these calls, the system does not return to its original state even if memory free calls are made. The internal configuration, caching, and capabilities used by the memory allocator allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end. allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end. allow the following malloc calls to run much faster. Even without taking into account the effect of the cache, malloc remembers that, for example, a certain function allocated memory for objects with a size of 4 kilobytes many times - this means that it is necessary to have a free list with an element size of 4 kilobytes. Or another example: a DNS lookup is cached for reuse after the first request. If possible, with benchmarking, you need to re-run the entire process from beginning to end.

For example, in order to completely return the system to its original state, in the case of files, they need to be opened on a separate disk, which after the end of the test must be unmounted (as I understand it, this can be done under Windows). The operation is not easy, but in most cases necessary.

Continuing to talk about errors in optimization, I had to deal with such cases when the cost of printf is included in the test results. There are also procedural errors when changing more than one thing before each measurement, which violates the most basic principles of a scientific experiment, since it turns out that it is not clear what effect of changes you are measuring. Another serious mistake is when some rare cases are optimized, which leads to pessimization in other situations.

Here is an example from Stack Overflow. The author often sorts already sorted data and is surprised, because the `is_sorted 'function is obviously much faster than` sort. Why then in `sort the first line is not` if is_sorted return? You are optimizing an extremely rare case, a completely sorted data, and everyone else who has at least one non-sorted element will have to bear the costs for this optimization. So do not.

I think I don’t have to prove for a long time that today's competing architectures are extremely complex: dynamic frequency changes, interruption by other processes, virtualization, etc. Therefore, to get the same time when measuring is almost impossible, your indicators will always tremble. Therefore, do not rely on things that seem obvious. Say, it may seem obvious to us that a smaller number of instructions means a faster code, and this is not always true. It may also appear that using stored data will always be faster than re-performing calculations, so if you cache the results, you will be fine. As in the previous case, it cannot be unequivocally asserted, just as the opposite cannot be said unconditionally - it all depends on the context. Obviously you should have only one thing: everything needs to be measured.

There are a number of fairly reliable practices, the discussion of which can push you into interesting thoughts. You need to start with the fact that mathematics will not let you down. It makes it possible to show that systems of different speeds can be equivalent. Mathematics gives the rules to show the equivalence of certain things and to identify certain properties, and at the same time she is not biased, she does not care what things are interesting and which are not. Many people think that optimization is based on knowledge of machine code and work with bits, but in fact there is a lot of math in it, because you prove that a faster system is equivalent to a slower one.

Another general rule is that computers like to have everything boring. Do you need to multiply two vectors, with a billion elements each? This is an ideal task for a computer, all the equipment in it is specially sharpened for this kind of tasks. I don’t want to do this to analyze this data, build a regular expression based on it. Computers don't like things like branching, dependencies, indirect calls, in short - they don't like smart code, they like boring code. Computers do not like indirect recording - a difficult problem that people involved in iron, have been fighting for a long time and can not solve.

Another rule is that one should give preference to the least powerful operations, in other words, to prefer addition to multiplication, and multiplication to elevation. Again, math is useful here.

Finally, the last rule - the smaller, the more beautiful. Small sizes allow computers to best realize their advantages, as they prefer that data, and especially instructions, be close to each other. The results of several measurements of the speed of the application will always differ, you will have some distribution of results. Usually we just take the average of these several results. But the problem is that due to the specifics of computers, the average will involve a lot of noise. When Bill Gates rides a bus, on average, every bus passenger turns out to be a billionaire. It sounds great, but it is a poor consolation for a homeless person traveling on the same bus. A similar situation occurs with interruptions: the multiplication operation takes nanoseconds, but when you take many measurements of such operations, one of them will inevitably have an interruption of two milliseconds in duration. The difference is in three orders, and yet, the developers do not always take this circumstance into account.

So, I repeat: the noise in computers is always additive; It may seem insignificant to people, but it is essential for microbenchmarking, and the arithmetic average will include a lot of noise. Instead of the average, you need an indicator that will measure only the time that you can influence in any way. If we approach this question from the point of view of mathematics, we will see that we need to find a value that will correspond to the greatest number of measurements we have made. In other words, we need a mod. This immediately brings us to the problem: what will happen if we take quicksort fashion? If the algorithm is probabilistic or if the data is random, then there will almost never be a mod. The density of values will be almost the same throughout the spectrum. In this case, we simply discard the 5% of the largest measurements and then take the average value - or maximum, in the latter case we will have a ceiling that will not be exceeded in 95% of cases. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. Almost always there will be some single subject sitting in the old basement with a slow modem, in which each page will load by the hour. Purely human, we, of course, sympathize with him, but we cannot technically help everyone - so the remaining 5% of cases have to be neglected. In general, when solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. When solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative. When solving network problems, we often focus on the 95th percentile, because it is impossible to focus on the 100th. The one-hundredth percentile will mean the slowest result from all collected measurements - this is not informative.

Replace branches with arithmetic

As I hope it became clear, measurement is not an easy problem. Let's look at some examples and start by trying to replace branching with arithmetic. We are talking about cases when we need an if statement, but at the same time its too frequent use is undesirable. Instead, we will integrate the result of the branch as the value 0/1. The code will look linear, the computer will just need to go through it from beginning to end, without thinking about exactly what step to take next.

Let's try to solve the following problem: move the minima of each quartile of the array to the first quartile. In other words, the array should be divided into four parts and the minimum value of each part should be placed at the beginning of the array.

staticvoidmin4(double[] p){

int n = p.Length;

int i = 0, j = n / 4, k = n / 2, l = 3 * n / 4;

for (; i < n / 4; ++i, ++j, ++k, ++l) {

int m = p[i] <= p[j] ? i : j;

if (p[k] < p[m]) m = k;

if (p[l] < p[m]) m = l;

Swap(ref p[i], ref p[m]);

}

}The above is the basic version of the code. By the way, I can proudly announce that I translated these examples into C #, and they are successfully compiled. The code itself is quite simple: `m is assigned the index of the smallest of the two values located on the indices` i and `j, and then the same assignment is repeated two more times, depending on the other two indices. Finally, the value at the index `m changes places in the array with the value at the index` i. As you can see, we go around the array with the help of four inductive variables.

The problem of testing such an algorithm will be interesting and not obvious. We will need to test it not on a single set of data, but on data that might arise in various cases. For example, on data that looks like pipes of an organ: first increase, then decrease; on random data with a uniform distribution; on a random set of zeros and ones - from just random data, the difference here is that there will be many duplicate values; on already sorted data; finally, on the data obtained by real measurements of some physical phenomenon. This will be a serious approach to measuring the speed of an algorithm, and it is generally accepted among people who study algorithms.

Let's try to improve the code that we have just met.

staticvoidmin4(double[] p){

int q = p.Length / 4;

int i = 0, j = n / 4, k = n / 2, l = 3 * n / 4;

for (; i < q; ++i, ++j, ++k, ++l) {

int m = p[i] <= p[j] ? i : j;

if (p[k] < p[m]) m = k;

if (p[l] < p[m]) m = l;

Swap(ref p[i], ref p[m]);

}

}As the first optimization, we will try to avoid excessive repetition of operations, for this we take out several division operations from the cycle - dividing `n into 2 and 4 and dividing 3 *` n into 4. But, having carried out this optimization, we find out that the calculations were not for We are the main problem: the code will not become faster, although it will be more compact. At best, we will achieve an improvement of half a percent.

staticvoidmin4(double[] p){

int q = p.Length / 4;

int i = 0, j = q, k = 2 * q, l = 3 * q;

for (; i < q; ++i, ++j, ++k, ++l) {

int m0 = p[i] <= p[j] ? i : j;

int m1 = p[k] <= p[l] ? k : l;

Swap(ref p[i], ref p[p[m0] <= p[m1] ? m0 : m1]);

}

}The second change we make to the code will be to reduce dependencies. In the previous version of the algorithm, the assignment of `m value` k or `l depends on the value assigned to` m line above. To reduce the number of dependencies `m, we separately calculate` m0 and `m1, and then compare them. When I performed this optimization, I hoped for a significant improvement in the speed of the algorithm, but in the end it turned out to be zero. But, in my opinion, it is important to keep the number of dependencies minimal, so I saved the code.

staticvoidmin4(double[] p){

int q = p.Length / 4;

for (int i = 0; i < q; ++i) {

int m0 = p[i] <= p[i + q] ? i : i + q;

int m1 = p[i + 2 * q] <= p[i + 3 * q] ?

i + 2 * q : i + 3 * q;

Swap(ref p[i], ref p[p[m0] <= p[m1] ? m0 : m1]);

}

}Let us now try to reduce the number of inductive variables from four to one, and the remaining three will be calculated arithmetically, since they are in constant relation to each other. It is quite simple: instead of `k, we will have` i + q, instead of two other variables - `i + 2 * q and` i + 3 * q. I also had high hopes for this optimization, but, like the previous one, it did not give any results in time. This again proves the importance of measurement: without them, I could boast that I had significantly improved the performance of the algorithm, and I would have very substantial arguments.

staticvoidmin4(double[] p){

int q = p.Length / 4, q2 = q + q;

for (int i = q; i < q2: ++i) {

int m0 = p[i - q] < p[i] ? i - q : i;

int m1 = p[i + q2] < p[i + q] ? i + q2 ? i + q;

Swap(ref p[i - q], ref p[p[m0] <= p[m1] ? m0 : m1]);

}

}As a fourth attempt, we restructure the cycle in order to eliminate multiplication by 3. This will give us an improvement of 3%. The result is still not impressive. Next, try to get rid of ternary operators.

// Returns: value if flag is true, 0 otherwisestaticintoptional(bool flag, int value){

return -Convert.ToInt32(flag) & value;

}For this, I would like to introduce you to a new function - `static int optional (bool flag, int value). It converts the input boolean value to Int32, multiplies by -1, and passes it to the bitwise AND operator along with the second input value. If the input flag was false, then in int32 it will be 0, and after all conversions on the output, we still get 0. If the input flag was true, in int32 it will be 1, when multiplied by -1, we get FFFFFFFF, which is after the bit "And" with any number will give this second number. Please note that there is no if statement anywhere, a code without branches, for a computer it is boring (although it just seems convoluted to us).

staticvoidmin4(double[] p){

int q = p.Length / 4, q2 = q + q;

for (int i = q; i < q2; ++i) {

int m0 = i - optional(p[i - q] <= p[i], q);

int m1 = i + q + optional(p[i + q2] < p[i + q], q);

Swap(ref p[i - q], ref p[p[m0] <= p[m1] ? m0 : m1]);

}

}We will replace the ternary operators with this optional function, we will integrate it inside the calculation. Apply it twice, and in the third case, leave a question mark. Thus, instead of four checks in this cycle, I will have only one.

From the measurement results that you see on the slide, it is clear how important it was to check the algorithm on several different data sets. On one set, we would not understand anything. On random and real data, we have more than twice the acceleration, on the pipes of the organ and sorted data, we have a slight slowdown. This is due to the fact that in the case of the sorted data for the predictor of transitions there will be no surprises, it will predict with 100% accuracy. In the case of organ pipes, we will have one misprediction in the middle of the data set — again, very high accuracy. In contrast, with random data, the difference between our two approaches will be huge. We have replaced all unpredictable checks with simple logic. Here we return again to the simple truth: computers are designed for computing, as the name implies (computer - computing). Branching, displaying pictures on the screen - they all do much worse. It is much easier to execute the bit "AND" for them than to pass an if statement.

staticvoidmin4(double[] p){

int q = p.Length / 4, q2 = q + q;

for (int i = 0; i < q; ++i) {

int m = i + optional(p[i + q] < p[i], q);

m += optional(p[i + q2] < p[m], q);

m += optional(p[i + q2 + q] < p[m], q);

Swap(ref p[i], ref p[m]);

}

}Having finally achieved a positive result from optimization, we will try to replace the last ternary operator with our `optional. Function. This time the speed gain will be small. To understand why this happens, you need to look at the generated code. In the previous version of the code, where the question mark was still present, the compiler has already found a way to execute the code without branching. And when he gets to the ternary operator, he could already predict it. Replacing this last piece with `optional will give some worse code. Therefore, I repeat, it is important to measure each time.

// Returns: v1 if flag is true, v2 otherwisestaticintifelse(bool flag, int v1, int v2){

return (-Convert.ToInt32(flag) & v1) |

((Convert.ToInt32(flag) - 1) & v2);

}

Another function I would like to recommend to you is the ifelse without branches, which you now see on the screen. True, I was not able to achieve with it the performance improvements in our example. If 0 is passed as a flag, the first line will be 0; in the second, we subtract 1 from 0 in Int32 and get FFFFFFFF, after which this value is passed to the bit "AND" along with the argument of the `v2 function, which will give us this argument unchanged; Finally, the first and second lines are transmitted to the bit "OR", which, again, will give us `v2. If the flag is 1, then the first line will be `v1; in the second, we subtract 1 from 1 and get 0, with the result that the entire line will be 0, and 0 and `v1 in the bit" OR "will give` v1.

I hope that such a function `ifelse without branching will interest the people involved in the backend - for now, for some reason, modern compilers do not use this approach. Having such functions, you can reorganize the algorithms so that compilers understand them for you, because you are smarter and more creative than your compiler.

Large set intersection

Let's change the topic of our conversation a bit and move on to the intersection of large sets. So far, we have been talking about individual operators, but now we will create new algorithms, so we will need to distract from the details and open our consciousness to a larger perspective. I assume that you are familiar with the algorithms for merge sort, multiplying two vectors and searching for common elements of two sorted vectors. Two sorted sets are traversed, and when equal elements are found in them, this is considered a match. If one of the two matched elements turns out to be smaller, it is shifted. This algorithm is fairly simple, but very common - most likely the most used in the world. It is used in all queries of several words, each such query is the intersection of two sets. This algorithm

intIntersect(double[] a1, double[] a2, double[] t){

if (a1.Length == 0 || a2.Length == 0) return0;

int i1 = 0, i2 = 0, i = 0;

for (;;)

if (a1[i1] < a2[i2]) {

if (++i1 == a1.Length) break;

} elseif (a2[i2] < a1[i1]) {

if (++i2 == a2.Length) break;

} else {

t[i++] = a1[i1];

if (++i1 == a1.Length || ++i2 == a2.Length)

break:

}

return i;

}Take a look at the basic implementation of this algorithm. If both input sets are empty, then obviously we return 0. Next, we start an infinite loop, in which, if a match is met, we increase the result by 1 and check if the loop needs to be completed. Instead of an infinite loop, you could use the for operator and specify the end condition of the loop in it. But that would mean extra work. In the implementation that you see on the slide, in the first branch we have `if (a1 [i1] <a2 [i2]), after which there is an increase in` i1 by 1, and all we need to do is to check `i1. Similarly, in the second branch we only need to check `i2. Both values need to be checked only in the third branch. If this check were at the beginning of the cycle, then we would do the extra work.

We will try to improve this implementation. At the moment, its algorithmic complexity is linear, with dependence on two input arguments. In machine learning, quite often you have to find the intersection of sets that are very different from each other in size or in statistics. For example, you have a long input vector and a short vector of features (feature vector) against which you are checking. In our code there can be a million entries in `a1, and in` a2 - a thousand. In this case, we are not ready to go a million steps to complete this algorithm. The greatest load here will be on the following line of code: `if (++ i1 == a1.length) break. Immediately before this, a comparison occurs, and then in this line - the increment of the value; this is essentially a linear search. We iterate over a long vector in search of short elements.

Let's try to improve this algorithm. Binary search is better than linear search, so let's turn to it. Its advantage is that it gives the index of the largest of the smaller elements.

intIntersect(double[] a1, double[] a2, double[] t){

int i1 = 0, i = 0;

for (; i1 != a1.Length; ++i1) {

auto m = Bsearch(a2, a1[i1]);

if (m == a2.Length) continue;

--m;

if (!(a2[m] < a1[i1]))

t[i++] = a1[i1];

}

return i;

}

The code above is the implementation of our binary search algorithm. It is not very effective. The worst situation is when the binary search fails every time. It will occur in some fairly important scenarios - for example, with identical elements of the set. Here the binary search will be slower than the classic linear search. How to make the algorithm work equally well on identical and different data? Check all the data will be too expensive for resources. I’ll make a reservation that this is not about completely identical data, but about very similar, with similar statistics, dimensions may also differ. You can check the following several items. The obvious solution is to reduce the search area. When we perform a binary search, then finding a certain element, we are no longer interested in elements less than it, since the second vector is also sorted. Thus, we can reduce our search area every time, discarding all the elements less from the found element.

intIntersect(double[] a1, double[] a2, double[] t){

int i1 = 0, i2 = 0, i = 0;

for (; i1 != a1.Length; ++i1) {

auto m = Bsearch(a2, i2, a2.Length, a1[i1]);

if (m == i2) continue;

if (!(a2[m - 1] < a1[i1]))

t[i++] = a1[i1];

i2 = m + 1;

}

return i;

}Here is the implementation of this approach. You see that we perform a binary search every time in a part of the original array starting with `i2 and ending with` a2.length. Since ʻI2 will increase with each search, the search area will be reduced.

The next optimization that I would like to implement here is connected with the Galloping Search algorithm. In essence, this is a binary search with a different step. In the case of a binary search, we start every time from the middle - but let's think about it, when we look for the name in the phone book, do we not open it in the middle itself? If the person’s last name begins, say, in “B”, we will open the book closer to the beginning. This principle is implemented in a galloping search: we begin to crawl data in an ascending direction with a step that increases exponentially after each check: first 1, then 2, then 4. This gives us a good algorithmic complexity. If the step grew linearly, the complexity would be quadratic. When we "skip" the desired element, we perform the usual binary search on the remaining segment, which will be small and will not significantly affect the execution time of the algorithm. Thus, we combine all the advantages of both approaches. The implementation of this algorithm:

intGBsearch(double[] a, int i, int j, double v){

for (int step = 1;; step *= 2) {

if (i >= j) break;

if (a[i] > v) break;

if (i + step >= j)

return Bsearch(a, i + 1, J, v);

if (a[i + step] > v)

return Bsearch(a, i + 1, i + step, v);

i += step + 1;

}

return i;

}We now discuss the scaling, that is, we try to find an intersection with more than two sets. For each search of several words, we will need to find the intersection of several sets. To do this, we can, for example, compare the first two sets, then their intersection with the third, and so on. But this is not the optimal solution. We need to take the first elements of all the sets and find the smallest of them, which will then need to be moved. We need a data structure that would allow us to find the smallest of many elements and would have constant complexity. Such a data structure is a bunch. But it will be a strange heap, it will not be based on a physical array. She will be imaginary, we will organize in her only the first elements of our sets. Having found the smallest item in the heap,

The work on the topics that we are discussing now has, in practice, a rather amateur form. In practice, we will often have several sets, not just two, and quite a lot of work has been written on this topic. The classic algorithm here is SVS, in which we group the sets, take the two smallest ones and choose the shortest one as a candidate. Under the link you can find a good overview on this topic. The problems associated with the intersection of sets, the scalar product of sparse vectors, sorting by merging, any form of comparison with the image over time turn out to be more and more interesting. The algorithm that I showed you proved to be very useful. Thank you for your attention.

Andrei Alexandrescu will not come to DotNext 2018 Moscow, but there will be Jeffrey Richter, Greg Young, Pavel Yosifovich and others there. The names of the speakers and the topics of the reports can be viewed here , and the tickets - here . Join now!