High Availability Cloud Platform Crash Test

How can you make sure that a cloud provider's infrastructure really has no single point of failure?

Test it!

Here I will talk about how we conducted acceptance tests of our new cloud platform.

Background

On September 24 we opened a new public cloud platform in St. Petersburg:

www.it-grad.ru/tsentr_kompetentsii/blog/39

The preliminary test plan for the cloud platform:

habrahabr.ru/post/234213

And here we go...

Remote testing

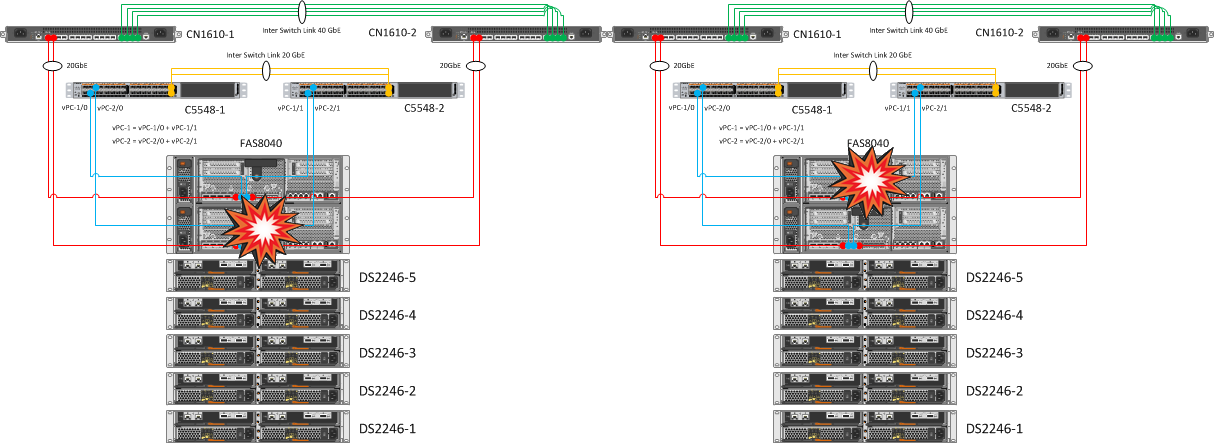

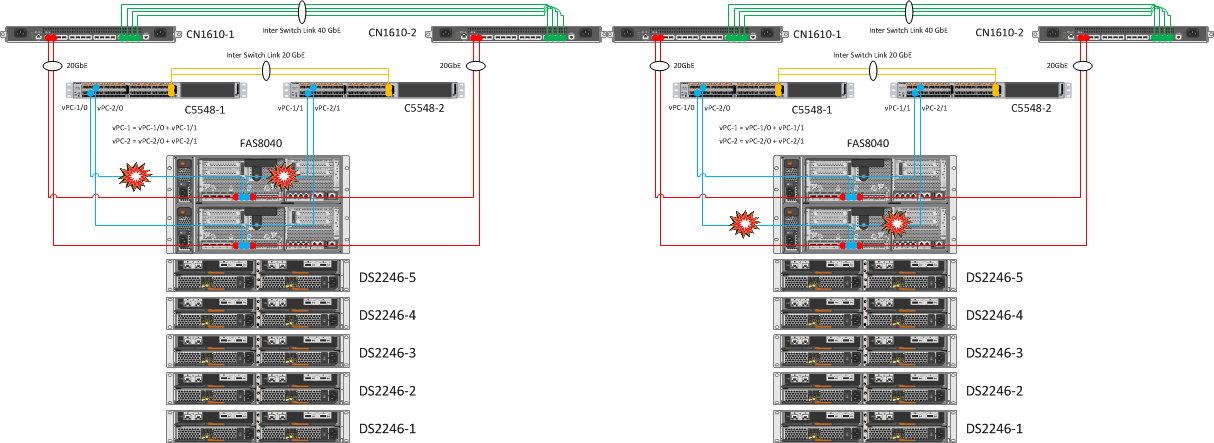

1. Turning off the FAS8040 controllers

| Expected result | Actual result |
| --- | --- |
| Automatic takeover by the surviving node; all VSM resources remain available on ESXi, and access to the datastores is not lost. | Automatic takeover succeeded for one “head”, then for the other. The volumes of the failed controller were served by its partner. Notably, the whole procedure took a few tens of seconds, including detection of the “head” failure. The detection timeout set on the nodes: options cf.takeover.detection.seconds 15 |

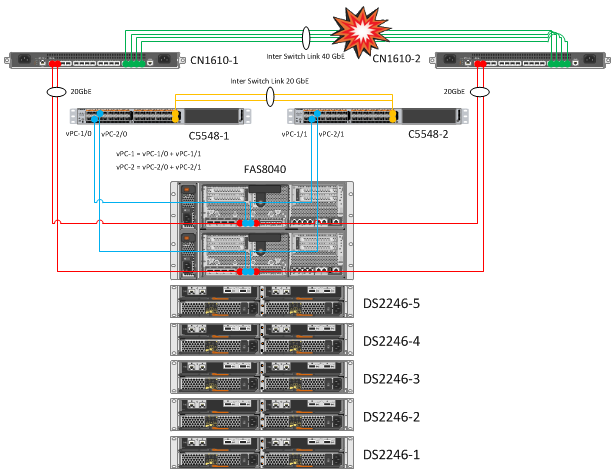
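
A takeover time of "a few tens of seconds" can be measured from the client side rather than estimated. Below is a minimal Python sketch of such a probe, not the tooling we used in the test: it polls a TCP port once per interval and reports the longest gap between two successful probes. The function names, the address and the choice of port are assumptions made for illustration (port 2049 would only make sense if the datastores are served over NFS).

```python
import socket
import time
from typing import List, Tuple

Sample = Tuple[float, bool]  # (timestamp in seconds, probe succeeded?)


def probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def longest_outage(samples: List[Sample]) -> float:
    """Longest time between the last good probe before a run of failures
    and the first good probe after it.

    Failures at the very start or the very end of the samples are ignored,
    because the outage boundaries are unknown there.
    """
    longest = 0.0
    last_ok = None
    failing = False
    for timestamp, ok in samples:
        if ok:
            if failing and last_ok is not None:
                longest = max(longest, timestamp - last_ok)
            last_ok = timestamp
            failing = False
        else:
            failing = True
    return longest


def watch(host: str, port: int, duration: float, interval: float = 1.0) -> List[Sample]:
    """Probe host:port every `interval` seconds for `duration` seconds."""
    samples: List[Sample] = []
    end = time.monotonic() + duration
    while time.monotonic() < end:
        samples.append((time.monotonic(), probe(host, port)))
        time.sleep(interval)
    return samples
```

A run such as `longest_outage(watch("192.0.2.10", 2049, duration=120))`, started just before a controller is switched off, gives an upper bound on the interruption seen by a client. The resolution is limited by the probe interval and timeout.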

2. Disabling all Inter-Switch Links between the CN1610 switches

| Expected result | Actual result |
| --- | --- |
| With all Inter-Switch Links between the CN1610 switches disconnected, communication between the nodes must not be interrupted. | Connectivity between the host and the network was not lost; access to ESXi went over the second link. |

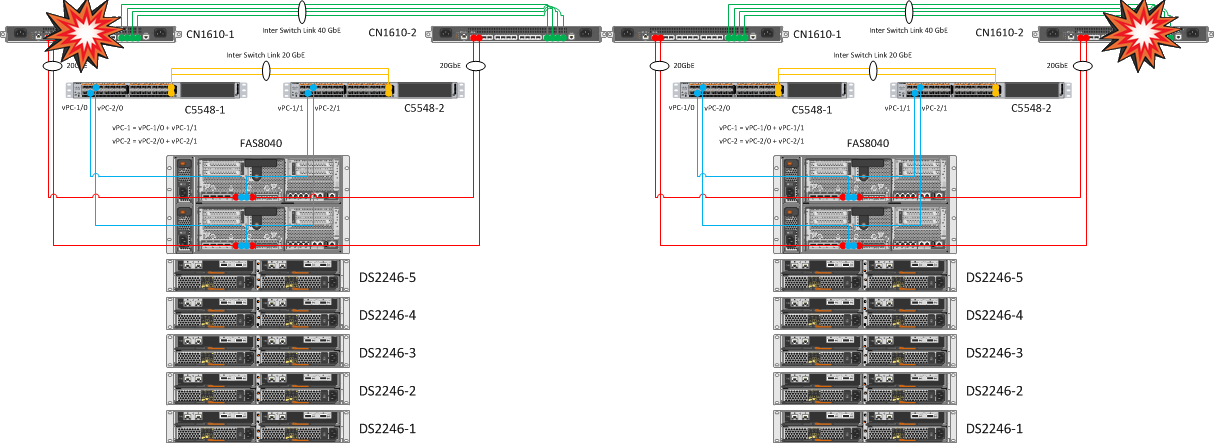

3. Sequential reboot of one of the paired cluster switches and one of the Nexus switches

| Expected result | Actual result |
| --- | --- |
| No NetApp cluster failures. | The NetApp controllers stayed clustered through the second CN1610 switch. Because the cluster switches and the links to the controllers are duplicated, losing one CN1610 is painless. |
| One of the ports on each node must remain accessible, one of the 10 GbE interfaces in the IFGRP on each node must remain available, all VSM resources must be available on ESXi, and access to the datastores must not be lost. | Because the links are duplicated and bundled into Port Channels, rebooting one of the Nexus 5548 switches went unnoticed. |

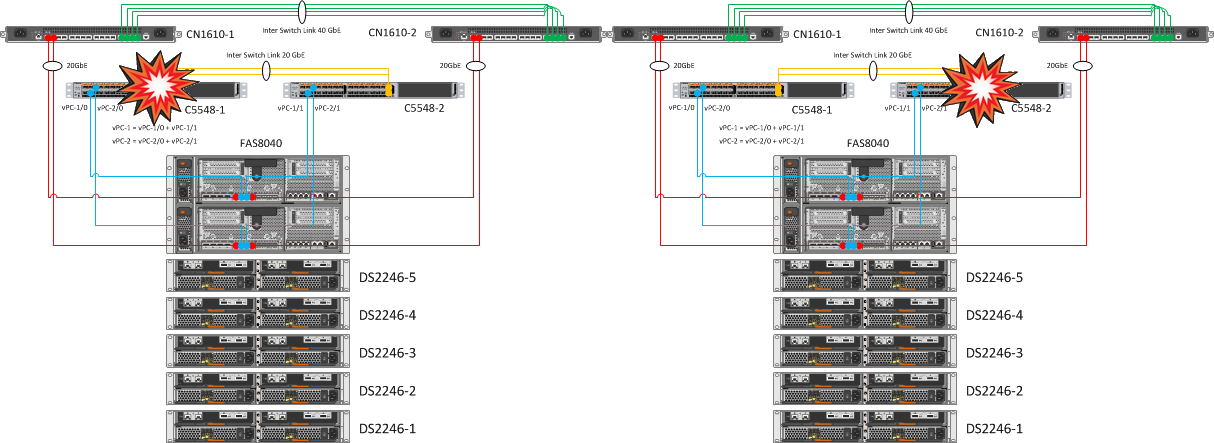

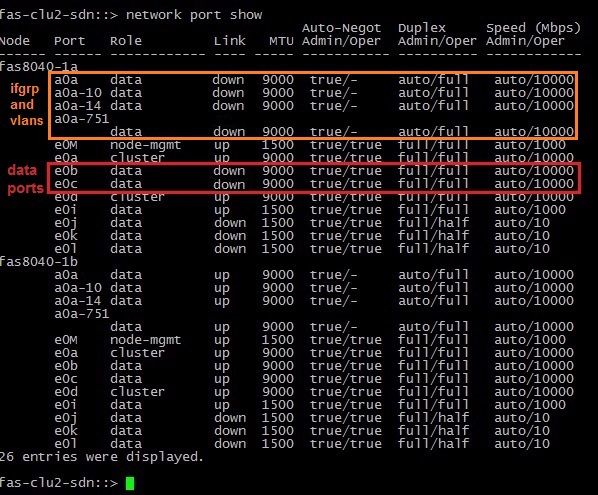

4. Disabling the vPCs (vPC-1, vPC-2) on the Nexus one after another

| Expected result | Actual result |
| --- | --- |
| This simulates one of the NetApp nodes losing its network links. The second “head” should take over. | The controller interfaces e0b and e0c went down, followed by ifgrp a0a and the VLANs configured on it. The node then went into an ordinary takeover, which we already know from the first test. |

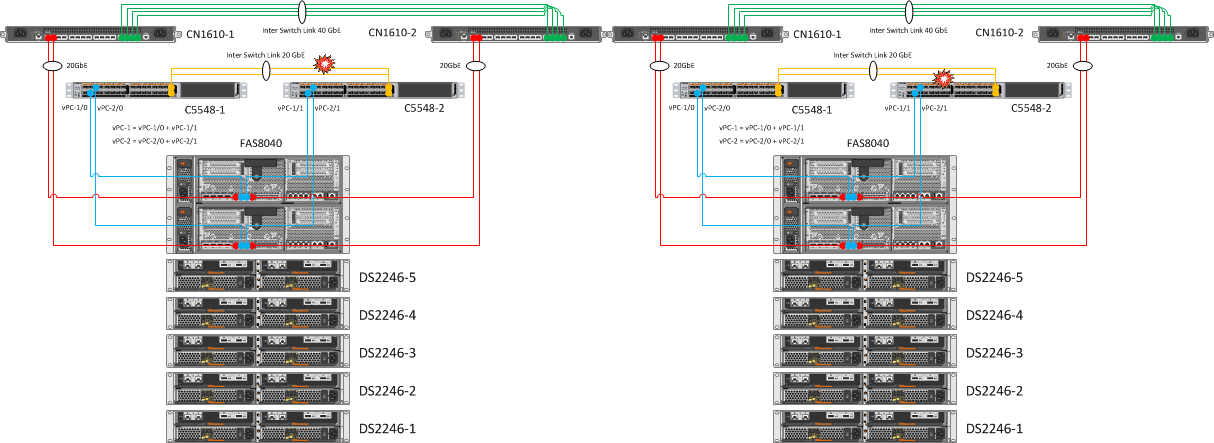

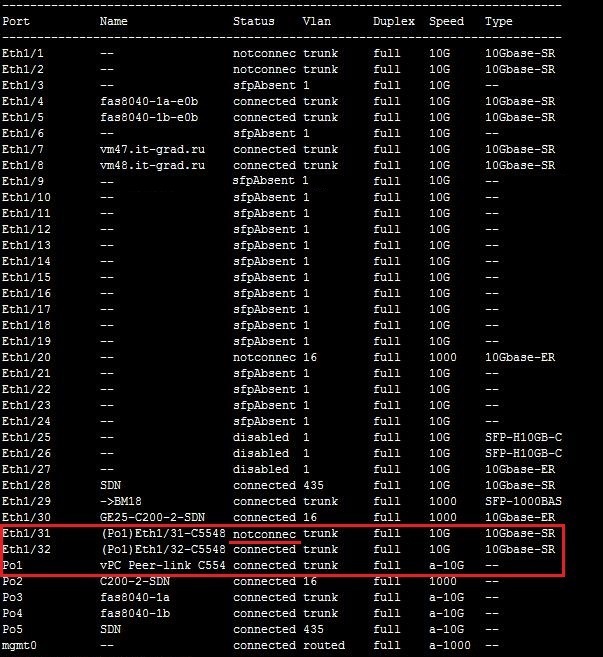

5. Disabling the Inter-Switch Links between the Cisco Nexus 5548 switches one at a time

| Expected result | Actual result |
| --- | --- |
| Connectivity between the switches is maintained. | Interfaces Eth1/31 and Eth1/32 are bundled into Port Channel 1 (Po1). As the screenshot below shows, when one of the links goes down, Po1 stays active and connectivity between the switches is not lost. |

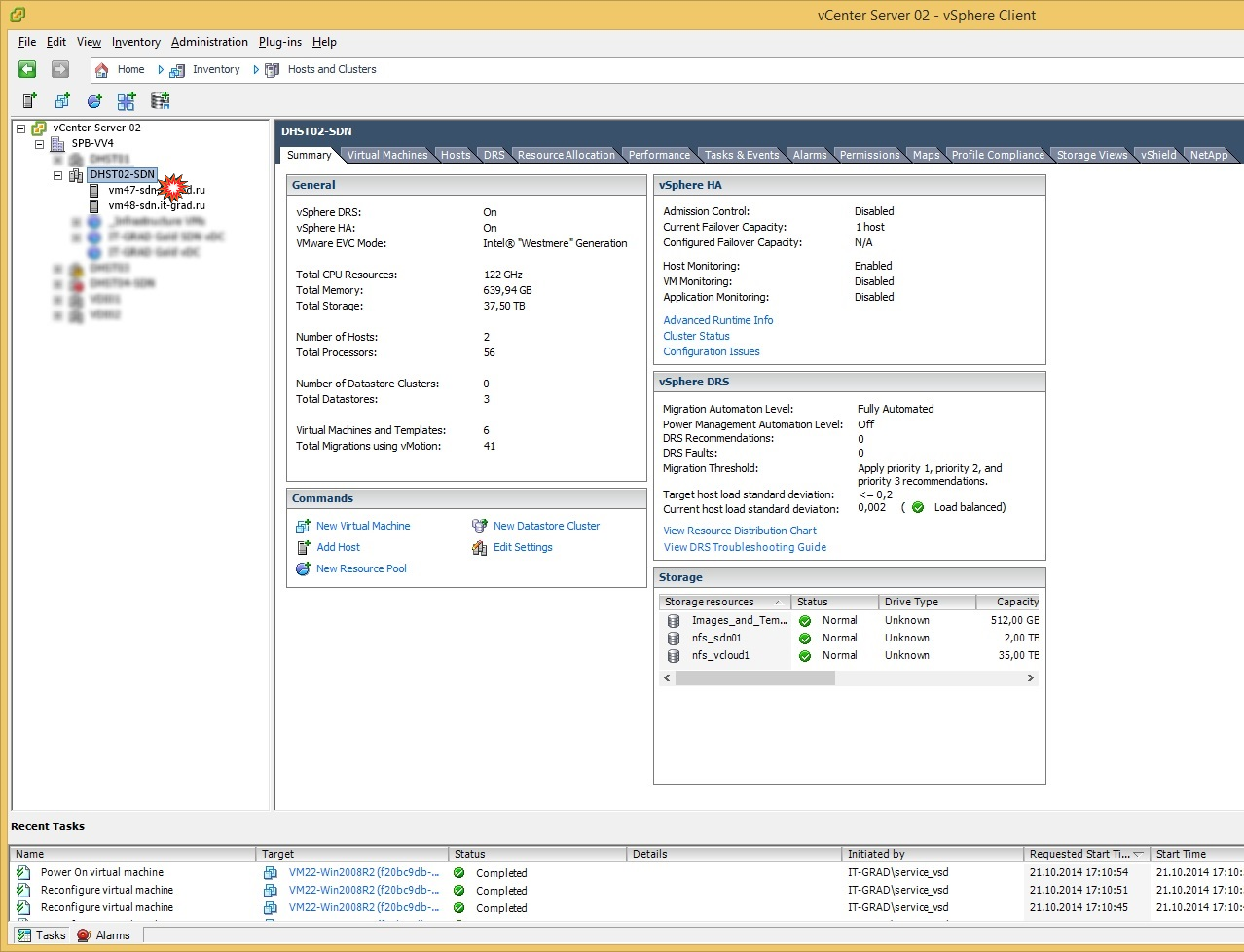
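
The switch and link failures in tests 2 to 5 all check the same property: taking out any one component must not cut the path from a host to its storage. That property can also be checked on paper before pulling any cables. The Python sketch below removes each component of a topology in turn and looks for a surviving path. The topology is a deliberately simplified assumption (one ESXi host, two Nexus switches, two FAS8040 nodes, one logical datastore), not our actual cabling plan, and all node names are made up for illustration.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Set, Tuple

Graph = Dict[str, Set[str]]


def build_graph(links: Iterable[Tuple[str, str]]) -> Graph:
    """Build an undirected adjacency map from (a, b) link pairs."""
    graph: Graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph: Graph, src: str, dst: str, removed: Optional[str] = None) -> bool:
    """Breadth-first search from src to dst, treating `removed` as failed."""
    if removed in (src, dst):
        return False
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in graph.get(node, ()):
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


def single_points_of_failure(graph: Graph, src: str, dst: str) -> List[str]:
    """Components whose failure alone disconnects src from dst."""
    return sorted(
        node
        for node in graph
        if node not in (src, dst) and not reachable(graph, src, dst, removed=node)
    )


# Assumed, simplified topology: every component has a twin.
REDUNDANT_LINKS = [
    ("esxi", "nexus-a"), ("esxi", "nexus-b"),
    ("nexus-a", "nexus-b"),
    ("nexus-a", "fas8040-1"), ("nexus-b", "fas8040-1"),
    ("nexus-a", "fas8040-2"), ("nexus-b", "fas8040-2"),
    ("fas8040-1", "datastore"), ("fas8040-2", "datastore"),
]
```

For the redundant layout, `single_points_of_failure(build_graph(REDUNDANT_LINKS), "esxi", "datastore")` returns an empty list. Dropping the `("esxi", "nexus-b")` uplink makes `nexus-a` show up in the result. The sketch only models failures of whole components; individual link failures would need the same loop over edges.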

6. Hard shutdown of the ESXi hosts, one at a time

We powered off one of the working ESXi hosts while it was running test machines with different operating systems (Windows, Linux). The shutdown emulated the crash of a production host. Once the host (and the virtual machines on it) was detected as unavailable, the VMs were re-registered on the second (working) host and then started there successfully within a few minutes.

| Expected result | Actual result |
| --- | --- |
| The virtual machines restart on a neighboring host. | As expected, VMware HA kicked in and the machines restarted on the neighboring host within 5-8 minutes. |

7. Watching the monitoring system

| Expected Result | Actual result |

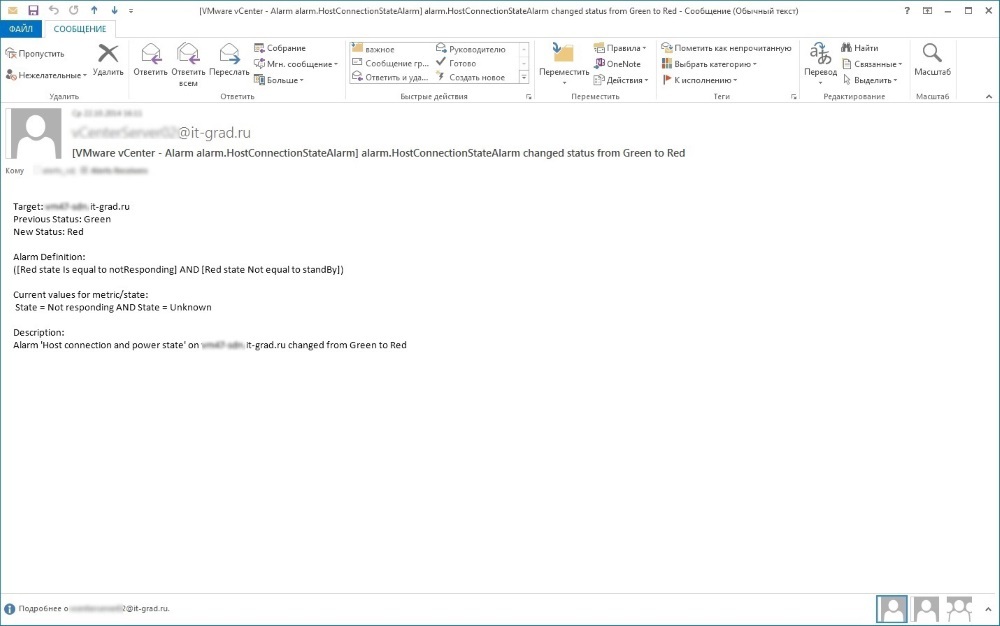

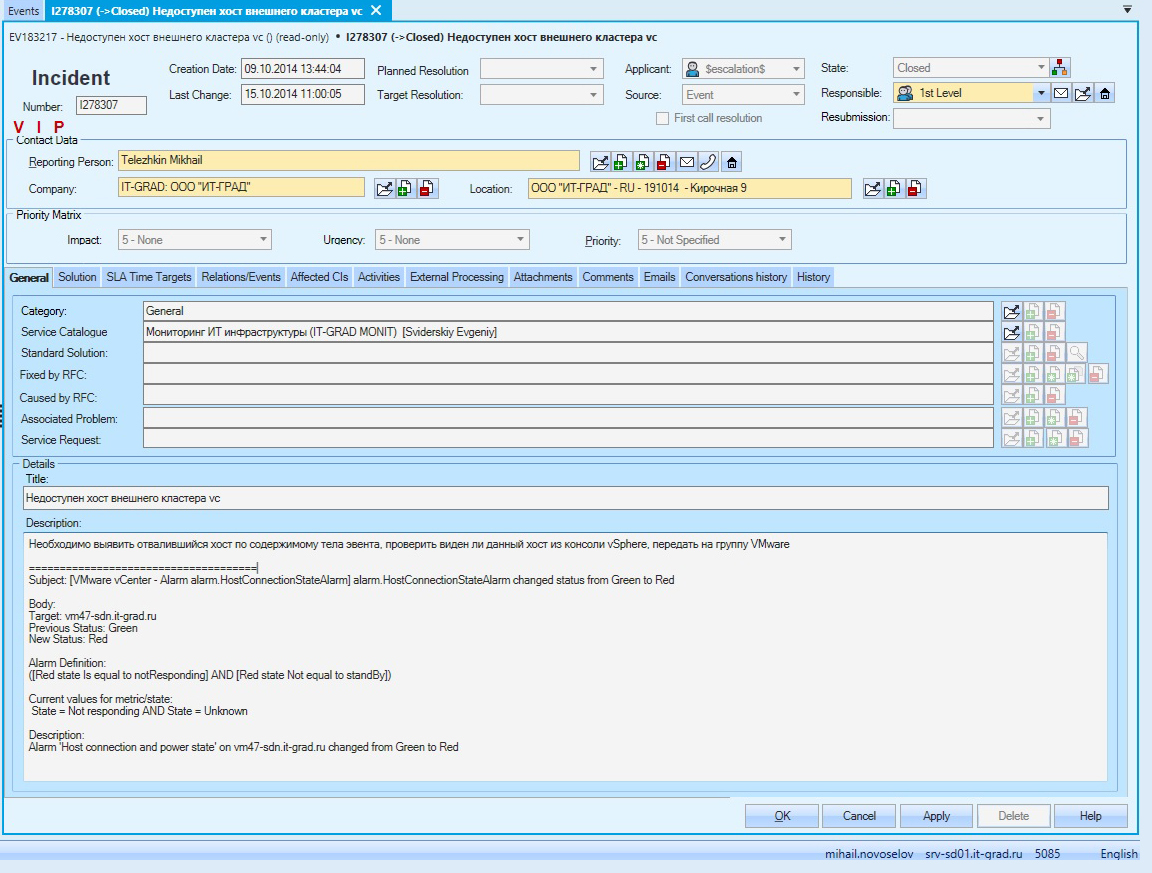

| Receive error messages. | What can I say ... We got multiple mailings of errors and warnings, the system of requests and appeals processed notifications by templates, servicedesk reacted impeccably. |

The ITSM system parsed these emails against templates and generated events. Incidents were then opened automatically from those events. Here is one of the incidents that the ITSM system created from events in the monitoring system.

One of these incidents was assigned to me.
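
The chain "email, template, event, incident" is easy to picture in code. The Python sketch below is only an illustration of the idea, not our ITSM configuration: the two subject-line formats, the `Event` fields and the rule "one incident per distinct ERROR event" are all assumptions invented for the example.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Event:
    source: str
    severity: str
    host: str
    message: str


# Hypothetical subject-line templates, one per monitoring source.
TEMPLATES = [
    ("storage", re.compile(r"^STORAGE (?P<severity>\w+) (?P<host>\S+): (?P<message>.+)$")),
    ("vsphere", re.compile(r"^\[vCenter\] (?P<host>\S+) - (?P<severity>\w+) - (?P<message>.+)$")),
]


def parse_subject(subject: str) -> Optional[Event]:
    """Match a subject line against the templates; None if nothing fits."""
    for source, pattern in TEMPLATES:
        match = pattern.match(subject.strip())
        if match:
            return Event(source, match["severity"].upper(), match["host"], match["message"])
    return None


def open_incidents(subjects: Iterable[str]) -> List[Event]:
    """Return one incident per distinct ERROR event, in order of arrival.

    Repeated emails about the same problem are collapsed, warnings and
    unrecognized emails are skipped (assumed policy).
    """
    seen = set()
    incidents: List[Event] = []
    for subject in subjects:
        event = parse_subject(subject)
        if event is None or event.severity != "ERROR" or event in seen:
            continue
        seen.add(event)
        incidents.append(event)
    return incidents
```

Feeding it two identical `STORAGE ERROR node-1: power supply 1 failed` subjects, one vCenter warning and one unrelated email yields a single incident for `node-1`. Collapsing duplicates matters in a crash test, where every pulled cable produces a burst of near-identical emails.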

Testing on site, directly at the equipment

1. Disconnecting power cables (all pieces of equipment)

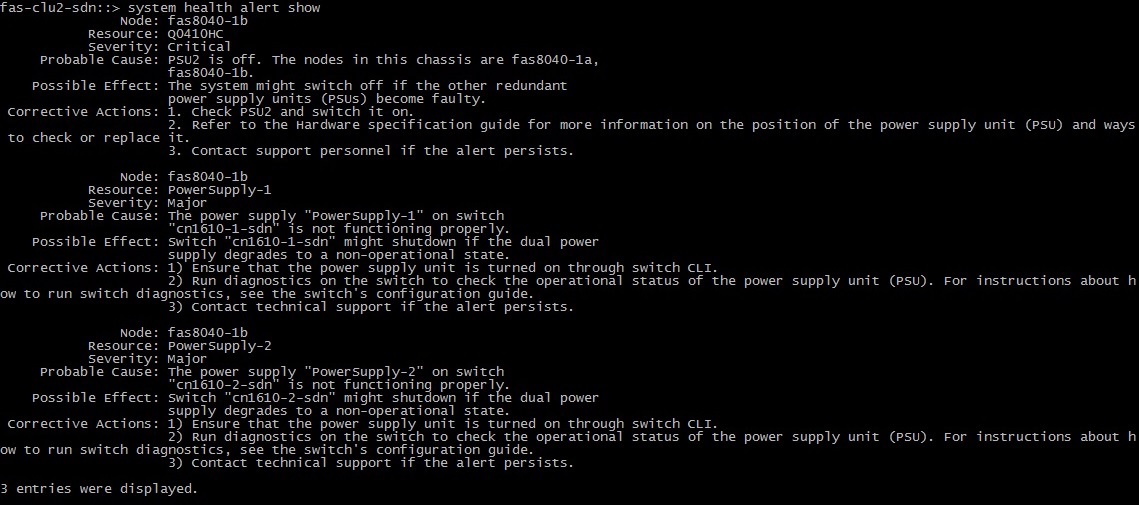

Nothing unexpected here, unless of course you discover that one of the power supplies is faulty.

Throughout the test, not a single piece of hardware was harmed.

But NetApp did report the events, both for itself and for the Cluster Interconnect switches:

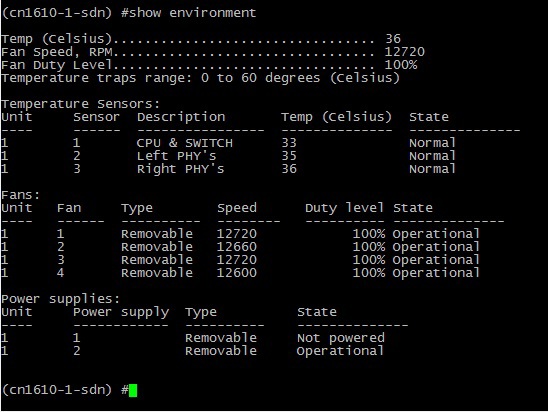

On the Cluster-Net switch:

Host errors in VMware vSphere:

Note: The Cisco SG200-26 management switch has no power redundancy.

This switch belongs to the management access network (the management ports of the storage systems and servers). Powering it off will not cause downtime for client services. Its failure will not lead to a loss of monitoring either, because infrastructure availability is monitored over the management network formed at the Cisco Nexus 5548 level. The management switch logically sits behind the Nexus and serves ONLY for access to the equipment management consoles.

Even so, to avoid losing management access through this switch, we have already purchased an APC AP7721 Automatic Transfer Switch, which provides redundant power from two buses.

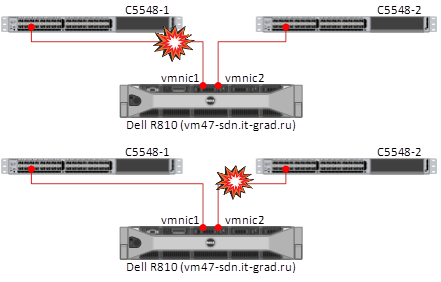
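
The note about the SG200-26 is the kind of finding that can be caught from an inventory before anyone touches a power cable. Here is a minimal Python sketch of such a check, assuming an inventory that maps each device to the power buses its power supplies are plugged into. The bus names "A" and "B" and the feed assignments are invented for the example; only the device models come from our setup.

```python
from typing import Dict, List


def lacks_power_redundancy(feeds: Dict[str, List[str]]) -> List[str]:
    """Devices that do not draw power from at least two distinct buses."""
    return sorted(device for device, buses in feeds.items() if len(set(buses)) < 2)


# Hypothetical inventory: device -> bus of each power supply.
FEEDS = {
    "NetApp FAS8040": ["A", "B"],
    "NetApp CN1610": ["A", "B"],
    "Cisco Nexus 5548": ["A", "B"],
    "Dell R620": ["A", "B"],
    "Cisco SG200-26": ["A"],  # single power supply
}

# The same inventory once the switch is fed through a transfer switch
# that is itself connected to both buses.
FEEDS_WITH_ATS = {**FEEDS, "Cisco SG200-26": ["A", "B"]}
```

With these assumed feeds, `lacks_power_redundancy(FEEDS)` flags only the Cisco SG200-26, and the list is empty for `FEEDS_WITH_ATS`. The model is intentionally crude: it treats the transfer switch as two independent feeds and ignores that the ATS is itself a component that can fail.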

2. One-time disconnection of network links from the ESXi hosts (Dell R620 / R810)

The connection between the host and the datastore was not lost; access to ESXi went over the second link.

That's all. All tests were successful and the acceptance tests are passed. The cloud hardware is ready for deploying virtual infrastructure for new customers.

PS

For a long time after the tests, I could not shake the feeling of power and solidity that comes from reliable hardware, which I got to touch with my own hands while testing the whole complex for fault tolerance.