How we moved our cloud from 10G Ethernet to 56G InfiniBand

Mellanox MC2609125-005 cable

In our case, InfiniBand promised to be five times faster than Ethernet at roughly the same cost. There was just one difficulty: the switch had to happen without interrupting cloud services in the data center. It is a bit like rebuilding a car's engine while driving.

In Russia, nobody had done projects like this before. Everyone who tried to switch from Ethernet to InfiniBand ended up stopping their infrastructure for a day or two, one way or another. Our cloud "shoulder" in the Volochaevskaya-1 data center hosts about 60 large customers (including banks, retailers, insurers and critical infrastructure operators) on almost 500 virtual machines running on about a hundred physical servers. We were the first in the country to rebuild storage and network infrastructure like this without downtime, and we are a little proud of it.

InfiniBand cable at the server input

As a result, the bandwidth of the links between cloud servers increased from 10 Gb/s to 56 Gb/s.
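
To put the bandwidth jump in concrete terms, here is a back-of-the-envelope sketch of how long it takes to push a given amount of data over each link. It assumes the link is saturated at its nominal rate with no protocol overhead, which real TCP or IPoIB traffic will not reach, and the 64 GiB payload is a purely hypothetical example.

```python
# Back-of-the-envelope comparison of nominal link rates.
# Assumption: the link runs at its nominal rate and all protocol/encoding
# overhead is ignored, so real-world transfer times will be longer.

GBIT = 10**9  # network rates use decimal units: 1 Gb = 10^9 bits


def transfer_seconds(size_bytes: int, link_gbps: int) -> float:
    """Seconds needed to move size_bytes over a link of link_gbps Gb/s."""
    return size_bytes * 8 / (link_gbps * GBIT)


ETHERNET_GBPS = 10
FDR_GBPS = 56

speedup = FDR_GBPS / ETHERNET_GBPS
print(f"nominal speedup: {speedup:.1f}x")

# Hypothetical payload: the RAM of a VM with 64 GiB of memory.
vm_bytes = 64 * 2**30
for name, rate in [("10G Ethernet", ETHERNET_GBPS), ("56G FDR", FDR_GBPS)]:
    print(f"{name}: {transfer_seconds(vm_bytes, rate):.1f} s")
```

Note that 56 Gb/s is the FDR 4x signaling rate; after 64b/66b encoding the usable data rate is closer to 54.5 Gb/s, so the practical ratio is slightly below the nominal 5.6x.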

What we had

In the Volochaevskaya-1 data center, the servers were interconnected by 10G Ethernet. We started seriously evaluating InfiniBand for ourselves while designing our next data center, Compressor (Tier III). When we compared the total cost of ownership, including adapters, switches, cables and support, InfiniBand turned out to cost almost the same as Ethernet. But it offered much lower network latency and was simply at least five times faster.

It is also worth mentioning that at facilities like ours InfiniBand is much easier to set up and maintain. The network is designed once and then quietly expanded simply by plugging in new hardware. No endless tinkering, as with complex Ethernet architectures, and no walking from switch to switch adjusting settings for each situation. If a switch has a problem, only that switch goes down, not the entire segment. Inside the infrastructure it is true plug-and-play.

So, having built the cloud shoulder in the Compressor data center on InfiniBand and seen how well the technology works, we decided to rebuild the Volochaevskaya-1 cloud shoulder on FDR InfiniBand as well.

The supplier

There are four major InfiniBand vendors, but in practice it is worth building the entire network on homogeneous equipment from a single vendor, and we chose Mellanox. Besides, at design time it was the only vendor that supported the newest InfiniBand standard, FDR. We also already had excellent experience reducing network latency for several customers (including a large financial company) with Mellanox equipment, and their technology had proven itself very well.

InfiniBand FDR latency is about 1 to 1.5 microseconds, versus about 30 to 100 microseconds for Ethernet. That is a difference of roughly 20 to 100 times, approaching two orders of magnitude, and in practice we saw about the same difference in our own case.
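
The ratio implied by these latency ranges is easy to check. The sketch below simply takes the quoted figures and computes the best- and worst-case ratios and their base-10 logarithms; it says nothing about how a particular application experiences latency.

```python
import math

# Latency ranges quoted in the text, in microseconds.
IB_FDR_US = (1.0, 1.5)
ETHERNET_US = (30.0, 100.0)

# Smallest ratio: fastest Ethernet vs slowest InfiniBand.
min_ratio = ETHERNET_US[0] / IB_FDR_US[1]
# Largest ratio: slowest Ethernet vs fastest InfiniBand.
max_ratio = ETHERNET_US[1] / IB_FDR_US[0]

print(f"ratio range: {min_ratio:.0f}x .. {max_ratio:.0f}x")
print(f"orders of magnitude: {math.log10(min_ratio):.2f} .. {math.log10(max_ratio):.2f}")
```

So the gap spans roughly 1.3 to 2 orders of magnitude depending on which ends of the ranges you compare.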

As for topology and architecture, we decided to make our lives much simpler. It turned out to be neither difficult nor expensive to replicate exactly the same design as in our second cloud shoulder, in the Compressor data center (our cloud runs in two data centers), which gave us two identical sites. Why bother? Spare equipment is easier to stock, the sites are easier to maintain, and there is no equipment zoo where every device needs its own approach. Plus, some of our equipment was about to reach end of support, so we replaced the old servers at the same time. To be clear, this did not drive the decision, but it turned out to be a pleasant bonus.

The most important reason was lower network latency. Many of our cloud customers use synchronous replication, so lower network latency can indirectly speed up their workloads, storage in particular. InfiniBand also lets us migrate virtual machines much faster. Looking further ahead, since InfiniBand supports RDMA, we will be able to make virtual machine migration significantly faster still by replacing TCP with RDMA.
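
Why latency matters for synchronous replication: a write is acknowledged only after the remote copy confirms it, so every write pays at least one network round trip. The toy model below assumes a single outstanding write (queue depth 1), treats the quoted latencies as one-way figures (the text does not say), picks 50 µs as a point inside the Ethernet range, and uses a hypothetical 100 µs storage write time. It ignores the software stack entirely.

```python
# Toy model of one synchronously replicated write (queue depth 1).
# Assumptions, for illustration only:
#   - the local write overlaps with the remote one, so the client waits for
#     the network round trip plus the remote write;
#   - round trip = 2 x the one-way network latency;
#   - storage write latency is the same on both sites (hypothetical value).

def sync_write_latency_us(storage_us: float, one_way_net_us: float) -> float:
    """Microseconds until a synchronously replicated write is acknowledged."""
    return 2 * one_way_net_us + storage_us


def iops_at_qd1(latency_us: float) -> float:
    """Writes per second when only one write is in flight at a time."""
    return 1_000_000 / latency_us


STORAGE_US = 100.0  # hypothetical flash write latency

for name, one_way_us in [("10G Ethernet", 50.0), ("FDR InfiniBand", 1.5)]:
    lat = sync_write_latency_us(STORAGE_US, one_way_us)
    print(f"{name}: {lat:.0f} us per write, ~{iops_at_qd1(lat):.0f} IOPS at QD1")
```

The benefit is largest when the network is a big share of the total write time; with slow disks, the same latency saving barely moves the result, which is why the effect on storage is only indirect.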

Of course, RDMA, TRILL and ECMP are now appearing in the Ethernet world and together provide similar capabilities. But in InfiniBand, leaf-spine topologies, RDMA and automatic configuration have existed for a long time and were designed into the protocol itself rather than added as ad hoc solutions, as in Ethernet.
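
Leaf-spine topologies are straightforward to size. The sketch below covers a generic two-tier, non-blocking fat tree (an assumed layout; the article does not give our actual leaf and spine counts): each leaf splits its ports evenly between servers and uplinks, with one uplink per spine, and each spine port goes to a different leaf. The 36-port radix matches the SX6036; the host count of 100 echoes the "about a hundred physical servers" above.

```python
from dataclasses import dataclass


@dataclass
class LeafSpine:
    leaves: int
    spines: int
    hosts: int


def max_nonblocking(radix: int) -> LeafSpine:
    """Largest two-tier non-blocking fat tree built from radix-port switches.

    Each leaf uses radix/2 ports for hosts and radix/2 ports as uplinks
    (one to each spine); each spine has one port per leaf.
    """
    if radix % 2:
        raise ValueError("radix must be even for a 1:1 host/uplink split")
    half = radix // 2
    spines = half    # one uplink from every leaf to every spine
    leaves = radix   # every spine port connects a different leaf
    return LeafSpine(leaves=leaves, spines=spines, hosts=leaves * half)


def leaves_needed(hosts: int, radix: int) -> int:
    """Leaf switches needed for a host count, rounding up."""
    half = radix // 2
    return -(-hosts // half)  # ceiling division


print(max_nonblocking(36))
print(leaves_needed(100, 36))
```

Growing the network within these limits means adding leaves (and spines if needed) without redesigning the existing part, which is the "design once, then expand" property described earlier.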

The move

In the Volochaevskaya cloud shoulder, the network and storage segments ran on 10-gigabit Ethernet. We connected the new Mellanox-based infrastructure segment to it so that together they formed one L2 segment, and everything on the old stand was live-migrated to the new one. Customers noticed nothing except faster services: no machines were shut down, and no critical problems occurred during the move.
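
Live migration is what made a move without shutdowns possible, and link bandwidth directly affects how long it takes. Below is a simplified model of iterative pre-copy migration, the general technique used by hypervisors such as KVM/QEMU; we do not claim it reproduces our platform's exact behavior. All memory is copied first, then each round re-sends the pages dirtied during the previous round, until the remainder is small enough for a brief pause (stop-and-copy). The memory size, dirty rate and stop threshold are hypothetical.

```python
from typing import Optional, Tuple


def precopy_migration(mem_gb: float, dirty_gb_per_s: float,
                      link_gbit_per_s: float, stop_gb: float = 0.5,
                      max_rounds: int = 30) -> Optional[Tuple[float, float, int]]:
    """Estimate iterative pre-copy live migration.

    Returns (total_seconds, pause_seconds, copy_rounds), or None if the
    remaining dirty memory never drops to stop_gb within max_rounds.
    """
    link_gb_per_s = link_gbit_per_s / 8  # network Gb/s -> GB/s
    to_send = mem_gb  # round 1 copies all guest memory
    total = 0.0
    for rounds in range(1, max_rounds + 1):
        round_time = to_send / link_gb_per_s
        total += round_time
        # Pages dirtied while this round was in flight must be re-sent
        # (never more than the whole memory).
        to_send = min(mem_gb, dirty_gb_per_s * round_time)
        if to_send <= stop_gb:
            # Pause the VM and copy the small remainder (stop-and-copy).
            pause = to_send / link_gb_per_s
            return total + pause, pause, rounds
    return None  # dirty rate too close to the link rate to converge


# Hypothetical VM: 64 GB of RAM, dirtying 0.5 GB/s under load.
for link in (10, 56):
    result = precopy_migration(mem_gb=64, dirty_gb_per_s=0.5, link_gbit_per_s=link)
    if result is None:
        print(f"{link} Gb/s: does not converge")
    else:
        total, pause, rounds = result
        print(f"{link} Gb/s: {total:.1f} s total, {pause * 1000:.0f} ms pause, "
              f"{rounds} copy rounds")
```

The key quantity is the ratio of dirty rate to link rate: a faster link not only shortens each round but also shrinks the amount re-dirtied, so migration converges in fewer rounds, and a busy VM that never converges on 10G may migrate cleanly on 56G.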

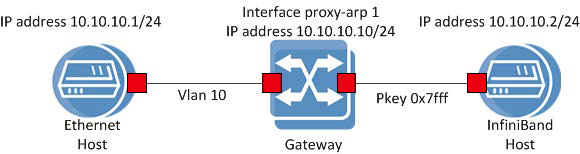

Here is a simplified version of the transfer scheme:

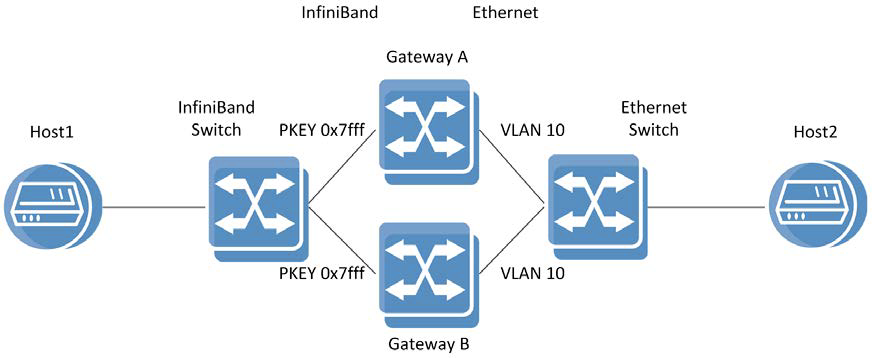

Here is a more complex one:

In effect, we created a single data transport medium spanning the two stands, even though the two technologies are not really compatible in principle. We moved the customers' virtual infrastructure and then disconnected the stands.

To join the two environments, we used Mellanox SX6036 managed switches. Before we designed a test bench for the cloud migration, a Mellanox engineer visited us for consultations and technical assistance. Once we had aligned our plans with the capabilities of the Mellanox equipment, he sent us gateway (GW) licenses that let us use the switch as a Proxy-ARP gateway, passing traffic between the Ethernet network and the InfiniBand segment.

In total, the move took about a month (not counting the design work). It was split into several stages. First, we built a test bench, a miniature model of the cloud, to check that the idea was viable (we had to migrate not only the regular network but also the storage network). We rehearsed the move on the test bench several times and then started writing the plan for the production migration.

Test bench layout
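
The Proxy-ARP gateway role mentioned above can be illustrated with a toy model. This is not Mellanox's implementation, only the idea behind it: when an Ethernet host asks who has a given IP, and that IP lives behind the gateway on the InfiniBand side, the gateway answers with its own Ethernet MAC and then forwards frames sent to that MAC to the matching IPoIB endpoint. The class, the addresses (from the 192.0.2.0/24 documentation range) and the IPoIB address strings are all hypothetical.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address
from typing import Dict, Optional


@dataclass
class ProxyArpGateway:
    """Toy proxy-ARP gateway between an Ethernet and an IPoIB segment."""
    eth_mac: str  # the gateway's own MAC address on the Ethernet side
    # IPs reachable on the InfiniBand side -> their IPoIB hardware address
    ib_hosts: Dict[IPv4Address, str] = field(default_factory=dict)

    def learn_ib_host(self, ip: str, ipoib_hwaddr: str) -> None:
        """Register a host that lives on the InfiniBand side."""
        self.ib_hosts[IPv4Address(ip)] = ipoib_hwaddr

    def handle_arp_request(self, target_ip: str) -> Optional[str]:
        """Return the MAC for an ARP reply, or None to stay silent.

        The gateway answers only for InfiniBand-side hosts; hosts on the
        Ethernet side answer their own ARP requests.
        """
        if IPv4Address(target_ip) in self.ib_hosts:
            return self.eth_mac
        return None

    def forward_to_ib(self, dst_ip: str) -> Optional[str]:
        """Pick the IPoIB destination for a frame sent to the gateway MAC."""
        return self.ib_hosts.get(IPv4Address(dst_ip))


gw = ProxyArpGateway(eth_mac="02:00:00:00:00:01")
gw.learn_ib_host("192.0.2.10", "ipoib-hwaddr-of-vm-10")  # hypothetical VM
print(gw.handle_arp_request("192.0.2.10"))  # gateway answers with its own MAC
print(gw.handle_arp_request("192.0.2.20"))  # None: Ethernet host answers itself
```

Because Ethernet hosts only ever see the gateway's MAC for InfiniBand-side addresses, both stands can share one L2 segment and one IP plan while VMs move from one side to the other.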

Next, the operations team switched to night shifts and gradually installed everything that was needed. That old joke about the wife whose husband disappears at night to who knows where, claiming he is at work, is exactly about moves like this one.

The specific scheme (IP addresses, VLANs and PKeys needed to set up the equipment) for the transition from the 10G network to InfiniBand:

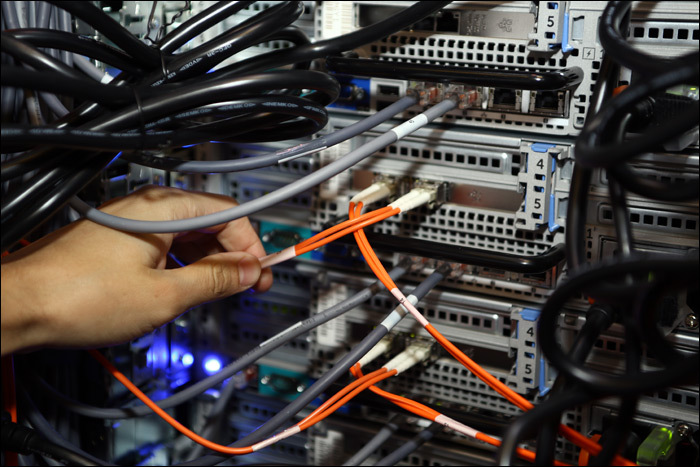
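
The scheme combines Ethernet VLANs with InfiniBand partition keys (PKeys). A natural way to tie them together is a one-to-one mapping, and the sketch below builds and validates one. The numbering rule (partition number equal to the VLAN ID) is a hypothetical convention, not the one from our scheme. The bit layout follows the InfiniBand specification: a PKey is 16 bits, the top bit marks full (1) versus limited (0) membership, and the low 15 bits identify the partition, with 0x7FFF reserved for the default partition.

```python
from typing import Dict, Iterable

FULL_MEMBER = 0x8000  # top bit of a PKey: full (1) vs limited (0) membership


def vlan_to_pkey(vlan_id: int, full_member: bool = True) -> int:
    """Map a VLAN ID to a PKey using a hypothetical 'partition == VLAN ID' rule."""
    if not 1 <= vlan_id <= 4094:  # VLAN IDs 0 and 4095 are reserved
        raise ValueError(f"invalid VLAN ID {vlan_id}")
    # VLAN IDs never exceed 4094, so the 15-bit partition number can never
    # be 0 (invalid) or 0x7FFF (the default partition).
    partition = vlan_id
    return (FULL_MEMBER | partition) if full_member else partition


def build_mapping(vlans: Iterable[int]) -> Dict[int, str]:
    """Return {vlan_id: 'PKey in hex'} for a list of VLANs, rejecting duplicates."""
    mapping: Dict[int, str] = {}
    for vlan in vlans:
        if vlan in mapping:
            raise ValueError(f"duplicate VLAN {vlan}")
        mapping[vlan] = f"0x{vlan_to_pkey(vlan):04x}"
    return mapping


print(build_mapping([100, 200, 300]))  # hypothetical VLAN IDs
```

Generating the table from one rule, rather than typing PKeys by hand, makes it harder to give two VLANs the same partition during late-night switch configuration.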

During installation

QSFP to 4xSFP+ hybrid cable connected to the Mellanox SX6036

The same cable connected to the 10G Ethernet switch module

Optics at the entry to the 10G Ethernet segment

The old equipment is now sidelined, and we have already started taking it apart, slowly and calmly removing it from the racks. Most likely it will go to test environments, where it will help us work through even more complex technical cases, which we will be sure to write about.

That's all. Formally the move is not finished yet (the old racks still have to be removed), but we are already very pleased with the result. I will be happy to answer your questions about the move in the comments or by email at mberezin@croc.ru.