Determining watermelon ripeness with Keras: the full cycle, from idea to an app on Google Play

How it all began

It all started with the App Store: I found an app there that claims to determine the ripeness of a watermelon. The app is... strange. Just take its suggestion to knock on the watermelon not with your knuckles, but with... your phone! Nevertheless, I wanted to repeat this achievement on the more familiar Android platform.

Choosing the tools

Our task can be solved in several ways, and to be honest, it took a lot of effort not to go the "simple" way, that is, take the Fourier transform, wavelets and signal processing. However, I wanted to gain experience with neural networks, so let the networks analyze the data.

Keras, a high-level add-on over TensorFlow and Theano, was chosen as the library for creating and training neural networks. In general, if you are just starting to work with deep learning networks, you won't find a better tool. On the one hand, Keras is a powerful tool optimized for speed, memory and hardware (yes, it can run on GPUs and GPU clusters). On the other hand, everything that can be "hidden" from the user is hidden, so you don't have to wrestle with wiring neural network layers together, for example. Very convenient.

Both Keras and neural networks in general require knowledge of Python, a language that, like a snake, has wrapped itself around... sorry, that got painful. In short, there is no getting into modern deep learning without Python. Fortunately, Python can be learned in two weeks, a month at most.

You will need a few more Python libraries, but these are trifles, that is, if you have already coped with Python itself. You will need a (very superficial) acquaintance with NumPy, PyPlot and maybe a couple of other libraries, from which we will take literally a couple of functions. Not difficult. Really.

Finally, note that we won't need the GPU clusters mentioned above: our task is solved fine on an ordinary CPU, slowly, but not critically so.

Work plan

First we need to create a neural network in Python and Keras, under Ubuntu. You can run Ubuntu in a virtual machine. You can also work under Windows, but the extra time that costs would be enough to learn Ubuntu and keep working in it.

The next step is to write the app. I plan to do this in Java for Android. It will be a prototype, in the sense that it will have a user interface but no neural network yet.

What is the point of writing a "dummy", you ask? Here it is: any data analysis task sooner or later comes down to obtaining data to train our program. Really, how many watermelons have to be tapped and tasted for the neural network to build a reliable model from that data? A hundred? More?

This is where our app helps: publish it on Google Play, hand it out (well, force it on them, twisting arms) to all your friends who have the misfortune of owning an Android phone, and the data starts flowing in a tiny stream... and by the way, flowing where?

The next step is to write a server program that accepts data from our Android client. Admittedly, this server program is very simple; I wrote the whole thing in about twenty minutes. Still, it is a separate stage.

Finally, there is enough data. We train the neural network.

We port the neural network to Java and release an update of our program.

Profit! Well, no. The app was free. All I got was experience and a few bumps and bruises.

Creating a neural network

For audio, and tapping on a watermelon is of course audio, the choice is either recurrent neural networks or so-called one-dimensional convolutional networks. Lately, convolutional networks have been clearly leading and displacing recurrent ones. The idea of a convolutional network is that a window slides over the data array (the "sound intensity vs. time" graph), so instead of analyzing hundreds of thousands of samples at once, we work only with what falls into the window. The following layers combine and analyze the output of this layer.

To make it clearer, imagine that you need to find a seagull in a photo of a seascape. You scan the picture: the "window" of your attention moves along imaginary rows and columns, looking for a white check mark. This is how a 2D convolutional network works, while a one-dimensional one scans along a single coordinate, which is the optimal choice when dealing with a sound signal.
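To make the sliding window concrete, here is a minimal NumPy sketch of what a single Conv1D filter computes with 'valid' padding and no bias, as in the first layer of the network below. Like Keras, it takes a dot product of each window with the weights (cross-correlation, no kernel flip). The toy signal and kernel are made up for illustration; in a real network the kernel weights are learned.

```python
import numpy as np


def conv1d_valid(signal, kernel, stride=1):
    """Slide `kernel` over `signal` with the given stride ('valid' padding, no bias)."""
    k = len(kernel)
    n_out = (len(signal) - k) // stride + 1
    out = np.empty(n_out)
    for i in range(n_out):
        window = signal[i * stride : i * stride + k]  # what "falls into the window"
        out[i] = np.dot(window, kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


# A toy "tap": silence, a short burst, silence
signal = np.array([0.0, 0.0, 0.9, -0.7, 0.4, 0.0, 0.0, 0.0])
kernel = np.array([1.0, -1.0])  # a tiny hand-made edge detector; real filters are learned
print(relu(conv1d_valid(signal, kernel)))
```

Only the windows that overlap the burst produce non-zero responses; everything else is silence, which is exactly why a network can focus on the taps themselves.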

Note, however, that you don't have to limit yourself to 1D networks. As an exercise, I plotted the sound and analyzed the resulting bitmap as an image using a 2D convolutional network. To my surprise, the result was no worse than when analyzing the "raw" one-dimensional data.
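The plotting code for that experiment isn't shown. As a hypothetical stand-in, the sketch below rasterizes a normalized waveform directly into a 0/1 bitmap with NumPy, which could then be fed to a Conv2D layer as a `(height, width, 1)` image. The function name and image size are assumptions for illustration, not the method actually used.

```python
import numpy as np


def waveform_to_bitmap(samples, width, height):
    """Rasterize a 1D signal (values in [-1, 1]) into a height x width 0/1 image.

    Each column covers an equal slice of time; the pixels between the slice's
    minimum and maximum amplitude are set to 1, like a plotted waveform.
    """
    samples = np.clip(np.asarray(samples, dtype=float), -1.0, 1.0)
    image = np.zeros((height, width), dtype=np.uint8)
    for col, chunk in enumerate(np.array_split(samples, width)):
        if chunk.size == 0:
            continue
        # Map amplitude 1..-1 to row 0..height-1 (row 0 is the top of the picture)
        top = int(round((1.0 - chunk.max()) / 2.0 * (height - 1)))
        bottom = int(round((1.0 - chunk.min()) / 2.0 * (height - 1)))
        image[top : bottom + 1, col] = 1
    return image


bitmap = waveform_to_bitmap(np.sin(np.linspace(0, 20, 1000)), width=64, height=32)
print(bitmap.shape)
```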

The network used had the following structure:

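```python
from keras.models import Sequential
from keras.layers import Conv1D, Activation, MaxPooling1D, Flatten, Dense, Dropout

nSampleSize = 5 * 16000  # hypothetical: 5 s of audio at an assumed 16 kHz; use your real length
nNumOfOutputs = 2        # sweetness and ripeness

model = Sequential()
# Wide first filter: a 512-sample window slides over the raw signal with stride 3
model.add(Conv1D(filters=32, kernel_size=512, strides=3,
                 padding='valid', use_bias=False, input_shape=(nSampleSize, 1), name='c1d',
                 activation='relu'))
# Redundant (c1d already applies ReLU) but harmless, since relu(relu(x)) == relu(x)
model.add(Activation('relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Conv1D(32, 3))
model.add(Activation('relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Conv1D(64, 3))
model.add(Activation('relu'))
model.add(MaxPooling1D(pool_size=2))
model.add(Flatten())
model.add(Dense(64))
model.add(Activation('relu'))
model.add(Dropout(0.5))
model.add(Dense(nNumOfOutputs))
model.add(Activation('sigmoid'))  # both outputs in [0, 1], like the normalized labels

model.compile(loss='mean_squared_error',
              optimizer='adam',
              metrics=['accuracy'])
```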
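Since the input length nSampleSize isn't given in the text, here is a small sketch for working out how long the sequence is after each conv/pool stage of the network above and how large the Flatten output, and therefore the first Dense layer, becomes. It assumes Keras defaults ('valid' padding, pooling stride equal to pool size); the 80,000-sample input is a hypothetical value.

```python
def conv_len(n, kernel, stride=1):
    """Output length of a 'valid' Conv1D."""
    return (n - kernel) // stride + 1


def pool_len(n, pool=2):
    """Output length of MaxPooling1D with the default stride (= pool size)."""
    return n // pool


def flatten_size(n_samples):
    n = pool_len(conv_len(n_samples, 512, 3))  # c1d + pooling
    n = pool_len(conv_len(n, 3))               # Conv1D(32, 3) + pooling
    n = pool_len(conv_len(n, 3))               # Conv1D(64, 3) + pooling
    return n * 64                              # Flatten: length x 64 channels


n_samples = 5 * 16000  # hypothetical: 5 s at an assumed 16 kHz
flat = flatten_size(n_samples)
print(flat, "inputs to Dense(64) ->", flat * 64 + 64, "weights and biases in that layer")
```

Under these assumptions the Dense(64) layer holds far more weights than all convolutions combined, which is worth knowing before the model is shipped to a phone.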

This network has two outputs (it predicts two quantities): sweetness and ripeness. Sweetness is 0 (unsweetened), 1 (normal) or 2 (excellent); ripeness is 0 (too hard), 1 (just right) or 2 (overripe, like cotton wool with sand).

The ratings for the dataset are assigned by a person; exactly how, we'll discuss in the section on the Android app. The task of the neural network is to predict what rating a person would give a particular watermelon, based on a recording of tapping on it.

Writing the app

I have already mentioned that the app should come in two versions. The first, preliminary one honestly warns the user that its predictions are complete nonsense. But it lets the user record a knock on a watermelon, rate the taste of that watermelon and send the result over the Internet to the author. In other words, the first version simply collects data.

Here is the app's page on Google Play; of course, the app is free.

What it does:

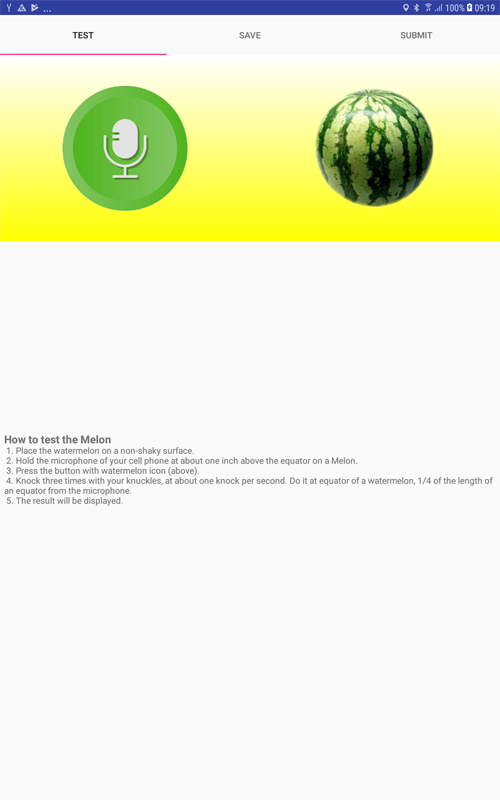

1. Press the microphone button, and recording starts. You have five seconds to tap the watermelon three times: tap-tap-tap. The watermelon button makes a "prediction"; we don't touch it for now.

Note: if Google Play still has the old version, recording and prediction are combined in the watermelon button, and there is no microphone button.

2. The saved file is temporary and is overwritten the next time you press the record button. This lets you repeat the tapping if someone starts talking at the wrong moment (you have no idea how hard it is to make people keep quiet for five seconds!), or water is running, dishes are clinking, the neighbor is drilling...

So the watermelon has been chosen and bought. You brought it home, recorded the sound and cut it open. Now you are ready to rate its taste. Select the Save tab.

On this tab there are two combo boxes for the ratings: sweetness and ripeness (translation of the interface is in progress). Once you have rated the watermelon, click Save.

Attention! Save can be clicked only once, so set the ratings first. Pressing the button renames the sound file, so it will no longer be erased by the next recording.

3. Finally, having recorded (and therefore eaten) about a dozen watermelons, you come back from the dacha, where you had no Internet. Now you do. Open the Submit tab and click the button. The package (with a dozen watermelons) goes to the developer's server.
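The Submit step boils down to an HTTP POST of each recording as multipart/form-data under the field name `file`, which is what the PHP script in the next section reads from `$_FILES['file']`. The app's own Java upload code isn't shown, so this is a Python stand-in that only illustrates the wire format; the function names, URL and file name are hypothetical.

```python
import urllib.request
import uuid


def build_multipart(file_name, payload, field="file"):
    """Build a multipart/form-data body with a single file part named `field`."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{file_name}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + payload + tail, f"multipart/form-data; boundary={boundary}"


def upload(url, file_name, payload):
    """POST one recording; on the server it arrives as $_FILES['file']."""
    body, content_type = build_multipart(file_name, payload)
    request = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": content_type}
    )
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, `upload("https://example.com/upload.php", "melon_01.wav", data)` would send one file; a dozen saved watermelons means a dozen such requests.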

Writing the server program

Everything is simple here, so I'd rather show the full code of the script. The program "catches" uploaded files, gives them unique names and puts them into a directory accessible only to the site owner.

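```php
<?php
// Accept one uploaded file from the Android client (form field "file")
if (is_uploaded_file($_FILES['file']['tmp_name']))
{
    $uploads_dir = './melonaire/';
    $tmp_name = $_FILES['file']['tmp_name'];
    // The original file name is ignored; every upload gets a fresh random name.
    // (md5(date('...u')) would collide: date() always formats microseconds as 000000,
    // so files uploaded within the same second would overwrite each other.)
    $filename = bin2hex(random_bytes(16));
    if (!move_uploaded_file($tmp_name, $uploads_dir . $filename))
    {
        echo "Could not store the uploaded file.";
    }
}
else
{
    echo "File not uploaded successfully.";
}
?>
```

Neural network training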

The data are split into a training set and a test set, 70 and 30 percent respectively. The network converges. No surprises here, but a tip for beginners: don't forget to normalize the input data, it will save you a lot of nerves. Something like this:

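```python
import os
import numpy as np

arr_data, arr_labels = [], []
for file_name in os.listdir(path):  # path: folder with the received recordings
    # loadData() is the author's helper (not shown): both grades plus the raw samples
    nSweetness, nRipeness, arr_loaded = loadData(file_name)
    arr_data.append(arr_loaded / np.max(np.abs(arr_loaded)))  # scale into [-1, 1]
    # 2 = number of combo box items minus 1, so grades 0..2 become 0.0..1.0
    arr_labels.append([nSweetness / 2.0, nRipeness / 2.0])
```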
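The 70/30 split itself isn't shown. A minimal sketch, assuming the examples are shuffled before splitting and that every recording has the same length; the function names and the fixed seed are illustrative choices, not the code actually used. The normalization helper also guards against an all-zero recording, which would otherwise cause a division by zero.

```python
import numpy as np


def normalize_peak(signal):
    """Scale a recording so its largest absolute sample is 1 (silence is left as is)."""
    peak = np.max(np.abs(signal))
    return signal / peak if peak > 0 else signal


def train_test_split_70_30(data, labels, seed=0):
    """Shuffle the examples and split them 70 % / 30 % into train and test sets."""
    data = np.asarray(data)
    labels = np.asarray(labels)
    order = np.random.default_rng(seed).permutation(len(data))
    n_train = int(round(0.7 * len(data)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    return data[train_idx], labels[train_idx], data[test_idx], labels[test_idx]
```

With `arr_data` and `arr_labels` from the loop above, `train_test_split_70_30(arr_data, arr_labels)` returns the four arrays; adding a channel axis with `x[..., np.newaxis]` gives the `(examples, nSampleSize, 1)` shape the first Conv1D layer expects.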

Porting the neural network

There are several ways to port a network from Python to Java. Google has recently made this process more convenient, so when you read tutorials, make sure they are not out of date. Here's how I did it:

```python
import os
import sys

import tensorflow as tf  # TensorFlow 1.x APIs are used below
from keras import backend as K
from keras.models import load_model
from tensorflow.python.framework import graph_util, graph_io


# -------------------
def print_graph_nodes(filename):
    """Print the input, output and learning-phase nodes of a frozen .pb graph."""
    g = tf.GraphDef()
    with open(filename, 'rb') as f:
        g.ParseFromString(f.read())
    print()
    print(filename)
    print("=======================INPUT=========================")
    print([n for n in g.node if n.name.find('input') != -1])
    print("=======================OUTPUT========================")
    print([n for n in g.node if n.name.find('output') != -1])
    print("===================KERAS_LEARNING=====================")
    print([n for n in g.node if n.name.find('keras_learning_phase') != -1])
    print("======================================================")
    print()


# -------------------
def get_script_path():
    return os.path.dirname(os.path.realpath(sys.argv[0]))


# -------------------
def keras_to_tensorflow(keras_model, output_dir, model_name,
                        out_prefix="output_", log_tensorboard=True):
    """Freeze a Keras model into a TensorFlow .pb graph with named output nodes."""
    if not os.path.exists(output_dir):
        os.mkdir(output_dir)

    # Give every model output a stable node name: output_1, output_2, ...
    out_nodes = []
    for i in range(len(keras_model.outputs)):
        out_nodes.append(out_prefix + str(i + 1))
        tf.identity(keras_model.outputs[i], out_prefix + str(i + 1))

    # Replace variables with constants so the graph file is self-contained
    sess = K.get_session()
    init_graph = sess.graph.as_graph_def()
    main_graph = graph_util.convert_variables_to_constants(sess, init_graph, out_nodes)
    graph_io.write_graph(main_graph, output_dir, name=model_name, as_text=False)

    if log_tensorboard:
        from tensorflow.python.tools import import_pb_to_tensorboard
        import_pb_to_tensorboard.import_to_tensorboard(
            os.path.join(output_dir, model_name), output_dir)


K.set_learning_phase(0)  # build the graph in inference mode (Dropout disabled)
model = load_model(get_script_path() + "/models/model.h5")
keras_to_tensorflow(model, output_dir=get_script_path() + "/models",
                    model_name="converted.pb")
print_graph_nodes(get_script_path() + "/models/converted.pb")
```

Pay attention to the last line: in the Java code you will need to specify the names of the network's input and output nodes, and this print call lists them.

So, we put the resulting converted.pb file into the assets directory of the Android Studio project, add the TensorFlow Android inference library (the TensorFlowInferenceInterface class), and that's it.

That's all. The first time I did this, I expected it to be difficult, but... it worked on the first attempt.

This is what calling the neural network from Java looks like:

```java
protected Void doInBackground(Void... params) {
    try {
        // tf is a TensorFlowInferenceInterface loaded from converted.pb in assets.
        // Pass the input: shape [batch = 1, samples = m_arrInput.length, channels = 1]
        tf.feed(INPUT_NAME, m_arrInput, 1, m_arrInput.length, 1);
        // Compute predictions
        tf.run(new String[]{OUTPUT_NAME});
        // Copy the output into the predictions array
        tf.fetch(OUTPUT_NAME, m_arrPrediction);
    } catch (Exception e) {
        // Report the error instead of silently discarding it
        e.printStackTrace();
    }
    return null;
}
```

Here m_arrPrediction is a two-element array that contains (ta-da!) our prediction, normalized to the range from zero to one.
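To show those two numbers as the grades from the rating combo boxes, the `/ 2.0` label normalization can simply be undone. A minimal sketch in Python (the app would do the same in Java); rounding to the nearest grade and the label strings are assumptions, not necessarily what the app does.

```python
import numpy as np


def decode_prediction(prediction):
    """Turn the two sigmoid outputs (0..1) back into 0/1/2 grades."""
    grades = np.clip(np.rint(np.asarray(prediction) * 2.0), 0, 2).astype(int)
    sweetness, ripeness = grades
    return int(sweetness), int(ripeness)


SWEETNESS = ["unsweetened", "normal", "excellent"]
RIPENESS = ["too hard", "just right", "overripe"]

s, r = decode_prediction([0.81, 0.47])
print(SWEETNESS[s], "/", RIPENESS[r])  # prints: excellent / just right
```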

Conclusion

This is where I'm supposed to thank you for your attention and express the hope that it was interesting. Instead, I'll note that Google Play has the first version of the app. The second one is completely ready, but there is too little data. So if you like watermelons, please install the app on your Android phone. The more data you send, the better the second version will work...

Of course, it will be free.

Good luck, and yes: thank you for your attention. I hope it was interesting.

Important update: a new version has been released with improved analysis. Thanks to everyone who sent watermelons, and please send more!