Cumulus Linux for data center networks

The modern data center differs markedly from the traditional corporate network. Applications rely more on L3 switching instead of assuming that all hosts are on the same subnet; more traffic flows “horizontally” (between servers) than “vertically”; and so on. Undoubtedly, though, the main difference is the enormous scale.

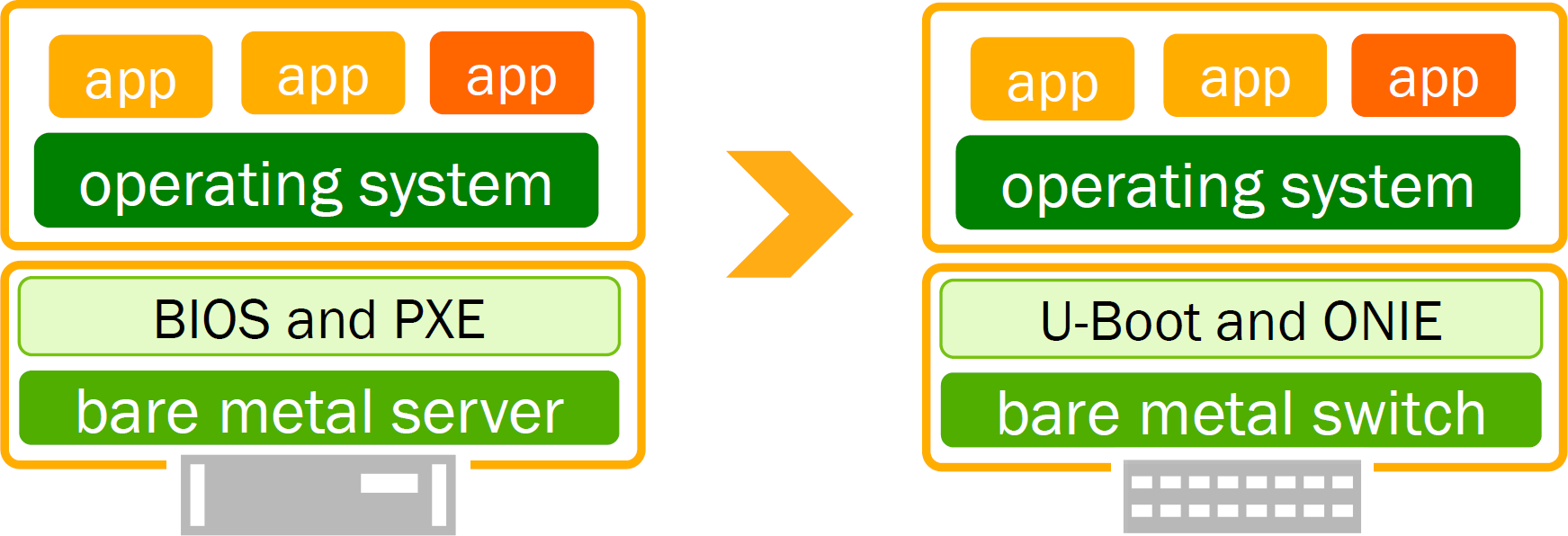

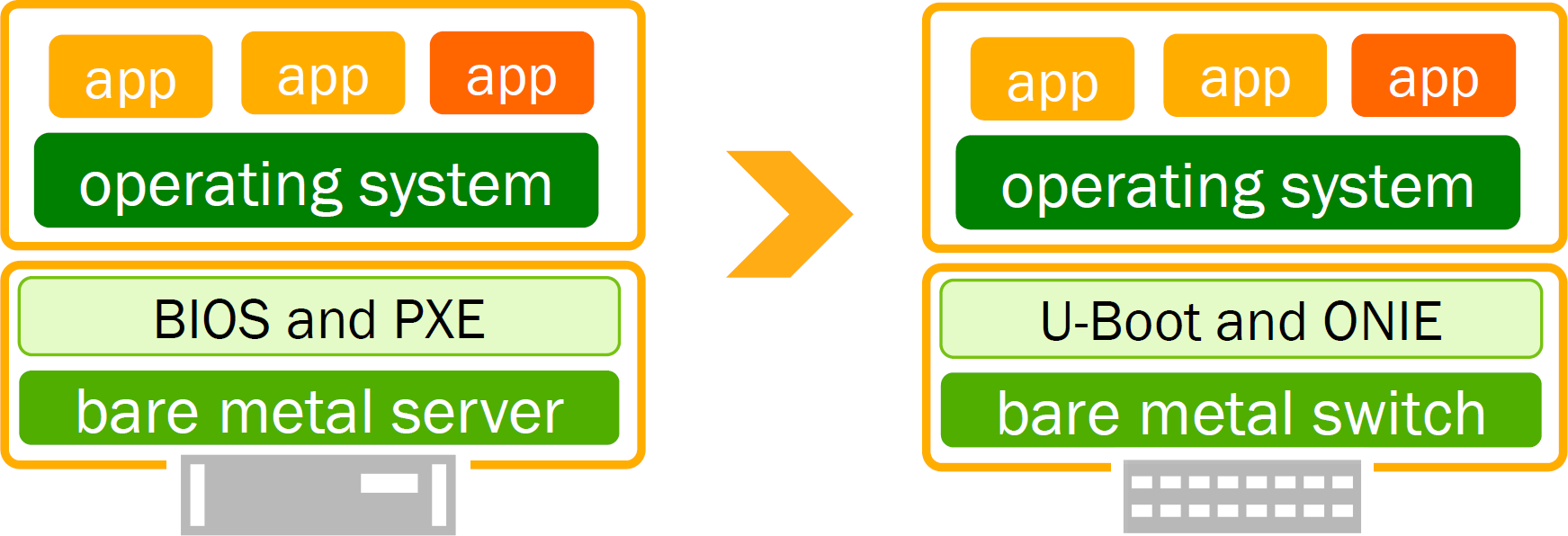

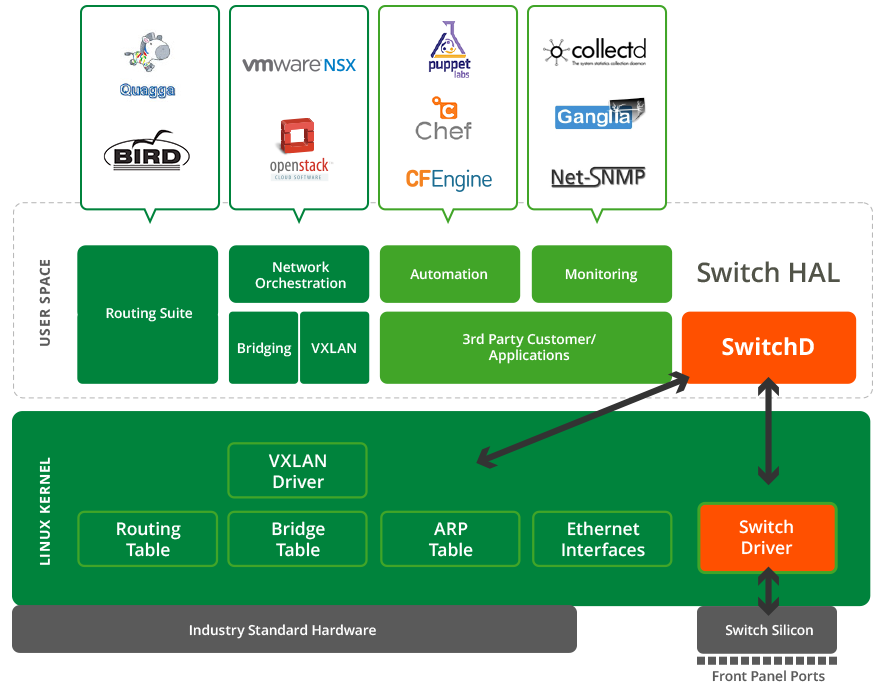

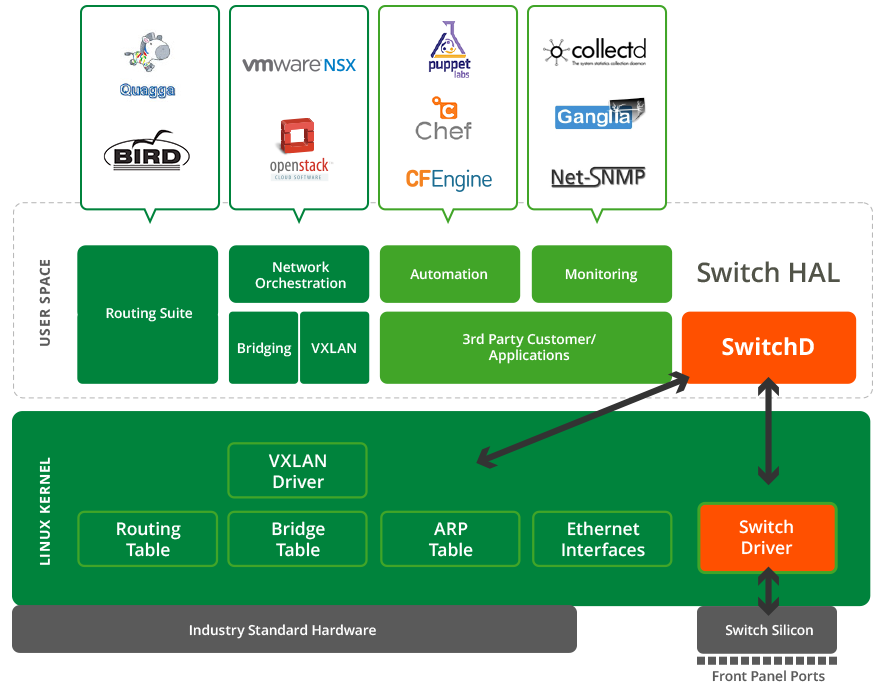

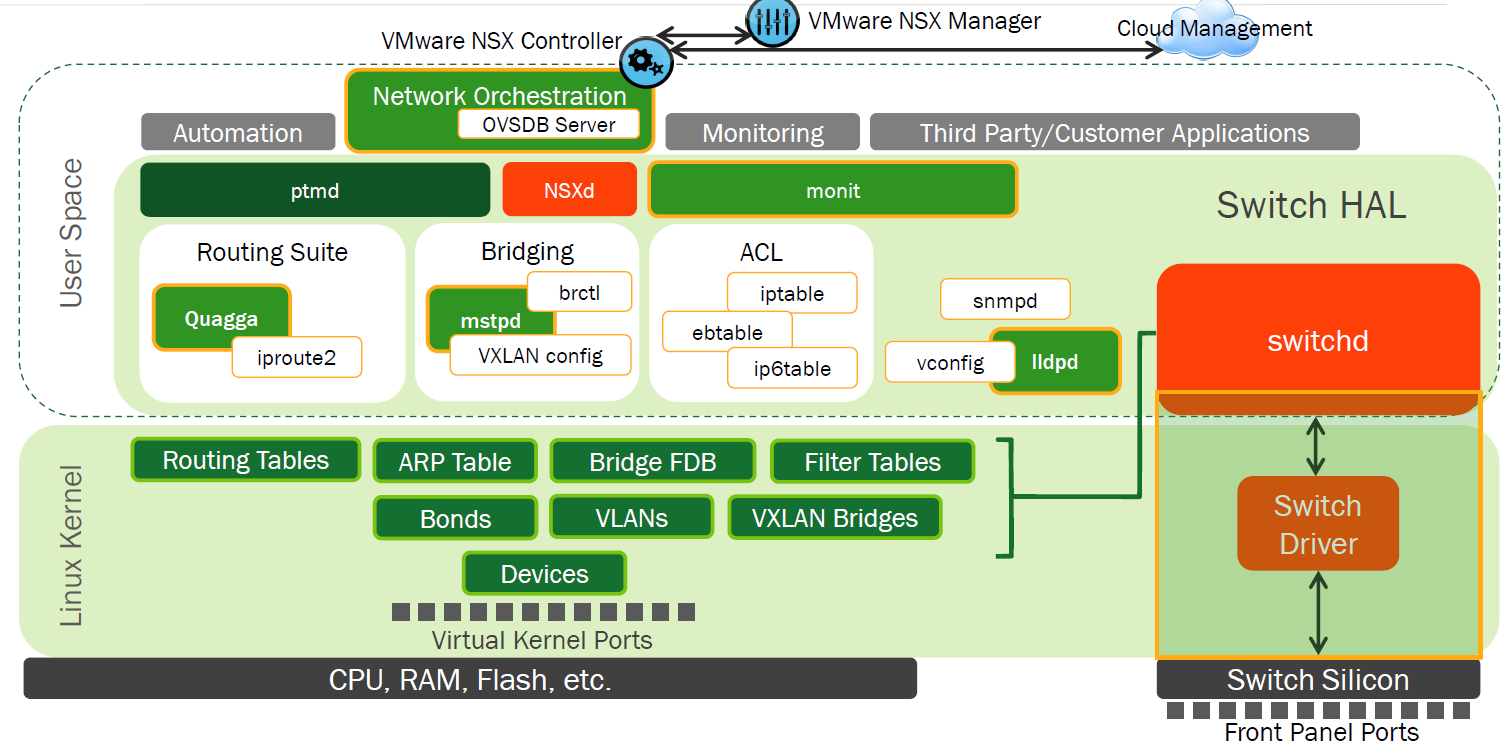

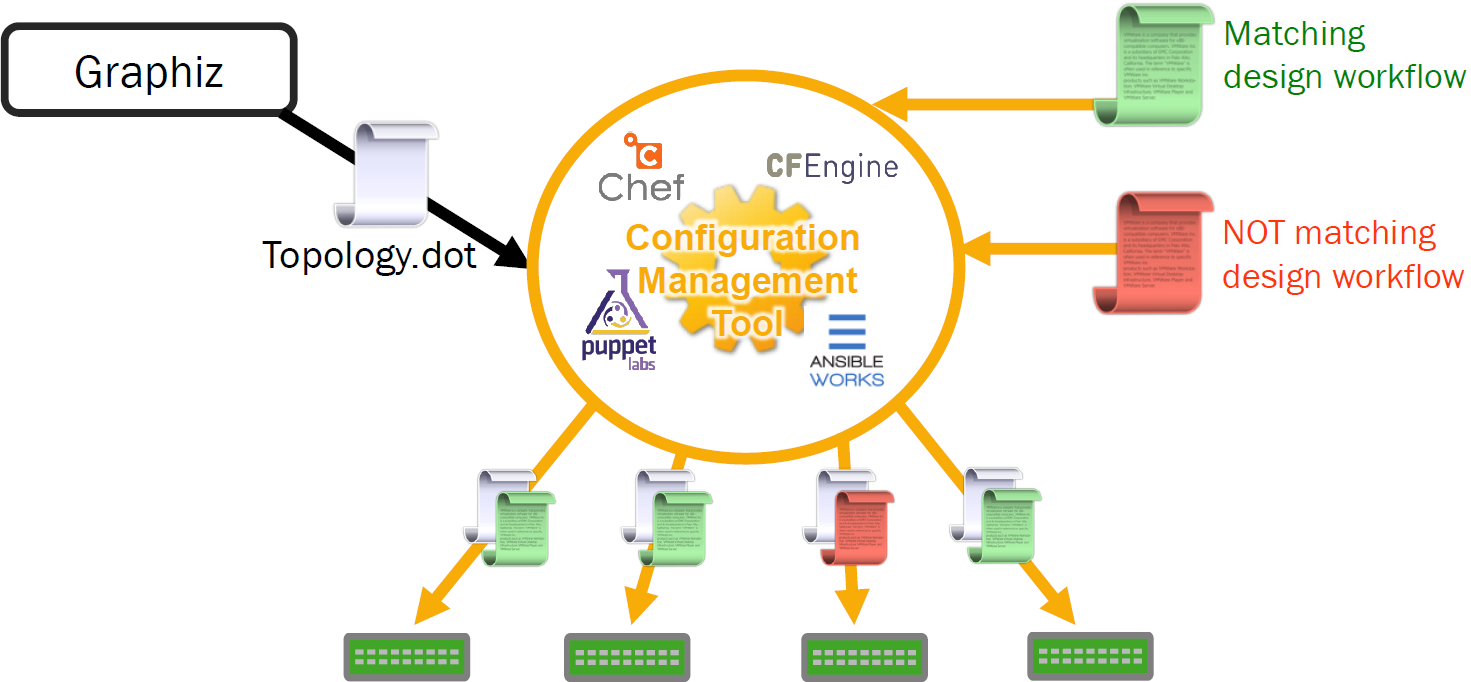

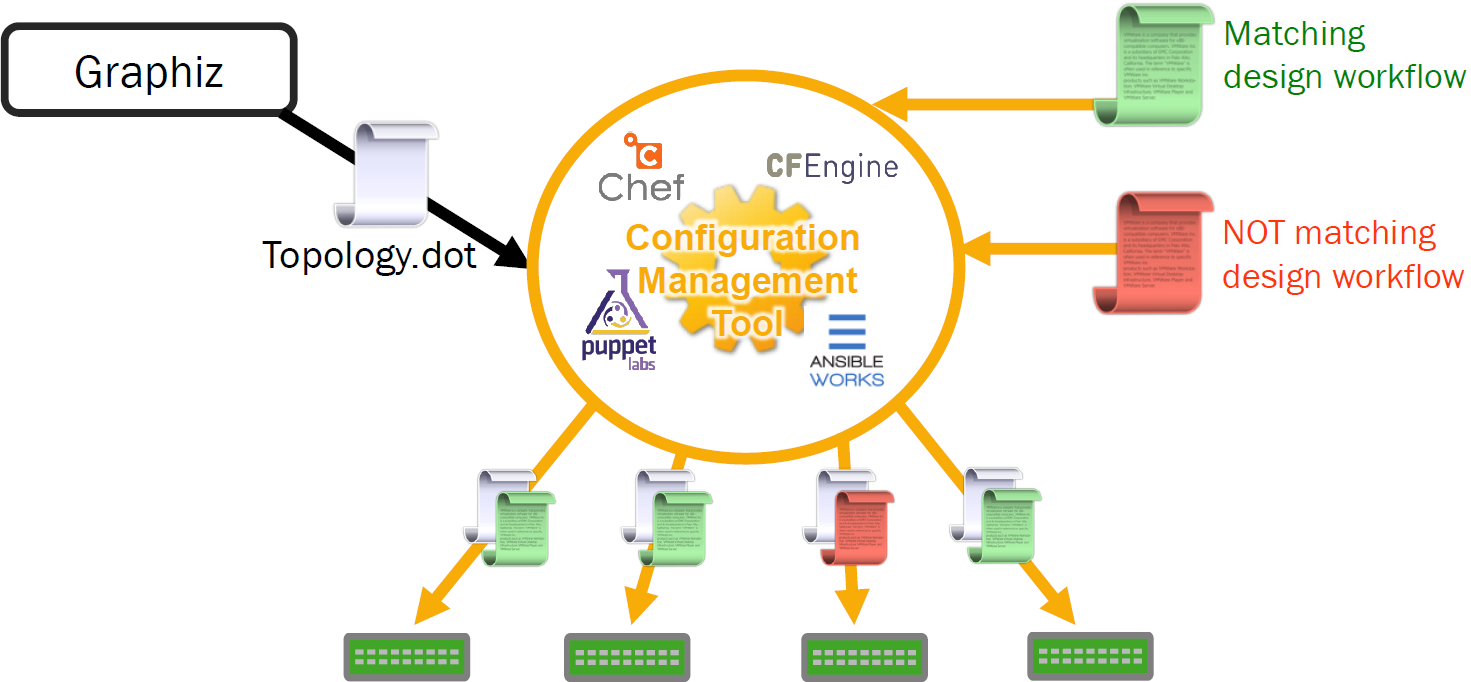

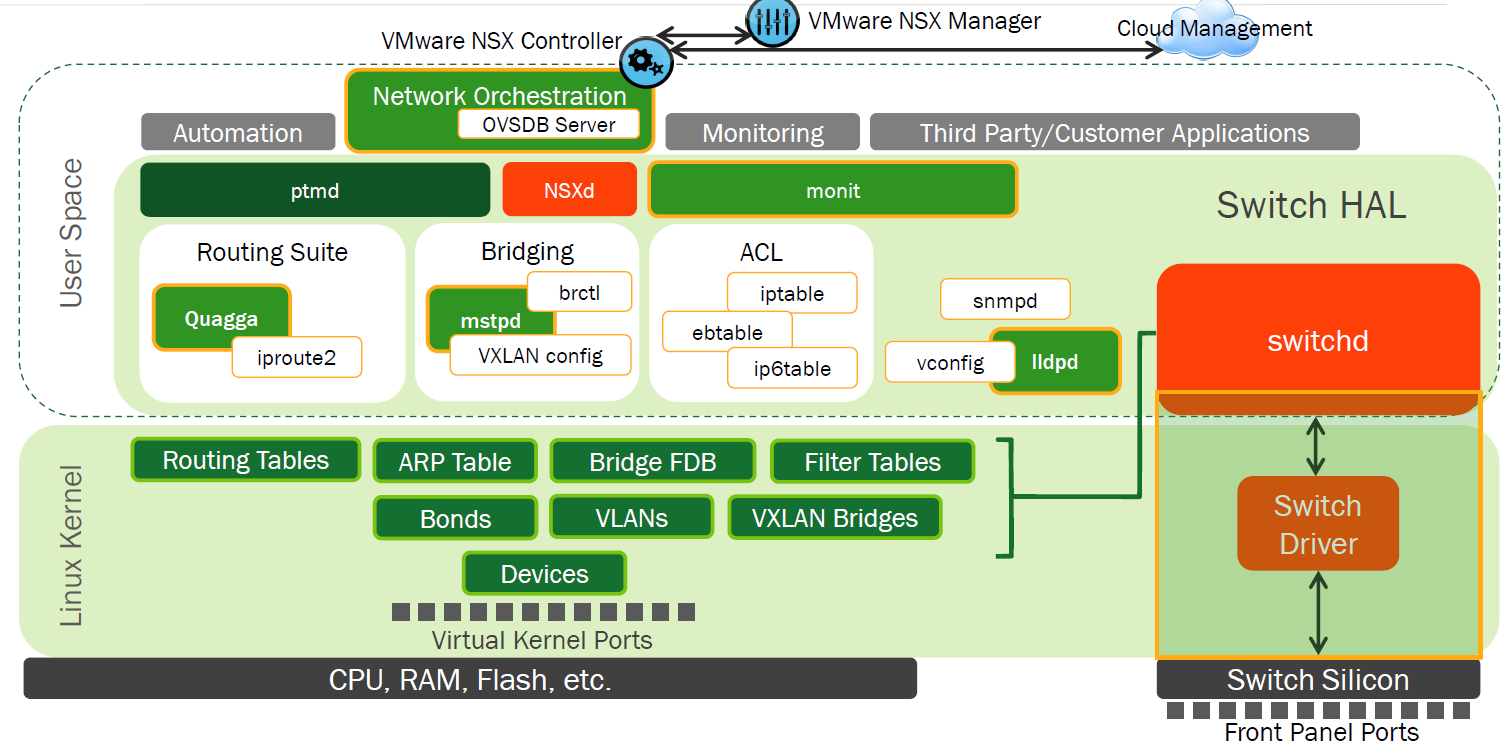

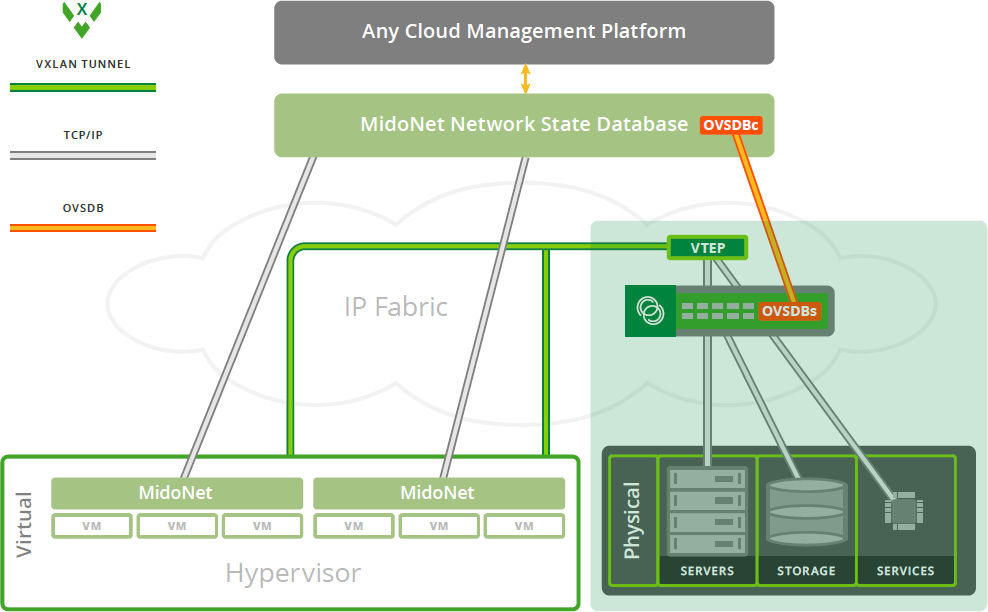

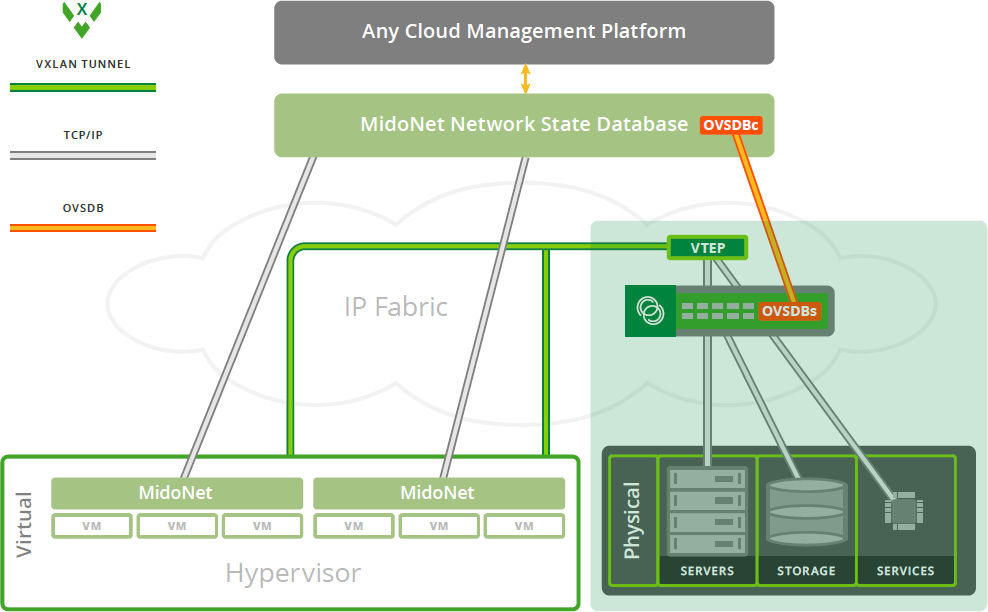

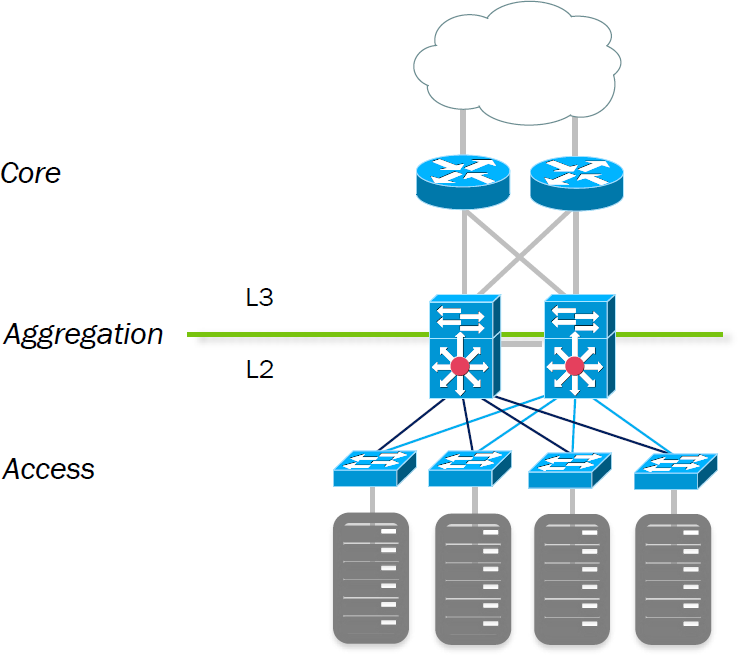

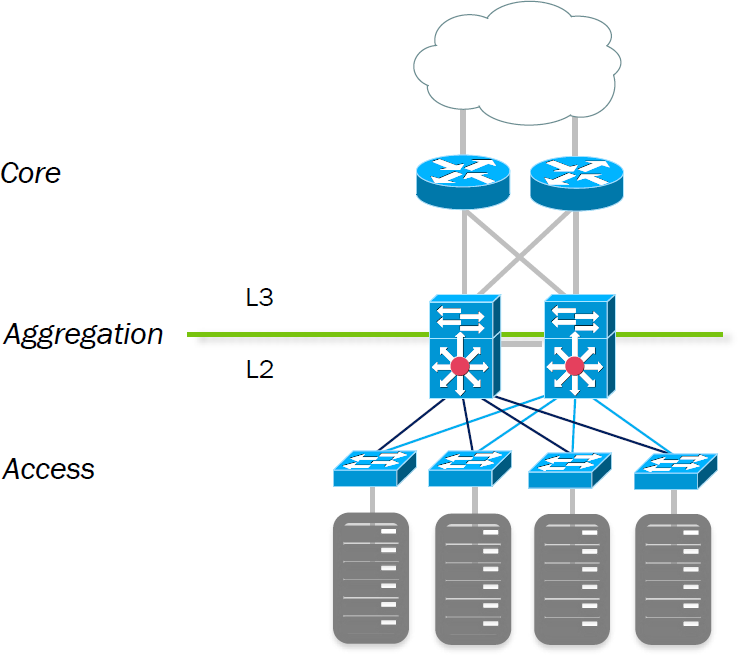

Thanks to virtualization, the number of network connections in a data center now ranges from tens of thousands to millions, whereas in the good old days there were only a few thousand. The scale of virtual networks has long since outgrown traditional VLANs, whose 12-bit VLAN ID allows at most 4094 usable VLANs, and reconfiguration has become orders of magnitude faster. In addition, a modern data center has so many servers that the demands on network equipment are far higher than in a traditional corporate network.

Network infrastructure has evolved along its own path: from monolithic pseudo-OSs, through embedded OSs such as QNX and VxWorks, to the current stage, where the OS is based on Linux or BSD and is sometimes run in a virtual machine on a regular Linux distribution. Management, however, has refused to evolve: it is still a multi-level command-line interface. Adding to the complexity, the syntax differs between vendors, and often between models from the same vendor.

In short, the problem lies not in the hardware but in the network OS. Two approaches to solving it emerged. The first relies on northbound and southbound APIs (OpenFlow became the most popular); the second uses Linux and its ecosystem. In other words, the first option tries to add an advanced management API to the existing OS, while the second switches to an OS that already has all the necessary features.

In the previous article, we talked about switches without a pre-installed OS and the ONIE deployment environment.

It's time to talk about a representative of the second approach that can be installed on our platforms: Cumulus Linux.

Cumulus Linux is not merely based on Linux, as many network OSs are; it is Linux, so working with it is practically no different from working with a regular Linux server. Completely standard applications are installed directly on the switch, and where necessary new utilities are developed without being tied to specific APIs. The usual Linux networking tools are supplemented with tools for building Clos fabrics and for automation.

Installation and monitoring

What does it look like in deployment? Everyone is used to the “PXE boot, then deploy the OS image” approach, and the process here is similar:

- On the first boot, the switch starts ONIE, which searches for an OS image source, selects the appropriate image, and installs it on the switch.

- On the first boot into Cumulus Linux, the system checks for a provisioning script via DHCP option 239. If the DHCP response contains a URL, the script is downloaded from it, checked for the string CUMULUS-AUTOPROVISIONING, and run as root. Bash, Ruby, Perl, and Python scripts are supported.

- The third step is a normal boot into Cumulus Linux.
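The provisioning decision in the second step can be modeled in a few lines. This is a simplified sketch, not Cumulus Linux's actual ZTP code. The function names are hypothetical, and so is the idea of choosing the interpreter from the shebang line. The download is passed in as a callable so the sketch runs without a DHCP server or network access.

```python
from typing import Callable, Optional
from urllib.parse import urlparse

# Marker the article says a provisioning script must contain.
MARKER = "CUMULUS-AUTOPROVISIONING"
# Script languages the article lists as supported; matching them on the shebang is an assumption.
SUPPORTED = ("bash", "ruby", "perl", "python")


def detect_interpreter(script: str) -> Optional[str]:
    """Return the supported interpreter named on the shebang line, or None."""
    lines = script.splitlines()
    if not lines or not lines[0].startswith("#!"):
        return None
    # "#!/usr/bin/env python3" -> tokens "/usr/bin/env", "python3" -> names "env", "python3"
    for token in lines[0][2:].split():
        name = token.rsplit("/", 1)[-1]
        for interp in SUPPORTED:
            if name.startswith(interp):
                return interp
    return None


def first_boot_provisioning(option_239: Optional[str], fetch: Callable[[str], str]) -> str:
    """Model of the first-boot decision; returns a description instead of executing anything."""
    url = urlparse(option_239 or "")
    if not (url.scheme and url.netloc):
        return "no provisioning URL: continue normal boot"
    script = fetch(url.geturl())
    if MARKER not in script:
        return "marker missing: script ignored"
    interp = detect_interpreter(script)
    if interp is None:
        return "unsupported interpreter: script ignored"
    # A real switch would now execute the script as root.
    return f"run as root with {interp}"


if __name__ == "__main__":
    demo = "#!/usr/bin/env python3\n# CUMULUS-AUTOPROVISIONING\nprint('configured')\n"
    print(first_boot_provisioning("http://10.0.0.1/ztp.py", lambda url: demo))
    # prints: run as root with python
```

The marker check is what keeps an arbitrary file served at that URL from being executed as root by accident.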

Operating mode

The initial setup is complete. What's next?

From here on, you can use the same tools as for your servers. For orchestration, in addition to existing tools, you can integrate any in-house tooling the company already uses. Monitoring? Install Ganglia, Graphite, collectd, Net-SNMP, or Icinga on the switch and collect data in real time.
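Because the switch is an ordinary Linux host, the counters that these tools collect come from the usual kernel interfaces. The sketch below reads per-interface byte counters from sysfs and formats them as lines in Graphite's plaintext protocol (`path value timestamp`). It assumes switch ports appear as regular network devices under /sys/class/net. The `switch01` prefix and the function names are hypothetical. In practice an agent such as collectd would do this job.

```python
import time
from pathlib import Path
from typing import Dict, List

# Standard Linux location of per-interface statistics (sysfs).
SYSFS_NET = Path("/sys/class/net")
COUNTERS = ("rx_bytes", "tx_bytes")


def read_counters(base: Path = SYSFS_NET) -> Dict[str, Dict[str, int]]:
    """Read rx/tx byte counters for every interface found under `base`."""
    result: Dict[str, Dict[str, int]] = {}
    for iface in sorted(base.iterdir()):
        values = {}
        for name in COUNTERS:
            counter_file = iface / "statistics" / name
            if counter_file.is_file():
                values[name] = int(counter_file.read_text().strip())
        if values:  # skip entries that are not interfaces
            result[iface.name] = values
    return result


def to_graphite(prefix: str, counters: Dict[str, Dict[str, int]], ts: int) -> List[str]:
    """Format counters as Graphite plaintext lines: '<path> <value> <timestamp>'."""
    return [
        f"{prefix}.{iface}.{name} {value} {ts}"
        for iface, values in sorted(counters.items())
        for name, value in sorted(values.items())
    ]


if __name__ == "__main__" and SYSFS_NET.is_dir():
    for line in to_graphite("switch01", read_counters(), int(time.time())):
        print(line)
```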

Linux not only offers excellent management capabilities and well-understood APIs, it also fits the network model of a modern data center perfectly. The control plane runs in user space, separate from packet processing in the kernel: the FIB (Forwarding Information Base) lives in the kernel, while the RIB (Routing Information Base) is managed from user space by the corresponding routing daemons. Both the basic functions of network equipment and a number of advanced BGP features are supported. The existing toolkit gives direct access to interfaces and routing tables, and there are mechanisms for change notifications (such as netlink).
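To show that the kernel FIB is directly accessible to ordinary tools, here is a minimal sketch that parses /proc/net/route, the IPv4 routing table exported by the Linux kernel. That file prints each address as a 32-bit hex number in host byte order, so the parser takes a byte-order parameter that defaults to the local host's. Routes installed by routing daemons would appear here like any other kernel route. The `Route` type and function names are illustrative.

```python
import ipaddress
import sys
from pathlib import Path
from typing import List, NamedTuple

PROC_ROUTE = Path("/proc/net/route")  # kernel's IPv4 routing table


class Route(NamedTuple):
    iface: str
    prefix: ipaddress.IPv4Network
    gateway: ipaddress.IPv4Address
    metric: int


def _addr(hex_field: str, byteorder: str) -> ipaddress.IPv4Address:
    # Each address is printed as a 32-bit hex number in host byte order.
    return ipaddress.IPv4Address(int(hex_field, 16).to_bytes(4, byteorder))


def parse_proc_net_route(text: str, byteorder: str = sys.byteorder) -> List[Route]:
    lines = text.strip().splitlines()
    if not lines:
        return []
    # Locate columns by header name rather than by fixed position.
    col = {name: i for i, name in enumerate(lines[0].split())}
    routes = []
    for line in lines[1:]:
        f = line.split()
        dest = _addr(f[col["Destination"]], byteorder)
        prefix_len = bin(int(_addr(f[col["Mask"]], byteorder))).count("1")
        routes.append(Route(
            iface=f[col["Iface"]],
            prefix=ipaddress.IPv4Network(f"{dest}/{prefix_len}"),
            gateway=_addr(f[col["Gateway"]], byteorder),
            metric=int(f[col["Metric"]]),
        ))
    return routes


if __name__ == "__main__" and PROC_ROUTE.is_file():
    for r in parse_proc_net_route(PROC_ROUTE.read_text()):
        print(f"{r.prefix} via {r.gateway} dev {r.iface} metric {r.metric}")
```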

This foundation lets you use the native Linux shell instead of specialized shells such as Junos, NX-OS, and others. By its nature, the shell is well suited to chaining independent commands and to scripting.

And the switch is easy to automate:

- Package and process management

- Configuration templates

- Monitoring automation

All of this simplifies L2/L3 configuration and makes the administrator's life easier.
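The "configuration templates" item needs nothing more than the Python standard library. This sketch renders routed-port stanzas in the /etc/network/interfaces (ifupdown) syntax that Cumulus Linux uses for interface configuration. The port names (swp1, swp2), addresses, MTU, and the `render_ports` helper are illustrative assumptions. A configuration management tool would normally fill this role.

```python
from string import Template
from typing import Dict, List

# Hypothetical template for a routed (L3) switch port in /etc/network/interfaces syntax.
PORT_TEMPLATE = Template(
    "auto $port\n"
    "iface $port\n"
    "    address $address\n"
    "    mtu $mtu\n"
)


def render_ports(ports: Dict[str, str], mtu: int = 9216) -> str:
    """Render one stanza per port; `ports` maps port name -> address/prefix. MTU is an example value."""
    stanzas: List[str] = [
        PORT_TEMPLATE.substitute(port=port, address=addr, mtu=mtu)
        for port, addr in sorted(ports.items())
    ]
    # A blank line between stanzas, as in a hand-written interfaces file.
    return "\n".join(stanzas)


if __name__ == "__main__":
    print(render_ports({"swp1": "10.1.1.1/31", "swp2": "10.1.1.3/31"}))
```

Because the switch reads the same kind of text file a Linux server does, the rendered output can be pushed and version-controlled like any other server configuration.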

Another handy tool is the Prescriptive Topology Manager (PTM). Once the logical topology of the data center is defined, making the physical cabling match it is a difficult task that consumes a lot of time and effort. PTM checks cable connections in real time and points to the exact location of an error. It takes a cabling plan in Graphviz DOT format (some companies already use this format for their plans) and compares it with the information received via LLDP to verify that the cables are connected correctly.
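The core of the comparison can be sketched as follows. This is not PTM's code, only a simplified model of the check. It reads edges written as `"host":"port" -- "host":"port";` from a DOT cabling plan; that edge syntax is an assumption made for this sketch. It then compares them with LLDP neighbor data, which is hard-coded here as a dictionary. On a switch, that data would come from the LLDP daemon.

```python
import re
from typing import Dict, List, Tuple

Port = Tuple[str, str]  # (hostname, port name)

# Matches one DOT edge such as: "spine1":"swp1" -- "leaf1":"swp49"
EDGE_RE = re.compile(r'"([^"]+)":"([^"]+)"\s*--\s*"([^"]+)":"([^"]+)"')


def parse_plan(dot_text: str) -> Dict[Port, Port]:
    """Build a symmetric map: port -> expected peer port."""
    plan: Dict[Port, Port] = {}
    for h1, p1, h2, p2 in EDGE_RE.findall(dot_text):
        plan[(h1, p1)] = (h2, p2)
        plan[(h2, p2)] = (h1, p1)
    return plan


def check_host(host: str, plan: Dict[Port, Port], lldp: Dict[str, Port]) -> List[str]:
    """Compare the plan for `host` with its LLDP neighbors (local port -> (peer host, peer port))."""
    problems = []
    for (h, port), expected in sorted(plan.items()):
        if h != host:
            continue
        actual = lldp.get(port)
        if actual is None:
            problems.append(f"{port}: no LLDP neighbor, expected {expected[0]}:{expected[1]}")
        elif actual != expected:
            problems.append(
                f"{port}: cabled to {actual[0]}:{actual[1]}, expected {expected[0]}:{expected[1]}"
            )
    return problems


if __name__ == "__main__":
    plan = parse_plan('graph G { "spine1":"swp1" -- "leaf1":"swp49"; "spine1":"swp2" -- "leaf2":"swp49"; }')
    swapped = {"swp1": ("leaf2", "swp49"), "swp2": ("leaf1", "swp49")}  # two cables swapped
    for problem in check_host("spine1", plan, swapped):
        print(problem)
```

Each mismatch names the local port, so the technician knows exactly which cable to move.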

Software overlay solutions limit control over the hardware side of the network, hinder automation and configuration, and complicate troubleshooting. Linux supports VXLAN, so developing agents for various network virtualization solutions is straightforward. Cumulus Linux can act as a hardware L2 gateway, bypassing the limitations of software solutions and keeping switching performance high (the VXLAN tunnel endpoint, or VTEP, is processed at line rate) while taking full advantage of VXLAN.
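Here is what the VTEP actually adds to each frame. Per RFC 7348, the original Ethernet frame is carried over UDP (IANA port 4789) behind an 8-byte VXLAN header. That header's 24-bit VNI identifies the virtual network, which is why VXLAN scales to about 16 million segments instead of 4094 VLANs. The sketch below only builds and parses that header to show its layout. On the switch, the ASIC performs the encapsulation itself.

```python
import struct

VXLAN_UDP_PORT = 4789    # IANA-assigned UDP destination port for VXLAN
FLAG_VNI_VALID = 0x08    # "I" flag: the VNI field is valid
MAX_VNI = (1 << 24) - 1  # 24-bit VNI: 16,777,216 possible values


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError(f"VNI must fit in 24 bits, got {vni}")
    return struct.pack("!II", FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return word1 >> 8


if __name__ == "__main__":
    hdr = vxlan_header(5000)
    print(hdr.hex())       # 0800000000138800
    print(parse_vni(hdr))  # 5000
```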

Agents have been developed for PLUMgrid, Nuage Networks, Midokura, and VMware NSX.

Network architecture

Traditional network

Traditional network architecture consists of core, aggregation, and access layers. Three tiers add latency, and the design carries a number of inherited limitations. Such designs are not suited to modern data centers with high server density and heavy server-to-server traffic. They rely on STP/RSTP/PVST, VTP, HSRP, MLAG, and LACP. In short, "this is how we were taught."

- L2-oriented.

- Static by nature: VLANs do not scale well under virtualization.

- Relies on workarounds: MLAG, TRILL, etc.

- Optimized for north-south traffic.

- Limited scalability: hundreds or thousands of connections.

Modern network

- Simpler.

- Fewer proprietary protocols.

- Predictable latency.

- Horizontal scalability.

- L3-oriented.

- Well suited to virtualization and multi-tenant cloud environments with many "owners".

- Scalability to millions of connections.
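Predictable latency and horizontal scalability follow from the Clos (leaf-spine) design that such networks typically use. The back-of-the-envelope sketch below makes one assumption: a two-tier fabric in which every leaf has exactly one uplink to every spine. Under that assumption, servers on different leaves are always three switches apart, leaf-to-leaf traffic has one equal-cost path per spine, and capacity grows by adding leaves or spines. The port counts and speeds are example values, not specific hardware.

```python
from dataclasses import dataclass


@dataclass
class LeafSpine:
    spines: int
    spine_ports: int     # ports per spine, one per leaf
    leaf_ports: int      # total ports per leaf (uplinks + server ports)
    server_gbps: float   # speed of each server-facing port
    uplink_gbps: float   # speed of each leaf-to-spine link

    @property
    def max_leaves(self) -> int:
        # Each spine port connects to exactly one leaf.
        return self.spine_ports

    @property
    def server_ports_per_leaf(self) -> int:
        # Each leaf uses one port per spine as an uplink.
        return self.leaf_ports - self.spines

    def server_ports(self, leaves: int) -> int:
        if leaves > self.max_leaves:
            raise ValueError("not enough spine ports for that many leaves")
        return leaves * self.server_ports_per_leaf

    @property
    def switches_on_leaf_to_leaf_path(self) -> int:
        # leaf -> spine -> leaf, whichever two leaves are involved
        return 3

    @property
    def equal_cost_paths(self) -> int:
        # Leaf-to-leaf traffic may cross any spine.
        return self.spines

    @property
    def oversubscription(self) -> float:
        downlink = self.server_ports_per_leaf * self.server_gbps
        uplink = self.spines * self.uplink_gbps
        return downlink / uplink


if __name__ == "__main__":
    fabric = LeafSpine(spines=4, spine_ports=32, leaf_ports=52, server_gbps=10, uplink_gbps=40)
    print(fabric.server_ports(32))   # 1536
    print(fabric.equal_cost_paths)   # 4
    print(fabric.oversubscription)   # 3.0
```

Adding a spine adds one more equal-cost path and lowers oversubscription without changing the hop count, which is what makes the design scale horizontally.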

Weaknesses

No option is perfect. Because of its focus on L3 environments, Cumulus Linux is a poor fit for the following:

- Building an L2 fabric with MLAG, TRILL, Virtual Chassis, VLT, chassis stacking, etc.

- Working with NetFlow.

- Provider access switches with VRF, MPLS, VPLS, etc.

- QinQ.

- Access switches with port security, complex QoS rules, 802.1X, PoE.

- Routing protocols IS-IS, RIP, EIGRP.

- TACACS+ or RADIUS AAA (LDAP is available as an alternative).

In general, anything tied to the traditional approach to building networks, or to service-provider networks, fits poorly with the way networks are built in a modern data center.

Summary

Decoupling the switch hardware from the operating system is the next step toward a software-defined data center. Being able to choose the OS lets you focus on the features you actually need and pick the best of several available options.

The system becomes much more transparent: everything can be checked with built-in Linux tools rather than vendor-specific commands. It also becomes possible to treat the router as a server with many network ports, just as in the early days of networking, when a router was a UNIX server with several ports. The difference is that today all packet forwarding is done by the ASIC, and the server part handles only management.

As usual, we have a lab with Cumulus Linux on an Eos 220 switch available for inhumane experiments and preparation.