ZFS and disk access speeds in hypervisors

This article presents measurements of file system access speed inside a hypervisor under various ZFS installation options. If you are interested, read on; be warned that the spoilers contain a large number of (optimized) images.

Hello! There is quite a lot of material online about the ZFS file system (hereinafter FS), its development on Linux, and its practical use. I became very interested in this FS as a way to improve my home virtualization server (thanks also to a post by user kvaps), but I could not find any benchmarks of virtual machine performance on the Internet (perhaps I did not search well enough?). So I decided to assemble a test platform and run my own comparative study.

This article does not claim any scientific discoveries and is unlikely to help professionals who have worked with ZFS for a long time and know all its capabilities, but it should help beginners estimate the “price” of each gigabyte relative to performance.

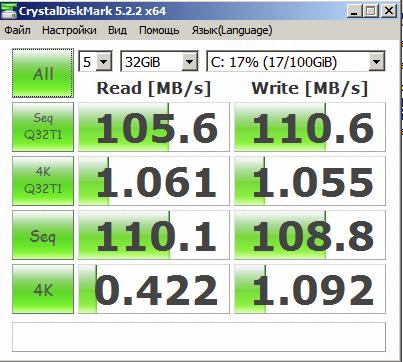

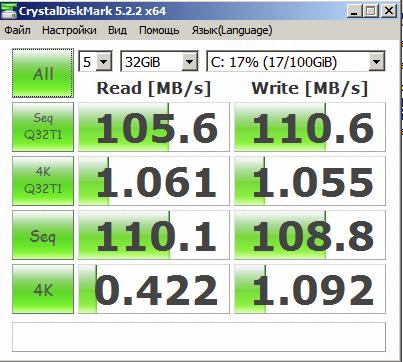

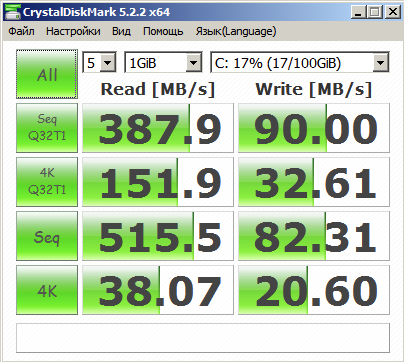

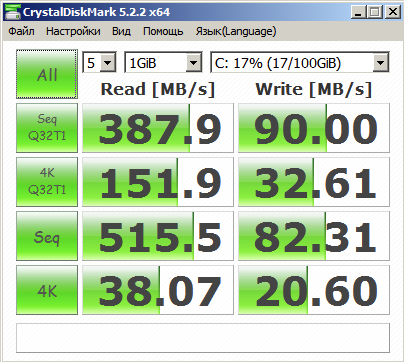

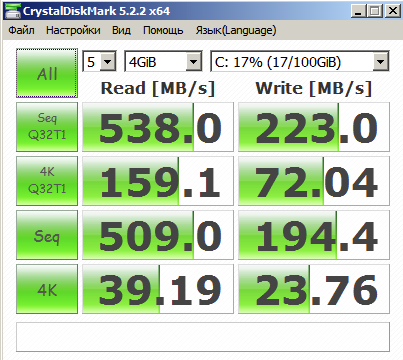

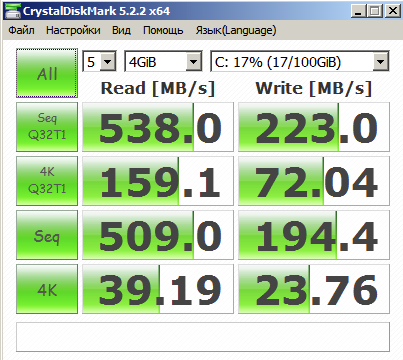

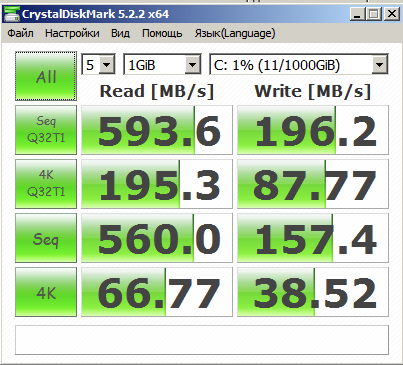

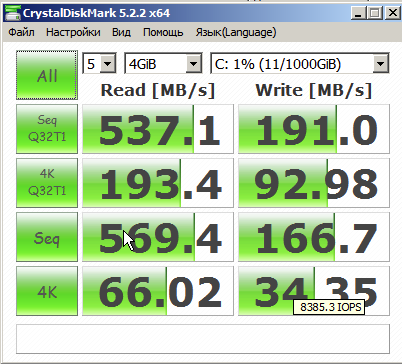

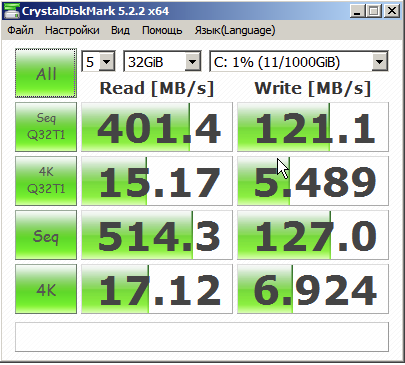

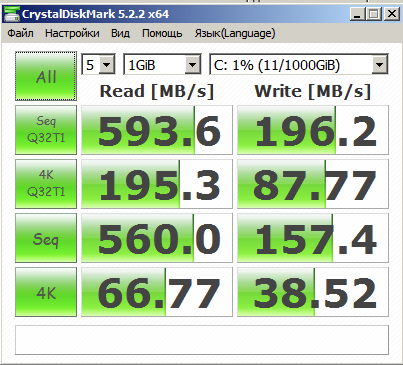

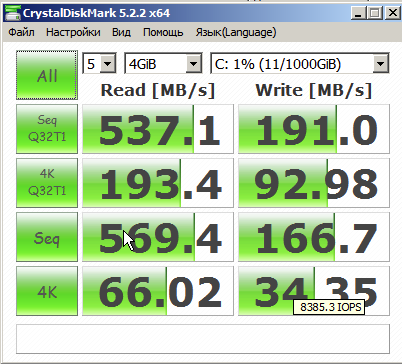

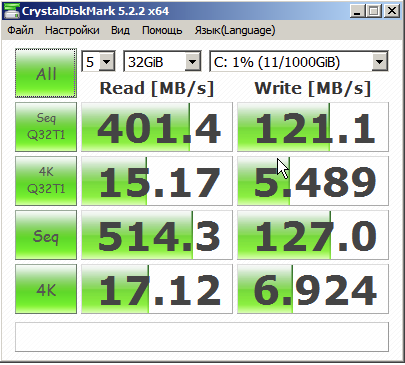

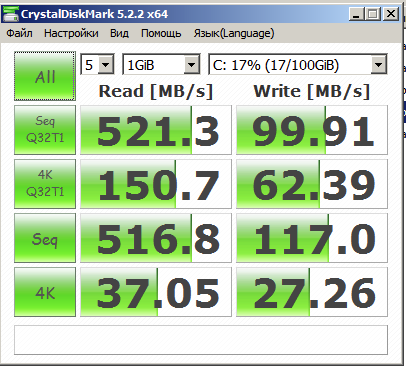

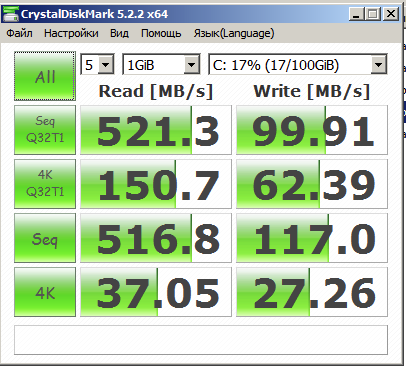

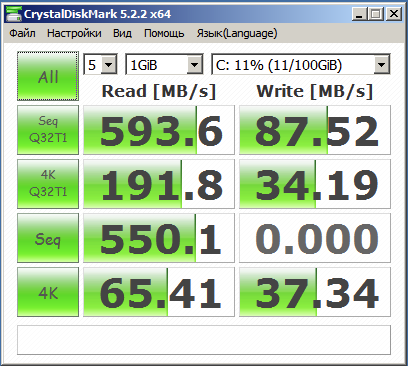

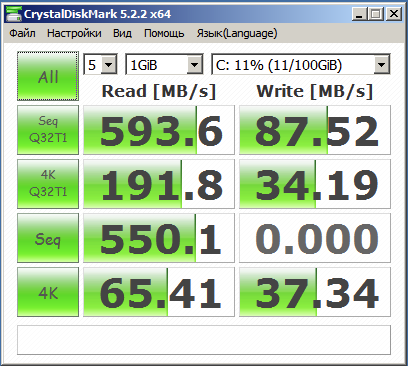

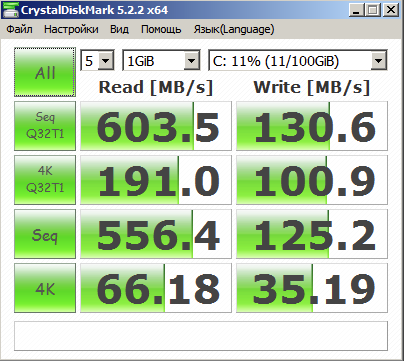

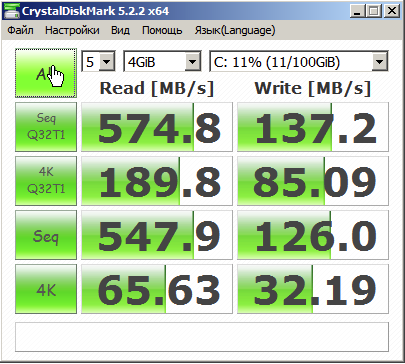

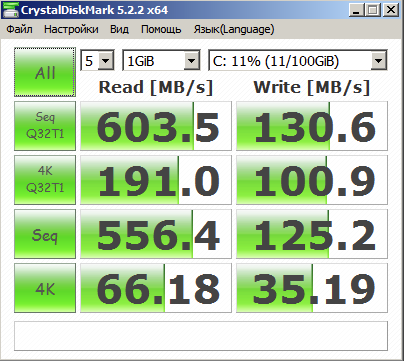

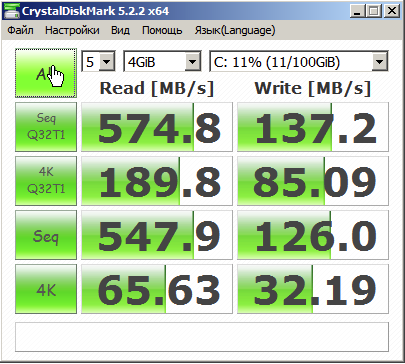

The experiment went as follows: Proxmox VE 5.2 was installed on the machine (each time from the boot disk), and one of the XFS / ZFS options was selected during installation. A virtual machine was then created and Windows Server 2008 R2 installed on it, after which the popular CrystalDiskMark 5.2.2 utility was run with test sizes of 1, 4, and 32 GiB. Because some of the images with the 32 GiB results were lost, those results cannot be used for conclusions; the surviving data is provided for reference only.

The XFS test was used to measure the baseline speed of a single HDD (perhaps this is not the right approach, but I could not think of a better one).

The ZFS RAID 0 and RAID 1 tests were run on two randomly selected drives, ZFS RaidZ1 on 3 drives, and ZFS RAID 10 and RaidZ2 on 4 drives. ZFS RaidZ3 was not tested because I did not want to buy yet another 500 GB HDD, which would have made very little economic sense.

Under the spoiler, I briefly describe each type of ZFS RAID, using my own setup as an example of the resulting usable gigabytes.

Note that besides the differing file system access speeds, you also need to consider the total capacity of the resulting array and how well the data survives a hard drive failure.

Technical characteristics of the platform that (possibly) affect the test results:

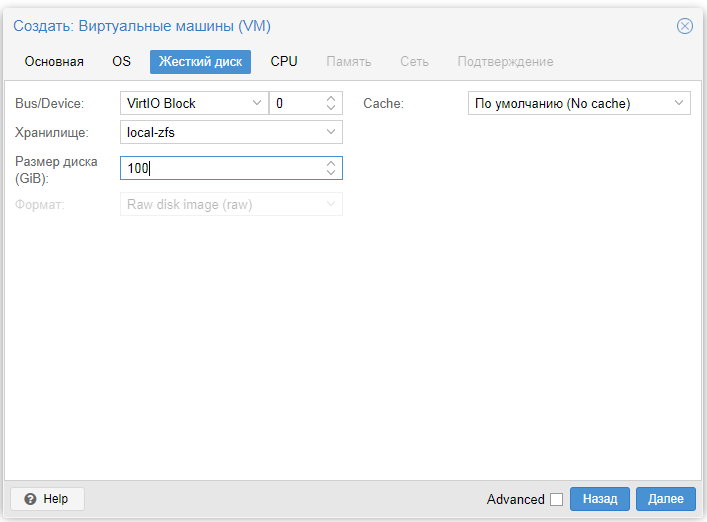

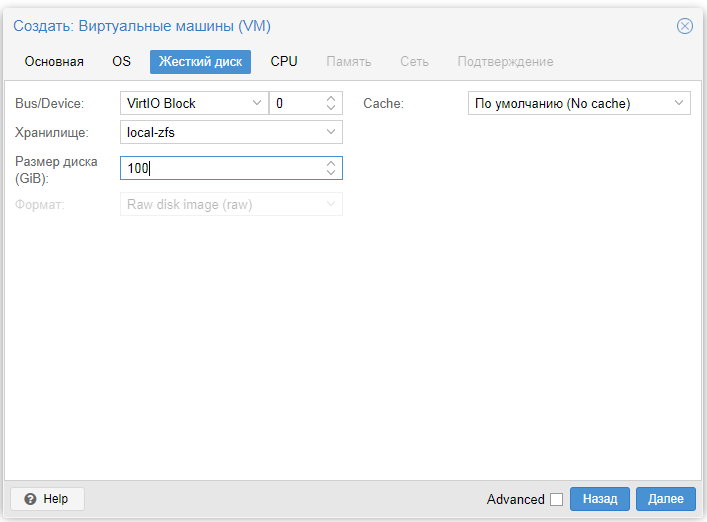

The virtual machine (KVM) used for the tests was allocated 4 GB of RAM, 1 processor core, and a 100 GB VirtIO Block hard drive.

For systems installed on ZFS, two tests were performed; in the second, an SSD was attached as a cache disk.
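The article does not show how the SSD was attached. In ZFS, a "cache disk" normally means an L2ARC device, which is added to an existing pool with `zpool add <pool> cache <device>`. The sketch below only builds the command lines and does not run them. The pool name `rpool` (the usual default of the Proxmox installer) and the device path `/dev/sdX` are assumptions, not values taken from the tests.

```python
import shlex

# Assumptions, not taken from the article: pool name and SSD device path.
POOL = "rpool"        # usual default pool name of the Proxmox VE installer
CACHE_DEV = "/dev/sdX"  # placeholder; substitute the real SSD device


def l2arc_commands(pool: str, device: str) -> dict[str, list[str]]:
    """Return the zpool command lines for handling an L2ARC cache device."""
    return {
        # Attach the SSD to the pool as a read cache (L2ARC).
        "add": ["zpool", "add", pool, "cache", device],
        # Show the pool layout; the device appears under a "cache" section.
        "status": ["zpool", "status", pool],
        # Detach the cache device again (safe: it holds no unique data).
        "remove": ["zpool", "remove", pool, device],
    }


for step, argv in l2arc_commands(POOL, CACHE_DEV).items():
    # Print only; run the commands by hand as root after checking the device.
    print(f"{step:>6}: {shlex.join(argv)}")
```

Printing the commands instead of executing them keeps the sketch harmless on a machine without ZFS; pointing `zpool add` at the wrong device can destroy data.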

All results are presented as screenshots below. If anyone would like to digitize these results, I will be grateful and will include that work in the article.
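For anyone who takes up the digitizing, one possible record layout is sketched below. The field names are hypothetical, and the test names are assumed to be the four rows that CrystalDiskMark 5 displays (Seq Q32T1, 4K Q32T1, Seq, 4K). The sample row uses 0.0 as an explicit placeholder; it is not a measured value.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CdmResult:
    """One row of a CrystalDiskMark screenshot (hypothetical schema)."""
    config: str         # e.g. "XFS", "ZFS RAID 1 + cache"
    test_size_gib: int  # 1, 4 or 32
    test: str           # assumed CDM 5 row name, e.g. "Seq Q32T1"
    read_mb_s: float    # read speed as shown in the screenshot, MB/s
    write_mb_s: float   # write speed as shown in the screenshot, MB/s


def to_csv(results: list[CdmResult]) -> str:
    """Serialize the records to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(CdmResult)])
    writer.writeheader()
    for result in results:
        writer.writerow(asdict(result))
    return buf.getvalue()


# Placeholder row: 0.0 stands in for numbers to be read off a screenshot.
sample = [CdmResult("XFS", 1, "Seq Q32T1", 0.0, 0.0)]
print(to_csv(sample))
```

A flat table with one row per configuration, test size, and test makes it easy to compare the configurations later in a spreadsheet or with a plotting library.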

Thanks to everyone who read this far. I hope this sample proves as useful to someone as it was to me.

PS For reasons unknown to me, some of the images have disappeared. The measurements were taken at the end of spring, and the test platform no longer exists in that form. Fortunately, all the missing images belong to the 32 GiB tests.

PPS I was not trying to advertise any organizations and / or software products, nor did I intend to violate any license agreements. If I got something wrong, please write to me in private messages.

PPPS The image with the ZFS logo is a reproduction.

ZFS RAID types and the resulting usable capacity (DiskSize = 500 GB):

2 drives:

- ZFS RAID 0: striping, capacity 2 * DiskSize = 1000 GB.

- ZFS RAID 1: mirroring (mirror), capacity 1 * DiskSize = 500 GB.

3 drives:

- ZFS RaidZ1 (also known as ZFS RaidZ): analogue of RAID 5, capacity (N - 1) * DiskSize = 1000 GB.

4 drives:

- ZFS RAID 10: striped mirrors, capacity 2 * DiskSize = 1000 GB.

- ZFS RaidZ2: analogue of RAID 6, capacity (N - 2) * DiskSize = 1000 GB.

- I did not run this test, but ZFS RaidZ1 on 4 drives gives 1500 GB.

The essence, including how many drives can be lost without losing data, is explained very clearly here (in English).
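The capacity formulas above can be checked with a short calculation. This is a minimal sketch that assumes nominal capacity only (ZFS metadata and padding overhead are ignored) and equal 500 GB drives. The layout labels (`stripe`, `mirror`, `striped-mirror`, `raidzN`) are names chosen here for illustration. The fault-tolerance figures follow the general definitions of these RAID levels and were not measured in the tests.

```python
DISK_SIZE_GB = 500  # each HDD in the test platform


def usable_disks(layout: str, n: int) -> int:
    """Number of drives' worth of nominal usable space for n drives."""
    if layout == "stripe":            # ZFS RAID 0
        return n
    if layout == "mirror":            # ZFS RAID 1
        return 1
    if layout == "striped-mirror":    # ZFS RAID 10, two-way mirrors
        if n < 4 or n % 2:
            raise ValueError("striped mirrors need an even count >= 4")
        return n // 2
    if layout in ("raidz1", "raidz2", "raidz3"):
        parity = int(layout[-1])
        if n <= parity:
            raise ValueError("need more drives than parity devices")
        return n - parity
    raise ValueError(f"unknown layout: {layout}")


def guaranteed_failures(layout: str, n: int) -> int:
    """Drive failures the array survives in the worst case."""
    if layout == "stripe":
        return 0
    if layout == "mirror":
        return n - 1
    if layout == "striped-mirror":
        return 1  # a second failure in the same mirror pair loses the pool
    return int(layout[-1])  # raidzN tolerates N failures


def usable_gb(layout: str, n: int, disk_gb: int = DISK_SIZE_GB) -> int:
    return usable_disks(layout, n) * disk_gb


CONFIGS = [
    ("ZFS RAID 0", "stripe", 2),
    ("ZFS RAID 1", "mirror", 2),
    ("ZFS RaidZ1", "raidz1", 3),
    ("ZFS RAID 10", "striped-mirror", 4),
    ("ZFS RaidZ2", "raidz2", 4),
    ("ZFS RaidZ1 (4 drives, not tested)", "raidz1", 4),
]

for name, layout, n in CONFIGS:
    print(f"{name:<34} {n} drives  {usable_gb(layout, n):>5} GB  "
          f"survives {guaranteed_failures(layout, n)} failure(s)")
```

With four drives, RAID 10 and RaidZ2 give the same 1000 GB, but RaidZ2 survives any two failures, whereas RAID 10 is only guaranteed to survive one.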

Test platform hardware:

- Motherboard: Intel Desktop Board DS67SQ-B3;

- Processor: Intel Pentium G630, 2.7 GHz;

- RAM: 2 x 4096 MB Hynix PC3-10700;

- Hard drives: 3 x WD 5000AZRX 500 GB SATA (64 MB cache), 1 x WD 5000AZRZ 500 GB SATA (64 MB cache);

- SSD: SATA Goldenfir T650-8GB;

- Power supply: DeepCool DA500N, 500 W.

Tested configurations:

- XFS

- ZFS RAID 0

- ZFS RAID 0 + cache

- ZFS RAID 1

- ZFS RAID 1 + cache

- ZFS RAID 10

- ZFS RAID 10 + cache

- ZFS RaidZ1

- ZFS RaidZ1 + cache

- ZFS RaidZ2

- ZFS RaidZ2 + cache
