Therascale OCS Solution

In the previous article about the Open Compute Project (OCP), we discussed Facebook's initiative to promote an open platform for building hyperscale data centers. The idea is simple: if existing solutions do not fit your operations, build something that does.

In a blog post, Microsoft talks a lot about how to use the Azure cloud service built on their infrastructure. The infrastructure, as it turned out, was also developed “from scratch” for the vision of Microsoft.

The process of publishing its platform turned out to be contagious, Microsoft joined the initiative and shared its vision of the optimal infrastructure, and we will talk about this in detail.

Key Features

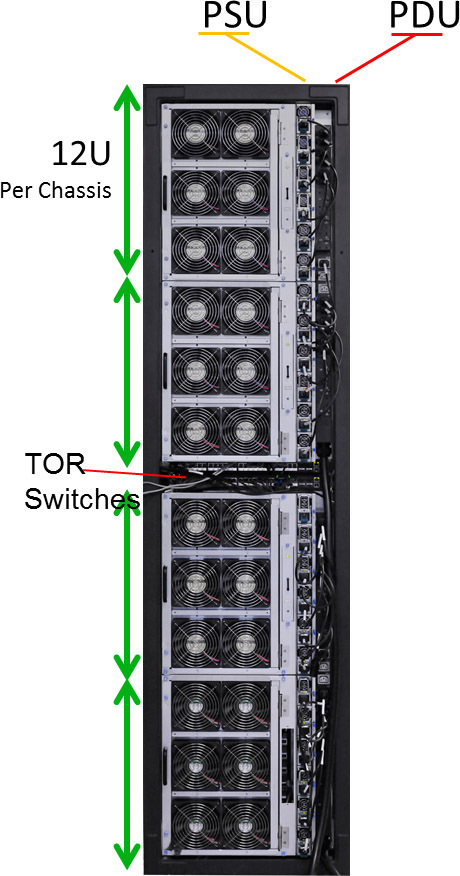

View of the complete rack

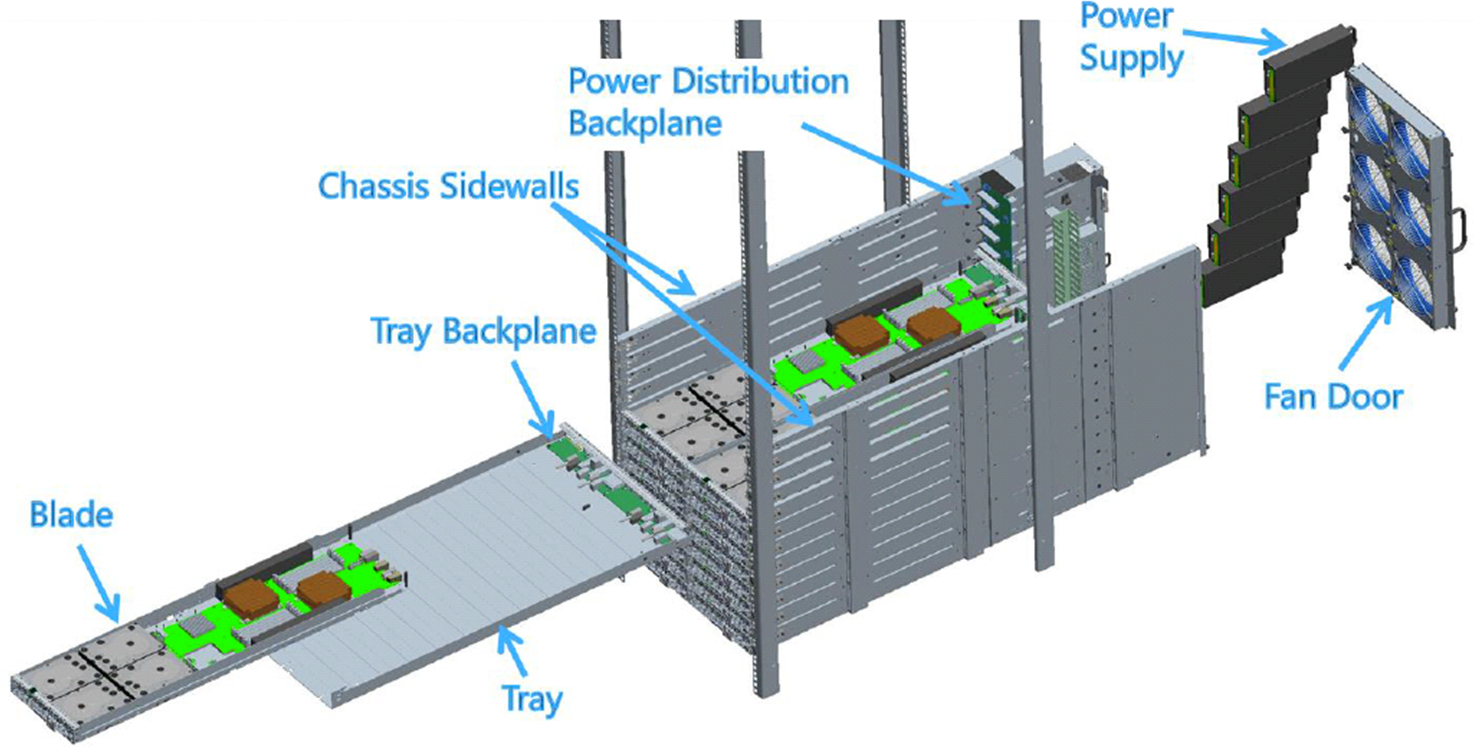

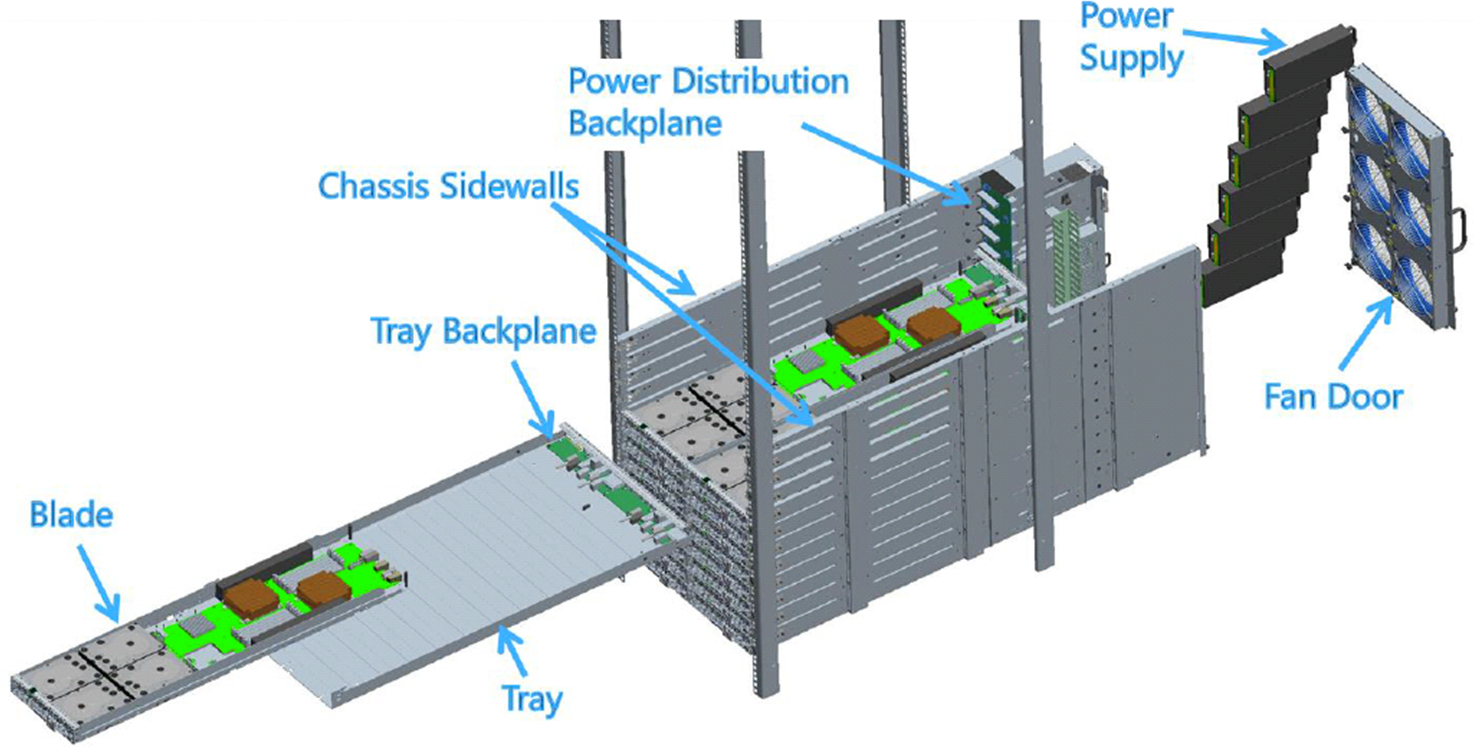

Details about the architecture The structure of the chassis is a complex trough in which baskets with universal backplanes for servers and disk shelves, a power distribution board, power supplies, a remote control controller and a fantext wall are installed. Rear view

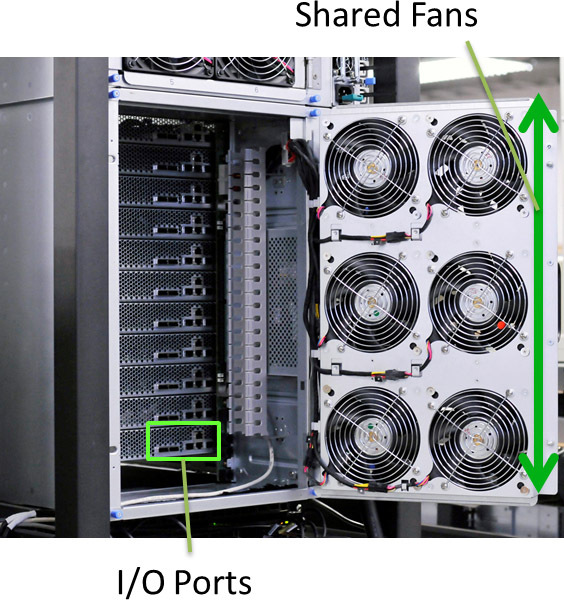

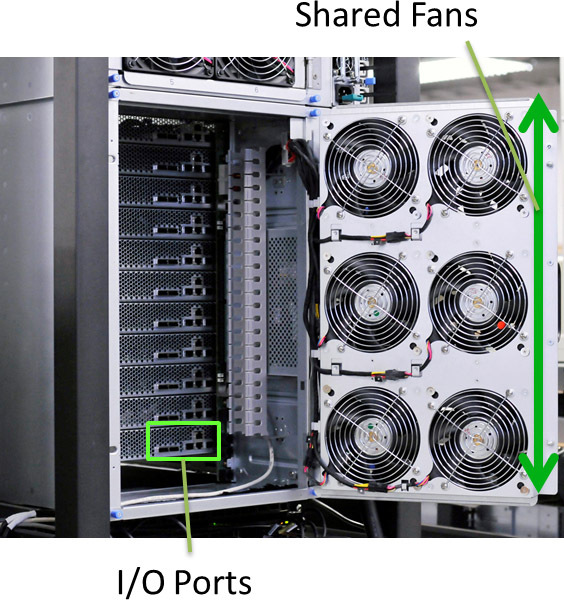

Six large fans (140 * 140 * 38) are combined into a single unit on the hinged door, control is from the chassis controller. The door closes the I / O ports and the space for cables, where special channels are laid for accurate wiring and installation.

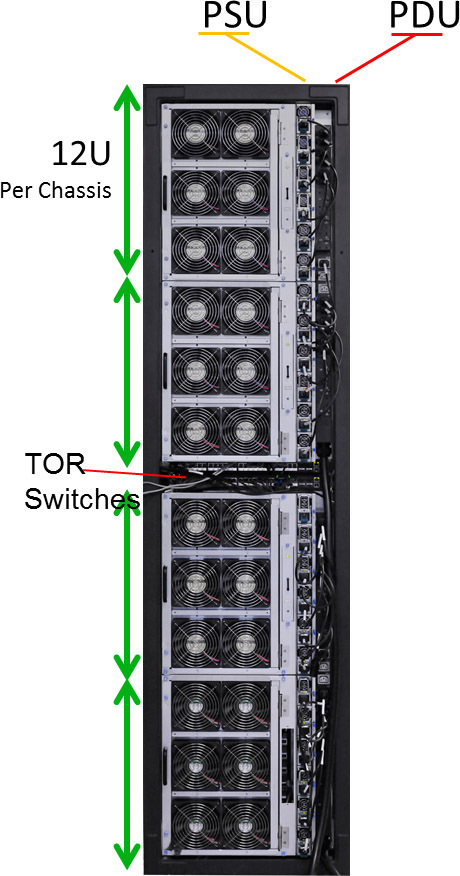

Microsoft uses standard 19 "racks 50U high, of which 48U are occupied directly by servers, and another 2U is allocated for switches. Power supplies and PDUs are located on one side of the rack and do not occupy space, unlike OCP.

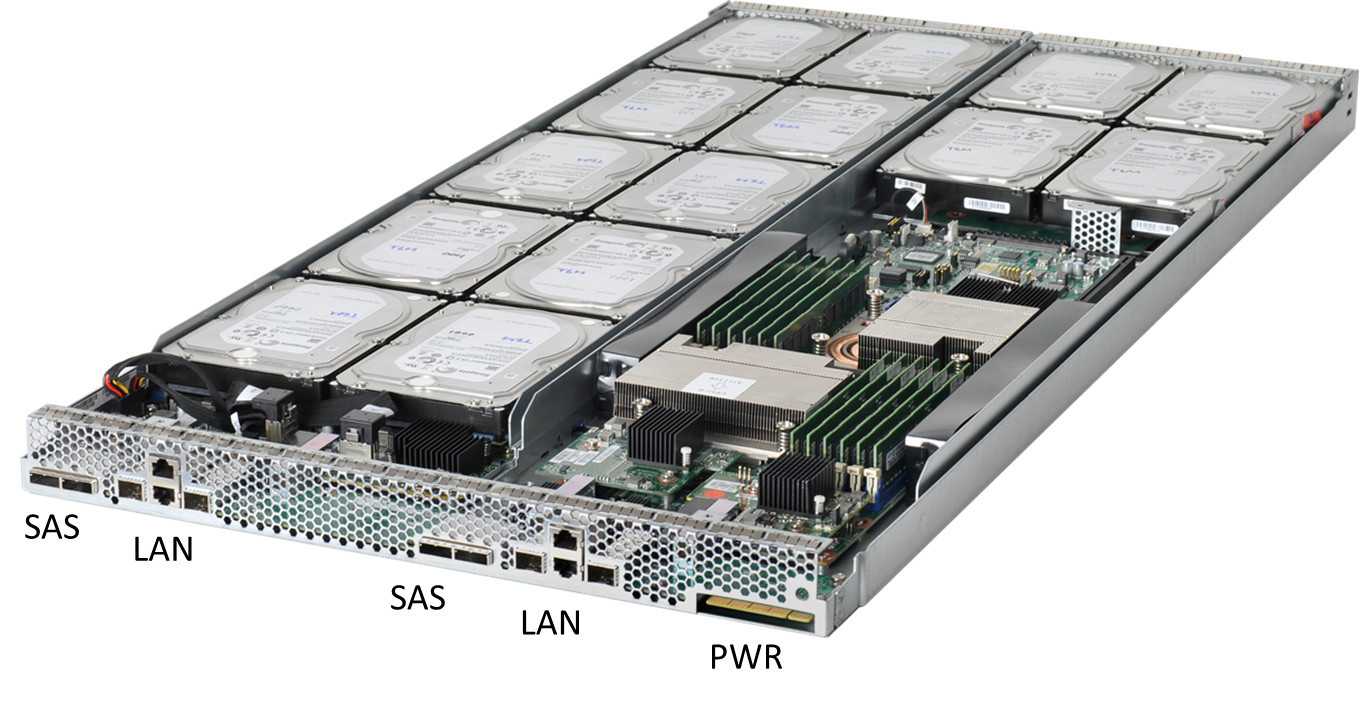

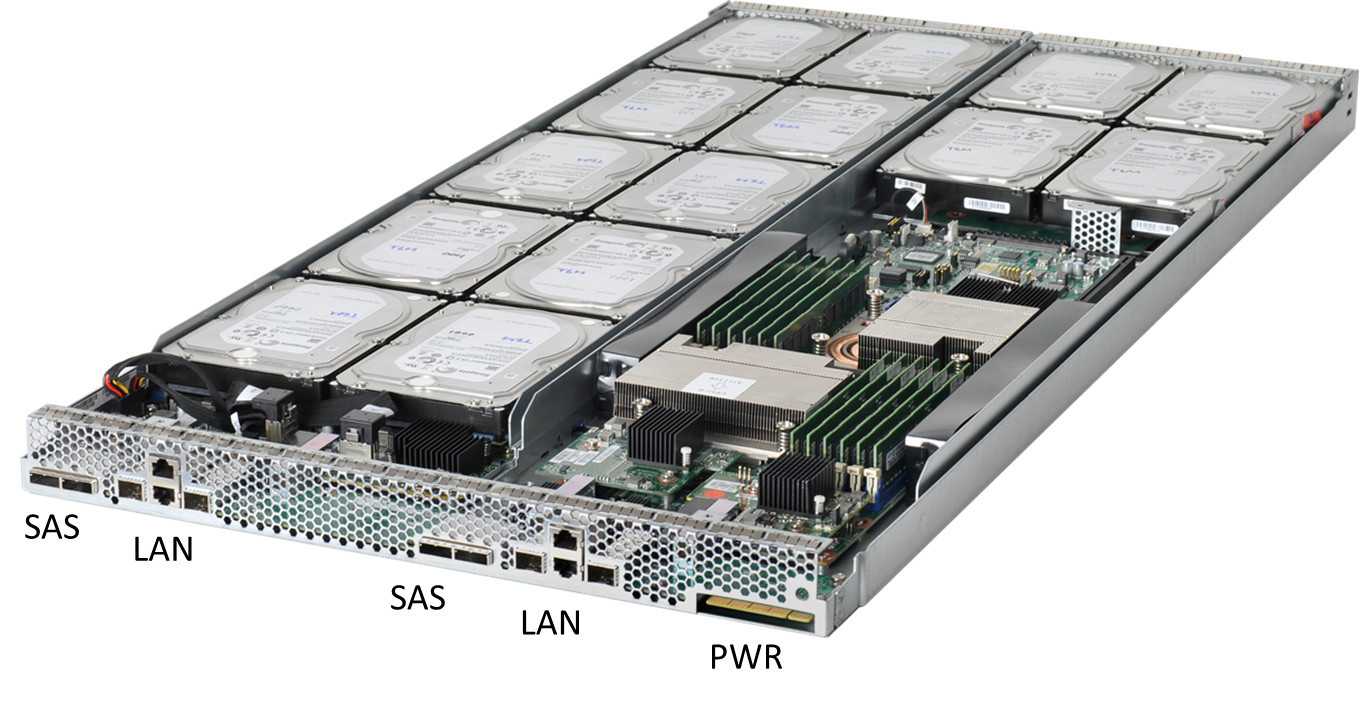

A service module is built into each chassis ( more about it later) and there are 6 power supplies (exactly the same as in our RS130 G4 , RS230 G4 models ) according to the 5 + 1 scheme. Rear view of the basket A good idea is that regardless of the modules used, the set of ports on the basket is the same. Always two SAS ports, two SFP + ports and two RJ45 ports are displayed, and what about it will be connected with the second side - determined on the spot. Compare formats

Cooling system efficiency

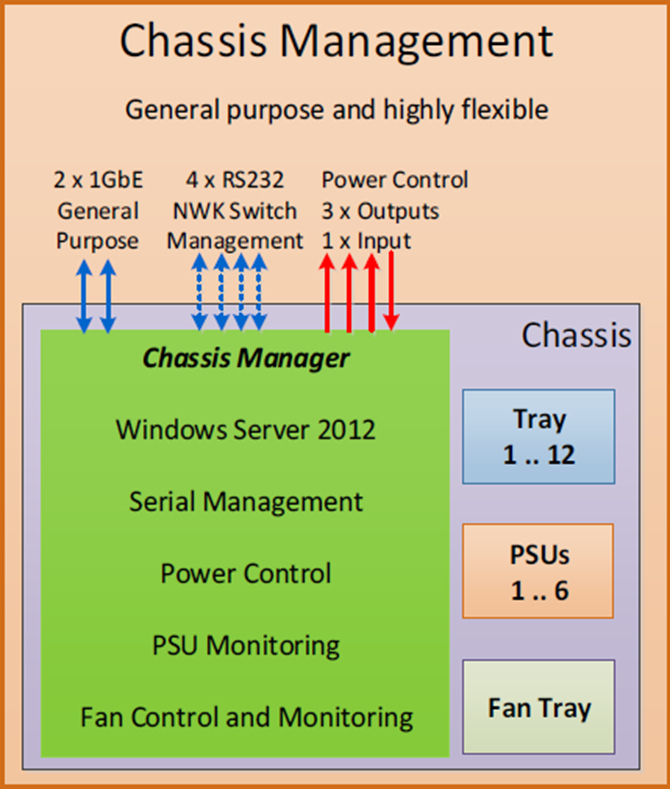

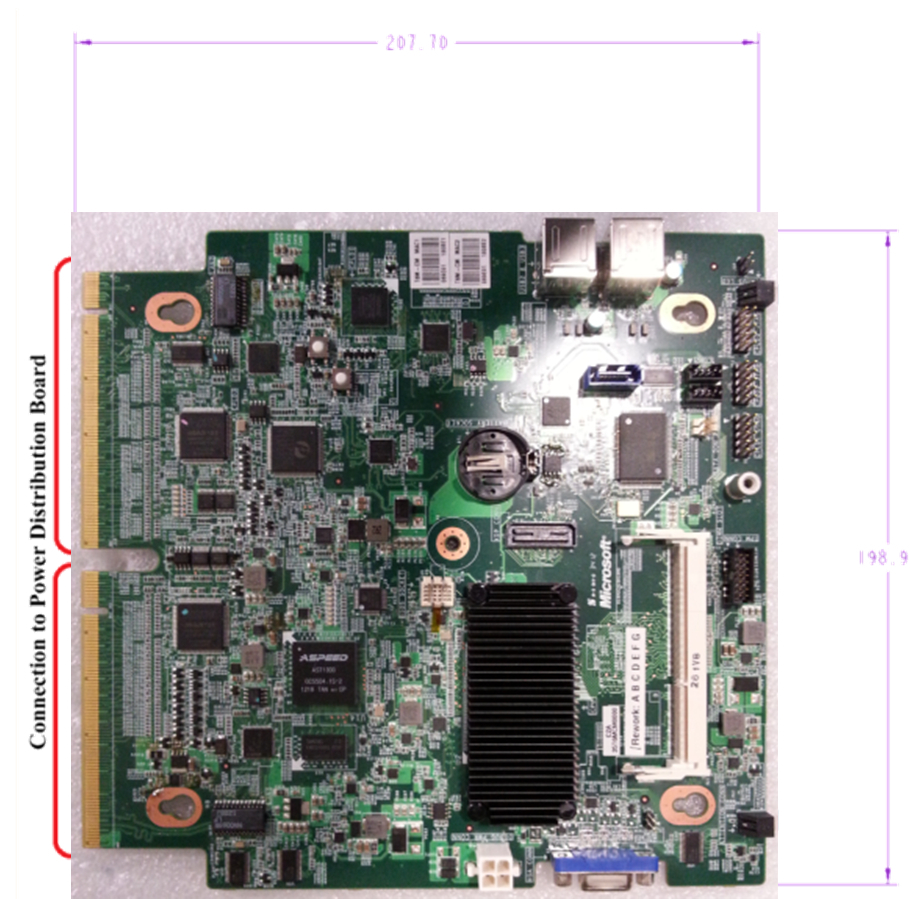

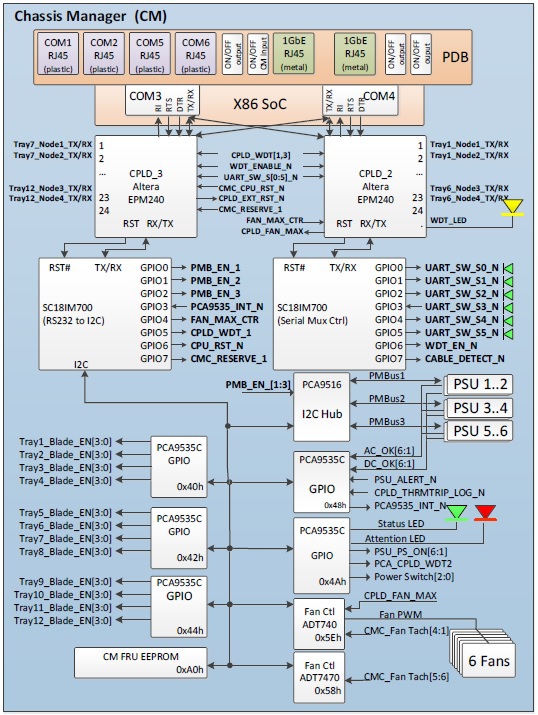

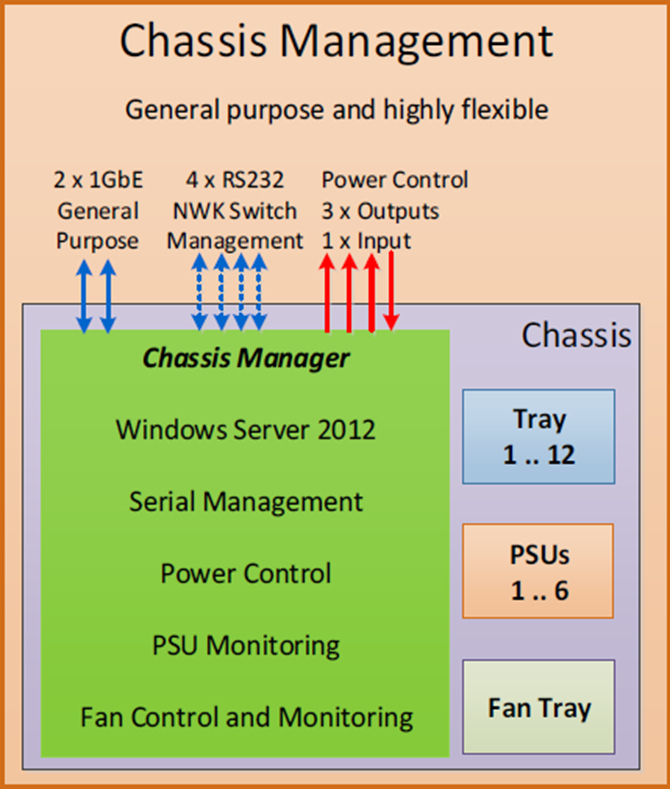

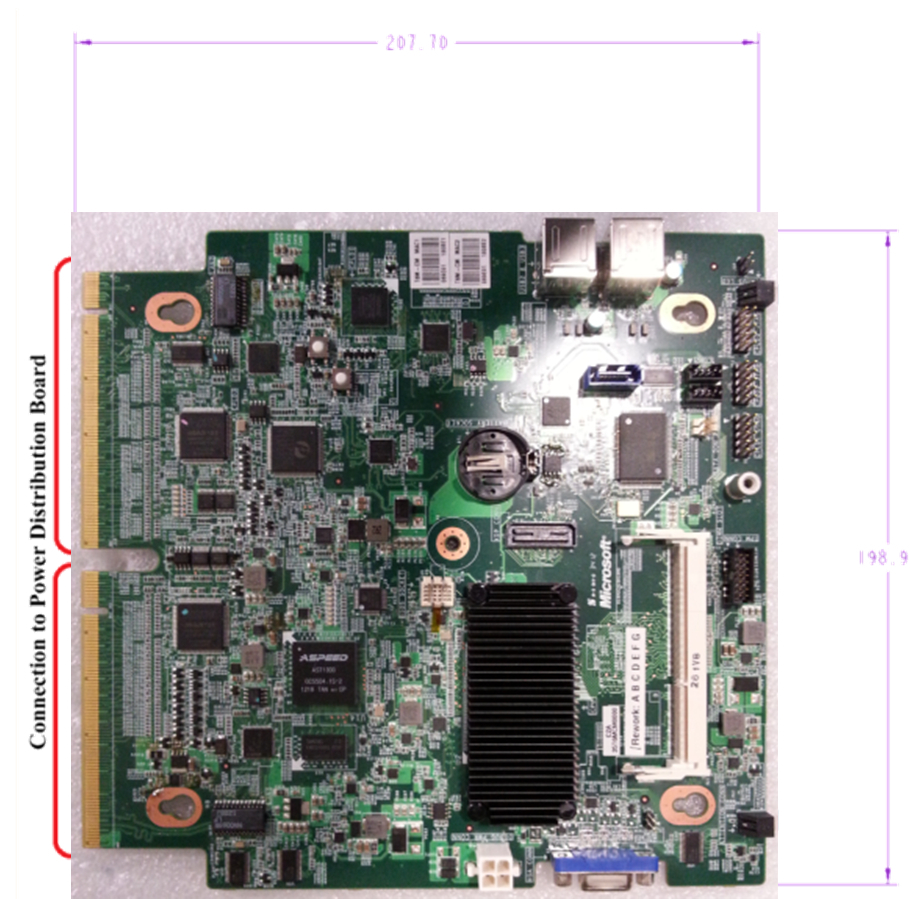

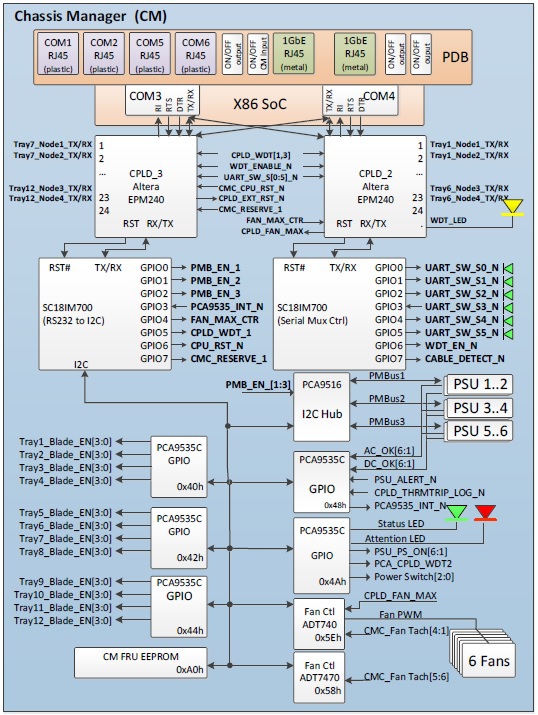

Chassis manager management system The management server is inserted and removed “hot”, the software part is built (which is not surprising) on Windows Server 2012. The source code is available to the general public and everyone can make their own changes (the toolkit is used free of charge). general idea Directly board Functionality:

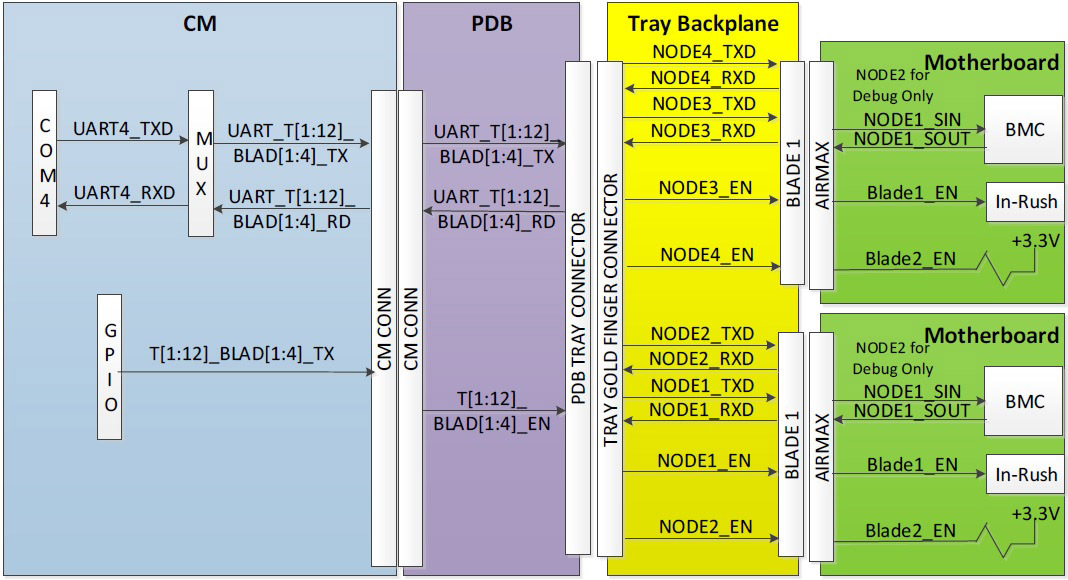

Bus layout

How it goes through the connectors

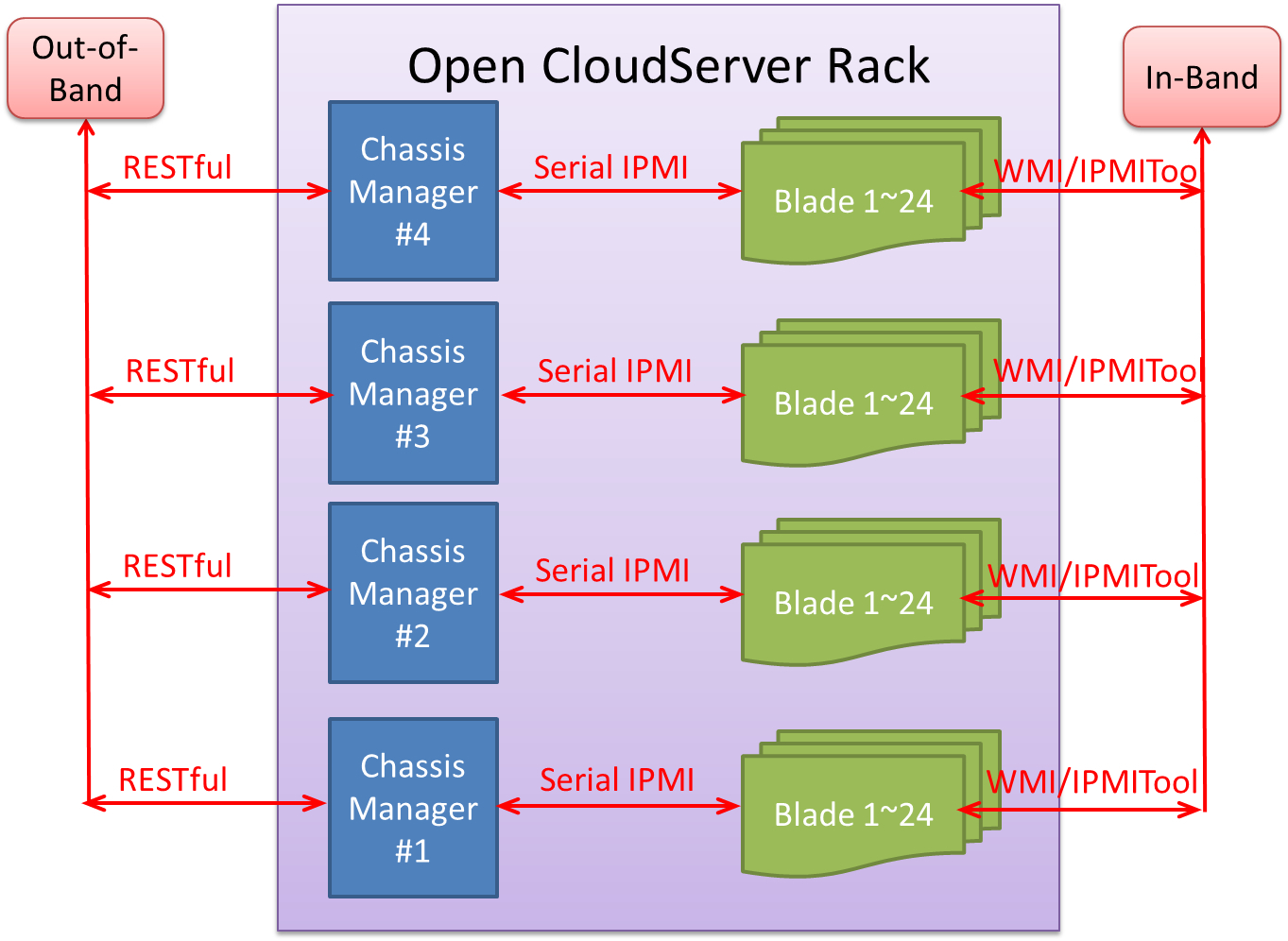

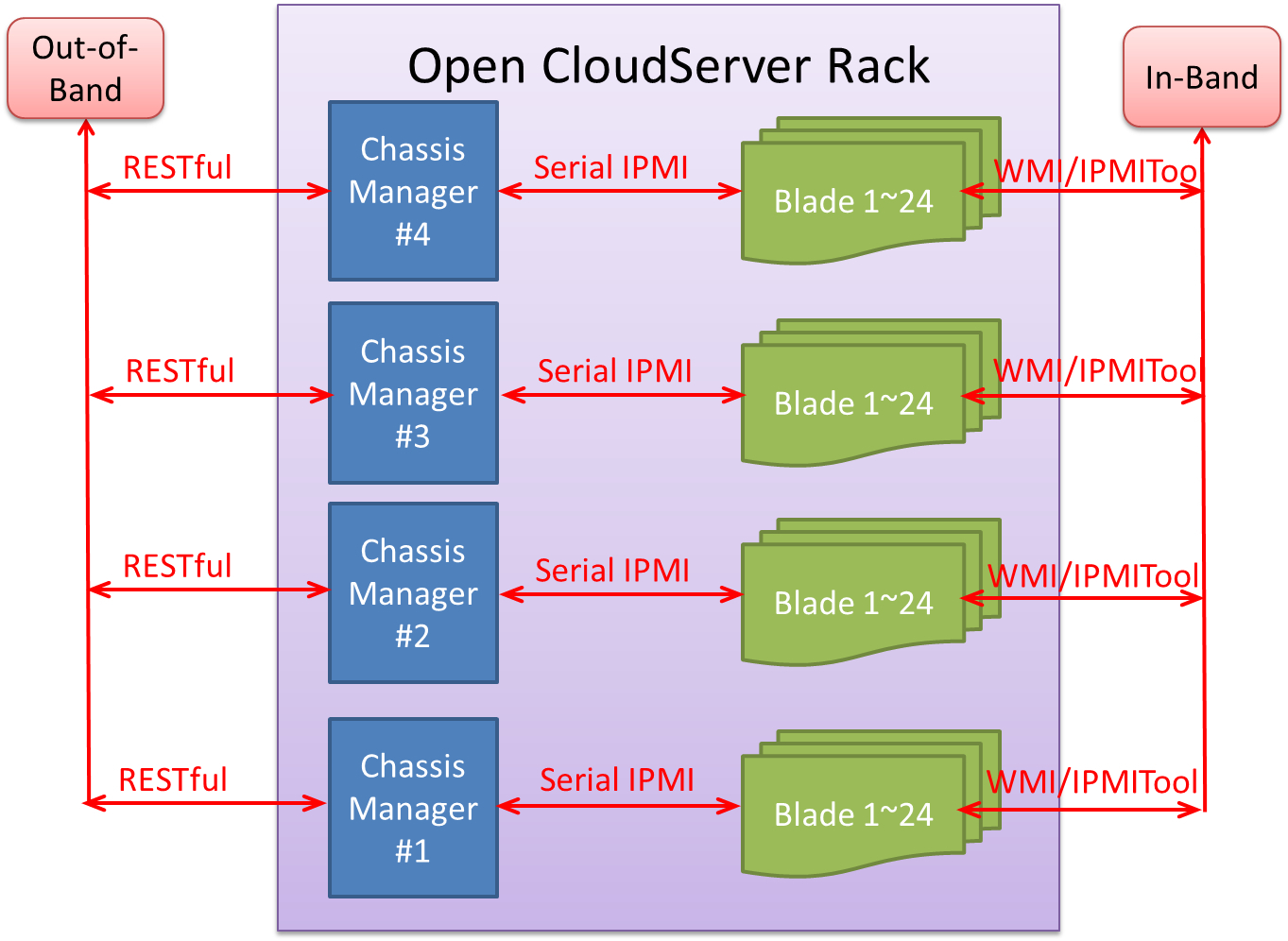

The software part is not forgotten: Out-of-Band logic part

In-band part

Facebook relies on Intel ME capabilities, Microsoft takes advantage of the already familiar

IPMI via BMC.

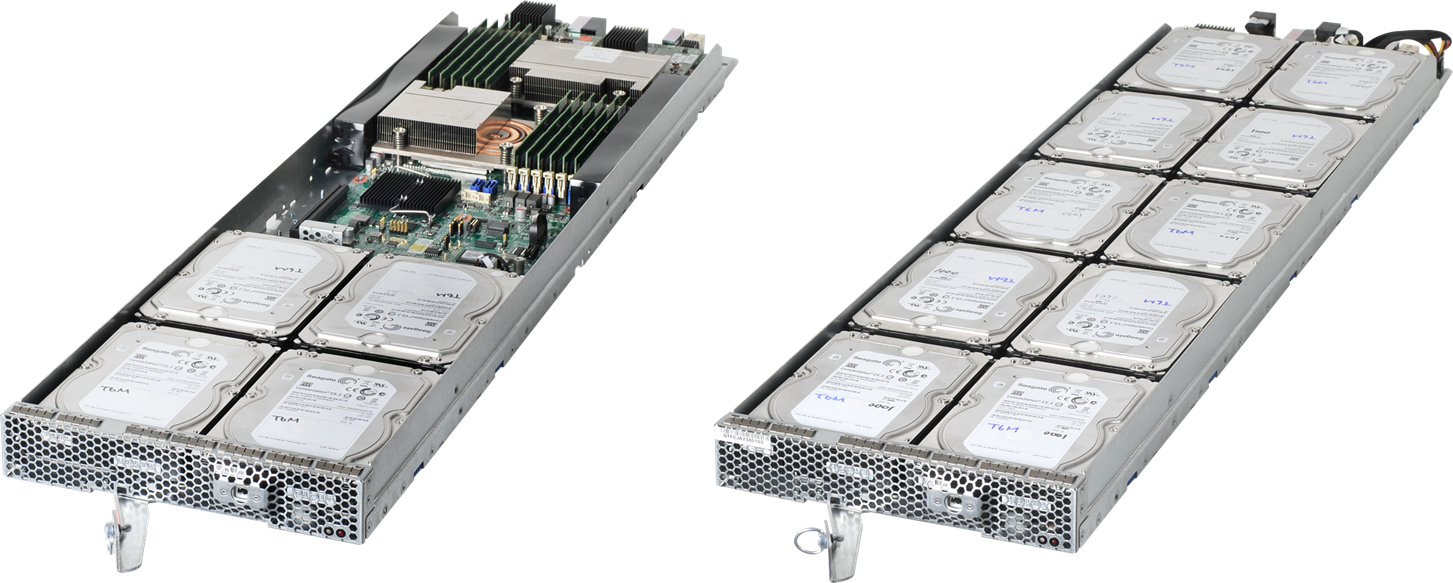

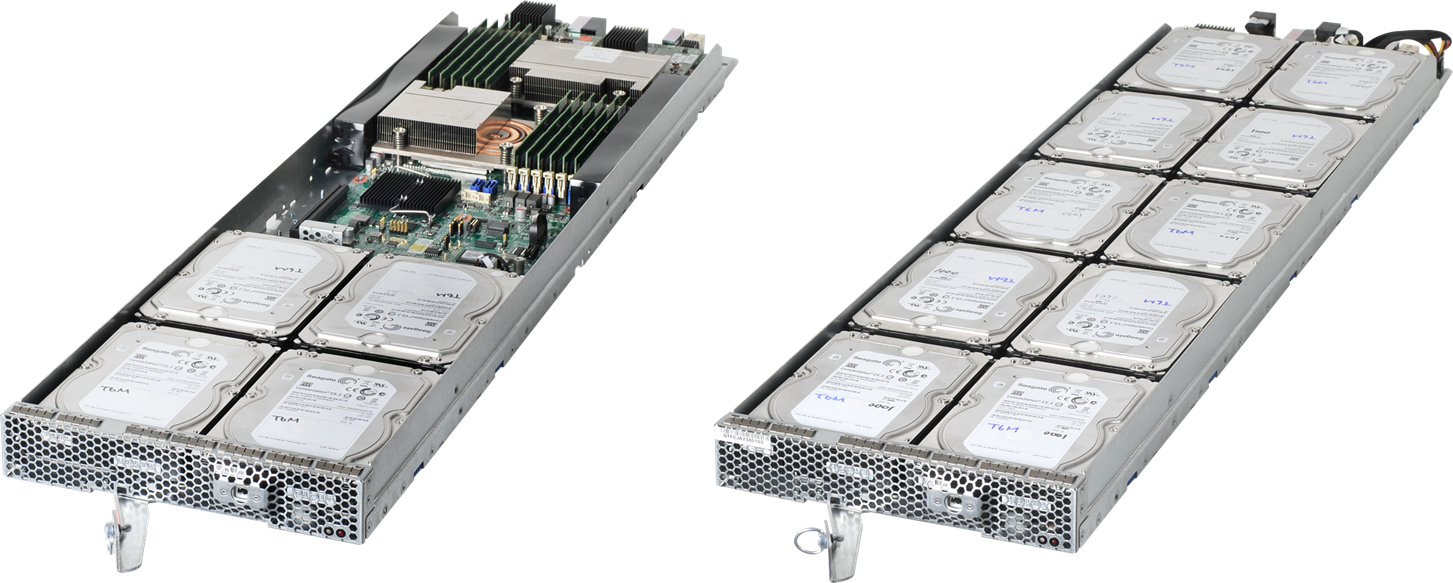

Blades Two types of blades are developed, one with a server, the other with a 10-disk JBOD. Possible combinations You can combine , of course, as you like - at least two servers, at least both JBODs. Cables remain in place Cables are connected to the backplate, therefore, to replace the blade, just remove the basket (a significant difference from OCP). This approach reduces maintenance time and eliminates a possible error when reconnecting cables.

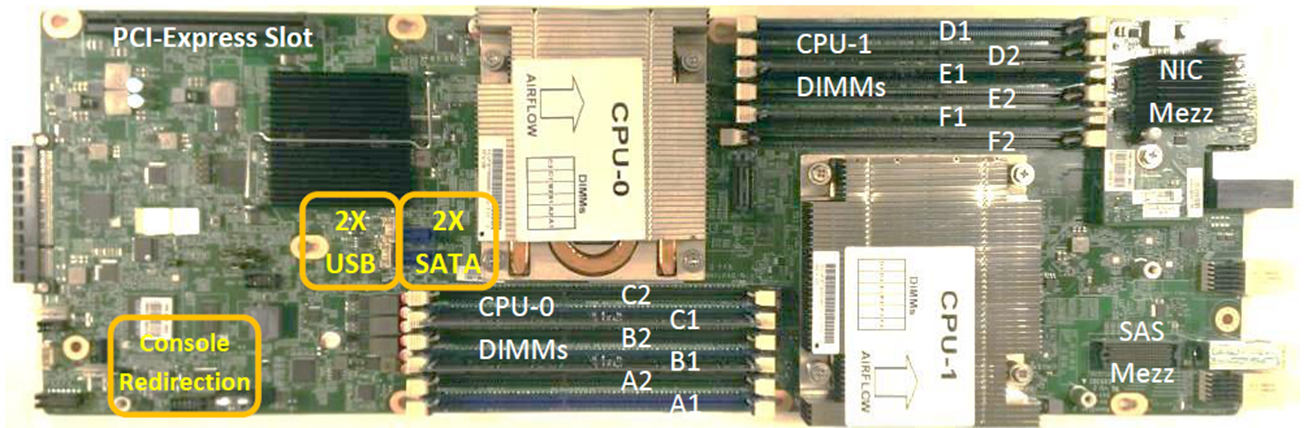

The PCIe slot is designed for a very specific purpose - the use of PCIe Flash cards.

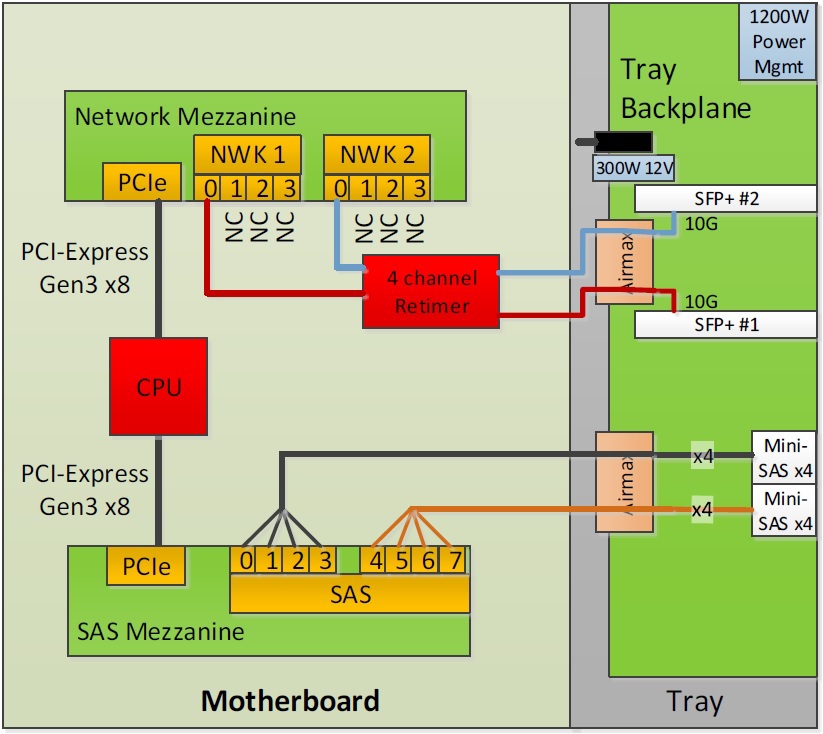

So divorced mezzanine boards

Disk Shelf

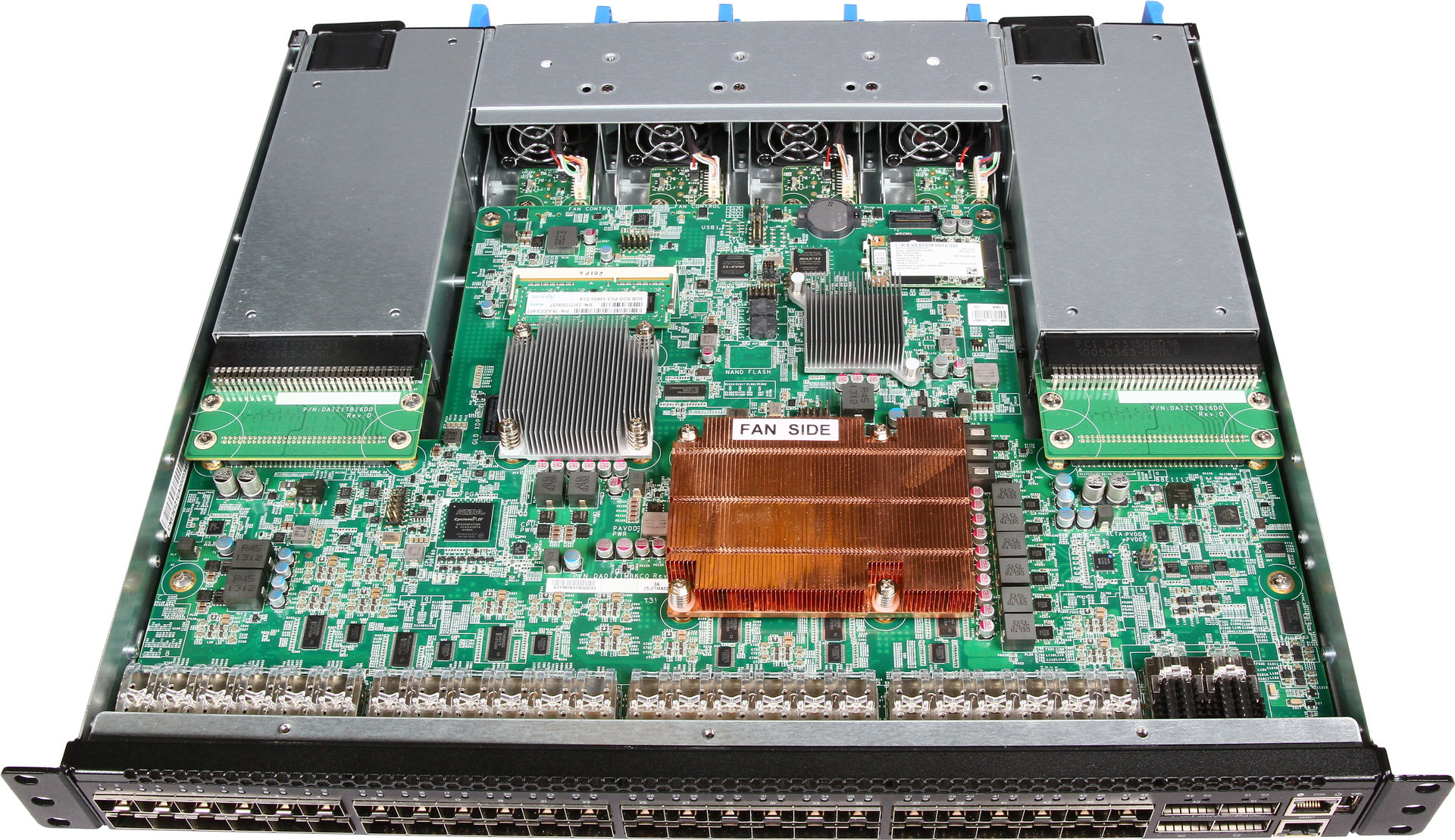

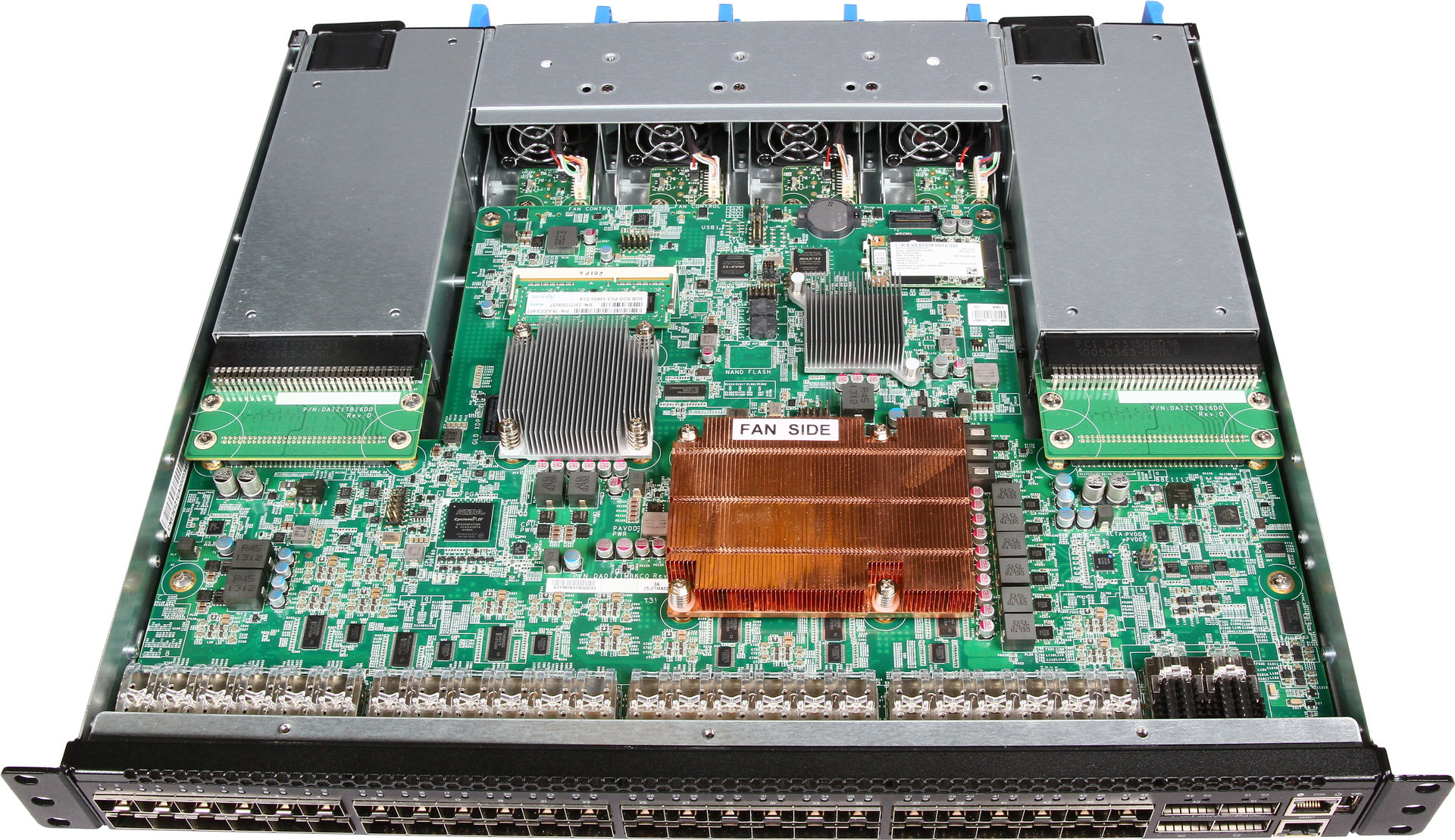

Network Infrastructure

All network connections are 10G, gigabit is used only for management, and not everywhere. An important point - Microsoft is extremely active in popularizing Software Defined Networking (SDN) technologies, their own services are based on software-configured networks.

It is not for nothing that in the past Ethernet Summit, their product for monitoring SDN networks, DEMon, received six of the six possible awards , and the company is listed on the list of platinum sponsors OpenDaylight .

At the same time, we remind you that we have long announced a product for hybrid networks with SDN support - the Eos 410i switch with a port cost below $ 100 :) Total

The largest companies in the industry rely on Software Defined Datacenter and Microsoft is no exception. The development of Ethernet technologies has led to the fact that 10G and RDMA (using RDMA over Converged Ethernet, RoCE) can do without a separate FC network (the increase from RDMA was mentioned here: habrahabr.ru/company/etegro/blog/213911 ) without loss in performance . The capabilities of Windows Storage Spaces are such that hardware storage systems are gradually replaced by solutions on Shared DAS / SAS networks (it was written in detail here habrahabr.ru/company/etegro/blog/215333 and here habrahabr.ru/company/etegro/blog/220613 ).

MS Benefits:

Chassis-based design reduces cost and power consumption

All signal and power lines are transmitted through fixed connectors

Network and SAS cables in a single backplane

Secure and scalable management system

Conclusion

The invention of the next incarnation of blades has taken place. The key difference from the rest

is the completely open platform specifications and the use of standard network switches. In traditional blades from a well-known circle of companies, the choice of the network part is limited by the imagination of the developers, in an open solution you can use any of the existing products, from used Juniper to SDN switches of your own design.

Well, we presented the solution based on Open CloudServer in the product line .

Microsoft's blog has a lot of material on using the Azure cloud service, which runs on Microsoft's own infrastructure. That infrastructure, it turns out, was also built from scratch to match Microsoft's vision.

Publishing one's own platform turned out to be contagious: Microsoft joined the initiative and shared its vision of the optimal infrastructure, which we look at in detail below.

Key Features

- Standard 19-inch width; each chassis is 12U high.

- Unified cooling and power system.

- Unified server management system.

- Maintenance never requires touching the cabling (unless the problem is in a switch).

- Standard PDUs are used.

- The power supplies have the same form factor as those in our rack-mount server range.

View of the complete rack

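Details about the architecture

The chassis is essentially a complex trough into which the following are installed: trays with universal backplanes for servers and disk shelves, a power distribution board, power supplies, a remote management controller, and a fan wall.

Six large fans (140 × 140 × 38 mm) are combined into a single unit on a hinged door and are controlled by the chassis controller. The door covers the I/O ports and the cable space, where dedicated channels are laid for neat wiring and installation.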

Microsoft uses standard 19" racks 50U high: 48U is occupied by the servers themselves and another 2U is allocated to switches. Unlike in OCP, the power supplies and PDUs are mounted on the side of the rack and take up no rack units.
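As a quick check of the rack arithmetic, here is a small Python sketch. The figure of 24 blades per chassis comes from the benefits list further down; the other numbers are from the paragraph above, and the helper name `rack_layout` is purely illustrative.

```python
# Rack-space arithmetic for an Open CloudServer rack, using figures from the text.
SWITCH_U = 2              # 2U reserved for switches
CHASSIS_HEIGHT_U = 12     # each chassis is 12U high
BLADES_PER_CHASSIS = 24   # "up to 24 servers per chassis"


def rack_layout(rack_u: int = 50) -> dict[str, int]:
    """Return how many chassis and blades fit once switch space is reserved."""
    server_u = rack_u - SWITCH_U
    chassis = server_u // CHASSIS_HEIGHT_U
    return {
        "server_u": server_u,
        "chassis": chassis,
        "max_blades": chassis * BLADES_PER_CHASSIS,
        "unused_u": server_u - chassis * CHASSIS_HEIGHT_U,
    }


print(rack_layout())
```

For the 50U rack this gives 48U of server space, four chassis, up to 96 blades and no unused units. The side-mounted power supplies and PDUs do not enter the calculation because they take 0U. For a 52U rack, `rack_layout(52)` leaves 2U unused.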

Each chassis has a built-in service module (more about it later) and six power supplies (exactly the same as in our RS130 G4 and RS230 G4 models) in a 5+1 redundancy scheme.

Rear view of the tray

A nice touch is that each tray exposes the same set of ports regardless of the modules installed: always two SAS ports, two SFP+ ports and two RJ45 ports. What gets connected to them on the other side is decided on site.

Compare formats

| Parameter | Open Rack | Open CloudServer | Standard 19" rack |
|---|---|---|---|
| Inner width | 21" | 19" | 19" |
| Rack height | 2100 mm (41 OU) | 48-52U, 12U per chassis | 42-48U |
| Unit | OU (48 mm) | RU (44.45 mm) | RU (44.45 mm) |
| Cooling | In the system (HS / fixed) | Fan wall (6 fans) | In the system |
| Fan size | 60 mm | 140 mm | Varies |
| Power bus | 3 pairs | - | - |
| Power zones / budget | 3, up to 36 kW | 4, up to 28 kW | - |
| Power shelf location | Front | Rear | - |
| Power shelf size | 3 OU | 0U | 0U |
| Integrated UPS | Yes | Yes (coming soon) | No (but nothing stops you from adding one) |
| Rack management | Yes, RMC (not fully resilient) | Yes, CM | - |
| Blade identification (sled ID) | No | Yes (in the chassis) | No |
| Cabling | Front | Rear | Front or rear |
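The two unit systems in the table differ by about 3.55 mm per unit, which adds up over a full rack. A small sketch converting the table's unit counts to millimetres; the function name is illustrative, and 44.45 mm is the standard EIA rack unit (1.75 in).

```python
# Convert rack heights from the comparison table into millimetres.
OU_MM = 48.0    # Open Rack unit (OU)
RU_MM = 44.45   # standard EIA rack unit (RU), 1.75 in


def height_mm(units: int, unit_mm: float) -> float:
    """Height in mm of a given number of rack units, rounded to 0.01 mm."""
    return round(units * unit_mm, 2)


print(height_mm(41, OU_MM))  # Open Rack, 41 OU
print(height_mm(48, RU_MM))  # OCS server space, 4 x 12U chassis
print(height_mm(12, RU_MM))  # a single 12U OCS chassis
```

This prints 1968.0, 2133.6 and 533.4: the 41 OU of an Open Rack fit within its 2100 mm height, while 48 standard units of server space come to roughly 2.13 m.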

Cooling system efficiency

Total system power consumption: 7200 W (24 blades × 300 W)

| Ambient temperature (°C) | Fan speed (%) | Power per fan (W) | Total fan power, 6 fans (W) | Share of system power (%) |
|---|---|---|---|---|
| 25 | 66 | 19.2 | 115.2 | 1.6 |
| 35 | 93 | 40.2 | 241.2 | 3.35 |
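The last two columns of the table follow directly from the per-fan figure. A sketch reproducing that arithmetic; all inputs are taken from the table, and the helper name `fan_overhead` is illustrative.

```python
# Reproduce the cooling-table arithmetic (all figures taken from the table).
FANS = 6                  # fan wall on the chassis door
BLADES = 24
WATTS_PER_BLADE = 300
SYSTEM_W = BLADES * WATTS_PER_BLADE   # 7200 W


def fan_overhead(watts_per_fan: float) -> tuple[float, float]:
    """Return (total fan power in W, fan share of system power in %)."""
    total = watts_per_fan * FANS
    return total, 100.0 * total / SYSTEM_W


for ambient_c, per_fan in [(25, 19.2), (35, 40.2)]:
    total, share = fan_overhead(per_fan)
    print(f"{ambient_c} C: {total:.1f} W for all fans, {share:.2f}% of {SYSTEM_W} W")
```

Both rows match the table: 115.2 W (1.6%) at 25 °C and 241.2 W (3.35%) at 35 °C. Even in the hotter case, the fans consume only a few percent of the chassis power.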

Chassis Manager management system

The management server is hot-swappable, and its software is (unsurprisingly) built on Windows Server 2012. The source code is publicly available, so anyone can make their own changes (the toolkit is free to use).

General idea

The board itself

Functionality:

- Input/output (I/O):

  - 2 × 1GbE Ethernet (for network access or managing the ToR switches)

  - 4 × RS-232 (connected to the switches to control their boot)

  - Power management (one input, three outputs for connecting a PDU or another CMM)

- Windows Server 2012

- Hot-swap module

- Hardware:

  - Integrated Atom S1200 processor

  - 4 GB of ECC memory

  - 64 GB SSD

  - Optional TPM module

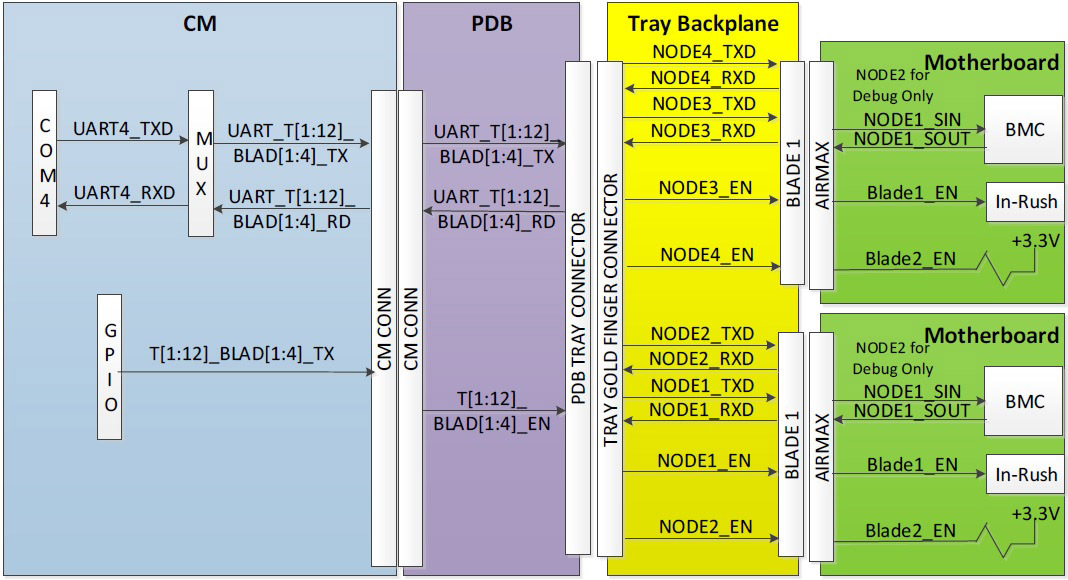

- Integrated multiplexers for communicating with the blades

- Blade power control is routed separately (for greater reliability)

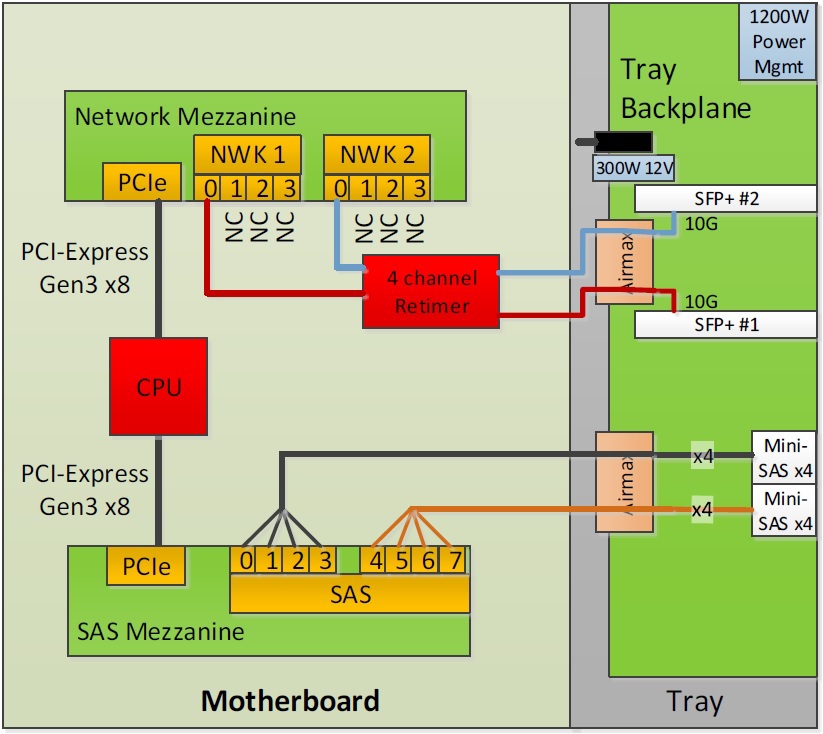

Bus layout

How the bus is routed through the connectors

The software side has not been neglected either.

Out-of-band part

- The CM offers a REST-like API and a command-line interface (CLI), so the management system can scale

- Fan control and monitoring

- PSU control and monitoring

- Chassis power management: on/off/reset

- Serial console redirection

- Blade identify LED

- ToR switch power control: on/off/reset

- Power mapping
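Because the CM interface is REST-like, each out-of-band function boils down to an HTTP(S) request against the chassis manager. A minimal sketch that only builds request URLs. The HTTPS port 8000, the `/<Operation>?<params>` layout, and operation names such as `GetChassisInfo` or `SetBladeOn` are assumptions for illustration, not a verified list of the real CM API.

```python
from urllib.parse import urlencode, urlunsplit

# Assumptions (not from the article): the CM is reached over HTTPS on port 8000,
# and operations are addressed as /<OperationName>?<parameters>.
CM_PORT = 8000


def cm_url(host: str, operation: str, **params: object) -> str:
    """Build the URL for one REST-style Chassis Manager call.

    Operation names passed in here are illustrative placeholders; check the
    CM's own API documentation for the real names and parameters.
    """
    query = urlencode(params)
    return urlunsplit(("https", f"{host}:{CM_PORT}", "/" + operation, query, ""))


# Hypothetical calls mirroring the functions listed above.
print(cm_url("cm01", "GetChassisInfo"))                      # fan / PSU status
print(cm_url("cm01", "SetBladeOn", bladeid=3))               # blade power on
print(cm_url("cm01", "SetBladeAttentionLedOn", bladeid=3))   # identify LED
```

Keeping management to plain HTTP(S) calls is what makes the same script usable across many chassis. A real client would also need authentication, which the benefits list below describes as role-based via Active Directory.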

In-band part

- Blade BMCs use standard IPMI over the KCS interface

- Windows: WMI-IPMI

- Linux: ipmitool

- Supported functions: identification, chassis power, event logging, temperature monitoring, power management, power capping, HDD and SSD monitoring
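On Linux, the in-band path comes down to ordinary ipmitool calls on the blade itself. Below is a sketch that assembles a few standard ipmitool commands, assuming ipmitool is installed. The helper names and the selection of subcommands are our own choices. Whether the BMC-lite on OCS blades answers DCMI requests (used here for the power reading) is an assumption.

```python
import shlex
import subprocess

# In-band access from Linux on the blade itself: ipmitool's "open" interface
# uses the kernel IPMI driver, which talks to the BMC over KCS.
BASE = ["ipmitool", "-I", "open"]

# Standard ipmitool subcommands for some of the functions listed above.
COMMANDS = {
    "identify": ["chassis", "identify", "15"],       # identify LED for 15 s
    "chassis_power": ["chassis", "power", "status"],
    "event_log": ["sel", "list"],
    "temperature": ["sdr", "type", "Temperature"],
    "power_reading": ["dcmi", "power", "reading"],   # only if the BMC supports DCMI
}


def build(name: str) -> list[str]:
    """Return the full ipmitool argument list for a named function."""
    return BASE + COMMANDS[name]


def run(name: str) -> str:
    """Execute on the blade (needs root and the ipmi_si / ipmi_devintf modules)."""
    result = subprocess.run(build(name), capture_output=True, text=True, check=True)
    return result.stdout


print(shlex.join(build("temperature")))
```

Separating `build` from `run` makes it possible to review or log the exact commands before anything touches the BMC.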

Where Facebook relies on Intel ME capabilities, Microsoft uses the already familiar IPMI via a BMC.

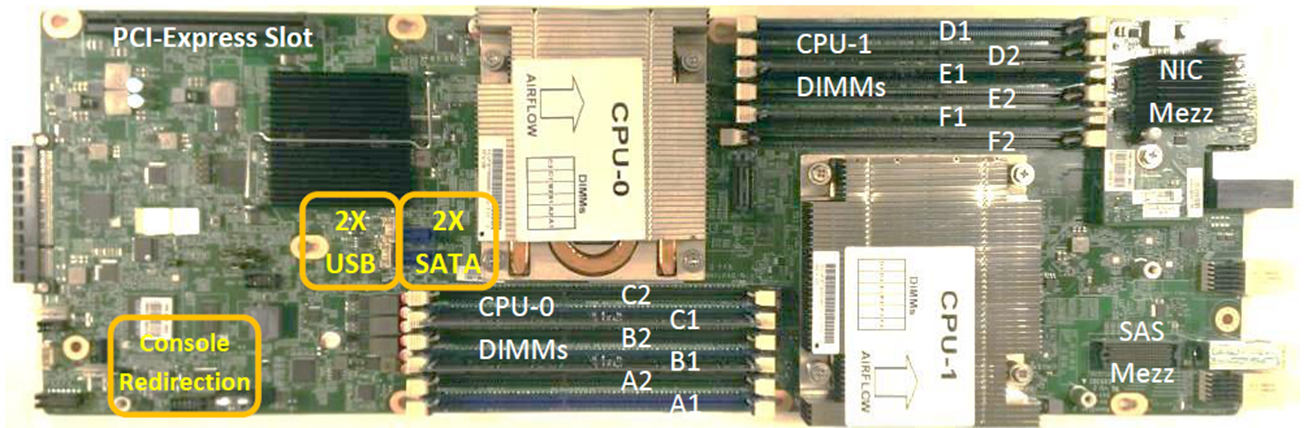

Blades

Two types of blades have been developed: a server blade and a 10-disk JBOD blade.

Possible combinations

Of course, they can be combined any way you like: two servers, two JBODs, or one of each.

Cables remain in place

Cables are connected to the backplane, so replacing a blade only requires pulling out its tray (a significant difference from OCP). This shortens maintenance time and rules out mistakes when reconnecting cables.

| Specification | OCS server |
|---|---|
| CPU | 2 × Intel Xeon E5-2400 v2 per node, up to 115 W |
| Chipset | Intel C602 |
| Memory | 12 DDR3 800/1066/1333/1600 ECC UDIMM/RDIMM/LRDIMM slots per server |
| Disks | 4 × 3.5" SATA per server |
| Expansion slots | 1 × PCIe x16 Gen3; SAS mezzanine; 10G mezzanine |
| Management | BMC-lite connected to the Chassis Manager via the I2C bus |

The PCIe slot serves a very specific purpose: PCIe flash cards.

This is how the mezzanine boards are laid out

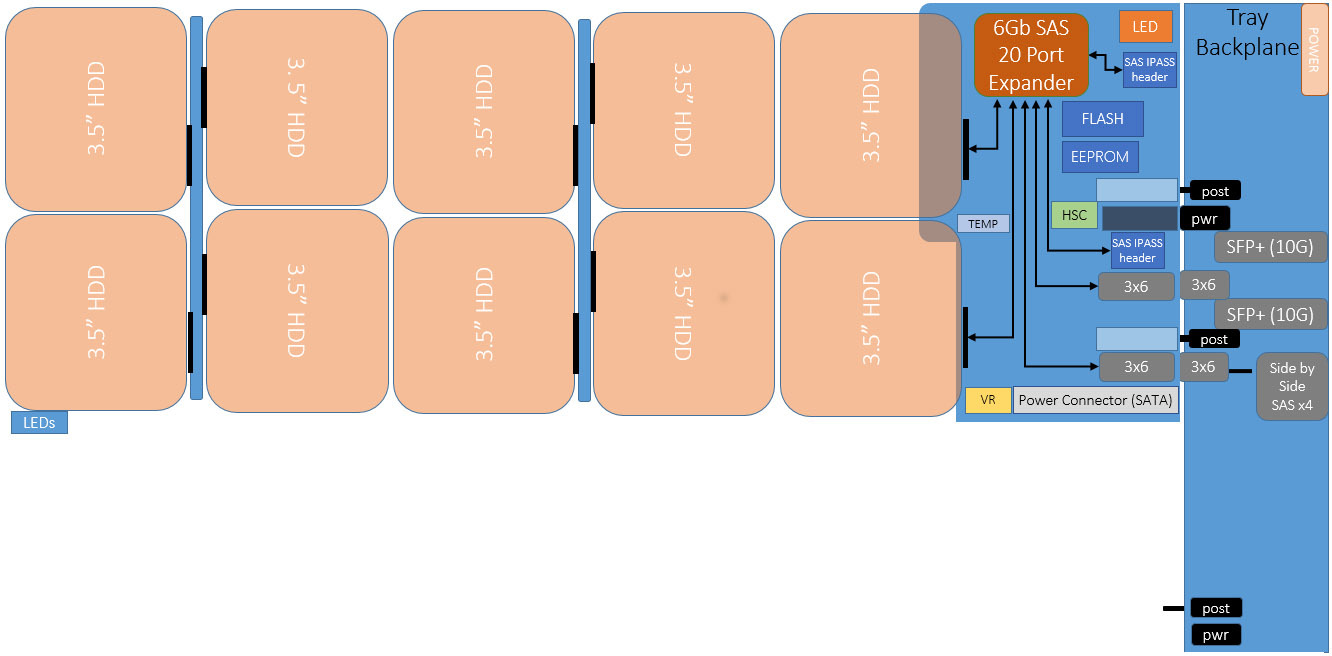

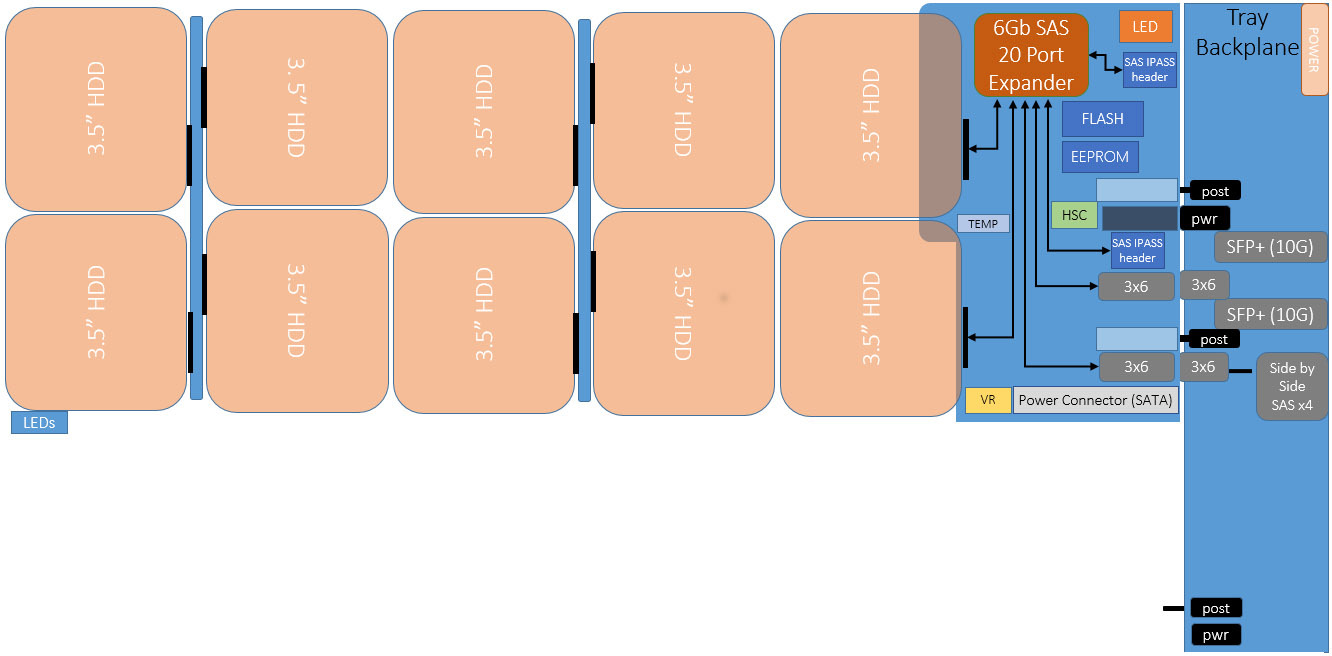

| Specification | OCS JBOD |
|---|---|
| Controllers | 1 SAS Interface Module (SIM) |
| External ports | 2 × 6 Gb/s mini-SAS ports |
| Disks | 10 × 3.5" SAS/SATA, hot-swappable as a whole tray |
| Management | SCSI Enclosure Services (SES-2) |
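On a Linux host attached to the JBOD, SES-2 enclosure data is typically exposed by the kernel `ses` driver under `/sys/class/enclosure`. Below is a minimal sketch that assumes this driver is loaded. Enclosure and slot directory names vary by hardware, and the helper names `slot_status` and `set_locate` are hypothetical.

```python
from pathlib import Path

# Assumption: Linux with the "ses" driver, which exposes each enclosure as
# /sys/class/enclosure/<enclosure>/<component>/ with status, fault, locate, ...
SYSFS_ENCLOSURE = Path("/sys/class/enclosure")


def slot_status(root: Path = SYSFS_ENCLOSURE) -> dict[str, str]:
    """Map 'enclosure/component' to the SES status string the driver reports."""
    statuses = {}
    for status_file in sorted(root.glob("*/*/status")):
        component = status_file.parent
        statuses[f"{component.parent.name}/{component.name}"] = status_file.read_text().strip()
    return statuses


def set_locate(component: Path, on: bool) -> None:
    """Switch a slot's locate (identify) LED on or off; requires root."""
    (component / "locate").write_text("1" if on else "0")
```

Calling `slot_status()` on such a host returns one entry per disk slot with strings such as `OK` or `not installed`. `set_locate` can then light the LED of the slot a technician should pull.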

Disk Shelf

Network Infrastructure

All network connections are 10G; gigabit Ethernet is used only for management, and not everywhere. An important point: Microsoft is very active in promoting software-defined networking (SDN), and its own services run on software-defined networks.

It is no accident that at the last Ethernet Summit, Microsoft's SDN monitoring product, DEMon, won six out of six possible awards, and the company is a platinum sponsor of OpenDaylight.

While we are on the subject, a reminder that we announced a product for hybrid networks with SDN support a while ago: the Eos 410i switch, with a cost per port below $100 :)

Summary

The largest companies in the industry are betting on the software-defined data center, and Microsoft is no exception. Ethernet has developed to the point where 10G plus RDMA (via RDMA over Converged Ethernet, RoCE) lets you do without a separate FC network, with no loss in performance (we covered the gains from RDMA here: habrahabr.ru/company/etegro/blog/213911). Windows Storage Spaces has become capable enough that hardware storage systems are gradually being replaced by solutions built on shared DAS/SAS networks (described in detail here: habrahabr.ru/company/etegro/blog/215333 and here: habrahabr.ru/company/etegro/blog/220613).

Benefits according to Microsoft:

Chassis-based design reduces cost and power consumption

- Standard EIA 19" rack

- Modular design simplifies deployment: mountable side panels, 1U trays, high-performance power supplies, large fans for efficient airflow, hot-swappable management board

- Up to 24 servers per chassis, optional JBOD shelves

- Optimized for contract manufacturing

- Cost savings of up to 40% and 15% better energy efficiency compared to traditional enterprise servers

- Expected savings of 10,000 tons of metal for every million servers (this looks like an exaggeration: 10 kg per server is a lot)
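The per-server figure behind that remark is a simple division. A short check, using only the numbers from the claim:

```python
# Sanity check of the "10,000 tons of metal per million servers" claim.
TONNES_SAVED = 10_000
SERVERS = 1_000_000
KG_PER_TONNE = 1_000

kg_per_server = TONNES_SAVED * KG_PER_TONNE / SERVERS
print(f"{kg_per_server:.1f} kg of metal saved per server")
```

This comes out to 10 kg per server, which is the figure questioned above.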

All signal and power lines are transmitted through fixed connectors

- Server and chassis separation simplifies deployment and recovery

- Cable-free design reduces human error during maintenance

- Fewer incidents caused by loose cables

- 50% reduction in deployment and maintenance time

Network and SAS cables in a single backplane

- Passive PCB simplifies design and reduces risk of signal loss

- Supports various network and cable types: 10/40G Ethernet, copper and optical cables

- Cables are routed once per chassis during assembly

- Cables are not touched during operation and maintenance

- Saves hundreds of kilometers of cable for every million servers

Secure and scalable management system

- A management server in each chassis

- Several layers of protection: TPM at boot, SSL transport for commands, role-based authentication via Active Directory

- REST API and CLI for scaling the management system

- 75% more convenient to use than regular servers
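Role-based authentication can be pictured as a mapping from each operation to a minimum role, with roles resolved from AD group membership. A toy sketch of that idea; the role names, operation names and group names are all hypothetical and not taken from the CM source.

```python
from enum import IntEnum


class Role(IntEnum):
    """Hypothetical CM roles, ordered by privilege."""
    READER = 1
    OPERATOR = 2
    ADMIN = 3


# Hypothetical minimum role for each management operation.
REQUIRED_ROLE = {
    "read_chassis_info": Role.READER,
    "blade_power": Role.OPERATOR,
    "manage_users": Role.ADMIN,
}


def authorize(user_groups: set[str], operation: str,
              group_roles: dict[str, Role]) -> bool:
    """Allow the call if one of the user's AD groups maps to a sufficient role."""
    needed = REQUIRED_ROLE.get(operation)
    if needed is None:
        return False  # deny unknown operations by default
    granted = [group_roles[g] for g in user_groups if g in group_roles]
    return bool(granted) and max(granted) >= needed


# Example: members of a (hypothetical) operators group may power-cycle blades
# but may not manage users.
groups = {r"CORP\cm-operators": Role.OPERATOR}
print(authorize({r"CORP\cm-operators"}, "blade_power", groups))
print(authorize({r"CORP\cm-operators"}, "manage_users", groups))
```

The two calls print `True` and `False`. Ordering roles as integers keeps the check to a single comparison, and unknown operations are rejected rather than allowed.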

Conclusion

A new incarnation of the blade server has arrived. Its key difference from the others is the completely open platform specification and the use of standard network switches. In traditional blade systems from the usual circle of vendors, your networking options are limited to what the developers came up with; with an open solution you can use any existing product, from a used Juniper to SDN switches of your own design.

And, of course, we have introduced a solution based on Open CloudServer in our product line.