Transactional Memory: History and Development

Definition

Parallel programming is difficult. When using systems with shared memory, synchronization of access of parallel processes / threads to the shared resource (memory) cannot be dispensed with. To do this, use:

- locks (mutex);

- algorithms without locking (lockless, lock-free);

- transactional memory.

Transactional memory is a technology for synchronizing competitive threads. It simplifies concurrent programming by separating instruction groups into atomic transactions. Competitive threads run in parallel 1 until they begin to modify the same piece of memory. For example, the operations of adding nodes to a red-black tree (animation in the header) are able to work in parallel in several threads.

Hidden text

/* Move item from one list to another */

int move(list *from, list *to) {

__transaction_atomic {

node *n = pop(from);

push(to, n);

}

}The approach to managing competitiveness using transactional memory is called optimistic: we believe that threads work independently of each other and in rare cases change the same data. In this case, most transactions complete successfully. In contrast, lock-based approaches are pessimistic: we believe that threads will always conflict and always forbid them to be in the critical section at the same time.

If a data conflict occurs, the transaction is canceled. Cancellation of a transaction leads to the cancellation of the actions that the thread performed during the transaction. After this, the transaction is usually restarted, or the function specified in advance as an “emergency exit” is called, most often a rollback to the use of locks.

Pros of transactional memory:

- relative ease of use (the conclusion of whole methods in a transaction block);

- the complete absence of locks and deadlocks;

- increasing the level of concurrency, and therefore productivity.

Transactional memory is not a silver bullet. There are also disadvantages:

- improper use may result in a drop in performance and incorrect operation;

- limited use - operations that cannot be undone cannot be performed in a transaction;

- debugging complexity - it is impossible to put a breakpoint inside a transaction.

The birth of technology

Database transactions have been around for decades. For the first time, the idea of transferring transactions from the world of databases to the world of parallel programming arose in the 1980s. Developed and popularized technology Maurice Herlihy , Ravi Rajwar , Nir Shavit . The first studies suggested hardware implementations of transactional memory that were not destined to be born for another 30 years.

In the 1990s, the first software implementations of the technology appeared; hardware implementations were pulled back to the 2000s.

Software Implementations (Software Transactional Memory, STM)

Among the many implementations of software transactional memory, I would like to highlight four. Examples are available on github: JIghtuse / tm-experiments .Clojure

Clojure is the only language whose core supports transactional memory. The main constructions of STM:ref(link to data through which data is changed only in a transaction) and dosync(transaction block). The approach to STM in Clojure is called MultiVersion Concurrency Control ( MVCC ): multiple logical versions of the data used in a transaction are stored. During the transaction, the stream observes a snapshot of the data at the time it started.

Hidden text

Link version diagram in a Clojure transaction.

Transactions 1 and 2 begin at the same time, receiving one copy of the version

The calculations in transaction 3 do not change the values

Link version diagram in a Clojure transaction.

Transactions 1 and 2 begin at the same time, receiving one copy of the version

ref v0. Inside the transaction, there is data processing that changes the value ref. The first transaction ends earlier and wins the race for updating with a refnew value. Then the second transaction ends, and its attempt to update reffails (red arrow on the diagram), because the version refwas not the one that was expected. In this case, the transaction is restarted, receiving a copy of the new version ref. Since no other transaction is trying to change ref, the second time, transaction 2 completes successfully. The calculations in transaction 3 do not change the values

ref, so the restart is not called and it completes successfully.Consider an example of a transfer of funds between bank accounts:

Hidden text

The program runs on a single thread, but is thread safe.

(def account1 (ref 100))

(def account2 (ref 0))

; для чтения текущего значения 'ref' используется (deref refname):

(deref account1)

100

; @refname - эквивалент (deref refname)

@account2

0

(defn transfer [amount from to]

(dosync

(alter from - amount)

(alter to + amount)))

(transfer 100 account1 account2)

100

There are options for using competitiveness in which the environment is allowed to “relax” to achieve additional performance. For example, imagine that you keep a transaction log for the day. The order of transactions in the log is not important if you know that the final balance will be correct. If you get two installments of $ 100 and $ 50, the order in which they are entered in the journal does not matter. The contribution from two transactions is commutative, and clojure provides a competitive operation

commutejust for such cases.Hidden text

(defn log-deposit [account amount]

(dosync

(println "Depositing $" amount " into account, balance now: "

(commute account + amount))))

(def myaccount (ref 0))

(log-deposit myaccount 100)

(log-deposit myaccount 50)

; (as good as) equivalent to

(log-deposit myaccount 50)

(log-deposit myaccount 100)

Haskell

Haskell's transactional memory is contained in the STM library, which is part of the Haskell Platform. Incorrect use of transactional types is determined at the stage of compilation of the program.

Haskell has a powerful type system. The language separates functions with side effects and pure functions. Any type value

IO tis called an action. To perform an action atomically in Haskell, the action is preceded by the word atomically. Invoking atomically with an action as an argument gives two guarantees:- atomicity - the effect of

atomically actwill be visible to other flows immediately and in its entirety. No other stream is able to see the state inside the atomic action, only the final state. - isolation - during a call, the

atomically actactionactis performed completely independently of other threads. It removes the state of the world at startup and runs in that state.

atomicallyhas type atomically :: STM a -> IO a. Type action STM ais an argument. Like the action IO, the action STMhas side effects, but their range is much narrower. Basically, the action STMis writing or reading a transactional variable TVar a:readTVar :: TVar a -> STM awriteTVar :: TVar a -> a -> STM ()

Consider an example of transferring funds between bank accounts.

Hidden text

import System.IO

import Control.Concurrent.STM

-- Система типов защищает от чтения или записи переменные типа TVar вне транзакции

type Account = TVar Int

withdraw :: Account -> Int -> STM ()

withdraw acc amount = do

bal <- readTVar acc

writeTVar acc (bal - amount)

deposit :: Account -> Int -> STM ()

deposit acc amount = withdraw acc (- amount)

transfer :: Account -> Account -> Int -> IO ()

-- Перевести ’amount’ с аккаунта ’from’ на аккаунт ’to’

transfer from to amount

= atomically (do deposit to amount

withdraw from amount)

showAccount :: Account -> IO Int

showAccount acc = atomically (readTVar acc)

main = do

from <- atomically (newTVar 200)

to <- atomically (newTVar 100)

transfer from to 50

v1 <- showAccount from

v2 <- showAccount to

putStrLn $ (show v1) ++ ", " ++ (show v2)

-- Программа распечатает "150, 150"

Scala

The STM implementation for Scala (ScalaSTM) was inspired by the implementations in Haskell and Clojure. In addition to Scala, ScalaSTM is called from Java and Clojure. The implementation is used in the popular Akka parallel programming framework.

ScalaSTM provides a cell

Refthat is modified exclusively within a transaction. Data structures are based on immutable objects and Refare used by many threads. Consider implementing a doubly linked thread safe list using transactional memory. Unfortunately, I was not able to collect an example on Scala, I leave this activity to the reader.

Hidden text

Full github example: github.com/nbronson/scala-stm/blob/master/src/test/scala/scala/concurrent/stm/examples/ConcurrentIntList.scala

Pointers to the next and previous nodes are made thread safe to implement the shared structure. If there is a possibility that one thread writes a variable at the same time as the other gets access to it (reads or writes), then use

If it

Pointers to the next and previous nodes are made thread safe to implement the shared structure. If there is a possibility that one thread writes a variable at the same time as the other gets access to it (reads or writes), then use

Ref. Define a class for the list node and initialize the head of the list. The list is circular: when creating, the head list pointers point to it. These pointers are never equal null.import scala.concurrent.stm._

class ConcurrentIntList {

private class Node(val elem: Int, prev0: Node, next0: Node) {

val isHeader = prev0 == null

val prev = Ref(if (isHeader) this else prev0)

val next = Ref(if (isHeader) this else next0)

}

private val header = new Node(-1, null, null)If it

xis Ref, it x()gets the value stored in x, and x() = vsets it equal to the value v. Refare read and written only inside the atomic block (transaction). This is checked at compile time. The following demonstrates the use of a transaction.def addLast(elem: Int) {

atomic { implicit txn =>

val p = header.prev()

val newNode = new Node(elem, p, header)

p.next() = newNode

header.prev() = newNode

}

}Scala brings together the ideas of many programming paradigms. It is not surprising that in the field of transactional memory language has distinctive technologies. The aforementioned Akka framework integrates the capabilities of actors and transactional memory, providing agents and transaction agents that will finally blow your mind.

C / C ++ (GCC 4.7+)

Starting with version 4.7, GCC supports transactional memory. The implementation is a libitm runtime library, the -fgnu-tm (-mrtm, -mhle) flag is specified for compilation. The library was developed with an eye on a draft of transactional constructs for C ++ (inclusion of a language into the standard is proposed).

Most hardware transactional memory implementations use the principle of greatest effort. Therefore, practical implementations use a combination of hardware and software transactional memory technologies. Such systems are called hybrid transactional memory systems. These include, in particular, the implementation of GCC.

Keywords are entered into the language:

__transaction_atomic { … }- an indication that the code block is a transaction;__transaction_relaxed { … }- an indication that the unsafe code inside the block does not lead to side effects;__transaction_cancel- explicit cancellation of the transaction;attribute((transaction_safe))- an indication of a transactionally safe function;attribute((transaction_pure))- indication of function without side effects.

To demonstrate the use of transactional memory in C, we will populate a histogram of data in competitive threads.

Hidden text

Full implementation on github: github.com/JIghtuse/tm-experiments/blob/master/histogram/src/hist.c

One transaction block is used per histogram update cycle. The runtime library (or hardware) will determine when and which transactions to restart.

One transaction block is used per histogram update cycle. The runtime library (or hardware) will determine when and which transactions to restart.

#ifdef _USE_TSX

__transaction_atomic {

#endif

for (i = 0; i < d->sz; ++i) {

struct pixel p = d->pixels[i];

unsigned int luminance = rY * p.red + gY * p.green + bY * p.blue;

#if defined _USE_TSX

++histogram[luminance/BORDER];

#elif defined _USE_MUTEX

pthread_mutex_lock(&mut);

++histogram[luminance/BORDER];

pthread_mutex_unlock(&mut);

#endif

}

#ifdef _USE_TSX

}

#endifHardware Implementations (Hardware Transactional Memory, HTM)

Only recently have the hardware implementations of transactional memory begun to appear.Sun Rock (SPARC v9) 2007-2008

The Rock microprocessor from Sun Microsystems was the first microprocessor with hardware support for transactional memory. The 64-bit SPARC v9 architecture processor had 16 cores.

In 2007, the company announced technology support. For the functioning of transactional memory, two new instructions have been added

chkpt, and commitand a new status register cps. The instruction was used to start the transaction, and the instruction to complete it. If you cancel the transaction took place on the transition , at which time you can check registerchkpt commitcpsto determine the reason for the cancellation. Transactions were interrupted due to data conflicts, TLB misses, interrupts, complex instructions. However, in many cases, the use of transactional memory in the Rock processor provided synchronization advantages. In 2008, Sun engineers introduced the Transactional Memory Interface and the Adaptive Transactional Memory Test Platform simulator. After the acquisition of Sun by Oracle, the Rock project was closed: “This processor had two amazing properties: it was incredibly slow and consumed an enormous amount of energy. It was so hot that they had to put on top of 12 inches of cooling fans so that the processor did not overheat. It would be crazy to continue this project. ” 2

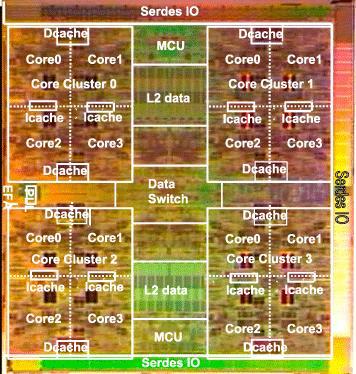

IBM BlueGene / Q (PowerPC A2) 2011

The Sequoia supercomputer with BlueGene / Q architecture was the first commercial system with transactional memory hardware support. The technology operates in a 32 MB cache of the second level of the PowerPC A2 processor (PowerPC BQC 16C). The data in the cache is labeled “version”, and the cache is capable of storing multiple versions of the same data.

The program informs the processor about the start of the transaction, does its work and reports that the transaction must be completed (commit). If other threads changed the same data - creating versions - the cache rejects the transaction and the thread tries to conduct it again. If no other versions of the data appear, the data is modified and the transaction completes successfully.

VersionPC technology in PowerPC A2 is also used for speculative execution. Instead of waiting for a new version of the data, the stream can perform calculations with the available data, speculatively doing useful work. If the data was the same as after the update, the stream completes (commit) work from the cache. Performance is higher: the thread did the work until its final value. Otherwise, the results of speculative work are rejected and the stream performs calculations with the correct values.

Transactional memory support is, in a way, a logical extension of the feature that has long been present in PowerPC processors - “load-link / store-conditional”, or LL / SC. LL / SC is a primitive operation that can be used as a building block for all stream-safe structures. The first part of LL / SC - load-link - is used by the program to retrieve data from memory. The stream then modifies the data and writes it back to memory using store-conditional. The command succeeds if the data has not changed. Otherwise, the program repeats data operations from the beginning.

Transactional memory is LL / SC on steroids: threads perform LL operations on many different memory areas, and the transaction completion operation atomically changes all memory areas or cancels the transaction (like SC).

Ruud Haring, who introduced IBM's work on transactional memory on Hot Chips , admitted that the company faced a number of non-trivial challenges in the implementation. For all its complexity, the implementation has limitations: it does not provide interprocess support for transactions. The problem is not relevant for Sequoia and at the same time allows us to estimate the gain from using transactional memory.

IBM zEnterprise EC12 (Z / Architecture) 2012

The first commercial server to support transactional memory instructions. Using transactional memory, IBM found a 45% performance increase over the z196 machine in the DB2 database and running in virtualized server loads.

IBM Power8 (Power) 2013

Power 8 memory controllers support transactional memory. Support for technology in the Linux kernel appeared since kernel version 3.9.

There wasn’t much information on HTM in Power8, I hope it still appears.

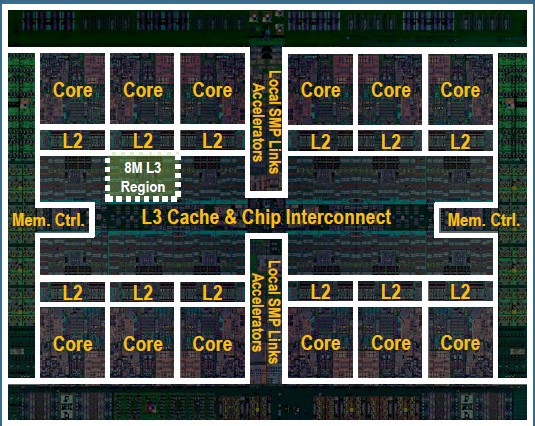

Intel Haswell (x86) 2013

In 2012, Intel announced the introduction of Transactional Syncrhonization Extensions (TSX) in the x86 instruction set. Extensions allow programmers to mark individual sections of code as transactions.

In 2013, with the release of a generation of Haswell processors, hardware support for transactional memory first became available at the consumer level.

Haswell manages read and write sets with cache line granularity by tracking L1 data cache addresses. Conflicts are determined using the cache coherence protocol. Which is logical to assume, since the tasks of identifying transaction conflicts and maintaining cache coherence are very close: if the value changes in one thread, but not in others, then something is amiss.

TSX consists of two parts. Hardware Lock Elision (HLE) provides a simple conversion of lock-based programs to transactional programs, and the resulting programs will be backward compatible with existing processors. Restricted Transactional Memory (RTM) is a more complete implementation of transactional memory.

Hardware Lock Elision

HLE slightly modifies instructions for changing a section of memory. The technology adds prefixes - instructions that do not perform any action, but change the interpretation of the next instruction - to modify the instructions for taking and releasing the lock (XACQUIRE and XRELEASE, respectively).

Between locking and unlocking, the processor keeps track of the memory that reads and writes streams. In the event of a conflict - if two threads modify one data, or one thread reads data that the other writes - the processor cancels the transaction when the lock is released. Otherwise, execution continues normally.

Thus, HLE allows generally accepted lock-based code to be optimistic. Each thread will assume that it has received a lock, while other threads can work simultaneously. As long as it is safe, transactions are not canceled.

The technology is backward compatible with non-HTM processors. Lock management operations remain, but with a special prefix. Haswell processors will consider the prefix and use transactional execution instead of lock manipulation. Any other processor will ignore the prefix and simply control the lock using traditional lock-based behavior. XACQUIRE and XRELEASE instructions already exist, but do not make any sense until used with specific instructions.

HLE allows you to write programs and operating systems that will use transactions on Haswell, increasing concurrency without blocking. The code will work correctly on current processors. As a result, introducing a new feature will be simple and safe.

HLE on a simple example

Operating systems implement locks in the kernel as sections of memory, usually using a processor-specific integer type. The thread taking the lock does something with this piece of memory, for example, increases the value from 0 to 1. To release the lock, the reverse operation is applied (for example, decrement from 1 to 0). Changes are visible to each processor core and each thread in the system, so other threads immediately determine that they cannot take the lock and must wait for it to be released (acquiring the value 0).

With the XACQUIRE / XRELEASE prefixes, the attempt to take the lock succeeds, and the process assumes that the lock belongs to it and continues to work. However, a global change in the lock value does not occur. Thus, a thread with a lock will assume that its value is 1, and the rest of the system threads will still see 0. This allows other threads to simultaneously take a lock, and the processor will lie to them again. The thread will see the lock taken, and other threads will not count so.

This behavior explains the name HLE: instead of changing the value from 0 to 1 and vice versa, it simply remains equal to 0. The “optional” record has disappeared.

With the XACQUIRE / XRELEASE prefixes, the attempt to take the lock succeeds, and the process assumes that the lock belongs to it and continues to work. However, a global change in the lock value does not occur. Thus, a thread with a lock will assume that its value is 1, and the rest of the system threads will still see 0. This allows other threads to simultaneously take a lock, and the processor will lie to them again. The thread will see the lock taken, and other threads will not count so.

This behavior explains the name HLE: instead of changing the value from 0 to 1 and vice versa, it simply remains equal to 0. The “optional” record has disappeared.

Restricted Transactional Memory

RTM requires more involvement: it evades backward compatibility and introduces three new instructions. While HLE implicitly uses transactions, allowing lock-based code to run in parallel, RTM makes the start, end, and interruption of transactions explicit. The thread starts the transaction with the XBEGIN instruction, providing a “fallback” function that is launched if the transaction is interrupted. The transaction ends with the XEND instruction, and the processor conducts the transaction if there were no conflicts or aborts it, passing into the spare function. Transactions are explicitly interrupted in the program by the XABORT instruction.

Thanks to the explicit use of boundaries and “back-up”, RTM allows you to control transactions more than HLE. In the long run, RTM will simplify the implementation of transaction capabilities.

conclusions

Using transactional memory is a viable alternative to existing synchronization techniques that simplifies parallel programming.

For inaccuracies in translation, stylistics, and typos, please write in a personal message.

Notes

- The difference between the concepts of “competitiveness” and “parallelism” was correctly expressed by Rob Pike in his speech “ Concurrency is not Parallelism ”: “Competitiveness - working with many things at a time, parallelism - doing many things at a time”

- Yes, in addition to a bundle of excellent software products (OpenSolaris, MySQL, OpenOffice), Oracle abandoned promising hardware technology

Sources

- Software transactional memory - Wikipedia

- Clojure STM - What? Why? How? - sw1nn

- Software transactional memory - HaskellWiki

- Software Transactional Memory — School of Haskell | FP Complete

- ScalaSTM — Quick Start

- Software Transactional Memory in GCC

- Web Resources about Intel® Transactional Synchronization Extensions

- Rock (processor) — Wikipedia

- Rock (процессор) — Википедия

- Sequoia — BlueGene/Q, Power BQC 16C 1.60 GHz, Custom | TOP500 Supercomputer Sites

- IBM’s new transactional memory: make-or-break time for multithreaded revolution | Ars Technica

- IBM Sequoia named world's fastest supercomputer — E & T Magazine

- You won't find this in your phone: A 4GHz 12-core Power8 for badass boxes • The Register

- Transactional Synchronization Extensions — Wikipedia

- Transactional memory going mainstream with Intel Haswell | Ars Technica

- Intel's Haswell brings transactional memory tech | bit-tech.net

Only registered users can participate in the survey. Please come in.

Do you think transactional memory technology is promising?

- 67.6% yes 310

- 5.2% no 24

- 27% were undecided 124

Is the post interesting, moar required?

- 94.1% yes 369

- 5.8% no 23