Lock-free data structures. Stack evolution

In my previous notes, I described the basis on which lock-free data structures are built, and the basic algorithms for controlling the lifetime of elements of lock-free data structures. This was a prelude to the description of lock-free containers themselves. But then I came across a problem: how to build a further story? Just describe the algorithms I know? This is pretty boring: a lot of [pseudo] code, an abundance of details, important, of course, but very specific. In the end, this is in the published works to which I give references, and in a much more detailed and rigorous presentation. I wanted to tell interestingly about interesting things, to show the ways of developing approaches to the design of competitive containers.

Well, I thought, then the presentation method should be like this: we take some type of container — a queue, map, hash map — and we review the currently known original algorithms for this type of container. Where to begin? And then I remembered the simplest data structure - the stack.

It would seem, what can we say about the stack? This is such a trivial data structure that there is nothing much to say.

Indeed, there are not so many works on the implementation of the competitive stack. But on the other hand, those that are are devoted to a greater degree to approaches than to the stack itself. It is the approaches that interest me.

Lock-free stack

The stack is perhaps the first of the data structures for which the lock-free algorithm was created. It is believed that Treiber was the first to publish it in his 1986 article , although there is evidence that this algorithm was first described in the IBM / 360 system documentation.

Historical retreat

Вообще, статья Treiber'а — своего рода Ветхий Завет, пожалуй, первая статья о lock-free структуре данных. Во всяком случае, более ранних мне не известно. Она до сих пор очень часто упоминается в списках литературы (reference) современных работ, видимо, как дань уважения к родоначальнику lock-free подхода.

The algorithm is so simple that I will give its adapted code from libcds (anyone interested is an intrusive stack

cds::intrusive::TreiberStack):// m_Top – вершина стекаboolpush( value_type& val ){

back_off bkoff;

value_type * t = m_Top.load(std::memory_order_relaxed);

while ( true ) {

val.m_pNext.store( t, std::memory_order_relaxed );

if (m_Top.compare_exchange_weak(t, &val,

std::memory_order_release, std::memory_order_relaxed))

returntrue;

bkoff();

}

}

value_type * pop(){

back_off bkoff;

typename gc::Guard guard; // Hazard pointer guardwhile ( true ) {

value_type * t = guard.protect( m_Top );

if ( t == nullptr )

returnnullptr ; // stack is empty

value_type * pNext = t->m_pNext.load(std::memory_order_relaxed);

if ( m_Top.compare_exchange_weak( t, pNext,

std::memory_order_acquire, std::memory_order_relaxed ))

return t;

bkoff();

}

}

This algorithm is repeatedly disassembled by bones (for example, here ), so I won’t repeat it. A brief description of the algorithm boils down to the fact that we slaughter using the CAS atomic primitive

m_Topuntil we get the desired result. Simple and fairly primitive. I note two interesting details:

- Safe memory reclamation (SMR) is necessary only in the method

pop, since only there we read the fieldsm_Top. Theypusharem_Topnot read into any fields (there is no access by pointerm_Top), so you don’t need to protect Hazard with Pointer. This is interesting because usually SMR is required in all methods of the lock-free container class - Mysterious object

bkoffand its callbkoff()if CAS is unsuccessful

Here on this very

bkoffthing I would like to dwell in more detail.Back-off strategy

Why is CAS unsuccessful? Obviously, because between reading the current value

m_Topand trying to apply CAS, some other thread managed to change the value m_Top. That is, we have a typical example of competition. In the case of a high load (high contention), when N threads execute push/ pop, only one of them will win, the remaining N - 1 will waste CPU time and interfere with each other on CAS (remember the MESI cache protocol ). How to unload the processor when such a situation is detected? You can back off from the main task and do something useful or just wait. That's what back-off strategies are for.

Of course, in the general case, “we will not be able to do something useful,” since we have no idea about a specific task, so we can only wait. How to wait? We note the option

sleep()- few operating systems can provide us with such small wait timeouts, and the overhead of switching contexts is too large - more than the CAS execution time. In the academic environment, the exponential back-off strategy is popular . The idea is very simple:

classexp_backoff {intconst nInitial;

intconst nStep;

intconst nThreshold;

int nCurrent;

public:

exp_backoff( int init=10, int step=2, int threshold=8000 )

: nInitial(init), nStep(step), nThreshold(threshold), nCurrent(init)

{}

voidoperator()(){

for ( int k = 0; k < nCurrent; ++k )

nop();

nCurrent *= nStep;

if ( nCurrent > nThreshold )

nCurrent = nThreshold;

}

voidreset(){ nCurrent = nInitial; }

};

That is, we execute in the cycle

nop(), each time increasing the length of the cycle. Instead, nop()you can call something more useful, for example, The problem with an exponential back-off is simple - hard to find good settings

nInitial, nStep, nThreshold. These constants are architecture and task dependent. In the code above, the default values for them are taken from the ceiling. Therefore, in practice, a pretty good choice for desktop processors and entry-level servers would be

yield()back-off - switching to another stream. In this way, we give our time to other, more successful flows, in the hope that they will do what they need and will not bother us (and we will stop them). Article on IT whether it is to apply the back-off strategy? Experiments show that the art of IT: application in the right tight spot right back-off strategy can yield a performance gain lock-free container at times.

The considered back-off strategies help solve the problem with unsuccessful CAS, but in no way contribute to the implementation of the main task - operations with the stack. Is it possible to somehow combine

push/ pop and back-off so that the back-off strategy actively helps the operation?Elimination back-off

Consider the problem of failed CAS in

push/ popon the other hand. Why is CAS unsuccessful? Because another thread has changed m_Top. And what does this other thread do? Performs push()or pop(). Now, note that the operation pushand popfor the stack are complementary: if one thread executes push(), and the other at the same time - pop(), it does not make sense, in principle, apply to the top of the stack m_Top: push-stream could simply transfer your data pop-flow, with the main property of the stack - LIFO - is not violated. It remains to figure out how to bring both of these flows together, bypassing the top of the stack.

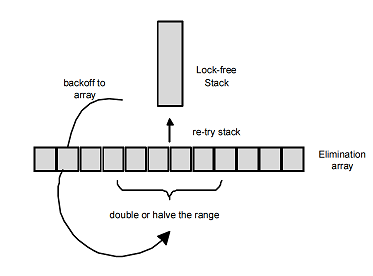

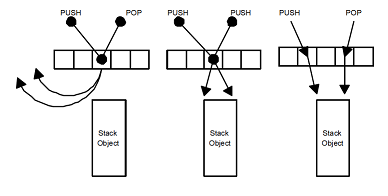

In 2004, Hendler, Shavit, and Yerushalmi proposeda modification of the Treiber algorithm, in which the task of transferring data between push and pop streams without the participation of the top of the stack is solved using a special back-off strategy, which they called the elimination back-off strategy, I would also translate as absorbing back-off strategy).

There is an elimination array of size N (N is a small number). This array is a member of the stack class. If CAS fails, going back-off, the thread creates a handle to its operation (

pushor pop) and randomly selects an arbitrary cell in this array. If the cell is empty, it writes a pointer to its descriptor in it and performs the usual back-off, for example, exponential. In this case, the flow can be called passive.If the selected cell already contains a pointer to the operation descriptor P of some other (passive) stream, then the stream (let's call it active) checks what kind of operation it is. If the operations are complementary -

pushand pop, - then they are mutually absorbed:- if the active thread executes

push, then it writes its argument to the P descriptor, thus passing it to thepoppassive thread operation ; - if the active thread is executing

pop, then it reads from the handle P the argument of the complementary operationpush.

Then the active thread marks the entry in the elimination array cell as processed so that the passive stream when exiting the back-off sees that someone has magically performed its work. As a result, active and passive threads will execute their operations without accessing the top of the stack .

If the operation in the selected elimination array cell is the same (push-push or pop-pop situation), then no luck. In this case, the active thread performs the usual back-off and then tries to execute its

push/ popas usual, CAS of the stack top. Passive stream, having finished back-off, checks its descriptor in elimination array. If the descriptor has a note that the operation is completed, that is, another stream with a complementary operation is found, then the passive stream successfully completes its

push/ pop. All the above actions are performed in a lock-free manner, without any locks, so the real algorithm is more complicated than the one described, but the meaning does not change.

The descriptor contains the operation code -

pushor pop, - and the argument of the operation: in case push- a pointer to the element to be added, in the case of popthe pointer field remains empty ( nullptr), in case of successful elimination, a pointer to the element of the absorbing operation is written to it push.Elimination back-off allows you to significantly unload the stack at high load, and at low, when the CAS top of the stack is almost always successful, this scheme does not introduce any overhead at all. The algorithm requires fine tuning, which consists in choosing the optimal elimination array size, which depends on the task and the actual load. You can also offer an adaptive version of the algorithm, when the size of the elimination array changes within small limits during operation, adapting to the load.

In the case of imbalance, when operations

pushand popgo packs - many pushwithout pop, then many popwithout push- the algorithm will not give any tangible gain, although much loss in performance should not be compared to the classical algorithm Treiber'a.Flat combining

So far, we talked about the lock-free stack. Now consider a regular lock-based stack:

template <classT> classLockStack {std::stack<T *> m_Stack;

std::mutex m_Mutex;

public:

voidpush( T& v ){

m_Mutex.lock();

m_Stack.push( &v );

m_Mutex.unlock();

}

T * pop(){

m_Mutex.lock();

T * pv = m_Stack.top();

m_Stack.pop()

m_Mutex.unlock();

return pv;

}

};

Obviously, with a competitor, its performance will be very low - all calls to the stack are serialized on the mutex, everything is done strictly sequentially. Is there any way to improve performance?

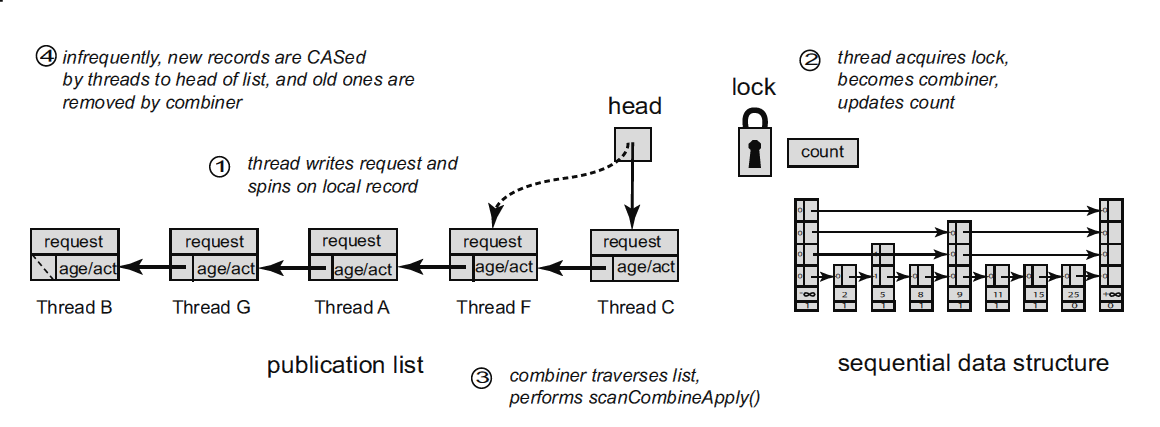

If N threads simultaneously access the stack, only one of them will capture the mutex, the rest will wait for it to be released. But instead of waiting passively on the mutex, the waiting threads could announce their operations, as in elimination back-off, and the winning thread (the owner of the mutex) could perform tasks from their brothers in addition to their work. This idea formed the basis of the flat combining approach , described in 2010 and developing to this day.

The idea of a flat combining approach can be described as follows. With each data structure, in our case, with the stack, a mutex and publication list are associated with a size proportional to the number of threads working with the stack. Each thread on the first call to the stack adds its record to the list of announcements, located in thread local storage (TLS). When the operation is performed, the thread publishes a request in its record - the operation (

pushor pop) and its arguments - and tries to capture the mutex. If the mutex is captured, the flow becomes comb and ynerom (combiner, not to be confused with the harvester e rum): it scans the list of announcements, collects all requests from it, executes them (in the case of the stack, the elimination can be applied here, which was considered earlier), writes the result to the elements of the announcement list, and finally releases the mutex. If the attempt to capture the mutex failed, the thread waits (spinning) at its announcement when the combiner executes its request and places the result in the announcement record.The list of announcements is designed in such a way as to reduce the overhead of managing it. The key point is that the list of announcements rarely changes, otherwise we get a situation where the lock-free publication list is screwed to the side of the stack, which is unlikely to somehow speed up the stack itself. Requests for an operation are entered into an existing entry in the list of announcements, which, I recall, is the property of the streams and is located in TLS. Some list entries may have the status “empty”, meaning that the corresponding thread is not currently performing any actions with the data structure (stack). From time to time, the combiner thins out the list of announcements, excluding long inactive entries (therefore, the entries in the list must have some kind of timestamp), thereby reducing the time it takes to view the list.

In general, flat combining is a very general approach that successfully applies to complex lock-free data structures, allows you to combine different algorithms, for example, add elimination back-off to the implementation of a flat combining stack. The implementation of flat combining in C ++ in the public library is also quite an interesting task: the fact is that in research papers the list of announcements is usually an attribute of each container object, which can be too expensive in real life, since each container must have your own entry in TLS. I would like to have a more general implementation with a single publication list for all flat combining objects, but it is important not to lose speed.

History Spirals

Интересно, что идея анонсирования своей операции перед её выполнением восходит к началу исследований lock-free алгоритмов: в начале 1990-х годов были предприняты попытки построения общих методов преобразования традиционных lock-based структур данных в lock-free. С точки зрения теории эти попытки успешны, но на практике получаются медленные тяжелые lock-free алгоритмы. Идея этих общих подходов как раз и была — анонсировать операцию перед выполнением, чтобы конкурирующие потоки увидели её и помогли её выполнить. На практике такая «помощь» была скорее помехой: потоки выполняли одну и ту же операцию одновременно, толкаясь и мешая друг другу.

Стоило немного переставить акценты — с активной помощи до пассивного делегирования работы другому, более удачливому потоку — и получился быстрый метод flat combining.

Стоило немного переставить акценты — с активной помощи до пассивного делегирования работы другому, более удачливому потоку — и получился быстрый метод flat combining.

Conclusion

It is amazing that such a simple data structure as a stack, where there would seem to be nothing to write about, made it possible to demonstrate so many interesting approaches to the development of competitive data structures!

Back-off strategies are applicable everywhere in the construction of lock-free algorithms: as a rule, each operation is enclosed in an endless loop according to the principle of “we do not succeed”, and at the end of the loop body, that is, exactly when the iteration failed, it will not be superfluous put back-off, which can reduce the pressure on the critical container data under heavy load.

Elimination back-off is a less general approach applicable to the stack and, to a lesser extent, to the queue.

Developed in recent years, flat combiningis a special trend when building competitive containers - both lock-free and fine grained lock-based.

I hope we will meet with these techniques in the future.