Testing on the 1C: Enterprise 8 platform

Introduction.

This article is purely informational; it contains no advertising and is certainly not an attempt to make money. Its purpose is to describe an approach to testing configuration code, briefly introduce a tool that has been used successfully, hear feedback, and draw conclusions. Perhaps this is reinventing the wheel, and someone will share the right approach to testing on this platform.

Development on the 1C: Enterprise platform is not the most difficult process. The hardest part is to accept the concept of writing code in your native language :)

But although version 8 of the platform made a real qualitative leap in the design of the platform and its built-in language (for example, the appearance of the MVC concept for configuration metadata objects), many sloppy coders keep churning out megabytes of "garbage" that somehow miraculously works, at least within the limits of what its author could or bothered to check.

I do not have rich "experience" across various franchisees. In all my years I have worked in a single department that produces boxed products. The products are sold, customers find bugs and terrorize tech support, tech support runs to the developers, and the developers happily fix the bugs they are shown while introducing various new ones. In short, there is work for everyone, and there will be for a long time.

How much time goes into fixing bugs versus building new functionality is known only approximately. Bugs found by customers are treated as an inevitable evil, and to reduce the time spent fixing them, teams resort to intensive manual testing of releases and to hiring and training competent developers. Manual testing by the QA department is laborious, and there is no way to pin down the golden mean between testing depth and time spent on testing. As for having a huge number of talented developers on hand, there is nothing to discuss.

In "grown-up" programming languages, people try to solve such problems with testing at every level: unit tests at the developer level, then functional and regression tests, and finally integration tests. In the most interesting cases, tests are run on each

Unfortunately, 1C does not spoil developers on its platform with any worthy tools; well, at least they made a configuration repository.

A few years ago, when starting a new project, I got tired of regularly stepping on the same rake. Management agreed to give me time to develop a home-grown testing system as I saw it, and the work got under way.

Application.

On one project alone, this system saved me at least a year of time that would have been spent in the debugger. Another project has used it for several years for functional tests of a huge number of discount and payment options. Three more projects are starting to use it. In general, of course, introducing such a system "from below" is like banging your forehead against the wall of a skyscraper. But if you keep chipping away every day, a result will appear sooner or later. The main things are a successful example and support from above.

Description.

The system works on a simple principle: "execute the method, compare the result with the reference". The only limitation, very unpleasant though natural, is that the method must be exported (public). In principle, you could dump the source code to files, parse out the names of the listed methods, mark them as exported, and load the code back. But that approach, first, complicates the testing itself and, more importantly, creates pitfalls in the form of name clashes between methods that "suddenly" become visible from the outside. We therefore consider the violation of encapsulation less critical than the problem of untested code.
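The principle can be illustrated outside the platform. The following is a minimal Python sketch, not the system's actual code: `run_test` and `apply_discount` are hypothetical names, and the method under test stands in for an exported configuration method.

```python
from typing import Any, Callable, Mapping


def run_test(method: Callable[..., Any], params: Mapping[str, Any], reference: Any) -> bool:
    """Call the method under test and compare its result with the saved reference."""
    actual = method(**params)
    return actual == reference


# Hypothetical method under test; it plays the role of an exported (public) method.
def apply_discount(price_cents: int, percent: int) -> int:
    return price_cents * (100 - percent) // 100


# The reference value would normally be saved from an earlier, trusted run.
passed = run_test(apply_discount, {"price_cents": 20000, "percent": 15}, reference=17000)
print("passed" if passed else "failed")
```

Because the runner only needs a callable and its arguments, the same loop can serve unit, functional, and integration tests; only the size of the method being called changes.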

Based on this simple principle, the system supports:

- unit tests;

- functional tests;

- regression tests *;

- integration tests;

* Regression testing is not finished: the start and end timestamps of each test are recorded, but there is no proper report on how the difference between them changes.
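To make the missing report concrete, here is a rough Python sketch of what it might compute from the recorded timestamps. Everything in it is an assumption for illustration: the function names, the data layout (test name mapped to a start/end pair), and the 20% threshold.

```python
from datetime import datetime


def duration_seconds(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds()


def slower_tests(previous: dict, current: dict, threshold: float = 0.2) -> dict:
    """Return tests whose duration grew by more than `threshold` (as a fraction)."""
    slower = {}
    for name, (start, end) in current.items():
        if name not in previous:
            continue  # no earlier run to compare against
        old = duration_seconds(*previous[name])
        new = duration_seconds(start, end)
        if old > 0 and (new - old) / old > threshold:
            slower[name] = (old, new)
    return slower


# Hypothetical timestamps from two runs of the same tests.
previous_run = {
    "test_400": (datetime(2024, 1, 1, 10, 0, 0), datetime(2024, 1, 1, 10, 0, 2)),
    "test_401": (datetime(2024, 1, 1, 10, 0, 2), datetime(2024, 1, 1, 10, 0, 3)),
}
current_run = {
    "test_400": (datetime(2024, 1, 2, 10, 0, 0), datetime(2024, 1, 2, 10, 0, 3)),
    "test_401": (datetime(2024, 1, 2, 10, 0, 3), datetime(2024, 1, 2, 10, 0, 4)),
}
report = slower_tests(previous_run, current_run)
print(report)
```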

Architecture.

Since the system was created back in the days of platform 8.1, it has no support for managed forms: I no longer need it, and the current projects do not need it yet. The main function of the system is testing code, though, so the only drawback is that the testing data processor itself is not written with managed forms.

The main interface for creating and running tests is an external data processor. It is launched directly in the infobase whose configuration is to be tested.

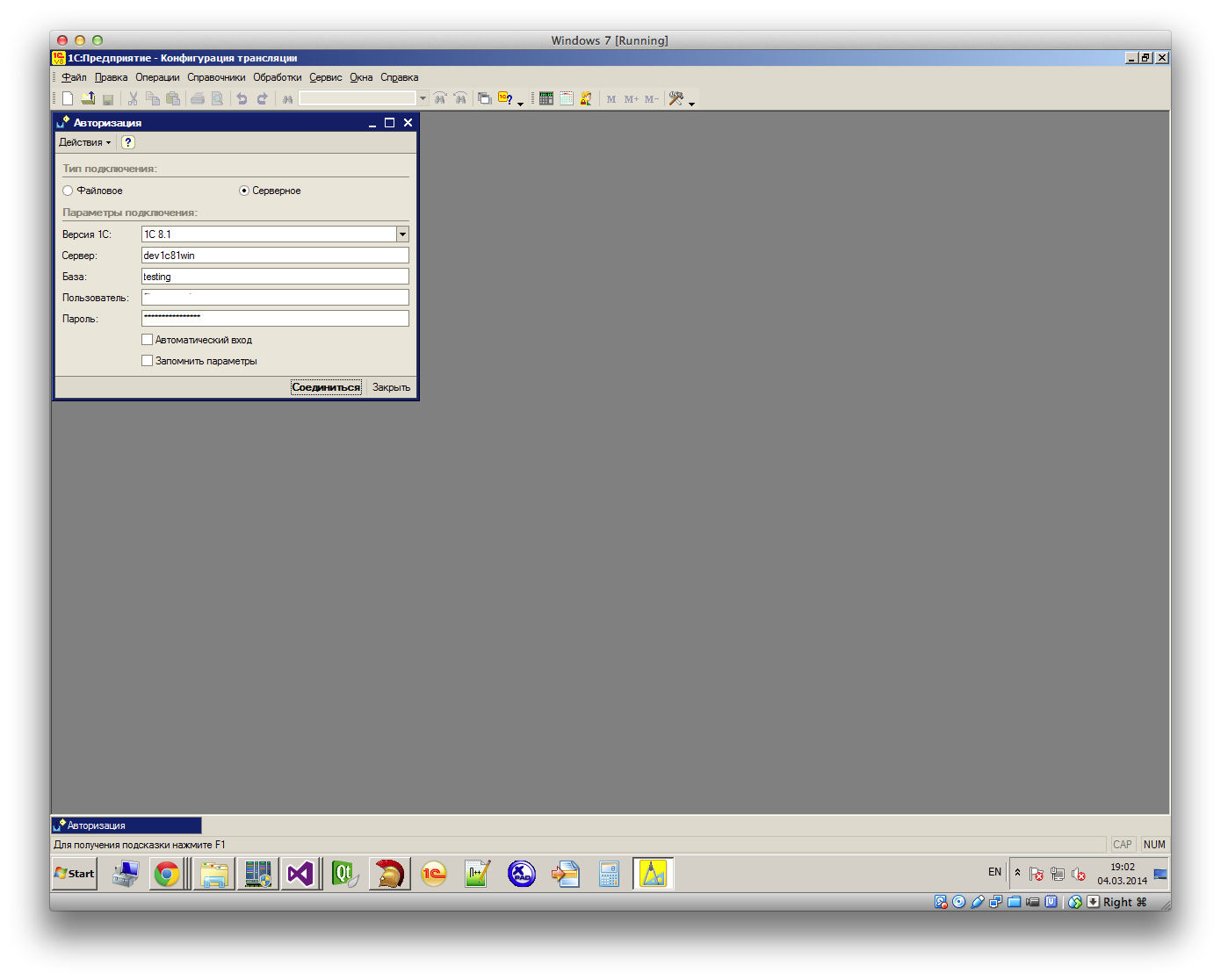

The tests themselves are stored in a separate infobase, to which the external data processor connects and logs in:

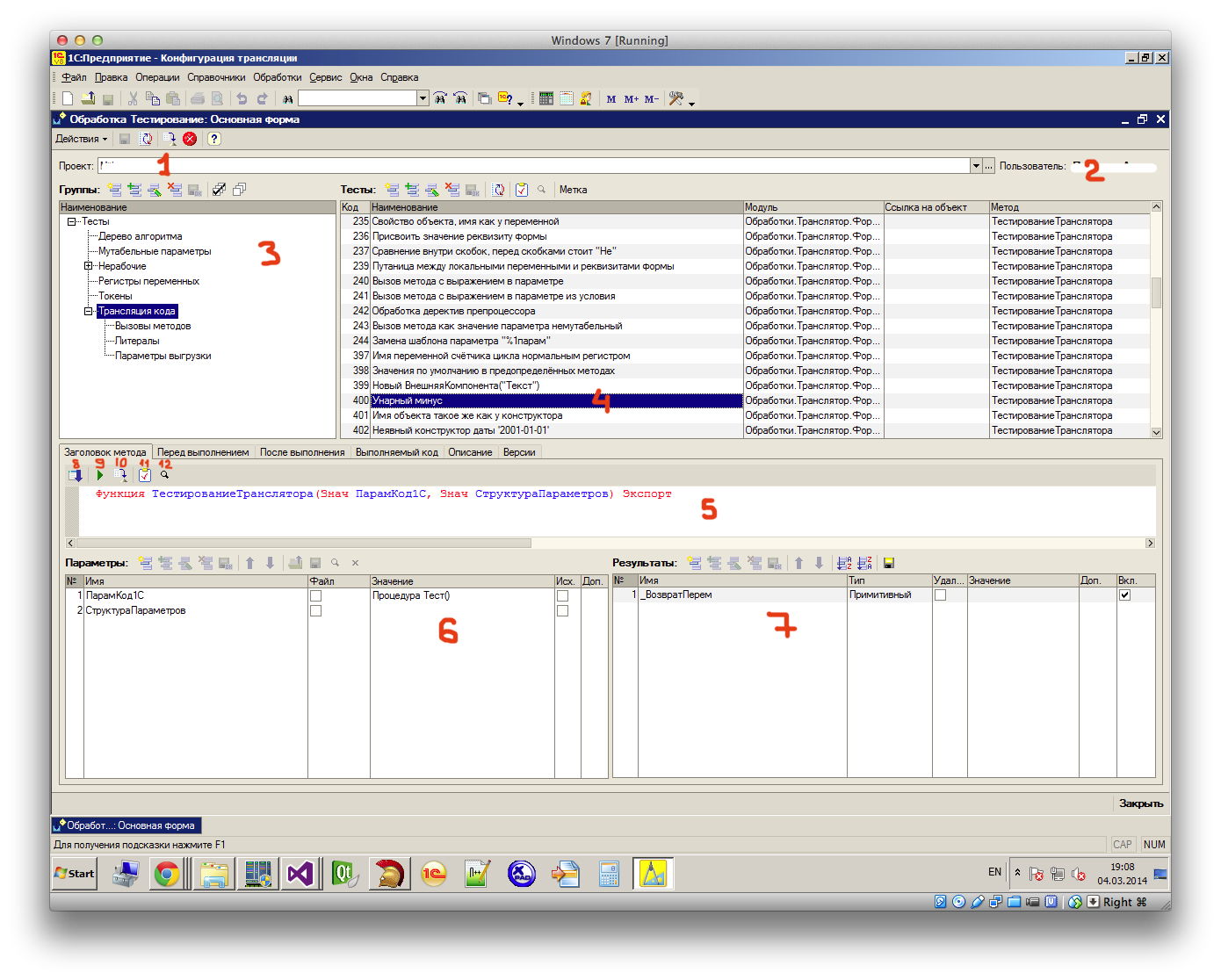

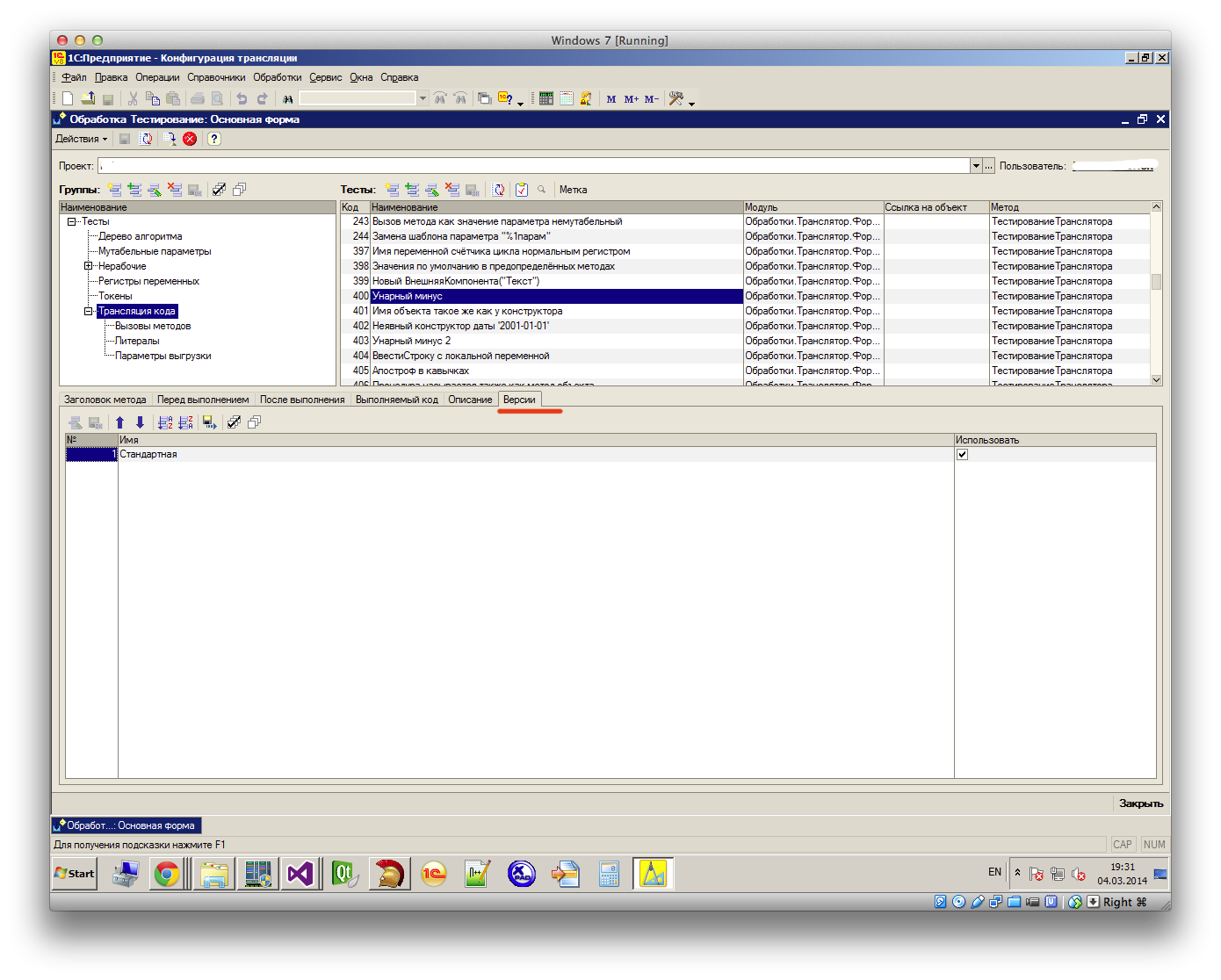

The working form looks like this:

1. Current project

2. Username of the testing system

3. Tests can be divided into groups; this is convenient when setting up automatic testing and helps structure the tests themselves.

4. The test with code 400 is selected. The row shows the name of the test and indicates that the method under test is in the form of the built-in data processor "Translator"; the last column shows the name of the method under test.

5. The full signature of the method under test is pasted here; pressing button 8 parses it automatically into incoming parameters (6) and results (7).

6. Incoming parameters of primitive and reference types are set here; you can also specify a path to a file if the File box is checked.

7. The selected test has a single result, the return value of the function; the name _ReturnRem is predefined for it.

8. Parses the method signature into incoming parameters and returned results.
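The signature parsing behind button 8 can be approximated as follows. This is a hedged Python sketch rather than the tool's real parser: `parse_signature` is a hypothetical helper, it handles only the English keyword variants (`Function`, `Procedure`, `Val`, `Export`), and it assumes default values contain no commas or parentheses. The result name `_ReturnRem` is taken from the description of field 7.

```python
import re

SIGNATURE_RE = re.compile(
    r"^\s*(?P<kind>Function|Procedure)\s+(?P<name>\w+)\s*"
    r"\((?P<params>.*)\)\s*(?P<export>Export)?\s*$",
    re.IGNORECASE,
)


def parse_signature(signature: str) -> dict:
    """Split a method signature into incoming parameters and result names."""
    match = SIGNATURE_RE.match(signature)
    if match is None:
        raise ValueError(f"Unrecognized signature: {signature!r}")

    params = []
    for raw in match["params"].split(","):
        raw = raw.strip()
        if not raw:
            continue  # empty parameter list
        name_part, _, default = raw.partition("=")
        words = name_part.split()
        params.append({
            "name": words[-1],
            "by_value": len(words) == 2 and words[0].lower() == "val",
            "default": default.strip() or None,
        })

    is_function = match["kind"].lower() == "function"
    return {
        "name": match["name"],
        "exported": match["export"] is not None,
        "params": params,
        # Only functions return a value; its name is predefined.
        "results": ["_ReturnRem"] if is_function else [],
    }


# Hypothetical signature used purely as sample input.
parsed = parse_signature('Function Translate(Val Text, Language = "en") Export')
print(parsed)
```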

To follow the rest of the description, we need to digress to field 5, which has several more tabs.

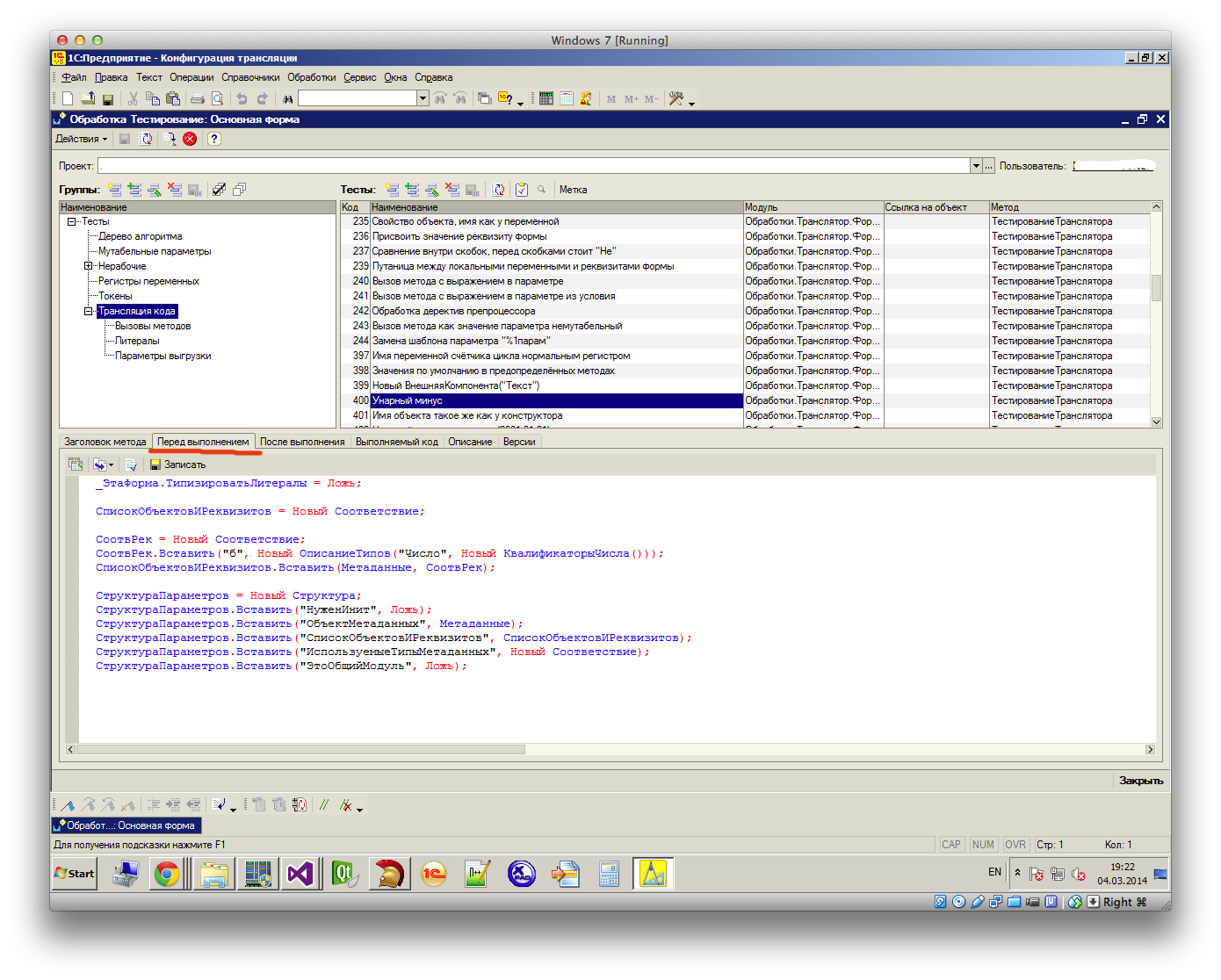

Before execution: this field is for executing arbitrary code before the method under test is called. You can modify the incoming parameters as you like, or prepare collections or infobase objects.

A similar field, After execution, lets you process the results of the call before they are compared with the previously saved reference values.
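The role of the two fields can be sketched as a pair of optional hooks around the call. The Python below is only an illustration under assumed names (`run_with_hooks`, `create_order`, and both hook functions are hypothetical): the "before" hook prepares the incoming parameters, and the "after" hook strips a volatile field so the result can be compared with a stable reference.

```python
import time
from typing import Any, Callable, Optional


def run_with_hooks(
    method: Callable[..., Any],
    params: dict,
    before: Optional[Callable[[dict], None]] = None,
    after: Optional[Callable[[Any], Any]] = None,
) -> Any:
    prepared = dict(params)          # do not mutate the stored test parameters
    if before is not None:
        before(prepared)             # may add or change incoming parameters
    result = method(**prepared)
    if after is not None:
        result = after(result)       # normalize the result before comparison
    return result


# Hypothetical method under test: its result contains a timestamp that changes every run.
def create_order(customer: str, prices: list) -> dict:
    return {"customer": customer, "total": sum(prices), "created_at": time.time()}


def add_test_prices(params: dict) -> None:
    params["prices"] = [100, 250]


def drop_timestamp(result: dict) -> dict:
    return {key: value for key, value in result.items() if key != "created_at"}


result = run_with_hooks(
    create_order, {"customer": "ACME"}, before=add_test_prices, after=drop_timestamp
)
reference = {"customer": "ACME", "total": 350}
print(result == reference)
```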

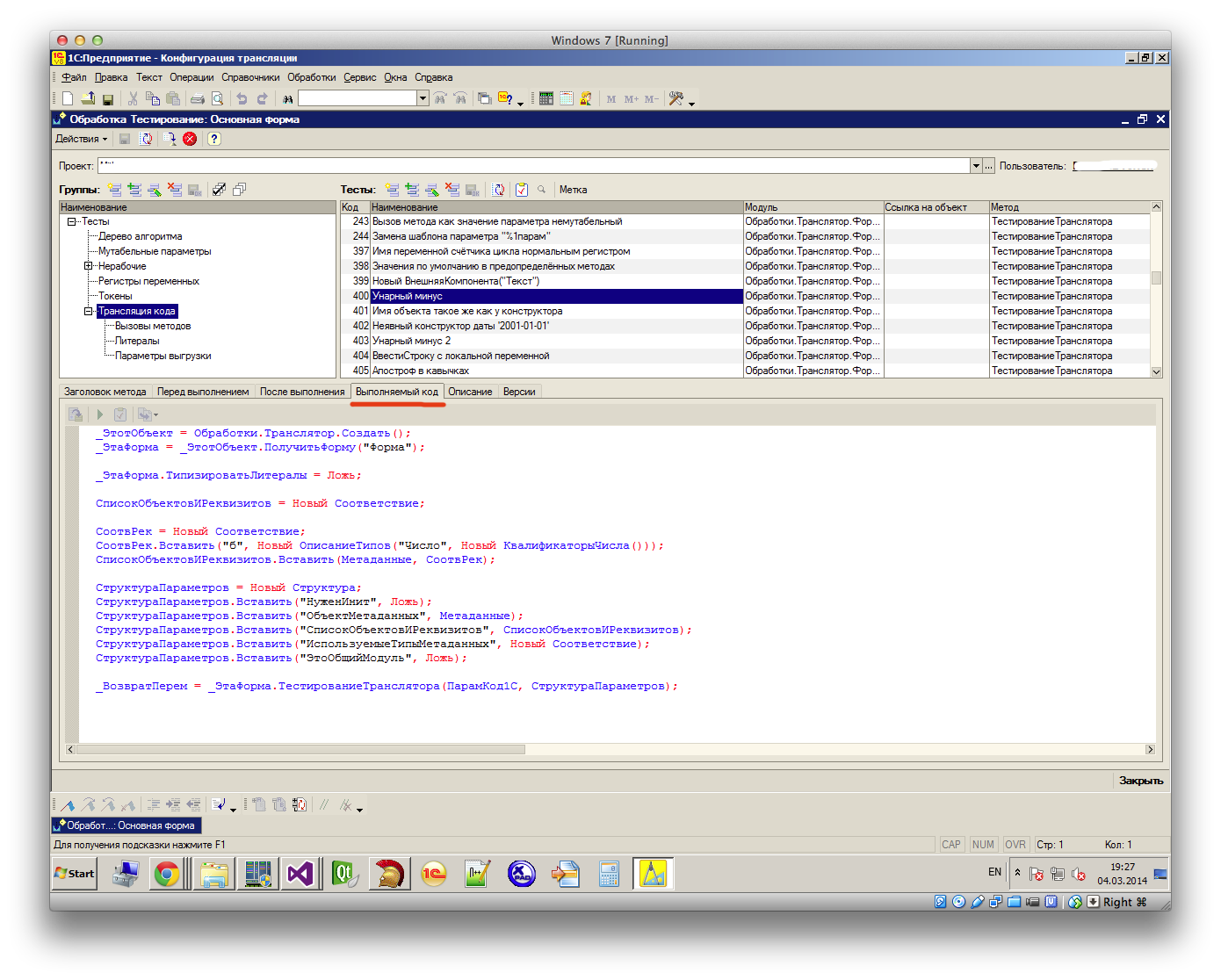

Executable code: this tab shows all the code that will be executed when the test starts.

Description: here you can describe in detail what the test is about.

And finally, the Versions tab: it lists all configuration versions of the current project that are registered in the test database. The author of a test can indicate on which versions the test should pass and for which it is not applicable. This information is used for automatic testing.
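How automatic testing might use this information can be shown with a small filter. The Python sketch below assumes a simple data layout (a test record carrying the set of versions it applies to); the class, field names, and version strings are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    code: int
    name: str
    versions: set = field(default_factory=set)  # versions on which the test must pass


def applicable_tests(tests: list, version: str) -> list:
    """Select only the tests marked as applicable to the given configuration version."""
    return [test for test in tests if version in test.versions]


tests = [
    TestCase(400, "Translate text", {"1.0", "1.1"}),
    TestCase(401, "New discount rule", {"1.1"}),
]
selected = [test.code for test in applicable_tests(tests, "1.0")]
print(selected)
```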

9. Run the test. The code shown on the Executable code tab is executed, and field 7 is populated with the results.

10. Save the results obtained by pressing button 9 as the reference.

11. Run the test and compare the results with the previously saved standard.

12. View saved reference values.

Example.

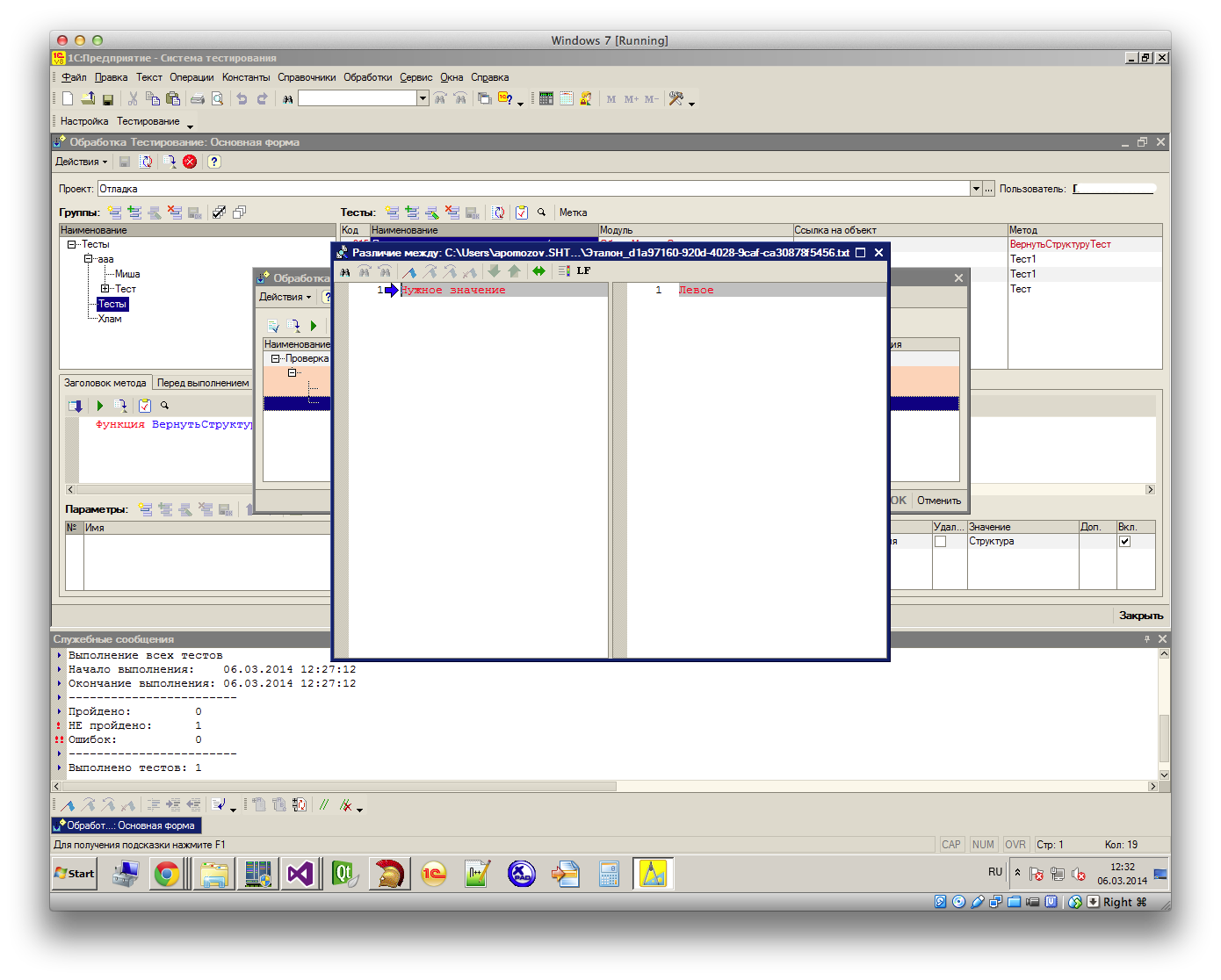

This is what the interactive message about a failed test looks like:

You can see that the return value is of type Structure; the window lists the structure fields whose values differ from the saved reference.
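A field-by-field comparison of this kind can be sketched in Python, with a dictionary standing in for the Structure type. The function name, the `MISSING` marker, and the sample data are assumptions made for the example.

```python
MISSING = object()  # marks a field that is absent on one side


def differing_fields(actual: dict, reference: dict) -> dict:
    """Map each differing field name to a (reference value, actual value) pair."""
    differences = {}
    for key in sorted(set(actual) | set(reference)):
        expected = reference.get(key, MISSING)
        got = actual.get(key, MISSING)
        if expected != got:
            differences[key] = (expected, got)
    return differences


reference = {"Total": 350, "Currency": "USD", "Discount": 10}
actual = {"Total": 340, "Currency": "USD", "Comment": "manual"}

differences = differing_fields(actual, reference)
for name, (expected, got) in differences.items():
    print(name, "reference:", expected, "actual:", got)
```

Reporting only the fields that differ keeps the failure message short even when the structure is large.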

Text values can be compared with the diff-tool built into the platform:

Summary.

In principle, the functionality described is enough to solve most tasks of testing a specific configuration in semi-automatic mode. In addition, the server side can be configured to test a set of configurations (within one project) automatically on a schedule, using scheduled jobs. The system can take the current version of the configuration from the configuration repository, deploy it to the test infobase, and run the specified test groups. On completion, the designated observers receive an email with detailed test results.

The system was not created for sale and is unfinished in many places, but even what already exists helps avoid at least the silly mistakes of the "fixed in one place, broke in three others" kind.
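The scheduled run amounts to a short pipeline: fetch, deploy, run groups, notify. The Python sketch below shows only that control flow; every step is passed in as a callable and replaced by a stub, since the real steps depend on the platform. All names, the version string, and the address are hypothetical.

```python
from typing import Callable, Dict, List


def scheduled_run(
    fetch_version: Callable[[], str],
    deploy: Callable[[str], None],
    run_group: Callable[[str], Dict[str, bool]],
    notify: Callable[[str, str], None],
    groups: List[str],
    observers: List[str],
) -> str:
    version = fetch_version()            # current version from the repository
    deploy(version)                      # roll it onto the test infobase
    lines = [f"Configuration version: {version}"]
    for group in groups:
        outcomes = run_group(group)      # test name -> passed?
        failed = [name for name, ok in outcomes.items() if not ok]
        suffix = f" ({', '.join(failed)})" if failed else ""
        lines.append(
            f"{group}: {len(outcomes) - len(failed)} passed, {len(failed)} failed{suffix}"
        )
    report = "\n".join(lines)
    for observer in observers:
        notify(observer, report)         # e.g. send an email
    return report


# Stubs standing in for the real steps.
deployed, sent = [], []
fake_results = {
    "discounts": {"test_400": True, "test_401": False},
    "payments": {"test_500": True},
}
report = scheduled_run(
    fetch_version=lambda: "1.2.3",
    deploy=deployed.append,
    run_group=lambda group: fake_results[group],
    notify=lambda observer, text: sent.append((observer, text)),
    groups=["discounts", "payments"],
    observers=["qa@example.com"],
)
print(report)
```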