Search for images by fragment

In his speech, Alexander Krainov told how Yandex.Pictures clustered duplicate images. In other words, duplicate pictures were isolated and filtered. Where the main idea was to highlight the contours of the image using the DoG filter , then find the key points and get their descriptors.

Clustering duplicates comes down to matching descriptor matches. This is the “digital format” of key points from the article Clustering Duplicates in Image Search .

But I would like to know a little more about what these descriptors are and how to search for them.

Descriptors

Key points are points that ideally should not change when changing or modifying an image.

Descriptors are, in general terms, a convolution of characteristics and the presentation of key points in a format accessible for checking for coincidence.

The search for effective allocation of key points, their descriptors, as well as methods for checking for matches, is still on the agenda.

But you have to start somewhere, so let's turn to the OpenCV library for help .

The first thing that catches your eye is the SURF descriptors .

Which promise extraordinary accuracy. Which is confirmed after the tests.

But there are a few nuances.

SURF descriptors are vectors of 128 (or 64) numbers per key point. Matching is performed by searching for the closest point (or even two). And the closer the point, the better.

It turns out that for an image with 1,000 key points, 128,000 floating-point numbers are required.

In addition, the detection of points is a rather complicated operation and requires considerable time. What does not allow to effectively use this algorithm on small devices. In addition, the algorithm itself is closed and patented (in the USA).

After getting acquainted with SIFT and SURF, I wanted something simple to implement with the ability to use it on a small server or device.

Perceptual hashes

And perceptual or perceptual hashes were found .

Which task is that with a small change in the image, the hash also changes slightly.

A match check is performed by counting the number of different positions between two hashes. Those. Hamming distance calculation . And the smaller it is, the less different elements are, the greater the coincidence.

This method is designed to search for full or partial duplicate images. Those. with a significant change in the image format, or interference with the content makes it impossible to check for coincidence, since the hashes will be noticeably different.

In other words, perceptual hashes are not suitable for finding half duplicates.

Based on this, an attempt was made to combine SURF descriptors and perceptual hashes in order to solve the problem of searching for fuzzy half duplicates.

The method is based on the clustering of key points in such a way that the centers of the clusters coincide on the original and cropped image. And then a fragment of the image was extracted around the key point and received a perceptual hash. As a result, one image gave about 200 crop of slices and their hashes.

This method has two and a half significant drawbacks:

1. low speed of checking for coincidence on a large set of hashes. Searching 1 million hashes took 20 seconds

2. low speed of obtaining key points

3. low accuracy, a lot of false positives, high requirements for the target base, not suitable for all images, requires pre-moderation, etc.

The very idea that a certain number of fingerprints would be allocated from the image, which could be simply compared with each other, bewitched.

Therefore, it was decided to try to find solutions to these problems.

Slow sampling rate

The complexity of finding and calculating Hamming distances on a large dataset is an independent problem and requires an independent approach.

After some research on the subject, it turned out that there are many solutions to this problem.

The most effective available algorithm called HEngine was selected and implemented , which allowed ~ 60 times to speed up the selection from the database.

SURF and key points

Since we already work with binary hashes, or fingerprints, and we consider the coincidence to be Hamming's distance, it is strange to use such a colossus as SURF and it would be worth considering other methods for obtaining key points and descriptors.

In general, OpenCV provides two types of descriptors:

- Floating-point

descriptors - And binary descriptors

Here, binary descriptors are more suitable than any other for our task, because they also use the Hamming distance to check for matches.

ORB: an efficient alternative to SIFT or SURF

OpenCV already has an excellent alternative to SURF, which is not only open and without licensing restrictions, it is even easier and faster [1].

ORB is the Oriented FAST and Rotated BRIEF - an improved version and combination of the FAST cue point detector and BRIEF binary descriptors.

ORB has one significant nuance for us - the size of descriptors is 32 bytes per point.

A match check is the sum of Hamming distances for each byte of the descriptor (the first is compared with the first, the second with the second, etc.).

In our task, it is assumed that one point gives one value, and here it turns out 32, which must also be summed up with the corresponding ones by index (first with first, second with second, etc.) in the target database.

Since our hash is a 64-bit number, it takes 32 bytes of the descriptor to squeeze into 8 bytes and at the same time do not lose much in accuracy.

After some tests, it was decided to try to represent these 32 bytes as a 16x16 bit matrix. And then pass this matrix through the PHash perceptual hash . The result should have been just a 64 bit number.

Now we come to the full description of the concept.

How indexing works

1. Get the key points and ORB descriptors, select the number of required points in the image.

2. The resulting descriptors of 32 bytes are presented in the form of a 16x16 bit matrix.

3. Convert the matrix to a 64bit number using PHash.

4. Save 64bit fingerprints in MySQL.

5. Select the required Hamming distance and run the HEngine daemon, which will perform the search.

How search works

We perform identical steps 1 to 3 from indexing, but only on the requested image.

4. We make a request to the HEngine daemon, which returns all the hashes in the given limit.

5. If necessary, filter out irrelevant results.

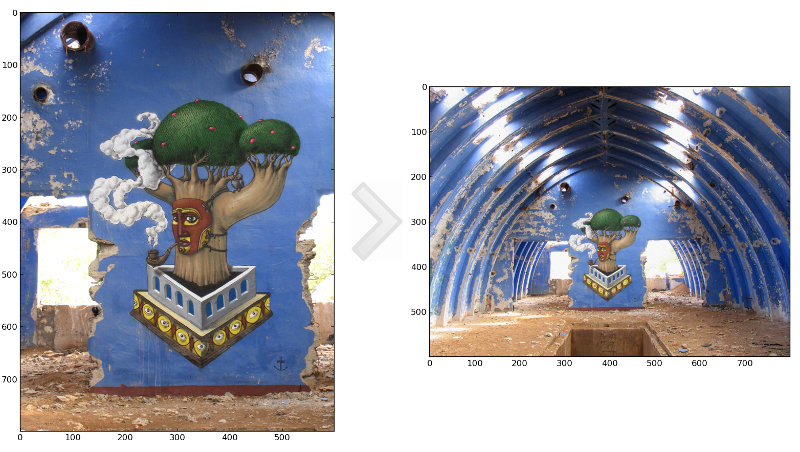

Fig 1. Hamming distance limit 7. Gray dots are found key points. Green - matching points. Red - matching the standard ORB exhaustive search.

What is the result?

As a result, we managed to solve several problems:

- find a way to quickly calculate the Hamming distance on a large data set

- get rid of a large and inconvenient SURF

- increase the speed of highlighting key points and their fingerprints

- and also not lose much in accuracy.

This allowed us to find images by their fragment, as well as fuzzy half-duplicates without large computational resources.

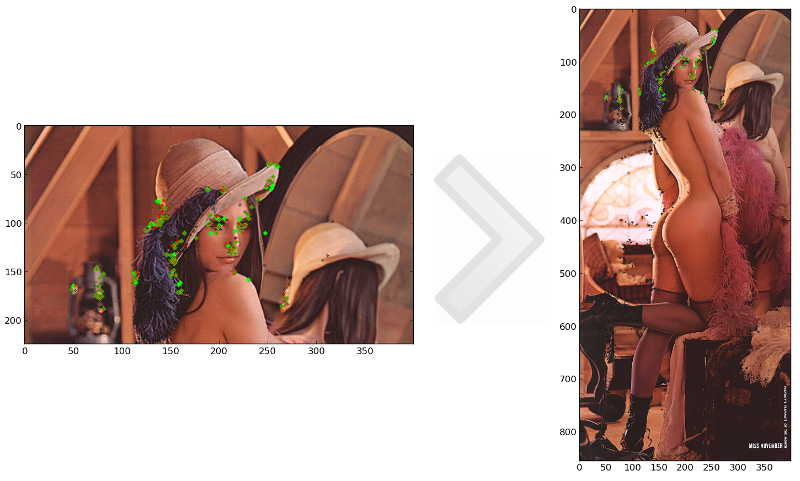

Fig 2. Sweet by Friday

Considering that depending on the settings, the described algorithm through binary descriptors ORB gives about 1,000 hashes per picture.

On a base of 1,000 images, 1,000,000 hashes are obtained in the database. Finding and clustering all duplicates takes a minute and a half. Includes exhaustive search and matching matching hashes.

References

[1] Ethan Rublee, Vincent Rabaud, Kurt Konolige, Gary R. Bradski: ORB: An efficient alternative to SIFT or SURF. ICCV 2011: 2564-2571.

[2] en.wikipedia.org/wiki/SURF

[3] ru.wikipedia.org/wiki/Hamming_Distance

[4] phash.org

[5] habrahabr.ru/post/143667 Duplicate clustering in Yandex.Pictures

[6] habrahabr .ru / post / 211264 HEngine - hash search algorithm within a given Hamming distance on a large dataset

[7] github.com/valbok/img.chk My fragment search prototype