Thinker Thing 3D Printer Prints Your Thoughts

Imagine being able to print objects just by thinking about them. This is no longer a far-fetched dream but a very real technology, at least for customers of the Chilean startup Thinker Thing. The technology has enormous potential, but it is still raw. You can't just start a 3D printer, close your eyes, picture the Flying Spaghetti Monster, a marshmallow sailor or a pink unicorn (pick one), go brew some tea and expect a result. To get there, you will have to strain your gray matter quite a bit.

As you know, engineers and designers have been using 3D printers for more than two decades. More recently, these devices have become so much cheaper that ordinary people can afford them. The possibilities seem endless: printing food, living tissue, weapons and even batteries. The revolution has happened! Hooray, comrades! The only catch is that most vendors have so far concentrated on refining the hardware, while much less attention goes to 3D printer software. The Chileans decided to go further and built a mechanism that lets users unlock their inner creativity. With it, people who are too lazy or unable to model objects in 3D design software, and who don't want to download ready-made models, can do what everyone else can only dream of.

It's not a bird, and it's not a plane. This palm-sized piece of orange plastic resembles the limb of a toy tyrannosaurus. It may not look very impressive, but the fact remains: it is the first object printed on a 3D printer using the power of thought.

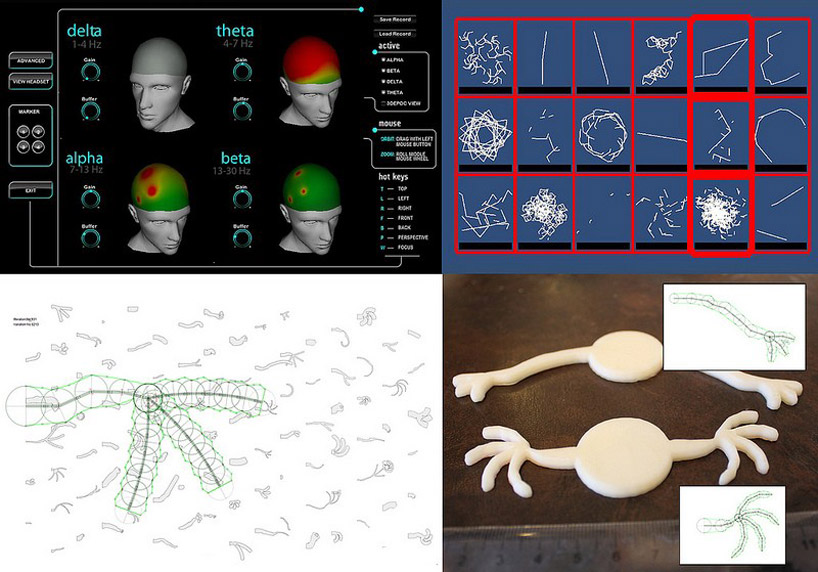

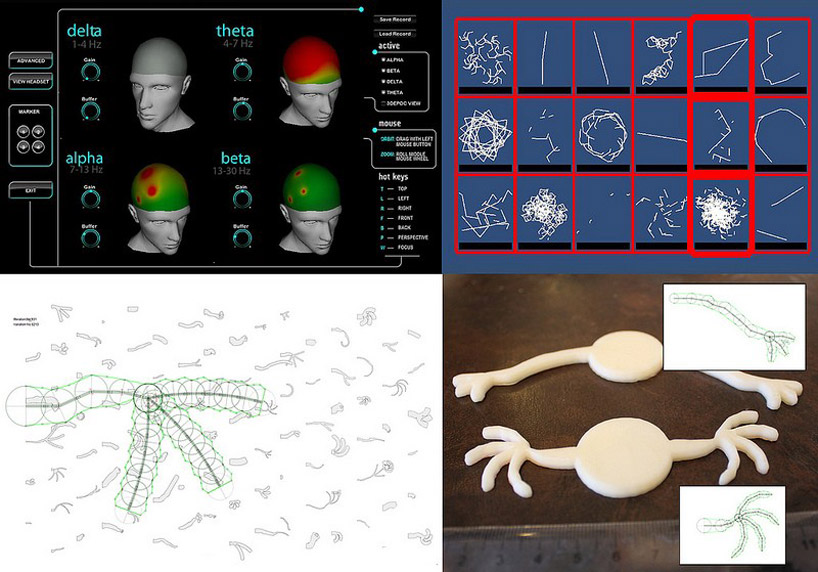

So how does this devilish Thinker Thing contraption work?

The system is built around the Emotional Evolutionary Design (EED) software suite, which lets a 3D printer interpret its users' thoughts, and the Emotiv EPOC, a $300 electroencephalograph headset that monitors brain activity through fourteen sensors touching the scalp. As you know, each state of the brain (excitement, boredom, joy and so on) is accompanied by characteristic patterns of activity. The EED and Emotiv EPOC combination reads and interprets these patterns, so this hardware-software complex is, quite literally, able to create order out of chaos.
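Neither Thinker Thing nor Emotiv explains here how those "patterns" are detected, so the following is only a generic illustration of turning raw EEG into a number a program can react to. It sums the power in the alpha (8-13 Hz) and beta (13-30 Hz) bands with a plain discrete Fourier transform and returns their ratio, one simple feature sometimes used as a rough arousal proxy. The 128 Hz sample rate, the band edges and the names `band_power` and `beta_alpha_ratio` are assumptions for illustration, not part of EED or the Emotiv software.

```python
import cmath
import math

SAMPLE_RATE = 128  # Hz; assumed headset sampling rate (illustrative)


def band_power(samples: list[float], low_hz: float, high_hz: float,
               fs: int = SAMPLE_RATE) -> float:
    """Sum of squared DFT magnitudes for bins with low_hz <= f < high_hz."""
    n = len(samples)
    power = 0.0
    for k in range(n // 2 + 1):
        if low_hz <= k * fs / n < high_hz:
            # Naive DFT coefficient for bin k (frequency k * fs / n Hz).
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(samples))
            power += abs(coeff) ** 2
    return power


def beta_alpha_ratio(samples: list[float], fs: int = SAMPLE_RATE) -> float:
    """Beta (13-30 Hz) power over alpha (8-13 Hz) power: a crude arousal proxy."""
    alpha = band_power(samples, 8.0, 13.0, fs)
    beta = band_power(samples, 13.0, 30.0, fs)
    return beta / alpha if alpha > 0 else math.inf


if __name__ == "__main__":
    # One second of a pure 10 Hz sine wave, i.e. a signal dominated by alpha.
    calm = [math.sin(2 * math.pi * 10 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
    print(beta_alpha_ratio(calm))  # a value very close to 0
```

On a real headset this would run over a sliding window for each electrode; the naive Fourier sum is quadratic and was chosen only because it needs nothing beyond the standard library.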

3D modeling process with Emotional Evolutionary Design

The EED and Emotiv EPOC combination in action

The user is shown random forms, and the system analyzes their reaction in real time. The software singles out, from the whole set, the objects that drew a positive emotional reaction. The user's favorite forms grow on the screen, while the rest shrink. The largest forms can be combined with one another to build model components. The result is a unique 3D model that is ready for printing. The developers stress that even a three- or four-year-old child who has not yet learned to hold a pencil can handle such a system, which is more than can be said for AutoCAD (loving mothers, rejoice).
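The grow-and-shrink loop described above can be sketched in a few lines. This is not EED's code: it assumes each form is a vector of shape parameters and that the headset output has already been reduced to a hypothetical per-form valence score between -1 (dislike) and 1 (like). The `Form` class, `update_sizes`, `combine_largest` and the parameter averaging used to merge forms are all illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class Form:
    """A candidate shape (hypothetical representation)."""
    params: list[float]  # shape parameters, e.g. radii or twist angles
    size: float = 1.0    # how large the form is drawn on screen


def update_sizes(forms: list[Form], valences: list[float],
                 rate: float = 0.5, min_size: float = 0.1) -> None:
    """Grow liked forms and shrink disliked ones (valence in [-1, 1])."""
    for form, v in zip(forms, valences):
        form.size = max(min_size, form.size * (1.0 + rate * v))


def combine_largest(forms: list[Form], k: int = 2) -> Form:
    """Merge the k largest forms by averaging their parameters."""
    top = sorted(forms, key=lambda f: f.size, reverse=True)[:k]
    n_params = len(top[0].params)
    merged = [sum(f.params[i] for f in top) / len(top) for i in range(n_params)]
    return Form(merged)


if __name__ == "__main__":
    forms = [Form([1.0, 0.0]), Form([0.0, 1.0]), Form([2.0, 2.0])]
    # Valences would come from the headset; here they are made up.
    update_sizes(forms, [0.8, -0.6, 0.4])
    print([round(f.size, 2) for f in forms])  # [1.4, 0.7, 1.2]
    print(combine_largest(forms).params)      # [1.5, 1.0]
```

Averaging parameters is the simplest possible way to "combine" two shapes; a real modeler would have to merge actual geometry.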

Competitors

The idea of designing models by analyzing emotional reactions rests on the observation that most people are much better at rejecting ideas from a large pool than at coming up with new ones from scratch, especially without the relevant training. Engineers at Cornell University (USA), who are developing a similar technology, understand this well.

"Currently, one of the weaknesses of 3D printing is the content creation mechanism," says Professor Hod Lipson. "We have iPods without music. We have machines that can create just about anything, but we haven't been able to unleash their potential."

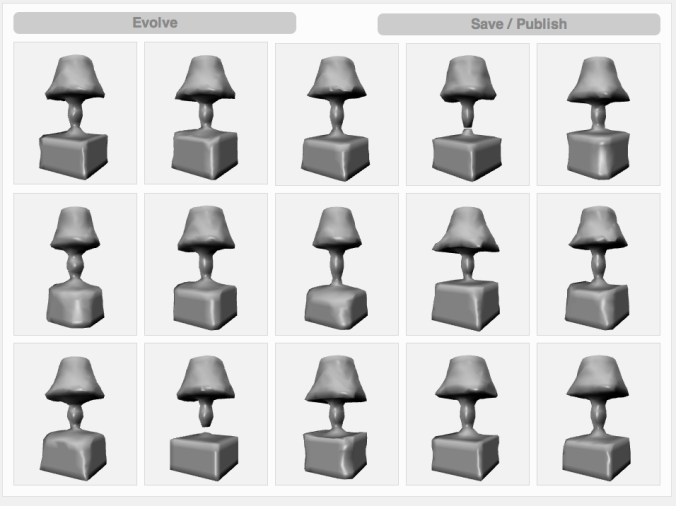

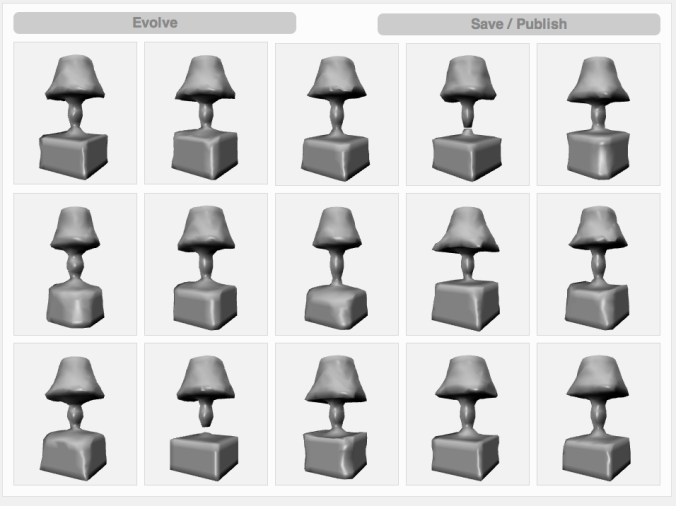

The evolutionary design of 3D objects on the EndlessForms website.

Lipson students created the EndlessForms website, visitors to which can use a rather convenient step-by-step mechanism for designing new 3D models. Initially, the user is shown 15 three-dimensional forms. Having chosen the ones he likes, he can combine them, after which 15 new forms will appear, combining the features of the selected options.
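The select-and-breed cycle on EndlessForms is a form of interactive evolutionary computation: the human plays the role of the fitness function. The sketch below shows that cycle in generic form, with each shape reduced to a flat list of numbers, uniform crossover between two picked parents and small Gaussian mutations. The encoding, the mutation strength `sigma` and the helper names are assumptions for illustration and are not how EndlessForms itself represents shapes; only the population size of 15 comes from the article.

```python
import random

POPULATION_SIZE = 15  # forms shown per round, as in the article


def random_form(n_params: int, rng: random.Random) -> list[float]:
    """A random starting shape: n_params numbers in [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(n_params)]


def crossover(a: list[float], b: list[float], rng: random.Random) -> list[float]:
    """Uniform crossover: each parameter comes from parent a or parent b."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def mutate(form: list[float], rng: random.Random, sigma: float) -> list[float]:
    """Nudge every parameter by Gaussian noise with standard deviation sigma."""
    return [x + rng.gauss(0.0, sigma) for x in form]


def next_generation(selected: list[list[float]], rng: random.Random,
                    size: int = POPULATION_SIZE,
                    sigma: float = 0.1) -> list[list[float]]:
    """Breed a new round of forms from the ones the user picked."""
    if not selected:
        raise ValueError("the user must pick at least one form")
    children = []
    for _ in range(size):
        if len(selected) >= 2:
            a, b = rng.sample(selected, 2)  # two distinct favorites
        else:
            a = b = selected[0]             # a single pick is only mutated
        children.append(mutate(crossover(a, b, rng), rng, sigma))
    return children


if __name__ == "__main__":
    rng = random.Random(42)
    population = [random_form(8, rng) for _ in range(POPULATION_SIZE)]
    picks = [population[i] for i in (2, 5, 11)]  # stand-in for the user's clicks
    population = next_generation(picks, rng)
    print(len(population))  # 15
```

Each call to `next_generation` corresponds to one round of the website's "pick, then see 15 new forms" loop; repeating it lets the user's choices steer the population.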

To speed up the process, the Americans decided to borrow the same idea as the Chileans from the Thinker Thing team. Last year, they tried creating 3D models with the Emotiv EPOC headset but ran into a problem: at some point the device stopped working properly because the subjects grew tired and the signal became inaccurate. Repeated calibration did not help. The researchers note that this problem is typical of almost all cheap electroencephalographs: the skull dampens the weak electrical impulses of neurons, while electrical signals from nearby facial muscles can be stronger. More accurate magnetic resonance imaging scanners could be used instead, but that would make an off-the-shelf 3D printing setup far too expensive and impractical.

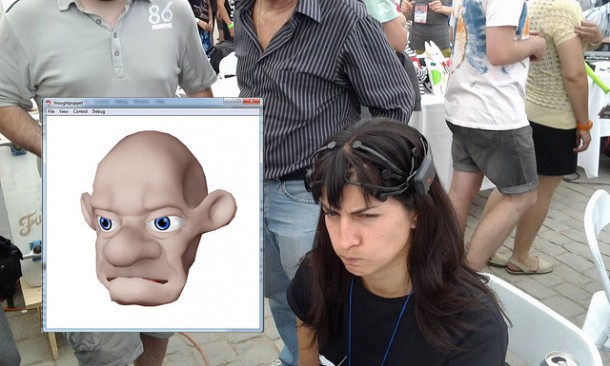

The Emotiv EPOC headset in question

So Lipson's colleagues decided to turn the user's eyes into the input interface. The tracking system they built can determine which forms the user pays the most attention to. The catch is that eye tracking alone cannot tell how the user feels about a shape, so the two devices have to be used in tandem. Let's hope that improved brain-scanning and eye-tracking systems will one day replace the good old mouse for 3D modeling.
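Why both devices are needed can be shown with a toy calculation. Assume, hypothetically, that the eye tracker yields a stream of form IDs (one per gaze sample) and the EEG side yields a valence score per form. Gaze share measures attention, valence says whether that attention was positive, and multiplying them ranks forms by "looked at a lot and liked". The input formats, the function names and the product rule are assumptions for illustration, not the Cornell team's method.

```python
from collections import Counter


def dwell_shares(gaze_samples: list[str]) -> dict[str, float]:
    """Fraction of eye-tracker samples that fell on each form."""
    counts = Counter(gaze_samples)
    total = sum(counts.values())
    return {form_id: n / total for form_id, n in counts.items()}


def fused_scores(gaze_samples: list[str],
                 valence: dict[str, float]) -> dict[str, float]:
    """Attention (from gaze) times reaction (from EEG) for every form."""
    shares = dwell_shares(gaze_samples)
    forms = set(shares) | set(valence)
    return {f: shares.get(f, 0.0) * valence.get(f, 0.0) for f in forms}


if __name__ == "__main__":
    gaze = ["A"] * 6 + ["B"] * 3 + ["C"]       # user stared at A the longest...
    valence = {"A": -0.5, "B": 0.9, "C": 0.2}  # ...but the EEG says they disliked it
    shares = dwell_shares(gaze)
    scores = fused_scores(gaze, valence)
    print(max(shares, key=shares.get))  # A (gaze alone)
    print(max(scores, key=scores.get))  # B (gaze + EEG)
```

Gaze alone would pick form A simply because the user looked at it longest; adding the emotional signal flips the choice to B, which is the point of using both devices together.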

For the especially inquisitive, I suggest watching the project's promotional video. The good stuff starts in the second minute. Enjoy!

P.S. While developers work on an innovative interface for creating 3D models, you can take a detailed look at advanced 3D printers from leading brands and order them HERE.

As you know, engineers and designers have been using 3D printers for more than two decades. More recently, the cost of these devices has fallen so much that they have become available to ordinary people. The possibilities seem endless: the creation of food, living tissue, weapons, and even batteries. The revolution has taken place! Hooray, comrades! That's just the majority of vendors so far concentrate their efforts on finalizing only the hardware component of these devices, then much less attention is paid to the development of software for 3D printers. But the Chileans decided to go further and created a mechanism that allows users to reveal their inner creative potential. With it, people who are lazy / cannot engage in modeling objects for 3D printing using the appropriate software and do not want to download ready-made models can do what everyone else can only dream of,

This is not a bird or a plane. An orange piece of plastic that can fit in your palm resembles the limb of a toy tyrannosaurus. It may not look very impressive, but the fact remains: this is the first object printed on a 3D printer using the power of thought.

So how does the Thinker Thing shaitan box work?

The system is based on the Emotional Evolutionary Design (EED) software suite, which allows a 3D printer to interpret the thoughts of its users, and a $ 300 headset electroencephalograph that monitors brain cell activity using fourteen sensors in contact with the scalp. As you know, for transitions of the brain from one state to another (excitement, boredom, joy, etc.), certain patterns of activity of gray matter are characteristic. A bunch of EED and Emotiv EPOC successfully reads and interprets these patterns. This hardware-software complex is literally able to create order out of chaos.

3D modeling process with Emotional Evolutionary Design

A bunch of EED and Emotiv EPOC in action The

user is shown random forms, and the system analyzes his reaction in real time. Software can distinguish from the total mass of objects that have received a positive emotional reaction. The user’s favorite forms will increase in size on the screen, while the rest will decrease. The largest forms can be combined with each other to create model components. The output will be a unique 3D model that is completely ready for printing. Developers focus on the fact that even a three / four-year-old child who has not even learned how to hold a pencil in his hands can cope with such a system - not to mention working with AutoCAD (here loving mothers rejoice).

Competitors

The concept of designing models using analysis of emotional reactions is based on the fact that most people more effectively reject individual ideas from the general mass than create new ideas from scratch, especially if they do not have the appropriate training. This is well understood by engineers from Cornell University (USA), who also create a similar technology.

“Currently, one of the weaknesses of 3D printing is the content creation mechanism,” says Professor Hod Lipson, “We have iPods without music. We have machines that can create just about anything, but we haven't can unleash their potential. "

The evolutionary design of 3D objects on the EndlessForms website.

Lipson students created the EndlessForms website, visitors to which can use a rather convenient step-by-step mechanism for designing new 3D models. Initially, the user is shown 15 three-dimensional forms. Having chosen the ones he likes, he can combine them, after which 15 new forms will appear, combining the features of the selected options.

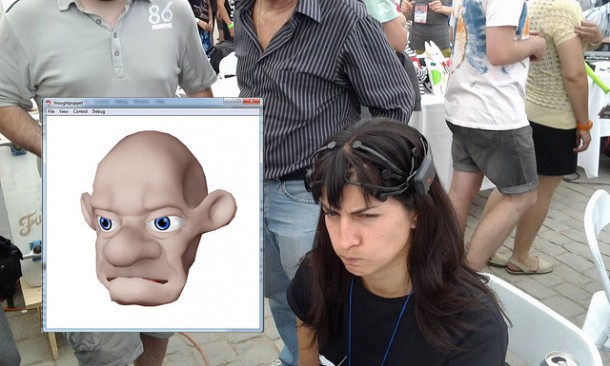

To speed up the process, the Americans decided to use the same idea as the Chileans from the Thinker Thing team. Last year, they used the Emotiv EPOC headset to create 3D models, but faced a problem: at some point, the device stopped functioning normally, as the subjects were tired and the signal became inaccurate. Repeated calibration did not solve the problem. Researchers note that this problem is characteristic of almost all cheap electroencephalographs. The skull extinguishes weak

electrical impulses from brain neurons, while electrical signals from nearby facial muscles may be stronger. More accurate magnetic resonance imaging scanners can be used, but this approach makes off-the-shelf 3D printers too expensive and

impractical.

That same headset Emotiv EPOC

Therefore Lipson partners decided to turn the eye of the user into the input interface. The tracking system they created can determine the

forms to which the user pays the most attention. The catch is that without an EEG headset it is impossible to determine the user's reaction to the figure. Therefore, both devices must be used in tandem. Let's hope that in the future, improved brain scanning and eye tracking systems will replace the good old mouse when creating 3D models.

Especially inquisitive minds, I propose to get acquainted with the promotional video of this project. The meat starts from the second minute. Have a nice watching!

PS In the meantime, developers are struggling to create an innovative interface for creating 3D models, advanced models of leading-brand 3D printers can be examined and ordered in detail HERE.