Python profiling and debugging, let's move on to practice

In the last article, we defined the concepts of profiling and optimization, got acquainted with various approaches to profiling and types of tools. A little touched the history of profilers.

Today I propose moving on to practice and show examples of manual profiling methods (and even the “gaze method”). Tools for statistical profiling will also be examined.

What can be the practice without examples and training? I thought for a long time what kind of project it is worth taking as a training manual, so that it is both indicative and not very complicated. In my opinion, this is Project Euler - a collection of mathematical and computer puzzles, because to solve the proposed problems it is necessary to use numerical tests and without optimization the search for the answer can go on for years.

In general, I highly recommend solving puzzles from the Euler Project. Helps to distract, meditate and relax, and at the same time keep your brain in good shape.

As an example for the article, take task 3 :

We write a simple solution "forehead":

An attentive or experienced reader may immediately call me a noob, name a few problems of this program and give some recipes for its optimization, but we specially took a simple example and pretend that we consider this code good, optimizing it along the way.

To check, run the program with a number, the divisors of which are known to us:

Answer 29, issued by the program, is correct (it can be found in the problem statement). Hurrah! Now run the program with the number 600851475143 of interest to us:

And ... nothing happens. CPU utilization is 100%, the program has not completed even after several hours of operation. We begin to understand. We recall that you can only optimize the code that works correctly, but we checked the program on a small number and the answer was correct. Obviously, the problem is in performance and we need to start optimization.

In real life, I would profile the program with argument 13195 (when its operating time is adequate). But since we are training here and in general, just for jun, we will use the “gaze method”.

We open the code and carefully look at it. We understand (if we are lucky, we quickly understand) that to search for divisors of the number N, it makes no sense to sort out the numbers from the interval sqrt (N) + 1 ... N-1 , because we already found all the divisors from this range when iterating over the numbers from the interval 2 ... sqrt (N) . We slightly modify the code (see lines 9 and 18):

To check, run the program again with a number whose divisors are known to us:

Subjectively, the program worked much faster, then we launch it again with the number of interest to us: 600851475143:

We check the answer on the site, it turns out to be correct, the problem is solved, we feel moral satisfaction.

The program was completed in an acceptable time (less than a minute), the answer was correct, there is no sense in further optimization in this particular case, because we have solved the task. As we remember, the most important thing in optimization is to be able to stop on time.

Yes, I am aware that the guys from Project Euler are asking you not to share answers and solutions in the public domain. But the answer to task 3 is googled (for example, by the condition “project euler problem 3 answer” at a time, so I think it's okay that I wrote the answer.

One of the most common ways to quickly figure out what’s what. In the most elementary case, if we use the unix utility “time” it looks like this (before optimization):

And so (after optimization):

Acceleration of almost 65 times (from ~ 3.87 seconds to ~ 61 milliseconds)!

Manual profiling may also look like this:

Result:

Or using the special “ timeit ” module , which is designed to measure the performance of small programs. Application example:

When can manual profiling be applied? Firstly, this is a great way to conduct various kinds of competitions between developers ("My code is now faster than yours, so I'm a better programmer!"), And that's good. Secondly, when it is required “by eye” to determine the speed of the program (20 seconds? Long!) Or to get the results of the improvements (speed up the code 100 times!).

But the most important application is the collection of statistics about the execution time of the code in almost real time right on the production site. To do this, we send the measured time to any system for collecting metrics and drawing graphs (I really like to use Graphite and StatsD as an aggregator for graphite).

To do this, you can use a simple context manager:

An example of its use:

Or a simple decorator:

An example of using a decorator:

At the output, we get a timeline of the code section of interest to us, for example, this one:

by which you can always see how the code feels on live servers, and is it time to optimize it. You can see how the code feels after the next release. If refactoring or optimization was carried out, the schedule allows you to evaluate the results in a timely manner and to understand whether the situation as a whole has improved or worsened.

The method has its drawbacks, and the most important of them is the lack of dependence on the input data. So, for the function for determining the prime number “is_prime”, the execution time will strongly depend on the value of this number, and if this function is called in the project very often, the schedule will be completely meaningless. It is important to clearly understand where what approach can be used and what we have on the way out.

Of course, you can call the profiling method through the collection of metrics in graphite “statistical”, because we collect statistics of the program. But I propose to adhere to the terminology adopted by us in the first part, and to mean “statistical” profiling is precisely the collection of information (sampling) at certain intervals.

In the case of using graphite and an aggregating server ( StatsD ) for it, for one metric we get several graphs at once: the minimum and maximum code execution time, as well as the median and the number of recorded readings (function calls) per unit time, which is very convenient. Let's see how easy it is to connect StatsD to Django.

We put the module:

Add settings to settings.py: application and middleware:

And that’s it! At the output, we get these graphs:

Pros and cons StatsD:

+ easy to install and use

+ suitable for production (must-have, in general)

- little information (quantity / time)

- I need graphite and statsd (also must- have)

Unlike event profilers, there are few tools for statistical profiling. I will talk about three:

Perhaps the most famous statistical profiler for python is statprof . We put:

We use, for example. So:

Or as a context manager (not in version 0.1.2 from pypi, only in the version from the repository ):

Let's try to profile our code:

We see two hotspots in our program. First: lines 12 and 11 of the is_prime function (which is logical), their execution takes about 82% of the program’s time, and second: line 21 of the find_prime_factors function (about 17% of the time):

It is from this line that the hottest function of the is_prime program is called. Just by swapping the operands in the condition, we will significantly speed up the program, because the operation of obtaining the remainder of division (num% i) is faster than the function "is_prime", and at the same time quite often the remainder of dividing one number by another is not equal to zero, and "not num% i" will return False. Thus, we will drastically reduce the number of calls to the is_prime function:

We start profiling:

The hottest place in our program is now line 21 of the function "find_prime_factors", that is, the operation of obtaining the remainder of the division ("num% i"). The function "is_prime" is now called much less often and only 5% of the time the program runs. The program run time has significantly decreased and the largest simple divisor of the number 600851475143 is now located in just 0.25 seconds (they accelerated the program almost 80 times).

Let us pay attention to how much the accuracy of the profiler has fallen: instead of 3575 samples (in the example before optimization), only 40 measurements were taken and information was received on only five lines. Of course, this is not enough. Actually, this is the peculiarity of statistical profilers: the more time we collect data, the more accurate the analysis. If, for example, we ran the program ten or a hundred times, we would have received much more accurate results.

Advantages and disadvantages of statprof:

+ minimal overhead

+ simple use

- the implementation is quite crude and experimental

- for an adequate result, a long analysis

is needed - there is little data at the output

A separate item worth noting is django-live-profiler - a Django application profiler using statprof. For it to work, you must first install zeromq :

We put the module itself:

And run the aggregator:

Next, add the profiler in settings.py:

And in urls.py:

We start the server:

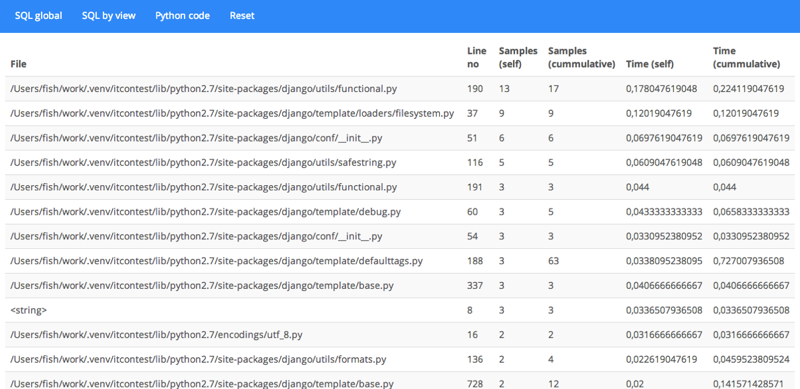

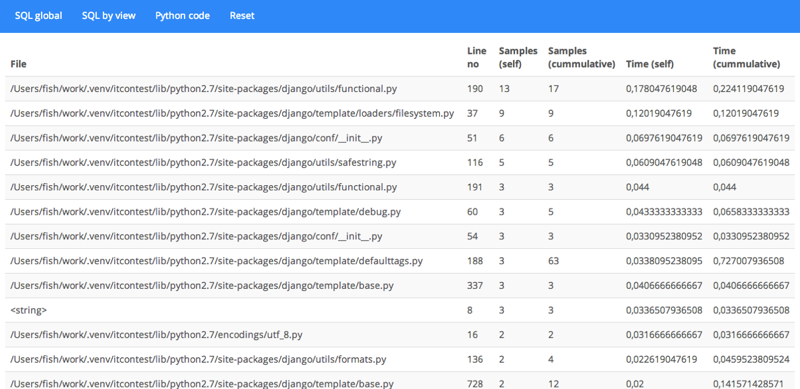

We open the profiler in the browser: 127.0.0.1 : 8000 / profiler / and enjoy life by observing the results of profiling a live project in real time:

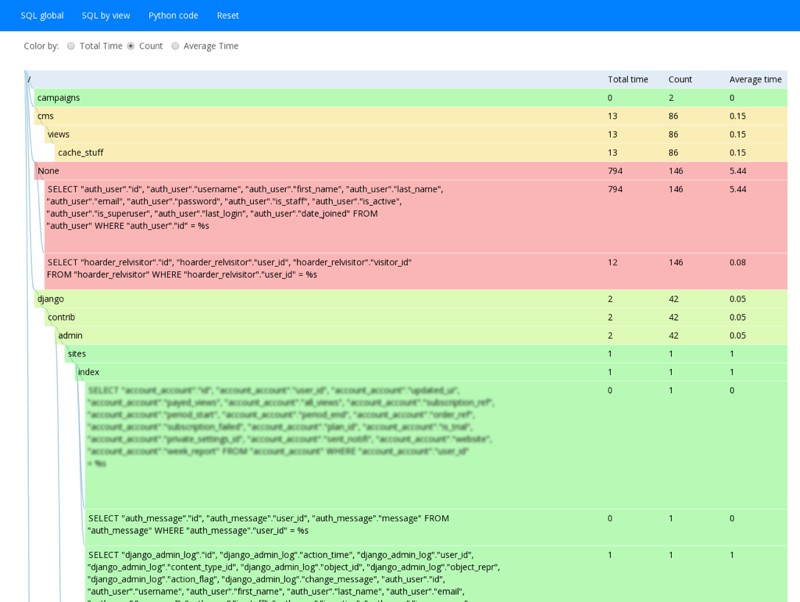

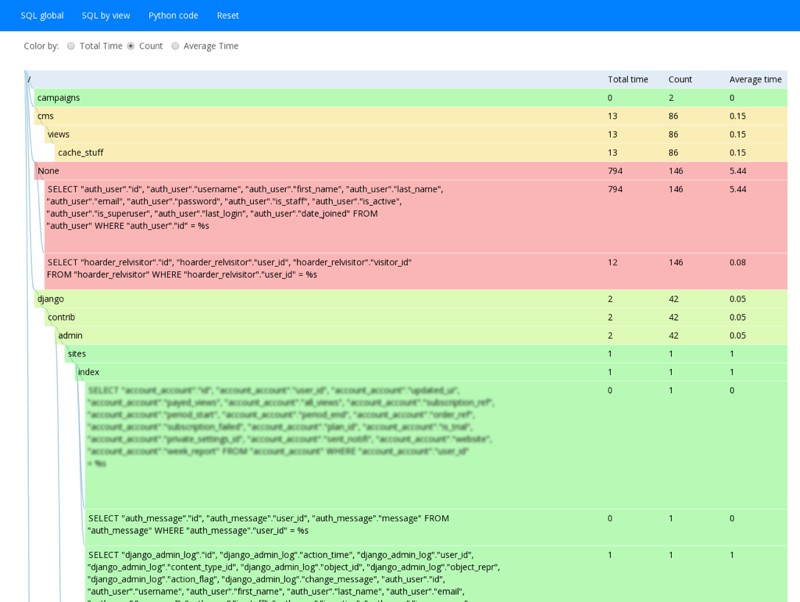

And django-live-profiler can show SQL queries:

Advantages and disadvantages of django-live-profiler:

+ a small overhead

+ can be launched into production (very carefully)

+ profiling SQL queries

- a difficult installation, dependencies

- little data output

Another statistical profiler is called plop (Python Low-Overhead Profiler). The author immediately warns that the implementation is crude and the project is under active development. Installation is trivial:

We start profiling:

We start the server to view the results:

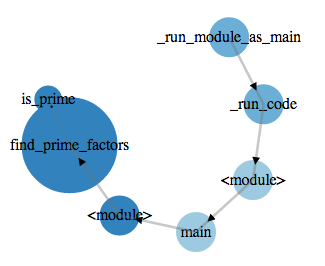

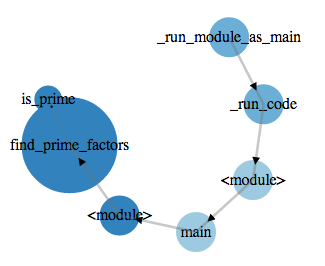

Open the http: // localhost: 8888 / page in the browser and enjoy the results:

Plop can be used to profile Django applications. To do this, install the django-plop package:

Add to settings.py middleware a parameter that tells the profiler where to put the results:

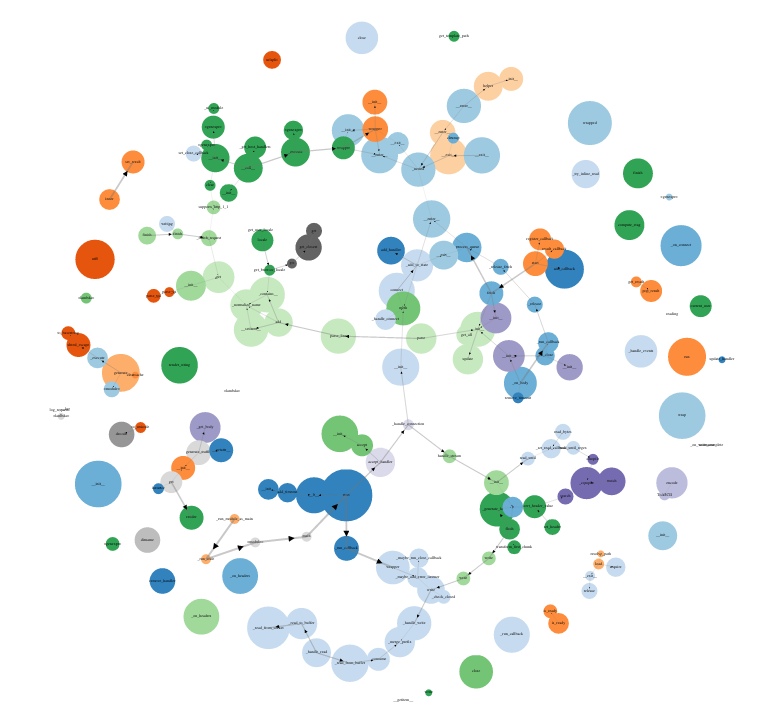

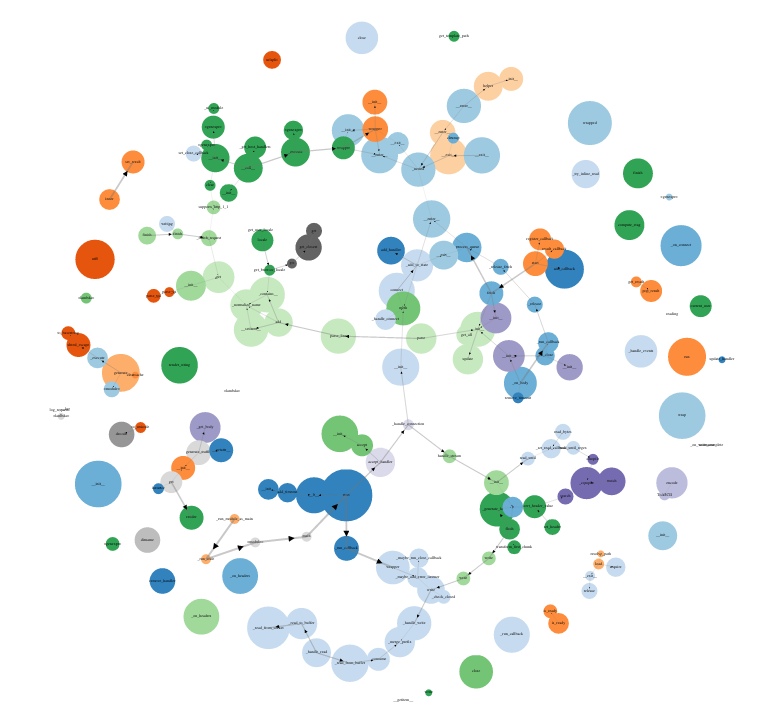

In a large project, the graph will look more impressive:

The picture is quite psychedelic, it’s difficult to call it a serious profiling tool, but nevertheless we have the call graph, the hottest sections of the code are visible, the overhead is minimal (according to the authors, only 2%), and in some cases, this tool is enough to detect sections of code that need profiling. Dropbox service uses plop directly in production, and that says a lot.

Advantages and disadvantages of plop:

+ minimal overhead

+ can be put into production

- complicated installation, dependencies

- very little data output

Говоря о статистических профайлерах нельзя не сказать о сервисе New Relic, который предназначен не только для профилирования, но так же для мониторинга серверов и веб-приложений (а так же мобильных версий). Желающие могут посмотреть всю информацию на сайте компании, а так же попробовать сервис совершенно бесплатно. Я же не буду рассказывать о нём потому, что лично не пробовал работать с New Relic, а я привык говорить только о тех вещах, которые пробовал сам. Посмотреть скриншоты можно на странице, посвящённой профайлеру.

Достоинства и недостатки:

+ предназначен для продакшена

+ много разного функционала (не только профилирование)

– платный (есть бесплатная версия)

– данные отправляются на чужие серверы

В in the next article, we will move on to the study of event profilers, the main tools for profiling Python. Stay in touch!

Today I propose moving on to practice and show examples of manual profiling methods (and even the “gaze method”). Tools for statistical profiling will also be examined.

- Introduction and theory - why do you need profiling, different approaches, tools and differences between them?

- Manual and statistical profiling - let 's move on to practice

- Event profiling - tools and their application

- Debugging - what to do when nothing works

Training

What can be the practice without examples and training? I thought for a long time what kind of project it is worth taking as a training manual, so that it is both indicative and not very complicated. In my opinion, this is Project Euler - a collection of mathematical and computer puzzles, because to solve the proposed problems it is necessary to use numerical tests and without optimization the search for the answer can go on for years.

In general, I highly recommend solving puzzles from the Euler Project. Helps to distract, meditate and relax, and at the same time keep your brain in good shape.

As an example for the article, take task 3 :

The prime divisors of 13195 are 5, 7, 13, and 29.

What is the largest divisor of 600851475143, which is a prime number?

We write a simple solution "forehead":

"""Project Euler problem 3 solve"""

from __future__ import print_function

import sys

def is_prime(num):

"""Checks if num is prime number"""

for i in range(2, num):

if not num % i:

return False

return True

def find_prime_factors(num):

"""Find prime factors of num"""

result = []

for i in range(2, num):

if is_prime(i) and not num % i:

result.append(i)

return result

if __name__ == '__main__':

try:

num = int(sys.argv[1])

except (TypeError, ValueError, IndexError):

sys.exit("Usage: euler_3.py number")

if num < 1:

sys.exit("Error: number must be greater than zero")

prime_factors = find_prime_factors(num)

if len(prime_factors) == 0:

print("Can't find prime factors of %d" % num)

else:

print("Answer: %d" % prime_factors[-1])An attentive or experienced reader may immediately call me a noob, name a few problems of this program and give some recipes for its optimization, but we specially took a simple example and pretend that we consider this code good, optimizing it along the way.

To check, run the program with a number, the divisors of which are known to us:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 13195

Answer: 29

Answer 29, issued by the program, is correct (it can be found in the problem statement). Hurrah! Now run the program with the number 600851475143 of interest to us:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 600851475143

And ... nothing happens. CPU utilization is 100%, the program has not completed even after several hours of operation. We begin to understand. We recall that you can only optimize the code that works correctly, but we checked the program on a small number and the answer was correct. Obviously, the problem is in performance and we need to start optimization.

Gaze method

In real life, I would profile the program with argument 13195 (when its operating time is adequate). But since we are training here and in general, just for jun, we will use the “gaze method”.

We open the code and carefully look at it. We understand (if we are lucky, we quickly understand) that to search for divisors of the number N, it makes no sense to sort out the numbers from the interval sqrt (N) + 1 ... N-1 , because we already found all the divisors from this range when iterating over the numbers from the interval 2 ... sqrt (N) . We slightly modify the code (see lines 9 and 18):

"""Project Euler problem 3 solve"""

from __future__ import print_function

import math

import sys

def is_prime(num):

"""Checks if num is prime number"""

for i in range(2, int(math.sqrt(num)) + 1):

if not num % i:

return False

return True

def find_prime_factors(num):

"""Find prime factors of num"""

result = []

for i in range(1, int(math.sqrt(num)) + 1):

if is_prime(i) and not num % i:

result.append(i)

if is_prime(num):

result.append(i)

return result

if __name__ == '__main__':

try:

num = int(sys.argv[1])

except (TypeError, ValueError, IndexError):

sys.exit("Usage: euler_3.py number")

if num < 1:

sys.exit("Error: number must be greater than zero")

prime_factors = find_prime_factors(num)

if len(prime_factors) == 0:

print("Can't find prime factors of %d" % num)

else:

print("Answer: %d" % prime_factors[-1])To check, run the program again with a number whose divisors are known to us:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 13195

Answer: 29

Subjectively, the program worked much faster, then we launch it again with the number of interest to us: 600851475143:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 600851475143

Answer: 6857

We check the answer on the site, it turns out to be correct, the problem is solved, we feel moral satisfaction.

The program was completed in an acceptable time (less than a minute), the answer was correct, there is no sense in further optimization in this particular case, because we have solved the task. As we remember, the most important thing in optimization is to be able to stop on time.

Yes, I am aware that the guys from Project Euler are asking you not to share answers and solutions in the public domain. But the answer to task 3 is googled (for example, by the condition “project euler problem 3 answer” at a time, so I think it's okay that I wrote the answer.

Manual profiling

One of the most common ways to quickly figure out what’s what. In the most elementary case, if we use the unix utility “time” it looks like this (before optimization):

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ time python euler_3.py 13195

Answer: 29

python euler_3.py 13195 3,83s user 0,03s system 99% cpu 3,877 total

And so (after optimization):

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ time python euler_3.py 13195

Answer: 29

python euler_3.py 13195 0,03s user 0,02s system 90% cpu 0,061 total

Acceleration of almost 65 times (from ~ 3.87 seconds to ~ 61 milliseconds)!

Manual profiling may also look like this:

import time

...

start = time.time()

prime_factors = find_prime_factors(num)

print("Time: %.03f s" % (time.time() - start))Result:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 600851475143

Answer: 6857

Time: 19.811 s

Or using the special “ timeit ” module , which is designed to measure the performance of small programs. Application example:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python -m timeit -n 10 -s'import euler_3 '' euler_3.find_prime_factors (600851475143) '10

loops, best of 3: 21.3 sec per loop

When can manual profiling be applied? Firstly, this is a great way to conduct various kinds of competitions between developers ("My code is now faster than yours, so I'm a better programmer!"), And that's good. Secondly, when it is required “by eye” to determine the speed of the program (20 seconds? Long!) Or to get the results of the improvements (speed up the code 100 times!).

But the most important application is the collection of statistics about the execution time of the code in almost real time right on the production site. To do this, we send the measured time to any system for collecting metrics and drawing graphs (I really like to use Graphite and StatsD as an aggregator for graphite).

To do this, you can use a simple context manager:

"""Collect profiling statistic into graphite"""

import socket

import time

CARBON_SERVER = '127.0.0.1'

CARBON_PORT = 2003

class Stats(object):

"""Context manager for send stats to graphite"""

def __init__(self, name):

self.name = name

def __enter__(self):

self.start = time.time()

return self

def __exit__(self, *args):

duration = (time.time() - self.start) * 1000 # msec

message = '%s %d %d\n' % (self.name, duration, time.time())

sock = socket.socket()

sock.connect((CARBON_SERVER, CARBON_PORT))

sock.sendall(message)

sock.close()An example of its use:

from python_profiling.context_managers import Stats

...

with Stats('project.application.some_action'):

do_some_action()Or a simple decorator:

"""Collect profiling statistic into graphite"""

import socket

import time

CARBON_SERVER = '127.0.0.1'

CARBON_PORT = 2003

def stats(name):

"""Decorator for send stats to graphite"""

def _timing(func):

def _wrapper(*args, **kwargs):

start = time.time()

result = func(*args, **kwargs)

duration = (time.time() - start) * 1000 # msec

message = '%s %d %d\n' % (name, duration, time.time())

sock = socket.socket()

sock.connect((CARBON_SERVER, CARBON_PORT))

sock.sendall(message)

sock.close()

return result

return _wrapper

return _timingAn example of using a decorator:

from python_profiling.decorators import stats

...

@stats('project.application.some_action')

def do_some_action():

"""Doing some useful action"""At the output, we get a timeline of the code section of interest to us, for example, this one:

by which you can always see how the code feels on live servers, and is it time to optimize it. You can see how the code feels after the next release. If refactoring or optimization was carried out, the schedule allows you to evaluate the results in a timely manner and to understand whether the situation as a whole has improved or worsened.

The method has its drawbacks, and the most important of them is the lack of dependence on the input data. So, for the function for determining the prime number “is_prime”, the execution time will strongly depend on the value of this number, and if this function is called in the project very often, the schedule will be completely meaningless. It is important to clearly understand where what approach can be used and what we have on the way out.

Of course, you can call the profiling method through the collection of metrics in graphite “statistical”, because we collect statistics of the program. But I propose to adhere to the terminology adopted by us in the first part, and to mean “statistical” profiling is precisely the collection of information (sampling) at certain intervals.

Django statsd

In the case of using graphite and an aggregating server ( StatsD ) for it, for one metric we get several graphs at once: the minimum and maximum code execution time, as well as the median and the number of recorded readings (function calls) per unit time, which is very convenient. Let's see how easy it is to connect StatsD to Django.

We put the module:

➜ pip install django-statsd-mozilla

Add settings to settings.py: application and middleware:

INSTALLED_APPS += ('django_statsd',)

MIDDLEWARE_CLASSES += (

'django_statsd.middleware.GraphiteRequestTimingMiddleware',

'django_statsd.middleware.GraphiteMiddleware',

)

# send DB timings

STATSD_PATCHES = ['django_statsd.patches.db']And that’s it! At the output, we get these graphs:

Pros and cons StatsD:

+ easy to install and use

+ suitable for production (must-have, in general)

- little information (quantity / time)

- I need graphite and statsd (also must- have)

Statistical Profilers

Unlike event profilers, there are few tools for statistical profiling. I will talk about three:

Statprof

Perhaps the most famous statistical profiler for python is statprof . We put:

➜ pip install statprof

We use, for example. So:

import statprof

...

statprof.start()

try:

do_some_action()

finally:

statprof.stop()

statprof.display()Or as a context manager (not in version 0.1.2 from pypi, only in the version from the repository ):

import statprof

...

with statprof.profile():

do_some_action()Let's try to profile our code:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 600851475143

% cumulative self

time seconds seconds name

44.42 8.63 8.63 euler_3.py:12:is_prime

37.12 7.21 7.21 euler_3.py:11: is_prime

16.90 19.40 3.28 euler_3.py:21:find_prime_factors

0.95 0.18 0.18 euler_3.py:9:is_prime

0.48 0.09 0.09 euler_3.py:13:is_prime

0.06 0.01 0.01 euler_3.py:14:is_prime

0.06 0.01 0.01 euler_3.py:20: find_prime_factors

0.03 0.01 0.01 euler_3.py:23:find_prime_factors

0.00 19.42 0.00 euler_3.py:37:

- Sample count: 3575

Total time: 19.420000 seconds

Answer: 6857

We see two hotspots in our program. First: lines 12 and 11 of the is_prime function (which is logical), their execution takes about 82% of the program’s time, and second: line 21 of the find_prime_factors function (about 17% of the time):

if is_prime(i) and not num % i:It is from this line that the hottest function of the is_prime program is called. Just by swapping the operands in the condition, we will significantly speed up the program, because the operation of obtaining the remainder of division (num% i) is faster than the function "is_prime", and at the same time quite often the remainder of dividing one number by another is not equal to zero, and "not num% i" will return False. Thus, we will drastically reduce the number of calls to the is_prime function:

if not num % i and is_prime(i):We start profiling:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python euler_3.py 600851475143

% cumulative self

time seconds seconds name

87.50 0.22 0.22 euler_3.py:21:find_prime_factors

5.00 0.01 0.01 euler_3.py:20: find_prime_factors

5.00 0.01 0.01 euler_3.py:11:is_prime

2.50 0.01 0.01 euler_3.py:23:find_prime_factors

0.00 0.25 0.00 euler_3.py:37:

- Sample count: 40

Total time: 0.250000 seconds

Answer: 6857

The hottest place in our program is now line 21 of the function "find_prime_factors", that is, the operation of obtaining the remainder of the division ("num% i"). The function "is_prime" is now called much less often and only 5% of the time the program runs. The program run time has significantly decreased and the largest simple divisor of the number 600851475143 is now located in just 0.25 seconds (they accelerated the program almost 80 times).

Let us pay attention to how much the accuracy of the profiler has fallen: instead of 3575 samples (in the example before optimization), only 40 measurements were taken and information was received on only five lines. Of course, this is not enough. Actually, this is the peculiarity of statistical profilers: the more time we collect data, the more accurate the analysis. If, for example, we ran the program ten or a hundred times, we would have received much more accurate results.

Here I have to do a little digression.

99% of all auxiliary utilities, such as profilers or code coverage analysis tools , work with strings as the minimum unit of information. Thus, if we try to write the code as compactly as possible, for example like this:

we can’t see in the profiler which function was called and which was not. And in the coverage report we won’t see whether this or that scenario is covered by tests. Despite the more familiar and seemingly convenient code, in some cases it is still better to write like this:

and then we will immediately see which lines are not executed, and which ones are executed (and how often). Single-line and compactness are good and fun, of course, but sometimes it’s better to spend more time and get code that is easier to profile, test and maintain.

result = foo() if bar else baz()we can’t see in the profiler which function was called and which was not. And in the coverage report we won’t see whether this or that scenario is covered by tests. Despite the more familiar and seemingly convenient code, in some cases it is still better to write like this:

if bar:

result = foo()

else:

result = baz()and then we will immediately see which lines are not executed, and which ones are executed (and how often). Single-line and compactness are good and fun, of course, but sometimes it’s better to spend more time and get code that is easier to profile, test and maintain.

Advantages and disadvantages of statprof:

+ minimal overhead

+ simple use

- the implementation is quite crude and experimental

- for an adequate result, a long analysis

is needed - there is little data at the output

Django-live-profiler

A separate item worth noting is django-live-profiler - a Django application profiler using statprof. For it to work, you must first install zeromq :

➜ brew install zmq

We put the module itself:

➜ pip install django-live-profiler

And run the aggregator:

➜ aggregated --host 127.0.0.1 --port 5556

Next, add the profiler in settings.py:

# добавляем application

INSTALLED_APPS += ('profiler',)

# добавляем middleware

MIDDLEWARE_CLASSES += (

'profiler.middleware.ProfilerMiddleware',

'profiler.middleware.StatProfMiddleware',

)And in urls.py:

url(r'^profiler/', include('profiler.urls'))We start the server:

➜ python manage.py runserver --noreload --nothreading

We open the profiler in the browser: 127.0.0.1 : 8000 / profiler / and enjoy life by observing the results of profiling a live project in real time:

And django-live-profiler can show SQL queries:

Advantages and disadvantages of django-live-profiler:

+ a small overhead

+ can be launched into production (very carefully)

+ profiling SQL queries

- a difficult installation, dependencies

- little data output

Plop

Another statistical profiler is called plop (Python Low-Overhead Profiler). The author immediately warns that the implementation is crude and the project is under active development. Installation is trivial:

➜ pip install plop tornado

We start profiling:

rudnyh @ work: ~ / work / python-profiling (venv: python-profiling)

➜ python -m plop.collector euler_3.py 600851475143

Answer: 6857

profile output saved to /tmp/plop.out

overhead was 5.89810884916e-05 per sample (0.00589810884916%)

We start the server to view the results:

➜ python -m plop.viewer --datadir = / tmp /

Open the http: // localhost: 8888 / page in the browser and enjoy the results:

Plop can be used to profile Django applications. To do this, install the django-plop package:

➜ pip install django-plop

Add to settings.py middleware a parameter that tells the profiler where to put the results:

MIDDLEWARE_CLASSES += (

'django-plop.middleware.PlopMiddleware',

)

PLOP_DIR = os.path.join(PROJECT_ROOT, 'plop')In a large project, the graph will look more impressive:

The picture is quite psychedelic, it’s difficult to call it a serious profiling tool, but nevertheless we have the call graph, the hottest sections of the code are visible, the overhead is minimal (according to the authors, only 2%), and in some cases, this tool is enough to detect sections of code that need profiling. Dropbox service uses plop directly in production, and that says a lot.

Advantages and disadvantages of plop:

+ minimal overhead

+ can be put into production

- complicated installation, dependencies

- very little data output

New relic

Говоря о статистических профайлерах нельзя не сказать о сервисе New Relic, который предназначен не только для профилирования, но так же для мониторинга серверов и веб-приложений (а так же мобильных версий). Желающие могут посмотреть всю информацию на сайте компании, а так же попробовать сервис совершенно бесплатно. Я же не буду рассказывать о нём потому, что лично не пробовал работать с New Relic, а я привык говорить только о тех вещах, которые пробовал сам. Посмотреть скриншоты можно на странице, посвящённой профайлеру.

Достоинства и недостатки:

+ предназначен для продакшена

+ много разного функционала (не только профилирование)

– платный (есть бесплатная версия)

– данные отправляются на чужие серверы

В in the next article, we will move on to the study of event profilers, the main tools for profiling Python. Stay in touch!