Scaling Zabbix

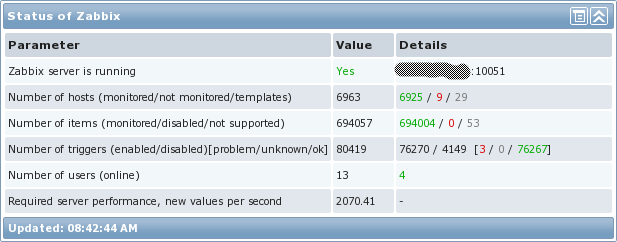

Anyone who uses or plans to use Zabbix at industrial scale has always worried about one question: how much real data can Zabbix “digest” before it finally chokes? Part of my recent work dealt with exactly this issue. I have a huge network of more than 32,000 nodes, all of which may eventually be monitored by Zabbix. The forum has long discussed how to optimize Zabbix for large-scale use, but unfortunately I could not find a complete solution. In this article I want to show how I set up a system capable of processing a really large amount of data. To give you an idea of the scale, here is a screenshot of the system statistics:

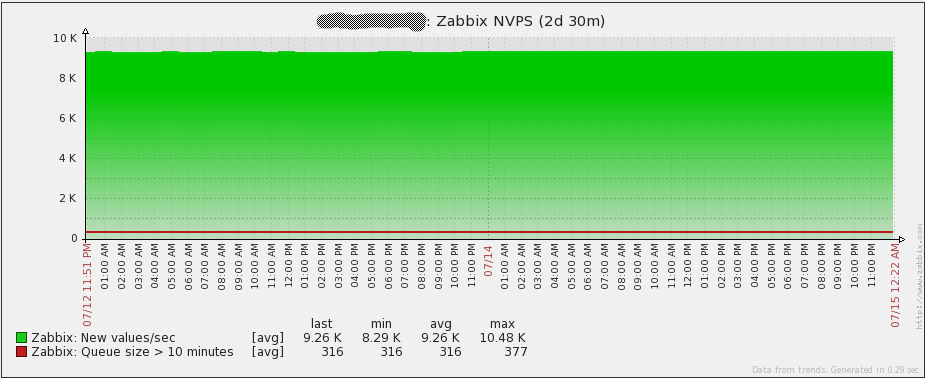

First, I want to explain what the “Required server performance, new values per second (NVPS)” figure really means. It does not reflect how much data actually enters the system per second; it is simply a calculation over all enabled items that takes their polling intervals into account. As a result, Zabbix trapper items are not included in it. We used trapper quite heavily, so let's look at how many values per second the system really receives:

As the graph shows, Zabbix processes about 9260 values per second on average. There were also short bursts of up to 15,000 NVPS, which the server handled without problems. Honestly, this is great!
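The gap between the frontend figure and the real rate can be illustrated with a small calculation. The sketch below assumes the “Required server performance” figure is roughly the sum of 1/interval over all enabled items that have a polling interval; trapper items have no interval, so they contribute nothing even when they carry most of the real traffic. The `Item` record is a hypothetical, simplified stand-in, not the Zabbix database schema.

```python
from dataclasses import dataclass


@dataclass
class Item:
    # Hypothetical, simplified item record; not the real Zabbix schema.
    key: str
    item_type: str        # e.g. "zabbix_agent_active", "trapper"
    interval_s: int       # polling interval in seconds; 0 = not polled (trapper)
    enabled: bool = True


def required_nvps(items) -> float:
    """Estimate 'required server performance' as the sum of 1/interval.

    Disabled items and items without a polling interval (such as trapper
    items) are skipped, so values pushed via trapper never appear here.
    """
    return sum(1.0 / it.interval_s
               for it in items
               if it.enabled and it.interval_s > 0)


if __name__ == "__main__":
    items = [
        Item("system.cpu.load", "zabbix_agent_active", 60),
        Item("net.if.in[eth0]", "zabbix_agent_active", 30),
        Item("app.events", "trapper", 0),
        Item("vfs.fs.size[/,free]", "zabbix_agent", 300, enabled=False),
    ]
    print(f"{required_nvps(items):.3f}")
```

In this example only the two active agent items count (1/60 + 1/30 = 0.05 values per second); the trapper and disabled items are ignored, which is why the frontend figure can sit well below the real incoming rate.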

Architecture

The first thing to understand is the architecture of the monitoring system. Does Zabbix need to be fault tolerant? Will one or two hours of downtime matter? What happens if the database crashes? What drives does the database need, and which RAID level should be configured? What is the bandwidth between the Zabbix server and the Zabbix proxies? What is the maximum latency? How should data be collected: by polling the network (passive monitoring) or by listening to it (active monitoring)?

Let's look at each question in detail. To be honest, I did not take the network into account when deploying the system, which later led to problems that were hard to diagnose. Here is a general outline of the monitoring system architecture:

Hardware

Finding the right hardware is not easy. The main decision I made here was to use a SAN for data storage, since the Zabbix database is very demanding on disk I/O. Simply put, the faster the disks on the database server, the more data Zabbix can process.

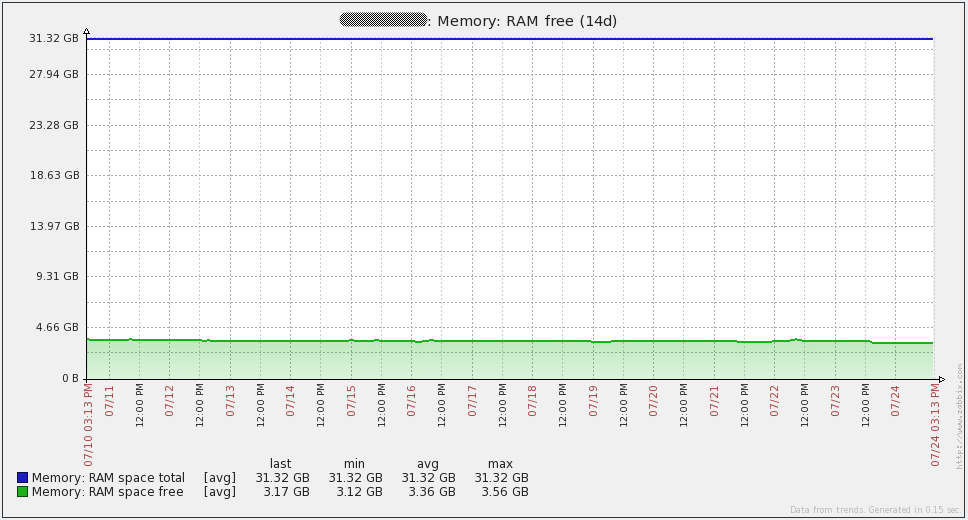

Of course, CPU and memory are also very important for MySQL. A large amount of RAM lets the database keep frequently read data in memory, which naturally speeds up the system. Initially, I planned 64GB of memory for the database server, but so far everything has worked fine with 32GB.

The servers running zabbix_server itself also need fast CPUs so that they can comfortably process hundreds of thousands of triggers. 12GB of memory is enough, since few processes run on the Zabbix server itself (almost all monitoring goes through proxies).

Unlike the DBMS and zabbix_server, Zabbix proxies do not require serious hardware, so I used virtual machines. Mostly active items are collected, so the proxies act as data collection points and do very little polling themselves.

Here is a summary of the hardware used in my system:

| Role | Hardware | CPU | Memory | Storage | OS | Software |
|---|---|---|---|---|---|---|
| Zabbix server | HP ProLiant BL460c Gen8 | 12x Intel Xeon E5-2630 | 16GB | 128GB disk | CentOS 6.2 x64 | Zabbix 2.0.6 |
| Zabbix DB | HP ProLiant BL460c Gen8 | 12x Intel Xeon E5-2630 | 32GB | 2TB SAN-backed storage (4Gbps FC) | CentOS 6.2 x64 | MySQL 5.6.12 |
| Zabbix proxies | VMware virtual machine | 4x vCPU | 8GB | 50GB disk | CentOS 6.2 x64 | Zabbix 2.0.6, MySQL 5.5.18 |
| SAN | Hitachi Unified Storage VM | | | 2x 2TB LUN, tiered storage (with 2TB SSD) | | |

Zabbix server fault tolerance

Let us return to the architectural questions I raised above. In large networks, for obvious reasons, monitoring downtime is a real disaster. However, the Zabbix architecture does not allow more than one instance of the zabbix_server process to run at a time.

So I decided to use Linux HA with Pacemaker and CMAN. For the basic setup, please see the Red Hat 6.4 manual. Unfortunately, the guide has changed since I used it, but the end result should be the same. After the basic setup, I additionally configured:

- Shared IP address
  - On failover, the IP address moves to the server that becomes active
  - Since the shared IP address is always held by the active Zabbix server, this has three advantages:
    - It is always easy to find which server is active
    - All connections from the Zabbix server always come from the same IP (after setting the SourceIP= parameter in zabbix_server.conf)
    - All Zabbix proxies and Zabbix agents simply use the shared IP as their server
- zabbix_server process
  - On failover, zabbix_server is stopped on the old server and started on the new one
- Symlink for cron jobs
  - The symlink points to the directory containing the jobs that must run only on the active Zabbix server. Crontab must reach all of these jobs through this symlink
  - On failover, the symlink is removed on the old server and created on the new one
- crond
  - On failover, crond is stopped on the old server and started on the new active server
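In the setup described here, Pacemaker performs these steps. For illustration only, the symlink part of a failover can be sketched in Python. The paths (`/etc/zabbix/cron.active` and the job directory) are hypothetical, and the crontab entry assumes jobs are invoked only through the link, so on the passive node, where the link is missing, they cannot start.

```python
import os
import tempfile

# Hypothetical crontab entry installed on both nodes; it only works where the link exists:
#   */5 * * * * /etc/zabbix/cron.active/cleanup.sh


def activate(link_path: str, target_dir: str) -> None:
    """Create or repoint link_path -> target_dir on the node becoming active.

    A temporary link is created first and renamed over link_path, so the
    switch is atomic on POSIX file systems.
    """
    tmp_link = link_path + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(target_dir, tmp_link)
    os.replace(tmp_link, link_path)


def deactivate(link_path: str) -> None:
    """Remove the link on the node that is no longer active."""
    if os.path.lexists(link_path):
        os.remove(link_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        jobs = os.path.join(base, "cron-jobs")
        os.mkdir(jobs)
        link = os.path.join(base, "cron.active")
        activate(link, jobs)      # this node became active
        print(os.readlink(link))
        deactivate(link)          # this node lost the active role
        print(os.path.lexists(link))
```

Both functions are idempotent, which matters for cluster resource scripts that may be invoked more than once.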

DBMS fault tolerance

Obviously, there is no point in making the Zabbix server fault tolerant if the database can crash at any time. There are many ways to build a MySQL cluster; I will describe the one I used.

I used Linux HA with Pacemaker and CMAN for the database as well. As it turned out, it has a couple of great features for managing MySQL replication. I use (or rather used; see the "Open issues" section) replication to synchronize data between the active (master) and standby (slave) MySQL servers. First, just as for the Zabbix server nodes, we do the basic cluster configuration. On top of that, I configured:

- Shared IP address
  - On failover, the IP address moves to the server that becomes active
  - Since the shared IP address is always held by the active MySQL server, this has two advantages:
    - It is always easy to find which server is active
    - On failover, nothing needs to change on the Zabbix server to point it at the new active MySQL server
- Shared slave IP address
  - This IP address can be used for read queries, so they are served by the MySQL slave when it is available
  - Either server can hold this additional address, depending on the following:
    - If the slave is available and its replication lag is no more than 60 seconds, the slave holds the address
    - Otherwise, the MySQL master holds the address
- mysqld
  - On failover, the standby MySQL server becomes the active one. If the old server comes back afterwards, it remains a slave of the newly promoted master.

An example configuration file is available here. Remember to edit the Pacemaker parameters enclosed in "<>". You may also need to download a different MySQL resource agent for Pacemaker; the link can be found in the documentation on setting up a MySQL cluster with Pacemaker in the Percona GitHub repository. And just in case that disappears, a copy is here.
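The placement rule for the shared slave IP can be restated as a small function. This is a sketch of the rule only, assuming the lag comes from `Seconds_Behind_Master` in `SHOW SLAVE STATUS` (NULL, here `None`, when replication is not running); in the described setup, Pacemaker and the MySQL resource agent make this decision, not a custom script.

```python
from typing import Optional

MAX_LAG_S = 60  # from the text: the slave may be at most 60 seconds behind


def reader_ip_holder(slave_available: bool, slave_lag_s: Optional[int]) -> str:
    """Return which node should hold the shared read-only ("slave") IP.

    slave_lag_s is the replication delay in seconds, e.g. Seconds_Behind_Master
    from SHOW SLAVE STATUS; None means replication is not running.
    """
    if slave_available and slave_lag_s is not None and slave_lag_s <= MAX_LAG_S:
        return "slave"
    return "master"
```

Read-only clients always connect to the same address; they are served either by a slave that is at most a minute behind or by the master itself.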

Zabbix proxy

If for some reason you have not heard of Zabbix proxies, please look them up in the documentation right away. Proxies let Zabbix distribute the monitoring load across multiple machines; each proxy then sends all the data it has collected to the Zabbix server.

When working with Zabbix proxies, it is important to remember:

- Zabbix proxies can handle very serious amounts of data if configured properly. For example, during testing, one proxy (let's call it Proxy A) processed 1500-1750 NVPS without any problems. And this was a virtual machine with two virtual CPUs, 4GB of RAM, and an SQLite3 database. The proxy was at the same site as the server, so network latency was negligible. Almost everything it collected was active Zabbix agent items.

- Earlier, I mentioned how important network latency is for monitoring. This is especially true for large systems: the amount of data a proxy can send without falling behind depends directly on the network.

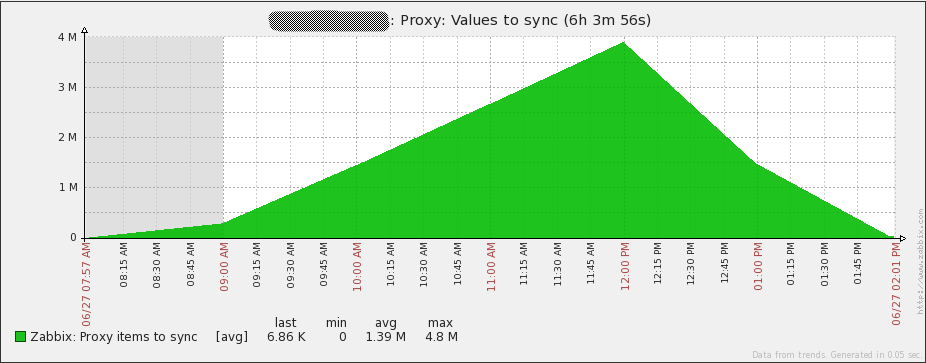

The graph illustrates how problems pile up when network latency is not taken into account: it shows a proxy that cannot keep up.

It is fairly obvious that the queue of data waiting to be sent should not keep growing. The graph refers to another Zabbix proxy (Proxy B), whose hardware is identical to Proxy A's, but which can transmit only 500 NVPS without problems, not 1500 NVPS like Proxy A. The difference is that Proxy B is located in Singapore while the server is in North America, and the latency between the sites is about 230ms. This latency has a serious effect because of the way data is sent. In our case, Proxy B can send only 1000 collected values to the server every 2-3 seconds. From my observations, this is what happens:

- Proxy establishes a connection to the server

- The proxy sends at most 1000 collected item values at a time.

- Proxy closes the connection

This cycle repeats as many times as needed. With high latency, this approach has two serious problems:

- Establishing the connection is slow: in my case it takes 0.25 seconds. Phew!

- Since the connection is closed after every 1000 values, the TCP connection never lasts long enough to use all of the available bandwidth of the link.
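The observed numbers can be turned into a rough capacity check. A minimal sketch, assuming each batch of at most 1000 values costs a fixed wall-clock time (2-3 seconds for Proxy B, as observed above) and ignoring everything else: the proxy's maximum rate is the batch size divided by the time per batch, and any load above that rate accumulates in the queue. The 1500 NVPS incoming rate in the example is a hypothetical load, chosen to match what Proxy A handled.

```python
def max_proxy_nvps(batch_size: int, seconds_per_batch: float) -> float:
    """Upper bound on values/s a proxy can ship when every connection
    carries at most one batch and each batch costs seconds_per_batch."""
    return batch_size / seconds_per_batch


def backlog_after(seconds: float, incoming_nvps: float, drain_nvps: float,
                  start: float = 0.0) -> float:
    """Queue length after `seconds` of steady load (never below zero)."""
    return max(0.0, start + (incoming_nvps - drain_nvps) * seconds)


if __name__ == "__main__":
    for t in (2.0, 3.0):
        print(f"1000 values per {t:.0f} s -> {max_proxy_nvps(1000, t):.0f} NVPS")
    # Hypothetical: Proxy B receiving as much data as Proxy A handled.
    print(backlog_after(3600, incoming_nvps=1500, drain_nvps=500))
```

At 1000 values per 2-3 seconds, Proxy B tops out at roughly 333-500 NVPS, in line with its observed 500 NVPS ceiling; at a hypothetical 1500 NVPS input, its backlog would grow by 1000 values every second, or 3.6 million per hour.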

Database performance

High database performance is key to the monitoring system, since absolutely all collected information ends up there. Given the large number of write operations, disk performance is the first bottleneck you run into. I was lucky enough to have SSDs at my disposal, but even that does not guarantee a fast database. Here is an example:

- Initially, I ran MySQL 5.5.18. At first there were no visible performance problems, but above 700-750 NVPS MySQL started using 100% CPU and the system literally “froze”. My attempts to fix this by tuning the configuration file, enabling large pages, or partitioning got me nowhere. My wife suggested a better solution: first upgrade to MySQL 5.6 and then figure it out. To my surprise, a simple upgrade solved all the performance problems I could not overcome in 5.5.18. Just in case, here is a copy of my.cnf.

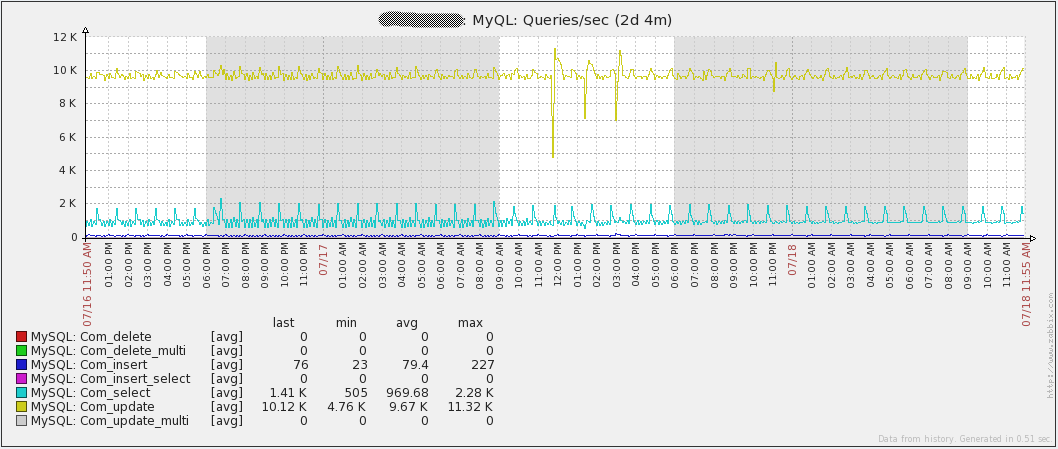

The graph shows the number of database queries per second.

Note that the largest number of queries is “Com_update”. The reason is that every received value causes an UPDATE on the “items” table. The database also handles mostly writes, so the MySQL query cache will not help. In fact, it can even hurt performance, since cached queries constantly have to be invalidated.

The Zabbix housekeeper can be another performance problem. In large networks, I strongly recommend turning it off by setting DisableHousekeeping=1 in the server config file. Of course, without the housekeeper, old data (items, events, actions) will not be deleted from the database. Instead, removal can be handled through partitioning.

However, one limitation of MySQL 5.6.12 is that partitioned tables cannot have foreign keys, and foreign keys are used almost everywhere in the Zabbix database, except in the history tables, which are exactly the ones we need to partition. Partitioning gives us two advantages:

- Data in the history tables, split by day/week/month/etc., can be stored in separate files, so old data can later be deleted without any impact on the rest of the database. It also makes it easy to see how much data was collected over a given period.

- When data is deleted from a table, InnoDB does not return the disk space to the operating system but keeps it for new data. As a result, deleting data in InnoDB does not free disk space. With partitioning this is not a problem: space is freed simply by dropping old partitions.

Partitioning the Zabbix database has already been covered on Habr.
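A daily partitioning scheme for a history table can be generated with a few lines of Python. This is a minimal sketch, assuming daily partitions with UTC day boundaries on the integer `clock` column (Unix time) of the Zabbix history tables and a hypothetical `pYYYY_MM_DD` naming convention. Keep in mind that MySQL requires the partitioning column to be part of every unique key on the table, that separate per-partition files require `innodb_file_per_table`, and that inserts beyond the last defined boundary fail, so new partitions must be added ahead of time.

```python
from datetime import date, datetime, timedelta, timezone


def _name(day: date) -> str:
    # Hypothetical naming convention: p2013_07_01, p2013_07_02, ...
    return f"p{day:%Y_%m_%d}"


def daily_partitions_sql(table: str, first_day: date, days: int) -> str:
    """Build ALTER TABLE ... PARTITION BY RANGE (clock) with one partition per day.

    Each partition holds rows with clock < the Unix time of the next UTC midnight.
    """
    parts = []
    for i in range(days):
        day = first_day + timedelta(days=i)
        next_midnight = datetime.combine(day + timedelta(days=1),
                                         datetime.min.time(), tzinfo=timezone.utc)
        parts.append(f"PARTITION {_name(day)} "
                     f"VALUES LESS THAN ({int(next_midnight.timestamp())})")
    return (f"ALTER TABLE {table} PARTITION BY RANGE (clock) (\n  "
            + ",\n  ".join(parts) + "\n);")


def drop_partition_sql(table: str, day: date) -> str:
    """Dropping a partition removes a whole day at once, unlike a DELETE."""
    return f"ALTER TABLE {table} DROP PARTITION {_name(day)};"


if __name__ == "__main__":
    print(daily_partitions_sql("history", date(2013, 7, 1), 3))
    print(drop_partition_sql("history", date(2013, 7, 1)))
```

In the example, the first partition gets the boundary 1372723200, which is 2013-07-02 00:00 UTC.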

Collect or listen

There are two methods of data collection in Zabbix, active and passive. With passive monitoring, the Zabbix server itself polls the Zabbix agents; with active monitoring, it waits for the agents to connect to the server themselves. Zabbix trapper also counts as active monitoring, since sending is initiated on the host side.

The choice of one or the other as the main method can make a significant performance difference. Passive monitoring requires processes on the Zabbix server that regularly send a request to the Zabbix agent and wait for a response; in some cases the wait can stretch to several seconds. Multiply that by at least a thousand servers, and it becomes clear that polling can take a lot of time.

With active monitoring there are no polling processes: the server simply waits for the agents to connect and request the list of items they need to monitor.

The agent then collects the items at the intervals it received from the server and sends the values, opening a connection only when it has something to send. This removes the request that passive monitoring has to make before any data arrives. Conclusion: active monitoring speeds up data collection, which is exactly what a large network needs.
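The cost of passive polling can be estimated with Little's law: the average number of busy pollers equals the request rate multiplied by the average wait per request. The figures in the example (1000 hosts, 10 items each, a 60-second interval, 0.5 s or 3 s average wait) are hypothetical, chosen only to show how quickly slow agents multiply the number of poller processes needed.

```python
def busy_pollers(hosts: int, items_per_host: int, interval_s: float,
                 avg_wait_s: float) -> float:
    """Average number of poller processes busy at once (Little's law: L = rate * wait).

    rate is the passive check rate in checks per second; wait is the average
    time a poller spends waiting for an agent's reply.
    """
    checks_per_second = hosts * items_per_host / interval_s
    return checks_per_second * avg_wait_s


if __name__ == "__main__":
    for wait in (0.5, 3.0):
        print(f"wait {wait} s -> ~{busy_pollers(1000, 10, 60, wait):.0f} busy pollers")
```

With a 0.5 s average wait, about 83 pollers are busy at any moment; if agents take 3 s to answer, the same load needs around 500. Active checks avoid this because the server does not keep a process waiting on each request.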

Monitoring Zabbix itself

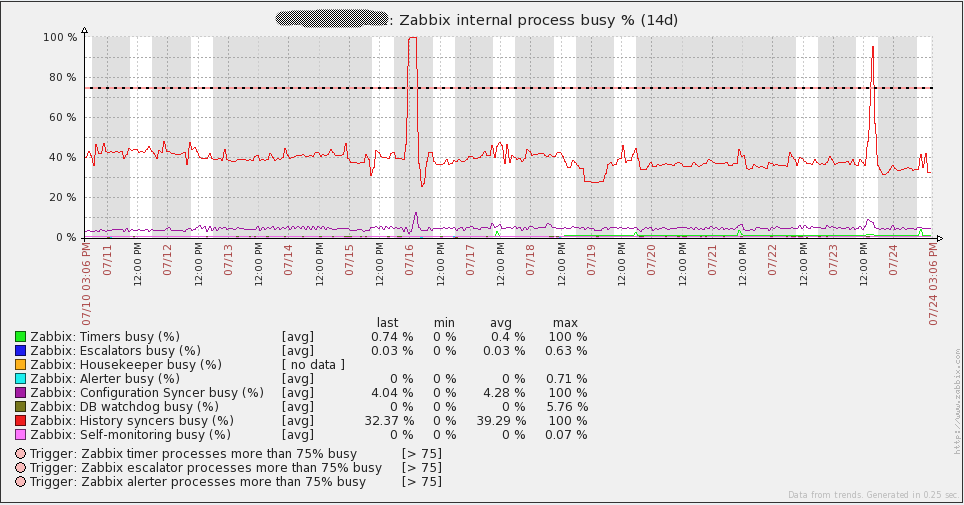

Without monitoring Zabbix itself, running a large system effectively is simply not possible: it is critically important to know where the bottleneck will appear when the system stops accepting new data. The existing internal items for monitoring Zabbix are described here. In Zabbix 2.x, they are conveniently bundled into the Zabbix server monitoring template that ships “out of the box”. Use it!

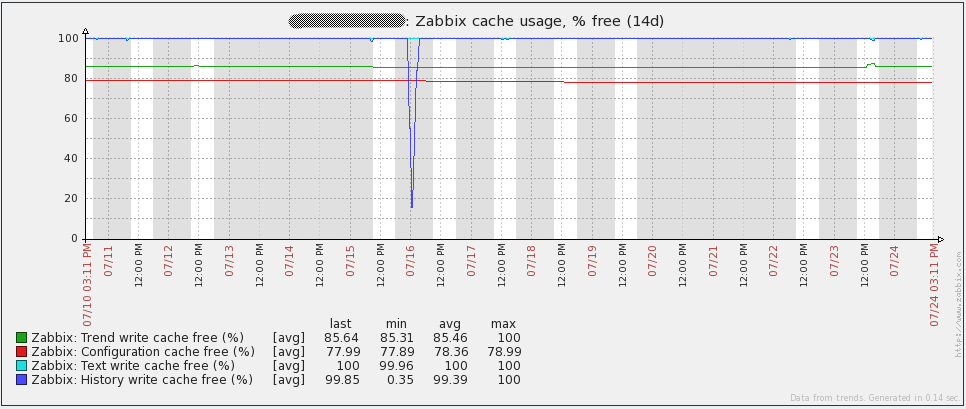

One useful metric is the free space in the history write cache (HistoryCacheSize in the server config file). It should always stay close to 100%. If the cache fills up, Zabbix cannot write incoming data to the database fast enough.

Unfortunately, Zabbix proxy does not support this item. In addition, Zabbix has no item showing how much data is waiting to be sent to the Zabbix server. However, such an item is easy to create yourself with an SQL query against the proxy database. The query

SELECT ((SELECT MAX(proxy_history.id) FROM proxy_history)-nextid) FROM ids WHERE field_name='history_lastid'

returns the required number. If the Zabbix proxy uses SQLite3, simply add the following command as a UserParameter in the config file of the Zabbix agent installed on the proxy machine. Then set up a trigger that notifies you when the proxy is not keeping up:

UserParameter=zabbix.proxy.items.sync.remaining,/usr/bin/sqlite3 /path/to/the/sqlite/database "SELECT ((SELECT MAX(proxy_history.id) FROM proxy_history)-nextid) FROM ids WHERE field_name='history_lastid'" 2>&1
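Before wiring the query into the agent, it can be useful to run it by hand. The following minimal Python sketch uses the standard `sqlite3` module; `db_path` is assumed to be the SQLite file configured as `DBName` in zabbix_proxy.conf, and an empty result (no `ids` row or empty `proxy_history`) is treated as a backlog of 0.

```python
import sqlite3

# Same query as in the UserParameter above.
BACKLOG_SQL = (
    "SELECT ((SELECT MAX(proxy_history.id) FROM proxy_history)-nextid) "
    "FROM ids WHERE field_name='history_lastid'"
)


def proxy_backlog(db_path: str) -> int:
    """Return how many collected values the proxy has not yet sent to the server."""
    con = sqlite3.connect(db_path)
    try:
        row = con.execute(BACKLOG_SQL).fetchone()
    finally:
        con.close()
    # No matching ids row, or MAX() over an empty table, yields no usable number.
    if row is None or row[0] is None:
        return 0
    return int(row[0])
```

With the agent item in place, the trigger below fires once the backlog has stayed above 100,000 values for a full 10 minutes.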

{Hostname:zabbix.proxy.items.sync.remaining.min(10m)}>100000

Total statistics

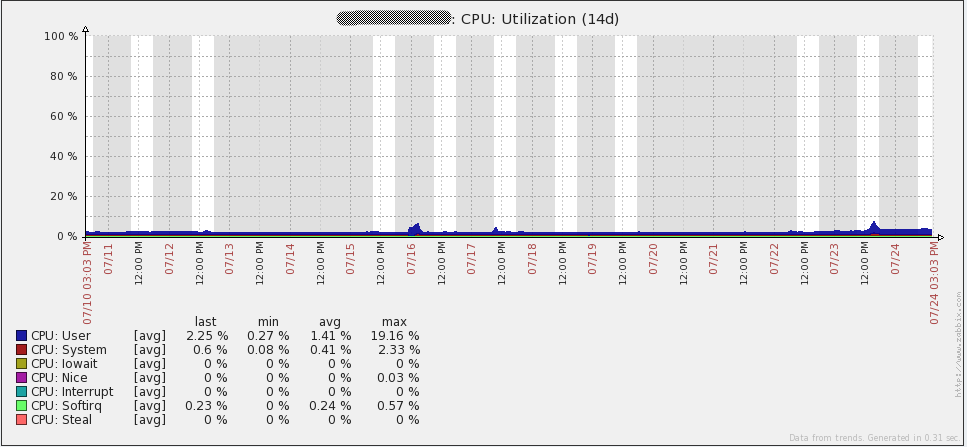

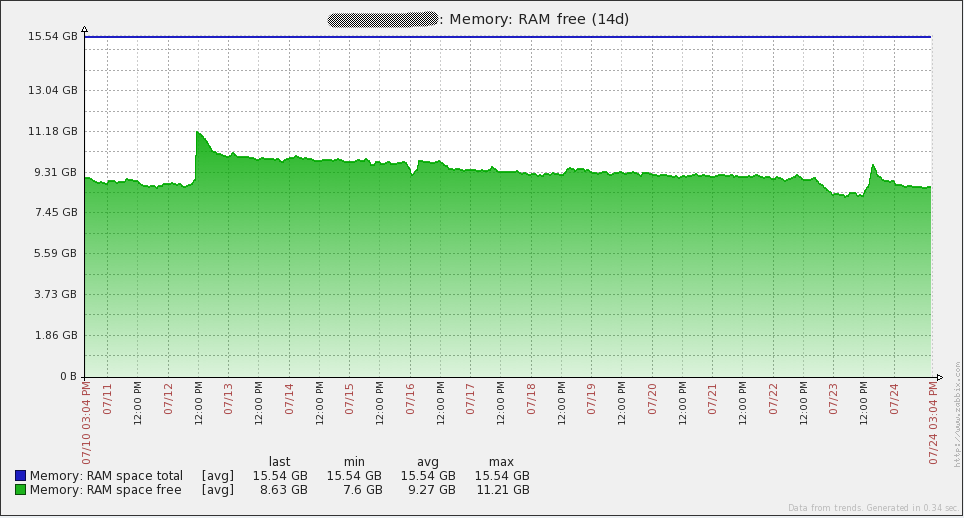

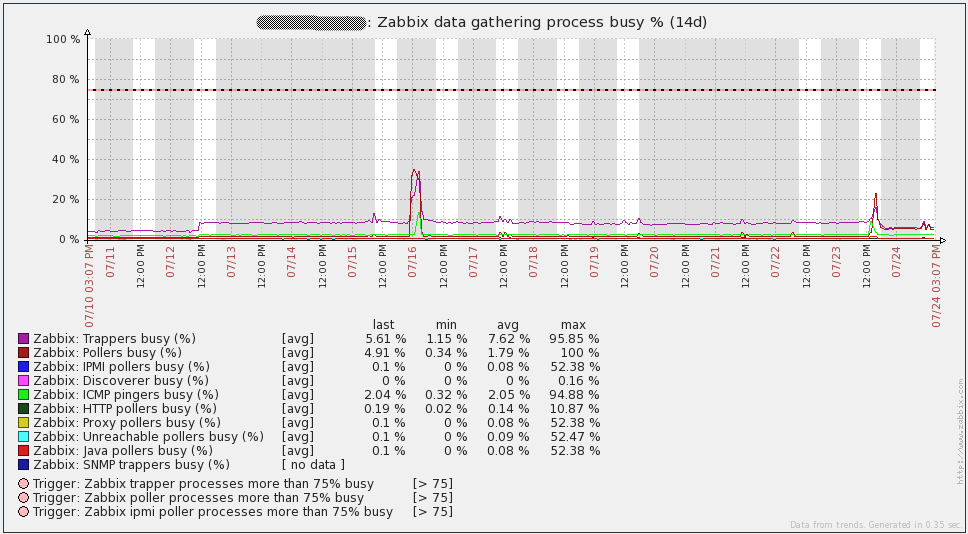

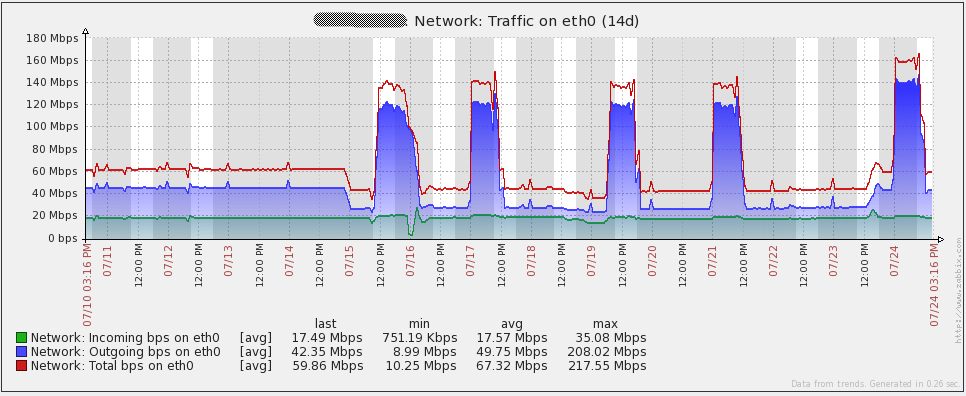

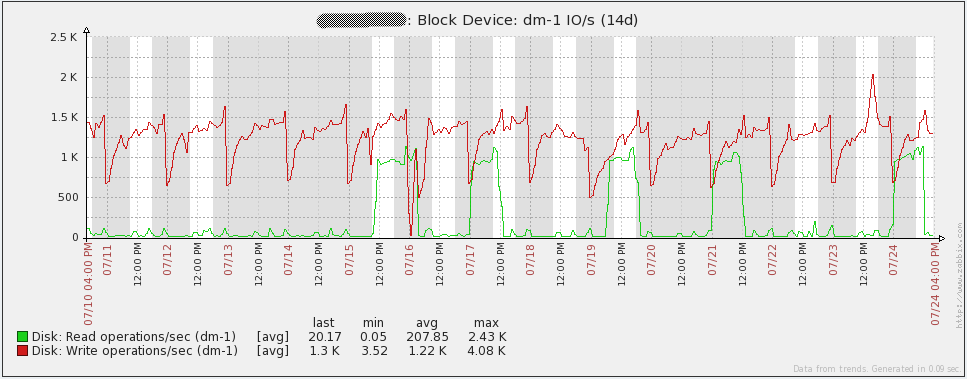

Finally, here are the system load graphs. I should say right away that I do not know what happened on July 16: I had to recreate all the proxy databases (SQLite at the time) to solve the problem. Since then I have moved all proxies to MySQL, and the problem has not recurred. The remaining “irregularities” in the graphs coincide with load testing. Overall, the graphs show that the hardware has plenty of headroom.

And here are the graphs from the database server. The daily traffic increase coincides with the database dump (mysqldump). The July 16 dip in the query graph (qps) is related to the same problem described above.

Management

In total, the system uses 2 servers for the Zabbix server, 2 servers for MySQL, 16 virtual machines for Zabbix proxies, and thousands of monitored servers with Zabbix agents. With so many hosts, making changes by hand was out of the question. The solution was a Git repository accessible from all servers, holding all the configuration files, scripts, and everything else that needs to be distributed. I then wrote a script that is called through a UserParameter in the agent. When the script runs, the host connects to the Git repository, downloads all the necessary files and updates, and then restarts the Zabbix agent, proxy, or server if the config files have changed. Updating is no harder than running zabbix_get!
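The update script itself is not published, so the following is only a sketch of its "restart only if a config file changed" step, in Python. It assumes the script snapshots the relevant config files, runs a `git pull` (shown only as a comment; the repository path is hypothetical), and compares SHA-256 digests afterwards.

```python
import hashlib
from pathlib import Path

# Hypothetical flow inside the update script:
#   before = snapshot(CONFIG_FILES)
#   subprocess.run(["git", "-C", "/opt/zabbix-config", "pull", "--ff-only"], check=True)
#   ...copy the updated files into place...
#   if changed(before, snapshot(CONFIG_FILES)):
#       restart the Zabbix agent / proxy / server


def snapshot(paths):
    """Map each file path to the SHA-256 of its content (None if the file is missing)."""
    digests = {}
    for p in map(Path, paths):
        digests[str(p)] = (hashlib.sha256(p.read_bytes()).hexdigest()
                           if p.exists() else None)
    return digests


def changed(before, after):
    """Return the sorted list of paths whose digest differs between two snapshots."""
    return sorted(k for k in before.keys() | after.keys()
                  if before.get(k) != after.get(k))
```

Comparing content digests rather than modification times avoids needless restarts when Git rewrites a file without changing it.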

Creating new hosts manually through the web interface is not an option either with this many servers. Our company has a CMDB that contains information about all servers and the services they provide. So another magic script collects information from the CMDB every hour and compares it with what is in Zabbix. Based on this comparison, the script adds, removes, enables, and disables hosts, creates host groups, and links templates to hosts. All that is left to do manually is implementing a new type of trigger or item. Unfortunately, these scripts are tightly tied to our systems, so I cannot share them.
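Since those scripts are tied to internal systems, here is only a generic sketch of the comparison step in Python. It assumes the CMDB can be reduced to a mapping of hostname to "should be monitored" and Zabbix to a mapping of hostname to "currently enabled"; both mappings and the decision rules (for example, removing hosts that no longer exist in the CMDB) are assumptions. Applying the plan would go through the Zabbix API, for example its `host.create`, `host.update`, and `host.delete` methods.

```python
from dataclasses import dataclass, field


@dataclass
class SyncPlan:
    add: list = field(default_factory=list)
    remove: list = field(default_factory=list)
    enable: list = field(default_factory=list)
    disable: list = field(default_factory=list)


def plan_sync(cmdb: dict, zabbix: dict) -> SyncPlan:
    """Compare the desired state (CMDB) with the current state (Zabbix).

    cmdb:   hostname -> True if the host should be monitored, False if not
    zabbix: hostname -> True if the host is currently enabled in Zabbix
    """
    plan = SyncPlan()
    for host, wanted in sorted(cmdb.items()):
        if host not in zabbix:
            if wanted:
                plan.add.append(host)
        elif wanted and not zabbix[host]:
            plan.enable.append(host)
        elif not wanted and zabbix[host]:
            plan.disable.append(host)
    # Hosts Zabbix knows about but the CMDB no longer lists.
    plan.remove = sorted(h for h in zabbix if h not in cmdb)
    return plan
```

Keeping the comparison separate from the API calls makes it easy to log or review the hourly plan before anything is changed.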

Open issues

Despite all my efforts, one significant problem remains unsolved: when the system reaches 8000-9000 NVPS, the standby MySQL server can no longer keep up with the master, so in practice there is no fault tolerance.

I have some ideas on how to solve this, but have not had time to implement them yet:

- Use Linux-HA with DRBD to replicate the database partition.

- Replicate the LUN on the SAN to another LUN.

- Percona XtraDB Cluster. It is not yet available for 5.6, so this option will have to wait (as I wrote above, MySQL 5.5 had performance problems).

References

- Zip file with all downloads from the article

- Large environment forum thread

- Zabbix server configuration documentation

- Zabbix proxy distributed monitoring documentation

- Zabbix active / passive item documentation

- Zabbix internal item documentation

- Zabbix blogpost on internal items

- Pacemaker / CMAN quickstart guide

- MySQL Pacemaker configuration guide

- MySQL Large Pages

- Partitioning the Zabbix database