Today: the first match between OpenAI and Dota 2 professionals (the humans won). How the bot works

[UPD 2] The paiN Gaming team beat OpenAI Five. The match lasted 53 minutes and ended with a kill score of 45-41 in favor of the bots. You can watch the game recording on Twitch here, beginning at 7:38:00.

Tonight, August 22, before the start of the next playoff day of The International, the first demonstration match between professional players and the OpenAI Five bot will be held as part of the show program. Information about the matches appeared on the official Dota 2 website, in the schedule of The International playoff games. In total, OpenAI will play three matches against pro players over three days.

What makes this event significant is that a year ago the bot crushed Daniel Ishutin in a 1x1 solo mid Shadow Fiend mirror match, and a few weeks ago it beat a “hodgepodge” team of commentators and former pro players.

This time the creation of a company sponsored by Elon Musk and other prominent IT businessmen will meet a more serious opponent: The International gathers the best teams in the world every year, so the bots will not have it easy. The development team has not said whether all the old restrictions on picks and mechanics that applied in the game against humans at the beginning of the month will remain in force, but they are worth recalling.

So, the old rules are as follows:

- a pool of 18 heroes in Random Draft mode (Axe, Crystal Maiden, Death Prophet, Earthshaker, Gyrocopter, Lich, Lion, Necrophos, Queen of Pain, Razor, Riki, Shadow Fiend, Slark, Sniper, Sven, Tidehunter, Viper, or Witch Doctor);

- no Divine Rapier or Bottle;

- no controlled units or illusions;

- a match with five couriers (they cannot be used to scout or tank);

- no use of Scan.

The comments on our previous publication on this topic saw a lot of argument about how neural networks are trained. This time we have brought some visual material on how the OpenAI bot works and what that looks like from a human point of view.

The developers stated that, thanks to serious computing power, a huge volume of game records and the ability to run training in many parallel threads, OpenAI simulated up to 180 years of continuous Dota 2 play every day. Obviously, the learning efficiency of this AI is many orders of magnitude lower than that of even not particularly “smart” animals, not to mention dogs or the higher primates, to which humans belong.

For training, the OpenAI team used its own toolkit called Gym (repository on GitHub, official documentation). This “gym” is compatible with any numerical computation library, for example TensorFlow or Theano. Training neural networks within Gym follows the classic agent-environment loop: at each step the agent chooses an action, the environment executes it, and the agent receives a new observation together with a reward.
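To make the loop concrete, here is a minimal sketch that does not use Gym itself. `ParityEnv` is a hypothetical toy environment invented for this illustration; it only imitates the `reset()` / `step()` shape of the classic Gym interface, where `step()` returns an observation, a reward, a "done" flag and an info dictionary.

```python
import random


class ParityEnv:
    """Hypothetical toy environment with a Gym-style reset()/step() interface.

    The observation is a digit 0-9; the agent is rewarded for naming its parity.
    """

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps_taken = 0
        self.state = 0

    def reset(self):
        self.steps_taken = 0
        self.state = self.rng.randint(0, 9)
        return self.state  # the first observation

    def step(self, action):
        # Reward the action taken for the state the agent has just observed.
        reward = 1.0 if action == self.state % 2 else 0.0
        self.steps_taken += 1
        self.state = self.rng.randint(0, 9)
        done = self.steps_taken >= self.episode_length
        return self.state, reward, done, {}


def run_episode(env, policy):
    """Run one episode of the agent-environment loop and return the total reward."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)                     # the agent acts
        observation, reward, done, _ = env.step(action)  # the environment responds
        total_reward += reward                           # the result is evaluated
    return total_reward


# A policy that always answers correctly collects the maximum reward.
print(run_episode(ParityEnv(), lambda obs: obs % 2))  # 10.0
```

In a real setup the policy would be a neural network and the environment a game, but the shape of the loop stays the same.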

The developers claim that anyone can use Gym to train their own neural network to play classic Atari 2600 titles or other relatively simple games. Obviously, the speed of learning depends directly on the amount of resources devoted to it. As an example, the OpenAI developers taught a neural network to play Montezuma's Revenge.

Of greatest interest to us are the second and fourth stages: the action and the evaluation of its result (the reward for the action). In the context of Dota 2 the number of possible variations is off the charts, and actions the bot initially rates as “correct” can lead to a loss in the long run.
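One common way to express "good now, bad later" in reinforcement learning is a discounted sum of rewards. The sketch below is only an illustration: the discount factor `gamma` and the reward sequences are made-up values, not figures from the OpenAI project.

```python
def discounted_return(rewards, gamma):
    """Sum rewards, weighting a reward received k steps later by gamma ** k."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical reward sequences for two lines of play.
greedy = [1, 0, 0, -5]   # a small gain now, a large loss later
patient = [0, 0, 0, 2]   # nothing now, a moderate gain later

print(discounted_return(greedy, gamma=0.9))
print(discounted_return(patient, gamma=0.9))
```

With these made-up numbers the action that looks "correct" at the first step ends up with the lower overall return.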

How the OpenAI team taught the AI to play Dota 2: the hardware

The development team took the task of teaching OpenAI to play Dota 2 more than seriously. You can read the full official report on the project's blog here; we will give the main excerpts on the technical side and the implementation, without the marketing and other niceties.

Commenters on the last publication were most interested in the computing power the OpenAI neural network consumes for training. Obviously a couple of Ryzens would not be enough here, especially given the 180 years of play simulated in one real day. At the same time, a Dota 2 bot is not a bot for a Quake-style shooter, as user yea very clearly noted in reply to one of the skeptics:

It seems to me that you simply underestimate the size of the tactical space in DotA because you are not very familiar with the game itself. There is no chance of making bots without neural networks while staying within even remotely reasonable computing resources. Seriously. This is not Quake, where you can be completely clueless about tactics and compensate with superhumanly fast railgun headshots. Bots that are ideal in reaction time and mechanical skill but cannot play as a team of five and do not “feel the map” are doomed against any skilled human players.

In addition, DotA is a game of incomplete information, and this dramatically complicates the task. The problem of “what to do when I see an enemy” is much simpler than the problem of “what to do when no enemies are visible”, not only for machines but also for people.

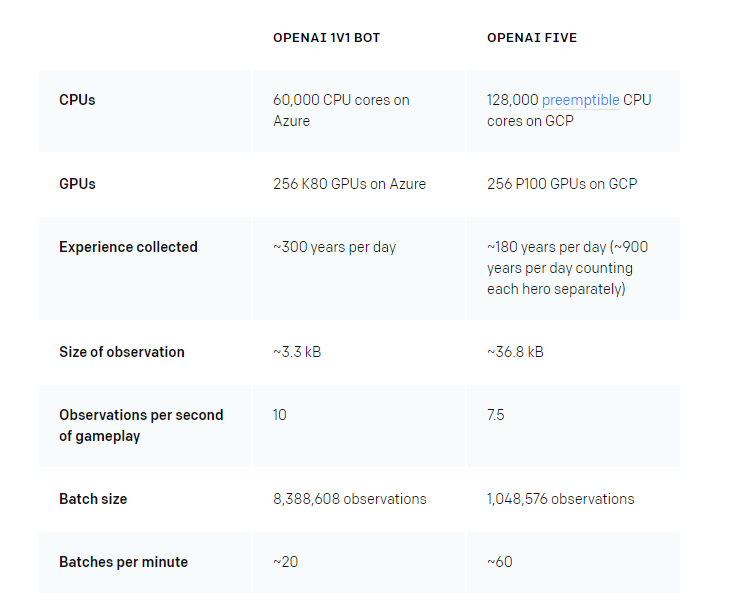

The OpenAI developers understood this well, so thousands of virtual machines were used simultaneously to train the bots. The project's official blog gives these figures for training the 1x1 Solo Mid bot, which was able to beat Daniel Ishutin in mid with some restrictions, and for training a full team for 5x5 play.

That is not a misplaced decimal point. Training OpenAI in 5x5 mode constantly uses 128 thousand Google Cloud processor cores. But that is not all. Since the way the bot “sees” the game is involved in its training (which we will talk about later), another 256 NVIDIA P100 GPUs (Tesla accelerators) appear in this monstrous configuration.
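To get a feel for these numbers, here is some back-of-the-envelope arithmetic. It is a rough sketch that assumes the 180 years of play per day quoted earlier and the 128 thousand cores refer to the same 5x5 setup, and that every core does nothing but simulate games; neither assumption is confirmed by the article.

```python
DAYS_PER_YEAR = 365.25


def speedup_over_real_time(simulated_years_per_day):
    """How many days of play are simulated in one real day."""
    return simulated_years_per_day * DAYS_PER_YEAR


def real_time_games_per_core(simulated_years_per_day, cpu_cores):
    """Average number of real-time-speed games each core would have to sustain."""
    return speedup_over_real_time(simulated_years_per_day) / cpu_cores


speedup = speedup_over_real_time(180)
per_core = real_time_games_per_core(180, 128_000)
print(f"{speedup:.0f}x real time, about {per_core:.2f} real-time games per core")
```

Under these assumptions the whole cluster runs tens of thousands of times faster than real time, while each individual core handles roughly half a game at normal speed.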

According to the official NVIDIA brochure, the P100 has the following characteristics:

The official price of one Tesla P100 12 GB PCI-E is about $5,800; the Tesla P100 SXM2 16 GB starts at $9,400. OpenAI uses the higher-end SXM2 model. The video cards are needed not to render “graphics” on the virtual machines, but to process the data that constantly arrives from all the running games. To handle this stream, the team had to deploy a whole system of nodes in which the Tesla P100s work. The video cards process the incoming data in order to produce a result averaged over all the games and compare it with OpenAI's past results.

This capacity lets the neural network be trained at about 60 batches per minute, each containing about 1.04 million of the agent-environment cycles mentioned earlier.
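The throughput implied by these two figures is easy to work out. The sketch below uses only the numbers quoted above (60 batches per minute, about 1.04 million cycles per batch, 256 GPUs); splitting the load evenly across the GPUs is a simplifying assumption.

```python
def cycles_per_minute(batches_per_minute, cycles_per_batch):
    """Total agent-environment cycles processed per minute."""
    return batches_per_minute * cycles_per_batch


def cycles_per_second_per_gpu(batches_per_minute, cycles_per_batch, gpus):
    """Average cycles per second handled by one GPU, assuming an even split."""
    return cycles_per_minute(batches_per_minute, cycles_per_batch) / 60 / gpus


total = cycles_per_minute(60, 1_040_000)
per_gpu = cycles_per_second_per_gpu(60, 1_040_000, 256)
print(f"{total:,} cycles per minute, {per_gpu:,.1f} per second per GPU")
```

That works out to about 62.4 million cycles per minute for the whole system.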

How OpenAI sees the game

Money is dust, if you have it, of course. Even the roughest estimates of what a single day of training OpenAI costs come as a mild shock, and the heat generated on the Google Cloud servers would be enough to heat a small city. But it is much more interesting how OpenAI “sees” the game.

Clearly the bots do not need graphics rendering, but this much hardware is not used for nothing. In its actions the bot relies on the standard Valve API for bots, through which the neural network receives a stream of data about the surrounding space. The API is needed to run that data through a single-layer LSTM network of 1024 units and obtain short-term decisions that are consistent with the neural network's long-term strategies.

The LSTM network determines the bot's task priorities “here and now”, and the most advantageous action is chosen in accordance with the neural network's long-term behavior model. For example, bots readily concentrate on last-hitting creeps to earn gold and experience, which fits the model of gaining a long-term benefit in the form of items and a subsequent advantage in the game.
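For readers unfamiliar with LSTMs, here is a minimal sketch of a single step of one LSTM layer in plain Python. It is a textbook formulation for illustration only, not OpenAI's code: the weights are random placeholders, biases are omitted for brevity, and the tiny sizes in the usage lines are arbitrary (the article's network would correspond to `hidden_size=1024`).

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """One LSTM layer; weights are random placeholders, not trained values."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        width = input_size + hidden_size  # gates read the input and the previous hidden state

        def matrix():
            return [[rng.uniform(-0.1, 0.1) for _ in range(width)]
                    for _ in range(hidden_size)]

        self.w_input, self.w_forget = matrix(), matrix()
        self.w_output, self.w_candidate = matrix(), matrix()

    def step(self, x, h, c):
        """Consume one observation x and return the new hidden and cell state."""
        z = list(x) + list(h)

        def affine(weights):
            return [sum(w * v for w, v in zip(row, z)) for row in weights]

        i = [sigmoid(v) for v in affine(self.w_input)]        # what to write
        f = [sigmoid(v) for v in affine(self.w_forget)]       # what to keep
        o = [sigmoid(v) for v in affine(self.w_output)]       # what to expose
        g = [math.tanh(v) for v in affine(self.w_candidate)]  # candidate update
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new


# The state (h, c) is carried from frame to frame, which gives the network memory.
cell = LSTMCell(input_size=3, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
for observation in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.0]):
    h, c = cell.step(observation, h, c)
```

The cell state `c` is what lets short-term decisions stay consistent with what the network has seen earlier in the game.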

According to the developers, all games are simulated with events on the map updated at 30 FPS. The OpenAI neural network constantly runs each frame through the LSTM and makes further decisions based on the result. The bot also has its own priorities: every possible interaction with the environment is evaluated within specially selected areas, 800x800 squares divided into 64 cells of 100x100 (measured in in-game distance units, so each square is an 8x8 grid). Here is how the bot partially “sees” the game on one specific frame:

The full interactive visualization, with the ability to switch actions, square size estimates and other settings, is available on the developers' official blog in the Model structure section.
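The 800x800 square split into 64 cells of 100x100 can be sketched as a simple coordinate mapping. This is an illustration under an assumption: the square is taken to be centered on a reference point, and offsets are measured from that center. The function names are hypothetical and are not part of any Valve or OpenAI API.

```python
GRID_SIZE = 800                          # side of the square, in in-game units
CELL_SIZE = 100                          # side of one cell
CELLS_PER_SIDE = GRID_SIZE // CELL_SIZE  # 8, so 8 * 8 = 64 cells


def offset_to_cell(dx, dy):
    """Map an offset from the square's center to (column, row), or None if outside."""
    half = GRID_SIZE / 2
    if not (-half <= dx < half and -half <= dy < half):
        return None
    return int((dx + half) // CELL_SIZE), int((dy + half) // CELL_SIZE)


def cell_to_offset(column, row):
    """Return the center of a cell as an offset from the square's center."""
    half = GRID_SIZE / 2
    return (column + 0.5) * CELL_SIZE - half, (row + 0.5) * CELL_SIZE - half


print(offset_to_cell(0, 0))   # (4, 4)
print(cell_to_offset(0, 0))   # (-350.0, -350.0)
```

Choosing one of 64 cells instead of an arbitrary point turns a continuous targeting decision into a discrete one, which is easier for a network to output.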

And this is what the visualization of the LSTM network at work looks like as it predicts game events in real time:

In addition to the LSTM and its assessment of the situation “here and now”, the OpenAI bot constantly uses a “forecasting” grid and sets its own priorities. This is how it looks to a human:

The green square is the area of highest priority and the bot's current action (attack, movement and so on). The light green square has a lower priority, but the bot can switch to this sector at any time. The two gray squares are zones of potential activity if nothing changes.

You can see how the bot “sees” the game and makes decisions based on these four zones in the video below:

It is worth noting that the bot's priority areas are not always close to the character model. When the bot moves across the map, all four squares easily shift several screens away from its current position. In other words, OpenAI simultaneously analyzes the entire game space, not just one screen, for available and worthwhile actions.

OpenAI constantly plays against itself. In 80% of the games the bot plays against its current version, and in the remaining 20% against its past versions with their already developed tactics and strategies. This approach lets the neural network learn from its own mistakes, finding vulnerable patterns in its own behavior while reinforcing the successful ones.
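The 80/20 split can be sketched as a simple opponent sampler. This assumes the 20% refers to games against frozen earlier versions of the bot; the function name, the version labels and the sampling scheme are hypothetical and only illustrate the idea.

```python
import random


def pick_opponent(current, past_versions, rng, past_fraction=0.2):
    """Choose an opponent for the next self-play game.

    With probability past_fraction, play a frozen past version (if any exist);
    otherwise play the current version of the bot.
    """
    if past_versions and rng.random() < past_fraction:
        return rng.choice(past_versions)
    return current


rng = random.Random(0)
past = ["v1", "v2", "v3"]  # hypothetical snapshots of earlier versions
games = [pick_opponent("current", past, rng) for _ in range(10_000)]
share_vs_past = sum(opponent != "current" for opponent in games) / len(games)
print(f"share of games against past versions: {share_vs_past:.2f}")
```

Keeping old versions in the mix is one way to stop a bot from forgetting how to beat strategies it has already learned to counter.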

Tomorrow: professional players versus the machine

In place of a conclusion, let us return to tomorrow's confrontation between professional players and OpenAI.

The details are still unknown, but we can confidently say that the neural network will have a hard time. Unlike in its past matches, OpenAI will face the best of the best, and the room for maneuver and teamwork in a show match will allow the humans to reach their full potential. The 1x1 Solo Mid format is, of course, extremely entertaining, but it does not reveal the whole essence of the game and is extremely unforgiving of the micro-mistakes that people often make.

The question is how seriously the professionals will take this confrontation. If any additional information appears, the publication will be updated.
