Measuring search quality in Mail.Ru Mail

In January, in a previous post, I talked about full-text search in Mail.Ru.

But how do you know whether a new search is really better? In this post I will explain how to measure search quality.

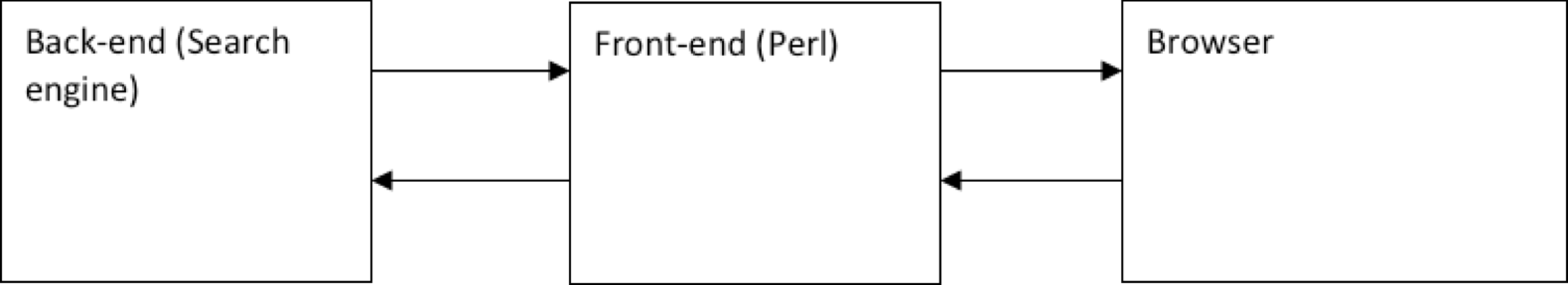

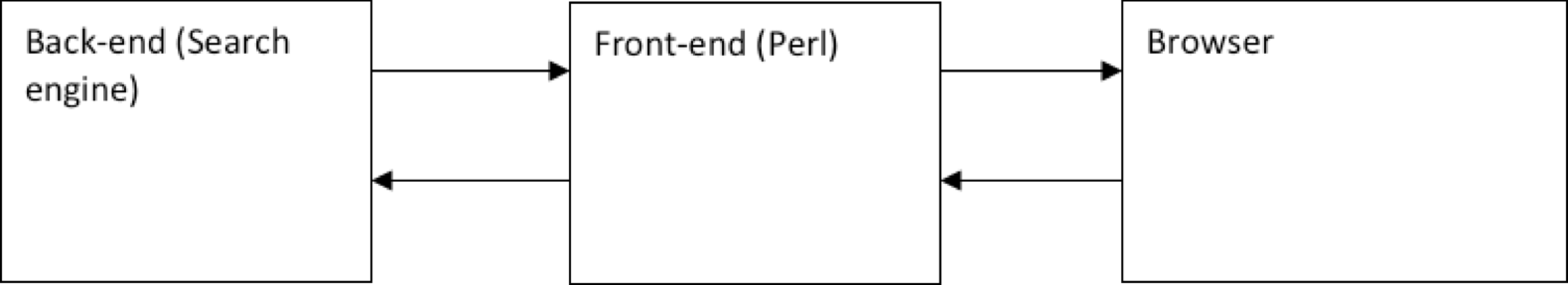

To begin, consider the general execution scheme of a search query.

The main parameter of the search quality is its speed . It is most convenient to measure it on the front end side like this:

Data is written to the log, and a special daemon every 5 minutes collects the next batch of logs from all the front-ends and builds the next segment on the chart. It should be noted that you should have two graphs with a search speed indicator.

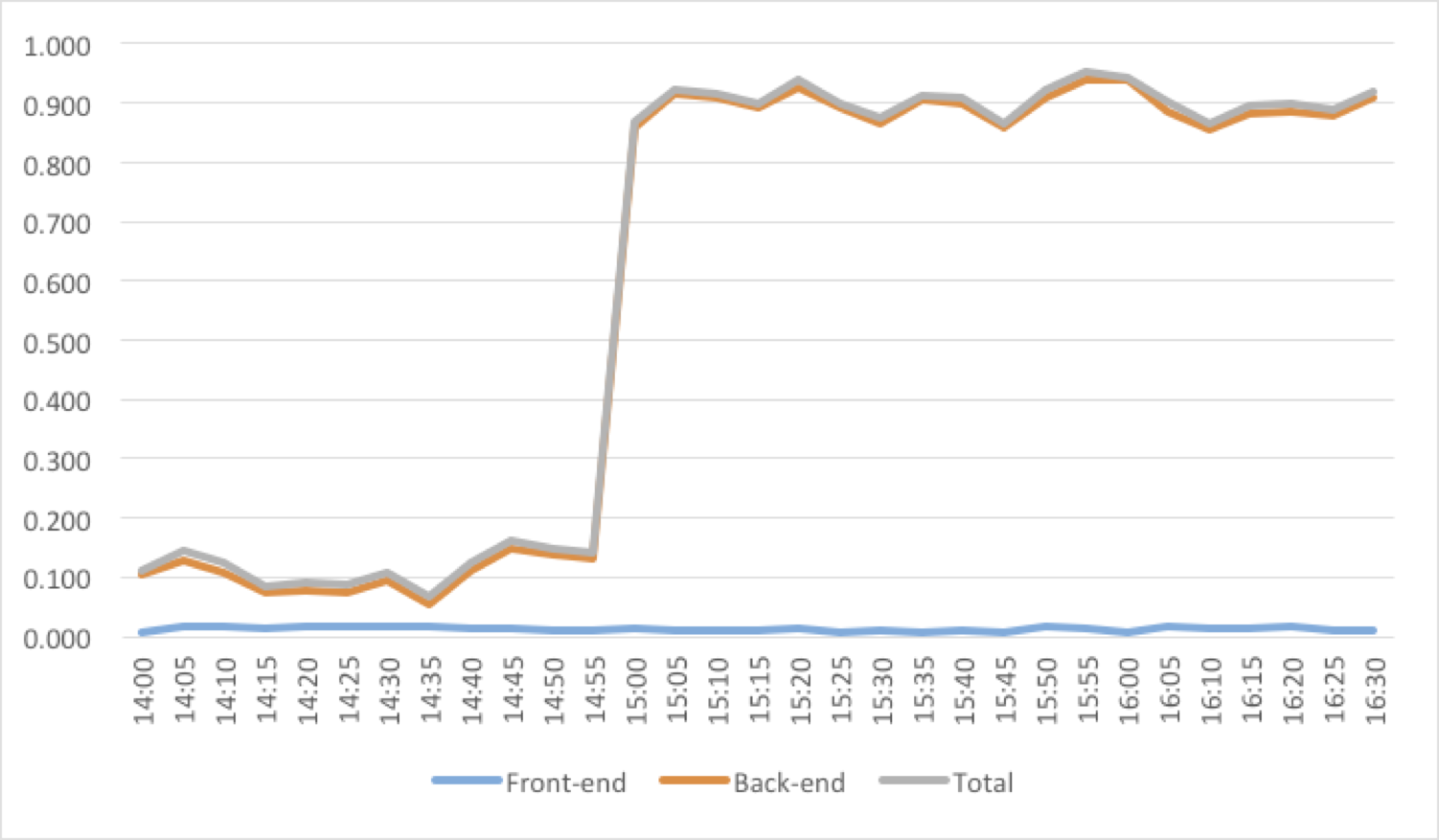

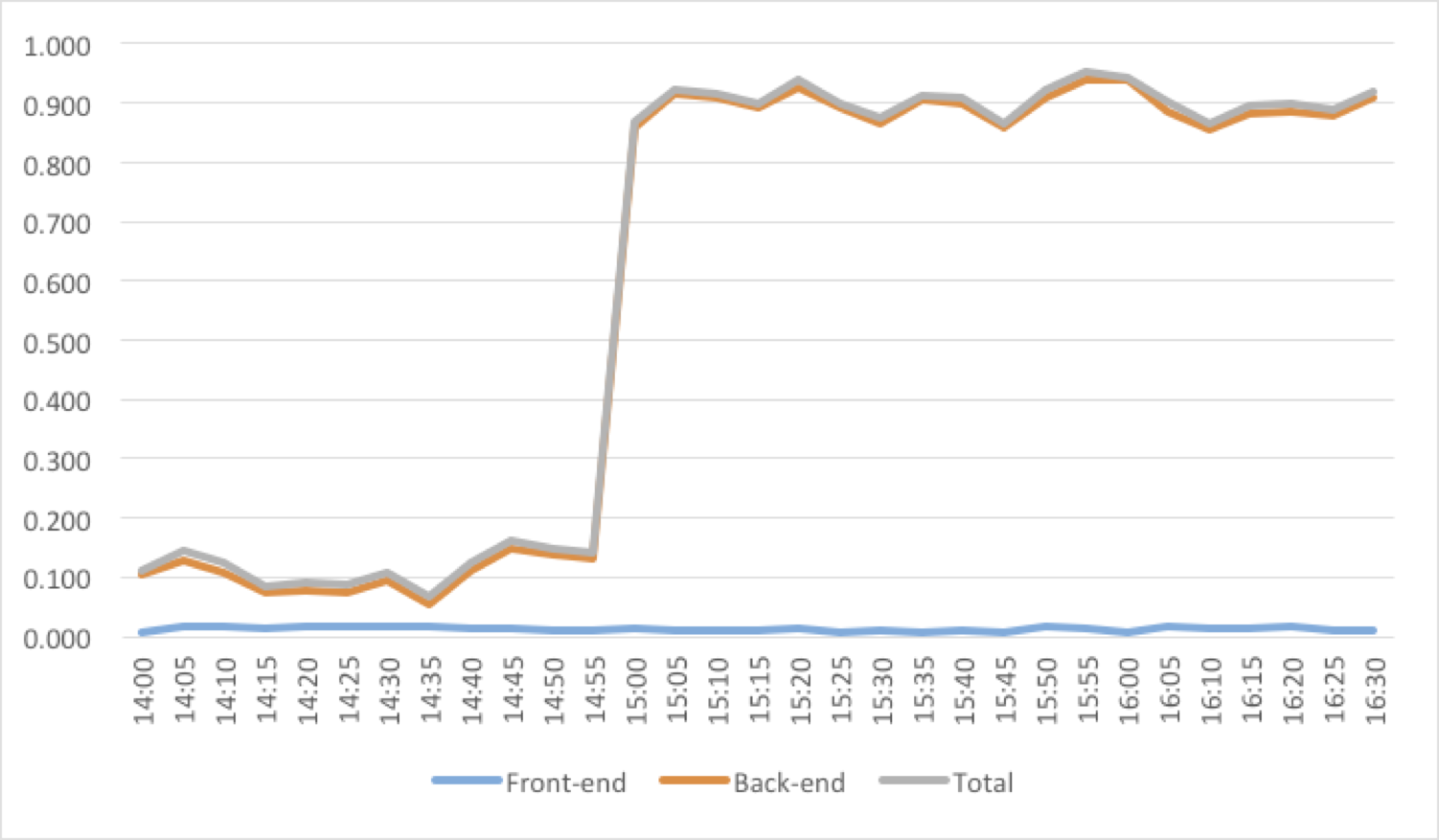

Firstly, a “five-minute” chart showing the current state. It can be useful for diagnosing “acute” conditions, for example, if after the next update the search suddenly began to work not as expected, but much more slowly.

Figure 1. Oops! It seems that they rolled out something wrong ...

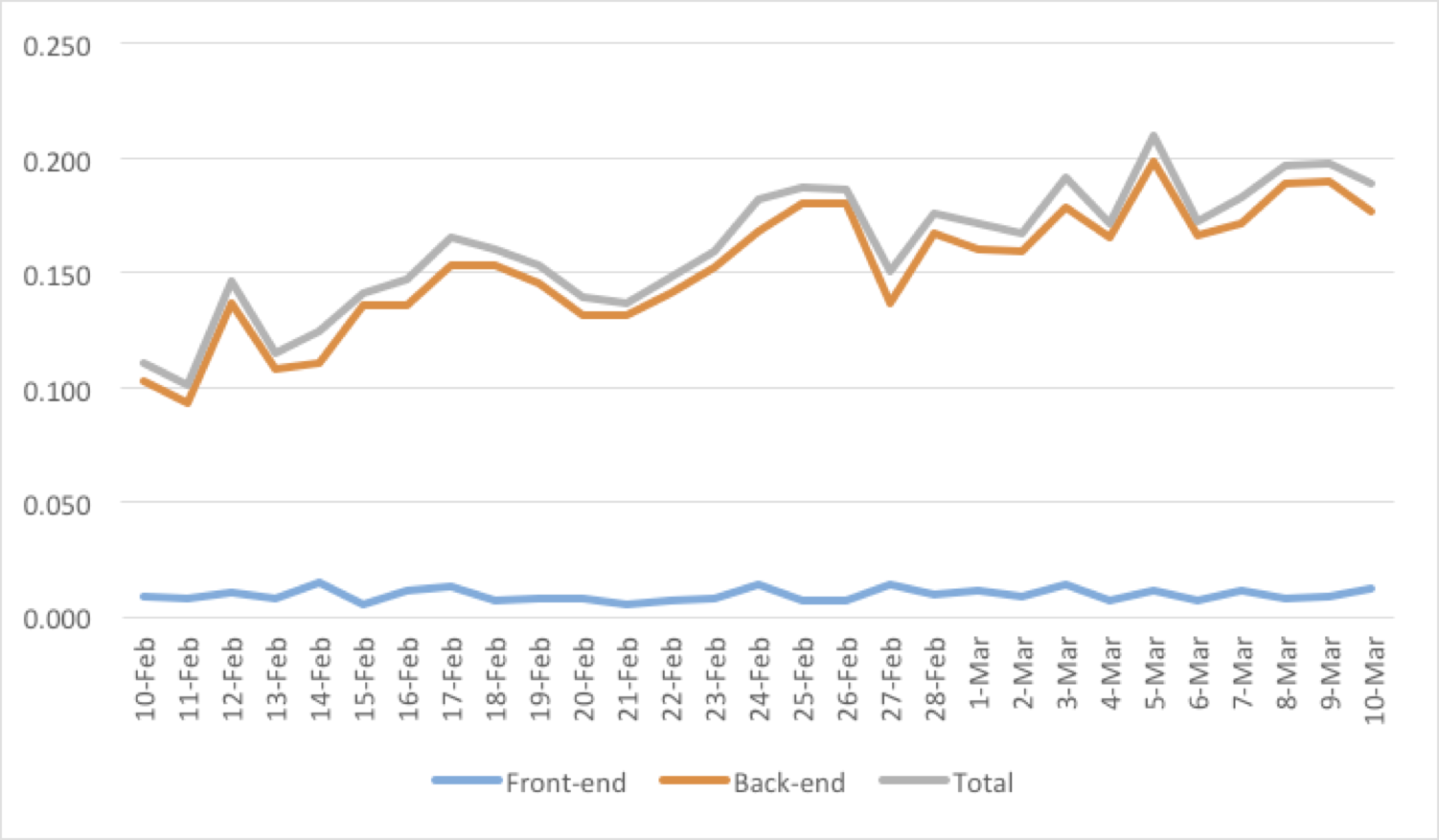

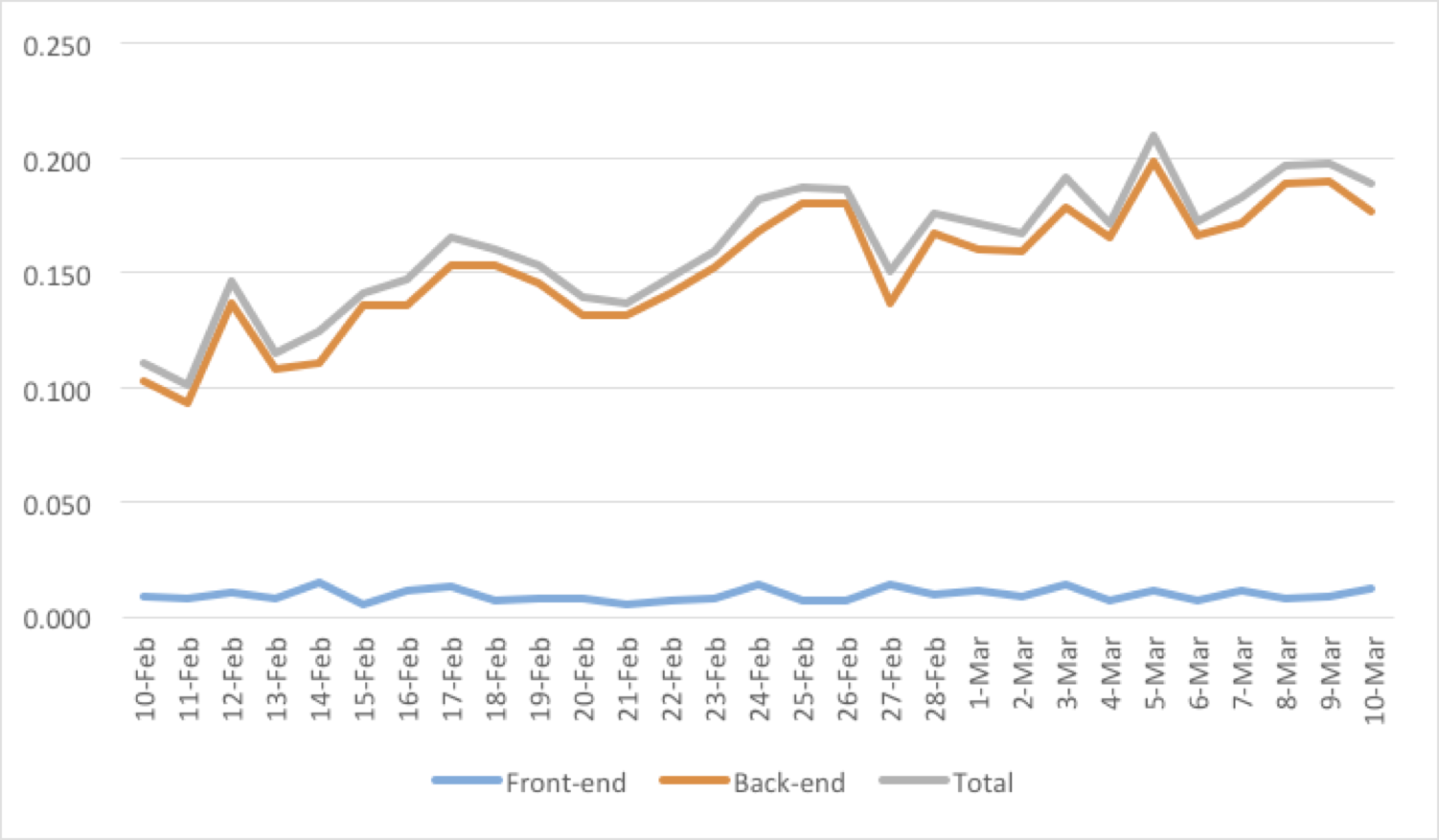

Secondly, we also need a “daily” chart, which allows us to detect deeper and less noticeable at first glance problems. For example, if the rebuilding of indexes is too rare, a certain “debt” will constantly accumulate - a certain amount of non-indexed data for which only a sequential search is possible. This “debt” will be visible on the graph as slow degradation in speed over several days or weeks (Figure 2). Such behavior of the chart is a signal that it would be worth revising the work policy of the indexing planner in the direction of indexing more often.

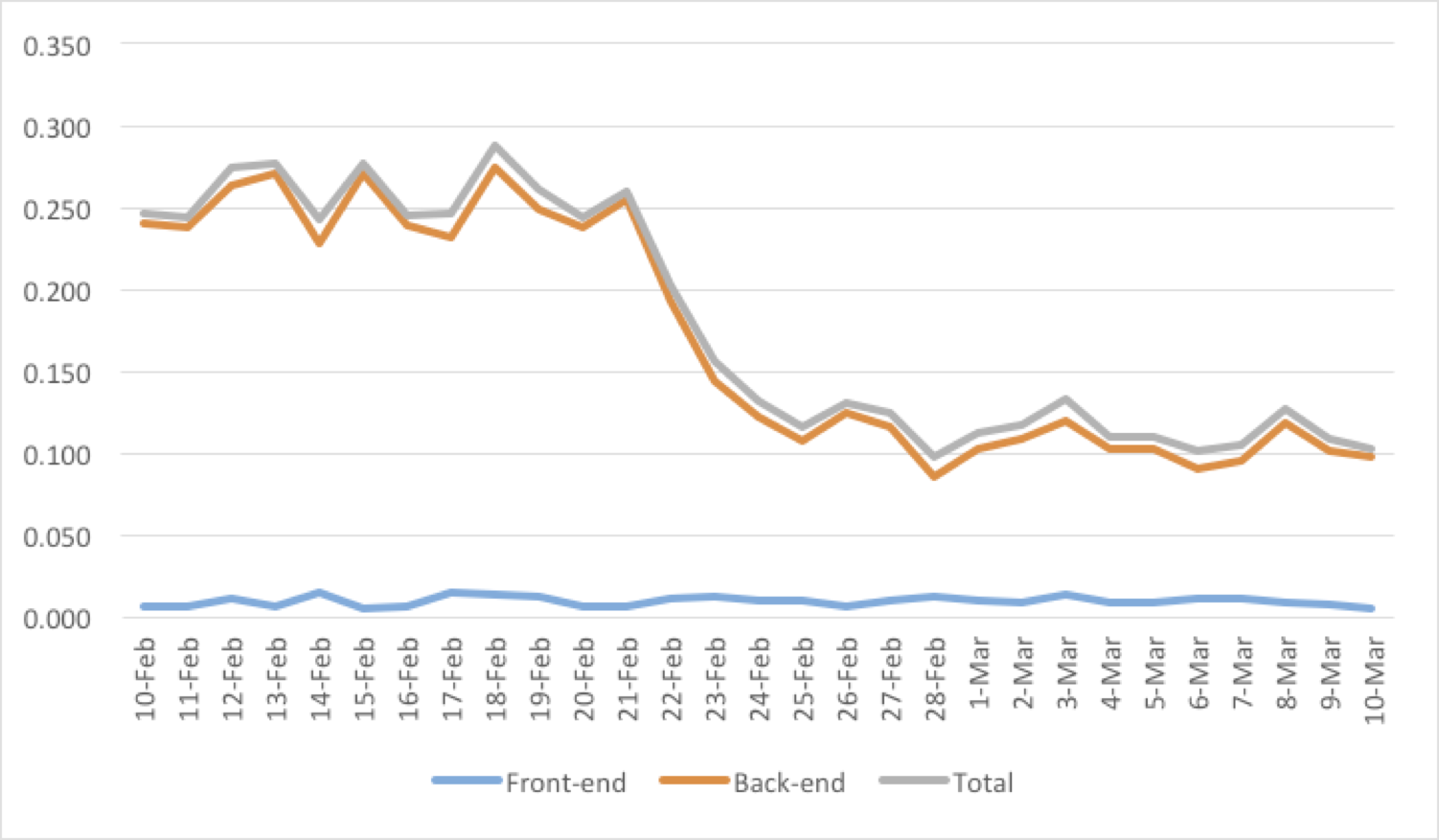

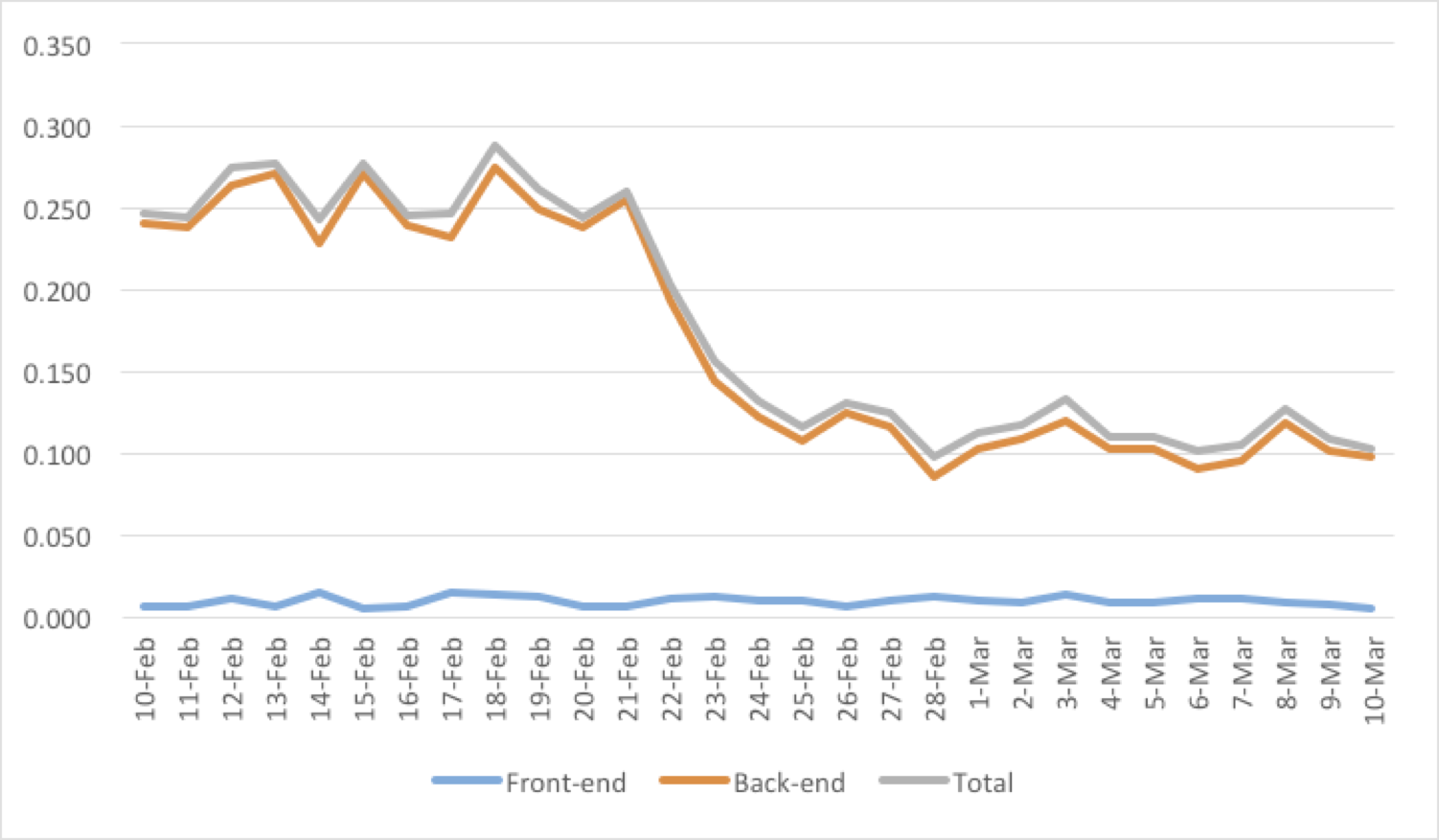

Also, this chart turned out to be very useful for us when switching from the old search engine to the new one. He made it possible to clearly answer the question: "Has it become better with the new search?" Full reindexing of the server usually takes several days, and the daily chart shows how, during this time, the search was gradually accelerated up to a difference of 2.5 times (Figure 3).

Figure 2. Saving up the “debt” by indexation. An occasion to reflect ...

Figure 3. We pass to a new search engine.

The next thing worth noting is the graph of the number of search queries . This graph in itself, without analyzing other data, is difficult to investigate. For example, a decrease in the number of search queries may indicate two opposite things:

Figure 4. In this case, they began to more often find the search on the first try.

An important parameter of the search quality is the number of queries with an empty result . On average, it is 30%, most of these requests are a constantly open page "all unread" for many users (waiting for a new letter). A sharp increase in this indicator may indicate a fatal search error (if this indicator exceeds a certain threshold value, it makes sense to send an SMS notification to developers and system administrators, since such errors must be fixed in a minimal amount of time, see Figure 5).

Figure 5. Some problem appeared ... Time to send SMS.

The number of clicks on letters from the search directly indicates the quality of the selection of results (Figure 6). Due to the fact that there are snippets (small excerpts from the text of the letter with highlighting the words from the request), users rarely click on letters that do not meet their expectations. Typically, quality degradation occurs slowly, and you need to watch the dynamics for a long period of time after the layout of the next version of the search.

Figure 6. Search quality has improved - the number of conversions has increased.

It also makes sense to measure the number of "qualifying" search queries. With a high degree of probability, a search query executed from an open page of search results can be considered a refinement request.. This conclusion is due to the assumption that after the user tried to find something, but the search results did not suit him, he decided to repeat the search, but somehow "differently", with a different request. Such requests are on average 1-3%. The growth of this indicator indicates that the search has ceased to find something from what was previously successful (Figure 7).

Figure 7. Extra 3% of repeated requests.

Developing the topic of “qualifying” search queries, it is worth noting that it makes sense to measure the number of “turning over” of search results as well. The growth of this value may serve as evidence, for example, that the resulting sample is too large. Such requests are on average 1-2%. Most of them are due to the natural need to sometimes look for old letters, flipping “deeply” in time. The growth of this indicator indicates that something "superfluous" fell into the results and pushed back (to the next pages) relevant letters. The fall of this indicator, on the contrary, is definitely a good sign, because users begin to find what they are looking for on the first page of search results (Figure 8).

Figure 8. Now everything you need on the first page, and flipping through less often.

Finally, we consider such a parameter as the average time a user clicks on a letterafter receiving the search results. This graph indicates both the quality of the search sample (its accuracy) and the quality of highlighting snippets. The faster the user finds the letter in the selection with his eyes, the faster he clicks on it (Figure 9). The speed of finding the result increases both the search that works correctly (correct sorting, the absence of "unnecessary" results in the selection) and the quality of the "highlighting" of words from the query in snippets (the better it is, the faster the eye will "catch" on the desired result).

Figure 9. The snippet coloring was reworked, and the average transition time fell.

Assessing the quality of the search, it makes sense to analyze all these indicators together. Similar graphs exist for sadjests (search hints), the quality of the search itself directly depends on the quality of them (3% of search queries are done with the help of sadjests). Due to the fact that often the search quality degrades only after a certain time, all graphs are built on two scales - five-minute and daily. Thus, it becomes possible to diagnose and solve possible problems with the search until they become noticeable to a wide range of users.

If you have questions, ideas or experience in solving problems in the field of QA search, let's discuss in the comments.

Dmitry Kalugin-Balashov

programmer Mail.Ru Mail team

PS: All the graphs were made by me personally in Excel based on real events.

However, how do you determine if a new search is really better? About how to measure the quality of the search, I will tell in this post.

To begin, let's look at the general flow of a search query.

The main indicator of search quality is its speed. It is most convenient to measure it on the front-end side, like this:

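```perl
use Time::HiRes ();    # high-resolution wall-clock time

# Wall-clock time of one search request, measured on the front end
my $mailsearch_start = Time::HiRes::time();
my $answer           = MailSearch::Query($request);
my $mailsearch_end   = Time::HiRes::time();
# The elapsed time ($mailsearch_end - $mailsearch_start, in seconds) is what gets logged
```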

The result is written to a log. Every 5 minutes a dedicated daemon collects the latest batch of logs from all front-ends and adds the next segment to the chart. Note that you should keep two charts of search speed.
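Here is a minimal sketch of the aggregation step. It rests on assumptions the post does not spell out. Each log line is taken to have the hypothetical form `<unix_timestamp> <elapsed_seconds>`, and the real daemon's code and log format are unknown. The same batch is bucketed twice, into 5-minute and daily windows. The average search time per bucket then becomes one point on the corresponding chart.

```perl
use strict;
use warnings;

# Hypothetical log line format: "<unix_timestamp> <elapsed_seconds>", one search per line.
# Returns a hash ref mapping each bucket's start time to the average elapsed time in it.
sub average_by_bucket {
    my ($lines, $bucket_seconds) = @_;
    my (%sum, %count);
    for my $line (@$lines) {
        my ($ts, $elapsed) = split ' ', $line;
        next unless defined $elapsed;                  # skip malformed lines
        my $bucket = $ts - $ts % $bucket_seconds;      # start of the time window
        $sum{$bucket}   += $elapsed;
        $count{$bucket} += 1;
    }
    my %avg;
    $avg{$_} = $sum{$_} / $count{$_} for keys %sum;
    return \%avg;
}

# Made-up sample lines standing in for one batch collected from the front-ends.
my @log = ('1000 0.2', '1100 0.4', '1300 0.9', '90000 1.5');

my $five_min = average_by_bucket(\@log, 300);             # 5-minute chart points
my $daily    = average_by_bucket(\@log, 24 * 60 * 60);    # daily chart points

printf "%d: %.2f s\n", $_, $five_min->{$_} for sort { $a <=> $b } keys %$five_min;
```

Because the buckets are computed from raw Unix time, the daily windows here are aligned to UTC midnight.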

The first is a “five-minute” chart that shows the current state. It helps diagnose “acute” problems, for example when search suddenly becomes much slower than expected after the next update (Figure 1).

Figure 1. Oops! Looks like something broken got rolled out...

The second is a “daily” chart, which reveals deeper problems that are not noticeable at first glance. For example, if indexes are rebuilt too rarely, a “debt” keeps accumulating: a volume of unindexed data that can only be searched sequentially. This “debt” shows up on the chart as a slow decline in speed over several days or weeks (Figure 2). Such a trend is a signal to revise the indexing scheduler's policy towards indexing more often.
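The post spots this degradation by eye on the daily chart. If you want an automatic hint, one option is to fit a least-squares line through the last few daily averages and flag a persistently positive slope. This is our assumption and is not described in the post. The sample data and the 10 ms/day threshold below are made up.

```perl
use strict;
use warnings;

# Least-squares slope of the daily averages against the day index 0, 1, 2, ...
# A positive slope means the daily average search time is creeping up.
sub daily_trend_slope {
    my @y = @_;
    my $n = scalar @y;
    return 0 if $n < 2;
    my $mean_x = ($n - 1) / 2;
    my $sum_y  = 0;
    $sum_y += $_ for @y;
    my $mean_y = $sum_y / $n;
    my ($num, $den) = (0, 0);
    for my $x (0 .. $n - 1) {
        $num += ($x - $mean_x) * ($y[$x] - $mean_y);
        $den += ($x - $mean_x) ** 2;
    }
    return $num / $den;
}

# Made-up daily average search times (seconds) for the last seven days.
my @daily_avg = (0.50, 0.52, 0.55, 0.56, 0.60, 0.61, 0.65);
my $slope = daily_trend_slope(@daily_avg);

# Hypothetical alert level: more than 10 ms of extra latency per day.
my $max_slope = 0.010;
printf "possible indexing debt: +%.1f ms/day\n", 1000 * $slope if $slope > $max_slope;
```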

This chart also proved very useful when we switched from the old search engine to the new one. It gave a clear answer to the question “Is the new search better?” A full reindex of a server usually takes several days. The daily chart shows how search gradually sped up over that time, until it was 2.5 times faster (Figure 3).

Figure 2. Indexing “debt” piling up. Food for thought...

Figure 3. Switching to the new search engine.

The next chart worth watching is the number of search queries. On its own, without other data, it is hard to interpret. For example, a drop in the number of search queries can mean two opposite things:

- Users more often find what they are looking for on the first attempt (good; see Figure 4)

- Users have stopped finding what they are looking for at all (bad)

Figure 4. In this case, users began finding what they were looking for on the first try more often.

Another important indicator of search quality is the number of queries with an empty result. On average it is 30%. Most of these come from the “all unread” page, which many users keep open while waiting for a new letter. A sharp rise in this indicator may point to a critical search failure. If it exceeds a set threshold, it makes sense to send an SMS alert to developers and system administrators, because such failures must be fixed as quickly as possible (see Figure 5).
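The alert rule can be sketched as a threshold check on each 5-minute window. The roughly 30% baseline comes from the post. The 45% threshold and the `send_sms` stub are hypothetical placeholders for a real paging setup.

```perl
use strict;
use warnings;

# Hypothetical alert threshold. The post gives ~30% as the normal level,
# so the threshold has to sit well above it to avoid false alarms.
my $empty_ratio_threshold = 0.45;

# Stub standing in for a real SMS gateway (hypothetical).
sub send_sms {
    my ($text) = @_;
    print "SMS: $text\n";
    return 1;
}

# Share of queries in one window that returned no results.
sub empty_ratio {
    my ($total, $empty) = @_;
    return $total ? $empty / $total : 0;
}

# Checks one 5-minute window; returns 1 if an alert was sent.
sub check_window {
    my ($total, $empty) = @_;
    my $ratio = empty_ratio($total, $empty);
    return 0 if $ratio <= $empty_ratio_threshold;
    send_sms(sprintf('empty search results: %.0f%% of queries', 100 * $ratio));
    return 1;
}

check_window(10_000, 3_100);    # ~31% empty: the usual level, no alert
check_window(10_000, 9_200);    # 92% empty: sends an alert
```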

Figure 5. A problem has appeared... Time to send an SMS.

The number of clicks on letters from search results directly reflects how well the results are selected (Figure 6). Results come with snippets (short excerpts from the letter with the query words highlighted), so users rarely click on letters that do not meet their expectations. Quality usually degrades slowly. After each new version of search is rolled out, you therefore need to watch the trend over a long period.
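The post does not give an exact formula for this metric. Here is a minimal sketch that assumes it is computed as clicks on letters per search query. The comparison uses periods long enough to expose slow drift, with made-up counters for three days before and after a rollout.

```perl
use strict;
use warnings;

# Click rate: clicks on letters from search results per search query.
sub click_rate {
    my ($clicks, $queries) = @_;
    return $queries ? $clicks / $queries : 0;
}

# Overall click rate for a period given daily [clicks, queries] pairs
# (total clicks / total queries, so busy days weigh more).
sub pooled_click_rate {
    my @days = @_;
    my ($clicks, $queries) = (0, 0);
    for my $day (@days) {
        $clicks  += $day->[0];
        $queries += $day->[1];
    }
    return click_rate($clicks, $queries);
}

# Made-up daily counters for three days before and after a rollout.
my @before = ([4_100, 10_000], [3_900, 10_000], [4_000, 10_000]);
my @after  = ([4_300, 10_000], [4_500, 10_000], [4_400, 10_000]);

my $change = pooled_click_rate(@after) - pooled_click_rate(@before);
printf "click rate change after rollout: %+.1f percentage points\n", 100 * $change;
```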

Figure 6. Search quality has improved: the number of click-throughs has increased.

It also makes sense to measure the number of “refining” search queries. A query issued from an open search results page can, with high probability, be treated as a refinement. This rests on an assumption: the user tried to find something and was not satisfied with the results, so they decided to search again “differently”, with a different query. Such queries make up 1-3% on average. Growth in this indicator means that search has stopped finding something it used to find (Figure 7).
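The refinement heuristic can be sketched as a filter over the query log. The sketch assumes, hypothetically, that each logged query records the page it was sent from in a `from_page` field. A query sent while a search results page was open counts as a refinement.

```perl
use strict;
use warnings;

# A query sent while a search results page was open counts as a refinement.
# The record layout (the from_page field) is a hypothetical example.
sub is_refinement {
    my ($query) = @_;
    my $page = $query->{from_page} // '';
    return $page eq 'search_results';
}

# Share of refinement queries among all queries in a period.
sub refinement_share {
    my @queries = @_;
    return 0 unless @queries;
    my $refinements = grep { is_refinement($_) } @queries;    # count in scalar context
    return $refinements / @queries;
}

# Made-up log records.
my @log = (
    { text => 'invoice',       from_page => 'inbox' },
    { text => 'invoice march', from_page => 'search_results' },
    { text => 'tickets',       from_page => 'inbox' },
    { text => 'flight',        from_page => 'inbox' },
);
printf "refinement share: %.0f%%\n", 100 * refinement_share(@log);
```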

Figure 7. An extra 3% of repeated queries.

Continuing the topic of “refining” queries, it also makes sense to measure how often users page through search results. Growth in this value may indicate, for example, that the result set is too large. Such requests make up 1-2% on average. Most of them come from the natural need to look for old letters from time to time, paging “deep” back in time. Growth in this indicator means that something “superfluous” got into the results and pushed relevant letters back to later pages. A drop, on the contrary, is definitely a good sign: users are finding what they are looking for on the first page of results (Figure 8).
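Paging can be measured the same way. Assume, hypothetically, that each request for a results page is logged with its page number, with 1 being the first page. The indicator is then the share of requests for page 2 or later:

```perl
use strict;
use warnings;

# Share of results-page requests that go beyond the first page.
# Hypothetical input: one page number per request, 1 = first page of results.
sub deep_page_share {
    my @pages = @_;
    return 0 unless @pages;
    my $deep = grep { $_ > 1 } @pages;    # count in scalar context
    return $deep / @pages;
}

# Made-up data for two periods, e.g. before and after a ranking change.
my @pages_before = ((1) x 97, 2, 2, 3);    # 3 of 100 requests go past page 1
my @pages_after  = ((1) x 99, 2);          # 1 of 100

my $before = deep_page_share(@pages_before);
my $after  = deep_page_share(@pages_after);
my $message = $after < $before
    ? "users page through results less often\n"
    : "paging is not down: check page 1 for irrelevant results\n";
print $message;
```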

Figure 8. Everything needed is now on the first page, and users page through results less often.

Finally, consider the average time it takes a user to click on a letter after receiving the search results. This chart reflects both the quality of the result set (its precision) and the quality of snippet highlighting. The faster the user spots the letter in the results, the sooner they click on it (Figure 9). Two things make results faster to find. The first is search that works correctly (correct sorting, no “unnecessary” results in the set). The second is good highlighting of query words in snippets: the better the highlighting, the faster the eye “catches” the desired result.
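Here is a sketch of this metric. It assumes hypothetical log records that pair the moment results were shown with the moment of the first click on a letter. Searches without a click are skipped, because they have no transition time.

```perl
use strict;
use warnings;

# Average delay (seconds) between showing search results and the first click on a letter.
# Hypothetical records: { shown_at => epoch seconds, first_click_at => epoch seconds or undef }.
sub average_time_to_click {
    my @searches = @_;
    my ($total, $n) = (0, 0);
    for my $s (@searches) {
        next unless defined $s->{first_click_at};    # no click, no transition time
        $total += $s->{first_click_at} - $s->{shown_at};
        $n++;
    }
    return $n ? $total / $n : undef;
}

# Made-up records.
my @searches = (
    { shown_at => 100.0, first_click_at => 104.5 },
    { shown_at => 200.0, first_click_at => 202.5 },
    { shown_at => 300.0, first_click_at => undef },    # user left without clicking
);
my $avg = average_time_to_click(@searches);
printf "average time to click: %.1f s\n", $avg if defined $avg;
```

A few users who leave the results page open can inflate a plain mean, so a median may be a useful companion figure. The post itself speaks of the average.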

Figure 9. Snippet highlighting was reworked, and the average time to click dropped.

When assessing search quality, it makes sense to analyze all these indicators together. Similar charts exist for suggestions (search hints). The quality of search directly depends on the quality of suggestions, since 3% of search queries are made using them. Search quality often degrades only after some time, so all charts are built at two scales, five-minute and daily. This makes it possible to diagnose and fix search problems before they become noticeable to a wide range of users.

If you have questions, ideas, or experience with search quality assurance, let's discuss them in the comments.

Dmitry Kalugin-Balashov

Programmer, Mail.Ru Mail team

P.S. I made all the charts myself in Excel, based on real events.