Dryad Distributed computing framework

Imagine a general framework for distributed application execution with the following statistics *:

* Statistics for 2011.

Now imagine that this is not Hadoop.

What kind of framework it is, about the ideas and concepts laid in its foundation and why this framework is even more innovative (subjective) than Hadoop will be discussed below.

1. Dryad. General information

Dryad is a general-purpose software framework for distributed application execution . Dryad is a project of Microsoft Research . The central concept of the Dryad project is the construction of a directed acyclic graph (DAG) for each distributed task. The vertices of the graph are operations on the data (in fact, the program), and the edges of the graph are the channels through which data is transmitted.

Abstraction based on the model of a directed acyclic graph makes it possible to effectively implement plans for the execution of a large number of parallel algorithms ,iterative algorithms , machine learning algorithms . Thus, the only (before YARN ) map / reduce programming model implemented in Hadoop ** is essentially just a special case of the distributed computing model provided by Dryad .

Dryad is optimized to run on a medium or large computing cluster (from 100 to 10K computing nodes) and is aimed primarily at long-term batch jobs that do not require frequent interactions.

2004 ... 2008

Attempts to deal with the past, present and future of Dryad led me to a rather limited number of articles, the authors of which, citing and not to the original sources, argue that:

2008 ... 2009

One of the fundamental documents describing the concepts embodied in Dryad, was awarded the " Best Paper " at OSDI'08 (USENIX Symposium on Operating Systems Design and Implementation).

In November 2009, Dryad became available under an academic license .

2010 ... 2011

In 2011, the Windows HPC Team announced the beta version of “LINQ to HPC” (Dryad for Windows HPC Server) for the corresponding Windows operating system line. In the same year, it was announced that LINQ to HPC "will not exit" from the preview version (that is, in fact, about the termination of support).

It is worth noting that the Windows HPC Team did not say anything (and could not say in principle) about the support / development of the Dryad Project. Other statements about further support of Dryad or, on the contrary, an unequivocal refusal of support, have not been noticed over the past (2012) and this year.

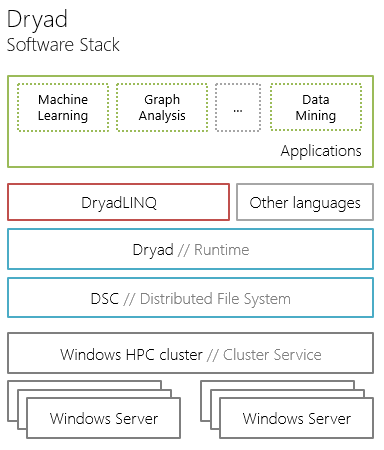

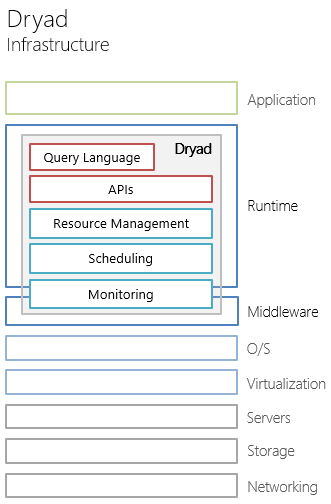

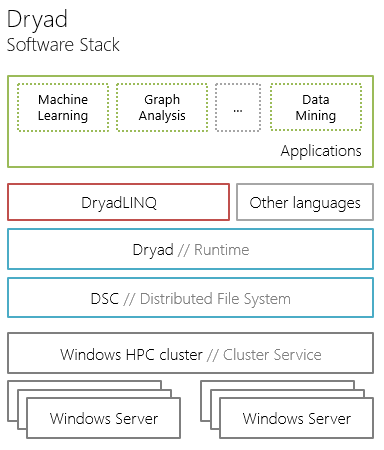

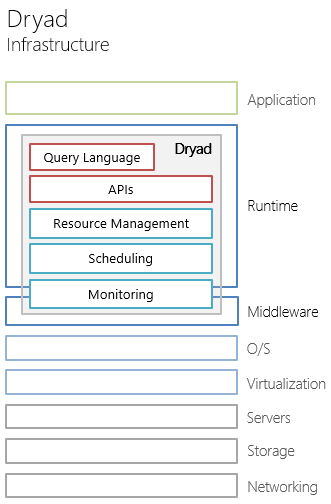

2. Dryad ecosystem

The Dryad project consists of 3 key components:

Below we’ll take a closer look at the elements of the Dryad ecosystem: the Dryad runtime runtime and the DryadLINQ query language.

3. Dryad Runtime

Dryad runtime is a runtime of distributed applications, like Hadoop, taking on such functions as:

The task in Dryad runtime is a directed acyclic graph , where the vertices are programs and the edges of the graph are data channels . This logical graph is mapped by the executable environment to the physical resources in the cluster. In general, the number of vertices in a graph exceeds the number of physical computing nodes in a cluster.

3.1. Data channels

Data channels, as well as vertices, are abstractions and are represented by:

The need to disclose information about the physical resources of the cluster for the most efficient execution of the distributed task makes the channel abstraction not as “clean” as it seems at first glance. Nevertheless, the developer of the distributed application "does not see" a violation of the "channel" abstraction, because she hides under other levels (task manager), which will be discussed below.

The Dryad data model is a shared-nothing architecture . The advantages of such an architecture are traditionally scalability , the absence of the need to support change tracking , the implementation of complex transactions , and the use of synchronization primitives . The Dryad platform is well suited for large static volumes of data and is not suited for frequently changing or streaming data.

Dryad expects to receive immutable and final amountinput data. Distributed program execution results will not be available until all routines are executed. It is logical to assume that tasks with streaming data are fundamentally impossible in Dryad.

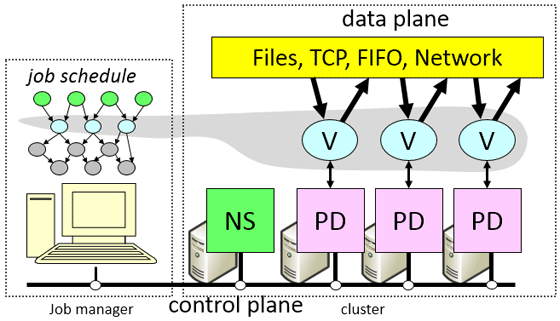

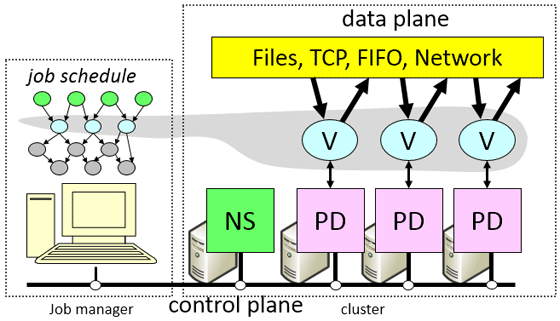

3.3. Job manager

The Dryad job is orchestrated by the Job Manager (JM). Job Manager includes specific application (application-specific) code to create the graph computation tasks.

Job Manager is responsible for:

Application data is sent directly from the vertex operation to the vertex operation. Job Manager is only responsible for initializing, scheduling, and monitoring the execution of distributed tasks. Therefore, JM is not a performance bottleneck . In addition, during forwarding, the vertex operation code can be either forwarded from Job Manager or from the nearest computing node on which a similar vertex operation is performed.

3.4. Name Server. Daemon

The name server Name Server is responsible for the disclosure of information about available compute nodes and their topological location in the cluster .

A Daemon process is launched on each computing node , the main purpose of which is to launch vertex operations sent by Job Manager. Daemon acts as a proxy object, so Job Manager has the ability to find out the status and stage of the vertex operation launched by the remote Daemon.

The Dryad architecture and the place in this architecture for Job Manager , Name Server (NS) and Daemon (PD) are given below.

Source of illustration [3]

( Explanation of the illustration: JM initializes the execution graph based on data on available nodes and their location received from NS. JM controls the execution of the graph, I receive statuses from PD. PDs exchange data on available channels bypassing NS and JM. The shaded area in the illustration shows the operations that are currently executing.)

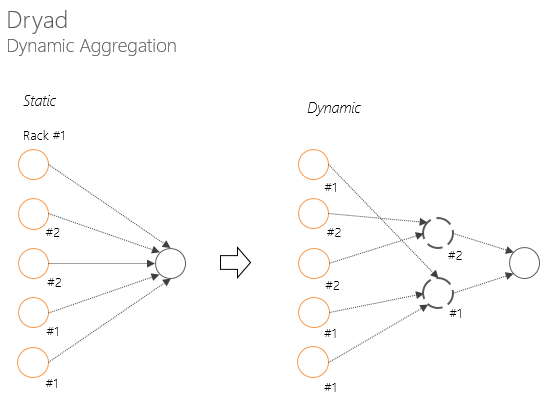

3.5. Dynamic graph change

Job Manager, similar to JobTracker in Hadoop, provides static performance optimization . But unlike Hadoop, Dryad has the ability to dynamically optimize performance .

Thanks to Dryad’s central concept of a directed acyclic graph and support for callback mechanisms (callback informs JM about a change in the execution phase of a vertex operation), the execution graph is able to change during runtime. Dynamic change allows you to quite elegantly solve the following problems:

In addition, due to the dynamic change of the graph at runtime and the abstracting of the concept of “channel” from a specific method of data transfer, it is important (that is, it is possible to implement, if not implemented) the data can reach the vertices not only from the node on which the data is physically stored , but also:

As previously mentioned, Daemon is a proxy, so Job Manager has the ability to find out the status and stage of a vertex operation launched by a remote Daemon. If Daemon “crashes”, then Job Manager will know about it:

After diagnosing a PD failure, the operation performed on it is re-executed on another Daemon.

I did not find information in the Dryad documentation about what will happen in the event of a Name Server crash. It is reasonable to assume that if there is no heartbeat from the NS process, Job Manager will restart the NS on another node. During the downtime of Name Server, part of the computing power of the cluster, for the disclosure of information about which NS is responsible, simply “falls out”.

Also, it did not become clear from the documentation what measures were taken to ensure that Job Manager did not become a single point of failure. If each distributed application has its own Job Manager, then stopping the Job Manager will not lead to a downtime of the whole cluster, as is the case with Hadoop when Name Node fails.

But the presence of separate JM processes for each of the distributed applications immediately presents 2 problems:

4. Practice

Dryad is available free of charge under an academic license [4]. To start the framework, you need a Windows HPC cluster with Microsoft HPC Pack 2008 SP1, 4 Gb RAM, 1 Gb Ethernet and 200 Gb free space on each node of the cluster [1].

Dryad applications can be written both in C ++ and C # (it is reasonable to assume that any CLS-compatible language is suitable).

The instruction for distributed execution of an operation for Dryad is as follows (InitialReduce, Combine, Initialize, Iterate, Merge are the names of the functions responsible for the corresponding stages of distributed execution; examples in the listings consider the arithmetic mean):

The DryadLINQ query language encapsulates the complexity of the code, so, in general, the application developer will not have to write the designs listed in the listings. DryadLINQ will be covered in the next article in this series.

5. Limitations and disadvantages

In conclusion, we review briefly the limitations of Dryad and the “incorrect” expectations associated primarily with the misconception of the purpose of this class of software.

One of the main limitations of Dryad is the difficulty of adapting to work in realtime mode and, probably, the fundamental impossibility of working with streaming data . It should also be added that the Dryad framework will show good efficiency for batch tasks, but the use of Drayd will not be justified for with random-access operations. In addition, I note a potential problem with a single point of failure [at the application level] when the job manager “crashes” the Job Manager (perhaps there is no such point of failure, but it is not clear from the documentation how this problem is solved).

It is also necessary to understand that Dryad is only a distributed computing framework , therefore you should not expect from Dryad:

The rest of Dryad is a powerful and flexible abstraction for a distributed application developer. The platform is an extremely organic symbiosis of modern concepts and concepts of developing parallel software, providing familiar language tools and elegant ways to solve problems that are often encountered (and rarely solved) by distributed computing frameworks .

List of sources

[1] The Dryad Project . Microsoft Research.

[2] Y. Yu, PK Gunda, M. Isard. Distributed Aggregation for Data-Parallel Computing: Interfaces and Implementations , 2009.

[3] M. Isard, M. Budiu, Y. Yu, A. Birrell, and D. Fetterly. Dryad: Distributed data-parallel programs from sequential building blocks .

In Proceedings of European Conference on Computer Systems (EuroSys), 2007.

[4] Dryad and DryadLINQ Academic Release . Microsoft Research.

* Sorted alphabetically.

** Comparison in the article occurs exclusively with the 1st version of the Hadoop platform (i.e. without YARN). A detailed comparison of Dryad with DBMS, GPU computing, and the Hadoop framework will be done inThe final article in the Dryad cycle .

* Statistics for 2011.

Now imagine that this is not Hadoop.

What kind of framework it is, about the ideas and concepts laid in its foundation and why this framework is even more innovative (subjective) than Hadoop will be discussed below.

1. Dryad. General information

Dryad is a general-purpose software framework for distributed application execution . Dryad is a project of Microsoft Research . The central concept of the Dryad project is the construction of a directed acyclic graph (DAG) for each distributed task. The vertices of the graph are operations on the data (in fact, the program), and the edges of the graph are the channels through which data is transmitted.

Abstraction based on the model of a directed acyclic graph makes it possible to effectively implement plans for the execution of a large number of parallel algorithms ,iterative algorithms , machine learning algorithms . Thus, the only (before YARN ) map / reduce programming model implemented in Hadoop ** is essentially just a special case of the distributed computing model provided by Dryad .

Dryad is optimized to run on a medium or large computing cluster (from 100 to 10K computing nodes) and is aimed primarily at long-term batch jobs that do not require frequent interactions.

2004 ... 2008

Attempts to deal with the past, present and future of Dryad led me to a rather limited number of articles, the authors of which, citing and not to the original sources, argue that:

- the idea of constructing such a system arose in 2004 (an analogy with Google MapReduce) by Michael Isard (later he took the position of researcher at Microsoft);

- in 2006, Bill Gates himself presented Dryad himself at a Computer Science conference;

- Dryad is adapted and used in Bing, Microsoft AdCenter,

Kinect, Windows HPC Server 2008 R2 (the latter, known reliably).

2008 ... 2009

One of the fundamental documents describing the concepts embodied in Dryad, was awarded the " Best Paper " at OSDI'08 (USENIX Symposium on Operating Systems Design and Implementation).

In November 2009, Dryad became available under an academic license .

2010 ... 2011

In 2011, the Windows HPC Team announced the beta version of “LINQ to HPC” (Dryad for Windows HPC Server) for the corresponding Windows operating system line. In the same year, it was announced that LINQ to HPC "will not exit" from the preview version (that is, in fact, about the termination of support).

2012 ... 2013/ UPD /

It is worth noting that the Windows HPC Team did not say anything (and could not say in principle) about the support / development of the Dryad Project. Other statements about further support of Dryad or, on the contrary, an unequivocal refusal of support, have not been noticed over the past (2012) and this year.

2. Dryad ecosystem

The Dryad project consists of 3 key components:

- Dryad - runtime of distributed applications (hereinafter, to avoid ambiguity, we will call this component Dryad Runtime);

- DryadLINQ - a high-level query language based on the .NET Language Integrated

Query (LINQ) programming model ; - Distributed Storage Catalog (DSC) is a distributed file system with configurable redundancy.

Below we’ll take a closer look at the elements of the Dryad ecosystem: the Dryad runtime runtime and the DryadLINQ query language.

3. Dryad Runtime

Dryad runtime is a runtime of distributed applications, like Hadoop, taking on such functions as:

- scheduling and management of distributed tasks;

- resource management;

- fault tolerance;

- monitoring

The task in Dryad runtime is a directed acyclic graph , where the vertices are programs and the edges of the graph are data channels . This logical graph is mapped by the executable environment to the physical resources in the cluster. In general, the number of vertices in a graph exceeds the number of physical computing nodes in a cluster.

3.1. Data channels

Data channels, as well as vertices, are abstractions and are represented by:

- Shared-memory FIFO (intra-machine): the fastest way to exchange data between operations (vertices of the graph), but operations must be performed within a single computing node;

- TCP pipes (inter-machine): a channel that does not require access to the disk (we avoid the overhead associated with writing / reading data from the disk); a channel can only be used if the operations between which data is transmitted are available at the time of transmission;

- SMB / NTFS files (temporary files): the output data transmitted over the channel is written

to disk, the input data is read; default channel.

The need to disclose information about the physical resources of the cluster for the most efficient execution of the distributed task makes the channel abstraction not as “clean” as it seems at first glance. Nevertheless, the developer of the distributed application "does not see" a violation of the "channel" abstraction, because she hides under other levels (task manager), which will be discussed below.

3.2. Data model

The Dryad data model is a shared-nothing architecture . The advantages of such an architecture are traditionally scalability , the absence of the need to support change tracking , the implementation of complex transactions , and the use of synchronization primitives . The Dryad platform is well suited for large static volumes of data and is not suited for frequently changing or streaming data.

Dryad expects to receive immutable and final amountinput data. Distributed program execution results will not be available until all routines are executed. It is logical to assume that tasks with streaming data are fundamentally impossible in Dryad.

3.3. Job manager

The Dryad job is orchestrated by the Job Manager (JM). Job Manager includes specific application (application-specific) code to create the graph computation tasks.

Job Manager is responsible for:

- initialization of the calculation graph ;

- planning operations (vertices, hereinafter referred to as vertex operations) so that the physical hardware associated with these vertices is topologically as close as possible to the data processed by these vertices;

- fault tolerance ;

- performance monitoring and statistics collection ;

- dynamic transformation of the graph in accordance with existing policies.

Application data is sent directly from the vertex operation to the vertex operation. Job Manager is only responsible for initializing, scheduling, and monitoring the execution of distributed tasks. Therefore, JM is not a performance bottleneck . In addition, during forwarding, the vertex operation code can be either forwarded from Job Manager or from the nearest computing node on which a similar vertex operation is performed.

3.4. Name Server. Daemon

The name server Name Server is responsible for the disclosure of information about available compute nodes and their topological location in the cluster .

A Daemon process is launched on each computing node , the main purpose of which is to launch vertex operations sent by Job Manager. Daemon acts as a proxy object, so Job Manager has the ability to find out the status and stage of the vertex operation launched by the remote Daemon.

The Dryad architecture and the place in this architecture for Job Manager , Name Server (NS) and Daemon (PD) are given below.

Source of illustration [3]

( Explanation of the illustration: JM initializes the execution graph based on data on available nodes and their location received from NS. JM controls the execution of the graph, I receive statuses from PD. PDs exchange data on available channels bypassing NS and JM. The shaded area in the illustration shows the operations that are currently executing.)

3.5. Dynamic graph change

Job Manager, similar to JobTracker in Hadoop, provides static performance optimization . But unlike Hadoop, Dryad has the ability to dynamically optimize performance .

Thanks to Dryad’s central concept of a directed acyclic graph and support for callback mechanisms (callback informs JM about a change in the execution phase of a vertex operation), the execution graph is able to change during runtime. Dynamic change allows you to quite elegantly solve the following problems:

- network bandwidth degradation , when several nodes need to transfer data to the input of another node (in Hadoop, such degradation is observed when transferring data between the Combine and Reduce stages);

- simple computing nodes in order to wait for the completion of “slow” operations (in Hadoop, convolution cannot begin until all map tasks are completed, which leads to a simple map slot, and makes the best execution time of the program equal to the execution time of the “slowest” map tasks).

In addition, due to the dynamic change of the graph at runtime and the abstracting of the concept of “channel” from a specific method of data transfer, it is important (that is, it is possible to implement, if not implemented) the data can reach the vertices not only from the node on which the data is physically stored , but also:

- one Daemon can save a temporary file with intermediate results in a rack local to the vertex operation, which accepts this data as input;

- one Daemon can store data in the host’s RAM if the vertex operation that accepts this data as input is running on the same compute node.

3.6. Resiliency

As previously mentioned, Daemon is a proxy, so Job Manager has the ability to find out the status and stage of a vertex operation launched by a remote Daemon. If Daemon “crashes”, then Job Manager will know about it:

- An error message that Daemon sent to JM before closing its own process

- by the expired time of the heartbeat message in case if at closing Daemon no diagnostic events were sent to JM.

After diagnosing a PD failure, the operation performed on it is re-executed on another Daemon.

I did not find information in the Dryad documentation about what will happen in the event of a Name Server crash. It is reasonable to assume that if there is no heartbeat from the NS process, Job Manager will restart the NS on another node. During the downtime of Name Server, part of the computing power of the cluster, for the disclosure of information about which NS is responsible, simply “falls out”.

Also, it did not become clear from the documentation what measures were taken to ensure that Job Manager did not become a single point of failure. If each distributed application has its own Job Manager, then stopping the Job Manager will not lead to a downtime of the whole cluster, as is the case with Hadoop when Name Node fails.

But the presence of separate JM processes for each of the distributed applications immediately presents 2 problems:

- Time spent on initialization of Job Manager

- resource sharing problem, Job Manager cannot “adequately” estimate the amount of free resources (CPU, RAM, bandwidth), because Doesn't know how many more JM processes this physical computing node is serving.

4. Practice

Dryad is available free of charge under an academic license [4]. To start the framework, you need a Windows HPC cluster with Microsoft HPC Pack 2008 SP1, 4 Gb RAM, 1 Gb Ethernet and 200 Gb free space on each node of the cluster [1].

Dryad applications can be written both in C ++ and C # (it is reasonable to assume that any CLS-compatible language is suitable).

The instruction for distributed execution of an operation for Dryad is as follows (InitialReduce, Combine, Initialize, Iterate, Merge are the names of the functions responsible for the corresponding stages of distributed execution; examples in the listings consider the arithmetic mean):

///For iterator-based implementation

[AssociativeDecomposable("InitialReduce", "Combine")]

public static TOutput H(IEnumerable source) { … } Listing 1. Iterator-based implementation example. Source [2].

public static double Average(IEnumerable g) {

IntPair final = g.Aggregate(x => PartialSum(x));

if (final.second == 0) return 0.0;

return (double)final.first / (double)final.second;

}

[AssociativeDecomposable("InitialReduce", "Combine")]

public static IntPair PartialSum(IEnumerable g) {

return InitialReduce(g);

}

public static IntPair InitialReduce(IEnumerable g) {

return new IntPair(g.Sum(), g.Count());

}

public static IntPair Combine(IEnumerable g) {

return new IntPair(g.Select(x => x.first).Sum(),

g.Select(x => x.second).Sum());

} ///For accumulator-based implementation

[AssociativeDecomposable("Initialize", "Iterate", "Merge")]

public static TOutput H(IEnumerable source) { … } Listing 2. Accumulator-based implementation example. Source [2].

public static double Average(IEnumerable g) {

IntPair final = g.Aggregate(x => PartialSum(x));

if (final.second == 0) return 0.0;

else return (double)final.first / (double)final.second

}

[AssociativeDecomposable("Initialize", "Iterate", "Merge")]

public static IntPair PartialSum(IEnumerable g) {

return new IntPair(g.Sum(), g.Count());

}

public static IntPair Initialize() {

return new IntPair(0, 0);

}

public static IntPair Iterate(IntPair x, int r) {

x.first += r;

x.second += 1;

return x;

}

public static IntPair Merge(IntPair x, IntPair o) {

x.first += o.first;

x.second += o.second;

return x;

} The DryadLINQ query language encapsulates the complexity of the code, so, in general, the application developer will not have to write the designs listed in the listings. DryadLINQ will be covered in the next article in this series.

5. Limitations and disadvantages

In conclusion, we review briefly the limitations of Dryad and the “incorrect” expectations associated primarily with the misconception of the purpose of this class of software.

One of the main limitations of Dryad is the difficulty of adapting to work in realtime mode and, probably, the fundamental impossibility of working with streaming data . It should also be added that the Dryad framework will show good efficiency for batch tasks, but the use of Drayd will not be justified for with random-access operations. In addition, I note a potential problem with a single point of failure [at the application level] when the job manager “crashes” the Job Manager (perhaps there is no such point of failure, but it is not clear from the documentation how this problem is solved).

It is also necessary to understand that Dryad is only a distributed computing framework , therefore you should not expect from Dryad:

- transaction support because it is not an RDBMS;

- performance comparable to GPU computing , because Dryad solves a fundamentally wider class of problems with fundamentally different tools;

- Open source license because it

Microsoftoriginally a different type of product (but there is still free access under an academic license); - rapid development of distributed applications that work within the framework of the map / reduce paradigm, because Dryad is not Hadoop: the map / reduce model is only a special case for Dryad, while for Hadoop it is the only possible execution model.

Conclusion

The rest of Dryad is a powerful and flexible abstraction for a distributed application developer. The platform is an extremely organic symbiosis of modern concepts and concepts of developing parallel software, providing familiar language tools and elegant ways to solve problems that are often encountered (and rarely solved) by distributed computing frameworks .

List of sources

[1] The Dryad Project . Microsoft Research.

[2] Y. Yu, PK Gunda, M. Isard. Distributed Aggregation for Data-Parallel Computing: Interfaces and Implementations , 2009.

[3] M. Isard, M. Budiu, Y. Yu, A. Birrell, and D. Fetterly. Dryad: Distributed data-parallel programs from sequential building blocks .

In Proceedings of European Conference on Computer Systems (EuroSys), 2007.

[4] Dryad and DryadLINQ Academic Release . Microsoft Research.

* Sorted alphabetically.

** Comparison in the article occurs exclusively with the 1st version of the Hadoop platform (i.e. without YARN). A detailed comparison of Dryad with DBMS, GPU computing, and the Hadoop framework will be done inThe final article in the Dryad cycle .