Shooting the starry sky with Emgu CV

Good day, Habr.

I have been interested in photography and astronomy for quite some time, and I like to shoot the starry sky. Since there is little light at night, getting something really beautiful takes rather long exposures. But then another problem appears: because the Earth rotates, the stars move across the sky. With relatively long exposures, stars stop being points and begin to draw arcs. To compensate for this movement when photographing or observing deep-sky objects, there are special devices: mounts. Unfortunately, I cannot buy a mount at the moment, so I asked myself: is it possible to achieve a similar effect in software, and what will come of it?

There are a lot of photos under the cut. All the photos in the post are mine; almost all of them are clickable and free to download.

Introduction

The problem with shooting stars is this: they move. At first glance this movement may seem completely imperceptible, but even at relatively short exposures (20" and up) the stars already stop being dots and start drawing short arcs across the sky.

Exposure ~15'

Exposure ~20'

Theory

The Earth rotates around its own axis. Relative to the distant stars, the period of this rotation (the stellar day) is 86164.090530833 seconds.

Accordingly, knowing the exposure time, you can calculate by how many degrees all the stars in the frame have rotated around the center. The idea is that if you compensate for this rotation by turning the entire frame in the opposite direction by the same angle, all the stars should stay in place.
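As a rough worked example of this calculation (the symbols are mine: t is the elapsed time in seconds, T is the rotation period quoted above, and θ is the rotation angle of the sky around the pole):

```latex
\theta = \frac{t}{T}\cdot 360^\circ, \qquad T = 86164.090530833\ \text{s}

% Example: t = 30 s
\theta = \frac{30}{86164.090530833}\cdot 360^\circ \approx 0.125^\circ
```

In other words, the sky turns by roughly 0.0042° per second. The angle is small, but far from the center of rotation it is already enough to stretch a star over several pixels.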

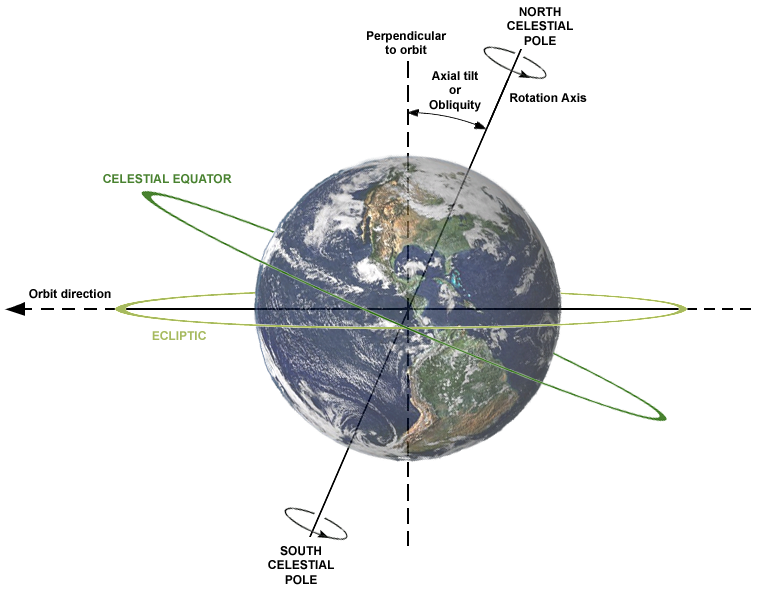

Finding the center around which everything revolves is not a problem. It is enough to find the Pole Star (Polaris): the Earth's axis of rotation passes very close to it.

Celestial pole: the point in the sky that this same axis points to.

Implementation

To implement this idea, I decided to use Emgu CV, a .NET wrapper for the OpenCV library.

I will not walk through the entire program; I will only cover the key methods.

When stacking photos on top of one another in Photoshop, I usually used Blending Mode: Lighten. It builds one image out of two by choosing, at each position, the pixel with the higher brightness. That is, for each pair of corresponding pixels in the two source images, the brighter one ends up in the resulting image.
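To make the Lighten idea concrete, here is a minimal sketch on plain byte buffers. It does not use Emgu CV, and the class and method names (`LightenBlend`, `Max`) are hypothetical, chosen only for illustration. It assumes both frames are 8-bit buffers of the same size and takes the maximum per channel value.

```csharp
using System;

public static class LightenBlend
{
    // Per-element maximum of two equally sized 8-bit pixel buffers:
    // at every position the brighter value survives.
    public static byte[] Max(byte[] a, byte[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Frames must have the same size.");

        byte[] result = new byte[a.Length];
        for (int i = 0; i < a.Length; i++)
            result[i] = Math.Max(a[i], b[i]);
        return result;
    }
}
```

Because the dark sky background never "wins" over a star, stacking many frames this way accumulates the star positions from every frame without brightening the background.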

In Emgu CV, this method is already implemented.

    public Image<TColor, TDepth> Max(
        Image<TColor, TDepth> img2
    )

Mode 1: Adding Images Without Rotation

There is nothing complicated here: just lay all the photos from the list one on top of another in turn.

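    // Type arguments were lost in the listing; List<string> and Image<Bgr, byte> (8-bit color) are assumed.
    List<string> fileNames = (List<string>)filesList; // input: paths of the frames to stack
    Image<Bgr, byte> image = new Image<Bgr, byte>(fileNames.First()); // running result
    foreach (string filename in fileNames)
    {
        using (Image<Bgr, byte> nextImage = new Image<Bgr, byte>(filename))
        {
            image = image.Max(nextImage); // keep the brighter pixel at each position
        }
        pictureProcessed(this, new PictureProcessedEventArgs(image)); // update the image in the image box
    }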

Why is this mode needed? Because stacking the photos makes the motion of the stars show up as arcs, and from these arcs it is very easy to find the center of rotation (the Pole Star). And sometimes the star-trail photos themselves turn out quite interesting.

Mode 2: Adding Images With Rotation Compensation

In this mode, everything becomes a little more complicated. First, we need to calculate the angle by which the stars have shifted during the shooting. I had the idea of computing it simply from the exposure time, but I realized that this might not be very accurate. So instead we will pull the capture time from the photo's EXIF data.

    Bitmap referencePic = new Bitmap(fileNames.First()); // load the first image
    byte[] timeTakenRaw = referencePic.GetPropertyItem(36867).Value; // EXIF DateTimeOriginal (tag 0x9003)
    string timeTaken = System.Text.Encoding.ASCII.GetString(timeTakenRaw, 0, timeTakenRaw.Length - 1); // bytes to string, dropping the terminating NUL
    DateTime referenceTime = DateTime.ParseExact(timeTaken, "yyyy:MM:d H:m:s", System.Globalization.CultureInfo.InvariantCulture);

Now, step by step:

1. We need to determine the time the shooting started, so that the offset of the remaining frames can be counted from it.

2. Load the photo and get the PropertyItem with ID 36867: the date and time the frame was taken.

3. Convert the byte array to a string (the last byte is a terminating NUL, \0, so we exclude it).

4. Parse the string. We now have the time when the shooting began.
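The parsing steps can be tried without a camera file. The sketch below is an illustration, not the program's actual code: the helper name `ExifTime.Parse` is hypothetical, and it assumes the tag value is the usual fixed-width `YYYY:MM:DD HH:MM:SS` ASCII string followed by a NUL byte.

```csharp
using System;
using System.Globalization;
using System.Text;

public static class ExifTime
{
    // Converts the raw bytes of an EXIF date/time tag into a DateTime.
    public static DateTime Parse(byte[] raw)
    {
        // Decode as ASCII and drop the terminating NUL, if present.
        string text = Encoding.ASCII.GetString(raw).TrimEnd('\0');
        return DateTime.ParseExact(text, "yyyy:MM:dd HH:mm:ss", CultureInfo.InvariantCulture);
    }
}
```

For example, `ExifTime.Parse(Encoding.ASCII.GetBytes("2013:08:10 23:15:42\0"))` yields 10 August 2013, 23:15:42. Note that this EXIF timestamp has one-second resolution, which limits how precisely the angle between frames can be computed.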

For each subsequent photo we read the same timestamp and use the time difference to calculate the rotation angle.

The calculation is simple once we know how many seconds one full rotation of the Earth takes.

    double secondsShift = (dateTimeTaken - referenceTime).TotalSeconds;
    double rotationAngle = secondsShift / stellarDay * 360;
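The same calculation as a small self-contained sketch. The wrapper class `StarRotation` and its method name are hypothetical; the constant is the rotation period from the Theory section, which the snippet above refers to as `stellarDay`.

```csharp
using System;

public static class StarRotation
{
    // Rotation period of the Earth relative to the distant stars, in seconds.
    public const double StellarDay = 86164.090530833;

    // Angle in degrees by which the sky has turned between two capture times.
    public static double AngleDegrees(DateTime referenceTime, DateTime frameTime)
    {
        double secondsShift = (frameTime - referenceTime).TotalSeconds;
        return secondsShift / StellarDay * 360.0;
    }
}
```

For two frames taken one minute apart this gives roughly 0.25°, so a series shot over an hour has to be rotated by about 15° in total.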

We will rotate the images with an affine transformation, but for that we need to compute the rotation matrix.

Fortunately, in Emgu CV this is all done for us.

    // The type argument was lost in the listing; <double> is assumed.
    RotationMatrix2D<double> rotationMatrix = new RotationMatrix2D<double>(rotationCenter, -rotationAngle, 1); // flip the sign of rotationAngle to switch CW/CCW
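For reference, this is the 2×3 matrix that OpenCV's `getRotationMatrix2D` documents. I am assuming Emgu CV's `RotationMatrix2D` follows the same convention. The symbols are mine: (c_x, c_y) is the rotation center, s is the scale (1 here), and φ is the angle passed in (here, minus the computed rotation angle).

```latex
M =
\begin{bmatrix}
\alpha & \beta & (1-\alpha)\,c_x - \beta\,c_y \\
-\beta & \alpha & \beta\,c_x + (1-\alpha)\,c_y
\end{bmatrix},
\qquad
\alpha = s\cos\varphi, \quad \beta = s\sin\varphi
```

Each pixel (x, y) is mapped to M · (x, y, 1)ᵀ. The third column is what keeps the chosen center fixed instead of rotating around the image origin. In OpenCV's convention a positive angle means counter-clockwise rotation with the origin in the top-left corner.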

With the matrix in hand, we can apply the affine transformation to the current photo and then stack the result as in the first mode.

    // Image<Bgr, byte> is assumed here, as the type arguments were lost in the listing.
    using (Image<Bgr, byte> imageToRotate = new Image<Bgr, byte>(currentPic))
    {
        referenceImage = referenceImage.Max(imageToRotate.WarpAffine(rotationMatrix, Emgu.CV.CvEnum.INTER.CV_INTER_CUBIC, Emgu.CV.CvEnum.WARP.CV_WARP_FILL_OUTLIERS, background));
    }

That's all. For each photo we perform these two steps and look at the result.

Tests

Original images: 27 frames

Test 1: Stack

The result of stacking all the photos into one.

Test 2: Rotate & Stack

We mark the center of rotation and go.

In the second test, a rather strange effect was obtained.

1. The ground is smeared. This is expected: it is the stars that should stay fixed.

2. The stars in the center remained in their places, that is, everything worked as it should.

3. The stars "floated" around the edges.

Why did this happen? I have no idea! I suppose that, because of lens distortion, the star trails stopped being circles and became ellipses.

Exposure ~43'

In this photo you can clearly see that the trajectory differs from an ideal circle, and an ideal circle is what all the calculations assumed.

Equipment

Everything was shot on a Canon 7D with a Canon EF-S 10-22mm. This lens does not have the best optical characteristics, distortion in particular. So that is what I blame for the result not turning out so smoothly. Next time I will try to correct the distortion and test again.

Clear skies!

GitHub repository