Heart surgery: how we rewrote the main component of the DLP system

Rewriting legacy code is like a trip to the dentist: everyone understands that they should go, yet they procrastinate and try to delay the inevitable, because they know it will hurt. In our case things were even worse: we had to rewrite the key part of the system, and due to external circumstances we could not replace the old code piece by piece, only all at once. All this came with a shortage of time, resources and documentation, and with management demanding that no customer suffer as a result of the “operation”.

Below is the story of how we rewrote the main component of a product with a 17-year history (!) from Scheme to Clojure, and how everything worked right away (well, almost :)).

17 years in “Patrol”

Solar Dozor is a DLP system with a very long history. The first version appeared back in 2001 as a relatively small service for filtering mail traffic. Over 17 years the product has grown into a large software suite that collects, filters and analyzes the heterogeneous information circulating inside an organization and protects the client's business from internal threats.

While developing the 6th version of Solar Dozor, we gave the product a decisive shake-up: we threw the old kludges out of the code and replaced them with new ones, updated the interface, and revised the functionality to match modern realities. In general, we made the product architecturally and conceptually more coherent.

At that point, under the hood of the updated Solar Dozor there still lay a huge layer of monolithic legacy code: that same filtering service, which over all these 17 years had gradually acquired new functionality, embodying both long-term solutions and quick answers to short-term business needs, yet had managed to stay within the original architectural paradigm.

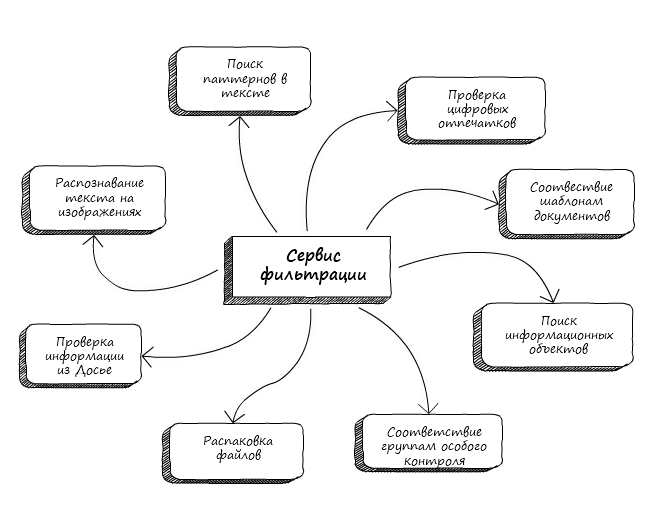

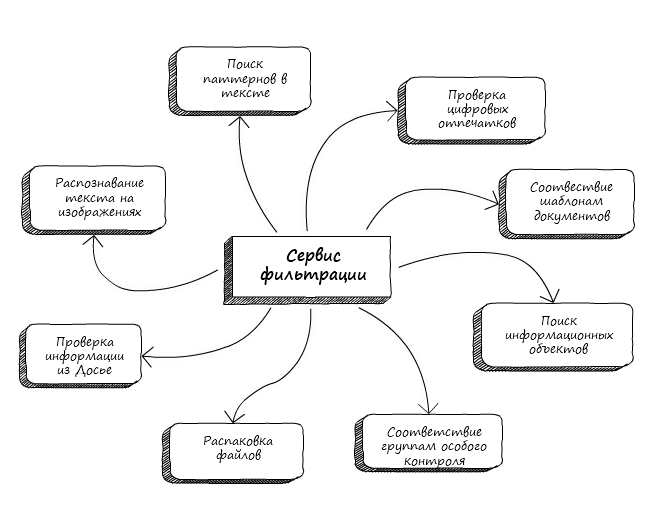

Filtering service

Needless to say, making any change to such ancient code required special care. Developers had to be extremely careful not to accidentally break functionality created a decade earlier. On top of that, interesting new solutions had to be squeezed into the Procrustean bed of an architecture invented at the dawn of an era.

The understanding that the system needed updating had been around for quite some time. But the courage to touch the huge, ancient system service was clearly lacking.

Not trying to delay the inevitable

Products with a long development history have an interesting feature. No matter how strange a piece of functionality may seem, if it has survived to this day, it was created not from the developers' theoretical ideas but in response to specific customer needs.

In this situation there could be no talk of a phased replacement. It was impossible to cut out and rewrite the functionality in parts, because customers needed all of those parts, and we could not “close them for reconstruction”. We had to carefully remove the old service and provide a fully functional replacement for it. Only as a whole, only at once.

Improving the development process, the speed of making changes and overall quality was a necessary argument, but not a sufficient one. Management wanted to know what benefits the change would bring to our customers. The answer was to expand the set of interfaces for interacting with new interception systems, which would provide quick feedback and allow interceptors to respond more quickly to incidents.

We also had to fight to reduce resource consumption while maintaining (and ideally increasing) the current processing speed.

A little about the internals

Throughout the product's development, the Solar Dozor team has gravitated toward a functional approach. Hence the rather non-standard choice of programming languages for a mature industry. At different stages of the system's life these were Scheme, OCaml, Scala and Clojure, in addition to the traditional C(++) and Java.

The main filtering service, along with other services that help receive and transmit messages, was written and developed in Scheme in its various implementations (most recently Racket). However much one wants to sing the praises of this language's simplicity and elegance, one has to admit that its development serves academic interests more than industrial ones. The lag is especially noticeable in comparison with other, more modern Solar Dozor services, which are developed mainly in Scala and Clojure. We decided to implement the new service in Clojure as well.

Clojure?!

Here, of course, I need to say a few words about why we chose Clojure as the main implementation language.

First, we did not want to lose the team's unique experience of developing in Scheme. Clojure is a modern member of the Lisp family, and switching from one Lisp to another is usually quite simple.

Second, thanks to its commitment to functional principles and a number of distinctive architectural decisions, Clojure makes manipulating data flows remarkably easy. It is also important that Clojure runs on the JVM, which means we can share code with other services written in Java and Scala and use the numerous JVM tools for profiling and debugging.

Third, Clojure is a concise and expressive language. This makes it easier to read someone else's code and to hand code over to a teammate.

Finally, we value Clojure for ease of prototyping and for so-called REPL-oriented development. In practically any situation where there are doubts, you can simply build a prototype and continue the discussion more substantively, with new data. REPL-oriented development gives quick feedback, because to check how a function behaves you need neither to recompile the program nor even to restart it (even if the program is a service running on a remote server).

Looking ahead, I can say: I believe we made the right choice.

We collect functionality bit by bit

When it comes to a full-featured replacement, the first question is how to gather information about the existing functionality.

This turned out to be quite an interesting task. It would seem that here is a working system, here is its documentation, here are the people: experts who work closely with the system and teach others about it. Yet it was not easy to assemble a complete picture from all this variety, let alone turn it into requirements for development.

Requirements gathering is considered a separate engineering discipline for good reason. The existing implementation, paradoxically, ends up playing the role of a “corrupted reference”: it shows what should work and how, yet the developers are expected to make the new version better than the original. Mandatory aspects (usually tied to external interfaces) have to be separated from those that can be improved in line with user expectations.

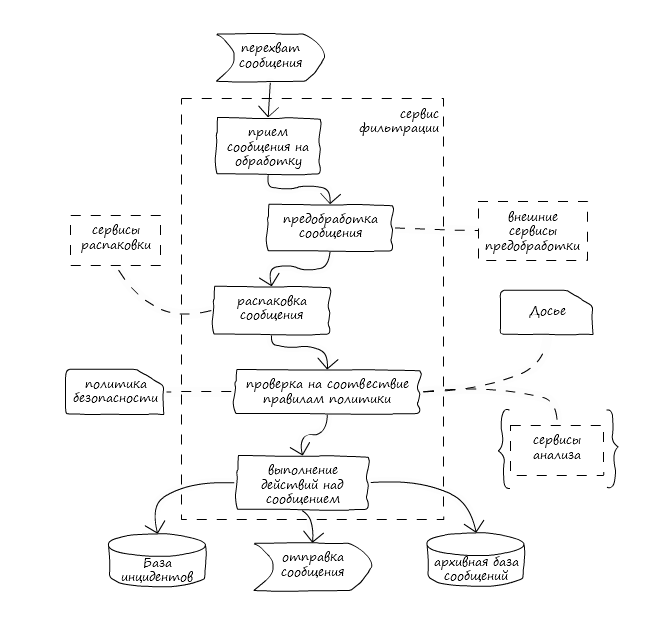

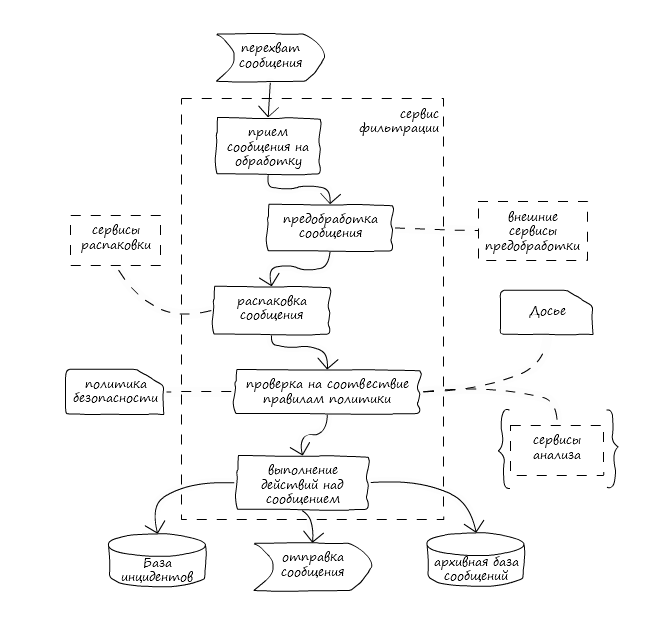

Message filtering process

Documentation is not enough

What does the system actually do? The answer comes from various descriptions, such as user documentation, manuals and architectural documents that reflect the structure of the service from different angles. But when it comes down to actual work, you realize how far perception and reality diverge, and how many nuances and undocumented features the old code contains.

I want to appeal to all developers. Take care of your code! It is the most important thing you have. Do not rely on documentation. Trust only the source code.

Fortunately for us, Scheme code, owing to the very nature of a language created for teaching programming, is quite easy to read even for an untrained person. The main thing is to get used to a few forms that carry a light touch of Lisp archaism.

We build the process

The amount of work was enormous, and the team was very small, so there were organizational difficulties. The flow of bugs and requests for fixes (and minor improvements) to the old filtering service never stopped, and developers were regularly pulled away to deal with them.

Fortunately, we managed to fend off requests to build large new pieces of functionality into the old filter, albeit by promising to build that functionality into the new service. As a result, the list of tasks for the release grew slowly but surely.

Another factor that added a lot of trouble was the service's external dependencies. As the central component, the filtering service uses numerous services for unpacking and analyzing content (texts, images, digital fingerprints, etc.). Working with them was partly dictated by old architectural decisions. Along the way we also had to rewrite some components in a modern fashion (and some in a modern language).

Under these conditions we set up staged testing of the functionality. We would grow the service to a certain state, lock that state in with active testing, and then move on to implementing the next piece.

We start the development

First, we implemented the main framework of the service and the basic mechanisms for receiving messages and unpacking files. This was the absolute minimum needed to start testing the speed and correctness of the future service.

To clarify, unpacking here means the recursive process of obtaining parts from a file and extracting useful information from them. For example, a Word document can contain not only text but also images, an embedded Excel document, OLE objects, and many other interesting things.

The unpacking mechanism does not distinguish between internal libraries, external programs and third-party services; it provides a single interface for building unpacking pipelines.
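To make the idea of recursive unpacking behind a single interface more concrete, here is a minimal sketch. It is written in Python purely for illustration and is not the service's actual Clojure code; the `Part` structure, the `UNPACKERS` registry and the `unpack_container` stand-in are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Part:
    """One piece of content found while unpacking (hypothetical structure)."""
    kind: str      # illustrative type label, e.g. "docx" or "text"
    payload: Any   # raw content; for containers here, a list of (kind, payload)
    children: List["Part"] = field(default_factory=list)


# Every unpacker has the same signature, whether it wraps an internal
# library, an external program or a remote service.
Unpacker = Callable[[Part], List[Part]]


def unpack_container(part: Part) -> List[Part]:
    """Stand-in unpacker: the payload is already a list of (kind, payload) pairs."""
    return [Part(kind, payload) for kind, payload in part.payload]


# Registry mapping content kinds to unpackers (all entries are illustrative).
UNPACKERS: Dict[str, Unpacker] = {
    "docx": unpack_container,
    "xlsx": unpack_container,
}


def unpack(part: Part, max_depth: int = 10) -> Part:
    """Recursively unpack a part until only leaves remain or the depth limit is hit."""
    unpacker = UNPACKERS.get(part.kind)
    if unpacker is not None and max_depth > 0:
        part.children = [unpack(child, max_depth - 1) for child in unpacker(part)]
    return part


def collect_text(part: Part) -> List[str]:
    """Gather the text leaves of an unpacked tree in document order."""
    texts = [part.payload] if part.kind == "text" else []
    for child in part.children:
        texts.extend(collect_text(child))
    return texts


# A "Word document" holding text, an image and an embedded "Excel document".
document = Part("docx", [
    ("text", "Quarterly report"),
    ("image", b"\x89PNG"),
    ("xlsx", [("text", "Cell A1")]),
])
print(collect_text(unpack(document)))  # ['Quarterly report', 'Cell A1']
```

Because the recursion only ever sees the `Unpacker` signature, adding a new source of parts in this sketch means registering one more function; the depth limit is an assumption added here to guard against deeply nested files.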

Another compliment to Clojure: we got a working prototype that outlined the contours of the future functionality in the shortest possible time.

DSL for policy

The second step was to add message checking against filtering policies.

To describe policies, we created a special DSL: a simple, no-frills language that lets us present the rules and conditions of a policy in a more or less human-readable form. It was named MFLang.

An MFLang script is interpreted on the fly into Clojure code, caches the results of checks performed on a message, and keeps a detailed log of its work (and, frankly, deserves a separate article).

Testers appreciated the DSL. No more digging through the database or the export format! Now one could simply submit the generated rule for review, and it was immediately clear which conditions were being checked. It also became possible to obtain a detailed log of a message check, showing what data was taken for the check and what results the comparison functions returned.

It is safe to say that MFLang proved to be an invaluable debugging tool.
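The combination of rule interpretation, per-message caching of check results and a detailed log can be sketched roughly as follows. This is an illustrative Python fragment, not MFLang itself: the article does not show MFLang syntax, so the nested-tuple rule format, the check names in `CHECKS` and the log format are all assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]

# Hypothetical check functions; the real comparison functions are not shown here.
CHECKS: Dict[str, Callable[[Message, str], bool]] = {
    "sender-domain-is": lambda msg, arg: msg["sender"].endswith("@" + arg),
    "body-contains": lambda msg, arg: arg in msg["body"],
}


def evaluate(rule: tuple, msg: Message,
             cache: Dict[Tuple[str, str], bool], log: List[str]) -> bool:
    """Interpret a rule against one message, caching and logging every check."""
    op = rule[0]
    if op == "and":
        return all(evaluate(r, msg, cache, log) for r in rule[1:])
    if op == "or":
        return any(evaluate(r, msg, cache, log) for r in rule[1:])
    if op == "not":
        return not evaluate(rule[1], msg, cache, log)
    # Anything else is a leaf of the form (check-name, argument).
    name, arg = rule
    key = (name, arg)
    if key in cache:
        log.append(f"{name}({arg!r}) -> {cache[key]} (cached)")
        return cache[key]
    result = CHECKS[name](msg, arg)
    cache[key] = result
    log.append(f"{name}({arg!r}) -> {result}")
    return result


rule = ("and",
        ("body-contains", "confidential"),
        ("or",
         ("sender-domain-is", "example.com"),
         ("body-contains", "confidential")))
message = {"sender": "alice@example.org", "body": "confidential draft"}

cache, log = {}, []          # one cache and one log per message
verdict = evaluate(rule, message, cache, log)
print(verdict)
for line in log:
    print(line)
```

In this sketch the log shows which checks ran, with what arguments and with what result, and the repeated `body-contains` check is answered from the cache instead of being computed again.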

In full force

At the third stage we added a mechanism for applying the actions defined by the security policy to a message, as well as service hooks that allow new components to be plugged into the Solar Dozor suite. Finally, we were able to launch the service and observe the results of its work in all their diversity.

The main question, of course, was how well the implemented functionality matched expectations and how completely it covered them.

I note that while the need for unit testing has long been beyond question (although TDD practices themselves still provoke lively debate), introducing automated testing of system functionality often meets open resistance.

Developing autotests helps all team members better understand how the product works, saves effort on regression testing, and instills a certain confidence that the product works. But creating them is fraught with difficulties: collecting the necessary data, and determining the indicators of interest and the test scenarios. Programmers tend to perceive writing autotests as an optional side job that is best avoided as much as possible.

But if the resistance can be overcome, the result is a fairly solid foundation for judging whether the system works.

We carry out the replacement

And then came an important moment: we included the service in the delivery package. For now, alongside the old one. This made it possible to switch versions with a single command and compare the behavior of the two services.

The new filtering service lived in this parallel mode for one release. During that time we collected additional statistics on its operation, and identified and implemented the necessary improvements.
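The general idea of comparing two implementations on the same input can be sketched like this. It is an illustrative Python fragment, not our actual tooling; the two filter functions, the verdict strings and the sample messages are hypothetical.

```python
from typing import Callable, Iterable, List, Tuple

Verdict = str
Filter = Callable[[str], Verdict]


def old_filter(message: str) -> Verdict:
    """Stand-in for the legacy service (hypothetical logic)."""
    return "block" if "secret" in message else "pass"


def new_filter(message: str) -> Verdict:
    """Stand-in for the rewritten service; unlike the old one, it ignores case."""
    return "block" if "secret" in message.lower() else "pass"


def compare(old: Filter, new: Filter,
            messages: Iterable[str]) -> List[Tuple[str, Verdict, Verdict]]:
    """Run both filters on every message and return the cases where they disagree."""
    mismatches = []
    for message in messages:
        old_verdict, new_verdict = old(message), new(message)
        if old_verdict != new_verdict:
            mismatches.append((message, old_verdict, new_verdict))
    return mismatches


sample = ["hello", "top secret plan", "TOP SECRET PLAN"]
for message, old_verdict, new_verdict in compare(old_filter, new_filter, sample):
    print(f"{message!r}: old={old_verdict}, new={new_verdict}")
```

Each mismatch then has to be judged by a person: it may be a bug in the new service, or a place where the new behavior is the intended improvement.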

Finally, gathering our courage, we removed the old filtering service from the product. The final stage of internal acceptance began, bugs were fixed, and the developers gradually switched to other tasks. Somehow imperceptibly, without fanfare or applause, the product was released with the new service.

Only when questions from the deployment team began to arrive did it sink in: the service we had worked on for so long was already at customer sites and... working!

Of course, there were some bugs and minor improvements, but after a month of active use the customers delivered their verdict: rolling out the product with the new version of the filtering service caused fewer problems than rolling out previous versions. Hey! Looks like we made it!

In the end

Developing the new filtering service took about a year and a half. That is longer than originally planned, but not critically so, especially since the actual effort matched the initial estimate. More importantly, we managed to meet the expectations of management and customers and to lay the foundation for future product improvements. Even in its current state the service shows a significant reduction in resource consumption, and it still has plenty of room for optimization.

I can add some personal impressions.

Replacing a central component with a long history is a breath of fresh air for development. For the first time in a long while, there is confidence that control over the product is returning to our hands.

It is hard to overestimate the benefits of a well-organized communication and development process. In this case it was important to set up the work not so much within the team as with the many consumers of the product, who had long since formed both clear preferences and expectations of the system and rather vague wishes.

For us, this was the first experience of developing such a large-scale project in Clojure. Initially there were concerns about the dynamic nature of the language, its speed and its resistance to errors. Fortunately, they proved unfounded.

It only remains to wish that the new component works as long and as successfully as its predecessor.
