An overview of current protocols for achieving consensus in a decentralized environment

This article is devoted to a superficial review of key approaches to achieving consensus in a decentralized environment. The material will allow to deal with the tasks that solve the considered protocols, the scope of their application, design and use features, and also allow to evaluate the prospects for their development and implementation in decentralized accounting systems.

Note that in a decentralized network, the principle of redundancy applies, which is based on the fact that nodes can do the same work. For a centralized network, this, of course, is inefficient, and for a decentralized network, this is imperative. We turn to the basic requirements.

Protocols must ensure reliable operation of users in fairly harsh conditions and at the same time meet the minimum requirements. The following are the main ones.

The absence of a central trusted party . The network consists of peers. If an intruder or a third party tries to disable a certain number of nodes, the network will continue to operate normally until honest participants monitor the vast majority of network nodes.

Honest members do not know which nodes are controlled by attackers. It is assumed that other nodes can fail at any time or function in an arbitrary manner, including coordinated by attackers to carry out an attack on the network. Again, honest participants do not know which nodes are honest and which are bad, or unreliable.

It is assumed that the network is deliberately unreliable . Some messages may be delivered with a serious delay, while others may be lost on the network and remain completely undeliverable. It is in such conditions that the decentralized consensus should continue to function normally: all honest nodes should come to the same state of the database of confirmed transactions.

Protocols must be completely formal. There should be no additional human involvement and no additional data is required. All honest nodes must come to the same solution, completely following the algorithm that is performed by the computer.

In addition, there are certain assumptions under which the protocol ensures correct operation . Honest nodes should make up the majority of all participants: more than ½ or ⅔ of their total number. However, the decision time is not limited. The limit may concern the number of steps to make a decision, but not the time.

First of all, this applies to cryptocurrencies: Bitcoin uses the Nakamoto algorithm, Ethereum uses a simplified version of GHOST, Bitshares implements the Delegated PoS, etc.

It should be noted that a community that supports a certain digital currency is not always numerous and decentralized. There are a number of other digital currencies that are centralized. However, this does not mean that a mechanism for achieving consensus is not needed. For example, Ripple uses the BFT protocol in a centralized environment.

Consensus protocols are used in highly reliable computing systems. They can be used to access distributed databases in the construction of clusters, and also necessarily used in critical technical systems: these can be control systems for aviation equipment, control systems for nuclear reactors, as well as in space technology.

In 2018, February 6, the Falcon Heavy was launched. It was interesting to watch him and read technical reviews. But it was equally interesting to read about which algorithms for reaching agreement the command uses. Engineers were forced to use simple electronics, which are sold in regular stores and do not work reliably in difficult space conditions. Therefore, they apply multiple redundancy in their work and consensus in this case is necessary.

Cryptocurrencies work in peer-to-peer networks. In this case, messages or transactions are transmitted between network participants at arbitrary points in time. Suppose there are members in the US and members in Australia. Payments that are sent from the United States will be seen by participants from America before payments sent from Australia. This is due to the presence of serious, by computer standards, delays in the delivery of messages from one continent to another. The situation will be similar for Australians, because they will see payments from Australia earlier than payments from the United States.

The situation described above means that different network members will see at one point in time they will see a different final state of the database with transactions. Since transactions come from users to validators at different times, a protocol is needed that will allow each node, regardless of its location, to receive all transactions in the same order.

Basically, two approaches are used for the operation of accounting systems cryptocurrency: PoW, which is the most common, and PoS, which has been actively developing lately.

In PoW, an extra amount of work is being done, which now consists of finding a prototype of the hash function. In fact, there is an artificial slowdown of the network to ensure security. To accomplish a malicious act, an attacker will have to perform the required amount of work. This requires a huge amount of energy and makes the implementation of attacks not very effective. Through this approach, network reliability is ensured. However, the need to perform such a resource-demanding task limits the bandwidth of the network that operates under this protocol. But over the PoW approach, scientists continue to work and propose alternative schemes, as well as other resource-intensive tasks, in addition to finding the prototype of the hash function. Recently, an algorithm based on proof-of-space-and-time has been proposed..

PoS allows you to provide higher network bandwidth and does not require excessive energy costs, like PoW. However, PoS requires more serious analysis in the development and implementation of cryptocurrency.

In PoS-based accounting systems, it is assumed that it is unprofitable for users who own a large number of coins to make attacks. If an entrepreneur invests money in equipment and pays electricity in accounting systems based on the PoW system, and then makes a successful attack on the system, he will lose his investment in mining. PoS uses a different approach: if it is assumed that the attacker has invested in specific coins, in whose values he is interested, then in the event of a successful attack on the system, he will lose his investments, as coins will depreciate. Therefore, honest adherence to the protocol is considered to be the most profitable strategy.

Safety - the ability of the accounting system to maintain the basic principles of operation and the interests of honest participants in any malicious influences.

Finality is a property that indicates the irrevocability of the decision made or confirmed data.

Liveness is a property that ensures that if all honest participants want to add an entry to the common database, then it will eventually be added there.

Persistence - the ability of the accounting system to maintain the immutability of the final state of its database even after all its validators have failed.

Permissioned - indicates a restriction, that is, the need to obtain permission to participate in some process.

Permissionless - means free access to take part in a process.

Ouroboros is a PoS-based protocol that achieves consensus between the validators of Cardano’s digital currency transactions. Moreover, the algorithm itself is the first provably persistent among all PoS-alternatives.

First, we consider a simpler option, oriented at the static stake, when it is assumed that the existing distribution of coins does not change.

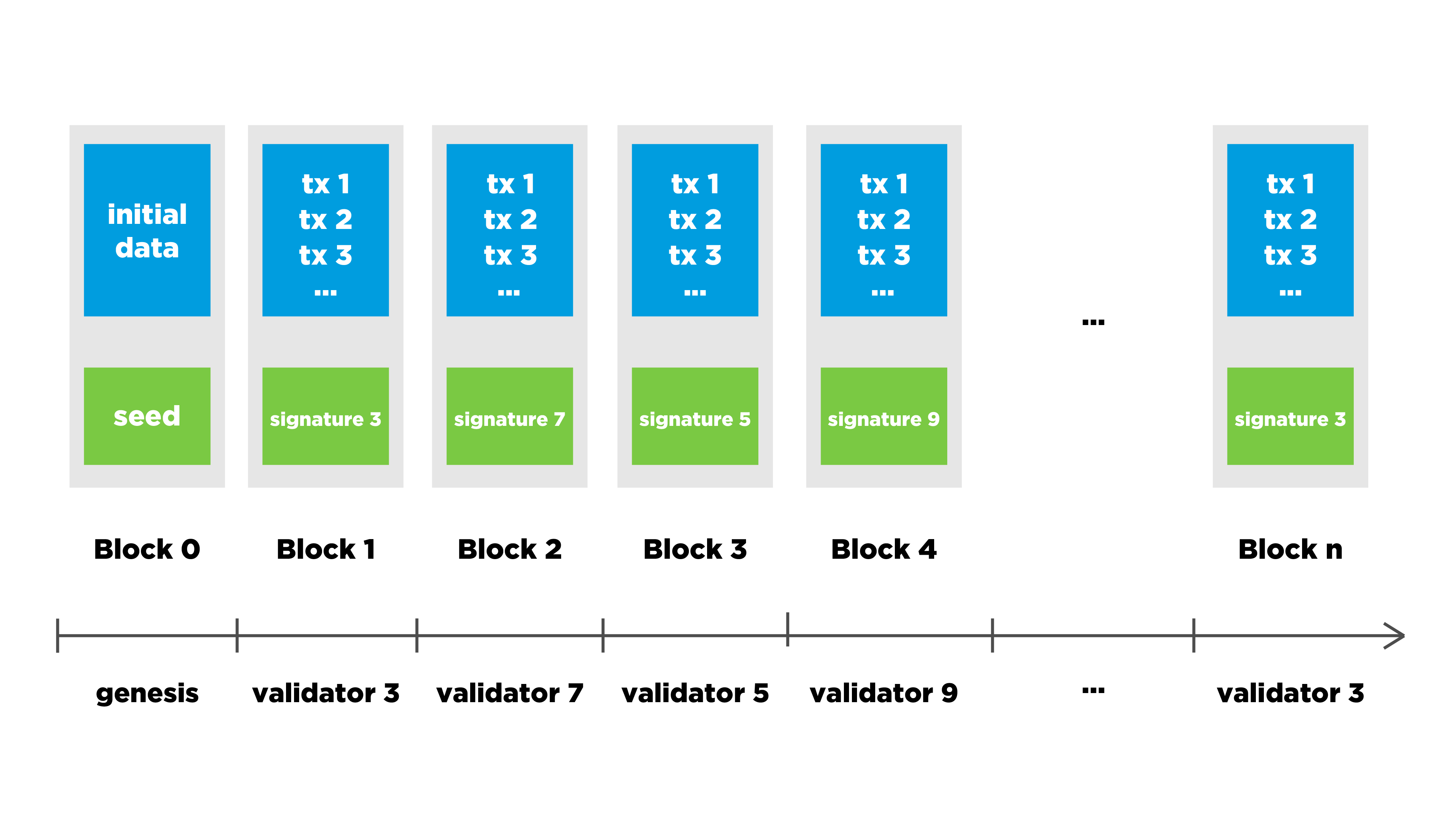

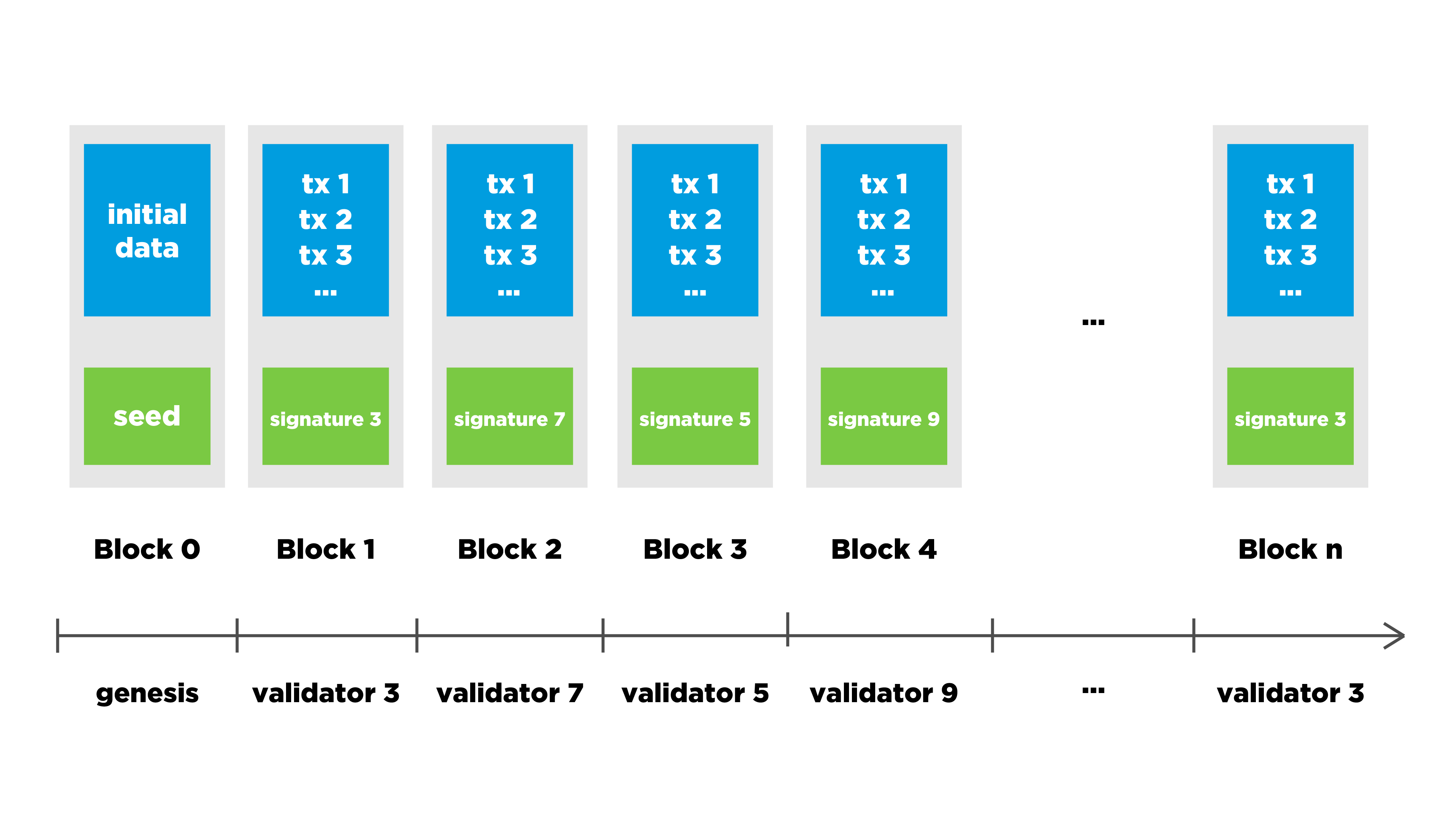

There is some genesis block and users form new transactions, but they do not significantly affect distribution.

Genesis block contains data with some random value, with the help of which validators are selected. They allow you to release a block at a certain point in time. A validator who has received such a right collects transactions, receiving them from another validator, checks the correctness and releases the block into the network. If he sees several chains, he chooses the longest of them and attaches a block to it.

In the context of a situation with a static stake, we can use this approach for a certain period of time, but then the distribution of the stake (stake distribution) between different users may change. In other words, part of the money goes from one user to another, and the probability of obtaining the right to choose a unit must be adjusted.

Note: static stake implies that a validator's stake time period is considered unchanged. The validator may at this time participate in the decision making and make payments, but the number of coins in his stake, and hence the weight of his vote, will remain unchanged until the next time period.

In the case of dynamic stake, time is divided into slots, and slots are divided into epochs. The duration of one epoch is approximately equal to the duration of one day. This ratio is determined from the fact that during this period of time the distribution of coins cannot change significantly.

At the end of an era, the current distribution of coins for each of the users is recorded. In addition, a new randomness value is generated to ensure that, in the next era, users who are given the right to generate blocks will indeed be randomly selected according to the number of coins they have.

Similarly, protection against so-called grinding attacks occurs when a particular user can iterate through various block options, different randomness variants, to form the chain in which he can maximize his profit. Such attacks are potentially vulnerable cryptocurrency based on the first-generation PoS-protocols, such as Peercoin and NXT.

The creators of this algorithm solved the above problems. Validators launch a special protocol between themselves, called MPC (multi-party computation), which makes it possible to generate randomness together. This protocol is also provably persistent, it is based on the already well-known approaches.

The Ouroboros protocol provides durability under the condition of the majority of honest validators in the system. If honest participants who work on the release of blocks control more than 50% of the coins in the system, the protocol can be considered protected.

A mechanism of incentives (motivation) to honest behavior has been developed. With the help of game theory, it is proved that the validator gets the maximum benefit when he follows the rules of the protocol. Any participation in the commission of attacks not only does not increase the participant's profit, but in some cases may reduce it. Therefore, the most profitable strategy for a validator is to honestly follow the rules of the protocol.

The capacity of the accounting system will be limited only by delays during network synchronization. This protocol provides high energy efficiency compared to proof-of-work, since mining farms are not needed here. Now for the collection of transactions and the release of blocks rather ordinary personal computer. In the future, these calculations can be carried out even on a regular smartphone.

The limitations of the Ouroboros protocol include the fact that the first version of the protocol is synchronous. This means that messages between participants must be delivered in a limited period of time. If the network will appear longer delays than laid down in the rules, it can reduce security. Nevertheless, the use of the next version of the protocol, Ouroboros Praos, is already planned. In it, even with increased network delays, complete security is guaranteed.

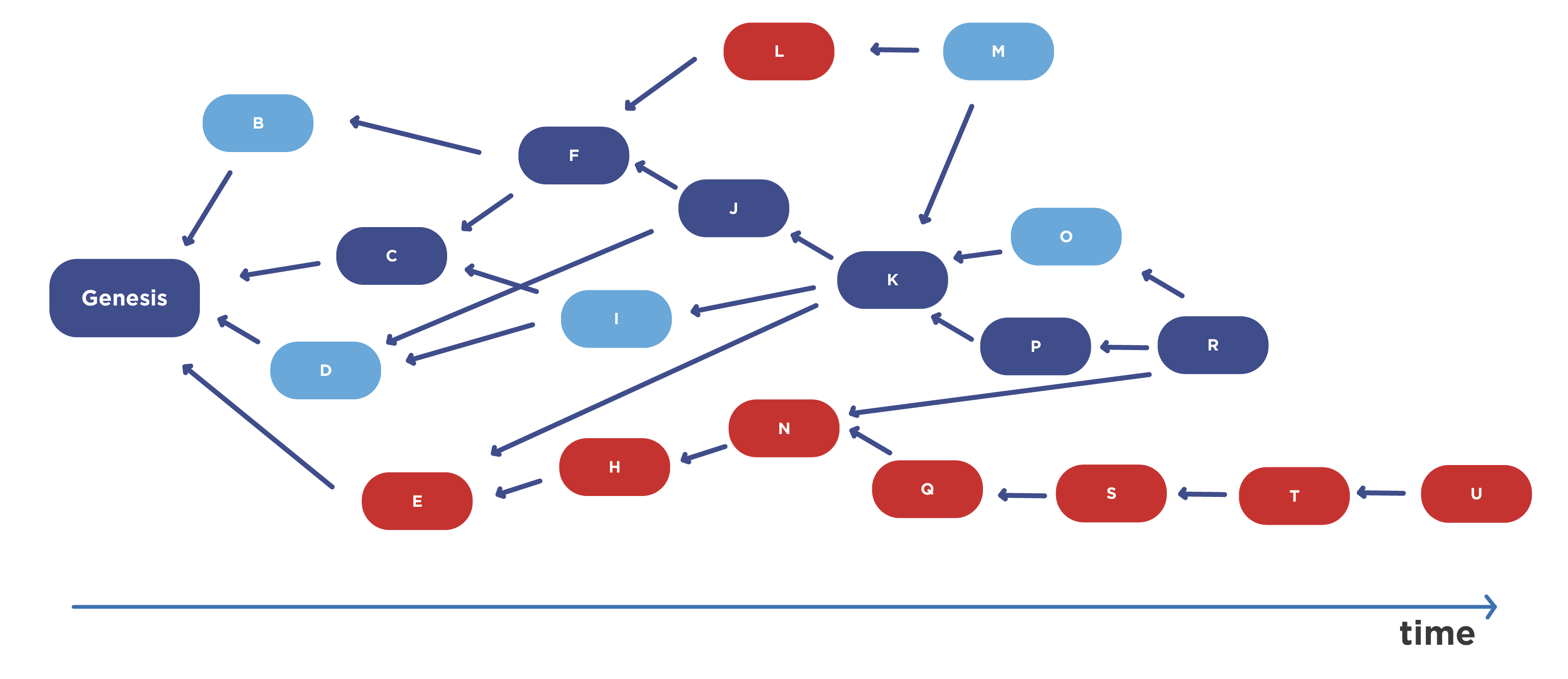

Consider a way to achieve the consensus that underlies bitcoin. Some of the blocks that are generated by honest users are still discarded - these are the so-called orphan blocks. These blocks are generated in parallel with the main chain, but most of the network decided that this chain should not be continued, and the blocks are discarded.

When there are few such blocks, there is nothing scary about it. However, if there are many of them, the network does not have time to synchronize and it turns out that some of the computing power of honest network users is wasted. This means that now the attacker must fight not for 51% of the computing power of the network, but in fact for a smaller percentage. If this value is 20%, then an attacker with a 20% computational power and a large delay in the delivery of network messages can realize a double-spending attack.

Therefore, in Bitcoin, the time interval between mined blocks lasting 10 minutes is set. Due to this, the network manages to synchronize accurately and the probability of the appearance of such blocks decreases. If you need to increase network bandwidth by increasing the frequency of block formation, you will need another solution.

The first such solution was the GHOST PoW protocol. Its simplified version is used in the Ethereum platform.

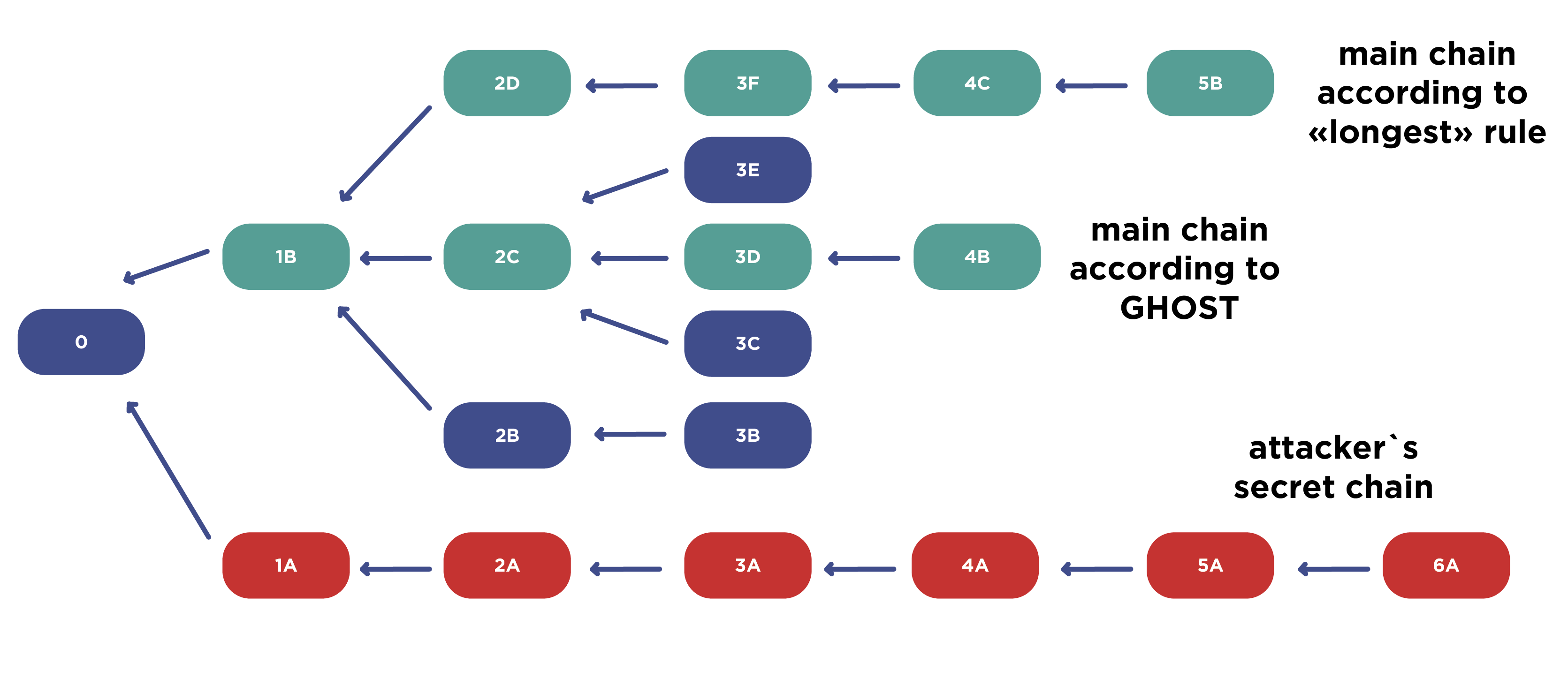

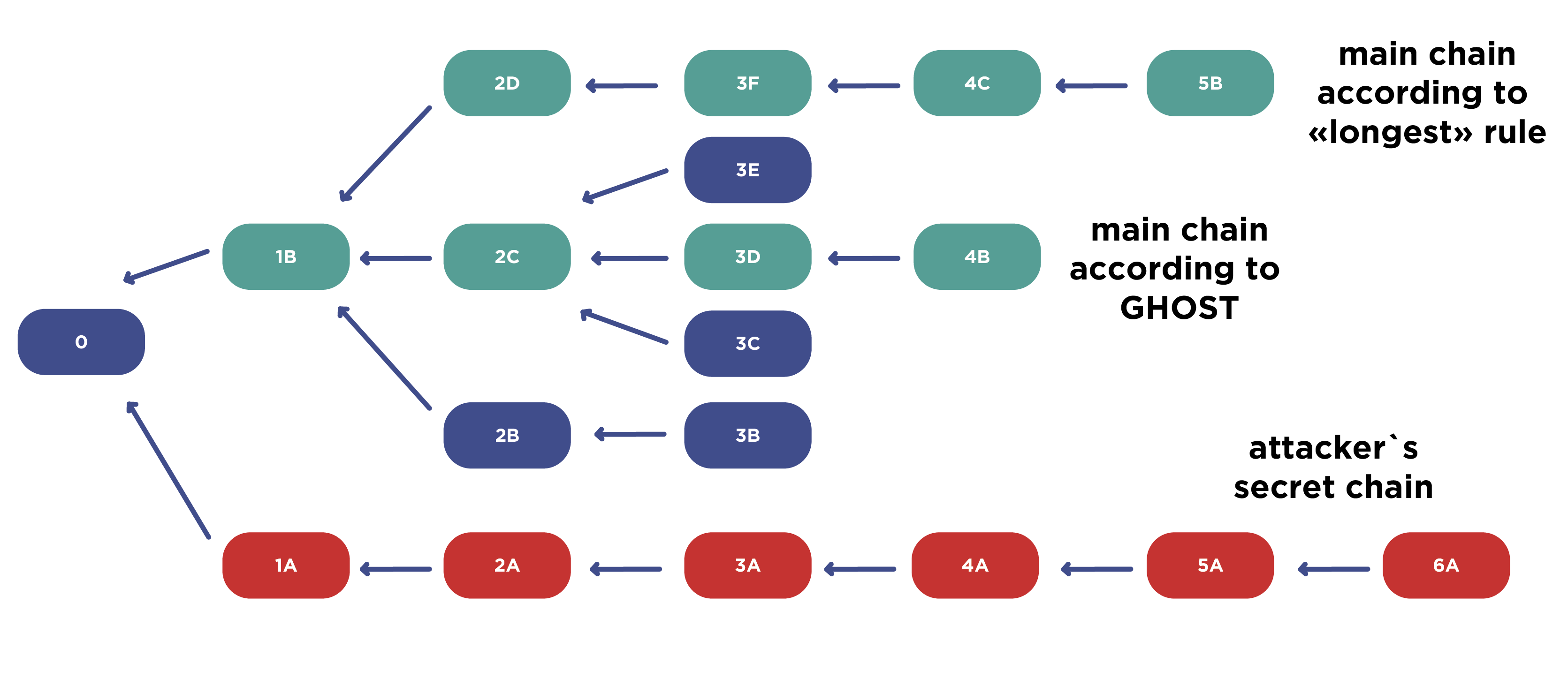

In this case, the attacker can pull his chain (indicated in red in the figure) and make it the longest, but it will not be advantageous. Honest users will follow the chain that they built before.

Honest users in this case may have two chains. The longest (1B-2D-3F-4C-5B), but it will be shorter than the attacker's chain. The peculiarity of GHOST is that the algorithm does not focus on the longest chain, but on the number of blocks in the tree formed by the current chain. It takes into account not only the length of the chain itself, but also the blocks at its various heights. Thus, it turns out not a linear chain, but a tree. It takes into account the number of blocks that it contains.

If you look at the chain 1B-2C-3D-4B, you can see the accompanying blocks 3E and 3C. By the number of blocks and the work expended, this chain has the greatest complexity and it will be taken as the main one. Honest users will continue to consider it the main one, despite attempts by the attacker to attack the network. In the traditional Nakamoto consensus, such an attack would have been successful, but for GHOST it does not pose a threat.

Nevertheless, the disadvantage of GHOST is the fact that some blocks are still lost. In this case, the 2D-3F-4C-5B chain will still be dropped. Therefore, the question of dropping blocks of honest users remains open.

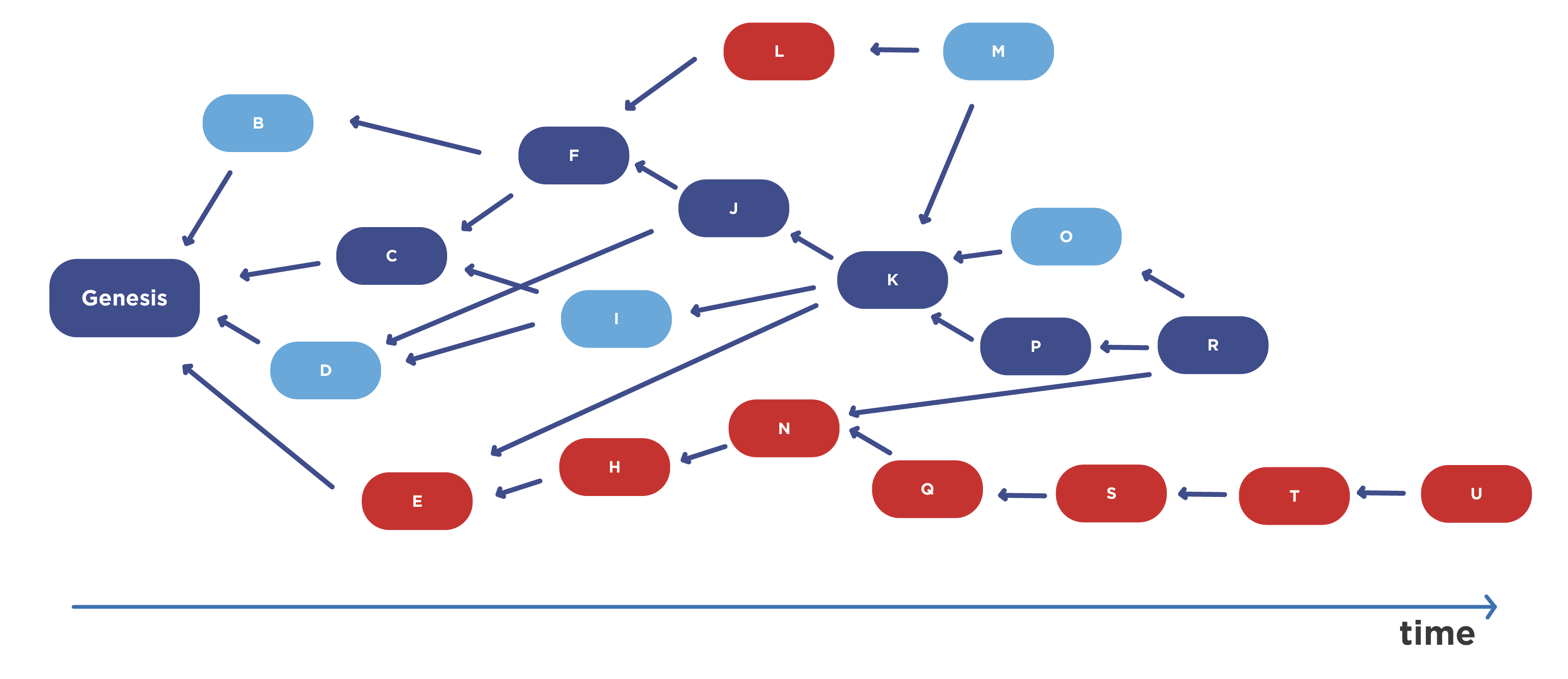

In order to increase the frequency of block formation and solve the problem of dropping blocks of honest users, two more PoW protocols were proposed: SPECTER and PHANTOM.

They use not even a tree structure, but a so-called directed acyclic graph (directed acyclic graph, DAG). In this case, the validator includes in the header of its block pointers to blocks that other validators do not refer to, at least in the network state that it sees at the current time, and sends the block further.

Therefore, it turns out such a structure, which generally includes all the blocks that validators see at the current time. Here, the mining power of honest users is not lost at all. Moreover, it provides high network bandwidth and high security. The advantage of this approach is the fact that the system is truly decentralized.

Let's compare the features of the Bitcoin network and the network that operates using SPECTER and PHANTOM protocols. If we talk about the current position in the Bitcoin network, then great importance should be given to mining pools. In modern Bitcoin 144 blocks are released per day. This figure is obtained if the number of minutes in a day is divided by 10. It may be quite possible that the validator bought the equipment, paid for electricity for a long time, but it did not work out to generate the unit, but during this time the equipment is outdated and use it more it makes no sense. To avoid this situation, enterprising validators are combined into mining pools. In most mining pools there is a lead (company / organization), which inclines modern Bitcoin to centralize the process of validating new transactions.

In the case of SPECTER and PHANTOM, mining pools are not needed at all. If we draw a parallel with the modern Bitcoin network, then each user of the network that uses the SPECTER and PHANTOM protocols has a chance to release one block per day. Thus, in the mining pools there is no need at all and we come to a truly decentralized network.

So, SPECTER and PHANTOM protocols provide a high level of decentralization and high network bandwidth, but a large amount of information is required to be stored.

The next type of protocol that is applied is the BFT protocols (Byzantine Fault Tolerance).

The name is derived from the comic description of the problem that was made in the 80s of the 20th century when developing a protocol for highly reliable systems. An example was given about the Byzantine generals who are organizing an attack on a city. The task of the generals was to come to a single solution. But it was necessary to take into account that among them there are several traitors. Traitors could behave in an arbitrary manner and, moreover, they could influence the transfer of messages between honest generals, for whom it is important to either simultaneously attack the city, or simultaneously retreat. If one of the honest generals retreats, the enemy will defeat the army piece by piece. Therefore, they needed to come to an agreed decision. The formulation of the problem in this way was done in the work, from which came the name of the algorithm for achieving consensus.

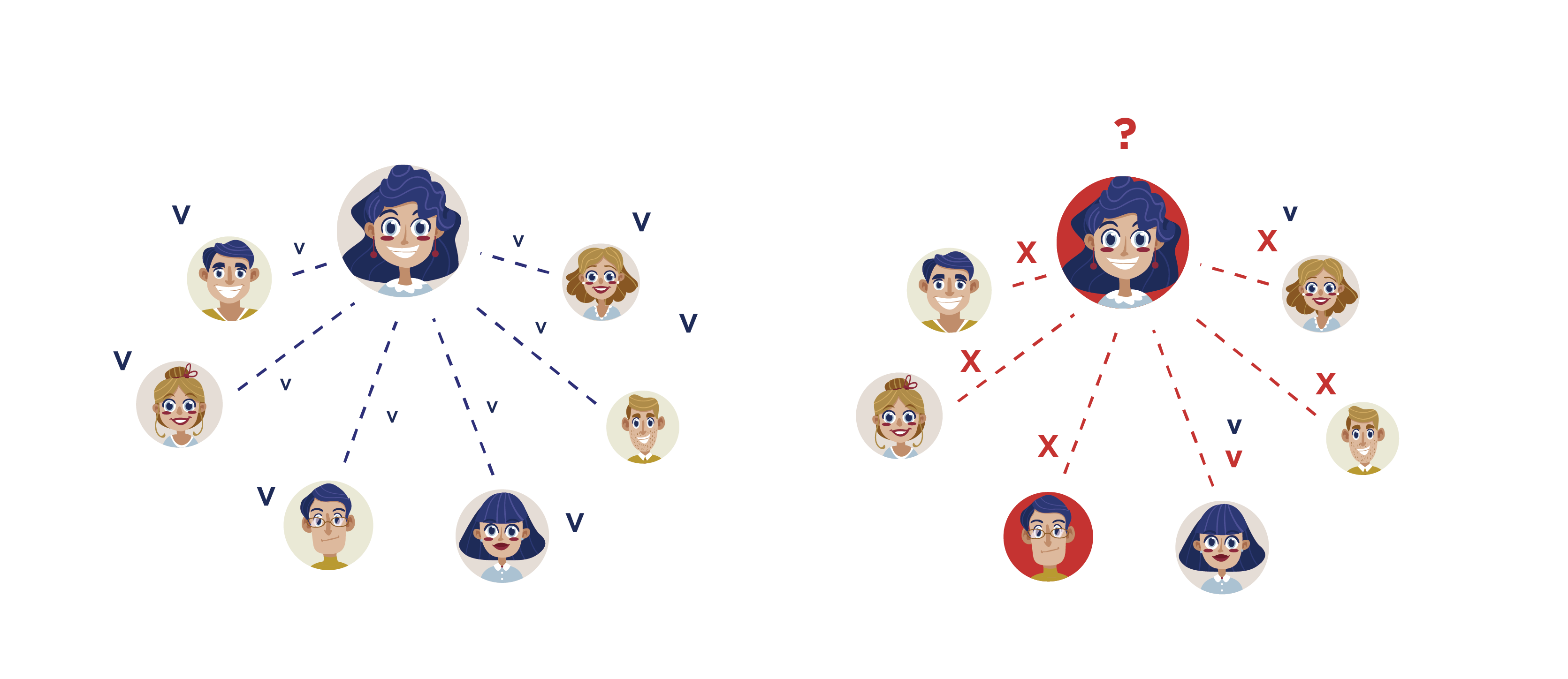

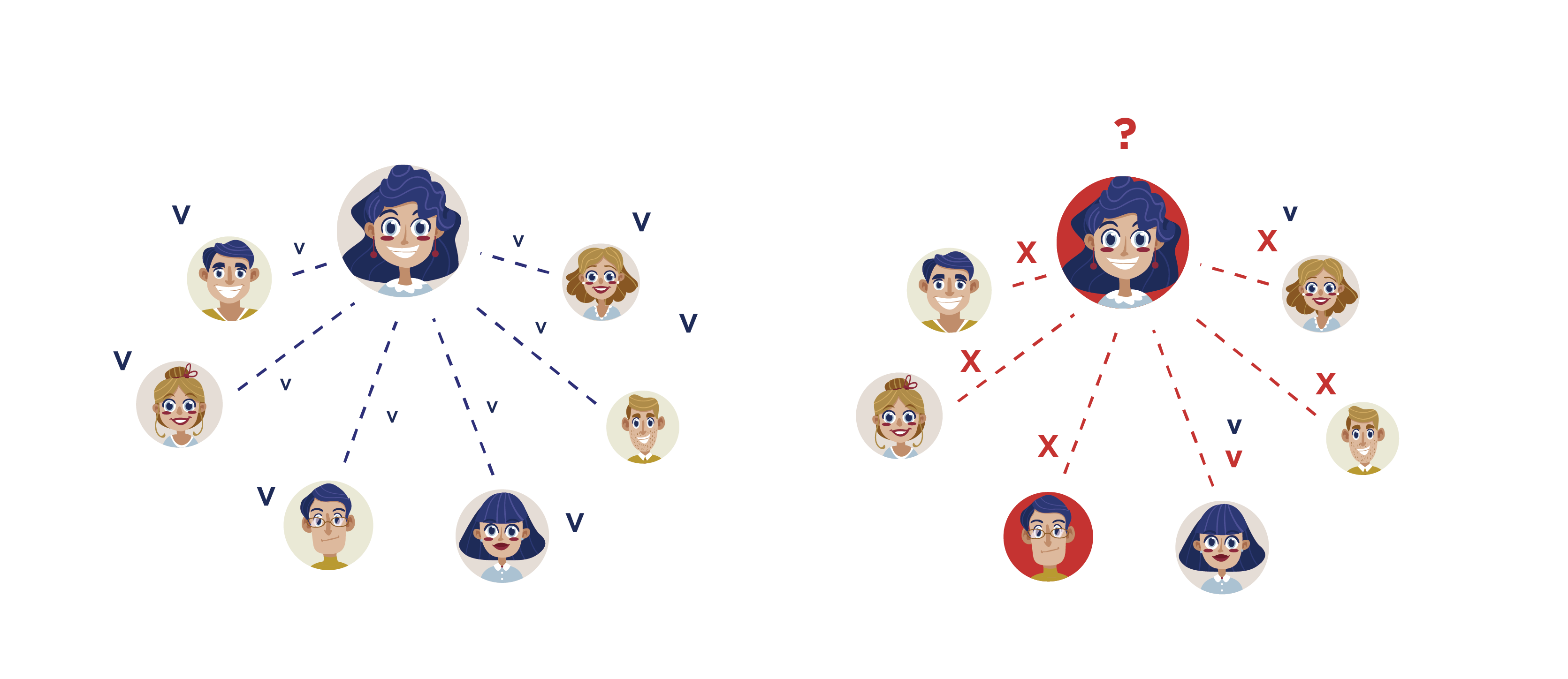

For BFT protocols, peer-to-peer communication is assumed. It is assumed that there are a certain number of intruders who can act in a coordinated manner. They are unknown to honest participants. The network may have failures and delays, that is, some messages may disappear, and some may come with a long delay. However, a limit is imposed on the number of steps during which all honest validators must come to a common solution. In the example task there are two options: attack or retreat.

It is assumed that honest nodes are more than participants. BFT protocols are guaranteed to come to a common solution, which cannot be canceled in the future. For non-BFT protocols, the probability of reversal is exponentially decreasing, but not zero.

At the core of Bitcoin and other modern widespread cryptocurrencies are not BFT protocols. For Bitcoin, there is a non-zero probability, which is negligible, that there is an alternative chain extending, for example, from the previous month, year, or even from the Genesis block. The probability of cancellation of the current chain is exponentially decreasing, but it exists. In the case of a BFT protocol, the decision cannot be canceled.

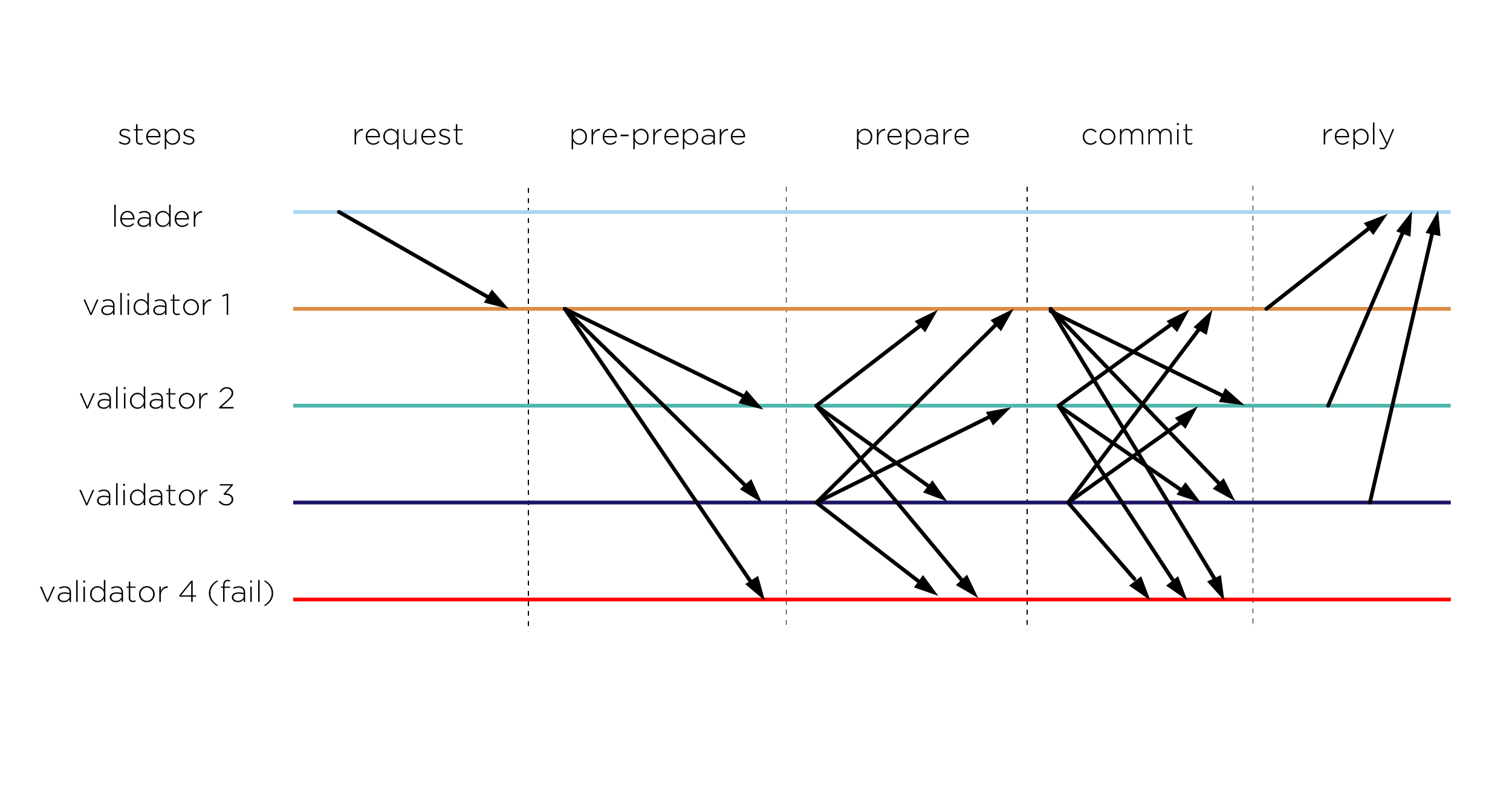

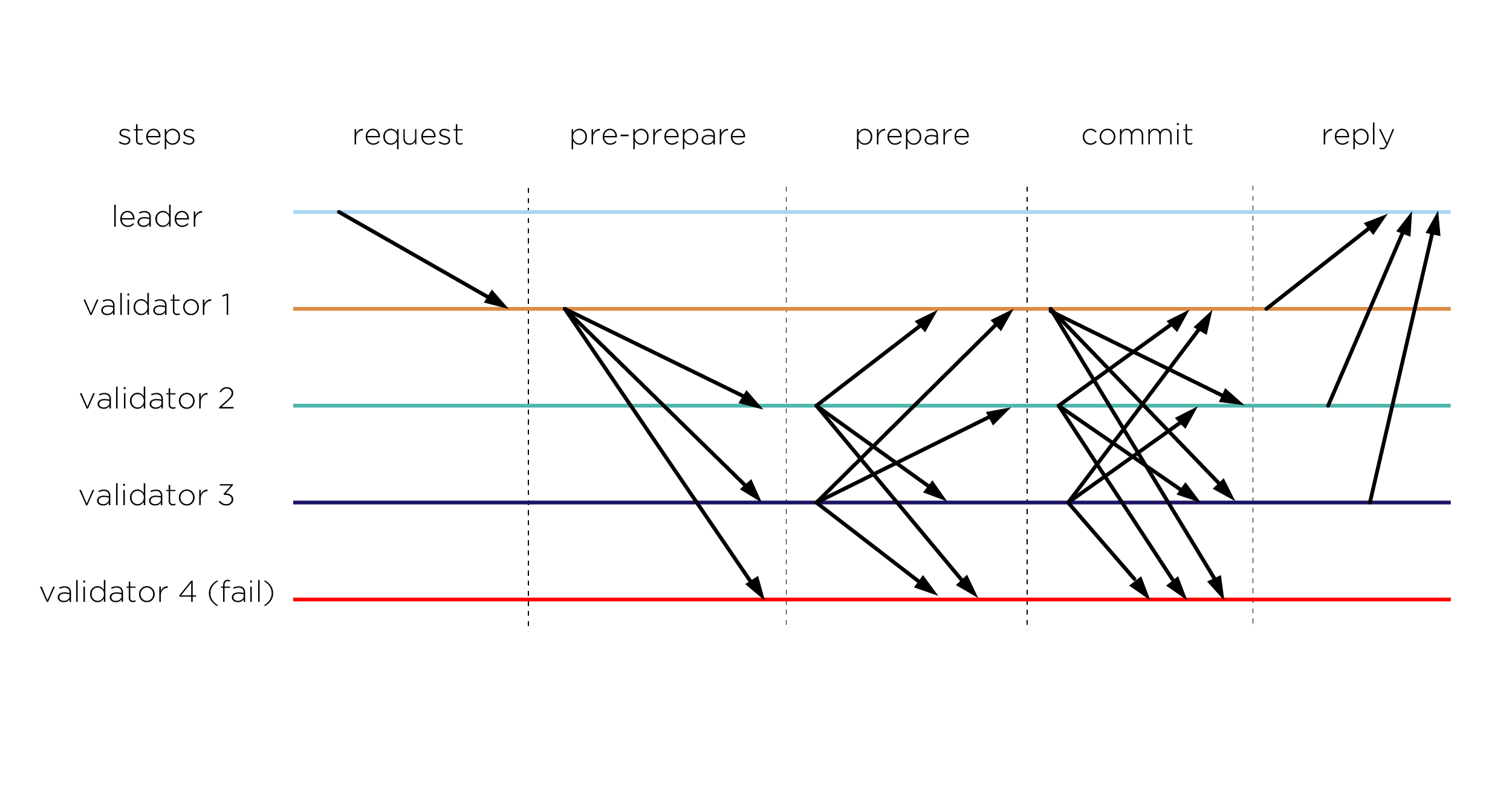

The first protocol that was put into practice was called Practical BFT. It was proposed in 1999. He has a fairly simple functioning. The client accesses the server that he chooses the leader. The leader sends these messages to other servers. After this, the servers inform each other that certain messages have arrived and they need to be entered into a joint database. Each validator must confirm that he received confirmation from ⅔ other participants.

When the validator received confirmation from ⅔ other participants about the inclusion of the message in its copy of the database, it is entered into the local copy of this validator.

The protocol is energy efficient, provides high bandwidth and fast transaction confirmation, but unfortunately works for a small number of validators. If we design a cluster, file system or cloud on the basis of this protocol, then it works efficiently, but if we start using a large number of nodes, we get a rapid increase in the number of messages and a very large increase in network load. In the latter case, the protocol does not work efficiently, especially when the network works with delays, skips messages, etc.

In addition, the basic version assumes the presence of a leader, which can be a DoS attack point for stopping the work of the accounting system by an attacker.

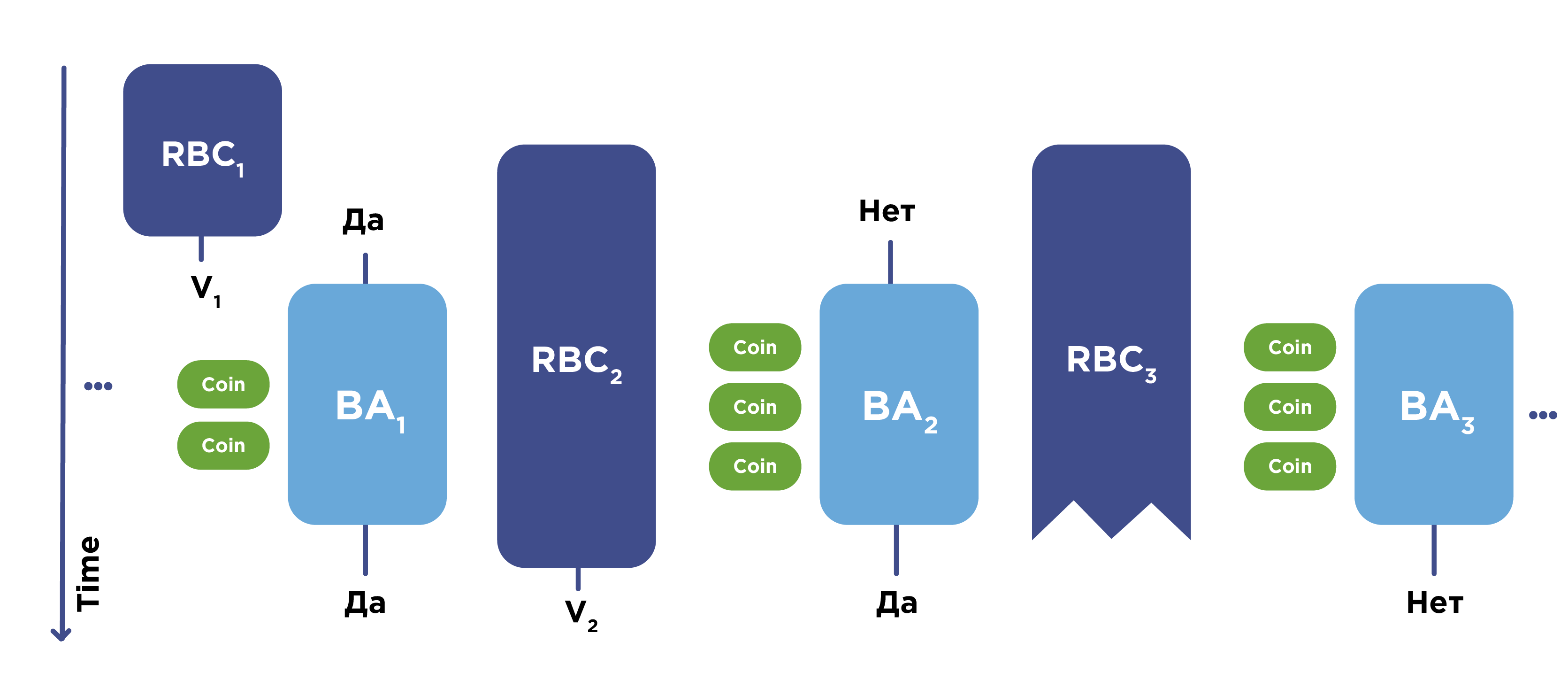

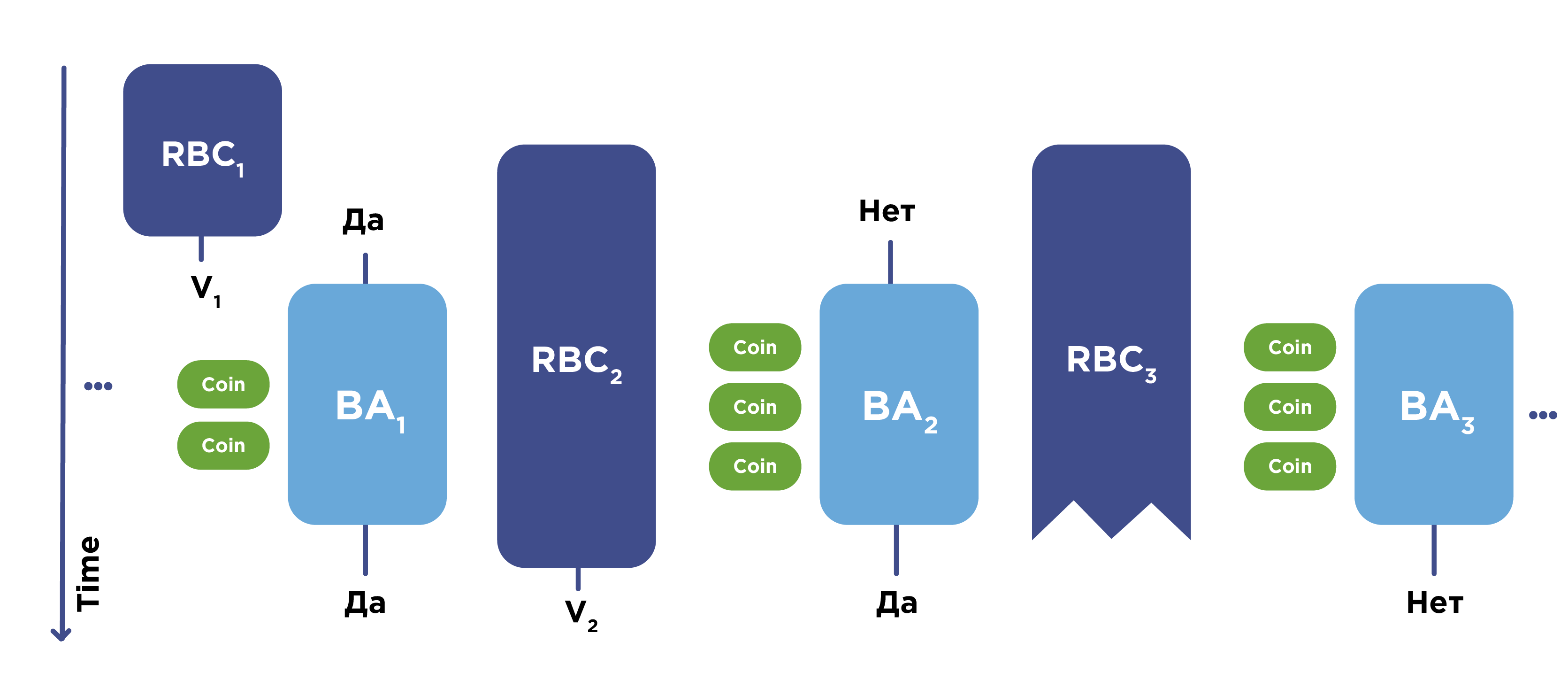

HoneyBadger BFT has proven itself and was developed as an improved version of one of the existing algorithms. It uses a number of features, including cryptographic protection of all messages that are transmitted over the network. Transactions are decrypted only after reaching a certain agreement on them. HoneyBadger consists of two protocols: RBC (Reliable broadcast) and BA (Byzantine Agreement).

Using the RBC protocol, messages are delivered over the network. This protocol completes its work only after it receives confirmation that at least ⅔ honest validators received the required set of messages. After that, the validators go to the BA-protocol and the coordination of the transactions themselves, which are then sent to the network.

This protocol provides strong cryptographic protection. It works well in networks with poor data transmission quality, where there are gaps in transmitted messages and delays in their delivery, as well as in networks with serious malicious interference with work. There is no leader here. The protocol suffers when trying to scale significantly: with hundreds of validator nodes, it still works fine, but with several thousand it already puts too much load on the network and the transaction confirmation time is greatly increased. In a fully decentralized network of the accounting system, where thousands of validators work, the protocol becomes ineffective.

The result of further development are the Algorand and Hashgraph protocols.

Algorand protocol was proposed in 2017. He uses the same BFT approach, but he is focused on the fact that among all the participants a certain subcommittee is selected at random, which performs the confirmation of transactions. Moreover, this confirmation occurs in several stages, at each of which a subcommittee is selected.

The protocol guarantees with a high probability close to one that the subcommittee is honest, if more than ⅔ of the network members are honest, that is, each of the subcommittees will also have ⅔ honest participants. There is no leader here, so there is no point for a denial of service attack (DoS attacks). This protocol provides a high bandwidth accounting system, fast transaction confirmation, as well as protection against adaptive corruption.

It is assumed that an attacker can choose an arbitrary validator and declare that it is he who works in his interests. An attacker can choose the nodes that will work for him (mandatory for correct work is the condition that there are more honest participants участников). And even in such conditions, the protocol provides durability.

Since this is a BFT protocol, in any case, it provides safety and persistence properties. And the rest of its properties will depend on delays in the transmission of data via communication channels.

If this protocol works in a problem network, there is a threat of liveness, due to the fact that new transactions may not receive confirmation. If there are too long delays on the network or an attacker gets the opportunity to throw out the messages that he needs to throw out to achieve the goal, then this will not affect the old (already confirmed) transactions. He will not be able to cancel them. The bottom line is that he will be able to reject the acceptance of new transactions.

The Hashgraph protocol is a fresh solution with very good properties. Each of the validators collects their transactions, randomly selects another participant and sends him data about his transactions, as well as information that other nodes informed him when they connected to him.

So, the operation is very simple: the validator “listens”, accepts all the messages that come to him, and then arbitrarily chooses another participant and sends him all the transactions with his.

Hashgraph ensures that in a finite number of steps, that is, rounds of the protocol, all nodes come to a single solution. The protocol works quickly and scales well. It requires more traffic than Algorand, but at the same time is very efficient. Hashgraph provides the properties of safety and liveness, that is, adding new transactions and coming to a single solution. It even works in a fully asynchronous network, when messages can be delayed for a very long period of time or thrown out altogether.

In addition, there is no leader in the network, which allows an attacker to disable those nodes that are interesting to him, which can force them to work according to their intentions. However, while there are ⅔ honest users, the protocol provides a high level of security.

Let's summarize the BFT protocols. They provide very high throughput and allow validators to agree on the status of transactions for a fixed number of steps. These properties allow them to be successfully used in cryptocurrencies. The protocol ensures reliable operation even in unreliable networks, provided that more than ⅔ of the validators of the accounting system are honest. Protocols such as Algorand and Hashgraph support high bandwidth and they can have a really large number of validators.

At the same time, they have a very serious drawback: in the current state of development, protocols have been developed for the so-called permissioned accounting systems. In other words, they are designed without considering the incentive for validators to work honestly in the network itself. It is assumed that such incentives are outside the scope of this protocol. Rewards for the formation of the block, various strategies of user behavior are not considered.

To deploy such protocols to work in permissionless accounting systems, researchers will need to carry out serious work. In this regard, they have a wide field for research from the point of view of game theory and from the point of view of developing a reward system for validators, etc.

Distributed Lab has prepared this article based on video lecture material Roman Oleynikov.

- Is it possible in the near future to introduce a radically new consensus protocol and is there any development?

Now, indeed, consensus protocols are being actively developed. Some of them we discussed today: Algorand and Hashgraph from the BFT protocols, as well as the protocol based on the Ouroboros PoS consensus. At the beginning of 2018, the PHANTOM protocol was presented. These are new protocols with new principles.

- How does Ouroboros ensure that the list of nodes that have the right to form a block at certain time intervals is the same for all nodes?

The issue is related to the grinding attacks mentioned above. Ouroboros provides persistence and liveness properties: if a transaction hit at a certain point in time and received confirmation, then it can no longer be canceled or deleted, since the probability of replacing this block tends to zero. In other words, the developers believe that the transaction cannot disappear from the shared database, since it is available to anyone.

Due to the persistence property, all network nodes form the same set of transactions in their local copy of the database. Potential validators send transactions with their own randomness, which is initially hidden, and at the time when the MPC protocol ends, everyone can calculate the resulting randomness. All users have a consistent transaction history, so that all nodes get the same list of actual validators. It is impossible to manipulate randomness here, because the MPC protocol provides properties related to the fact that none of the participants can receive this randomness until all have entered it into the blockchain. Only after this accident is revealed. Even if someone does not want to open his chance, the protocol itself guarantees that this data will be opened. Everyone has the same vision of history and chance.

- In which book can you read about the algorithms for achieving consensus, discussed in this chapter?

Unfortunately, there are no such books yet. There are only selected scientific papers and articles. You can see articles on PHANTOM, SPECTER and Ouroboros. You can see the site where these articles can be found by title.

- Why so many blocks to issue in PHANTOM and SPECTER, if there is no such a large number of transactions? Most blocks will be empty or contain few transactions.

PHANTOM and SPECTER are focused on scaling. For Bitcoin, there have often been situations where transactions with a low commission could be on the network unconfirmed even for several months. PHANTOM and SPECTER guarantee that all transactions will be included in the blocks and network bandwidth will be increased. These protocols can provide tens of thousands of transactions per second, with each receiving a confirmation. In other words, they can provide very low transaction costs in the network itself. If there are no transactions, then you can really release empty blocks.

- How and by whom in BFT is selected the leading server?

It depends on the specific protocol. If we are talking about PBFT, then in this case a specific user chooses the leading server that will conduct his transaction. If we are talking about Algorand or Hashgraph, there is no leader at all.

- What will happen if in PBFT the initial request comes to a compromised node?

In this case, the compromised (or inoperative) node may not process it. If a user randomly selects a node to which he refers, and we have two thirds of honest nodes, then in this case his transaction will receive a confirmation with a high probability after several attempts.

- Is it correct to say that Algorand is something like DPoS (Delegated Proof-of-stake)

No, this is not entirely correct. Delegated Proof-of-stake implies that users must select a validator and delegate his right to vote. Here the nodes are selected to confirm transactions really random. If you look far into the future and consider the options for building cryptocurrency on Algorand, then you really need to develop delegation options, but delegation does not need to work for the protocol itself.

- Which of the listed protocols are not yet applied, but are considered promising in the future?

SPECTER and PHANTOM are really promising protocols. And SPECTER developers are working to launch a new digital currency.

Note that in a decentralized network, the principle of redundancy applies, which is based on the fact that nodes can do the same work. For a centralized network, this, of course, is inefficient, and for a decentralized network, this is imperative. We turn to the basic requirements.

Consensus protocol requirements

Protocols must ensure reliable operation of users in fairly harsh conditions and at the same time meet the minimum requirements. The following are the main ones.

The absence of a central trusted party . The network consists of peers. If an intruder or a third party tries to disable a certain number of nodes, the network will continue to operate normally until honest participants monitor the vast majority of network nodes.

Honest members do not know which nodes are controlled by attackers. It is assumed that other nodes can fail at any time or function in an arbitrary manner, including coordinated by attackers to carry out an attack on the network. Again, honest participants do not know which nodes are honest and which are bad, or unreliable.

It is assumed that the network is deliberately unreliable . Some messages may be delivered with a serious delay, while others may be lost on the network and remain completely undeliverable. It is in such conditions that the decentralized consensus should continue to function normally: all honest nodes should come to the same state of the database of confirmed transactions.

Protocols must be completely formal. There should be no additional human involvement and no additional data is required. All honest nodes must come to the same solution, completely following the algorithm that is performed by the computer.

In addition, there are certain assumptions under which the protocol ensures correct operation . Honest nodes should make up the majority of all participants: more than ½ or ⅔ of their total number. However, the decision time is not limited. The limit may concern the number of steps to make a decision, but not the time.

Consensus protocols

Digital currencies

First of all, this applies to cryptocurrencies: Bitcoin uses the Nakamoto algorithm, Ethereum uses a simplified version of GHOST, Bitshares implements the Delegated PoS, etc.

It should be noted that a community that supports a certain digital currency is not always numerous and decentralized. There are a number of other digital currencies that are centralized. However, this does not mean that a mechanism for achieving consensus is not needed. For example, Ripple uses the BFT protocol in a centralized environment.

Highly reliable and critical systems

Consensus protocols are used in highly reliable computing systems. They can be used to access distributed databases in the construction of clusters, and also necessarily used in critical technical systems: these can be control systems for aviation equipment, control systems for nuclear reactors, as well as in space technology.

In 2018, February 6, the Falcon Heavy was launched. It was interesting to watch him and read technical reviews. But it was equally interesting to read about which algorithms for reaching agreement the command uses. Engineers were forced to use simple electronics, which are sold in regular stores and do not work reliably in difficult space conditions. Therefore, they apply multiple redundancy in their work and consensus in this case is necessary.

Cryptocurrencies

Cryptocurrencies work in peer-to-peer networks. In this case, messages or transactions are transmitted between network participants at arbitrary points in time. Suppose there are members in the US and members in Australia. Payments that are sent from the United States will be seen by participants from America before payments sent from Australia. This is due to the presence of serious, by computer standards, delays in the delivery of messages from one continent to another. The situation will be similar for Australians, because they will see payments from Australia earlier than payments from the United States.

The situation described above means that different network members will see at one point in time they will see a different final state of the database with transactions. Since transactions come from users to validators at different times, a protocol is needed that will allow each node, regardless of its location, to receive all transactions in the same order.

Basically, two approaches are used for the operation of accounting systems cryptocurrency: PoW, which is the most common, and PoS, which has been actively developing lately.

In PoW, an extra amount of work is being done, which now consists of finding a prototype of the hash function. In fact, there is an artificial slowdown of the network to ensure security. To accomplish a malicious act, an attacker will have to perform the required amount of work. This requires a huge amount of energy and makes the implementation of attacks not very effective. Through this approach, network reliability is ensured. However, the need to perform such a resource-demanding task limits the bandwidth of the network that operates under this protocol. But over the PoW approach, scientists continue to work and propose alternative schemes, as well as other resource-intensive tasks, in addition to finding the prototype of the hash function. Recently, an algorithm based on proof-of-space-and-time has been proposed..

PoS allows you to provide higher network bandwidth and does not require excessive energy costs, like PoW. However, PoS requires more serious analysis in the development and implementation of cryptocurrency.

In PoS-based accounting systems, it is assumed that it is unprofitable for users who own a large number of coins to make attacks. If an entrepreneur invests money in equipment and pays electricity in accounting systems based on the PoW system, and then makes a successful attack on the system, he will lose his investment in mining. PoS uses a different approach: if it is assumed that the attacker has invested in specific coins, in whose values he is interested, then in the event of a successful attack on the system, he will lose his investments, as coins will depreciate. Therefore, honest adherence to the protocol is considered to be the most profitable strategy.

Short glossary

Safety - the ability of the accounting system to maintain the basic principles of operation and the interests of honest participants in any malicious influences.

Finality is a property that indicates the irrevocability of the decision made or confirmed data.

Liveness is a property that ensures that if all honest participants want to add an entry to the common database, then it will eventually be added there.

Persistence - the ability of the accounting system to maintain the immutability of the final state of its database even after all its validators have failed.

Permissioned - indicates a restriction, that is, the need to obtain permission to participate in some process.

Permissionless - means free access to take part in a process.

Protocol for achieving consensus Ouroboros

Ouroboros is a PoS-based protocol that achieves consensus between the validators of Cardano’s digital currency transactions. Moreover, the algorithm itself is the first provably persistent among all PoS-alternatives.

First, we consider a simpler option, oriented at the static stake, when it is assumed that the existing distribution of coins does not change.

There is some genesis block and users form new transactions, but they do not significantly affect distribution.

Genesis block contains data with some random value, with the help of which validators are selected. They allow you to release a block at a certain point in time. A validator who has received such a right collects transactions, receiving them from another validator, checks the correctness and releases the block into the network. If he sees several chains, he chooses the longest of them and attaches a block to it.

In the context of a situation with a static stake, we can use this approach for a certain period of time, but then the distribution of the stake (stake distribution) between different users may change. In other words, part of the money goes from one user to another, and the probability of obtaining the right to choose a unit must be adjusted.

Note: static stake implies that a validator's stake time period is considered unchanged. The validator may at this time participate in the decision making and make payments, but the number of coins in his stake, and hence the weight of his vote, will remain unchanged until the next time period.

In the case of dynamic stake, time is divided into slots, and slots are divided into epochs. The duration of one epoch is approximately equal to the duration of one day. This ratio is determined from the fact that during this period of time the distribution of coins cannot change significantly.

At the end of an era, the current distribution of coins for each of the users is recorded. In addition, a new randomness value is generated to ensure that, in the next era, users who are given the right to generate blocks will indeed be randomly selected according to the number of coins they have.

Similarly, protection against so-called grinding attacks occurs when a particular user can iterate through various block options, different randomness variants, to form the chain in which he can maximize his profit. Such attacks are potentially vulnerable cryptocurrency based on the first-generation PoS-protocols, such as Peercoin and NXT.

The creators of this algorithm solved the above problems. Validators launch a special protocol between themselves, called MPC (multi-party computation), which makes it possible to generate randomness together. This protocol is also provably persistent, it is based on the already well-known approaches.

The Ouroboros protocol provides durability under the condition of the majority of honest validators in the system. If honest participants who work on the release of blocks control more than 50% of the coins in the system, the protocol can be considered protected.

A mechanism of incentives (motivation) to honest behavior has been developed. With the help of game theory, it is proved that the validator gets the maximum benefit when he follows the rules of the protocol. Any participation in the commission of attacks not only does not increase the participant's profit, but in some cases may reduce it. Therefore, the most profitable strategy for a validator is to honestly follow the rules of the protocol.

The capacity of the accounting system will be limited only by delays during network synchronization. This protocol provides high energy efficiency compared to proof-of-work, since mining farms are not needed here. Now for the collection of transactions and the release of blocks rather ordinary personal computer. In the future, these calculations can be carried out even on a regular smartphone.

The limitations of the Ouroboros protocol include the fact that the first version of the protocol is synchronous. This means that messages between participants must be delivered in a limited period of time. If the network will appear longer delays than laid down in the rules, it can reduce security. Nevertheless, the use of the next version of the protocol, Ouroboros Praos, is already planned. In it, even with increased network delays, complete security is guaranteed.

Problems with PoW in Bitcoin

Consider a way to achieve the consensus that underlies bitcoin. Some of the blocks that are generated by honest users are still discarded - these are the so-called orphan blocks. These blocks are generated in parallel with the main chain, but most of the network decided that this chain should not be continued, and the blocks are discarded.

When there are few such blocks, there is nothing scary about it. However, if there are many of them, the network does not have time to synchronize and it turns out that some of the computing power of honest network users is wasted. This means that now the attacker must fight not for 51% of the computing power of the network, but in fact for a smaller percentage. If this value is 20%, then an attacker with a 20% computational power and a large delay in the delivery of network messages can realize a double-spending attack.

Therefore, in Bitcoin, the time interval between mined blocks lasting 10 minutes is set. Due to this, the network manages to synchronize accurately and the probability of the appearance of such blocks decreases. If you need to increase network bandwidth by increasing the frequency of block formation, you will need another solution.

Ghost

The first such solution was the GHOST PoW protocol. Its simplified version is used in the Ethereum platform.

In this case, the attacker can pull his chain (indicated in red in the figure) and make it the longest, but it will not be advantageous. Honest users will follow the chain that they built before.

Honest users in this case may have two chains. The longest (1B-2D-3F-4C-5B), but it will be shorter than the attacker's chain. The peculiarity of GHOST is that the algorithm does not focus on the longest chain, but on the number of blocks in the tree formed by the current chain. It takes into account not only the length of the chain itself, but also the blocks at its various heights. Thus, it turns out not a linear chain, but a tree. It takes into account the number of blocks that it contains.

If you look at the chain 1B-2C-3D-4B, you can see the accompanying blocks 3E and 3C. By the number of blocks and the work expended, this chain has the greatest complexity and it will be taken as the main one. Honest users will continue to consider it the main one, despite attempts by the attacker to attack the network. In the traditional Nakamoto consensus, such an attack would have been successful, but for GHOST it does not pose a threat.

Nevertheless, the disadvantage of GHOST is the fact that some blocks are still lost. In this case, the 2D-3F-4C-5B chain will still be dropped. Therefore, the question of dropping blocks of honest users remains open.

SPECTER and PHANTOM

In order to increase the frequency of block formation and solve the problem of dropping blocks of honest users, two more PoW protocols were proposed: SPECTER and PHANTOM.

They use not even a tree structure, but a so-called directed acyclic graph (directed acyclic graph, DAG). In this case, the validator includes in the header of its block pointers to blocks that other validators do not refer to, at least in the network state that it sees at the current time, and sends the block further.

Therefore, it turns out such a structure, which generally includes all the blocks that validators see at the current time. Here, the mining power of honest users is not lost at all. Moreover, it provides high network bandwidth and high security. The advantage of this approach is the fact that the system is truly decentralized.

Let's compare the features of the Bitcoin network and the network that operates using SPECTER and PHANTOM protocols. If we talk about the current position in the Bitcoin network, then great importance should be given to mining pools. In modern Bitcoin 144 blocks are released per day. This figure is obtained if the number of minutes in a day is divided by 10. It may be quite possible that the validator bought the equipment, paid for electricity for a long time, but it did not work out to generate the unit, but during this time the equipment is outdated and use it more it makes no sense. To avoid this situation, enterprising validators are combined into mining pools. In most mining pools there is a lead (company / organization), which inclines modern Bitcoin to centralize the process of validating new transactions.

In the case of SPECTER and PHANTOM, mining pools are not needed at all. If we draw a parallel with the modern Bitcoin network, then each user of the network that uses the SPECTER and PHANTOM protocols has a chance to release one block per day. Thus, in the mining pools there is no need at all and we come to a truly decentralized network.

So, SPECTER and PHANTOM protocols provide a high level of decentralization and high network bandwidth, but a large amount of information is required to be stored.

BFT protocols

The next type of protocol that is applied is the BFT protocols (Byzantine Fault Tolerance).

The name is derived from the comic description of the problem that was made in the 80s of the 20th century when developing a protocol for highly reliable systems. An example was given about the Byzantine generals who are organizing an attack on a city. The task of the generals was to come to a single solution. But it was necessary to take into account that among them there are several traitors. Traitors could behave in an arbitrary manner and, moreover, they could influence the transfer of messages between honest generals, for whom it is important to either simultaneously attack the city, or simultaneously retreat. If one of the honest generals retreats, the enemy will defeat the army piece by piece. Therefore, they needed to come to an agreed decision. The formulation of the problem in this way was done in the work, from which came the name of the algorithm for achieving consensus.

For BFT protocols, peer-to-peer communication is assumed. It is assumed that there are a certain number of intruders who can act in a coordinated manner. They are unknown to honest participants. The network may have failures and delays, that is, some messages may disappear, and some may come with a long delay. However, a limit is imposed on the number of steps during which all honest validators must come to a common solution. In the example task there are two options: attack or retreat.

It is assumed that honest nodes are more than participants. BFT protocols are guaranteed to come to a common solution, which cannot be canceled in the future. For non-BFT protocols, the probability of reversal is exponentially decreasing, but not zero.

At the core of Bitcoin and other modern widespread cryptocurrencies are not BFT protocols. For Bitcoin, there is a non-zero probability, which is negligible, that there is an alternative chain extending, for example, from the previous month, year, or even from the Genesis block. The probability of cancellation of the current chain is exponentially decreasing, but it exists. In the case of a BFT protocol, the decision cannot be canceled.

BFT protocol examples

Practical bft

The first protocol that was put into practice was called Practical BFT. It was proposed in 1999. He has a fairly simple functioning. The client accesses the server that he chooses the leader. The leader sends these messages to other servers. After this, the servers inform each other that certain messages have arrived and they need to be entered into a joint database. Each validator must confirm that he received confirmation from ⅔ other participants.

When the validator received confirmation from ⅔ other participants about the inclusion of the message in its copy of the database, it is entered into the local copy of this validator.

The protocol is energy efficient, provides high bandwidth and fast transaction confirmation, but unfortunately works for a small number of validators. If we design a cluster, file system or cloud on the basis of this protocol, then it works efficiently, but if we start using a large number of nodes, we get a rapid increase in the number of messages and a very large increase in network load. In the latter case, the protocol does not work efficiently, especially when the network works with delays, skips messages, etc.

In addition, the basic version assumes the presence of a leader, which can be a DoS attack point for stopping the work of the accounting system by an attacker.

HoneyBadger BFT

HoneyBadger BFT has proven itself and was developed as an improved version of one of the existing algorithms. It uses a number of features, including cryptographic protection of all messages that are transmitted over the network. Transactions are decrypted only after reaching a certain agreement on them. HoneyBadger consists of two protocols: RBC (Reliable broadcast) and BA (Byzantine Agreement).

Using the RBC protocol, messages are delivered over the network. This protocol completes its work only after it receives confirmation that at least ⅔ honest validators received the required set of messages. After that, the validators go to the BA-protocol and the coordination of the transactions themselves, which are then sent to the network.

This protocol provides strong cryptographic protection. It works well in networks with poor data transmission quality, where there are gaps in transmitted messages and delays in their delivery, as well as in networks with serious malicious interference with work. There is no leader here. The protocol suffers when trying to scale significantly: with hundreds of validator nodes, it still works fine, but with several thousand it already puts too much load on the network and the transaction confirmation time is greatly increased. In a fully decentralized network of the accounting system, where thousands of validators work, the protocol becomes ineffective.

The result of further development are the Algorand and Hashgraph protocols.

Algorand

Algorand protocol was proposed in 2017. He uses the same BFT approach, but he is focused on the fact that among all the participants a certain subcommittee is selected at random, which performs the confirmation of transactions. Moreover, this confirmation occurs in several stages, at each of which a subcommittee is selected.

The protocol guarantees with a high probability close to one that the subcommittee is honest, if more than ⅔ of the network members are honest, that is, each of the subcommittees will also have ⅔ honest participants. There is no leader here, so there is no point for a denial of service attack (DoS attacks). This protocol provides a high bandwidth accounting system, fast transaction confirmation, as well as protection against adaptive corruption.

It is assumed that an attacker can choose an arbitrary validator and declare that it is he who works in his interests. An attacker can choose the nodes that will work for him (mandatory for correct work is the condition that there are more honest participants участников). And even in such conditions, the protocol provides durability.

Since this is a BFT protocol, in any case, it provides safety and persistence properties. And the rest of its properties will depend on delays in the transmission of data via communication channels.

If this protocol works in a problem network, there is a threat of liveness, due to the fact that new transactions may not receive confirmation. If there are too long delays on the network or an attacker gets the opportunity to throw out the messages that he needs to throw out to achieve the goal, then this will not affect the old (already confirmed) transactions. He will not be able to cancel them. The bottom line is that he will be able to reject the acceptance of new transactions.

Hashgraph

The Hashgraph protocol is a fresh solution with very good properties. Each of the validators collects their transactions, randomly selects another participant and sends him data about his transactions, as well as information that other nodes informed him when they connected to him.

So, the operation is very simple: the validator “listens”, accepts all the messages that come to him, and then arbitrarily chooses another participant and sends him all the transactions with his.

Hashgraph ensures that in a finite number of steps, that is, rounds of the protocol, all nodes come to a single solution. The protocol works quickly and scales well. It requires more traffic than Algorand, but at the same time is very efficient. Hashgraph provides the properties of safety and liveness, that is, adding new transactions and coming to a single solution. It even works in a fully asynchronous network, when messages can be delayed for a very long period of time or thrown out altogether.

In addition, there is no leader in the network, which allows an attacker to disable those nodes that are interesting to him, which can force them to work according to their intentions. However, while there are ⅔ honest users, the protocol provides a high level of security.

BFT totals

Let's summarize the BFT protocols. They provide very high throughput and allow validators to agree on the status of transactions for a fixed number of steps. These properties allow them to be successfully used in cryptocurrencies. The protocol ensures reliable operation even in unreliable networks, provided that more than ⅔ of the validators of the accounting system are honest. Protocols such as Algorand and Hashgraph support high bandwidth and they can have a really large number of validators.

At the same time, they have a very serious drawback: in the current state of development, protocols have been developed for the so-called permissioned accounting systems. In other words, they are designed without considering the incentive for validators to work honestly in the network itself. It is assumed that such incentives are outside the scope of this protocol. Rewards for the formation of the block, various strategies of user behavior are not considered.

To deploy such protocols to work in permissionless accounting systems, researchers will need to carry out serious work. In this regard, they have a wide field for research from the point of view of game theory and from the point of view of developing a reward system for validators, etc.

Distributed Lab has prepared this article based on video lecture material Roman Oleynikov.

Frequently asked Questions

- Is it possible in the near future to introduce a radically new consensus protocol and is there any development?

Now, indeed, consensus protocols are being actively developed. Some of them we discussed today: Algorand and Hashgraph from the BFT protocols, as well as the protocol based on the Ouroboros PoS consensus. At the beginning of 2018, the PHANTOM protocol was presented. These are new protocols with new principles.

- How does Ouroboros ensure that the list of nodes that have the right to form a block at certain time intervals is the same for all nodes?

The issue is related to the grinding attacks mentioned above. Ouroboros provides persistence and liveness properties: if a transaction hit at a certain point in time and received confirmation, then it can no longer be canceled or deleted, since the probability of replacing this block tends to zero. In other words, the developers believe that the transaction cannot disappear from the shared database, since it is available to anyone.

Due to the persistence property, all network nodes form the same set of transactions in their local copy of the database. Potential validators send transactions with their own randomness, which is initially hidden, and at the time when the MPC protocol ends, everyone can calculate the resulting randomness. All users have a consistent transaction history, so that all nodes get the same list of actual validators. It is impossible to manipulate randomness here, because the MPC protocol provides properties related to the fact that none of the participants can receive this randomness until all have entered it into the blockchain. Only after this accident is revealed. Even if someone does not want to open his chance, the protocol itself guarantees that this data will be opened. Everyone has the same vision of history and chance.

- In which book can you read about the algorithms for achieving consensus, discussed in this chapter?

Unfortunately, there are no such books yet. There are only selected scientific papers and articles. You can see articles on PHANTOM, SPECTER and Ouroboros. You can see the site where these articles can be found by title.

- Why so many blocks to issue in PHANTOM and SPECTER, if there is no such a large number of transactions? Most blocks will be empty or contain few transactions.

PHANTOM and SPECTER are focused on scaling. For Bitcoin, there have often been situations where transactions with a low commission could be on the network unconfirmed even for several months. PHANTOM and SPECTER guarantee that all transactions will be included in the blocks and network bandwidth will be increased. These protocols can provide tens of thousands of transactions per second, with each receiving a confirmation. In other words, they can provide very low transaction costs in the network itself. If there are no transactions, then you can really release empty blocks.

- How and by whom in BFT is selected the leading server?

It depends on the specific protocol. If we are talking about PBFT, then in this case a specific user chooses the leading server that will conduct his transaction. If we are talking about Algorand or Hashgraph, there is no leader at all.

- What will happen if in PBFT the initial request comes to a compromised node?

In this case, the compromised (or inoperative) node may not process it. If a user randomly selects a node to which he refers, and we have two thirds of honest nodes, then in this case his transaction will receive a confirmation with a high probability after several attempts.

- Is it correct to say that Algorand is something like DPoS (Delegated Proof-of-stake)

No, this is not entirely correct. Delegated Proof-of-stake implies that users must select a validator and delegate his right to vote. Here the nodes are selected to confirm transactions really random. If you look far into the future and consider the options for building cryptocurrency on Algorand, then you really need to develop delegation options, but delegation does not need to work for the protocol itself.

- Which of the listed protocols are not yet applied, but are considered promising in the future?

SPECTER and PHANTOM are really promising protocols. And SPECTER developers are working to launch a new digital currency.