Testing Adaptec RAID Caching Technology

RAID arrays built from hard drives have been in use for a very long time. They remain popular in many areas where a relatively inexpensive, highly available, high-capacity array is required. Given the size and speed of modern hard drives, among other reasons, RAID6 arrays (or RAID60, if there are many disks) are of the greatest practical interest. However, this type of array performs poorly on random writes, and there is no easy way around it.
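A common back-of-the-envelope model shows why random writes are the weak spot. The sketch below makes two assumptions:

- A small random write on RAID6 costs six back-end I/Os: read the old data and both parity blocks, then write all three.
- The controller's own cache is ignored.

The per-disk IOPS value in the usage line is a hypothetical figure, not a measurement from this test.

```python
# Back-of-the-envelope RAID6 random IOPS model (a sketch, not a vendor formula).
# Assumption: each small random write costs 6 back-end I/Os
# (read data + P + Q, then write data + P + Q); controller caching is ignored.
RAID6_WRITE_PENALTY = 6


def raid6_random_iops(disks: int, iops_per_disk: float) -> tuple[float, float]:
    """Return (read_iops, write_iops) for a RAID6 array of `disks` drives."""
    if disks < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    backend = disks * iops_per_disk  # every drive can serve random reads
    return backend, backend / RAID6_WRITE_PENALTY


if __name__ == "__main__":
    # Hypothetical per-drive figure for a 7200 rpm SATA disk.
    reads, writes = raid6_random_iops(6, 180)
    print(f"reads ~{reads:.0f} IOPS, writes ~{writes:.0f} IOPS")
```

Real results also depend on the controller cache, queue depth and drive firmware. The model only illustrates why random writes fall so far behind random reads.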

Of course, this refers to the speed of the “raw” volume. In real life, the file system, the operating system, the applications and so on are layered on top of it, so the picture is not quite that bleak. Still, there are software and hardware ways to improve performance independently of these subsystems. We are talking about caching technologies, in which a substantially faster flash-based drive is added to an array of hard drives.
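The benefit of such a cache can be sketched with the usual two-level average-latency formula: hits are served at SSD speed, misses at hard-disk speed. This is a simplified model, not a description of how maxCache works internally. The latency values in the usage loop are hypothetical.

```python
def effective_latency_ms(hit_ratio: float, cache_ms: float, backend_ms: float) -> float:
    """Mean access time when `hit_ratio` of requests is served by the cache.

    Simple two-level model: hits cost `cache_ms`, misses cost `backend_ms`
    (the time spent checking the cache on a miss is ignored).
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * backend_ms


if __name__ == "__main__":
    # Hypothetical latencies: 0.1 ms for the SSD cache, 8 ms for the HDD array.
    for h in (0.0, 0.5, 0.9, 0.99):
        print(f"hit ratio {h:.2f}: {effective_latency_ms(h, 0.1, 8.0):.2f} ms")
```

The gain depends almost entirely on the hit ratio. A uniformly random load spread over a volume much larger than the cache keeps the hit ratio low, roughly bounded by the cache-to-volume size ratio. This is one reason purely synthetic random tests may understate what caching does for real workloads.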

Adaptec RAID controllers call this technology maxCache; version 3.0 is implemented in the ASR-8885Q and ASR-81605ZQ models. Several restrictions must be taken into account:

- only one maxCache volume per controller is allowed;
- the maximum size of the maxCache volume is 1 TB;
- write caching requires a fault-tolerant maxCache volume (for example, a mirror).

At the same time, the user can specify independently for each logical volume how it works with maxCache: read caching and/or write caching, and in which mode.
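The restrictions above can be checked before building a cache volume. The sketch below is a hypothetical planning helper, not an Adaptec API. Two details are assumptions:

- treating the 1 TB limit as 1000 decimal GB;
- the set of RAID levels counted as fault tolerant (the text only gives a mirror as an example).

```python
from dataclasses import dataclass

# Limits from the article; the GB unit and the set of accepted RAID levels are assumptions.
MAX_MAXCACHE_GB = 1000
FAULT_TOLERANT_LEVELS = {"RAID1", "RAID10"}


@dataclass
class CacheVolume:
    """Hypothetical description of a planned maxCache volume (not an Adaptec API)."""
    raid_level: str
    size_gb: int


def check_plan(volumes: list[CacheVolume], write_caching: bool) -> list[str]:
    """Return a list of rule violations; an empty list means the plan looks valid."""
    problems = []
    if len(volumes) > 1:
        problems.append("only one maxCache volume per controller is allowed")
    for vol in volumes:
        if vol.size_gb > MAX_MAXCACHE_GB:
            problems.append(f"{vol.size_gb} GB exceeds the 1 TB maxCache limit")
        if write_caching and vol.raid_level not in FAULT_TOLERANT_LEVELS:
            problems.append(f"write caching needs a fault-tolerant volume, not {vol.raid_level}")
    return problems


if __name__ == "__main__":
    print(check_plan([CacheVolume("RAID1", 400)], write_caching=True))  # mirrored pair
    print(check_plan([CacheVolume("RAID0", 800)], write_caching=True))  # no redundancy
```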

For testing, we used a server based on a Supermicro X10SLM-F motherboard with an Intel Xeon E3-1225 v3 processor (4C/8T, 3.2 GHz) and 32 GB of RAM, running Debian 9.

The ASR-81605ZQ controller under test comes standard with a cache protection module, so both read and write caching were enabled for the hard-disk array. Recall that this model has 1 GB of onboard cache memory. A RAID6 array with a 256 KB stripe size was created from six Seagate ST10000NM0086 10 TB SATA hard drives. The usable capacity was about 36 TB.
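The “about 36 TB” figure follows from simple RAID6 arithmetic. Six 10 TB drives minus two drives' worth of parity leave 40 decimal TB. That is presumably reported in binary units, which gives about 36.4 TiB. The unit interpretation is an assumption; the arithmetic itself is exact.

```python
TB = 10**12   # decimal terabyte, as used on drive labels
TIB = 2**40   # binary tebibyte, as typically reported by operating systems


def raid6_usable_bytes(disks: int, disk_bytes: int) -> int:
    """RAID6 keeps two parity blocks per stripe, so two drives' worth of space is lost."""
    if disks < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (disks - 2) * disk_bytes


usable = raid6_usable_bytes(6, 10 * TB)
print(f"{usable / TB:.0f} TB = {usable / TIB:.1f} TiB")  # 40 TB = 36.4 TiB
```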

Two pairs of SSDs were used for the maxCache volume, each pair configured as RAID1:

- two second-generation Samsung 850 EVO 1 TB drives with a SATA interface;
- two 400 GB Seagate 1200 SSDs (ST400FM0053) with a SAS interface.

The first model can already be considered outdated, and not only conceptually, but it is suitable for illustrating a budget scenario. The second formally belongs to the “enterprise” category, but it can hardly be called modern.

The maxCache array itself has only one setting, Flush and Fetch Rate, which was left at its default value (Medium). There is no way to set priorities by operation type or by logical volume. Note that the drives were not new, and TRIM is not used in this configuration.

Once the maxCache volume has been created, it must be enabled in the properties of each logical volume that should use it. Three settings are available here: read caching on or off, write caching on or off, and the write cache type.

The fio utility was used as the test tool, with a set of scripts covering sequential and random operations at different numbers of threads. It should be noted that synthetic tests are hardly the best way to study the performance of products with caching technology. The effect is better assessed on real-life tasks, since a synthetic load to some extent contradicts the very idea of caching. In addition, here we consider low-level operations, whereas the user usually deals with files. As mentioned above, the file system, the operating system and the software itself are all involved in working with them. So synthetic tests, attractive for their simplicity and repeatability, make sense not by themselves, …
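The exact fio job parameters are not given, so the generator below is only a sketch of how such a test matrix might be scripted. The following are placeholders chosen for illustration:

- the block sizes, queue depth, runtime and thread counts;
- the device name `/dev/sdX`.

The options themselves (`--rw`, `--bs`, `--numjobs`, `--direct`, `--ioengine=libaio` and so on) are standard fio options.

```python
import shlex

# Placeholder test matrix: the article does not state block sizes,
# queue depths or thread counts, so these values are assumptions.
PATTERNS = {"read": "1M", "write": "1M", "randread": "4k", "randwrite": "4k"}
THREADS = [1, 2, 4, 8, 16, 32]


def fio_command(device: str, rw: str, bs: str, jobs: int, runtime_s: int = 60) -> list[str]:
    """Build one fio invocation against a raw block device."""
    return [
        "fio",
        f"--name={rw}-{jobs}",
        f"--filename={device}",
        f"--rw={rw}",
        f"--bs={bs}",
        "--ioengine=libaio",
        "--direct=1",  # bypass the OS page cache so the controller is what gets measured
        "--iodepth=1",
        f"--numjobs={jobs}",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
        "--output-format=json",
    ]


def build_matrix(device: str) -> list[list[str]]:
    """Every access pattern combined with every thread count."""
    return [fio_command(device, rw, bs, n) for rw, bs in PATTERNS.items() for n in THREADS]


if __name__ == "__main__":
    for cmd in build_matrix("/dev/sdX"):  # hypothetical device name
        print(shlex.join(cmd))
```

The write patterns destroy the contents of the target device, so such a script should only ever point at a scratch volume.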

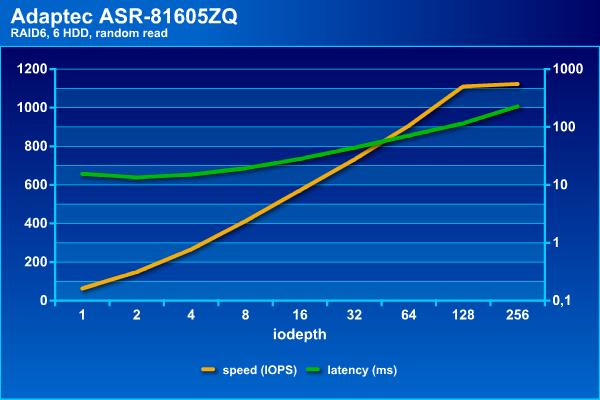

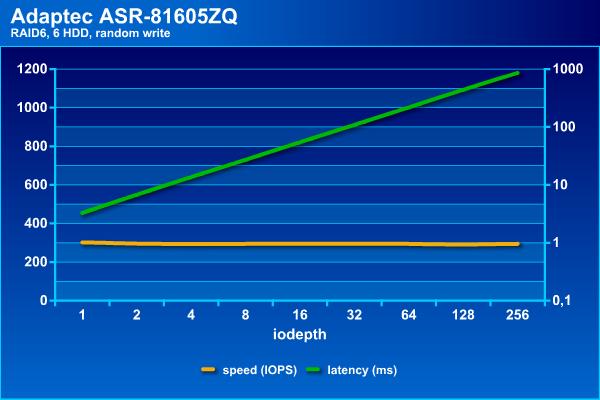

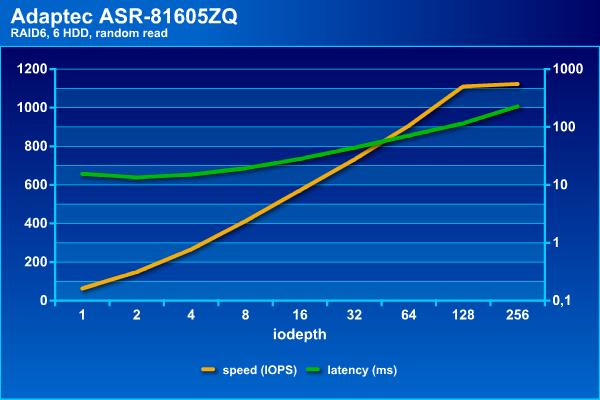

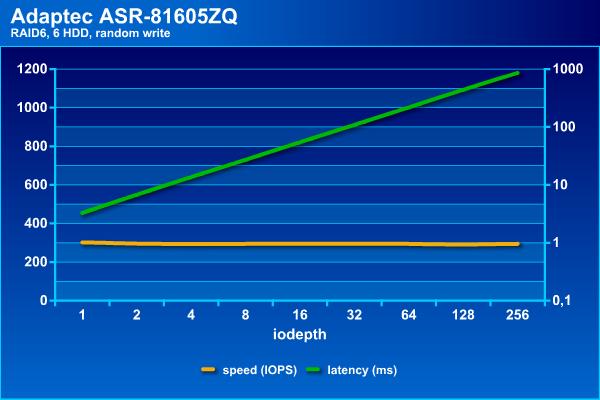

First, let's see what the array is capable of on its own. Recall that for sequential operations we look at throughput in MB/s and latency (on a logarithmic scale), and for random operations at IOPS and latency.
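The two metrics are linked by the block size: throughput equals IOPS multiplied by bytes per request. The sketch below shows why MB/s is meaningful for large sequential requests while IOPS is the useful figure for small random ones. It makes two assumptions:

- the block sizes (4 KiB random, 1 MiB sequential);
- decimal megabytes for MB/s.

```python
BYTES_PER_KIB = 1024
BYTES_PER_MB = 10**6  # assumption: charts use decimal megabytes


def throughput_mb_s(iops: float, block_kib: float) -> float:
    """Throughput implied by an IOPS rate at a fixed block size."""
    return iops * block_kib * BYTES_PER_KIB / BYTES_PER_MB


def iops_for(mb_s: float, block_kib: float) -> float:
    """Inverse: how many requests per second a given throughput represents."""
    return mb_s * BYTES_PER_MB / (block_kib * BYTES_PER_KIB)


if __name__ == "__main__":
    # Hypothetical block sizes: 4 KiB for random tests, 1 MiB for sequential ones.
    print(f"300 IOPS x 4 KiB = {throughput_mb_s(300, 4):.2f} MB/s")
    print(f"900 MB/s at 1 MiB = {iops_for(900, 1024):.0f} IOPS")
```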

The sequential throughput of an array in this configuration is about 900 MB/s, and latency does not exceed 70 ms even with a large number of threads.

For hard drives, random operations are the most difficult workload, as the results show. With a latency threshold of 100 ms, the array delivers about 1,100 IOPS on reads. On writes it produces about 300 IOPS regardless of the load.

A RAID60 array of 36 disks on the same controller, configured as three groups of 12 hard drives, gives more interesting numbers. Striping across the groups raises random read and write performance to 3,500 and 1,200 IOPS, respectively (that configuration used fairly old 2 TB SAS hard drives from HGST). The downside of this option is the additional capacity overhead: two disks are “lost” not per volume but per group.
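The capacity cost of RAID60 can be counted directly. Each RAID6 group gives up two drives to parity, so the overhead grows with the number of groups. The helper name below is hypothetical; the layouts are the two described in the text.

```python
def data_and_parity_disks(group_sizes: list[int], parity_per_group: int = 2) -> tuple[int, int]:
    """Split a RAID6 (one group) or RAID60 (several RAID6 groups) layout into data and parity drives."""
    if any(size < parity_per_group + 2 for size in group_sizes):
        raise ValueError("each RAID6 group needs at least 4 drives")
    parity = parity_per_group * len(group_sizes)
    return sum(group_sizes) - parity, parity


if __name__ == "__main__":
    for name, groups in (("RAID6, 6 drives", [6]), ("RAID60, 3 x 12 drives", [12, 12, 12])):
        data, parity = data_and_parity_disks(groups)
        print(f"{name}: {data} data + {parity} parity ({data / sum(groups):.0%} usable)")
```

For the 3 × 12 layout, six drives go to parity instead of two, and 30 of 36 drives hold data.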

So without caching, our array looks rather sad on random operations. Of course, this is the “raw” speed of the volume, and programs rarely generate an entirely random load (recall that this array is meant for storing large files, not for a database).

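Let's see how SSDs can help in this situation. The tests use four of the available configurations: read only, read plus Write Through, read plus Write Back, and read plus Instant Write Back. The write cache types are designated as follows:

- WB: write back enabled. This is the default policy.
- INSTWB: instant write back enabled.
- WT: write through enabled.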
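The three per-volume settings combine into the four tested configurations. The model below is hypothetical: the class and field names are illustrative, not controller or CLI identifiers. It reuses the WT/WB/INSTWB abbreviations from the list above.

```python
from enum import Enum
from typing import NamedTuple, Optional


class WritePolicy(Enum):
    WT = "write-through"
    WB = "write-back"  # the default policy
    INSTWB = "instant write-back"


class CacheSettings(NamedTuple):
    """Hypothetical model of the three maxCache settings of one logical volume."""
    read_cache: bool
    write_cache: bool
    write_policy: Optional[WritePolicy] = None


def label(s: CacheSettings) -> str:
    """Human-readable name of a configuration."""
    if not s.write_cache:
        return "read only" if s.read_cache else "cache off"
    if s.write_policy is None:
        raise ValueError("write caching needs a write policy")
    return ("read + " if s.read_cache else "") + s.write_policy.value


# The four configurations used in the tests, in the order listed above.
TESTED = [CacheSettings(True, False)] + [
    CacheSettings(True, True, p) for p in (WritePolicy.WT, WritePolicy.WB, WritePolicy.INSTWB)
]

if __name__ == "__main__":
    for s in TESTED:
        print(label(s))
```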

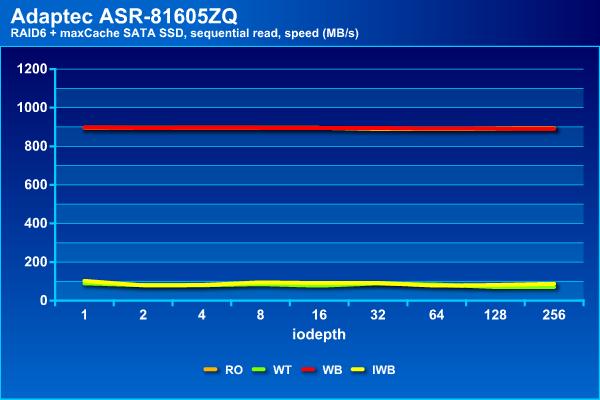

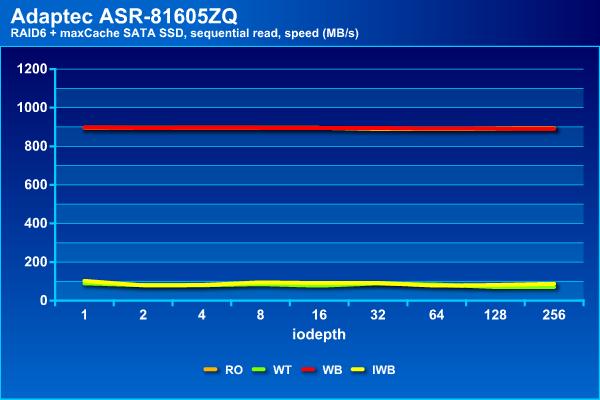

Let's start with the SATA drives, which have a fairly large capacity. This time the graphs are separate: throughput and latency for each of the four test scenarios.

On sequential reads, the array shows stable results regardless of the cache type, which is quite expected. The results also differ little from the array without a cache: the same 900 MB/s and latency of about 70 ms.

On sequential writes there are two groups. Read only and Write Back show results similar to the array without a cache (about 900 MB/s and latency up to 100 ms). Write Through and Instant Write Back manage no more than 100 MB/s, with significantly higher latency.

Recall that on random reads the hard-drive array peaked at about 1,100 IOPS, and at that point latency had already begun to exceed 100 ms. SATA SSD caching gives slightly better results: around 1,500 IOPS at the same latency.
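The figures above are read off a thread-count sweep at a 100 ms latency threshold. A minimal sketch of that selection rule follows. It rests on two assumptions: the sample data points are hypothetical, and `latency_ms` stands in for whichever latency statistic (mean or percentile) the charts use, which the text does not specify.

```python
from typing import NamedTuple, Optional


class Point(NamedTuple):
    threads: int
    iops: float
    latency_ms: float  # assumption: whichever latency statistic the charts plot


def best_under_threshold(points: list[Point], threshold_ms: float = 100.0) -> Optional[Point]:
    """Highest-IOPS measurement whose latency does not exceed the threshold."""
    acceptable = [p for p in points if p.latency_ms <= threshold_ms]
    return max(acceptable, key=lambda p: p.iops, default=None)


if __name__ == "__main__":
    # Hypothetical sweep; real values would come from fio's JSON output.
    sweep = [Point(1, 250, 8), Point(8, 900, 60), Point(16, 1100, 98), Point(32, 1150, 190)]
    print(best_under_threshold(sweep))
```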

Random writes show the greatest effect: results grow by a factor of two and a half, and the array also copes with a heavier load. With the cache, latency stays within 100 ms at two to three times as many threads.

General conclusion for this configuration: the cache does not hurt sequential reads and, in some modes, does not hurt sequential writes. It adds about 35% on random reads and increases random write performance by a factor of two or more.
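The summary percentages can be checked against the numbers quoted above: 1,100 to 1,500 IOPS on random reads works out to about 36%, in line with “about 35%”. The 750 IOPS write value is derived from the stated 2.5× gain over the ~300 IOPS baseline, not read from the charts.

```python
def gain_percent(before: float, after: float) -> float:
    """Relative improvement in percent."""
    return (after / before - 1.0) * 100.0


def speedup(before: float, after: float) -> float:
    """Ratio of new to old performance."""
    return after / before


if __name__ == "__main__":
    # Random reads without and with the SATA SSD cache (figures from the text).
    print(f"random read: +{gain_percent(1100, 1500):.0f}%")
    # A 2.5x gain on the ~300 IOPS random-write baseline implies roughly 750 IOPS.
    print(f"random write: {speedup(300, 750):.1f}x")
```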

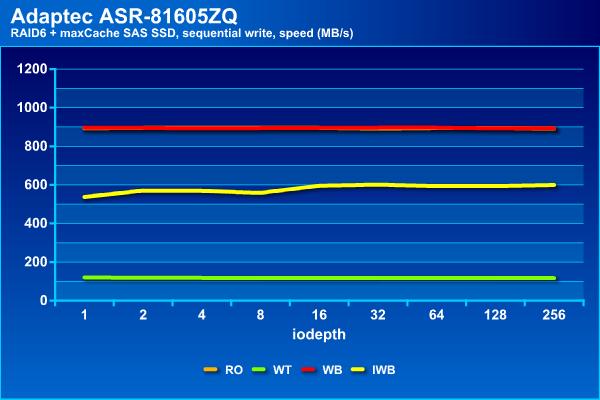

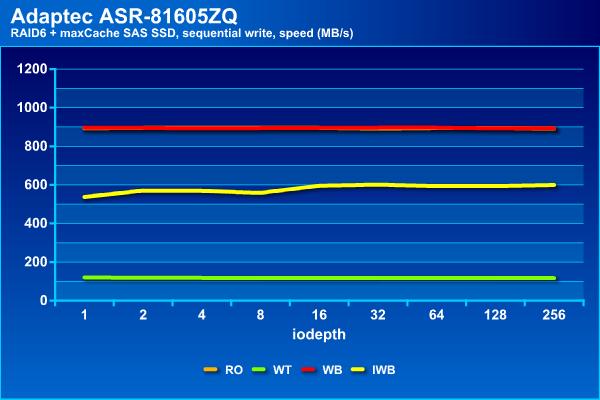

Now let's look at the maxCache volume built from the second pair of SSDs. These drives have a significantly smaller capacity, a 12 Gb/s SAS interface and, according to the manufacturer, higher performance.

On sequential reads, the results do not differ from those above, which is quite expected.

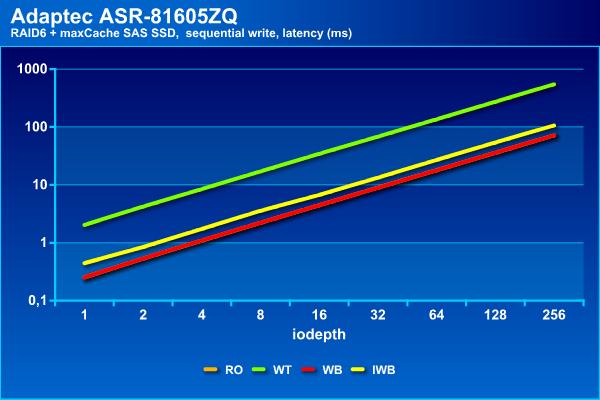

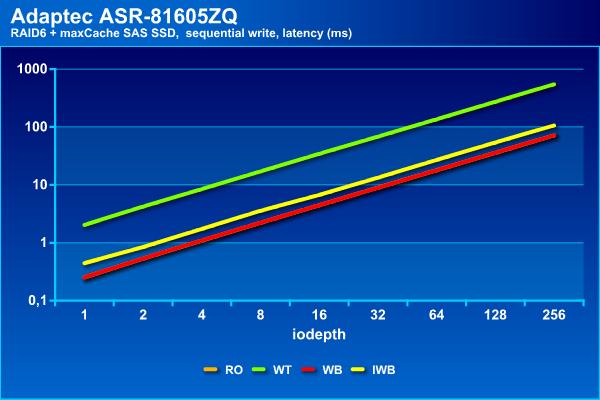

On sequential writes there are now three groups:

- the Write Through configuration lags behind;
- Instant Write Back reaches about half of the maximum speed;
- only Write Back does not differ from the array without a cache.

Latency follows the same pattern.

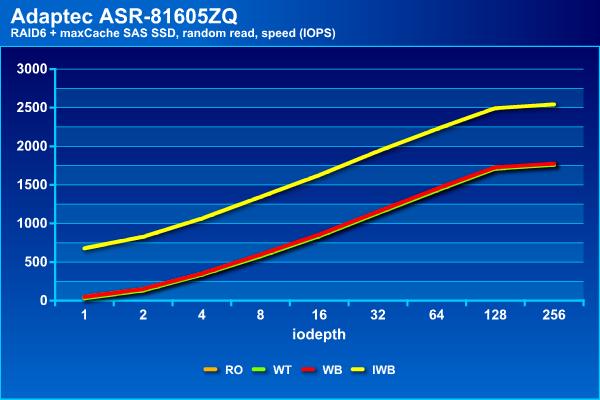

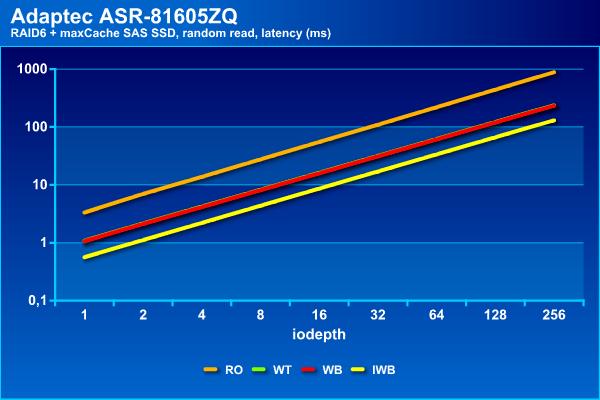

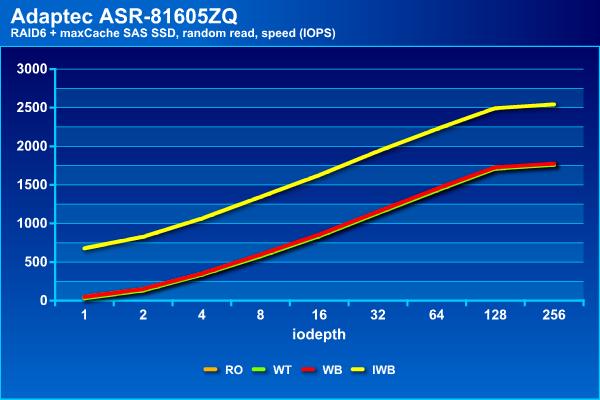

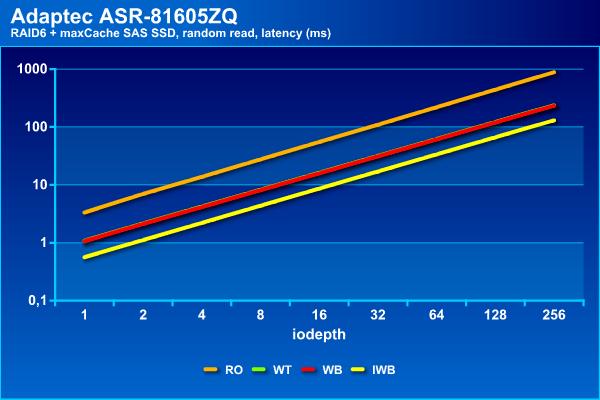

On random reads, however, Instant Write Back performed best, reaching 2,500 IOPS, while the other configurations reach only up to 1,800 IOPS. All the options with write caching are noticeably faster than the “clean” array. Latency stays within 100 ms even with a large number of threads.

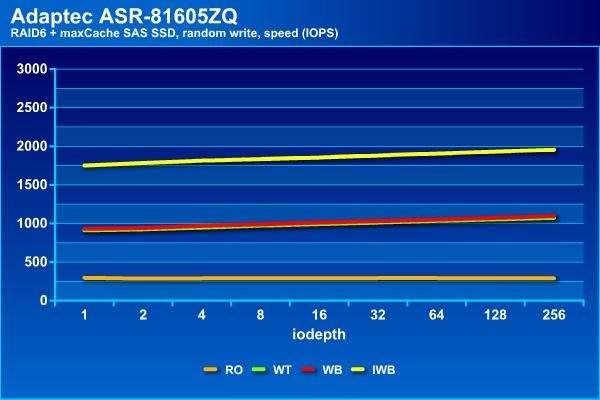

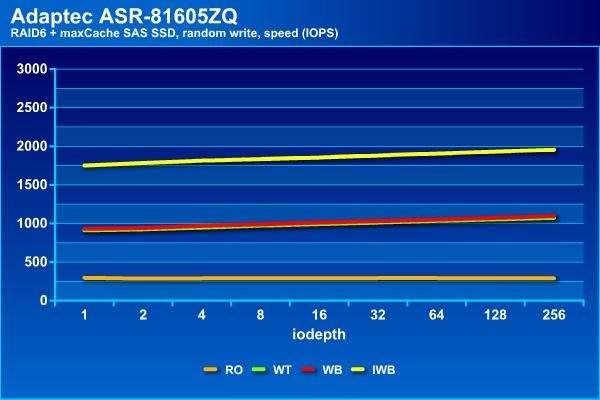

On random writes, Instant Write Back leads again with almost 2,000 IOPS. The second group consists of the Write Through and Write Back configurations at about 1,000 IOPS.

The remaining configuration, which does not cache writes (read only), shows about 300 IOPS, the same as the bare hard-drive array.

Perhaps the most interesting option for this caching volume is Instant Write Back, although it is slower on sequential writes. It might be possible to fix this by configuring the maxCache volume as RAID10, but that would take four drive bays in the storage enclosure.

Overall, maxCache can genuinely help improve the performance of hard-disk arrays, especially when the load contains many random operations. However, one should not expect an effect comparable to replacing a hard disk with an SSD in a desktop computer or workstation.

The greatest effect observed in the tests was a two- to threefold increase in the speed of random operations. Admittedly, the SSDs used were not the fastest, which evidently affected some tests (for example, sequential writes in Write Through mode). It is also worth stressing once more that the choice of caching configuration significantly affects the results. Since the settings can be changed on the fly without losing data, try all the options on your own workloads and choose the best one.
