Process synchronization during task parallelization using the Caché Event API

- Tutorial

Today, the presence of multi-core, multi-processor and multi-node systems is already the norm when processing large amounts of data.

How can you use all these computing power? The answer is obvious - parallelizing the task.

But then another question arises: how to synchronize the subtasks themselves?

It should be noted right away that the JOB command in the Caché version of the DBMS for Windows does not generate a thread, but a process. Therefore, it would be more correct to talk not about multi-threaded, but about a multi-process application.

It follows that for Caché the number of cores is more important in the processor than the presence of Hyper-Threading technology , which should be considered when choosing hardware.

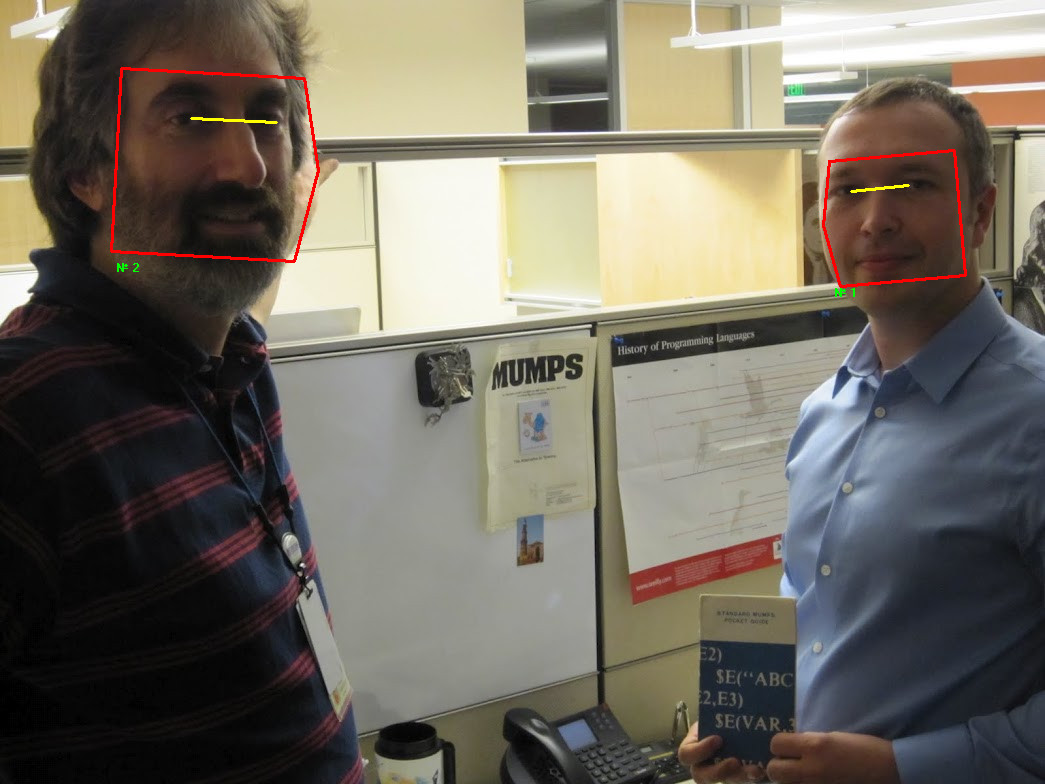

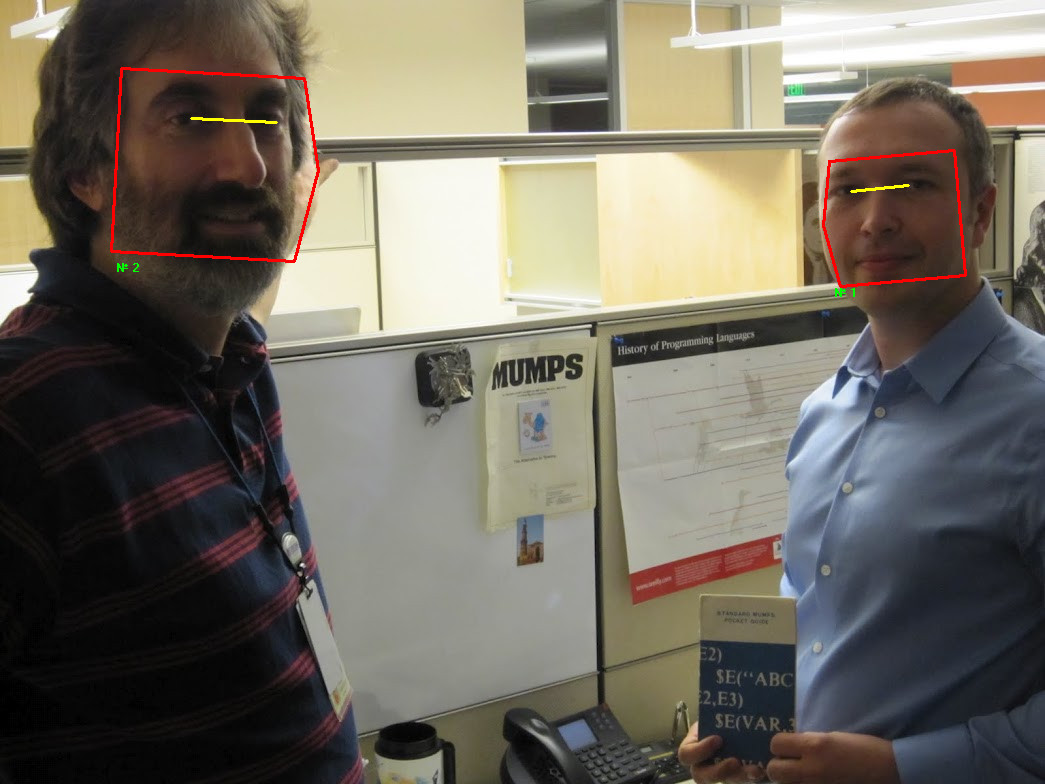

First, we briefly consider the stages of task parallelization using the example of biometric identification.

Suppose there is a database with biometric information, such as photographs.

And you, having a photo of some person, want to identify him on this basis (search “one-to-many”).

First you need to decide what, where and how we will “parallelize”.

This may depend on many factors: the number of cores, processors on one node, the number of nodes themselves in a grid-system ( ECP ), the distribution of data itself among nodes, etc.

In other words, at this stage ( Map) we must determine the strategy by which our task will be parallel. After all, one task can be divided into many smaller tasks, which in turn can also be parallelized and so on.

At the next stage ( Reduce ) we must collect data from our subtasks, aggregate them and give the final result.

For our example, the Map strategy can vary significantly.

For example, the number of people in the original photo.

If there is only one person in the photograph, then each process can be instructed to identify it within the framework of its part of the data, which can be either spread over nodes or shared by all nodes.

If several people are captured on the photo at once, then each process can be instructed to identify one person at once according to all the data.

At the Reduce stage , having received a list of similar persons and a similarity coefficient, we only need to sort it and give out the top of the most similar ones.

At the Reduce stage, along with obtaining the results from each of the subtasks, we should be able to determine which of them have already been completed and which are not, which is what the % SYSTEM.Event class will help us with .

The documentation describes in sufficient detail the mechanism for processing the event queue, so it makes no sense to stop in detail.

I note only two main methods:

So, create the following program:

Let's launch our program from the terminal:

As a result, we do not see any result, because the running processes live their own lives (they are executed asynchronously) and we did not wait for their completion from the main process.

Let's try to fix this by inserting a delay, as shown below:

Run again:

Now the result is obtained.

But this is extremely inefficient and inflexible, since we do not know in advance how long the subtasks will be performed.

You can use data checking or timeout locks. But this is also not optimal.

In this situation, the built-in " Event Queueing " mechanism saves us .

We rewrite our application, additionally assigning each process its priority.

Conclusion of the result:

Some useful links can be found in the class reference :

How can you use all these computing power? The answer is obvious - parallelizing the task.

But then another question arises: how to synchronize the subtasks themselves?

It should be noted right away that the JOB command in the Caché version of the DBMS for Windows does not generate a thread, but a process. Therefore, it would be more correct to talk not about multi-threaded, but about a multi-process application.

It follows that for Caché the number of cores is more important in the processor than the presence of Hyper-Threading technology , which should be considered when choosing hardware.

Parallelization Stages: Map and Reduce

First, we briefly consider the stages of task parallelization using the example of biometric identification.

Suppose there is a database with biometric information, such as photographs.

And you, having a photo of some person, want to identify him on this basis (search “one-to-many”).

First you need to decide what, where and how we will “parallelize”.

This may depend on many factors: the number of cores, processors on one node, the number of nodes themselves in a grid-system ( ECP ), the distribution of data itself among nodes, etc.

In other words, at this stage ( Map) we must determine the strategy by which our task will be parallel. After all, one task can be divided into many smaller tasks, which in turn can also be parallelized and so on.

At the next stage ( Reduce ) we must collect data from our subtasks, aggregate them and give the final result.

For our example, the Map strategy can vary significantly.

For example, the number of people in the original photo.

If there is only one person in the photograph, then each process can be instructed to identify it within the framework of its part of the data, which can be either spread over nodes or shared by all nodes.

If several people are captured on the photo at once, then each process can be instructed to identify one person at once according to all the data.

At the Reduce stage , having received a list of similar persons and a similarity coefficient, we only need to sort it and give out the top of the most similar ones.

Caché Event API

At the Reduce stage, along with obtaining the results from each of the subtasks, we should be able to determine which of them have already been completed and which are not, which is what the % SYSTEM.Event class will help us with .

The documentation describes in sufficient detail the mechanism for processing the event queue, so it makes no sense to stop in detail.

I note only two main methods:

- Wait / WaitMsg - waiting for a resource to wake up with / without receiving a message

- Signal - sending a signal to awaken a resource with a message

Application example

- create three child processes, passing each of its data

- in each of the processes we simulate violent activity and return some result to the parent

- display the results on the screen

So, create the following program:

main () {

; delete temporary data from the previous time

kill ^ tmp

; we start three subtasks, they are also the processes

job job (1, "apple", 5)

job job (2, "pear", 6)

job job (3, "drain", 7)

; display the result on the screen

zwrite ^ tmp

}

job (a, b, c)

hang 1; simulate violent activity with a delay of 1 second.

set ^ tmp (a) = b _ "-" _ (c * 2) // form the result

Let's launch our program from the terminal:

TEST>do ^mainTEST>As a result, we do not see any result, because the running processes live their own lives (they are executed asynchronously) and we did not wait for their completion from the main process.

Let's try to fix this by inserting a delay, as shown below:

main () {

; ...

job job (3, "drain", 7)

hang 1

; display the result on the screen

; ...

}

Run again:

TEST>do ^main^tmp(1)="яблоко-10"^tmp(2)="груша-12"^tmp(3)="слива-14"TEST>Now the result is obtained.

But this is extremely inefficient and inflexible, since we do not know in advance how long the subtasks will be performed.

You can use data checking or timeout locks. But this is also not optimal.

In this situation, the built-in " Event Queueing " mechanism saves us .

We rewrite our application, additionally assigning each process its priority.

main () {

; we create three processes with our priority

job job (3, -7, apple, 5)

job job (2, 0, pear, 6)

job job (1, 8, drain, 7)

; we await the wake-up signal and

; print the result to the screen

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

}

job (x, delta, a, b)

; change the priority of the current process to delta

do $ system.Util.SetPrio (delta)

hang x; simulate violent activity with a delay of x sec.

// send the wake-up signal to the parent process

// at the same time as the result

do $ system.Event.Signal ($ zparent, a _ "-" _ (b * 2))

Conclusion of the result:

TEST>do ^mainслива-14груша-12яблоко-10TEST>The same code, but already in the form of a class

Class test.task

{

ClassMethod Test ()

{

; we start three processes asynchronously with our priority

job ..SubTask (3, -7, apple, 5)

job ..SubTask (2, 0, pear, 6)

job ..SubTask (1, 8, drain) , 7)

; we await the wake-up signal and

; print the result to the screen

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

}

ClassMethod SubTask (

x,

delta,

a,

b)

{

; change the priority of the current process to delta

do $ system.Util.SetPrio (delta)

hang x; simulate violent activity with a delay of x sec.

// send the wake-up signal to the parent process

// simultaneously with the result

do $ system.Event.Signal ($ zparent, a _ "-" _ (b * 2))

}

}

To run the class method in the terminal, call

do ## class (test. task) .Test ()

{

ClassMethod Test ()

{

; we start three processes asynchronously with our priority

job ..SubTask (3, -7, apple, 5)

job ..SubTask (2, 0, pear, 6)

job ..SubTask (1, 8, drain) , 7)

; we await the wake-up signal and

; print the result to the screen

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

write $ list ($ system.Event.WaitMsg (), 2) ,!

}

ClassMethod SubTask (

x,

delta,

a,

b)

{

; change the priority of the current process to delta

do $ system.Util.SetPrio (delta)

hang x; simulate violent activity with a delay of x sec.

// send the wake-up signal to the parent process

// simultaneously with the result

do $ system.Event.Signal ($ zparent, a _ "-" _ (b * 2))

}

}

To run the class method in the terminal, call

do ## class (test. task) .Test ()

Some useful links can be found in the class reference :

- class % SYSTEM.CPU - provides processor information

- class % SYSTEM.Util - contains various useful methods.

For example: NumberOfCPUs , SetBatch , SetPrio - parameter JobServers - controls the size of the process pool