ConcurrentDictionary as a cache

Many developers often face a dilemma - to receive data only from the database or to keep the cache for a number of tables. Basically, these are some directories that contain few records and are constantly needed at hand. This is a hollywood issue and will not be addressed in this article.

Many developers often face a dilemma - to receive data only from the database or to keep the cache for a number of tables. Basically, these are some directories that contain few records and are constantly needed at hand. This is a hollywood issue and will not be addressed in this article.The same problem came up in front of me when designing a highly loaded transport monitoring system server on .NET. In the end, it was decided that the cache should be. Dictionary caches began to be stored in wrappers over ConcurrentDictionary. This option was taken without much research, as it is the standard .NET tool for thread-safe dictionaries. Now is the time to test the performance of this solution. About this, in fact, an article. Also at the end of the article there will be a little research on how it is arranged inside ConcurrentDictionary.

Problem statement

A thread-safe collection of key-value pairs is required, which can do the following:

- Requesting an object with an identifier key - of course, is used most often;

- Changing, deleting and adding new elements is rare, but thread safety must be ensured;

- Work with values - request all values, search by properties of the value object.

Clause 3 is not typical for dictionary collections and therefore is the main brake on this construction. However, if caching of lookup tables is used, such situations are inevitable (for example, it would be trivial to display the entire dictionary in the admin panel for editing).

Let's try to consider the different systems in which the cache will be used. They will differ in the frequency of operations with the dictionary. We formalize the types of these operations:

- GET - request for an object by key,

- ADD - adding a new object with a new key (if such a key already exists, rewrite it),

- UPDATE - a new value for an existing key (if there is no key, do nothing)

- SEARCH - work with an iterator, in this case - search for the first suitable value

List of Test Participants

- ConcurrentDictionary . This is a turnkey solution from Microsoft with integrated thread safety. Implements convenient methods TryGetValue, TryAdd, TryUpdate, AddOrUpdate, TryDelete, which allow you to conveniently set a conflict resolution policy. Implementation features will be discussed at the end of the article.

- Dictionary with locking via Monitor . The most head-on solution is that all non-thread-safe operations are wrapped with a lock construct.

- Dictionary with lock via ReaderWriterLock . Optimization of the previous solution - operations are divided into read and write operations. Accordingly, several streams can read at the same time, and exclusive access is required for recording.

- Dictionary with lock via ReaderWriterLockSlim . Essentially the same, but using a newer class (added recursion control settings). In the context of this task, I hardly have to show anything other than ReaderWriterLock.

- Dictionary lockable through OneManyResourceLocker of PowerThreading library from Wintellect - tricky implementation ReaderWriterLock by Jeffrey Richter . I’ll clarify that the version from the official site was used, and not the NuGet package - there the version is different and I did not like it.

- Hashtable.Synchronized . Also a ready-made solution from Microsoft - provides a thread-safe indexer. It is inconvenient to use due to the fact that it is a non-generic collection (boxing, worse readability), and there are no methods with the Try prefix (it is impossible to establish a policy for adding / updating at the same time).

Briefly tell you exactly how the handlers are implemented.

- GET operation : all participants use the thread-safe TryGetValue method from the IDictionary interface. For Hashtable, a type-coded indexer is used.

- ADD operation : for ConcurrentDictionary - AddOrUpdate, for Dictionary - write lock and add through indexer, for Hashtable - add through indexer without lock.

- UPDATE operation : for ConcurrentDictionary, first TryGetValue, then TryUpdate.

This method is interesting in that a parallel update can take place between these two methods (which was also manifested during testing). It is for this case that oldValue is passed to TryUpdate, so that in this rare case, the rewrite would fail. For Dictionary, we check for availability through ContainsKey and, if successful, set the write lock and overwrite the value. There is no convenient TryUpdate for Hashtable, so I did not bother with checking for the presence of the key and, as in the case of adding, the value is overwritten through the indexer (for this collection it is not important - it was still pretty bad anyway). - Operation SEARCH : for ConcurrentDictionary, the LINQ FirstOrDefault is used, for the rest, it is a read lock with the same FirstOrDefault.

Test bench

For testing, a console application was created ( link ).

- A set of handlers is created that can handle operations of all certain types;

- A dictionary is created of N elements (by default - 10,000);

- A collection of tasks of different types in the amount of M is created (by default - 10,000);

- Each handler conducts parallel processing of all tasks in the generated dictionary (common to all handlers);

- The experiment (points 2-4) is carried out a given number of times (by default - 10) and the obtained time is averaged. Measurements were taken on a machine with a Core 2 Quad 2.66GHz and 8GB memory.

The default values are quite small, however, if you increase them, basically nothing changes. Testing

results

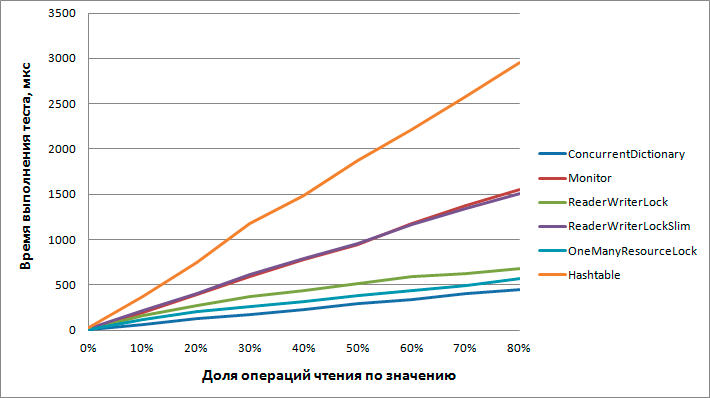

Testing was carried out with different distribution options according to the types of operations and the table turned out to be too large, you can see the whole table here ( link ). For clarity, I’ll give a graph of the test execution time in microseconds depending on the percentage of reading by value of the total number of operations (write operations are fixed at 20%, the rest are read by key).

Conclusions The

performance of all participants decreases linearly from the number of readings by value, regardless of the number of write operations.

- ConcurrentDictionary . Experiments have shown that this tool is best suited for this task. Reading by value significantly affects performance, but it still remains faster than other participants.

- Dictionary + Monitor . Significantly slower, expected results.

- Dictionary + ReaderWriterLock . Optimization of the previous version, also all expected.

It should be noted that the more recording operations prevail, the smaller the difference. From a certain point, Monitor becomes even more preferable due to lower overhead for the blocking process itself. - Dictionary + ReaderWriterLockSlim . For some reason I managed to lose even to a simple monitor. Either the enhanced functionality (compared to the previous version) affected the performance, or I don’t know how to cook it.

- Dictionary + OneManyResourceLock . Richter seems to have squeezed everything out of his read / write lock. According to test results, this is the fastest use of Dictionary. But ConcurrentDictionary is still faster.

- Hashtable . Expected Failure. Perhaps I used it incorrectly, but I do not think that it was possible to get a result comparable to other participants. Anyway, it was somehow depressing to work with non-generic collections.

Internal device ConcurrentDictionary

Let's take a closer look at the winner, namely: look at the sources of ConcurrentDictionary.

When creating this class, 2 parameters are set: Capacity and ConcurrencyLevel . The first ( Capacity ) is familiar to collections and sets the number of elements that can be recorded without expanding internal collections. In this case, linked lists are created (m_buckets, so we will call them baskets (well, not buckets, right?)) In the amount of Capacity, and then the elements are added relatively evenly to them. The default value is 31. The

second parameter ( ConsurrencyLevel) determines the number of threads that can write to our dictionary at the same time. This is achieved by creating a collection of objects for locking by monitors. Each such lock object is responsible for approximately the same number of baskets. The default value is Environment.ProcessorCount * 4.

Thus, each object in the dictionary is uniquely associated with the basket where it lies and the lock object for writing. This is done by the following method:

///

/// Computes the bucket and lock number for a particular key.

///

private void GetBucketAndLockNo(

int hashcode, out int bucketNo, out int lockNo, int bucketCount, int lockCount)

{

bucketNo = (hashcode & 0x7fffffff) % bucketCount;

lockNo = bucketNo % lockCount;

Assert(bucketNo >= 0 && bucketNo < bucketCount);

Assert(lockNo >= 0 && lockNo < lockCount);

}

Curiously, even with ConcurrencyLevel = 1, ConcurrentDictionary is still faster than a regular lock dictionary. It is also worth noting that the class is optimized for use through an iterator (as the tests showed). In particular, when calling the ToArray () method, locking is performed on all baskets, and the iterator is used relatively cheaply.

For example: it is better to use dictionary.Select (x => x.Value) .ToArray () than dictionary.Values.ToArray ().

The article was written by a leading developer of the company.

Thanks for attention!