"Tester's Calendar" for July. Testing analytics

Meet the authors of the July article for the "Tester's Calendar": Andrei Marchenko and Marina Tretyakov, tester-analysts at Kontur. This month they talk about workflow models for testing analytics and about how they started testing analytics before the development phase. Their experience will be useful for managers, testers and analysts in medium-sized product teams that do not live in startup mode and for whom quality matters more than speed.

Analytics Testing Workflow Models

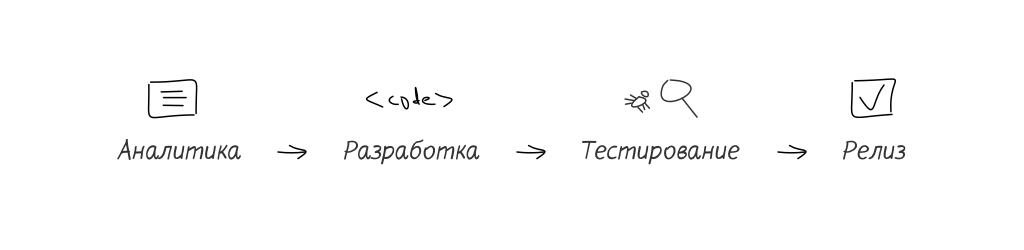

Model 1

The tester starts working with the analytics only after the finished task has been handed over to him. He checks the task by reading the analytics as documentation of what the developer did. Everyone makes mistakes, regardless of their level of professionalism, so defects can be in the analytics or in the developer's code.

Cons:

- defects in the analytics are not detected before the testing stage,

- there is a risk that the task under test will be sent back to analytics for revision, which significantly increases the task's time to market.

Analytics errors found at the testing stage are expensive or very expensive to fix.

Pros:

- the tester spends less time on tasks that do not need an analyst (infrastructure, refactoring).

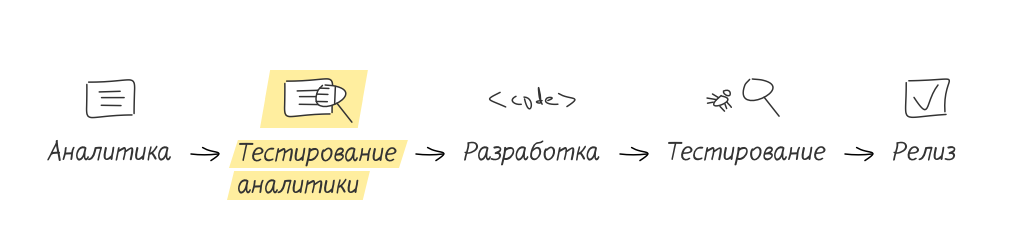

Model 2

The tester joins the task before it is handed over to development. He looks at the prototypes for the task or simply reads the documentation. He puts all his questions about the task to the analyst, and the analyst promptly fixes the issues raised. The tester then writes acceptance tests.

Cons:

- the tester will have to learn an adjacent field (designing analytics and technical specifications),

- after switching to this model, the tester will spend more time on testing, because the process “the finished task has arrived - I read the analytics - I test” stretches to “the description of the future task has arrived - I read the analytics - I test the analytics - the finished task has arrived - I test”.

Pros:

- errors in the analytics are less likely to surface after the task has been handed over to development,

- the tester is already in the context of the task when it reaches him for testing, so he checks it faster,

- testing analytics broadens the tester's horizons and opens the way to a future move into analytics,

- the developer improves the quality of his code and of the product as a whole, because he runs the acceptance tests before sending his solution to testing.

If the team has no analytics reviews, testing analytics improves product quality and reduces the risk of handing a task over to development with errors in the technical specification.

When we recommended the second model to testers working by the first one, we often heard:

- "We already have enough tasks in the queue without taking on more."

- "Go talk to the manager."

Restructuring the development process is a serious management task.

Introducing analytics testing before the development stage

For almost a year now, the development process of the Kontur.Standard project has included a mandatory "testing analytics" step. The team did not arrive at this right away. The impetus was the growing number of tasks returned from testing to the analytics stage for further rework.

It was especially painful for large tasks with new functionality. Tasks handed over to testing were raw on the front end, often broke on the simplest scenarios, and were implemented differently from what was intended because of “ambiguous” definitions and terms in the analytics.

The process of testing analytics does not appear at the snap of a finger or by magic. It is hard work, but it can be divided into stages.

Stage 0. Sell the idea of testing analytics to the team

It is easy to imagine a situation where you suddenly get feedback on your work with comments, suggestions and corrections. The first thought of any normal person will be: “Why did you decide to check me? Don't you trust me? Are you monitoring the quality of my work?” At this stage it is very important that the analyst does not feel that the quality of his work is being audited and that he will be dismissed if the testing goes badly.

Many of these questions go away if the idea is presented like this: “this will let us learn about new functionality earlier, speed up the testing phase, and prevent even minor defects in the code”.

Once stage 0 is passed, you can move on.

Stage 1. Introducing analytics testing into the development process

Having convinced the team, we introduce analytics testing into the daily workflow. Initially the workflow looked like this:

After the introduction it looks like this:

If your team has several analysts who review each other's work before a task is handed over to development, then testing starts from a higher-quality text. Keep in mind that an analytics review is not the same as testing the analytics; it is only a part of it.

Stage 2. Analytics testing

There are tasks where prototypes replace the text version of the analytics.

In that case the prototype is checked in the same way as text. If prototypes complement the analytics, it is useful to look at the design mockups of the future functionality before reading the documentation. This is your only chance to look at the task as a user who has not read the technical specification and does not know how everything works or should work.

What can be checked in analytics:

1. The proposed solution satisfies the objectives of the task.

For example, if the goal of the task is to collect feedback from users, then the solution should involve recording and storing user responses.

2. Unambiguous interpretation.

For example, the wording “show information for the current day” can be interpreted in different ways. It can be read as “show information for the day selected in the settings” or as “show information for today's date”.
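A minimal sketch of why this matters, with hypothetical function names and dates that are not from any real project: both functions below are a fair implementation of “the current day”, yet they return different days for the same input.

```python
from datetime import date


def day_from_settings(selected_day: date, today: date) -> date:
    """Reading A: "current day" is the day the user picked in the settings."""
    return selected_day


def day_from_clock(selected_day: date, today: date) -> date:
    """Reading B: "current day" is today's calendar date."""
    return today


# Hypothetical input: the user selected July 1, but today is July 15.
selected = date(2019, 7, 1)
today = date(2019, 7, 15)

# The two readings disagree, so the wording needs to be pinned down.
readings_differ = day_from_settings(selected, today) != day_from_clock(selected, today)
```

If two developers can each pick a different function and both claim to follow the text, the analytics should be sent back for clarification.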

3. Feasibility of the solution.

Feasibility is the ability to implement the requirements written in the analytics within the known limitations of the development environment, programming language and algorithmic complexity. Good analysts can keep in mind an algorithm that solves the problem they have described. The developers will not necessarily follow this algorithm (they know more and will find ways to make it optimal), but its very existence shows that the task is feasible.

4. Testability.

It is not clear how to verify that the condition “improve search results” is met. But if the condition is rewritten as “search results should be shown to the user within 1 second after pressing the “Search” control”, it becomes clear what to check.
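Once the requirement names a threshold, it turns into a check almost mechanically. The sketch below is only an illustration: `fake_search` is a hypothetical stand-in for the real search call, and measuring the function call is a simplification of “shown to the user”.

```python
import time

MAX_SEARCH_SECONDS = 1.0  # threshold taken from the rewritten requirement


def fake_search(query):
    """Hypothetical stand-in for the real search call."""
    documents = ["invoice 1", "invoice 2", "report"]
    return [doc for doc in documents if query in doc]


def timed_search(search, query):
    """Run the search and return its results with the elapsed time in seconds."""
    started = time.perf_counter()
    results = search(query)
    elapsed = time.perf_counter() - started
    return results, elapsed


results, elapsed = timed_search(fake_search, "invoice")
within_limit = elapsed <= MAX_SEARCH_SECONDS
```

There is no comparable check for “improve search results”, because that wording gives nothing to compare against.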

5. Presence of alternative scenarios.

In the wording “If the number and date are specified, we print the number and date. If the date is not specified, we print only the number.” some scenarios are missing:

- there is no number, but there is a date,

- there is no data at all.
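Missing scenarios like these can be found by listing every combination of inputs and crossing out the ones the text covers. A minimal sketch, assuming each field is simply present or absent (the variable names are ours):

```python
from itertools import product

# Scenarios the requirement text describes, as (number given, date given).
described = {
    (True, True),   # "the number and date are specified"
    (True, False),  # "the date is not specified"
}

# Every possible combination of the two fields being present or absent.
all_cases = set(product([True, False], repeat=2))

# Combinations the analytics says nothing about.
missing = sorted(all_cases - described, reverse=True)
```

Here `missing` contains `(False, True)` and `(False, False)`, which are exactly the two scenarios listed above: a date without a number, and no data at all.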

6. Handling exceptions.

In the wording “A document can be uploaded only in Excel format” it is not clear what should happen when a file of another format is uploaded and what error the user will see.
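A sketch of what a complete requirement would let the developer write. Everything specific here is an assumption made for illustration: the allowed extensions, the error class and the message text are exactly the details the analytics would have to state.

```python
from pathlib import Path

# Assumption: "Excel format" means these two extensions.
ALLOWED_SUFFIXES = {".xls", ".xlsx"}


class UnsupportedFormatError(ValueError):
    """Hypothetical error for files that are not Excel documents."""


def check_upload(filename: str) -> str:
    """Return the filename if it may be uploaded, otherwise raise an error."""
    suffix = Path(filename).suffix.lower()
    if suffix not in ALLOWED_SUFFIXES:
        # The message text is an assumption; the analytics should define it.
        raise UnsupportedFormatError(
            f"Cannot load '{filename}': only Excel files (.xls, .xlsx) are supported."
        )
    return filename
```

Calling `check_upload("report.pdf")` raises `UnsupportedFormatError`, while `check_upload("report.XLSX")` passes. The tester's question to the analyst is what the rejected case should look like to the user.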

Artifacts of analytics testing

What artifacts can remain after analytics testing:

- written test cases,

- checklists for developers.

A developer checklist is the mandatory, exhaustive set of basic checks of the main scenarios that must work before the task can be tested. It is also a tool for improving code quality. Before handing the task over to testing, the developer goes through the checklist and quickly finds bugs on his own.

The developer has to be persuaded to go through the tester's checklist: remove his inner unease of “I am being checked” and emphasize “speeding up the process, speeding up testing, improving quality”. As a result, our developers go through these checklists and are glad that they, not the tester, found the errors (“no one will know that I messed up in such a trivial scenario”).

What is the result

At first glance it seems that adding a new stage to the development process will only increase time to market, but this is an illusion. At first, of course, the analytics testing process will be new and unpolished, and the tester will spend more time on it. Later, with experience, he will be able to do it quickly. And the results obtained at the analytics testing stage will shorten the testing stage itself and reduce the number of returns to a minimum.

This analytics testing process has been introduced in several Kontur teams. The development teams gained a number of clear advantages:

- time saved at the testing stage: there is no cost for test design and for testing the analytics, since all of that has been done in advance,

- faster feedback to the developer through the checklist, so critical bugs are found earlier,

- all interested parties can review the checklists in advance and add checks (at the testing stage this is “more expensive”).

We believe that you can get these benefits by introducing an analytics testing stage in your project.

List of calendar entries:

Try a different approach

Sensible pair testing

Feedback: how it happens

Optimize tests

Read the book

Testing analytics

The tester should catch bugs, read Kaner and get things moving

Load the service

Metrics in the service of QA

Test security

Know your customer

Sort out the backlog