Story of an accident: how one data center stood idle for 8 hours

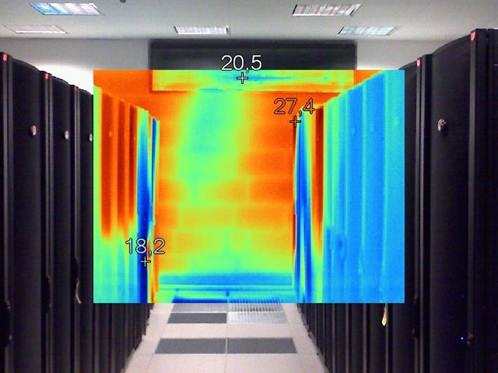

Part of the post-accident check: thermal imaging inspection of the machine room

This story happened at one company's data center a long time ago; all the consequences of the accident have been eliminated, and improvements were made to prevent a recurrence. Nevertheless, I believe the incident report will interest both those who work with data centers and those who love IT stories that read almost like detective fiction.

A planned power outage was expected. Two feeds entered the data center; the owners knew about the outage in advance, prepared, and ran all the necessary tests. All that remained was to switch over to the diesel generators following the standard procedure.

Hour X

The shutdown happened exactly when the power engineers had planned it. The uninterruptible power supplies took over normally, and then the data center segments switched to the diesel generators in stages (to avoid the effect of high inrush currents). Everything went as it should. The data center had not yet reached its design capacity at the time, so the diesel generators had almost a twofold power margin.

Hour P

The diesels ran for an hour and a half, after which the fuel in the tanks built into the generator containers ran out. Under the regulations, diesel fuel cannot be stored in large volumes right next to a diesel generator set (DGU); otherwise the installation has to pass a very complicated fire safety certification. That is why separate storage tanks are used everywhere, and fuel is pumped from them. The system began pumping fuel, and at that point the diesel generators "coughed" and stalled.

Naturally, panic followed. The diesels failed one after another fairly quickly, there was simply no time to do anything, and the data center lost power. A little later it became clear that the fuel had been sitting in the storage tank for quite a long time, and temperature swings had caused condensation to form there constantly. The rest is basic physics: water is heavier than fuel, so it sinks to the very bottom of the tank, which is exactly where the intake is. As a result, all the diesels drew in pure water. The generator sets did not even have water separators, although those would not have helped: such a filter holds about half a liter, and there was far more water than that. And even if someone had figured everything out in time, it would still have been impossible to deliver fresh diesel fuel at short notice.

The result: during a planned outage, the company got an emergency shutdown of the entire data center. It lasted about 8 hours, which for a large company can amount to almost a quarter's profit. The data center was down from the moment the diesels drew in water until city power was restored.

Getting back up is not enough: you must never go down again

Management drew the appropriate conclusions and decided to rule out this entire class of problems in the future. That required an audit of the whole infrastructure to find the weak points. This is where we joined the project and began the analysis.

We used the methodology of the Uptime Institute, an organization that maintains a knowledge base of data center failures around the world and issues precise recommendations on what should work and how. By the way, more about building a data center to such stringent requirements can be found in the post about our high-criticality data center. One interesting point is that, under the standard, the city power input always counts as a single source (even if the feeds come from 5 power plants) and is treated as an auxiliary source, because it is not fully controlled by the data center owner. That is, it may be there (which is good) or it may not (which is almost normal), and it can drop without any warning, which is quite common. Therefore, when Uptime Institute experts analyze data center infrastructure, they always treat the city input the same way: it is there, and that is all; they do not go into details or study the city grid layout.

On the other hand, by Uptime Institute standards the autonomous part is very important. The quality of the diesel generators, the fuel, the maintenance, and so on is studied carefully. There is probably no need to explain that in Russia it is customary to do the opposite. Everyone explains in detail: we have this feeder from there and that one from here, and this substation is very good... Anyone who remembers the Chaginskaya case, when all the feeders went down at once, will understand why the Uptime Institute treats such feeds the way it does. According to the standard, you need to design correctly, and keep in good condition, the infrastructure that you as the data center owner actually have.

Survey

The data center was designed and built to a fairly modern standard, using modern solutions, but, as you know, the devil is in the details. The first thing our specialist engineers said was that the power distribution scheme was very confusing and illogical. It is built on programmable logic controllers from a vendor with a closed architecture, and it has a single "head": if that fails, there is no redundancy. And here we come back to what our colleagues from the Uptime Institute keep saying. For Tier III (the most popular level, in our experience), the main principle fits into a single sentence: any one element can be taken out of service, and the system must retain its full capacity.

The design documentation makes no mention of compliance with any standards other than building codes and the PUE (the Russian Electrical Installation Rules). This is a characteristic symptom that you need to dig deeper and audit every detail. Another similar symptom is when the standards cited do not require certification by a body external to the designer. In such cases it often turns out that one thing is stated on paper and another is built on site. Fortunately, in our case the design documentation matched the actual implementation exactly.

Power supply

Once again: any element can be taken out of service at any time, and the data center must keep working as if nothing had happened. At the site we surveyed, almost all elements were duplicated, except, for example, the controller of the automation system. It manages the numerous automatic transfer switches (ATS) and switches the power distribution lines running from a specific load (the server racks) to the main switchgear. On the face of it, the lines are redundant, the switchboards are partially redundant, and the UPS and DGU are redundant. But one element, which costs very little compared with the total cost of the infrastructure, is not redundant and can become a cause of failure and an accident. And fixing it quickly would be unrealistic: you would have to shut down the data center to replace a single piece of hardware.
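The "any one element can be taken out of service" rule can be checked mechanically against an inventory. Below is a minimal sketch in Python; the function names and component names (`feed_A`, `plc_1`, and so on) are hypothetical and only loosely mirror the site described here, where everything except the automation controller had a duplicate.

```python
from typing import Dict, List

# Hypothetical inventory: each function of the power path maps to the
# interchangeable components able to perform it. Names are illustrative only.
POWER_PATH: Dict[str, List[str]] = {
    "utility_feed": ["feed_A", "feed_B"],
    "ups": ["ups_1", "ups_2"],
    "generator": ["dgu_1", "dgu_2"],
    "automation_controller": ["plc_1"],  # the single "head"
}


def single_points_of_failure(path: Dict[str, List[str]]) -> List[str]:
    """Return components whose removal leaves a function with no provider."""
    spof = []
    for components in path.values():
        for component in components:
            # Simulate taking this one component out of service.
            remaining = [c for c in components if c != component]
            if not remaining:
                spof.append(component)
    return spof


print(single_points_of_failure(POWER_PATH))  # ['plc_1']
```

This model only counts spare components per function; a real audit also has to trace the actual wiring and control paths, which is where problems like the shared controller tend to hide.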

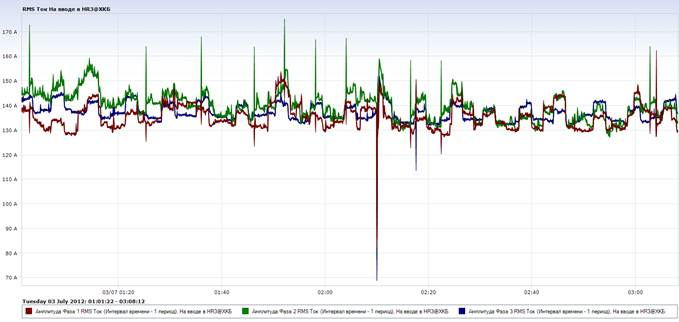

Enlarged graph of current measurements at the switchboard input. The phases are loaded evenly; inrush currents occur periodically.

Enlarged graph 2: a current dip at the switchboard input. After the dip, the load stays at the same level for 5-6 seconds and then climbs stepwise back to its previous level.

The next point is the operation of the fuel supply system and the synchronization of the diesel generators. The fuel system is considered critical to the functioning of the data center and must be redundant. As for the missing separators, that comes down to Western designers underestimating our realities. Synchronization of the diesel generators is another important point that the Uptime Institute checks with particular scrutiny. When all the generators start, one appoints itself the leader and connects to the common bus, and the other machines take turns trying to synchronize with it. Roughly speaking, the sine waves of the AC voltage must coincide so that there is no short circuit. As soon as a machine is synchronized, its contactor closes and it is connected to the common bus. In this way all the diesels are brought onto the common bus one after another. To make this go smoothly, modern diesel generators communicate with each other, that is, they are joined into a common data network. Another advantage of this approach is that the machines can balance the load.
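The "sine waves must coincide" condition can be illustrated with a simplified synchronization check. This is only a sketch: the `Waveform` class, the function names, and the tolerance values are assumptions made for illustration, not settings of any real generator controller.

```python
from dataclasses import dataclass


@dataclass
class Waveform:
    voltage_v: float     # RMS voltage
    frequency_hz: float  # frequency
    phase_deg: float     # phase angle at the moment of measurement


def phase_difference_deg(a: float, b: float) -> float:
    """Smallest absolute angle between two phases, in the range [0, 180]."""
    diff = abs(a - b) % 360.0
    return min(diff, 360.0 - diff)


def can_close_contactor(bus: Waveform, gen: Waveform,
                        max_dv_pct: float = 5.0,
                        max_df_hz: float = 0.2,
                        max_dphi_deg: float = 10.0) -> bool:
    """True if the generator matches the bus closely enough to be connected.

    The default tolerances are illustrative assumptions, not vendor settings.
    """
    dv_pct = abs(gen.voltage_v - bus.voltage_v) / bus.voltage_v * 100.0
    df_hz = abs(gen.frequency_hz - bus.frequency_hz)
    dphi = phase_difference_deg(gen.phase_deg, bus.phase_deg)
    return dv_pct <= max_dv_pct and df_hz <= max_df_hz and dphi <= max_dphi_deg


bus = Waveform(400.0, 50.0, 0.0)                               # leader already on the bus
print(can_close_contactor(bus, Waveform(402.0, 50.05, 355.0)))  # True
print(can_close_contactor(bus, Waveform(400.0, 50.0, 90.0)))    # False
```

The second generator matches the bus in voltage and frequency but is 90 degrees out of phase, so the contactor stays open until the waveforms line up.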

The Uptime Institute suggests starting from the following premise: imagine that there is no external grid and you run on diesel generators all year round. Now the time has come, for example, to replace the control panel of one of the generators. You shut that generator down; the rest are enough to power the data center. Then you have to disconnect the bus that links the machines from it and remove the control panel. Not a single standard diesel generator sold here will pass this test, because their bus is essentially linear. The machines are connected one to the next: open the chain in the middle, and the right side no longer knows what the left is doing. For our high-criticality data center "Compressor", different control systems were built specifically for this requirement, with the bus closed into a loop. We can break it anywhere: no data is lost, and the machines keep communicating with each other. Few people know about this when designing data centers, so it becomes yet another weak point. It seems minor and extremely improbable, but it is a chain of such improbabilities that creates the worst situations, the ones you very much want to avoid.
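The difference between a linear and a looped control bus can be shown with a small connectivity check. In this sketch the generators are modeled as numbered nodes and the bus segments as links between neighbors; the model and all function names are illustrative assumptions, not a description of any vendor's bus.

```python
from collections import deque
from typing import Set, Tuple

Link = Tuple[int, int]


def build_links(n: int, ring: bool) -> Set[Link]:
    """Chain n controllers in a line; optionally close the chain into a ring."""
    links = {(i, i + 1) for i in range(n - 1)}
    if ring:
        links.add((n - 1, 0))
    return links


def connected(nodes: Set[int], links: Set[Link]) -> bool:
    """Breadth-first search: can every remaining node reach every other one?"""
    if not nodes:
        return True
    adjacency = {node: set() for node in nodes}
    for a, b in links:
        if a in nodes and b in nodes:  # links touching a removed node are gone
            adjacency[a].add(b)
            adjacency[b].add(a)
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen == nodes


def survives_any_single_removal(n: int, ring: bool) -> bool:
    """True if the rest stay in contact whichever one controller is removed."""
    links = build_links(n, ring)
    all_nodes = set(range(n))
    return all(connected(all_nodes - {removed}, links) for removed in all_nodes)


print(survives_any_single_removal(4, ring=False))  # False
print(survives_any_single_removal(4, ring=True))   # True
```

With four machines on a linear bus, pulling either of the two middle controllers splits the remaining machines into groups that cannot see each other; closing the loop gives every machine a second path.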

Further tests

We found that the racks inside the data center were not arranged optimally. This seems like a commonplace matter: a lot has been said about it and everything is well known. Nevertheless, the undoubtedly competent and experienced specialists who operate the data center on the customer side made several mistakes in the equipment layout.

Our air conditioning experts proposed a simple solution that allowed (or rather, will allow, once implemented) a serious increase in the efficiency of the cooling system. Moreover, the customer is considering increasing capacity, so this will come in handy as the load grows. We also wrote recommendations on what needs to be done to increase capacity. In addition, we assessed the structured cabling system (SCS) and the security systems: there were no comments, everything was done correctly.

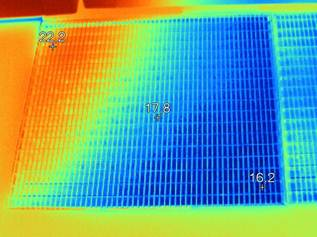

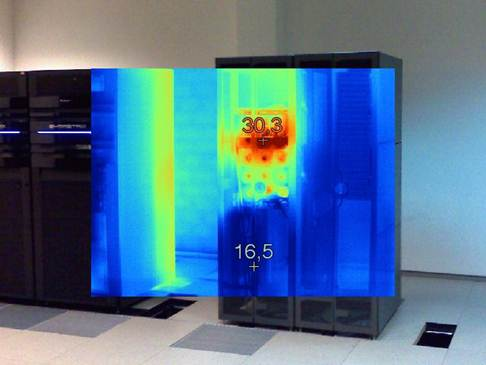

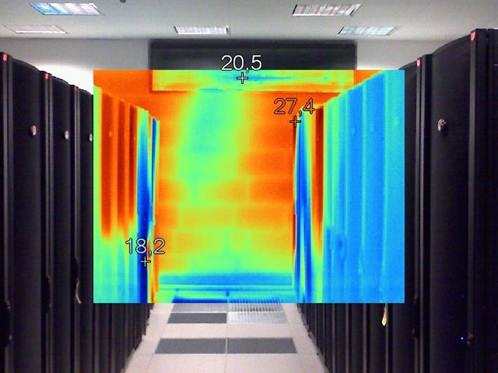

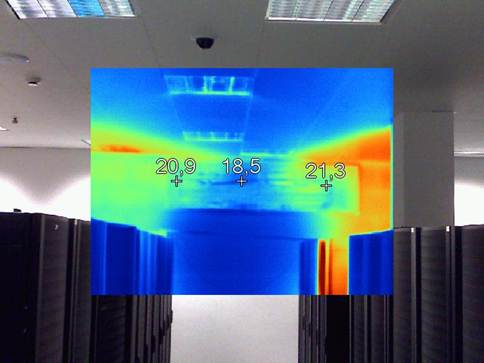

The key findings concerned the most important systems. For example, we took thermograms and searched for local overheating zones. One of the things we found was an ejection zone:

The effect of ejection through a grate

There was a local overheating zone: there is a rack, and the air jet does not reach far enough. The lower part is cooled, but the upper part is not cooled enough. In addition, if a rack is not completely filled with servers, blanking panels have to be installed to separate the hot and cold aisles; otherwise air leaks from one to the other. By and large, all that is needed is to increase the fan speed of the fan coil unit: until then, the bottom server was cooled more than necessary, while the top one ran warm. The second example is a wall built of blocks. The seams are visible on the thermogram because their thermal conductivity is higher than that of the foam concrete. Naturally, this does not indicate any problem; it is just a good demonstration of what modern thermal imagers can do.

Thermogram labels: rack zone, hot aisle zone, cold aisle zone.

At the same time, we looked at the quality of the data center's power supply: we checked the power quality at the input using dedicated hardware, and everything was fine. Then we tested several SCS parameters, air flow rates, and whether the installed fan coil capacity matched the actual one - everything matched and everything worked fine.

Summary

It all started with an accident and led to an audit, which uncovered further shortcomings and produced recommendations on how best to increase capacity.

In Russia, instead of Tier III under the Uptime Institute methodology, Tier III under TIA 942 is often declared. The TIA standard essentially describes the requirements to meet for your data center to look like Tier III, but whether to meet them is left to your discretion. It is an advisory standard, and the assessment procedure is declarative: you built it and declared it Tier III. And since a Tier III level exists in both the Uptime Institute and the TIA schemes, customers are often misled. To check whether a site has really been certified, just go to the Uptime Institute website.

We have audited data centers many times (and helped build them), so we know that even when the customer and the contractor are serious, it is still better and safer to bring in one more party for the audit. No matter how good the suppliers, designers, and installers are, they can be wrong. When you work toward Tier III, for example, both the design and its on-site implementation are checked by the people from the Uptime Institute, who for years on end have done nothing but walk around data centers looking for bugs in them. Obtaining their certification is difficult, but with a careful approach the quest can be completed, and the data center really does run at four nines of availability.
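To put "four nines" next to the 8-hour outage described at the start, here is a small calculation. It assumes that four nines means 99.99% availability and uses a 365-day year; the function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, leap years ignored


def allowed_downtime_minutes(availability: float) -> float:
    """Yearly downtime budget, in minutes, for a given availability fraction."""
    return (1.0 - availability) * HOURS_PER_YEAR * 60


def availability_after_outage(outage_hours: float) -> float:
    """Availability over one year that contains a single outage of this length."""
    return 1.0 - outage_hours / HOURS_PER_YEAR


budget = allowed_downtime_minutes(0.9999)
print(round(budget, 1))                              # 52.6
print(round(availability_after_outage(8) * 100, 3))  # 99.909
print(round(8 * 60 / budget, 1))                     # 9.1
```

Under these assumptions, four nines leaves about 52.6 minutes of downtime per year, so a single 8-hour stop uses up roughly nine years of that budget.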