The story of creating a sign-language interpreter

Prehistory

At the beginning of this school year (17-18), the administration of our

Software part

Android application

We began work on the project about a month before the deadline. The first stage was developing the Android application.

To be honest, the hardest part for me was the application's layout: I struggle with layouts and their different types (I'm still a ninth-grader, and from a physics and mathematics class at that). Making the application look equally good on all devices was also quite difficult.

Besides the layout, another challenge was adding Bluetooth to the application (we decided to connect the Android device and the model via Bluetooth). This was not taught in the Samsung school courses, and there is not much information on the Internet about implementing communication between an Android device and the Arduino that controlled the model.

Now a little about the code itself (link to GitHub at the very end of the article). The program consists of four activities: the start screen and one activity for each control mode.

- The first control mode is text input: the user types the text manually.

- The second is voice input: the application recognizes the user's speech.

- The third is the manual mode. The user can manually change the position of the fingers to depict gestures that are not provided by the program.

To be honest, I am quite ashamed of my code: it looks unfinished. I could have encapsulated the Bluetooth connection in a separate class with methods for connecting, sending data, disconnecting, and so on. Instead, I repeated all of that in each activity, because encapsulation led to some (not very big) problems. Solving them would have meant spending time studying how Bluetooth works, and I was in a hurry to finish the code so that enough time was left to develop and build the hardware.

Arduino Programming

While implementing the software, we also had to program the Arduino microcontroller, which receives data from the smartphone and operates the manipulator. We wrote a library that holds the servo wiring (which pin each servo is connected to) and the methods for translating text into sign language. The core of the library is a matrix that stores the position of each finger for every letter of the Russian alphabet, along with various helper methods that simplify the code. The matrix is shown below.

const int navigate[Hand::n][Hand::m] = {

{224, 180, 180, 180, 180, 180},//а

{225, 90, 0, 180, 180, 180},//б

{226, 0, 0, 0, 0, 0},//в

{227, 0, 90, 180, 180, 180},//г

{228, 180, 0, 0,180, 180},//д

{229, 90, 180, 180, 90, 90},//е

{230, 90, 180, 180, 90, 90},//ж

{231, 180, 0, 180, 180, 180},//з

{232, 180, 180, 180, 0, 0},//и

{233, 180, 180, 180, 0, 0},//й

{234, 180, 0, 0,180, 180},//к

{235, 180, 90, 90, 180, 180},//л

{236, 180, 90, 90, 90, 180},//м

{237, 180, 0, 0, 180, 0},//н

{238, 90, 180, 0, 0, 0},//о

{239, 180, 90, 90, 180, 180},//п

{240, 180, 0, 180, 0, 0},//р

{241, 90, 90, 90, 90, 90},//с

{242, 180, 90, 90, 90, 180},//т

{243, 0, 180, 180, 180, 0},//у

{244, 0, 90, 90, 90, 90},//ф

{245, 0, 0, 180, 180, 180},//х

{246, 180, 0, 0, 180, 180},//ц

{247, 0, 90, 180, 180, 180},//ч

{248, 180, 0, 0, 0, 180},//ш

{249, 180, 0, 0, 0, 180},//щ

{250, 0, 0, 180, 180, 180},//ъ

{251, 0, 90, 180, 180, 90},//ы

{252, 0, 0, 180, 180, 180},//ь

{253, 90, 90, 180, 180, 180},//э

{254, 0, 90, 90, 90, 0},//ю

{255, 90, 90, 90, 180, 180}}; //я

"Hand" is a library header file (with the extension ".h") containing function prototypes and constants.

Now a few words about the translation methods. The character translation method takes a character code as input, searches the matrix for the matching row (the first element of each row is the character code), and sets the servos to the rotation angles given in that row. The character codes are stored in the table so that the encoding can be changed by editing the table alone, without touching the rest of the code. The sentence translation method splits the sentence into characters, calls the character translation method for each one, and pauses for a couple of seconds after each so that the gestures can be read and do not run into one another.

The character translation method:

void Hand::SymbolTranslate(unsigned char a){ // translate a character into a gesture

int str=-1;

int i;

for(i=0; i<n; i++){

if(navigate[i][0]==(int)(a)){

str=i;

break;

}

}

if(str==-1){

return;

}

else{

First.write(navigate[str][1]); // move the servos to the required positions

Second.write(navigate[str][2]);

Third.write(navigate[str][3]);

Fourth.write(navigate[str][4]);

Fifth.write(navigate[str][5]);

delay(200);

Serial.println(str);

Serial.println("Succsessfull");

}

}
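The table search in the method above can be tried out on a desktop compiler, without servos attached. The following is only a minimal sketch: the helper name `findRow`, the constants `kTable`, `kRows`, and `kCols`, and the three-row excerpt of the matrix are assumptions made for illustration and are not part of the original library.

```cpp
#include <cstddef>

// Excerpt of the gesture table: {character code, five servo angles}.
// The three rows are copied from the matrix in the article; the real table is longer.
constexpr std::size_t kCols = 6;
constexpr int kTable[][kCols] = {
    {224, 180, 180, 180, 180, 180},  // а
    {225, 90, 0, 180, 180, 180},     // б
    {226, 0, 0, 0, 0, 0},            // в
};
constexpr std::size_t kRows = sizeof(kTable) / sizeof(kTable[0]);

// Hypothetical helper (not in the original library): returns the index of the
// row whose first element equals `code`, or -1 if the character is not in the table.
int findRow(unsigned char code) {
    for (std::size_t i = 0; i < kRows; ++i) {
        if (kTable[i][0] == static_cast<int>(code)) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```

With this split, `findRow(225)` gives row 1 (the row for б), and a code that is not in the table gives -1, which corresponds to the early `return` in `SymbolTranslate`. The servo writes would then read columns 1 to 5 of the row that was found.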

The sentence translation method:

void Hand::SentenceTranslate(char* s){ // translate a sentence into gestures

unsigned char a;

for(int i=0; i< strlen(s); i++){

a=s[i];

SymbolTranslate(a);

delay(2000);

}

}
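The codes in the first column of the matrix (224 to 255) match the Windows-1251 codes of the lowercase letters а to я, so text that arrives in another encoding would need to be converted before it is passed to `SentenceTranslate`. The following is a rough sketch of such a conversion for UTF-8 input. The function name `utf8ToTableCodes` is hypothetical, and the article does not say which encoding the application actually sends, so treat the whole block as an assumption that only illustrates the arithmetic.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper (not part of the original project): converts UTF-8 text
// to the single-byte codes used in the first column of the gesture table.
// Assumption: the table codes 224..255 are Windows-1251 codes for а..я.
// Uppercase А..Я is folded to lowercase, ASCII bytes pass through unchanged,
// and everything else (including ё, which has no row in the table) is dropped.
std::string utf8ToTableCodes(const std::string& in) {
    std::string out;
    for (std::size_t i = 0; i < in.size(); ++i) {
        unsigned char b0 = static_cast<unsigned char>(in[i]);
        if (b0 < 0x80) {  // plain ASCII
            out.push_back(static_cast<char>(b0));
            continue;
        }
        // Basic Cyrillic letters are two-byte sequences starting with 0xD0 or 0xD1.
        if ((b0 == 0xD0 || b0 == 0xD1) && i + 1 < in.size()) {
            unsigned char b1 = static_cast<unsigned char>(in[i + 1]);
            if ((b1 & 0xC0) == 0x80) {
                unsigned cp = ((b0 & 0x1Fu) << 6) | (b1 & 0x3Fu);  // decode code point
                ++i;                                               // consume second byte
                if (cp >= 0x0410 && cp <= 0x042F) cp += 0x20;      // fold to lowercase
                if (cp >= 0x0430 && cp <= 0x044F) {
                    out.push_back(static_cast<char>(cp - 0x0430 + 224));
                }
                continue;
            }
        }
        // Any other lead byte or stray continuation byte is skipped.
    }
    return out;
}
```

Because the character codes live only in the table's first column, switching to a different encoding would mean changing either that column or a conversion step like this one, while the lookup code stays the same.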

The methods also output messages to the log with the received text and the result of the translation (“Succsessfull” is printed), which helped us a lot when debugging a

Hardware

This phase of the project was carried out together with the above-mentioned kkirra. Before we started, developing the drawings of the manipulator and assembling it seemed like a very simple task, but that turned out not to be the case at all.

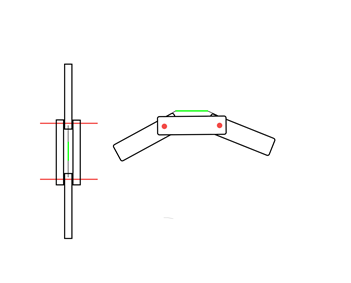

Initially, we wanted to 3D-print the manipulator parts. We took the drawings from an open French project repository (we had also drawn our own, but later decided it was better to use the open-source ones). When we sent the drawings off for printing, however, we learned that the parts would take several weeks, which we did not have. So we decided to assemble the manipulator from materials at hand: the forearm from metal plates and the fingers from plywood. The fingers are bent by servos controlled by an Arduino microcontroller. An example drawing is shown below.

- Red: the rotation axes of the fingers, the equivalent of the joints in a human hand

- Green: small rubber bands that return the fingers to their original position (that is, they act as tendons)

Conclusion

Let's sum up. We have assembled and programmed a sign-language interpreter (for short, we call it the “stump” because of how it looks). Our project allows people who do not know sign language to communicate with people with impaired hearing using the Russian sign alphabet. The software is structured so that the code can be changed quickly and other sign languages (for example, English) can be added without breaking anything.

In the future, we plan to print the previously selected model on a 3D printer and assemble it (we will get to this once we have had some rest from exams and other rubbish).

Overall, it turned out pretty well. The firmware and the appearance are not very pretty, but the main thing is that we have a working prototype. We learned a lot from this project (for example, that a year-long project should be started at the beginning of the year, not a couple of weeks before the deadline). For this we are very grateful to the teachers who helped us, and especially to Damir Muratovich, our teacher, who helped us solve most of the problems that came up during the project.

Thank you for reading to the end!

If you found this interesting, all the project materials (including the text of the work, presentations, etc.) are openly available here.

Thanks again for your attention!