Goroutines: everything you wanted to know but were afraid to ask

In this article I will try to explain briefly what goroutines are, when to use them, how they relate to system threads, and how the scheduler works.

What are goroutines?

A goroutine is a function that executes concurrently with other goroutines in the same address space.

Launching a goroutine is very simple:

    go normalFunc(args...)

The function `normalFunc(args...)` will start executing asynchronously with respect to the code that called it.

Note that goroutines are very lightweight. Almost all of the cost is in creating the stack, which is very small, although it can grow if necessary.
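To make the `go` statement a bit more concrete, here is a minimal sketch. The names `square` and `squares` are made up for illustration, and `sync.WaitGroup` is just one of several ways to wait for the goroutines to finish.

```go
package main

import (
	"fmt"
	"sync"
)

// square is an ordinary function (hypothetical, for illustration only);
// nothing about it is goroutine-specific.
func square(n int, out *int, wg *sync.WaitGroup) {
	defer wg.Done()
	*out = n * n
}

// squares runs square once per input value, each call in its own goroutine.
func squares(in []int) []int {
	out := make([]int, len(in))
	var wg sync.WaitGroup
	for i, n := range in {
		wg.Add(1)
		// The go statement returns immediately; square runs asynchronously.
		// Each goroutine writes to its own slice element, so there is no data race.
		go square(n, &out[i], &wg)
	}
	wg.Wait() // block until every goroutine has finished
	return out
}

func main() {
	fmt.Println(squares([]int{1, 2, 3, 4})) // prints [1 4 9 16]
}
```

Without the `wg.Wait()` call, `main` could return before any of the goroutines had a chance to run.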

How much does a goroutine weigh?

To get a feel for the scale, consider some numbers obtained empirically.

On average you can expect about 4.5 KB per goroutine. With 4 GB of RAM, for example, you can hold about 800 thousand running goroutines. That may not be enough to impress Erlang fans, but the figure seems very decent to me :)
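If you want to check the figure on your own machine, the sketch below is one rough way to do it. It is not the measurement behind the numbers above. The function name `bytesPerGoroutine` is made up, and using the growth of `runtime.MemStats.Sys` as the measure is my assumption, so the result will vary with Go version and platform.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// bytesPerGoroutine (hypothetical helper) starts n goroutines that park on a
// channel and reports the average growth of memory obtained from the OS
// (MemStats.Sys) per goroutine. It is a rough estimate, not a precise cost.
func bytesPerGoroutine(n int) float64 {
	var before, after runtime.MemStats
	var wg sync.WaitGroup
	stop := make(chan struct{})

	runtime.GC()
	runtime.ReadMemStats(&before)

	wg.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			wg.Done()
			<-stop // stay parked so the stack remains allocated
		}()
	}
	wg.Wait() // every goroutine has started

	runtime.ReadMemStats(&after)
	close(stop) // release the goroutines

	// Convert before subtracting to avoid unsigned underflow.
	return (float64(after.Sys) - float64(before.Sys)) / float64(n)
}

func main() {
	fmt.Printf("about %.0f bytes per goroutine\n", bytesPerGoroutine(10000))
}
```

Dividing the RAM you are willing to spend by this number gives an upper bound on how many idle goroutines fit. Goroutines that do real work grow their stacks and need more.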

Still, you should not mindlessly move a function into a goroutine wherever possible. Doing so pays off in the following cases:

- If you need asynchrony, for example when working with the network, a disk, a database, a mutex-protected resource, etc.

- If the execution time of the function is large enough that you can gain by putting other cores to work.

“Large enough” - how large? That is a hard question, and most likely you will have to decide case by case. I can only say that in my experience, on my hardware (Atom D525, 64-bit), the threshold is about 50 μs. In general, test and develop a feel for it ;)
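The second case, loading other cores, can be sketched as follows. The function `parallelSum` and its chunking scheme are my own illustration. Whether this beats a plain loop depends on how much work each chunk holds, so measure before relying on it.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// parallelSum (hypothetical helper) splits xs into at most `workers` chunks
// and sums each chunk in its own goroutine.
func parallelSum(xs []int64, workers int) int64 {
	if workers < 1 {
		workers = 1
	}
	partial := make([]int64, workers)         // one slot per worker, no sharing
	chunk := (len(xs) + workers - 1) / workers // ceiling division

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		lo := w * chunk
		if lo >= len(xs) {
			break // fewer elements than workers
		}
		hi := lo + chunk
		if hi > len(xs) {
			hi = len(xs)
		}
		wg.Add(1)
		go func(w, lo, hi int) {
			defer wg.Done()
			for _, x := range xs[lo:hi] {
				partial[w] += x
			}
		}(w, lo, hi)
	}
	wg.Wait()

	var total int64
	for _, p := range partial {
		total += p
	}
	return total
}

func main() {
	xs := make([]int64, 1000000)
	for i := range xs {
		xs[i] = int64(i + 1)
	}
	fmt.Println(parallelSum(xs, runtime.NumCPU())) // prints 500000500000
}
```

If each chunk takes less time than starting and synchronizing a goroutine costs, the parallel version will be slower than the sequential one.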

System threads

The runtime source code (src/pkg/runtime/proc.c) uses the following terms:

G (Goroutine) - a goroutine

M (Machine) - a machine

Each machine runs in a separate thread and can execute only one goroutine at a time. Machines are switched by the scheduler of the operating system the program runs on. The number of running machines is limited by the environment variable `GOMAXPROCS` or by the function `runtime.GOMAXPROCS(n int)`. In the runtime described here the default is 1 (since Go 1.5 the default is the number of available CPUs). It usually makes sense to set it to the number of cores.

Go Scheduler

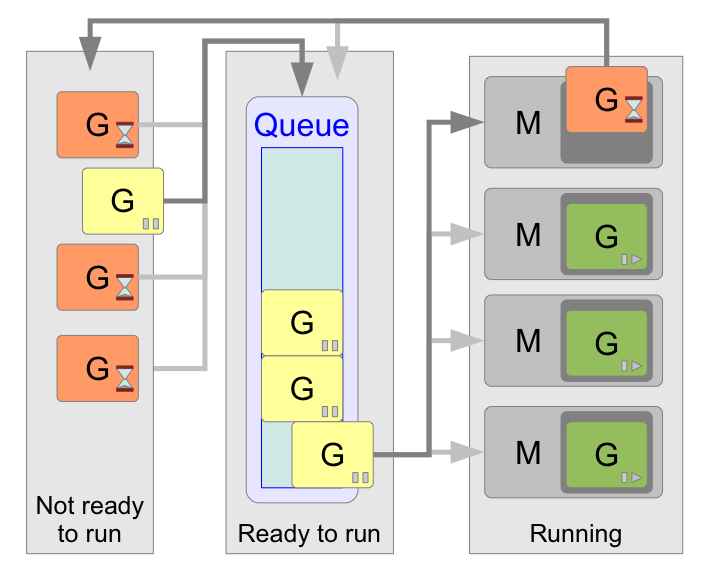

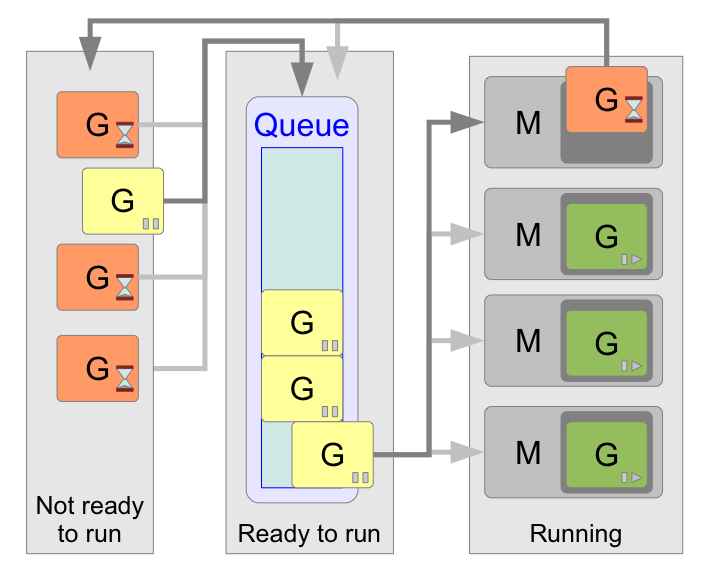

The goal of the scheduler is to distribute ready-to-run goroutines (G) to free machines (M).

The diagram and description of the scheduler are taken from Sindre Myren's paper "RT Capabilities of Google Go".

Ready-to-run goroutines are executed in queue order, i.e. FIFO (First In, First Out). A goroutine is interrupted only when it can no longer continue: because of a system call or the use of synchronization objects (channel operations, mutexes, etc.). There are no time slices after which a goroutine would be returned to the queue. To let the scheduler do that, you must call `runtime.Gosched()` yourself. As soon as the goroutine is ready to run again, it goes back into the queue.
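Here is a minimal sketch of a cooperative yield. The function `waitForFlag` is a made-up example: a loop that would otherwise spin without ever blocking calls `runtime.Gosched()` on each pass, so the goroutine that sets the flag gets a chance to run even on a single machine. Newer Go runtimes (1.14 and later) can also preempt long-running goroutines on their own, so there the call is more of a courtesy than a necessity.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
)

// waitForFlag (hypothetical helper) starts a goroutine that sets a flag and
// then polls the flag, yielding to the scheduler on every iteration.
// It returns how many times it yielded before seeing the flag.
func waitForFlag() int {
	var flag int32
	go func() {
		atomic.StoreInt32(&flag, 1)
	}()

	yields := 0
	for atomic.LoadInt32(&flag) == 0 {
		runtime.Gosched() // put this goroutine back in the queue, let others run
		yields++
	}
	return yields
}

func main() {
	fmt.Println("yields before the flag was seen:", waitForFlag())
}
```

The number printed is not deterministic. It depends on how the scheduler happens to interleave the two goroutines.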

Conclusions

I will say right away that the current state of affairs surprised me a great deal. For some reason I subconsciously expected more “parallelism”, probably because I thought of goroutines as lightweight system threads. As you can see, that is not entirely true.

In practice, this primarily means that it is sometimes worth calling `runtime.Gosched()` so that a few long-running goroutines do not stall all the others for a significant time. On the other hand, such situations are quite rare in practice. I also want to note that the scheduler is currently far from perfect, as the documentation and the official FAQ acknowledge. Serious improvements are planned. Personally, I am mostly looking forward to the ability to assign priorities to goroutines.