Hello, Skynet: artificial intelligence has learned to see people through walls

After X-rays were discovered, they were long regarded as a miracle of miracles. Even today, many people find X-rays unusual, almost science fiction. But there are even more intriguing things in our time. For example, a group of scientists from MIT has taught an AI to sense people through walls.

The project under which the system was developed is called “RF-Pose”. The AI does not just sense that someone is behind the wall; it even “sees” what the person is doing. Of course, there is no magic here either: everything is based on tracking the characteristics of radio signals in the room.

The human body affects the propagation of radio waves in a characteristic way, and with the right analysis it is possible to work out where a person is in a room and what they are doing. The AI tracks all this in real time and displays a figure that mirrors the outline and movements of the person behind the wall. If there are several people behind the wall, the system shows each of them.

The scientists behind the technology were not at all aiming to build equipment and software for intelligence agencies (although who knows). Their goal was different: a system capable of detecting diseases such as Parkinson's disease, various forms of sclerosis, muscular dystrophy and others. Based on how the rendered figure moves, all these diseases can be diagnosed with greater or lesser accuracy. Over time, the system can also show how effective the treatment is.

For older people, such a system can also be useful: if a person falls and cannot get up, the computer will automatically notify relatives and doctors, so that nobody is left lying unconscious without any help. The developers are currently in talks with doctors about using RF-Pose in healthcare.
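A fall alert like this could, in principle, be built on top of the figure the system reconstructs. The sketch below is purely illustrative and assumes a hypothetical input: a per-frame series of head heights in metres taken from that figure. The `FallDetector` class and all of its thresholds are invented for this example and are not part of RF-Pose. The rule flags a fall only when the head drops quickly *and* then stays near the floor, so that sitting down or a quick stumble does not trigger an alert.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FallDetector:
    """Flags a fall when the head drops quickly and then stays near the floor.

    Hypothetical example: all thresholds are illustrative, not taken from RF-Pose.
    """
    drop_m: float = 0.8      # minimum drop in head height, in metres...
    drop_frames: int = 10    # ...that must happen within this many frames
    floor_m: float = 0.5     # a head below this height counts as "on the floor"
    stay_frames: int = 30    # frames the head must stay low before an alert fires

    def detect(self, head_heights: List[float]) -> Optional[int]:
        """Return the frame index at which an alert would fire, or None."""
        for i, height in enumerate(head_heights):
            # Rapid drop: compare with the highest point in the recent window.
            window_start = max(0, i - self.drop_frames)
            peak = max(head_heights[window_start:i + 1])
            if peak - height < self.drop_m:
                continue
            # Sustained low position: the person has not got back up.
            after = head_heights[i:i + self.stay_frames]
            if len(after) == self.stay_frames and all(h < self.floor_m for h in after):
                return i + self.stay_frames - 1
        return None


standing = [1.7] * 20
fall = standing + [1.4, 1.1, 0.8, 0.5, 0.3] + [0.3] * 40
sit_down = standing + [1.6, 1.5, 1.4, 1.3, 1.2, 1.1, 1.0] + [1.0] * 40
print(FallDetector().detect(fall))      # 53: alert after the head stays low for 30 frames
print(FallDetector().detect(sit_down))  # None: no alert
```

In a real deployment the thresholds would have to be tuned on recorded data, and the alert would be forwarded to relatives or doctors; both steps are outside this sketch.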

All data tracked by the system is anonymized. Moreover, it is stored in encrypted form, which is meant to keep attackers from stealing the information. The specialists are also developing a set of specific movements that would allow the diseases mentioned above to be diagnosed with high reliability.

“We know that the speed at which patients move, as well as their ability to perform certain movements, gives us a specific set of data for diagnosing certain diseases. All of this can be used in medicine, both for diagnosis and for monitoring the effectiveness of treatment,” says one of the project's authors.

As for safety, there are already systems that alert the relatives of elderly people if something is wrong. But such systems usually rely on a set of wearable sensors, and a person can forget to charge them or simply not put them on, and so be left without any warning system. If something happens to a person who, for whatever reason, is not wearing the sensors, no one can help in time. And that is extremely dangerous.

RF-Pose could also be useful for gamers, who would no longer need to wear sensors that track their movement and actions in space. You could simply turn the system on and start moving around the room; the computer would do the rest.

Rescuers could use RF-Pose to locate people affected by natural disasters. For example, during earthquakes strong enough to destroy houses, people often end up buried under rubble. Not all victims are found: those who are unable to call for help are, in some cases, simply impossible to detect with standard methods.

When building RF-Pose, the scientists faced an important problem: training the neural network. Usually, when specialists teach a neural network to recognize certain objects, they “feed” it pictures or videos showing people, animals, buildings, furniture and so on, and a person labels the data to help the network “understand” what is what. But RF-Pose works with radio signals, which a person cannot interpret by eye, so a human can no longer act as that helper.

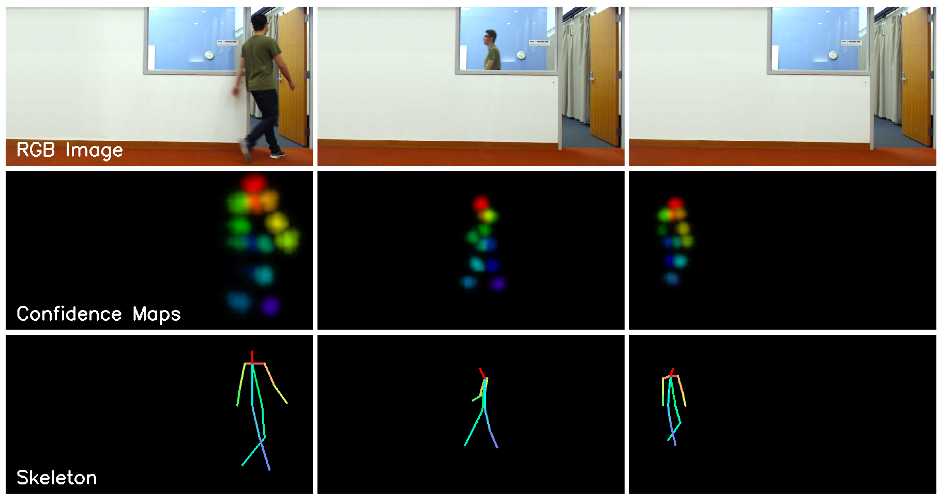

So the researchers let the neural networks “look” at an ordinary image, for example of a standing person, together with a digital imprint of the same person in the radio field. People were asked to perform dozens and hundreds of actions, and each time the networks could compare the image with the parameters of the radio signal affected by that action. Then came the next step: the neural network was taught to draw (schematically) the human body and its parts according to how they affected the radio signal.
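The training scheme described here, in which a camera-based model supplies the labels that a radio-based model learns to reproduce, is commonly called cross-modal supervision (a teacher-student setup). The minimal sketch below illustrates only that idea, under heavy assumptions: `camera_teacher` and `radio_features` are hypothetical stand-ins, a pose is reduced to a single (x, y) keypoint, and the radio “signal” is a simulated linear mix of the pose plus noise. RF-Pose itself uses deep neural networks on real radio measurements, which this toy linear student does not attempt to reproduce.

```python
import random

random.seed(0)  # reproducible synthetic data


def camera_teacher(frame):
    """Hypothetical stand-in for a vision pose model that reads a keypoint from a frame.

    Here a 'frame' is simply the true (x, y) keypoint, so the teacher is perfect.
    """
    return frame


def radio_features(pose):
    """Hypothetical radio measurement: a fixed linear mix of the pose plus small noise."""
    x, y = pose
    return [0.9 * x + 0.2 * y + random.gauss(0, 0.01),
            -0.3 * x + 1.1 * y + random.gauss(0, 0.01),
            0.5 * x - 0.4 * y + random.gauss(0, 0.01)]


def predict(weights, feats):
    """Student: a single linear map from radio features to an (x, y) keypoint."""
    return [sum(w * f for w, f in zip(row, feats)) for row in weights]


def train_student(pairs, epochs=200, lr=0.05):
    """Fit the student to the teacher's labels with stochastic gradient descent on squared error."""
    n_out, n_in = 2, len(pairs[0][0])
    weights = [[0.0] * n_in for _ in range(n_out)]
    for _ in range(epochs):
        for feats, label in pairs:
            pred = predict(weights, feats)
            for k in range(n_out):
                err = pred[k] - label[k]
                for j in range(n_in):
                    weights[k][j] -= lr * err * feats[j]
    return weights


# Synchronized recordings: each camera frame is paired with the radio signal captured at the same moment.
poses = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(200)]
pairs = [(radio_features(p), camera_teacher(p)) for p in poses]
student = train_student(pairs)

# At test time the camera is gone (e.g. the person is behind a wall): only radio input is used.
test_pose = (0.4, -0.7)
print(predict(student, radio_features(test_pose)))
```

The design point the sketch is meant to show is that no human ever labels the radio data: the teacher's output on synchronized camera frames is the only supervision, and once trained, the student runs on radio input alone.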

As a result, the goal was achieved, and the computer began to “understand” who is doing what at any given moment. Moreover, it turned out that the system can identify a person by their “radio print”, with 83% accuracy. That is not very high, but there is room to improve, since the project has not even reached beta.