Dataflow architecture. Part 1

The second part of the article.

Most modern computers, whether it be a Fujitsu K supercomputer , an ordinary personal computer, or even a calculator, combine a common principle of operation, namely, a calculation model based on the Control Flow. However, this model is not the only one possible. In some ways, its opposite is a computational model driven by a data stream , or simply Dataflow. I want to talk about her now.

Control flow architecture is often referred to as von Neumann (in honor of John von Neumann). This is not entirely correct, since von Neumann architecture is just a subset of the control flow architectures. There are non-Neumann architectures of the control flow, for example, Harvard , which can now be found only in microcontrollers.

As part of the architecture of the control flow (controlflow), the computer consists of two main nodes: processor and memory. A program is a set of instructions stored in memory in execution order. The data the program works with is also stored in memory as a set of variables. The address of the instruction that is currently being executed is stored in a special register, in x86 it is called Instruction Pointer (IP). The moment the instruction begins to be executed is determined by the moment the previous one is completed (we are now considering a simplified model without any Out-of-Order ). The system is very simple, understandable and familiar to most readers. So why come up with something else?

Congenital controlflow problems

The fact is that the control flow architecture has a number of inherent disadvantages, which cannot be completely eliminated, since they stem from the organization of the computational process itself, you can only reduce the negative effect with the help of various

- Before the instruction is executed, its operands must be loaded from the memory into the processor registers, and after execution, the result must be unloaded back to the memory. The processor-memory bus is becoming a bottleneck: the processor is idle part of the time, waiting for data to load. Variable success is resolved using proactive sampling and multiple cache levels.

- The construction of multiprocessor systems is fraught with a number of difficulties. There are two main concepts of such systems: with shared and distributed memory. In the first case, it is difficult to physically provide the shared access of many processors to one RAM. In the second case, data coherence and synchronization problems arise. As the number of processors in the system grows, more and more resources are spent on providing synchronization, and less and less on computing itself [03] .

- No one guarantees that at the time of execution of any instruction its operands will be in memory at the specified addresses. The instruction that should record this data may not yet be implemented. In multi-threaded applications, a significant proportion of the

programmer’sresourcesand nerves arespent on ensuring thread synchronization.

Meet - Dataflow

There is no established Russian translation for the term Dataflow architecture. You can come across options such as “streaming architecture”, “data flow architecture”, “architecture with data flow control” and the like.

In architectures with data flow control (Dataflow) [01] there is no concept of “sequence of instructions”, there is no Instruction Pointer, and there is not even an addressable memory in the usual sense. A program in a streaming system is not a set of instructions, but a computational graph. Each node of the graph represents an operator or a set of operators, and the branches reflect the dependencies of the nodes according to the data. The next node starts to run as soon as all its input data is available. This is one of the basic principles of dataflow: the execution of data readiness instructions.

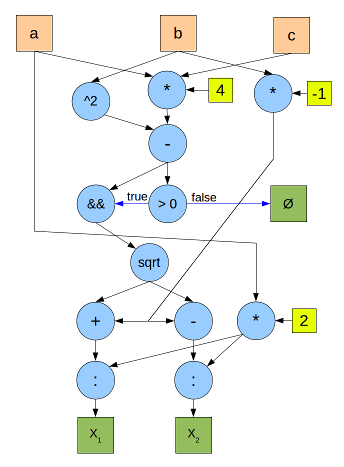

Here is an example of a graph for calculating the roots of a quadratic equation. Blue circles are operators, orange squares are input data, green squares are output data, and yellow squares are constants. Black arrows indicate the transmission of numerical data, blue arrows indicate Boolean data.

Hardware implementation

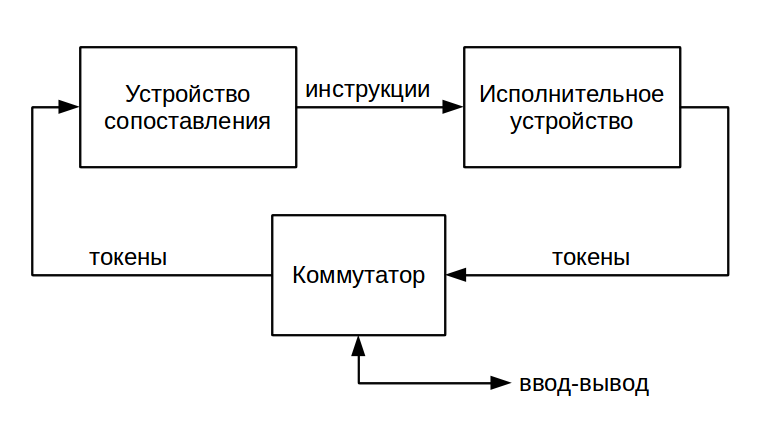

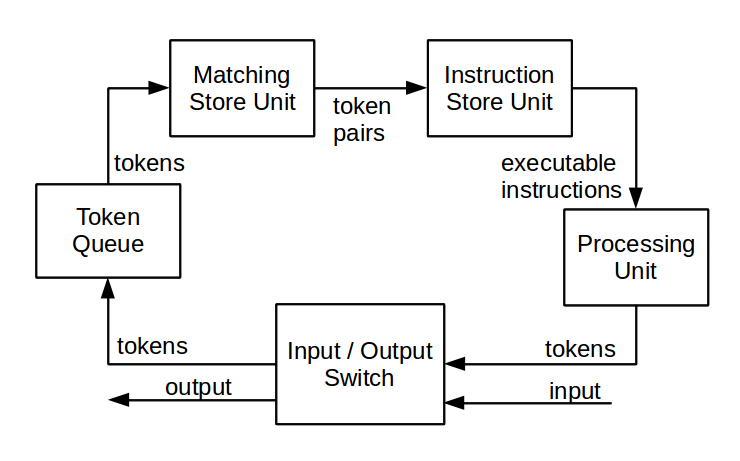

In streaming machines, data is transmitted and stored as so-called. tokens (token). A token is a structure containing the actual value transmitted and a label is a pointer to the destination node. The simplest streaming computing system consists of two devices: an execution unit and a matching unit [11] .

The actuator serves to execute instructions and generate tokens with the results of operations. Typically, it includes read-only instruction memory. The readiness of the input data of the node is determined by the presence of a set of tokens with the same labels. To search for such sets and serves as a matching device. Usually it is implemented on the basis of associative memory. Either "real", hardware associative memory ( CAM - content-addressable memory) is used, or structures that work similarly, for example, hash tables .

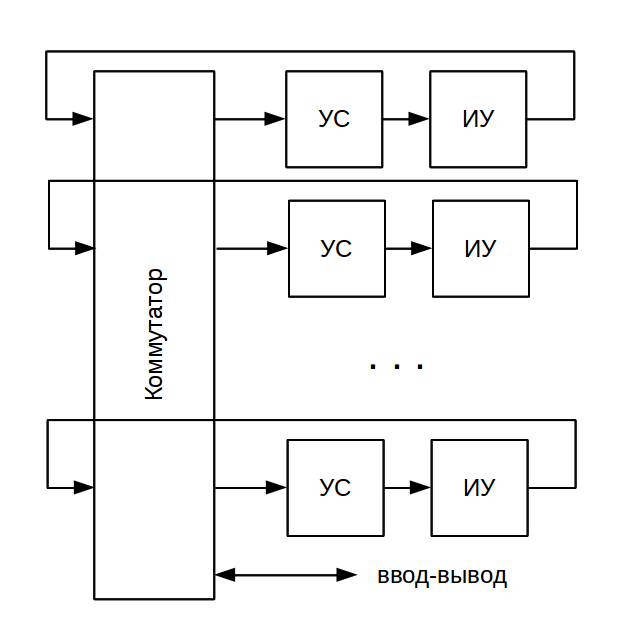

One of the main advantages of the dataflow architecture is its scalability: it is not difficult to assemble a system containing many matching devices and actuators. Devices are united by the simplest switch, and their labels are used to address tokens. The entire range of node numbers is simply distributed evenly between devices. No additional measures for synchronization of the computing process, unlike the multiprocessor controlflow architecture, are required.

Static dataflow architecture

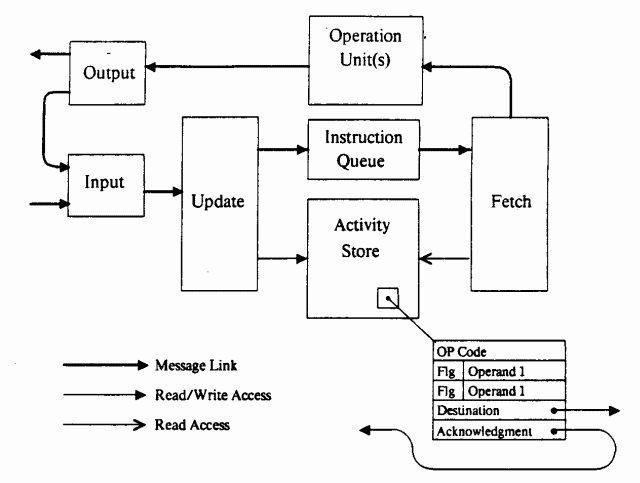

The scheme described above is called static (static dataflow). In it, each computing node is presented in a single copy, the number of nodes is known in advance, and the number of tokens circulating in the system is also known in advance. An example of a static architecture implementation is the MIT Static Dataflow Machine [12] , a streaming computer created at the Massachusetts Institute of Technology in 1974. The machine consisted of many processing elements (Processing Element) connected by a communication network. The diagram of one element is shown in the figure:

The role of the mapping device was performed by the interaction memory(activity store). It contained pairs of tokens along with the address of the destination node, readiness flags, and operation code. Any computing node in this architecture had only two inputs and consisted of one operator. Upon detection of the readiness of both operands , the fetch unit read the operation code, and the data was sent for processing to the operation unit.

Dynamic dataflow architecture

In a dynamic dataflow architecture, each node can have multiple instances. In order to distinguish tokens addressed to different instances of the same node, an additional field is introduced into the token structure - the context . Token mapping is now conducted not only by labels, but also by context values. Compared to static architecture, there are a number of new features.

- Recursion. A node can direct data to its copy, which will differ in context (but at the same time have the same label).

- Support procedures. The procedure in the framework of this computational model will be a sequence of nodes interconnected and having inputs and outputs. You can call multiple instances of the same procedure at the same time, which will differ in context.

- Parallelization of cycles. If there is no data dependency between iterations of the loop, you can process all iterations at once simultaneously. The iteration number, as you probably already guessed, will be contained in the context field.

One of the first implementations of dynamic streaming architecture was the Manchester Dataflow Machine (1980) [13] . The machine contained hardware for organizing recursions, calling procedures, expanding loops, copying and combining branches of a computational graph. Also in a separate module the instruction store unit memory was taken out. The figure shows a diagram of one element of the machine:

Dynamic dataflow architecture, in comparison with static, demonstrates higher performance due to better parallelism of calculations. In addition, it provides more opportunities for the programmer. On the other hand, a dynamic system is more complicated in hardware implementation, especially for matching devices and token context generation blocks.

To be continued

In the next part of the article: why are the dataflow architectures not as good as described here? How to cross a streaming system with a

Stay tuned ...

Literature

General dataflow questions

[01] - Dataflow architectures, Jurij Silc

[02] - Bakanov V.M. Streaming (Dataflow) computers: control the intensity of data processing

[03] - Parallelization of data processing on computers of the stream (Dataflow) architecture. Magazine "Top50 Supercomputers".

Hardware implementations

[11] - Dataflow architectures, Arvind David E. Culler

[12] - Preliminary Architecture for a Basic Data-Flow Processor, Jack B. Dennis and David P. Misunas

[13] - A Multilayered Data Flow Computer Architecture, JR Gurd, I. Watson.