Wake up, it is the 21st century outside


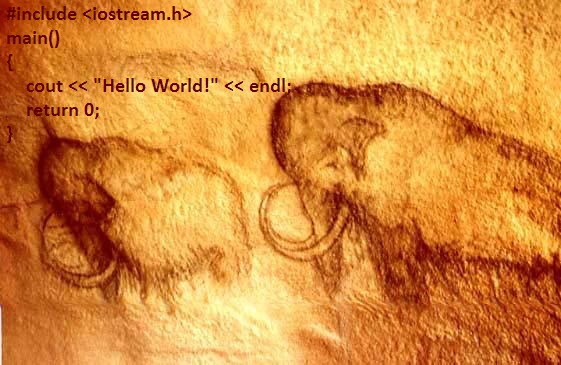

I would like to start this article by stating a fact: outside the window it is 2011 (proof link), mid-April. I say this first of all as a reminder to myself, because I periodically have doubts about it. The thing is that both at work and for my hobby I often read C++ code written 10-20 years ago (but still maintained), or code written recently by people who learned to program in C++ those same 20 years ago. After that I get the feeling that there has been no progress over the years, that nothing has changed or developed, and that mammoths still roam the Earth.

Introduction

From KVN:

- Granny, where here in Sochi can we rent a room for 25 dollars a day?

- Oh, not far at all, boys. In 1990.

Programming 20 years ago was a completely different business. Memory and processor resources were counted in bytes and clock cycles, many things had not been invented yet, and you had to find workarounds. But that is no reason to write code on those premises today. The world is changing.

Everything I write below concerns only C++ programming and only the mainstream compilers (gcc, Intel, Microsoft). I have worked less with other languages and compilers and cannot speak about the state of things there. I will also talk only about application programming for desktop operating systems (on clusters, on microcontrollers and in systems programming the trends may differ).

TR1

For those who have spent the last five years inside a tank, I will share a great military secret (just shhh!). There is such a thing as TR1. This may come as a revelation, but almost all modern compilers ship with smart pointers, good random number generators, many special mathematical functions, regular expression support and other interesting things. It works pretty well. Use it.
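As a rough illustration of three of these facilities, here is a minimal sketch. It assumes the names as they were later standardized in namespace std by C++11; under TR1 proper they live in std::tr1 and some spellings differ slightly. The helper functions make_shared_name, roll_die and looks_like_date are hypothetical and exist only for this example.

```cpp
#include <memory>
#include <random>
#include <regex>
#include <string>

// Hypothetical helper: shared ownership, no manual delete anywhere.
std::shared_ptr<std::string> make_shared_name(const std::string& name) {
    return std::shared_ptr<std::string>(new std::string(name));
}

// Hypothetical helper: one die roll from a seeded Mersenne Twister engine.
int roll_die(unsigned seed) {
    std::mt19937 engine(seed);
    std::uniform_int_distribution<int> dist(1, 6);  // inclusive range 1..6
    return dist(engine);
}

// Hypothetical helper: true if the whole text has the shape YYYY-MM-DD.
bool looks_like_date(const std::string& text) {
    static const std::regex pattern("[0-9]{4}-[0-9]{2}-[0-9]{2}");
    return std::regex_match(text, pattern);
}
```

A call such as looks_like_date("2011-04-15") matches the whole string against the pattern, and the string returned by make_shared_name is freed when the last shared_ptr to it goes away.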

C++0x

For those who climbed into heavy armor only a couple of years ago, here is one more piece of good news. There is such a thing as C++0x. Rejoice, brothers! Yes, officially it still lacks a few high-level signatures, and the ceremony of smashing a bottle of champagne against the side of the standard has not yet taken place, but the release candidate has been approved and compilers already support it.

Already at your service:

- Lambda expressions

- Rvalue references

- Generalized constant expressions

- Extern templates

- Initializer lists

- Range-based for loop

- Improved object constructors

- nullptr

- Local and unnamed types as template arguments

- Explicit conversion operators

- Unicode characters and strings

- Raw string literals

- Static assertions

- Template aliases

- The auto keyword

and a bunch of other useful things.

Just look at the following examples:

- Instead of

vector<int>::const_iterator itr = myvec.begin();

you can now write

auto itr = myvec.begin();

and it will work! Moreover, strong typing does not go anywhere (auto is not a pointer or a Variant, it is just syntactic sugar).

- Now you can walk through collections with a for-each style loop:

int my_array[5] = {1, 2, 3, 4, 5};
for (int &x : my_array) x *= 2;

Beautiful, isn't it? Let me remind you that this is supported in the main, stable (not alpha / beta) branches of all the major compilers. And it works. Why are you still not using it?
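A few more items from the list can be tried together in one small sketch. It assumes a compiler switched to C++0x/C++11 mode; the function names count_above, double_all and describe are hypothetical and serve only as illustration.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Static assertion: checked by the compiler, costs nothing at runtime.
static_assert(sizeof(long long) >= 8, "long long must hold at least 64 bits");

// Hypothetical helper: a lambda captures the threshold by value.
int count_above(const std::vector<int>& values, int threshold) {
    return static_cast<int>(std::count_if(
        values.begin(), values.end(),
        [threshold](int v) { return v > threshold; }));
}

// Hypothetical helper: range-based for plus auto, doubling in place.
void double_all(std::vector<int>& values) {
    for (auto& v : values) v *= 2;
}

// Hypothetical helper: nullptr instead of NULL or a bare 0.
std::string describe(const int* p) {
    return p == nullptr ? "null" : std::to_string(*p);
}
```

With an initializer list the input can be written as std::vector<int> v = {1, 2, 3, 4, 5}; and passed straight to these functions.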

Passing everything everywhere by pointer (reference)

The ability to pass entities to functions and methods both by reference and by value is a very powerful mechanism, and it should not be used in one way only. I often see everything always being passed by pointer. People give the following arguments:

- A pointer is passed faster than a data structure, so speed improves.

- Passing by pointer requires no additional copy, so memory is saved.

Neither argument holds up. The gain often amounts to a couple of bytes and clock cycles (it cannot even be measured experimentally), while a whole bunch of drawbacks appears:

- The receiving function is forced to check every argument at least for NULL. And the fact that a pointer is not NULL guarantees nothing either.

- The receiving function is free to do anything with the entity it was given. Change it, delete it, anything. The "const" keyword is no argument here: C++ has plenty of hacks that allow data to be changed through a const pointer or reference.

- The calling function is forced either to trust the called function not to change the data, or to validate the data after every call.

- A significant share of the objects whose passing people try to optimize with pointers are themselves little more than pointers. This applies at least to string classes, which have long been optimized everywhere.
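The difference between the two styles can be sketched roughly as follows. The functions length_via_pointer and shout are hypothetical examples, not taken from any real code base; the point is only where the checks and the ownership questions end up.

```cpp
#include <cstddef>
#include <string>

// Pointer style: the callee must check for null before every use,
// and a non-null pointer still says nothing about the object behind it.
std::size_t length_via_pointer(const std::string* text) {
    if (text == nullptr) return 0;
    return text->size();
}

// Value style: the callee works on its own copy,
// so the caller's string cannot be changed or deleted from here.
std::string shout(std::string text) {
    for (char& c : text) {
        if (c >= 'a' && c <= 'z') c = static_cast<char>(c - 'a' + 'A');
    }
    return text;
}
```

After a call like shout(s) the caller does not need to re-validate s, because the function never had access to the original.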

Here is an analogy: you are having a party at home, with a dozen good friends plus a couple of random people (as always). Suddenly, one of those people notices on your computer

Calculation of constants

Here is a piece of code:

#define PI 3.1415926535897932384626433832795

#define PI_DIV_BY_2 1.5707963267948966192313216916398

#define PI_DIV_BY_4 0.78539816339744830961566084581988

...

< 180 more similar defines >

...

This may be a great revelation to some, but constants are now computed by the compiler at compile time, not at runtime. So "PI / 2" is easier to read, takes up less space, and runs just as fast as the 180 defines in the example above. Do not underestimate the compiler.
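This is easy to check for yourself. The sketch below assumes C++0x constexpr support and a typed constant PI in place of the macro; quarter_turn is a hypothetical function used only to show the expression in ordinary code.

```cpp
// Assumption: a single typed constant replaces the whole family of macros.
constexpr double PI = 3.1415926535897932384626433832795;

// The compiler evaluates these expressions itself; if it could not,
// the static assertions would not compile at all.
static_assert(PI / 2 == 1.5707963267948966192313216916398,
              "PI / 2 matches the hand-written PI_DIV_BY_2");
static_assert(PI / 4 == 0.78539816339744830961566084581988,
              "PI / 4 matches the hand-written PI_DIV_BY_4");

// Hypothetical function: reads as plain math, no precomputed define needed.
double quarter_turn() {
    return PI / 2;
}
```

Dividing a double by 2 or 4 only changes the exponent, which is why the computed values coincide exactly with the hand-typed ones.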

Reinventing the wheel

Sometimes I see in the code something like:

class MySuperVector

{ // my very fast vector implementation

...

};

At this point I start to tremble. There are the STL, Boost (and many other libraries), in which the best minds on the planet have spent decades refining many wonderful algorithms and data structures. Writing your own is worth it in only 3 cases:

- You are a student (lab assignment, coursework).

- You are writing a serious scientific paper on this subject.

- You know by heart the source code of the STL, Boost and a dozen similar libraries, plus the 3 volumes of Knuth, and you are certain that none of them has a solution for your case.

In reality, the following happens:

- People have no idea that the libraries exist

- People do not read smart books at all

- People have high self-esteem and consider themselves smarter than everyone else

- “Chukchi is not a reader, Chukchi is a writer”

As a result, we get ridiculous bugs, wild slowdowns and the sincere surprise of the author during
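For comparison, here is roughly how much code typical container tasks take with the standard library alone. This is a minimal sketch; sorted_unique and sum_of are hypothetical helpers chosen only to show std::vector working together with the standard algorithms.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

// Hypothetical helper: sort and drop duplicates with standard algorithms.
std::vector<int> sorted_unique(std::vector<int> values) {
    std::sort(values.begin(), values.end());
    values.erase(std::unique(values.begin(), values.end()), values.end());
    return values;
}

// Hypothetical helper: sum into a wide accumulator to avoid overflow.
long long sum_of(const std::vector<int>& values) {
    return std::accumulate(values.begin(), values.end(), 0LL);
}
```

Neither function needs a hand-written container, memory management or a custom sorting routine.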

Unnecessary optimizations

Example:

int a = 10;

a <<= 1;

What is written here? I believe in Habr's readers and think almost everyone knows that this is a bitwise shift. Many also know that for integers these operations are equivalent to multiplying and dividing by 2. It used to be fashionable to write this way, since a bitwise shift is faster than multiplication or division. But here are the facts as of today:

- All compilers are smart enough to replace multiplication and division with a shift on their own in such cases.

- Not all people are smart enough to understand this code.

As a result, you get less readable code with no speed advantage, plus periodic questions (depending on the number and qualifications of your colleagues) along the lines of "What the hell is that?". Why would you need this?
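The two spellings can be put side by side in a short sketch. The function names twice and twice_shifted are hypothetical; the static assertion only confirms that both forms give the same value for the number from the example above.

```cpp
// Readable version: says what is meant.
constexpr int twice(int value) {
    return value * 2;
}

// "Optimized" version in the old style: same result for non-negative values.
constexpr int twice_shifted(int value) {
    return value << 1;
}

// Checked by the compiler itself for the value used in the example.
static_assert(twice(10) == twice_shifted(10), "both forms give the same value");
```

If in doubt, compare the generated assembly of the two functions with optimizations turned on.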

Unnecessary memory savings

I will give a couple of links to cases where people tried to save 1-2 bytes of memory, and what came of it.

Already today we have 2 to 4 GB of RAM on average. In a couple of years everything will be 64-bit and there will be even more memory. Think ahead. Save megabytes, not individual bits. When it comes to numbers of people, items or transactions, temperatures, dates, distances, file sizes and so on, use long, long long or something specialized. Forget about byte, short and int. The saving is just a few bytes, while an overflow in the future can be very expensive. And do not be too lazy to create separate variables for different entities instead of reusing one temporary variable with the thought "ah, they will never be needed at the same time."
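What a "saved" byte or two can cost is easy to show. The sketch below is an assumption-laden illustration: it uses the fixed-width unsigned types from the cstdint header (rather than short and long long) so that the sizes and the wraparound are well defined, and the counters count_narrow and count_wide are hypothetical.

```cpp
#include <cstdint>

// Hypothetical counter kept in 16 bits "to save memory":
// it silently wraps around to 0 after 65535.
std::uint16_t count_narrow(std::uint32_t events) {
    std::uint16_t counter = 0;
    for (std::uint32_t i = 0; i < events; ++i) ++counter;
    return counter;
}

// The same counter in 64 bits: six more bytes, no surprises.
std::uint64_t count_wide(std::uint32_t events) {
    std::uint64_t counter = 0;
    for (std::uint32_t i = 0; i < events; ++i) ++counter;
    return counter;
}
```

For 70000 events the narrow counter reports 4464 (70000 minus 65536), while the wide one reports 70000.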

Conclusions

Do not program in cave paintings. Your descendants will not appreciate it.