Profiling Python applications

A brief note with links and examples on profiling:

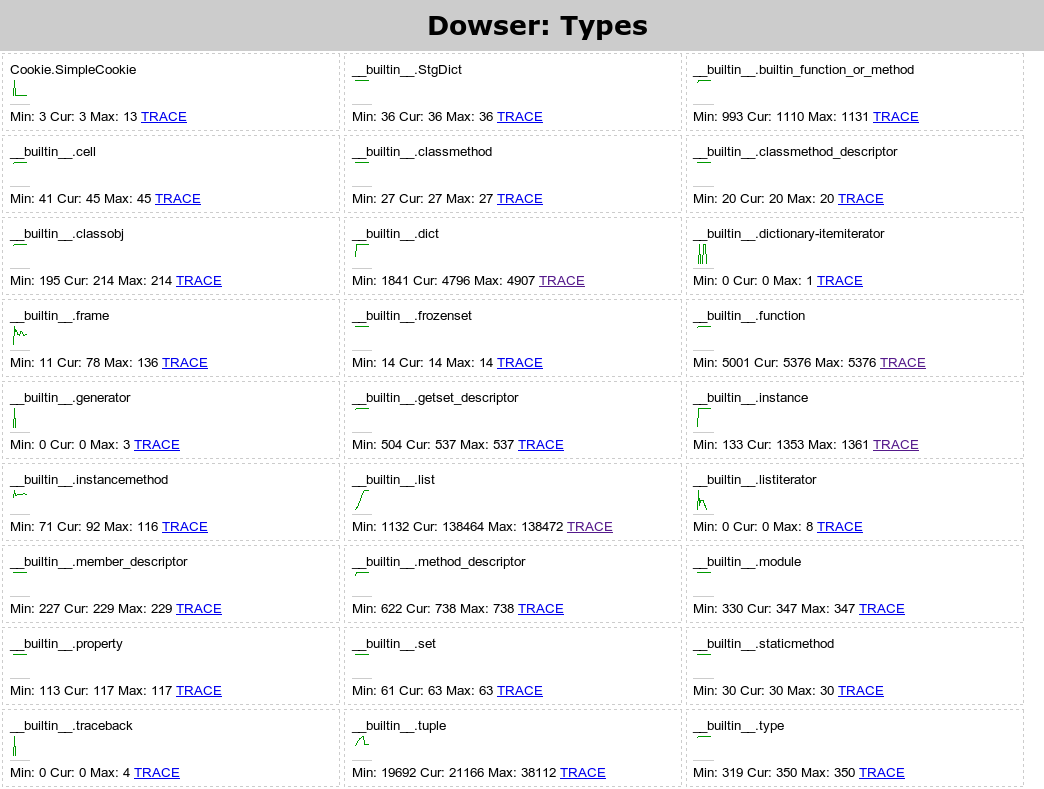

- performance: hotshot or Python's profile / cProfile, plus the kcachegrind log visualizer (there is a Windows port, and a similar tool, WinCacheGrind)

- memory usage: the web-based dowser

performance

- collect statistics with a profiler; the options are:

  - example 1, using the fast hotshot (which may become deprecated; it exists only in Python 2):

        import hotshot

        prof = hotshot.Profile("your_project.prof")
        prof.start()
        # your code goes here
        prof.stop()
        prof.close()

    then convert the log format using the hotshot2calltree utility from the kcachegrind-converters package:

        hotshot2calltree your_project.prof > your_project.out

  - example 2, using the standard profile / cProfile:

        python -m cProfile -o your_project.pyprof your_project.py

    then convert the log format using pyprof2calltree:

        pyprof2calltree -i your_project.pyprof -o your_project.out

    (with the -k option it launches kcachegrind immediately, so there is no need to create an intermediate file)

- open and study the log in the kcachegrind visualizer
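For code that cannot easily be launched through `python -m cProfile`, the same statistics file can be collected from inside the program. The following is only a minimal sketch using the standard `cProfile` and `pstats` modules; `busy_work` and `profile_to_file` are hypothetical names introduced for illustration, and the output path is an arbitrary choice.

```python
import cProfile
import os
import pstats
import tempfile


def busy_work(n):
    """Hypothetical workload standing in for 'your code goes here'."""
    return sum(i * i for i in range(n))


def profile_to_file(func, path, *args, **kwargs):
    """Run func under cProfile and dump the raw statistics to path."""
    profiler = cProfile.Profile()
    profiler.enable()          # start collecting
    try:
        result = func(*args, **kwargs)
    finally:
        profiler.disable()     # stop collecting even if func raises
    profiler.dump_stats(path)  # same file format as `python -m cProfile -o`
    return result


if __name__ == "__main__":
    out_path = os.path.join(tempfile.gettempdir(), "your_project.pyprof")
    profile_to_file(busy_work, out_path, 100000)

    # Quick textual look at the five most expensive entries by cumulative time.
    pstats.Stats(out_path).sort_stats("cumulative").print_stats(5)
```

The resulting file should be usable with pyprof2calltree in the same way as the one produced on the command line, while `pstats` gives a quick look at the data without leaving the terminal.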

I first had to profile Django applications when I was hunting down a tricky recursive import in someone else's code. Back then I used the existing handler for mod_python, but since mod_python is no longer popular, alternative ways of hooking in a profiler, and even dedicated profiling modules, appeared long ago (I have not used the latter).

memory

Unfortunately, I still do not know of a tool that makes memory profiling just as simple and pleasant. I did not want to dig around in a debugger, and Guppy did not suit me: it is powerful but complicated, and fortunately you do not have to profile that often. Objgraph also does not offer easy navigation outside the debug shell.

My current choice is dowser, an application with a CherryPy front end. With it everything is simpler, although less flexible:

- create a controller, essentially a CherryPy 3 application:

      # memdebug.py
      import cherrypy
      import dowser

      def start(port):
          # Mount dowser's pages at the root of the CherryPy tree.
          cherrypy.tree.mount(dowser.Root())
          cherrypy.config.update({
              'environment': 'embedded',
              'server.socket_port': port
          })
          # Start the CherryPy engine inside the host process.
          cherrypy.engine.start()

- connect it to your application:

      import memdebug
      memdebug.start(8080)
      # your code goes here

- open http://localhost:8080/ in the browser and look at the statistics (ignore objects that belong to CherryPy and other libraries; we are looking only for our own)

  The functionality is ascetic; you have to click around and figure it out a little, but it is enough to find problems. There is no need to learn and remember a debugger API. Note that some operations, such as "Show the entire tree", may require a considerable amount of memory for large applications.

- the application will not exit on its own. When you are done studying, stop it with Ctrl+Z and then kill it.
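When installing a web front end is not an option, per-type object counts of the kind such a tool displays can be roughly approximated with the standard `gc` module. This is only a sketch under that assumption and is not dowser's own code; `count_live_objects`, `growth` and `Leaky` are hypothetical names, and only objects tracked by the garbage collector are counted.

```python
import gc
from collections import Counter


def count_live_objects():
    """Map 'module.TypeName' to the number of gc-tracked instances alive now."""
    counts = Counter()
    for obj in gc.get_objects():
        obj_type = type(obj)
        counts["%s.%s" % (obj_type.__module__, obj_type.__name__)] += 1
    return counts


def growth(before, after, top=10):
    """List the types whose instance count grew the most between two snapshots."""
    diff = Counter(after)
    diff.subtract(before)
    return [(name, n) for name, n in diff.most_common(top) if n > 0]


class Leaky(object):
    """Hypothetical class, used only to make the growth visible."""


if __name__ == "__main__":
    before = count_live_objects()
    leaked = [Leaky() for _ in range(1000)]  # simulate objects that pile up
    after = count_live_objects()
    for name, n in growth(before, after):
        print(name, n)
```

Taking a snapshot before and after a suspicious operation and looking only at your own types follows the same advice as above: ignore the library objects and look for yours.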

What other profiling methods and tools are worth learning about?