Load Balancing with LVS

So, you have an overloaded server and want to take some of the load off it. You set up a second, identical machine and filled it with the same content, but users stubbornly keep going to the first one. At this point, of course, you need to think about load balancing.

DNS-RR

The first thing that comes to mind is round-robin DNS. In case anyone does not know, this is a method that spreads requests across N servers by simply returning a different IP address for each DNS query.

What are the drawbacks?

- It is difficult to manage: you enter a group of IP addresses and that is all. There is no weight control, server status is not monitored, and so on.

- In effect you spread requests across a range of IPs, but you do not balance the load on the servers.

- Client-side DNS caching can ruin the whole scheme.

Although it is not worth putting too much machinery between input and output, I would still like some means of controlling the situation.

LVS

This is where Linux Virtual Server, or LVS, comes to our aid. In essence it is a kernel module (ipvs) that has existed since somewhere around kernel versions 2.0-2.2.

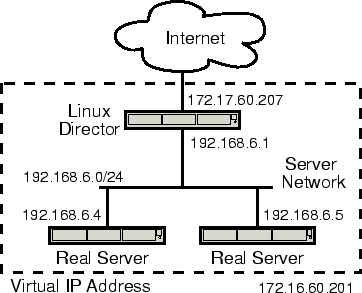

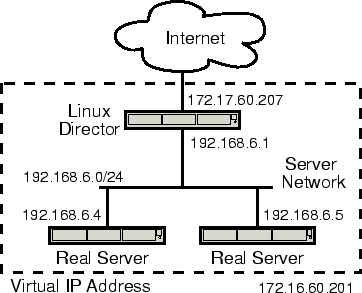

What is it? In essence, it is an L4 router (I would call it L3, but the authors insist on L4) that routes packets along specified paths in a transparent and controllable way.

The main terminology is as follows:

- Director - the node that actually performs the routing.

- Realserver - a workhorse node of our server farm.

- VIP, or Virtual IP - simply the IP of our virtual server (assembled from a bunch of real ones).

- DIP and RIP - accordingly, the IPs of the director and of the real servers.

On the director, the IPVS (IP Virtual Server) module is enabled, packet forwarding rules are configured and the VIP is brought up, usually as an alias on the external interface. Users connect through the VIP.

Packets arriving at the VIP are forwarded by the selected method to one of the realservers, where they are processed as usual. To the client it looks like it is working with a single machine.

Rules

The packet forwarding rules are extremely simple. We define a virtual service identified by a VIP:port pair. The service can be TCP or UDP. Here we also set the method for rotating between nodes (the scheduler). Next we list the servers of our farm, again as RIP:port pairs, and specify the packet forwarding method and the weight, if the chosen scheduler requires one.

It looks like this. And do not forget to install the ipvsadm package, which should be in your distribution's repository. In any case, it is in Debian and RedHat.

# ipvsadm -A -t 192.168.100.100:80 -s wlc
# ipvsadm -a -t 192.168.100.100:80 -r 192.168.100.2:80 -w 3
# ipvsadm -a -t 192.168.100.100:80 -r 192.168.100.3:80 -w 2
# ipvsadm -a -t 192.168.100.100:80 -r 127.0.0.1:80 -w 1

In the example above, we create the virtual HTTP service 192.168.100.100 and add the servers 127.0.0.1, 192.168.100.2 and 192.168.100.3 to it. The "-w" switch sets the weight of a server: the higher it is, the more likely the server is to receive a request. If you set the weight to 0, the server is excluded from scheduling and receives no new requests. This is very convenient when you need to take a server out of service.

By default, packets are forwarded using the DR method. In general, the following forwarding options are available:

- Direct Routing (gatewaying) - the packet is sent directly to the farm, unchanged.

- NAT (masquerading) - simply a clever NAT mechanism.

- IPIP encapsulation (tunneling) - the packet is wrapped in an IP-in-IP tunnel.

DR is the simplest, but if, for example, you need to change the destination port, you will have to write iptables rules. NAT, in turn, requires the default route of the whole farm to point at the director, which is not always convenient, especially if the real servers also have external addresses.
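To make the three forwarding methods concrete, here is a minimal dry-run sketch of how each one is selected when a real server is added: ipvsadm takes -g for direct routing, -m for NAT and -i for IP-IP tunneling. The `run` wrapper, the function name, the extra server 192.168.100.4 and the port 8080 in the NAT line are assumptions made for illustration; they are not part of the setup described in this article.

```shell
#!/bin/sh
# Dry-run wrapper: prints the command instead of executing it.
# Change the body to "$@" and run as root to apply the rules for real.
run() { printf '%s\n' "$*"; }

VIP=192.168.100.100:80

show_forwarding_methods() {
    # -g: direct routing (the default); the packet is forwarded unchanged
    run ipvsadm -a -t "$VIP" -r 192.168.100.2:80 -g
    # -m: NAT (masquerading); the destination port may be rewritten, here to 8080
    run ipvsadm -a -t "$VIP" -r 192.168.100.3:8080 -m
    # -i: IP-IP encapsulation (tunneling)
    run ipvsadm -a -t "$VIP" -r 192.168.100.4:80 -i
}

show_forwarding_methods
```

Because of the wrapper, the script only prints the three commands, so it can be inspected safely on any machine before anything is applied on a director.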

Several schedulers ship with LVS; you can read about them in detail in the documentation. Let's consider only the main ones.

- Round Robin - servers are picked in turn, one after another.

- Weighted Round Robin - the same, but taking server weights into account.

- Least Connection - the connection goes to the server with the fewest connections.

- Weighted Least Connection - the same, but taking weights into account.
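The idea behind Weighted Least Connection can be sketched in a few lines: pick the server with the lowest ratio of connections to weight. This is a simplified model, not the kernel code; it looks only at active connections, whereas the real scheduler also counts inactive ones in its load estimate. The function name `pick_wlc` and the connection counts are invented for the example.

```shell
#!/bin/sh
# Simplified WLC: choose the server with the lowest connections-per-weight ratio.
# Input lines: "<server> <weight> <active connections>".
# Servers with weight 0 are skipped, as they are taken out of scheduling.
pick_wlc() {
    awk '$2 > 0 {
             ratio = $3 / $2
             if (best == "" || ratio < min) { min = ratio; best = $1 }
         }
         END { print best }'
}

# Ratios are 30/3 = 10, 10/2 = 5 and 8/1 = 8, so the second server wins.
printf '%s\n' \
    '192.168.100.2:80 3 30' \
    '192.168.100.3:80 2 10' \
    '127.0.0.1:80 1 8' | pick_wlc
# prints 192.168.100.3:80
```

Note that the server with weight 3 loses here even though it is the most powerful one: it already carries proportionally more connections than the others.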

In addition, you can specify that the service requires persistence, i.e. keeping a user on one server for a given period of time: all new requests from the same IP will be sent to the same server.
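In ipvsadm, persistence is requested with the -p option followed by a timeout in seconds. The sketch below assumes the virtual service from the earlier example already exists, so it edits it with -E rather than creating it with -A. The 300-second timeout, the `run` wrapper and the function name are illustrative assumptions.

```shell
#!/bin/sh
# Dry-run wrapper: prints the command instead of executing it.
# Change the body to "$@" and run as root to apply the change for real.
run() { printf '%s\n' "$*"; }

# usage: enable_persistence VIP:port timeout_seconds
# -E edits an existing virtual service; -p keeps each client IP
# on the same real server for the given number of seconds.
enable_persistence() {
    run ipvsadm -E -t "$1" -s wlc -p "$2"
}

enable_persistence 192.168.100.100:80 300
```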

Pitfalls

So, according to the example above, we should have ended up with a virtual server on VIP 192.168.100.100, which ipvsadm happily confirms:

# ipvsadm -L -n
IP Virtual Server version 1.0.7 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 192.168.100.100:80 wlc
  -> 192.168.100.2:80 Route 3 0 0
  -> 192.168.100.3:80 Route 2 0 0
  -> 127.0.0.1:80 Local 1 0 0

However, when you try to connect, nothing happens! What is the matter? The first step is to bring up the alias on the director:

# ifconfig eth0:0 inet 192.168.100.100 netmask 255.255.255.255

But here, too, failure awaits us: the packet arrives at the interface of a real server unmodified and is therefore simply dropped by the kernel as not addressed to this machine. The easiest way to solve this is to bring up the VIP on the loopback interface of each real server:

# ifconfig lo:0 inet 192.168.100.100 netmask 255.255.255.255

Under no circumstances bring it up on an interface that faces the same subnet as the director's external interface. Otherwise an external router may cache the MAC address of the wrong machine and all traffic will go to the wrong place.

Now the packets should go where they need to. By the way, the director itself can also be a real server, as we see in our example.

Single point of failure

In this setup the director itself is an obvious single point of failure: if it goes down, the whole service goes down with it.

Not a big deal: ipvsadm can start a daemon that synchronizes the tables of current connections between several directors. One of them will obviously be the master, the rest will be backups.
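As a rough sketch, the synchronization daemon is started with the --start-daemon option, which takes the role (ipvsadm calls the two roles master and backup), and --mcast-interface, which names the interface used for the sync traffic. The interface eth0, the `run` wrapper and the function name are assumptions for illustration.

```shell
#!/bin/sh
# Dry-run wrapper: prints the command instead of executing it.
# Change the body to "$@" and run as root to start the daemon for real.
run() { printf '%s\n' "$*"; }

# usage: start_sync ROLE INTERFACE
#   ROLE:      "master" or "backup"
#   INTERFACE: interface that carries the multicast sync traffic
start_sync() {
    run ipvsadm --start-daemon "$1" --mcast-interface "$2"
}

start_sync master eth0   # on the active director
start_sync backup eth0   # on each standby director
```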

What is left for us to do? Move the VIP between directors when one of them fails. HA solutions such as heartbeat will help here.

Another task is monitoring the farm and taking failed servers out of it in time. The easiest way to do this is with weights.
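A minimal sketch of weight-based control: the -e option of ipvsadm edits an existing real server, so setting its weight to 0 drains it, and any other weight can be computed from whatever health or load figure you trust. The load-to-weight formula, the function names and the sample load value 2.5 are arbitrary assumptions; how the load figure is collected from each server is left out.

```shell
#!/bin/sh
# Dry-run wrapper: prints the command instead of executing it.
# Change the body to "$@" and run as root to apply the change for real.
run() { printf '%s\n' "$*"; }

# Map a load average to a weight between 1 and 10:
# the busier the server, the lower its weight. The formula is arbitrary.
weight_from_load() {
    awk -v load="$1" 'BEGIN {
        w = int(10 - load * 2)
        if (w < 1) w = 1
        print w
    }'
}

# usage: set_weight VIP:port RIP:port WEIGHT
set_weight() {
    run ipvsadm -e -t "$1" -r "$2" -w "$3"
}

# Weight 0 drains a server: nothing new is scheduled to it.
set_weight 192.168.100.100:80 192.168.100.3:80 0
# Load-based weight for another server, here for a sample load of 2.5.
set_weight 192.168.100.100:80 192.168.100.2:80 "$(weight_from_load 2.5)"
```

If a real server was added with a forwarding method other than the default -g, it is safest to repeat that flag (-m or -i) in the edit command as well.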

Many solutions, tailored to different kinds of services, have been written to address both problems. Personally, I liked Ultramonkey best; it comes from the LVS authors themselves.

RedHat has its own tool called Piranha. It has its own set of daemons for monitoring the farm and the directors, and even a somewhat clumsy web interface, but it does not support more than 2 directors in an HA group. I do not know why.

Ultramonkey

So, Ultramonkey consists of 2 main packages: heartbeat and ldirectord. The first provides HA for the directors, including bringing up and moving VIPs (generally speaking, it can be used for more than just directors), while the second maintains the ipvsadm configuration and monitors the state of the farm.

For heartbeat you need to write 3 config files. The stock versions come with detailed comments, so I will just give examples.

authkeys

auth 2
#1 crc
2 sha1 mysecretpass
#3 md5 Hello!

Here we configure authentication between the daemons on different machines.

haresources

director1.foo.com IPaddr::192.168.100.100/24/eth0 ldirectord

This file says which resource we create HA for and which services are started when it moves. In other words: bring up 192.168.100.100/24 on interface eth0 and start ldirectord.

ha.cf

keepalive 1
deadtime 20
udpport 694
udp eth0
node director1.foo.com # <-- must match uname -n !
node director2.foo.com #

Here we say how the HA cluster is kept alive and who actually belongs to it.

ldirectord has just one config file, and it is all fairly clear as well.

checktimeout=10
checkinterval=2
autoreload=yes
logfile="/var/log/ldirectord.log"
# Virtual Service for HTTP
virtual=192.168.100.100:80
        real=192.168.100.2:80 gate
        real=192.168.100.3:80 gate
        service=http
        request="alive.html"
        receive="I'm alive!"
        scheduler=wlc
        protocol=tcp
        checktype=negotiate

That is, every 2 seconds ldirectord fetches alive.html over HTTP, and if the file does not contain the line “I'm alive!” or, worse, the server does not respond, the daemon immediately sets the server's weight to 0 and it is excluded from further forwarding.

You can also set weights yourself, for example by running a cron job over the farm and computing them from the current loadavg and so on. Nobody takes direct access to ipvsadm away from you.

Applicability

Although all over the Internet LVS is mostly discussed in the context of balancing web servers, it can in fact balance a great many services. If a protocol uses a single port and keeps no state, it can be balanced without any trouble. Things are more complicated with multi-port protocols such as samba and ftp, but there are solutions for those too.

By the way, the real servers do not have to run Linux. Almost any OS with a TCP/IP stack will do.

There is also so-called L7 routing, which works not with ports but with knowledge of high-level protocols. Our Japanese colleagues are developing Ultramonkey-L7 for this purpose. However, we will not go into it here.

What else to read?

- LVS-HOWTO - required reading

- LVS-mini-HOWTO - the short version

- RedHat Piranha

- Ultramonkey

What balancing solutions do you use?

I would be glad to hear any remarks and comments.