SpiNNaker - Neural Computer

After reading the recently published article “An Overview of Modern Large-Scale Brain Activity Modeling Projects,” I would like to talk about another similar project, carried out at the University of Manchester in the UK by a research team led by Professor Steve Furber, one of the designers of the BBC Microcomputer and of the 32-bit ARM RISC microprocessor.

An excursion into the history of research at the University of Manchester

The university has an outstanding record in computer development and has played a revolutionary role in the development of computer science and artificial intelligence. The world's first stored-program electronic computer, the SSEM (Small-Scale Experimental Machine), also known as “Baby,” was built there in 1948 by Frederic Williams and Tom Kilburn. Its distinguishing feature was that data and programs were stored together in the machine's memory, in line with the von Neumann architecture. The machine was built not so much for computation as to study a new kind of computer memory based on cathode ray tubes (the “Williams tubes”).

The success of the experiment led to the construction of the Manchester Mark 1 the following year. It was already equipped with a paper-tape reader and punch, and data could be transferred to and from its magnetic drum without stopping the running program. The Mark 1 was also the first computer in the world to use index registers. Two years later came the Ferranti Mark 1, the world's first commercially available general-purpose computer. These machines became the forerunners of almost all modern computers.

Alan Turing, one of the founders of computer science and artificial intelligence, was directly involved in the work on the Manchester computers. Turing believed that computers would eventually be able to think like human beings. In his 1950 article “Computing Machinery and Intelligence” he proposed a thought experiment, later known as the Turing test, for assessing whether a machine can think: can a person conversing with an unseen interlocutor determine whether they are communicating with another person or with an artificial device?

Project Motives

The question posed by the Turing test, and the approach to modeling thinking proposed in it, remain unresolved and the subject of heated scientific debate. The main shortcomings that distinguish modern computers from the brains of living creatures are their inability to learn and their vulnerability to hardware failures: the whole system goes down when a single component breaks. In addition, modern von Neumann computers lack a number of capabilities that come easily to the human brain, such as associative memory and the ability to classify and recognize objects, cluster data or make forecasts.

Another reason for the popularity of such projects is that microprocessor performance is approaching its physical limits. Industry leaders acknowledge that further increases in the number of transistors will not significantly increase processor speed, so the logical next step is to increase the number of processors on each chip, in other words, to take the path of massive parallelism.

The dilemma is whether to make each microprocessor as powerful as possible and only then fit as many of them as possible into the final product, or to use simplified processors that can perform only basic arithmetic operations. If a task can be divided into an arbitrary number of largely independent subtasks, a system built on the latter principle wins.
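Amdahl's law is a standard way to make this trade-off concrete, although the article itself does not use it. Suppose a fraction p of the work can be spread perfectly over N processors, each of relative speed s. The speedup over a single unit-speed processor is then 1 / ((1 - p)/s + p/(N*s)). The sketch below uses this formula to compare a few fast cores with many simple ones. The core counts and speeds are illustrative assumptions, not figures from the project.

```python
def amdahl_speedup(p: float, n_cores: int, core_speed: float = 1.0) -> float:
    """Speedup over one unit-speed core when a fraction p of the work is
    spread perfectly over n_cores cores of relative speed core_speed."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    serial_time = (1.0 - p) / core_speed        # runs on a single core
    parallel_time = p / (n_cores * core_speed)  # split across all cores
    return 1.0 / (serial_time + parallel_time)


# Two hypothetical designs: 4 powerful cores, each 8x faster than a simple
# core, versus 1,000 simple cores.
for p in (0.5, 0.9, 0.999):
    fast = amdahl_speedup(p, n_cores=4, core_speed=8.0)
    simple = amdahl_speedup(p, n_cores=1000, core_speed=1.0)
    print(f"p={p}: 4 fast cores {fast:.1f}x, 1000 simple cores {simple:.1f}x")
```

With these assumed numbers, the simple cores win only in the last case, where 99.9% of the work is parallel. This matches the condition above: the task must split into largely independent subtasks.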

SpiNNaker Project Goals

These motives drive the research team working on a project called SpiNNaker (Spiking Neural Network Architecture). The aim of the project is to create a highly fault-tolerant device, which is achieved by dividing its processing power among a large number of parts, each performing a very simple subtask. If any of these parts fails, the system continues to function correctly: it reconfigures itself to exclude the unreliable node, redistributes that node's responsibilities to its neighbors and finds alternative “synaptic” connections for signal transmission. Something similar happens in the human brain: by some estimates a person loses about one neuron every second, yet this has little effect on their ability to think.
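The article does not describe how the work of a failed node is reassigned. The following is a minimal sketch under two assumptions: a hypothetical placement table that maps each core to the neurons it simulates, and a fixed per-core capacity. The failed core's neurons are handed one by one to the least-loaded surviving core. For simplicity, every surviving core is treated as a neighbor.

```python
from typing import Dict, List


def redistribute(placement: Dict[int, List[int]], failed: int,
                 capacity: int) -> Dict[int, List[int]]:
    """Return a new placement (core id -> neuron ids) without the failed core,
    its neurons moved one by one to the currently least-loaded survivor."""
    survivors = {core: list(neurons) for core, neurons in placement.items()
                 if core != failed}
    orphans = placement.get(failed, [])
    if orphans and not survivors:
        raise RuntimeError("no surviving cores to take over the work")
    for neuron in orphans:
        # least-loaded core first; ties go to the lowest core id
        target = min(survivors, key=lambda core: (len(survivors[core]), core))
        if len(survivors[target]) >= capacity:
            raise RuntimeError("not enough spare capacity on the surviving cores")
        survivors[target].append(neuron)
    return survivors


cores = {0: [0, 1, 2], 1: [3, 4], 2: [5]}
print(redistribute(cores, failed=0, capacity=4))
# {1: [3, 4, 1], 2: [5, 0, 2]}
```

The capacity check reflects the fact that spare capacity is finite. If the survivors cannot absorb the extra load, the sketch reports an error instead of silently overloading a core.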

Another advantage of the system is its ability to learn on its own, a hallmark of neural networks. Such a machine should therefore help scientists study the processes occurring in the cerebral cortex and better understand the complex interactions between brain cells.
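The article does not name a learning rule. One rule widely used with spiking neural networks is pair-based spike-timing-dependent plasticity (STDP). Under STDP, a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened otherwise, with an effect that decays exponentially with the time gap. The sketch below assumes this rule. The amplitudes and time constants are illustrative values, not parameters of SpiNNaker.

```python
import math

A_PLUS, A_MINUS = 0.01, 0.012      # illustrative learning amplitudes
TAU_PLUS, TAU_MINUS = 20.0, 20.0   # illustrative time constants, ms


def stdp_delta(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair (spike times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # presynaptic spike came first: strengthen the synapse
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:   # postsynaptic spike came first: weaken the synapse
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def update_weight(w: float, t_pre: float, t_post: float, w_max: float = 1.0) -> float:
    """Apply the STDP change and keep the weight within [0, w_max]."""
    return min(w_max, max(0.0, w + stdp_delta(t_pre, t_post)))


w = update_weight(0.5, t_pre=10.0, t_post=15.0)
print(round(w, 4))   # 0.5078
```

The rule is local: each synapse needs only the spike times of its two neurons. This is why rules of this kind suit hardware in which many simple processors each hold their own part of the network.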

In the first stage of the project, the plan is to simulate up to 500,000 neurons in real time, which is on the order of the number of neurons in a bee's brain. In the final stage, the device is expected to simulate up to a billion neurons, which is about 1% of the roughly 86 billion neurons in the human brain.

System architecture

The design approach is noteworthy. The proposed device consists of a regular array of 50 chips. Each chip contains 20 ARM968 processor cores: 19 of them are dedicated to simulating neurons, and the remaining one manages the chip and keeps a log of its activity.

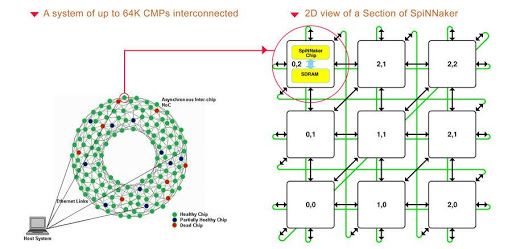

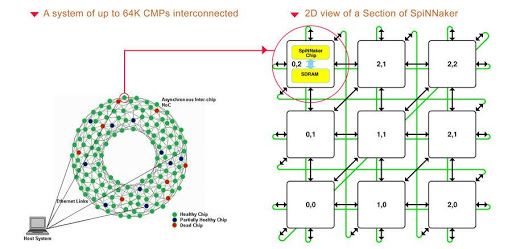

Fig. 1. System diagram

Each chip is a complete subsystem. It has its own router, local memory for each processor (32 KB for instructions and 64 KB for data), direct memory access (DMA) to external memory, and its own messaging system with a throughput of 8 Gbps. In addition, each chip has 1 Gbit (128 MB) of external SDRAM that stores the network topology. According to the developers, this balance between processing power, local memory and communication bandwidth makes it possible to simulate up to 1,000 neurons on each processor in real time.
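Taking the figures above at face value, a back-of-the-envelope calculation shows how they relate to the project's targets. It ignores routing, memory and synapse limits, which in practice may lower the usable count.

```python
CHIPS = 50                                   # size of the array in the article
CORES_PER_CHIP = 20                          # ARM968 cores per chip
NEURON_CORES_PER_CHIP = CORES_PER_CHIP - 1   # one core manages the chip
NEURONS_PER_CORE = 1_000                     # real-time figure quoted above


def neurons_per_chip() -> int:
    return NEURON_CORES_PER_CHIP * NEURONS_PER_CORE


def chips_needed(target_neurons: int) -> int:
    """Smallest whole number of chips able to hold target_neurons (ceiling division)."""
    per_chip = neurons_per_chip()
    return (target_neurons + per_chip - 1) // per_chip


print(neurons_per_chip())               # 19000
print(CHIPS * neurons_per_chip())       # 950000
print(chips_needed(500_000))            # 27
print(chips_needed(1_000_000_000))      # 52632
```

On these numbers, the 50-chip array would already exceed the first-stage target of 500,000 neurons. A billion neurons would call for roughly 53,000 chips of this kind.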

A distinctive feature of the system is the absence of global synchronization. When a neuron reaches a certain internal state, it sends a signal (a spike) to its postsynaptic neurons. Each of these, depending on its own internal state, in turn does or does not send a new signal to the next neurons.
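The article does not specify the neuron model. The following sketch assumes a simple non-leaky integrate-and-fire neuron and a hypothetical connectivity table. It shows the event-driven idea in a single process: a priority queue of spike events stands in for the messages that the real hardware exchanges asynchronously between cores. Nothing is computed between spikes, because a neuron's state changes only when a spike reaches it.

```python
import heapq
from typing import Dict, List, Tuple

Synapses = Dict[int, List[Tuple[int, float]]]  # neuron -> [(target, weight)]


def simulate(synapses: Synapses, input_spikes: List[Tuple[float, int]],
             threshold: float = 1.0, delay: float = 1.0,
             t_end: float = 100.0) -> List[Tuple[float, int]]:
    """Event-driven, non-leaky integrate-and-fire network.

    input_spikes are (time, neuron) pairs injected from outside. When a spike
    arrives, each target adds the synaptic weight to its potential; a target
    that reaches the threshold is reset and fires `delay` time units later.
    Returns every (time, neuron) spike in time order.
    """
    potential: Dict[int, float] = {}
    queue = list(input_spikes)
    heapq.heapify(queue)          # pending spikes, earliest first
    fired: List[Tuple[float, int]] = []
    while queue:
        t, neuron = heapq.heappop(queue)
        if t > t_end:
            break
        fired.append((t, neuron))
        for target, weight in synapses.get(neuron, []):
            potential[target] = potential.get(target, 0.0) + weight
            if potential[target] >= threshold:
                potential[target] = 0.0
                heapq.heappush(queue, (t + delay, target))
    return fired


net = {0: [(1, 0.6), (2, 1.0)], 1: [(3, 1.0)], 2: [(1, 0.6)]}
print(simulate(net, [(0.0, 0)]))
# [(0.0, 0), (1.0, 2), (2.0, 1), (3.0, 3)]
```

Neuron 1 fires only after input from both neuron 0 and neuron 2 has accumulated. This is the "depending on its internal state" behavior described above.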

Another notable feature is that the neural network can be reconfigured while the machine is running. This makes it possible to isolate faulty nodes, to form new connections between neurons and perhaps even to add new neurons as needed.
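The article does not describe the routing mechanism. The following is a minimal sketch that assumes the chips form a plain rectangular grid, each chip linked to its four nearest neighbors. This is a simplification, and the names are hypothetical. A breadth-first search finds the shortest path between two chips that avoids the faulty ones.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Node = Tuple[int, int]  # (x, y) position of a chip in the grid


def route(width: int, height: int, src: Node, dst: Node,
          faulty: Set[Node]) -> Optional[List[Node]]:
    """Shortest chip-to-chip path on a width x height grid that avoids
    faulty chips, found by breadth-first search; None if no path exists."""
    if src in faulty or dst in faulty:
        return None
    previous: Dict[Node, Optional[Node]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # walk back through the predecessors to rebuild the path
            path: List[Node] = []
            step: Optional[Node] = node
            while step is not None:
                path.append(step)
                step = previous[step]
            return path[::-1]
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if inside and nxt not in faulty and nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


print(route(3, 3, (0, 0), (2, 0), faulty={(1, 0)}))
# [(0, 0), (0, 1), (1, 1), (2, 1), (2, 0)]
```

When the direct link through chip (1, 0) is unavailable, the signal takes a detour of two extra hops. If no healthy path exists, the function returns None.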

Before the simulation starts, a configuration file is loaded into the system. It specifies where the neurons are placed and the initial state of the network. Loading data in this way requires a simple operating system on each chip. During operation, the machine needs a constant supply of input data, and the results are sent to an output device.
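The article does not give the format of the configuration file. For illustration only, assume a hypothetical JSON file that lists each neuron's chip coordinates and core, together with the synaptic connections. Loading it then amounts to grouping neurons by core and building a table of each neuron's outgoing connections.

```python
import json
from collections import defaultdict
from typing import Dict, List, Tuple

CORES_PER_CHIP = 20
MONITOR_CORE = 0   # assumption: core 0 manages the chip, so it hosts no neurons

CONFIG = """
{
  "neurons": [
    {"id": 0, "chip": [0, 0], "core": 1},
    {"id": 1, "chip": [0, 0], "core": 2},
    {"id": 2, "chip": [1, 0], "core": 1}
  ],
  "synapses": [[0, 1, 0.5], [1, 2, 0.9]]
}
"""

Core = Tuple[int, int, int]          # (chip x, chip y, core index)
Outgoing = Dict[int, List[Tuple[int, float]]]


def load_config(text: str) -> Tuple[Dict[Core, List[int]], Outgoing]:
    """Parse the configuration into a placement table and an outgoing-synapse table."""
    cfg = json.loads(text)
    placement: Dict[Core, List[int]] = defaultdict(list)
    for neuron in cfg["neurons"]:
        x, y = neuron["chip"]
        core = neuron["core"]
        if core == MONITOR_CORE or not 0 <= core < CORES_PER_CHIP:
            raise ValueError(f"neuron {neuron['id']} placed on invalid core {core}")
        placement[(x, y, core)].append(neuron["id"])

    known = {neuron["id"] for neuron in cfg["neurons"]}
    outgoing: Outgoing = defaultdict(list)
    for pre, post, weight in cfg["synapses"]:
        if pre not in known or post not in known:
            raise ValueError(f"synapse {pre}->{post} refers to an unknown neuron")
        outgoing[pre].append((post, weight))
    return dict(placement), dict(outgoing)


placement, outgoing = load_config(CONFIG)
print(placement)   # {(0, 0, 1): [0], (0, 0, 2): [1], (1, 0, 1): [2]}
print(outgoing)    # {0: [(1, 0.5)], 1: [(2, 0.9)]}
```

Validating the file before anything is sent to the chips keeps a bad placement from being discovered only after the simulation has started.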

Fig. 2. Test chip

A system for monitoring the processes inside the machine is also needed, to support its operation, correct errors and reconfigure the system manually. For this purpose, the machine can be stopped manually while the current state of the network is preserved. After any necessary changes, a new configuration can then be loaded.
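Below is a minimal sketch of the stop, edit and reload cycle described above, using a toy in-memory stand-in for the machine. The class and method names are hypothetical and say nothing about the real system software.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Synapses = Dict[int, List[Tuple[int, float]]]


@dataclass
class NetworkState:
    potentials: Dict[int, float] = field(default_factory=dict)
    synapses: Synapses = field(default_factory=dict)


class Machine:
    """Toy stand-in for the running machine; all names are hypothetical."""

    def __init__(self, state: NetworkState) -> None:
        self.state = state
        self.running = True

    def pause(self) -> NetworkState:
        """Stop the machine and return an independent copy of its state."""
        self.running = False
        return copy.deepcopy(self.state)

    def load_and_resume(self, state: NetworkState) -> None:
        """Load a (possibly edited) configuration and restart the machine."""
        if self.running:
            raise RuntimeError("pause the machine before loading a new configuration")
        self.state = copy.deepcopy(state)
        self.running = True


machine = Machine(NetworkState({0: 0.3, 1: 0.7}, {0: [(1, 0.5)]}))
snapshot = machine.pause()            # network state is preserved
snapshot.synapses[1] = [(0, 0.2)]     # manual change, e.g. a new connection
machine.load_and_resume(snapshot)
```

The snapshot is a deep copy, so editing it cannot disturb the paused network until the new configuration is explicitly loaded.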

Prospects

Although the machine is primarily intended for modeling neural networks, it can equally be used in other applications that require large computing power, such as protein folding, decryption or database search.
