xz - the power of LZMA compression, already in your console

Many people probably already know the xz compression/decompression utility, but even more of you don't. So I wrote this introductory post.

xz is a data compression format which, along with gzip and bzip2, is available among the usual GNU tools.

It uses the LZMA algorithm, the same one 7z uses, so many kinds of data, such as text and binary data that is not already compressed, can be compressed more tightly than with the standard tools mentioned above.
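To see the difference for yourself, here is a minimal sketch using Python's standard-library gzip, bz2 and lzma modules (lzma wraps liblzma, the library behind the xz tool). The input is synthetic: made-up log lines for text, and random bytes standing in for data that is already compressed. Exact sizes will vary, but xz should come out well ahead of gzip on the text, while no codec gains anything on the random bytes.

```python
import bz2
import gzip
import lzma
import os


def compressed_sizes(data: bytes) -> dict[str, int]:
    """Compress the same bytes with each codec and return the output sizes."""
    return {
        "gzip -9": len(gzip.compress(data, compresslevel=9)),
        "bzip2 -9": len(bz2.compress(data, compresslevel=9)),
        # lzma.compress() writes the .xz container by default; preset 6 is xz's default level.
        "xz -6": len(lzma.compress(data, preset=6)),
    }


# Synthetic text input: repetitive log lines with varying numbers (made-up sample data).
text = "".join(
    f"2009-11-17 12:{i % 60:02d}:{(i * 7) % 60:02d} INFO request id={i} "
    f"status={200 if i % 13 else 500}\n"
    for i in range(10000)
).encode()

# Random bytes stand in for already-compressed data: there is no redundancy left.
noise = os.urandom(len(text))

if __name__ == "__main__":
    for name, data in (("text", text), ("random", noise)):
        print(name, len(data), compressed_sizes(data))
```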

The new rpm 4.7.2 (used in Fedora 12) uses xz to compress the cpio payload of rpm packages.

Arch Linux even uses .tar.xz as its package format.

GNU tar gained the -J (--xz) option, which plays the same role as -z for gzip and -j for bzip2.
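Outside the shell, Python's tarfile module can produce the same kind of .tar.xz archive that tar -cJf writes. A minimal in-memory sketch; the helper names and the file names in the usage are hypothetical placeholders.

```python
import io
import tarfile

XZ_MAGIC = b"\xfd7zXZ\x00"  # first six bytes of every .xz stream


def make_tar_xz(files: dict[str, bytes], preset: int = 6) -> bytes:
    """Build a .tar.xz archive in memory, roughly what `tar -cJf` writes to disk."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:xz", preset=preset) as tar:
        for name, payload in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()


def read_tar_xz(blob: bytes) -> dict[str, bytes]:
    """Read the archive back, like `tar -xJf`; mode "r:xz" forces xz decompression."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:xz") as tar:
        return {m.name: tar.extractfile(m).read() for m in tar.getmembers() if m.isfile()}


if __name__ == "__main__":
    archive = make_tar_xz({"logs/app.log": b"hello xz\n" * 1000})
    print(archive[:6] == XZ_MAGIC, len(archive), list(read_tar_xz(archive)))
```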

Pros:

- high compression ratio.

Cons:

- high resource consumption:
  - CPU time (and therefore compression time);
  - memory (configurable, but still more than gzip and bzip2 need).

In particular, xz --best (aka -9) uses up to 700 MB of memory when compressing and 90 MB when decompressing!
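Most of that memory goes to the dictionary: in current xz releases presets -6 to -9 use 8, 16, 32 and 64 MiB dictionaries, which is why the cost is configurable. As a sketch with Python's lzma module, you can keep a preset's other settings and set the dictionary size yourself through a custom LZMA2 filter chain; the helper name, the 1 MiB value and the sample data are illustrative assumptions.

```python
import lzma

MiB = 1024 * 1024


def xz_compress(data: bytes, dict_size: int = 8 * MiB, preset: int = 6) -> bytes:
    """xz-compress `data`, taking defaults from `preset` but overriding the dictionary size.

    A smaller dictionary lowers memory use for both compression and
    decompression, usually at some cost in ratio on large inputs.
    """
    filters = [{"id": lzma.FILTER_LZMA2, "preset": preset, "dict_size": dict_size}]
    return lzma.compress(data, format=lzma.FORMAT_XZ, filters=filters)


if __name__ == "__main__":
    sample = b"".join(b"row %d,%d,%d\n" % (i, i * i, i % 97) for i in range(50000))
    for size in (1 * MiB, 8 * MiB):
        blob = xz_compress(sample, dict_size=size)
        # The .xz block header records the filter settings, so plain decompress() works.
        assert lzma.decompress(blob) == sample
        print(f"dictionary {size // MiB:>2} MiB -> {len(blob)} bytes")
```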

Features:

- excessive memory consumption is somewhat contained by estimating the available resources beforehand;
- integration into GNU tar;
- works with streams;
- optional progress indicator via --verbose.
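Working with streams means xz can sit in a pipe and compress data as it arrives. The sketch below does the same incrementally with Python's lzma.LZMACompressor and LZMADecompressor. The decompressor's memlimit argument illustrates the idea of refusing up front to exceed a memory budget; the xz tool itself offers comparable limits through its --memlimit options in current releases. The function names are hypothetical.

```python
import io
import lzma
from typing import BinaryIO, Iterable, Iterator


def xz_stream(chunks: Iterable[bytes], preset: int = 6) -> Iterator[bytes]:
    """Compress chunks as they arrive, like `producer | xz` in a shell pipe."""
    comp = lzma.LZMACompressor(format=lzma.FORMAT_XZ, preset=preset)
    for chunk in chunks:
        out = comp.compress(chunk)
        if out:  # the encoder buffers internally and often returns b"" for small chunks
            yield out
    yield comp.flush()  # remaining data plus the .xz index and stream footer


def unxz_stream(src: BinaryIO, memlimit: int, bufsize: int = 64 * 1024) -> Iterator[bytes]:
    """Decompress a .xz stream read from `src`, failing if more than `memlimit` bytes are needed."""
    dec = lzma.LZMADecompressor(format=lzma.FORMAT_XZ, memlimit=memlimit)
    while not dec.eof:
        block = src.read(bufsize)
        if not block:
            raise EOFError("input ended before the end of the .xz stream")
        yield dec.decompress(block)


if __name__ == "__main__":
    data = b"stream me " * 100_000
    packed = b"".join(xz_stream(data[i:i + 4096] for i in range(0, len(data), 4096)))
    restored = b"".join(unxz_stream(io.BytesIO(packed), memlimit=64 * 1024 * 1024))
    print(len(data), len(packed), restored == data)
    try:
        # Preset 6 uses an 8 MiB dictionary, so a 1 MiB budget is rejected at the block header.
        b"".join(unxz_stream(io.BytesIO(packed), memlimit=1024 * 1024))
    except lzma.LZMAError as exc:
        print("refused:", exc)
```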

I don't want to clutter an introductory post with charts, but there is no way around it:

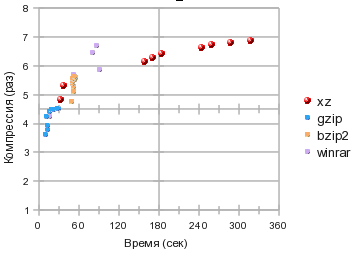

I did a simple run of gzip, bzip2 and xz, measuring compression ratio and time taken. WinRAR takes part as a guest (albeit drunk, under Wine, but it still showed excellent results).
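A run like this is easy to repeat. Below is a minimal sketch that uses Python's standard-library codecs instead of the command-line tools, so it measures the libraries rather than the CLI, and WinRAR is left out. The chosen levels and the synthetic input are assumptions, and timings will differ from machine to machine.

```python
import bz2
import gzip
import lzma
import time
from typing import Callable

Compressor = Callable[[bytes], bytes]

# Levels loosely mirror the CLI flags; which levels to test is an arbitrary choice.
CODECS: list[tuple[str, Compressor]] = (
    [(f"gzip -{lvl}", lambda d, lvl=lvl: gzip.compress(d, compresslevel=lvl)) for lvl in (1, 4, 9)]
    + [(f"bzip2 -{lvl}", lambda d, lvl=lvl: bz2.compress(d, compresslevel=lvl)) for lvl in (1, 9)]
    + [(f"xz -{lvl}", lambda d, lvl=lvl: lzma.compress(d, preset=lvl)) for lvl in (1, 2, 6)]
)


def benchmark(data: bytes) -> list[tuple[str, float, float, float]]:
    """Return (label, compression ratio, seconds, input MB/s) for every codec in CODECS."""
    rows = []
    for label, compress in CODECS:
        start = time.perf_counter()
        out = compress(data)
        elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
        rows.append((label, len(data) / len(out), elapsed, len(data) / 1e6 / elapsed))
    return rows


if __name__ == "__main__":
    sample = b"".join(b"%d;user%d;%s\n" % (i, i % 300, b"OK" if i % 5 else b"FAIL") for i in range(200_000))
    for label, ratio, secs, mb_s in benchmark(sample):
        print(f"{label:9} ratio {ratio:5.2f}  {secs:7.3f} s  {mb_s:7.1f} MB/s")
```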

We get four quadrants:

Bottom left: fast but weak compression: gzip and WinRAR at its fastest setting.

Top left: the best compression-to-time ratio: bzip2, with xz slightly better at compression levels 1 and 2.

Top right: the real press: xz, compressing very tightly.

Bottom right: nobody. Who needs an archiver that is both slow and weak?

Overall, though, a linear grid is a poor fit: how do we perceive time? In categories! For example, 10-20 seconds is quick, half a minute to a minute is average, and more than 2 minutes is long.

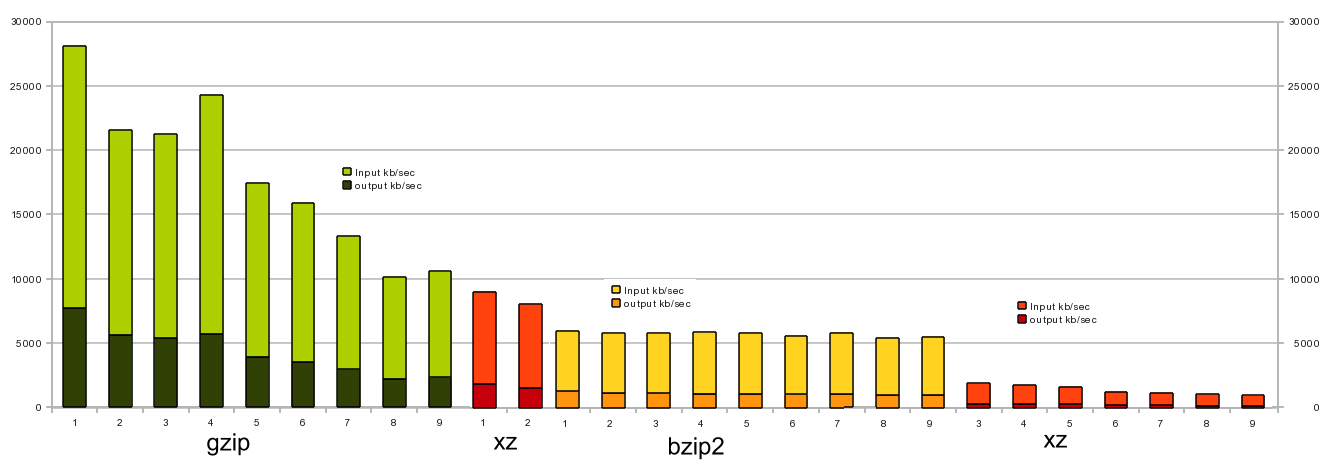

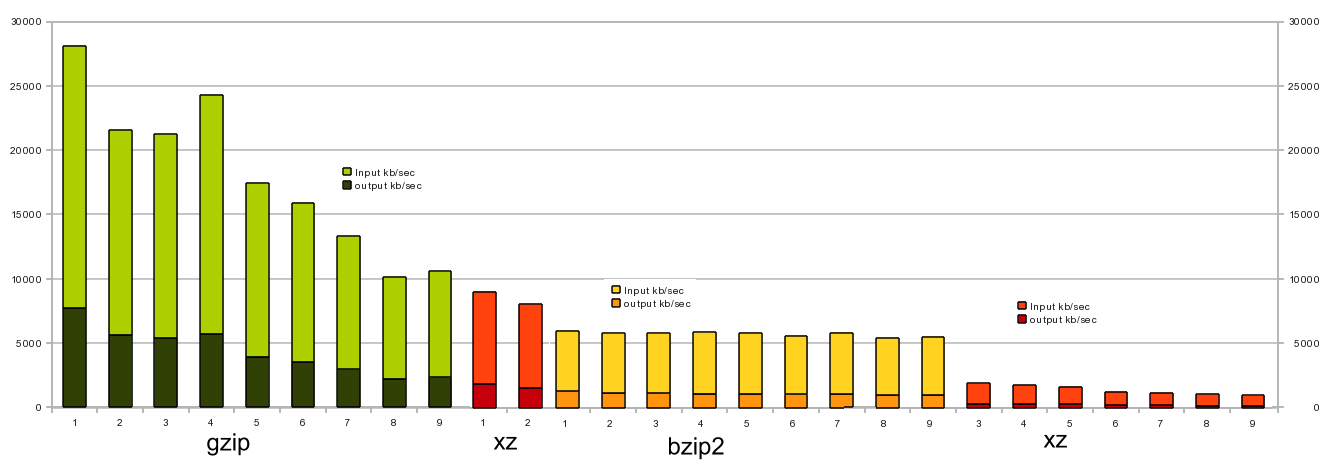

so the logarithmic scale is more obvious here: And if you evaluate them as stream compression, on my Core2Duo E6750 @ 2.66GHz, then we have such a graph:

That is, using gzip -1 or gzip -4 as a pipeline compressor, you can push up to 25 MB/s of uncompressed data through a 100 Mbit network (I checked several times: for some reason gzip -4 gives a bigger gain than -3 or -5).
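The arithmetic behind such numbers: a 100 Mbit/s link carries 12.5 MB/s, so pushing 25 MB/s of original data needs roughly a 2:1 ratio and a compressor that keeps up with 25 MB/s. A small sketch of that model, assuming decompression is never the bottleneck; the function names are hypothetical and the speeds and ratios used are illustrative, not measurements from the author's machine.

```python
def effective_rate_mb_s(link_mbit: float, ratio: float, compressor_mb_s: float) -> float:
    """Uncompressed MB/s that a `compress | network | decompress` pipeline can sustain.

    The link carries compressed bytes, so it moves link/8 * ratio MB/s of original
    data; the compressor caps throughput at its own speed.
    """
    link_mb_s = link_mbit / 8  # megabits per second -> megabytes per second
    return min(compressor_mb_s, link_mb_s * ratio)


def worth_compressing(link_mbit: float, ratio: float, compressor_mb_s: float) -> bool:
    """Compression pays off only if the pipeline beats sending the raw data."""
    return effective_rate_mb_s(link_mbit, ratio, compressor_mb_s) > link_mbit / 8


if __name__ == "__main__":
    # Hypothetical figures: 2:1 ratio, compressor running at 40 MB/s.
    print(effective_rate_mb_s(100, 2.0, 40.0))  # -> 25.0
```

Since a ratio above 1 always helps on the network side, the deciding factor is whether the compressor is faster than the raw link: a slow, strong compressor only pays off on correspondingly slow links.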

```sh
# Compress on the fly with gzip -1 and decompress on the remote host.
# ssh runs the quoted command remotely; no -c is needed (for ssh, -c selects a cipher).
gzip -1c < /some/data | ssh user@somehost "gzip -dc > /some/data"
```

xz can be used this way only on links slower than 8 Mbit/s.

The obvious conclusion (with the help of Captain Obvious):

Given its resource consumption, xz occupies the niche of compressor-archivers, where the compression ratio can matter a lot and there is enough CPU and time to spare: various backups and archives, distributions (rpm, tar.xz in Arch Linux). Or data that compresses very well and is not expected to change: logs, tables of text and numeric data in CSV or TSV.

PS However glad I am for xz, in terms of result per effort spent, WinRAR wins.