Localization and navigation in ROS using rtabmap

Good afternoon, dear readers. In my last article, I talked about two SLAM algorithms designed for depth cameras: rtabmap and RGBD-SLAM. There we only built a map of the area. In this article I will cover localization and navigation of the robot using the rtabmap algorithm. If you are interested, read on.

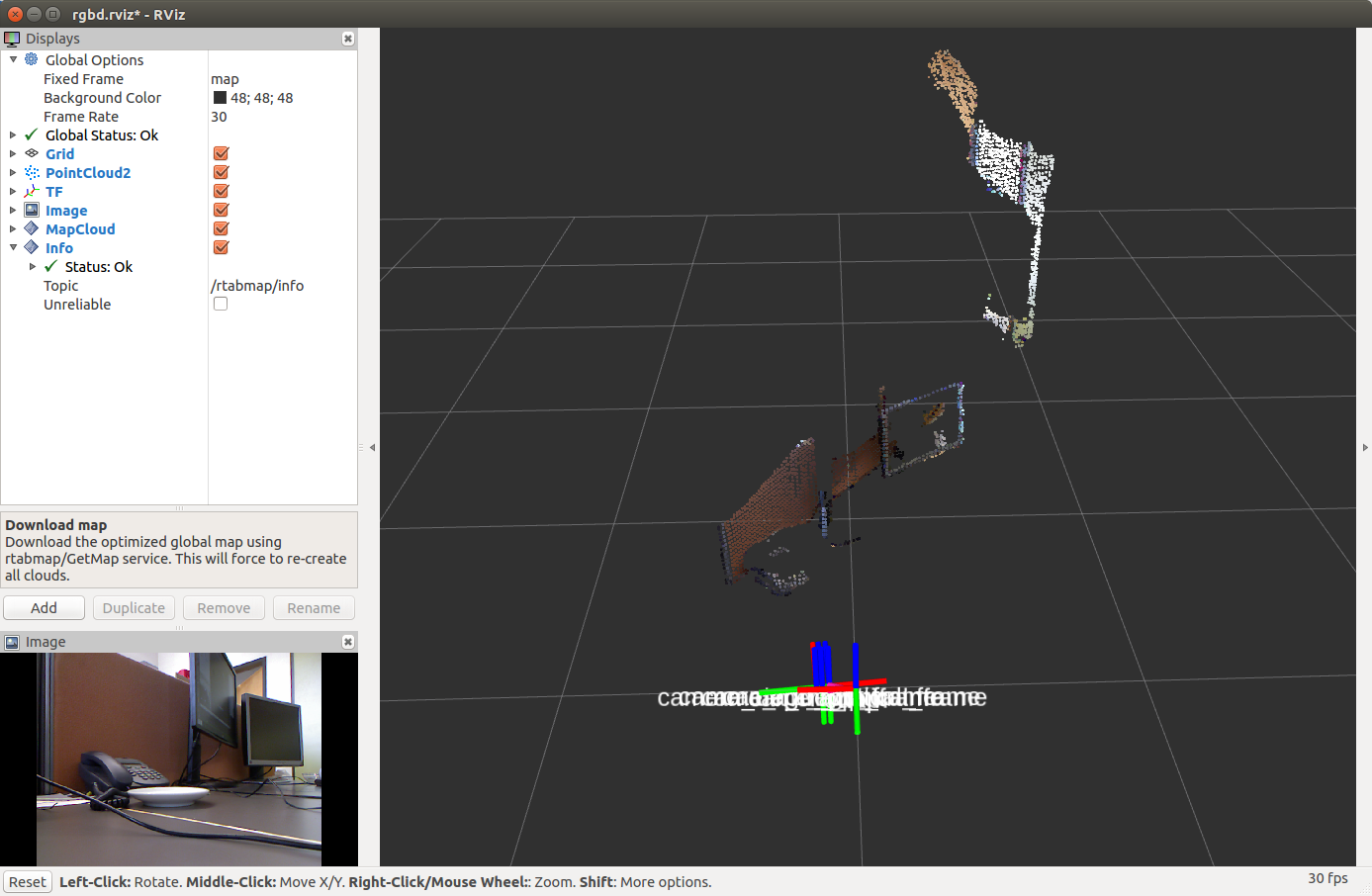

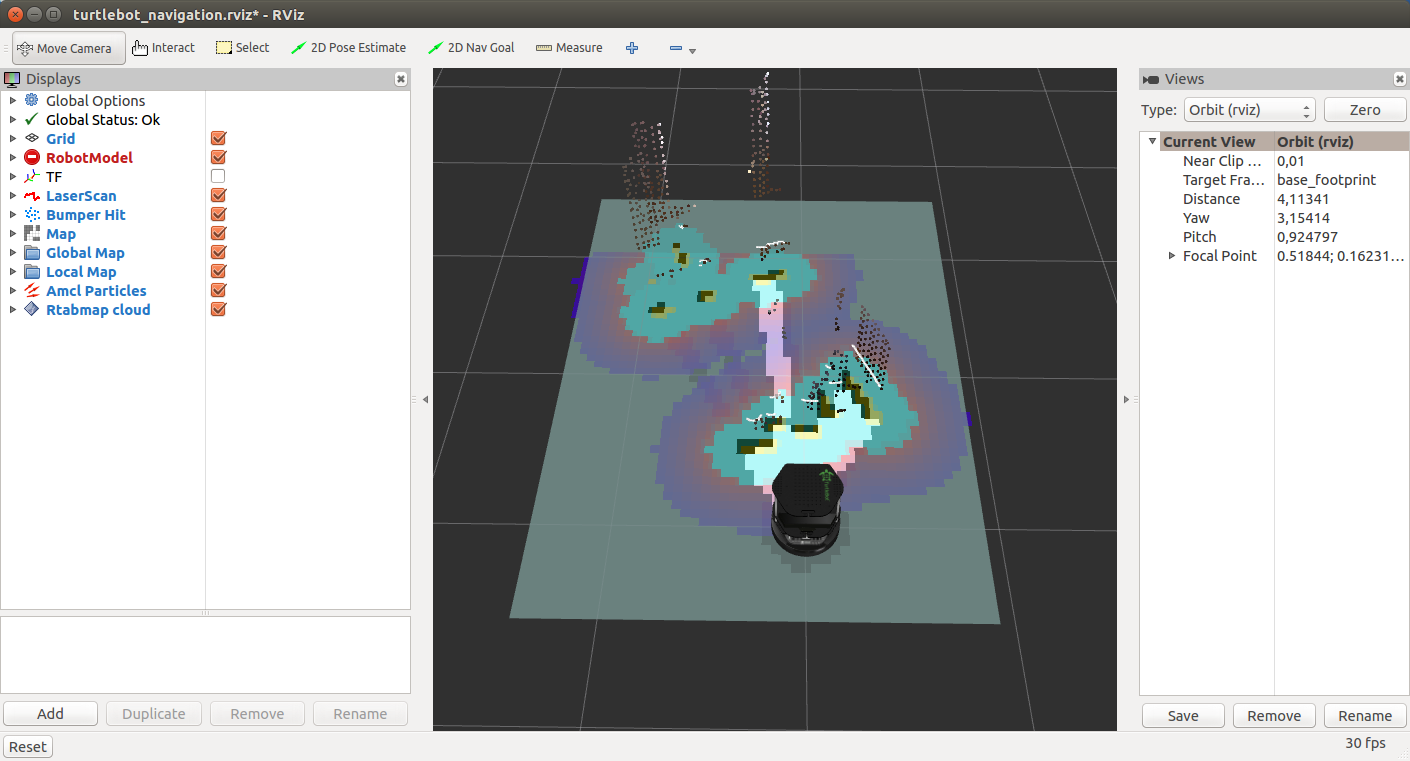

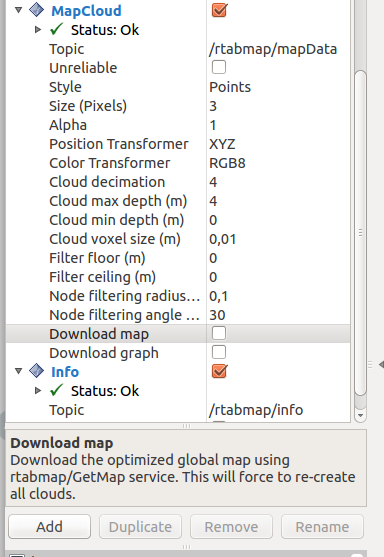

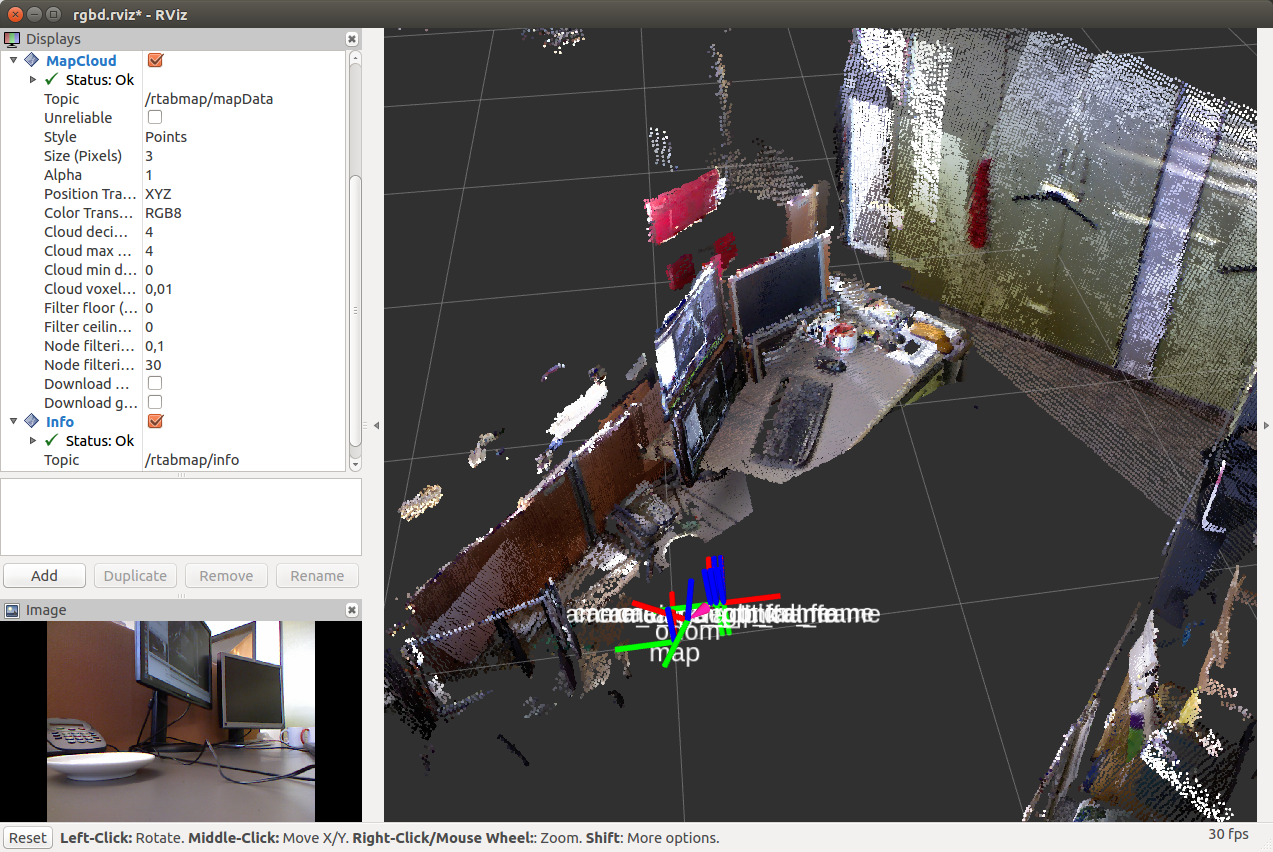

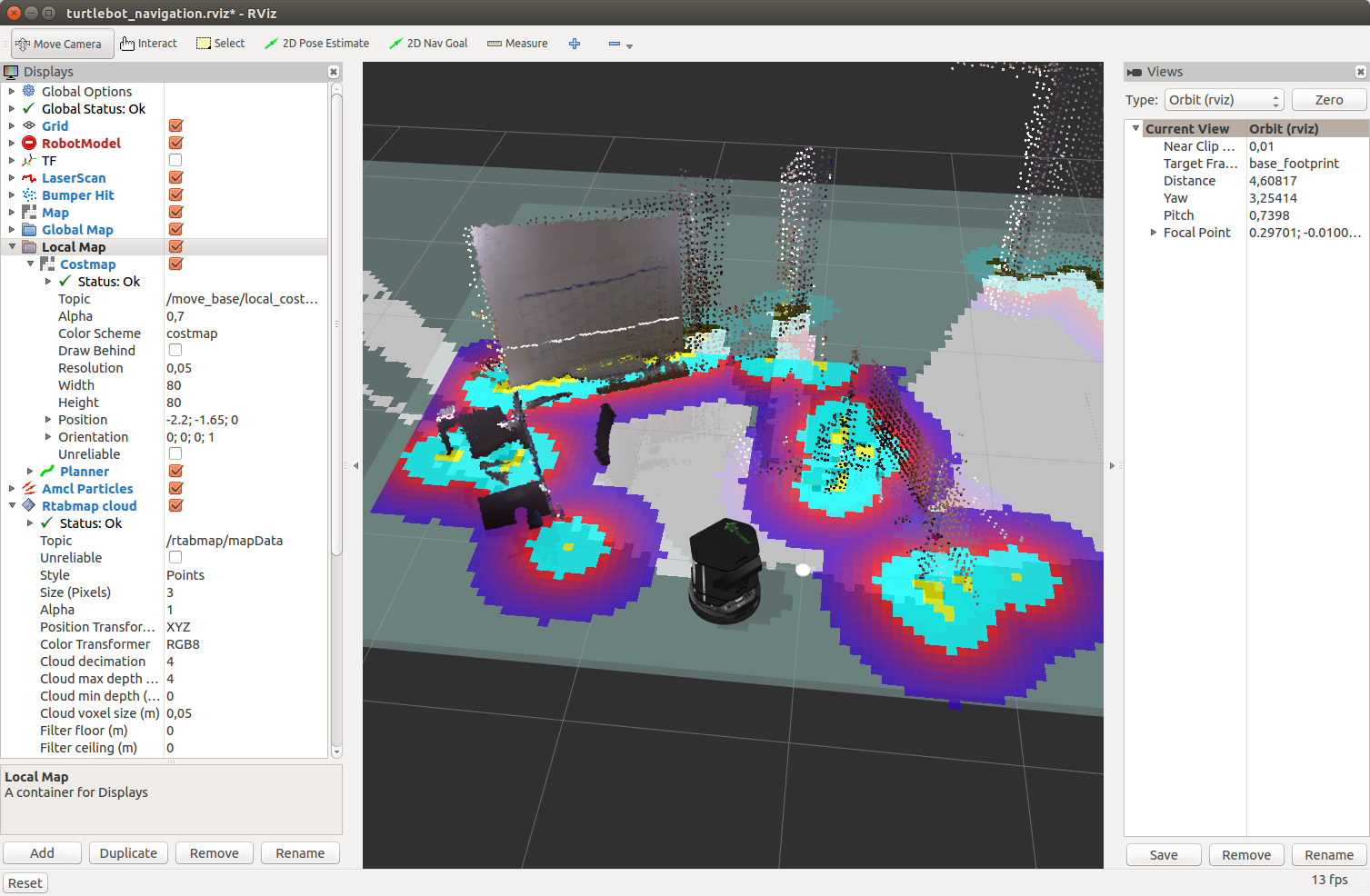

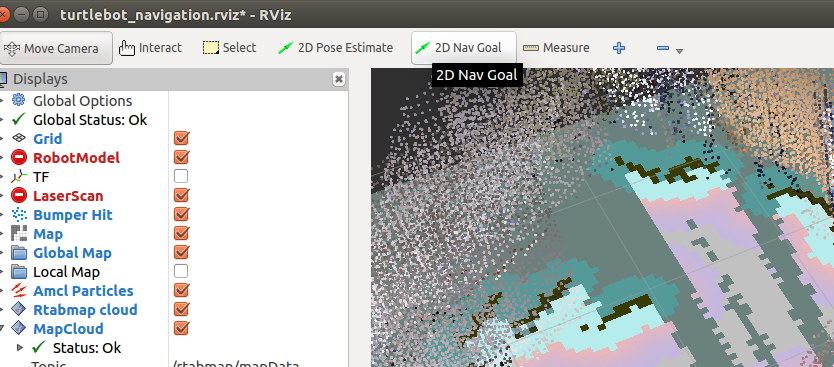

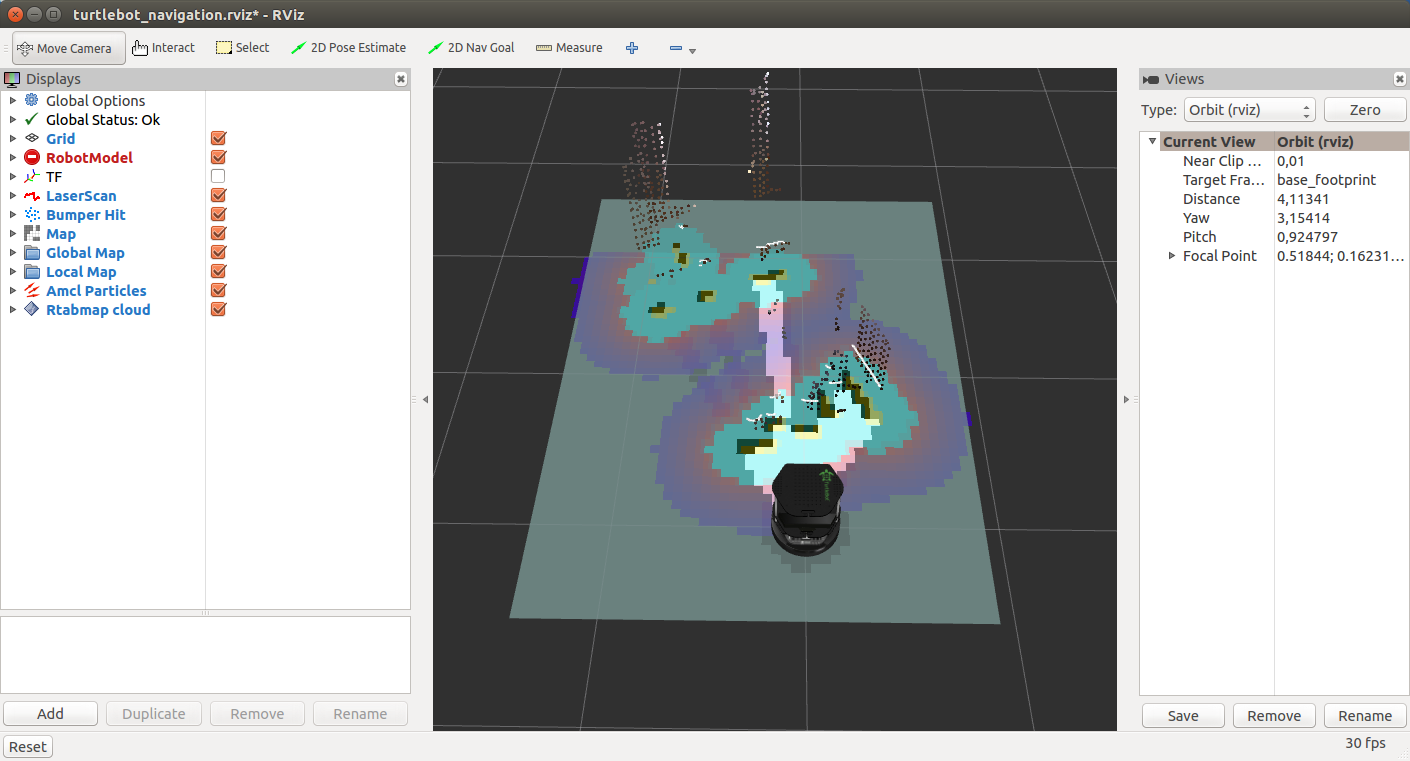

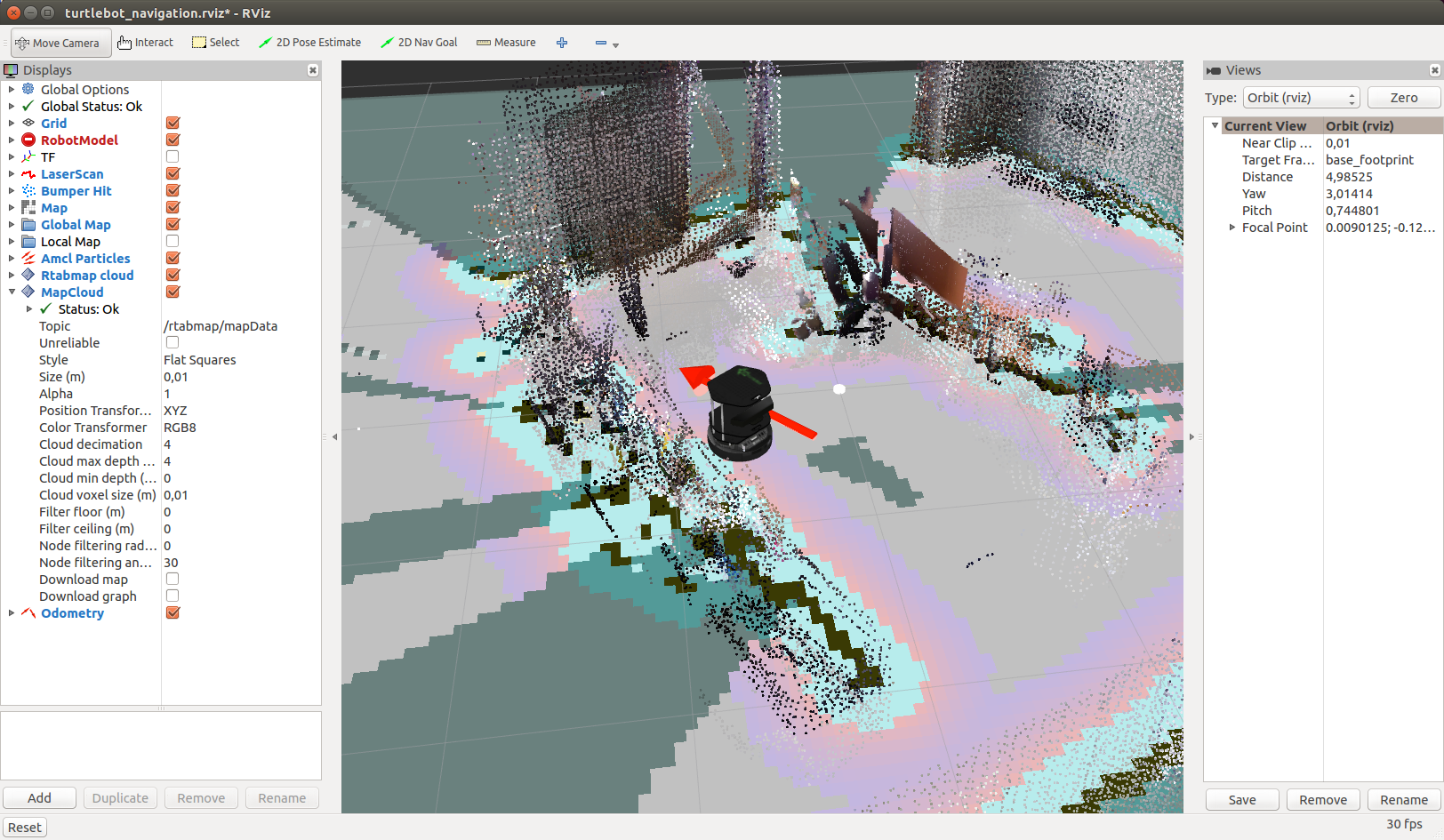

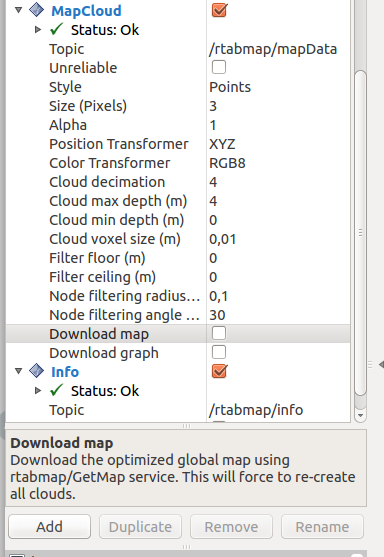

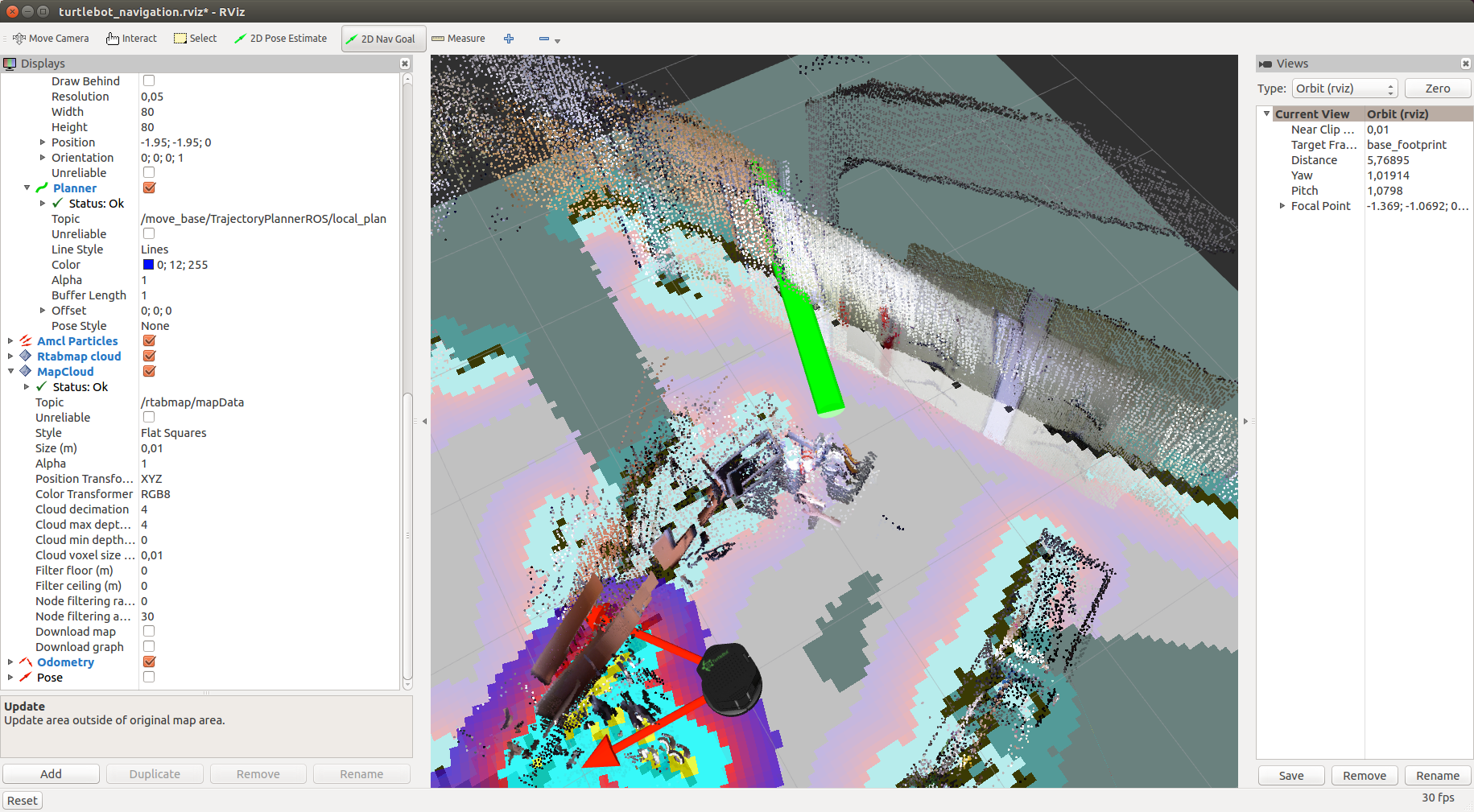

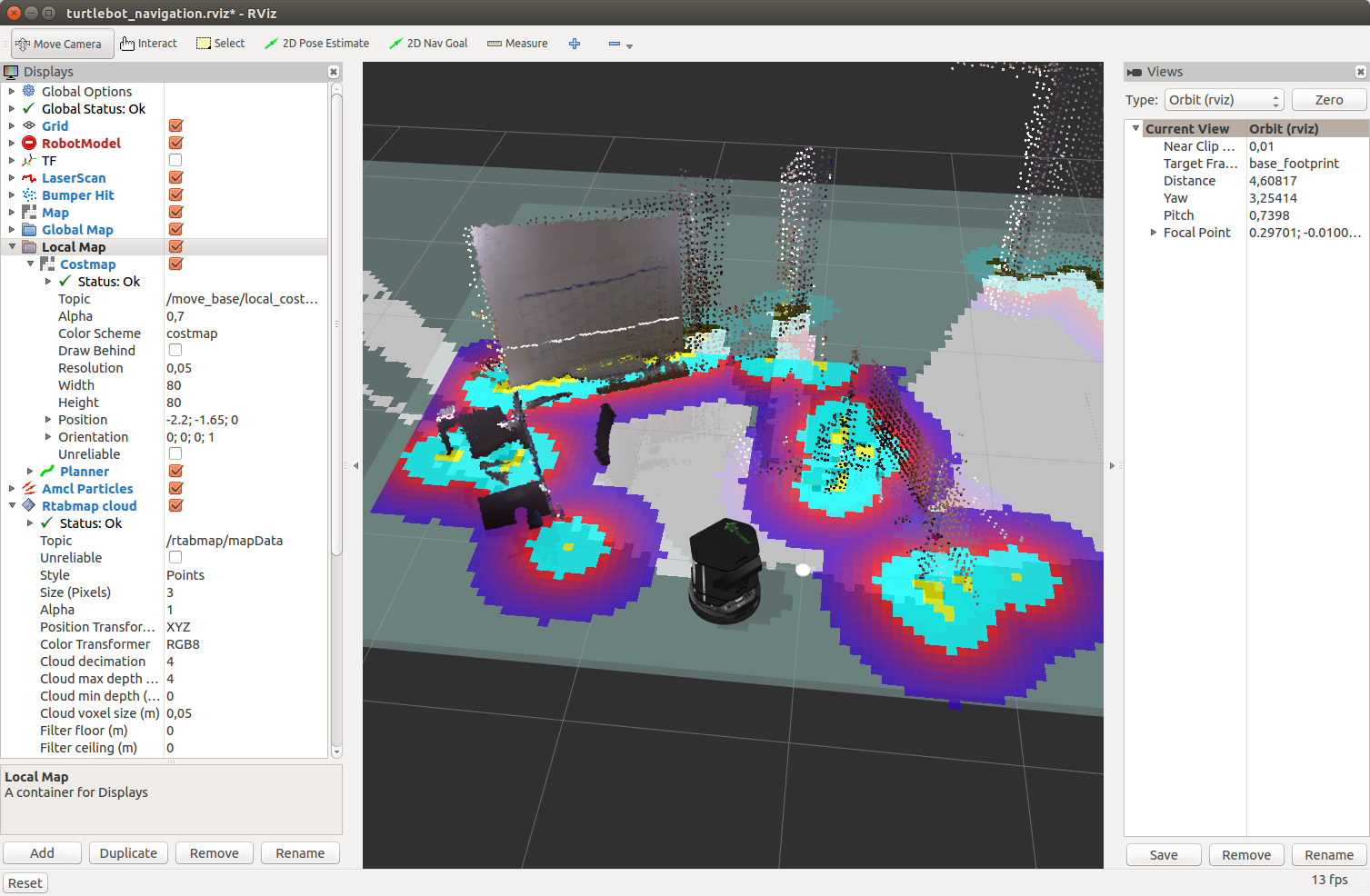

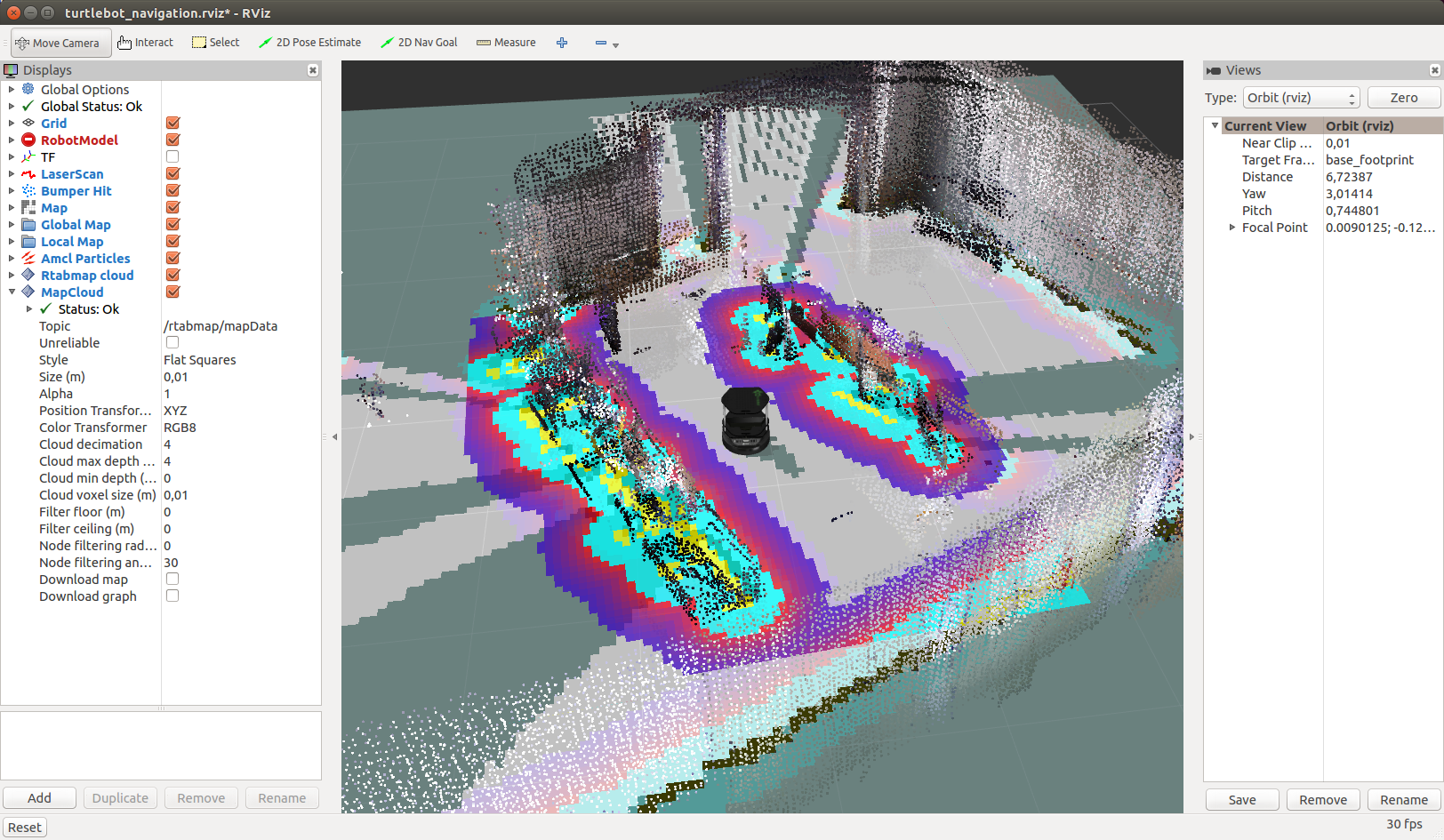

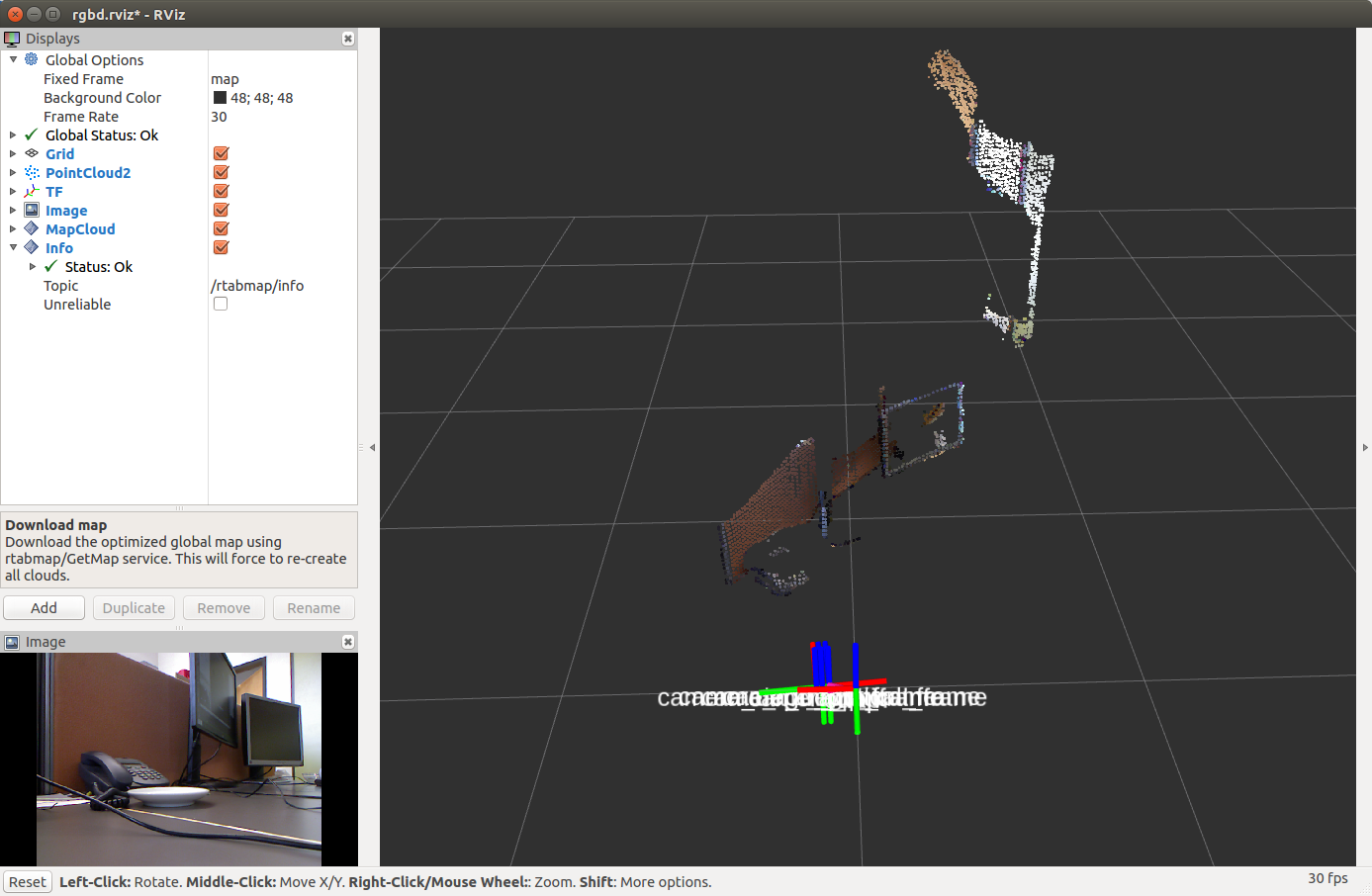

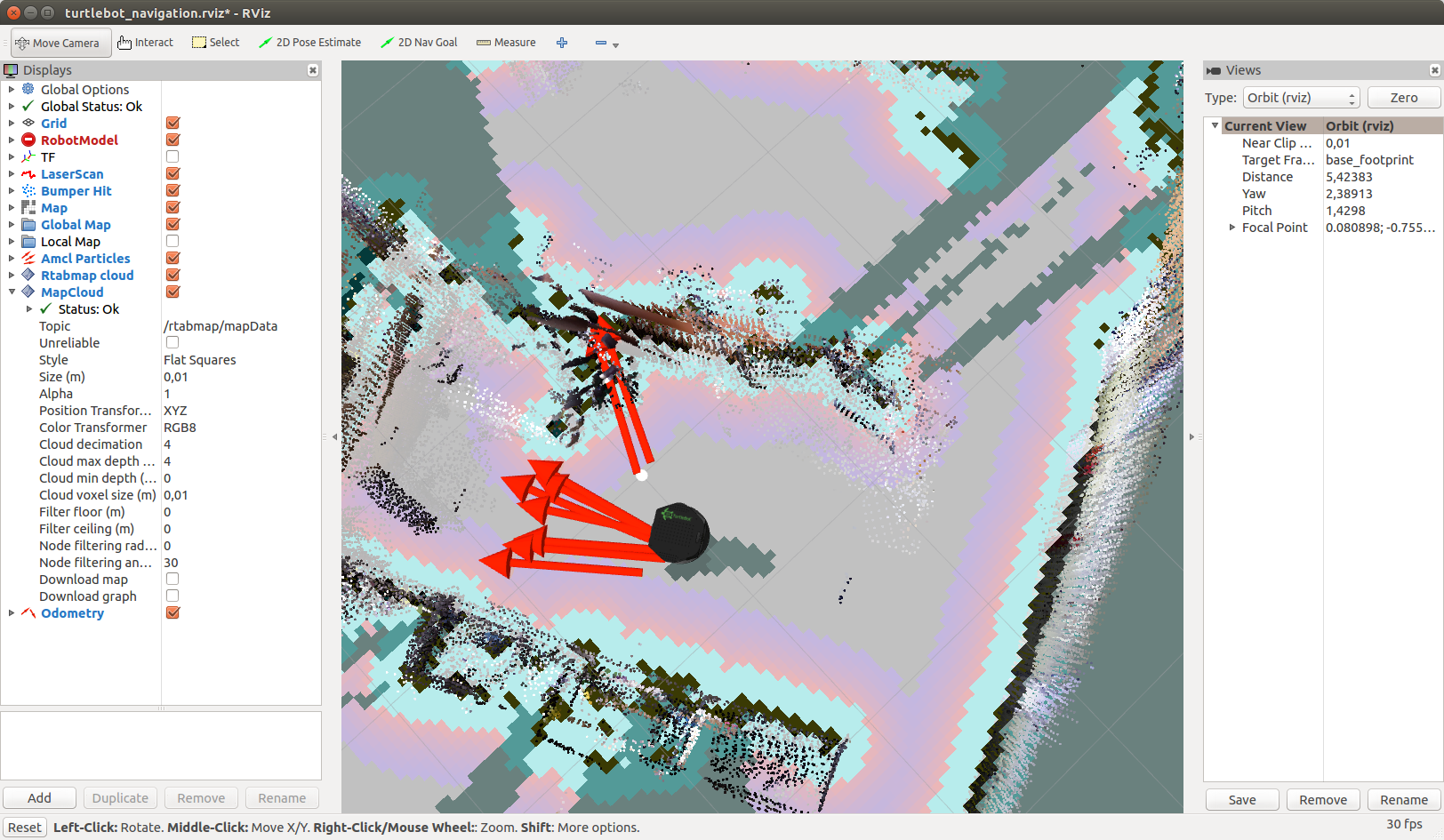

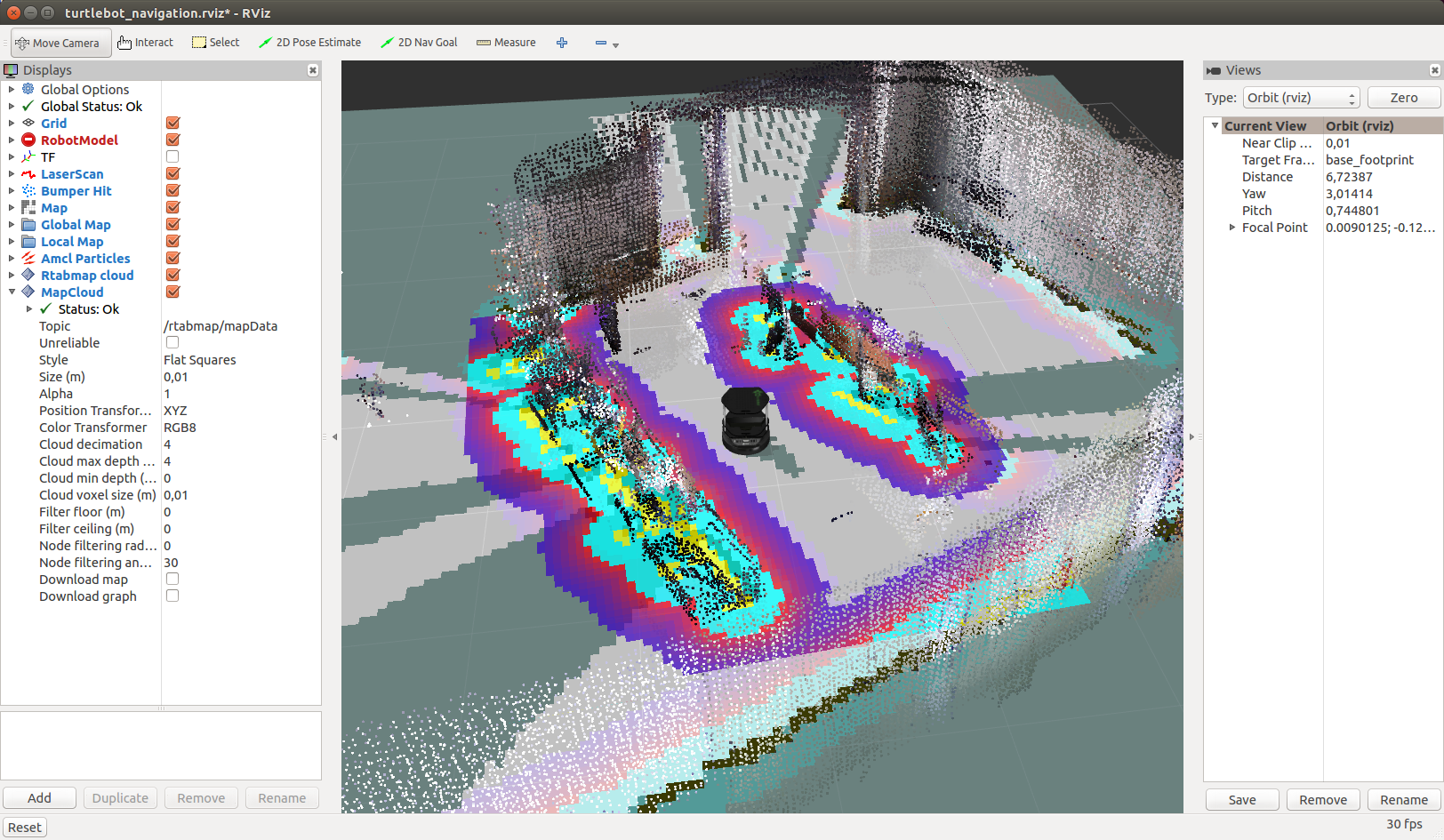

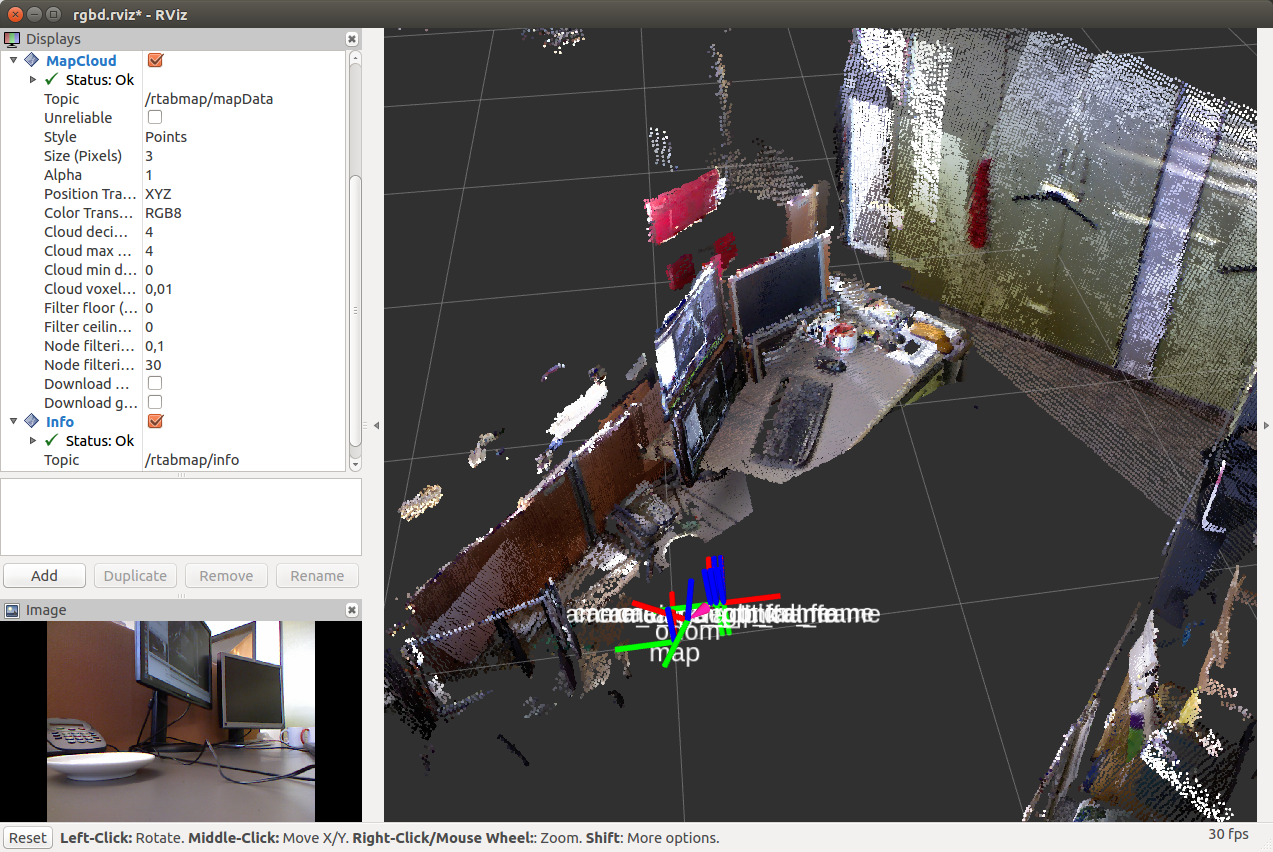

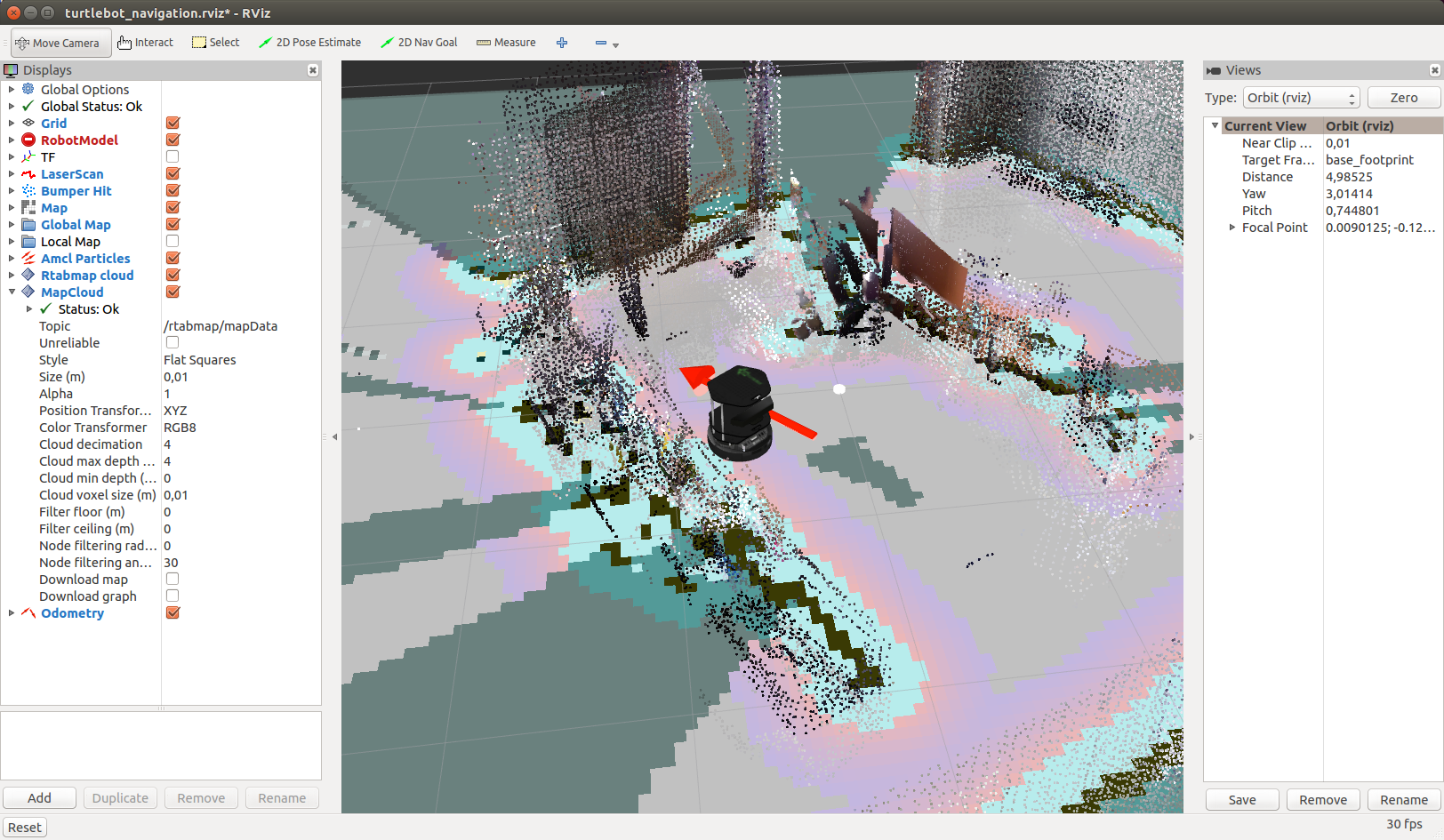

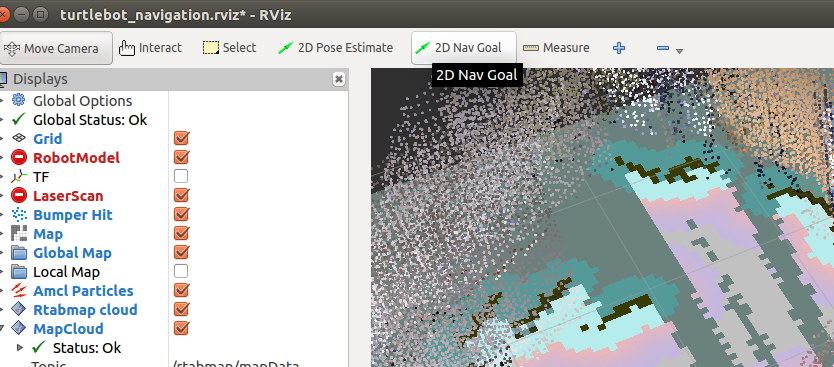

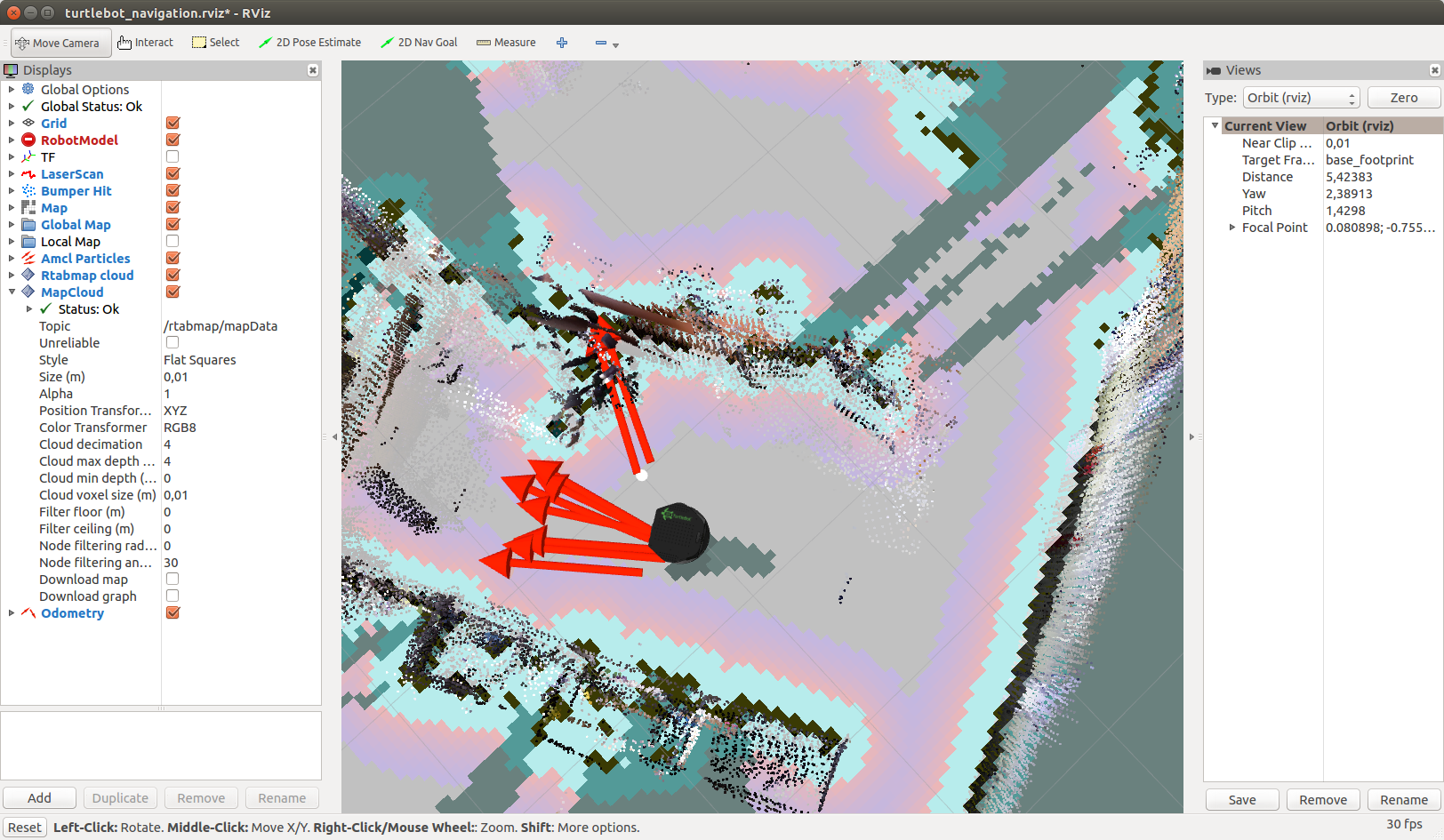

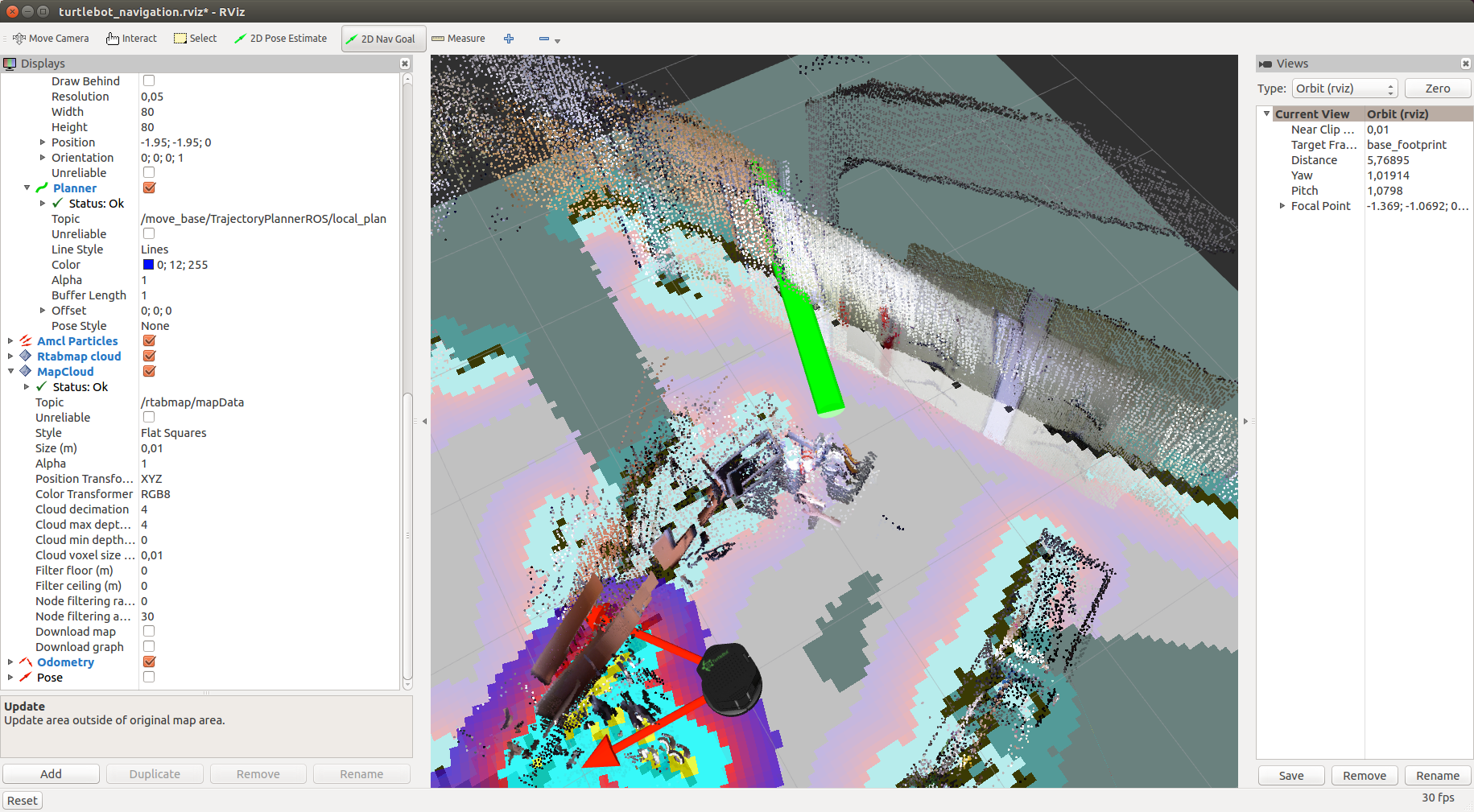

Localization in rtabmap with visualization in rviz

To begin with, if the ROS master is not running, then run it:

roscore
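If you are not sure whether a master is already up, a small check can avoid starting a second roscore. This is only a sketch: it assumes that `rostopic list` exits with a non-zero status when it cannot reach the master, and the helper names `ros_master_running` and `ensure_ros_master` are hypothetical, not part of ROS.

```shell
# Succeeds if a ROS master answers.
# Assumption: `rostopic list` returns non-zero when no master is reachable.
ros_master_running() {
    rostopic list >/dev/null 2>&1
}

# Hypothetical helper: start roscore in the background only if needed.
ensure_ros_master() {
    if ros_master_running; then
        echo "ROS master already running"
    else
        echo "starting roscore"
        roscore >/dev/null 2>&1 &
    fi
}
```

Calling `ensure_ros_master` once at the top of a terminal session is enough; the remaining commands in this article can then be run as written.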

Run the driver for the camera:

roslaunch freenect_launch freenect.launch depth_registration:=true

Run rtabmap to build a map with visualization in rviz, deleting the old map on start:

roslaunch rtabmap_ros rtabmap.launch rtabmap_args:="--delete_db_on_start" rtabmapviz:=false rviz:=true

When mapping is complete, stop the program with Ctrl+C to save the map, then restart rtabmap in localization mode:

roslaunch rtabmap_ros rtabmap.launch localization:=true rtabmapviz:=false rviz:=true
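The mapping and localization invocations differ only in one argument, so they are easy to mix up. A possible wrapper is sketched below; the function name `rtabmap_rviz` and its mode names are hypothetical, while the roslaunch arguments are the ones used in this article.

```shell
# Hypothetical wrapper around the two rtabmap.launch invocations from this article.
#   rtabmap_rviz mapping       -> build a new map, deleting the old database
#   rtabmap_rviz localization  -> localize in the previously saved map
rtabmap_rviz() {
    local mode="${1:-mapping}"
    case "$mode" in
        mapping)
            roslaunch rtabmap_ros rtabmap.launch \
                rtabmap_args:="--delete_db_on_start" rtabmapviz:=false rviz:=true
            ;;
        localization)
            roslaunch rtabmap_ros rtabmap.launch \
                localization:=true rtabmapviz:=false rviz:=true
            ;;
        *)
            echo "usage: rtabmap_rviz mapping|localization" >&2
            return 2
            ;;
    esac
}
```

Keeping the destructive `--delete_db_on_start` flag inside the explicit `mapping` branch makes it harder to wipe a finished map by accident when you only meant to localize.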

To show the map in rviz, tick the Download Map checkbox on the MapCloud display:

Move the camera to the place where the robot is located on the map in rviz. After that, the transform between the coordinate frames /map → /odom will be published.

Mapping and navigation with rtabmap, using Turtlebot as an example

Mapping and localization with rtabmap can be tried on the Turtlebot robot simulator. rtabmap has special packages for this. For additional information I give a link to the source of the material. First, install the necessary packages:

sudo apt-get install ros-<ros_version>-turtlebot-bringup ros-<ros_version>-turtlebot-navigation ros-<ros_version>-rtabmap-ros

The default driver is OpenNI2, which is specified in the 3dsensor.launch file (TURTLEBOT_3D_SENSOR=asus_xtion_pro). In my experiments I used the Microsoft Kinect camera, so let's select its driver by setting the TURTLEBOT_3D_SENSOR variable:

echo 'export TURTLEBOT_3D_SENSOR=kinect' >> ~/.bashrc

source ~/.bashrc
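Running the echo command several times appends the same line to ~/.bashrc again and again. If that bothers you, the export can be added only when it is missing. The helper name `set_turtlebot_sensor` and its optional second argument (the file to edit) are hypothetical; this is a sketch, not something shipped with Turtlebot.

```shell
# Hypothetical helper: append the export line only if it is not already present.
#   $1 = sensor name (for example kinect), $2 = rc file (defaults to ~/.bashrc)
set_turtlebot_sensor() {
    local sensor="$1"
    local rc="${2:-$HOME/.bashrc}"
    [ -n "$sensor" ] || { echo "usage: set_turtlebot_sensor <sensor> [rcfile]" >&2; return 2; }
    local line="export TURTLEBOT_3D_SENSOR=$sensor"
    touch "$rc"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if grep -qxF "$line" "$rc"; then
        echo "already set"
    else
        echo "$line" >> "$rc"
        echo "added"
    fi
}
```

Note that an export for a different sensor already present in the file is left in place; the line added last wins when the file is sourced.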

Now let's start building a map using the special Turtlebot simulator packages in rtabmap_ros:

roslaunch turtlebot_bringup minimal.launch

roslaunch rtabmap_ros demo_turtlebot_mapping.launch args:="--delete_db_on_start" rgbd_odometry:=true

roslaunch rtabmap_ros demo_turtlebot_rviz.launch

In the rviz window we will see:

And now with a point cloud from the Kinect camera:

You should see a two-dimensional map, a three-dimensional map, and the output of several other topics needed for navigation. In addition to the standard rviz displays, the display panel on the left shows the additional rtabmap displays (Local Map, Global Map, Rtabmap Cloud).

Move the camera around, and after a while we will see:

By default, rtabmap uses the same database each time demo_turtlebot_mapping.launch is launched. To remove the old map and start mapping from scratch, you can either manually delete the database stored in the file ~/.ros/rtabmap.db, or run demo_turtlebot_mapping.launch with the argument args:="--delete_db_on_start".
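Deleting the database is irreversible, so before a clean start it may be worth moving the old map aside instead. The sketch below does that with plain shell tools; the function name `backup_rtabmap_db` and the timestamped file naming are my own assumptions, only the default path ~/.ros/rtabmap.db comes from rtabmap.

```shell
# Hypothetical helper: move the rtabmap database to a timestamped backup
# so the next mapping run starts from an empty database.
#   $1 = database path (defaults to ~/.ros/rtabmap.db)
backup_rtabmap_db() {
    local db="${1:-$HOME/.ros/rtabmap.db}"
    if [ -f "$db" ]; then
        # rtabmap.db -> rtabmap_YYYYmmdd_HHMMSS.db in the same directory
        local backup="${db%.db}_$(date +%Y%m%d_%H%M%S).db"
        mv -- "$db" "$backup" && echo "$backup"
    else
        echo "no database at $db" >&2
        return 1
    fi
}
```

The function prints the backup path, so a saved map can later be restored by moving that file back to ~/.ros/rtabmap.db before starting rtabmap in localization mode.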

Let's start building a map without deleting the database:

roslaunch rtabmap_ros demo_turtlebot_mapping.launch

After building the map, the result will look something like this:

Localization with Turtlebot

Now we have a map stored in the database file ~/.ros/rtabmap.db. Restart demo_turtlebot_mapping.launch in localization mode with the argument localization:=true:

roslaunch rtabmap_ros demo_turtlebot_mapping.launch rgbd_odometry:=true localization:=true

We will see this picture:

Move the Kinect to the side. The robot will determine its new location as soon as it detects a loop closure:

It will take time for the loop to be detected successfully, so be patient.

It works pretty fast:

Autonomous navigation with Turtlebot

Now that the map has been created, you can try autonomous navigation on the known map using the Navigation stack. To do this, simply give the robot a goal on the map in rviz, and it will drive toward that goal on its own. The Navigation stack will take care of everything else. To start, press the 2D Nav Goal button in rviz and specify the goal by clicking anywhere on the map.
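A goal can also be sent without clicking in rviz, which is handy for repeatable tests. The sketch below assumes that move_base listens on /move_base_simple/goal (the topic the rviz 2D Nav Goal tool normally publishes to) and that the global frame is called map; the function name `send_nav_goal` is hypothetical. Check the topic with `rostopic list` on your setup before relying on it.

```shell
# Hypothetical helper: publish one navigation goal at (x, y) in the given frame.
# Assumptions: move_base subscribes to /move_base_simple/goal, global frame is "map".
#   $1 = x in metres, $2 = y in metres, $3 = frame id (defaults to map)
send_nav_goal() {
    local x="$1" y="$2" frame="${3:-map}"
    # -1 publishes a single message and exits; fields left out of the YAML keep
    # their default values. orientation w=1.0 means no rotation relative to the frame.
    rostopic pub -1 /move_base_simple/goal geometry_msgs/PoseStamped \
        "{header: {frame_id: '$frame'}, pose: {position: {x: $x, y: $y, z: 0.0}, orientation: {w: 1.0}}}"
}
```

For example, `send_nav_goal 1.5 -0.5` asks the robot to drive to the point 1.5 m along x and 0.5 m in the negative y direction from the map origin.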

Add a Pose display and select the “/move_base/current_goal” topic for it, then add a Path display and select the “/move_base/NavfnROS/plan” topic. You should see the planned path (green line on the map) and the goal, indicated by the red arrow:

The move_base package is responsible for driving the robot toward the goal. It publishes motion commands of type geometry_msgs/Twist on the topic /mobile_base/commands/velocity:

rostopic echo /mobile_base/commands/velocity
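The command above streams messages until you press Ctrl+C. To look at a single velocity command, `rostopic echo` accepts a message count with `-n`. The wrapper name `sample_velocity` is hypothetical; the default topic is the one named in this article.

```shell
# Hypothetical helper: print one Twist message from the velocity topic and exit.
#   $1 = topic (defaults to the Turtlebot velocity command topic)
sample_velocity() {
    local topic="${1:-/mobile_base/commands/velocity}"
    rostopic echo -n 1 "$topic"
}
```

While the robot is driving toward a goal, the linear x and angular z fields of the printed message should be non-zero; the call simply waits if move_base is idle and nothing is being published.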

Additional information about working with the Navigation stack can be found in the tutorial on the official turtlebot_navigation page. There is also a tutorial for using rtabmap on your own robot.

So this time we localized the robot on a pre-built map and learned how to set a goal for the robot so that it drives toward it autonomously. I wish you good luck in your experiments and see you soon!