The AI cargo cult: the myth of a superhuman AI

- Translation

I've heard that in the future, computerized AIs will become so much smarter than us that they will take all our jobs and resources, and humans will die out. Is this true?

This is the most common question I get asked when I give talks about AI. The people asking it are genuinely worried, and their anxiety comes partly from experts who are asking themselves the same thing. Among them are some of the smartest people alive today, such as Stephen Hawking, Elon Musk, Max Tegmark, Sam Harris and Bill Gates, and they all believe this scenario is possible. At a recent conference on AI, a panel of nine of the most knowledgeable people in the field all agreed that the arrival of superhuman AI is inevitable and not far off.

But buried in this scenario of an AI takeover are five assumptions which, on closer examination, turn out not to be based on evidence. These claims may prove true in the future, but for now there is no evidence for any of them. Here are the assumptions:

1. Artificial intelligence is already getting smarter than us, and its power is growing exponentially.

2. We will build AI into a general-purpose intelligence, like our own.

3. We can create human intelligence in silicon.

4. Intelligence can be expanded without limit.

5. Once we have an explosion of superintelligence, it will solve all our problems.

Against this orthodoxy, I offer five heresies that, to my mind, are better supported by evidence:

1. Intelligence is not a single dimension, so "smarter than humans" is a meaningless concept.

2. Neither humans nor AIs have general-purpose minds.

3. Emulating human thinking on other substrates will be constrained by cost.

4. The dimensions of intelligence are not infinite.

5. Intelligence is only one of the factors of progress.

If the expectation of a superhuman AI rests on five key assumptions that have no evidence behind them, then the idea is closer to a religious belief or a myth. Below I explain each of my five counter-assumptions in detail and make the case that superhuman AI is indeed a myth.

1

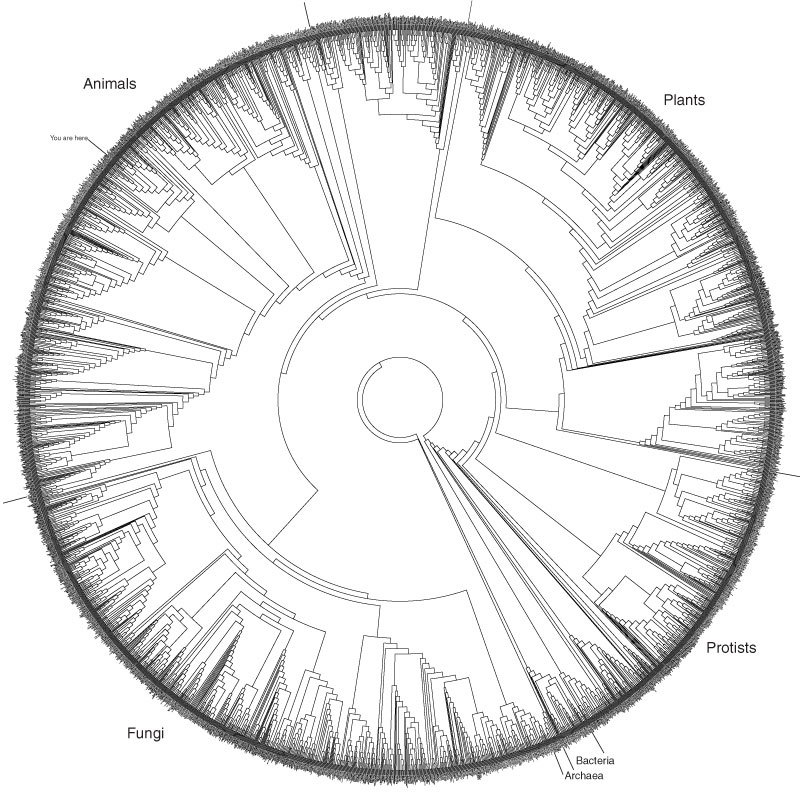

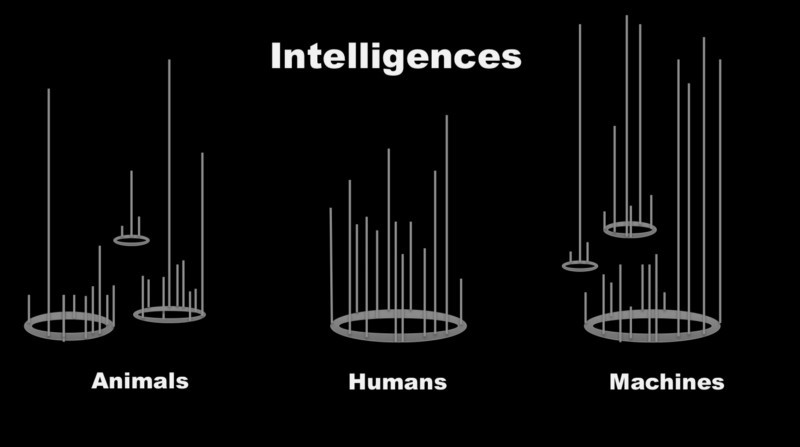

The most common misconception about artificial intelligence begins with a misconception about natural intelligence: the belief that intelligence is one-dimensional. Most technical people tend to picture intelligence the way Nick Bostrom does in his book Superintelligence, as a one-dimensional line graph with increasing amplitude.

At one end is low intelligence, say, a small animal; at the other is high intelligence, say, a genius, as if intelligence could be measured like sound level in decibels. With that picture, it is easy to imagine the loudness of intelligence continuing to increase until it exceeds our own high level and becomes a super-loud intelligence, a roar, beyond our reach and off the chart.

This model is topologically equivalent to a ladder, on which each rung of intelligence is one step higher than the one before. Lower animals stand on the rungs below us, and higher-level AIs will overtake us and stand on the rungs above. When this happens doesn't matter; what matters is the ranking, the metric of increasing intelligence.

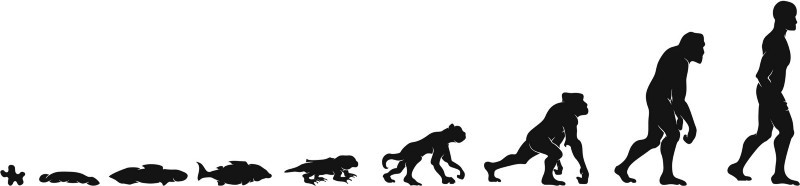

The problem with this model is that it is as mythical as the ladder of evolution. Before Darwin, the natural world was seen as a ladder with lower animals placed beneath humans. Even after Darwin, it was common to think of evolution as a "ladder": fish evolved into reptiles, then into mammals, then primates, then humans, each a little higher on the "evolutionary scale" and therefore more intelligent than the ones before. So the ladder of intelligence parallels the ladder of existence. But both models are thoroughly unscientific.

A more accurate picture of the natural evolution of species is an expanding disk, like the image above, first devised by David Hillis at the University of Texas and based on DNA. This genealogical mandala starts at the center with the most primitive life forms and branches outward over time. Time moves outward, so the most recent species living on the planet today sit around the perimeter of the circle. The picture highlights a fact about evolution that is hard to accept: every species alive today is equally evolved. Humans share the outer ring with cockroaches, clams, ferns, foxes and bacteria. Every one of these species has gone through an unbroken chain of three billion years of successful reproduction, which means that today's bacteria and cockroaches are just as highly evolved as humans. There is no ladder.

Likewise, there is no ladder of intelligence. Intelligence is not one-dimensional. It is a complex of many types and modes of cognition, each one a continuum. Take the simple task of measuring animal intelligence. If intelligence were one-dimensional, we should be able to arrange the intelligences of a parrot, a dolphin, a horse, a squirrel, an octopus, a blue whale, a cat and a gorilla in the correct ascending order. Yet today we have no scientific evidence that such a line exists. One reason might be that there is no difference between animal intelligences, but we don't see that either. Zoology is full of striking examples of how differently animals think. But perhaps they all have the same relative "general intelligence"? Possibly, but we have no way to measure it and no single metric for it.

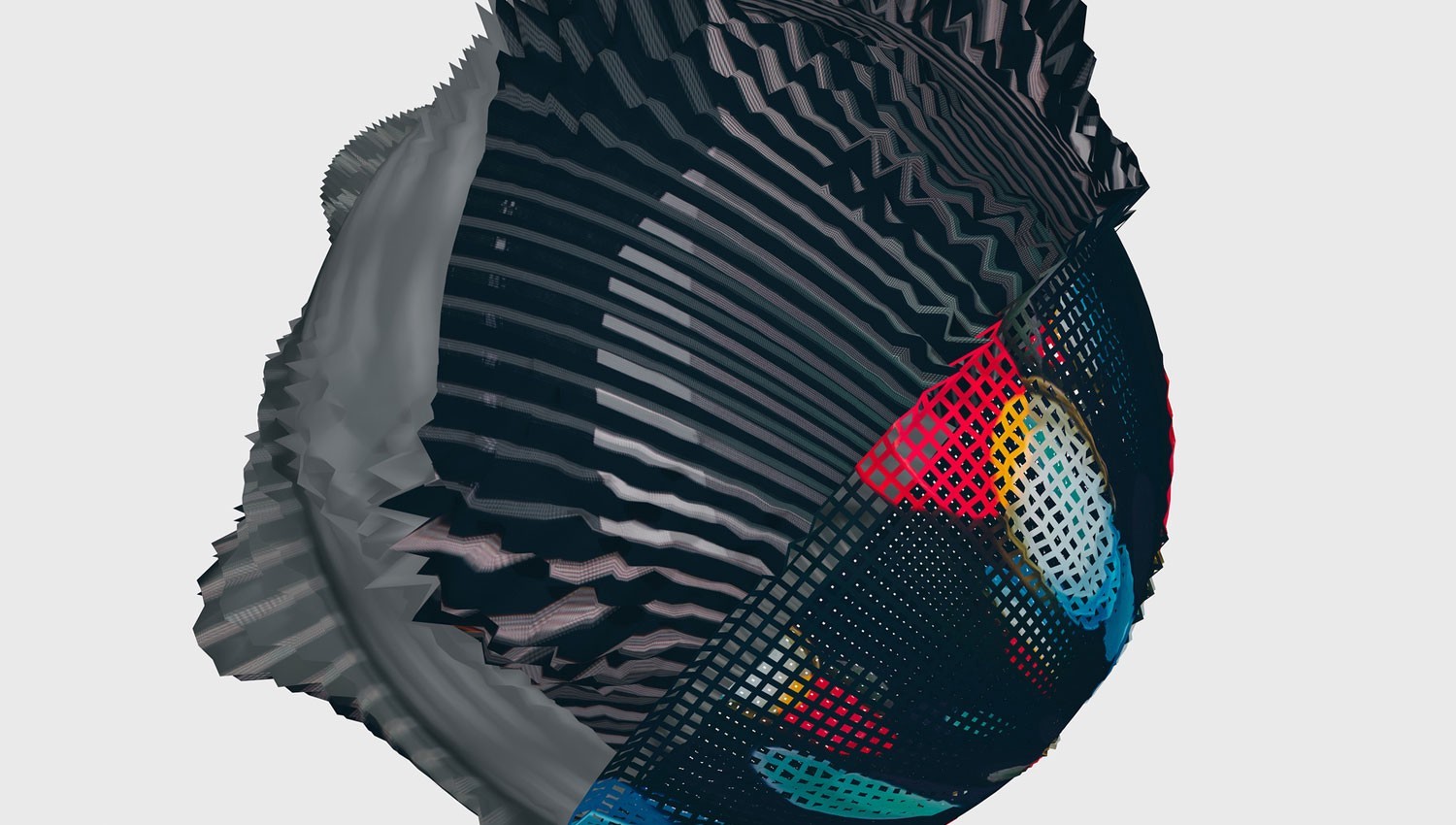

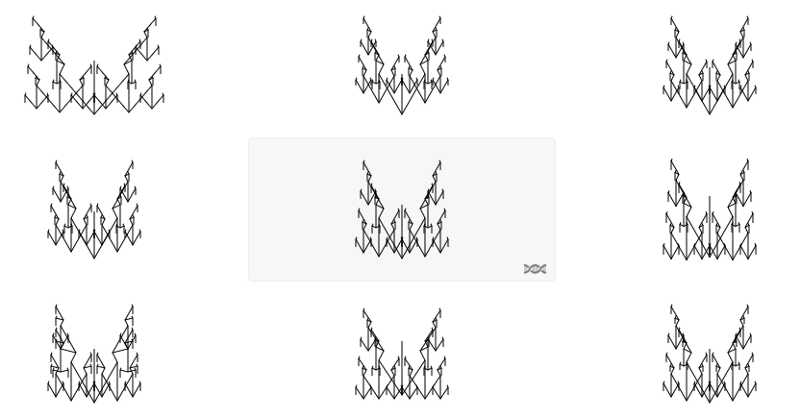

Instead of a single decibel line, a more accurate model of intelligence is a map of its possibility space, like the figure above, which depicts possible forms. Intelligence is a combinatorial continuum: many nodes, each of them a continuum, combine into complex and diverse structures in higher dimensions. Some intelligences may be very complex, with many sub-nodes of thinking. Others may be simpler but more extreme, reaching far into one corner of the space. These complexes, which we call intelligences, can be thought of as symphonies written for many kinds of instruments. They differ not only in loudness but also in pitch, melody, color, tempo and so on. They can also be thought of as ecosystems, and in that sense the various component nodes of thinking are interdependent and co-created.

As Marvin Minsky said, human minds are societies of minds. We run on ecosystems of thinking. We contain many species of cognition that perform many types of thinking: deduction, induction, symbolic reasoning, emotional intelligence, spatial reasoning, short-term memory and long-term memory. The nervous system in our gut is also a kind of brain, with its own mode of thinking. We don't think with our brain alone; we think with our whole body.

These suites of thinking vary from individual to individual and from species to species. A squirrel can remember the exact locations of several thousand acorns for years, a feat that utterly baffles human minds. So in this one type of thinking, squirrels outperform humans. That superpower is bundled with other modes that are dim compared to ours, and the bundle makes up the squirrel mind. The animal kingdom has many other examples of abilities that exceed ours, and they too are embedded in different suites of thinking.

The same is true of AI. Artificial minds already surpass humans in certain dimensions. Your calculator is a genius at arithmetic, and Google's memory already exceeds ours in a particular dimension. We are building AIs that excel in specific modes. Some of these things we can do ourselves, but they do them better, for example in probability or mathematics. Other modes of thinking are beyond us altogether: only a search engine can remember every word on six billion web pages. In the future we will invent entirely new types of thinking that we don't have and that don't exist anywhere in nature. When we invented artificial flight, we were inspired by biological modes of flight, mainly flapping wings. But the flight we invented, with propellers and fixed wings, was unknown in the biological world. It is alien flight. In the same way, we will invent new modes of thinking that do not exist in nature. In many cases they will be new, narrow, small modes designed for specific jobs, perhaps a type of reasoning useful only in statistics and probability.

In other cases the new mind will be a complex mix of types of thinking that we can use to solve problems beyond our own intelligence. Some of the hardest problems in business and science may require a two-step solution. Step one: invent a new mode of thinking that can work alongside our minds. Step two: combine them to solve the problem. Because we will be solving problems we could not solve before, we will want to call this new type of thinking "smarter" than us, but really it is just different from us. The main advantage of AI is that it thinks differently from us. I find it helpful to think of AI as alien intelligence (or artificial aliens). Its alienness will be its chief asset.

At the same time, we will integrate these different modes of thinking into more complex societies of mind. Some of these complexes will be more complex than ours, and because they can solve problems we cannot, some people will want to call them superhuman. But we don't call Google a superhuman AI, even though its memory exceeds ours, because there are many things we can still do better than it can. These AI complexes may surpass us in many dimensions, but none of them will do everything we do better than we do. This is similar to human physical abilities. The Industrial Revolution is 200 years old, and although machines as a whole exceed human physical abilities (speed of travel, lifting weight, precision cutting and so on), there is no single machine that can outperform an average person at everything he or she does.

And although the society of minds in AI keeps growing more complex, that complexity is currently hard to measure scientifically. We have no good metric of complexity that can tell us whether a cucumber or a Boeing 747 is more complex, or describe how their complexities differ. This is one of the reasons we have no good metrics for intelligence either. It will be very hard to determine whether mind A is more complex than mind B, and therefore hard to say which of them is smarter. We will soon come to understand that intelligence is not one-dimensional and that what we really care about are the many ways intelligence can operate: all the nodes of cognition we have not yet discovered.
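To make the "no single ranking" point concrete, one can treat each mind as a vector of scores over several dimensions and compare minds by Pareto dominance: A counts as "smarter than" B only if A is at least as good on every dimension and strictly better on at least one. The Python sketch below is a toy under stated assumptions: the dimensions, the mind names and all the scores are invented for illustration and measure nothing real. It shows that once abilities are multidimensional, "smarter than" becomes a partial order, and many pairs of minds are simply incomparable.

```python
from itertools import combinations

# Hypothetical ability profiles: the dimensions and the 0-10 scores are
# invented for illustration only, not measurements of anything.
DIMENSIONS = ("arithmetic", "recall", "spatial", "social")

MINDS = {
    "human": (4, 5, 7, 9),
    "calculator": (10, 1, 0, 0),
    "search": (3, 10, 0, 1),
    "squirrel": (0, 2, 9, 3),
}

# Every profile must have one score per dimension.
assert all(len(profile) == len(DIMENSIONS) for profile in MINDS.values())


def dominates(a, b):
    """True if profile a is at least as good as b on every dimension
    and strictly better on at least one (Pareto dominance)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def comparable_pairs(minds):
    """Return (winner, loser) pairs where one mind Pareto-dominates the other."""
    pairs = []
    for (name_a, a), (name_b, b) in combinations(minds.items(), 2):
        if dominates(a, b):
            pairs.append((name_a, name_b))
        elif dominates(b, a):
            pairs.append((name_b, name_a))
    return pairs


# With these made-up profiles no mind dominates any other,
# so "smarter than" produces no ranking at all.
print(comparable_pairs(MINDS))  # → []
```

A hypothetical profile such as (5, 6, 8, 10) would dominate the human profile above, so a ranking is possible in principle; the argument is that nothing guarantees real minds line up that way.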

2

The second misconception about human intelligence is our belief that our mind is universal, a general-purpose mind. This belief gave rise to the frequently stated goal of AI researchers to build an artificial general intelligence (AGI). However, if we view intelligence as a wide space of possibilities, there is no general-purpose state in it. Human intelligence does not occupy some central position around which other, specialized intelligences revolve. Human intelligence is a very, very specific type of intelligence that evolved over millions of years so that our species could survive on this planet. If we placed it in the space of all possible intelligences, it would sit somewhere in a corner, just as our world sits at the edge of a vast galaxy.

We can certainly imagine, and even invent, a mind that is like a Swiss Army knife. It does a bunch of things well, but none of them very well. AI will follow the same engineering maxim that everything made or born follows: you cannot optimize every dimension. There are only trade-offs. You cannot build a general-purpose, do-everything unit that beats specialized ones. A big mind that "can do everything" cannot do everything as well as specialized minds. Because we believe our own minds are general-purpose, we assume that thinking does not have to follow engineering trade-offs and that it will be possible to build an intelligence that maximizes every mode of thinking. But I see no evidence of this. We have not yet invented enough varieties of mind to see the whole space (and so far we have dismissed animal minds by measuring them on a one-dimensional amplitude).

3

Part of this belief comes from the concept of universal computation. This assumption, which came to be known as the Church-Turing thesis around 1950, holds that all computations above a certain threshold are equivalent. So there is a universal core to all computation, and whether it runs on one machine with many fast parts, or slow parts, or in a biological brain, it is the same logical process. This means you can emulate any computational process (thinking) on any machine capable of "universal" computation. Believers in the singularity rely on this principle when they expect that we can build silicon brains capable of emulating the human mind, and artificial intelligences that think like humans, only much smarter. This hope should be treated with skepticism, because it rests on a misreading of the Church-Turing thesis.

The starting point of the thesis is: "Given infinite tape (memory) and time, all computation is equivalent." The problem is that in reality no computer has infinite memory or time. In the real world, real time is crucial, often a matter of life and death. Yes, all thinking is equivalent if you ignore time. Yes, you can emulate human thinking on any substrate, as long as you ignore time and the real-world limits on storage and memory. But once you take time into account, the principle has to be substantially restated: "Two computing systems running on very different platforms will not be equivalent in real time." Or: "The only way to get equivalent modes of thinking is to run them on equivalent platforms."
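The restated principle can be illustrated with a toy cost model. The Python sketch below assumes, purely for illustration, a "native" platform that spends one time unit per logical step and an emulating platform that spends 50; the function name `count_to`, the costs and the deadline are all hypothetical. Given unlimited time, both platforms return the same answer, which is the Church-Turing sense of equivalence; under a fixed deadline, only one of them does.

```python
from typing import Optional


def count_to(n: int, cost_per_step: int, budget: int) -> Optional[int]:
    """Run a trivial n-step computation on a hypothetical platform where each
    logical step costs `cost_per_step` time units. Return the result if it
    finishes within `budget` time units, otherwise None (deadline missed)."""
    elapsed = 0
    total = 0
    for _ in range(n):
        elapsed += cost_per_step
        if elapsed > budget:
            return None  # out of time: the answer would arrive too late
        total += 1
    return total


NATIVE_COST = 1     # assumed: one time unit per step on the native platform
EMULATED_COST = 50  # assumed: 50x emulation overhead on a different platform

# With effectively unlimited time, both platforms compute the same answer...
unbounded = 10**9
assert count_to(1000, NATIVE_COST, unbounded) == count_to(1000, EMULATED_COST, unbounded) == 1000

# ...but under a real-world deadline only the native platform finishes.
deadline = 5000
print(count_to(1000, NATIVE_COST, deadline))    # → 1000
print(count_to(1000, EMULATED_COST, deadline))  # → None
```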

I would take this further and say that the only way to get close to human thinking processes is to run the computation on soft, wet tissue like ours. This means that very large, complex AIs running on dry silicon will produce big, complex, non-human types of thinking. If it ever becomes possible to build an artificial wet brain from grown human-like neurons, I would expect its thoughts to be very similar to ours. The benefits of such a wet brain are proportional to how closely we can match the substrate. The cost of building such a "human computer" is enormous, and the closer the tissue gets to human brain tissue, the more cost-effective it becomes simply to make a human. After all, we can do that in nine months.

Moreover, as I said above, we think with our whole body, not just with our brain. We have plenty of data showing that the nervous system in our gut guides our "rational" decision-making and is capable of learning and predicting. The more closely we model the whole human body, the closer we come to reproducing it. An intelligence running on a very different body (dry silicon instead of wet carbon) will think differently.

I think this is a feature, not a bug. As I argued above, thinking differently from humans is AI's main asset. This is one more reason why it is wrong to call AI "smarter than humans."

4

At the heart of the notion of superhuman intelligence, and in particular the notion that such intelligence will keep improving, lies the belief that the scale of intelligence is infinite. I see no evidence for this. The mistaken idea that intelligence is one-dimensional makes it easier to believe, but it remains only a belief. Science knows of no physical dimension that is reliably infinite. Temperature is not infinite: there is finite cold and finite heat. Time and space are finite. Speed is finite. The number line in mathematics may be infinite, but physical attributes have limits. It is reasonable to assume that intelligence itself is finite. So the question is: where is the limit of intelligence? We tend to believe that it lies far beyond us, as far "above" us as we are "above" an ant. Setting aside the recurring problem of one-dimensionality, what evidence do we have that we have not already reached that limit? Why couldn't we be at the maximum? Or perhaps the limit is not far from where we are? Why do we believe that intelligence is something that can keep expanding forever?

It is far better to approach this question by treating our intelligence as one of many possible types of intelligence. Even if every dimension of thinking and computation has a limit, with hundreds of dimensions there can be innumerable minds, none of them infinite in any dimension. As we encounter or create these varieties of mind, we may decide that some of them surpass ours. In my most recent book, The Inevitable, I outlined some kinds of minds that might be superior to ours in some way. Here is a partial list:

• A mind like a human's, just faster (the easiest kind of AI to imagine).

• A very slow mind, consisting mostly of vast information storage.

• A global superbrain made up of millions of primitive minds working in concert.

• A hive mind made up of many very smart minds that are unaware they belong to a hive.

• A cybernetic supermind made up of smart minds that are aware they belong to a collective mind.

• A mind trained specifically to enhance your own mind, and useless to anyone else.

• A mind capable of imagining a greater mind, but unable to build it.

• A mind capable of building a greater mind, but not self-aware enough to imagine it.

• A mind capable of successfully creating a greater mind, once.

• A mind capable of creating a greater mind, which in turn can create an even greater mind, and so on.

• A mind with access to its own source code that can alter how it works.

• A superlogical mind without emotions.

• A mind capable of solving general problems, but without self-awareness.

• A self-aware mind that cannot solve general problems.

• A mind that takes a long time to develop and needs a protector mind while it matures.

• An extremely slow mind spread over a large physical volume, which makes it invisible to faster minds.

• A mind capable of cloning itself quickly and exactly.

• A mind capable of cloning itself and remaining united with its clones.

• An immortal mind that can migrate between platforms.

• A fast, dynamic mind that can change the process and character of its own cognition.

• A nanomind, the smallest possible in size and energy.

• A mind specializing in predicting events.

• A mind that never erases or forgets anything.

• A symbiotic mind that is half animal, half machine.

• A cyborg mind that is half machine, half human.

• A mind that uses quantum computing, with a logic inaccessible to us.

Some people may be tempted to call any mind on this list a superhuman AI, but the sheer diversity and alienness of these minds will force us to develop new terms and concepts for intelligence and mind.

Next, believers in superhuman AI assume exponential growth of intelligence (by some unspecified metric), probably because they believe it is already growing exponentially. But so far there is no evidence that intelligence is growing exponentially, however it is measured. By exponential growth I mean growth in which AI doubles its power at regular intervals. Where is the evidence? I cannot find any. If it does not exist today, why assume it will appear later? The only things growing exponentially so far are the amount of input data for AI and the resources devoted to producing the highest intelligence. But the output of that work does not follow Moore's law. AI does not get twice as smart every three years, or even every ten years.

I asked many AI experts for evidence of exponential growth in AI capabilities, but they all agreed that we have no metric for AI and that, in any case, it doesn't work that way. When I asked Ray Kurzweil, the exponential wizard himself, where the evidence for exponential AI growth was, he replied that AI does not grow exponentially, it grows in levels. He wrote: "It takes an exponential improvement in both computation and algorithmic complexity to add each additional level to the hierarchy... So we can expect to add levels linearly, because each additional level requires exponentially more complexity, and our ability to do this is indeed progressing exponentially. We are not that many levels away from the capabilities of the cerebral cortex, so my 2029 prediction still looks comfortable to me."

Ray seems to be saying that it is not AI's capabilities that grow exponentially but the effort required to create it, while its output rises only one level at a time. That is almost the opposite of an exponential increase in intelligence. This may change in the future, but today AI is clearly not growing exponentially.
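Ray's description can be turned into a toy calculation. The Python sketch below assumes, hypothetically, that the resources devoted to AI double every period and that each additional level of the hierarchy costs ten times as much as the previous one; the function `levels_reached` and both rates are invented for illustration and are not Kurzweil's actual model. Under those assumptions, exponentially growing input buys only a linearly growing number of levels.

```python
def levels_reached(resources: float, base_cost: float = 1.0, cost_ratio: float = 10.0) -> int:
    """Count how many hierarchy levels `resources` can pay for, assuming
    (hypothetically) that level k costs base_cost * cost_ratio ** k."""
    levels = 0
    spent = 0.0
    # Keep buying the next level while the cumulative cost stays within budget.
    while spent + base_cost * cost_ratio ** levels <= resources:
        spent += base_cost * cost_ratio ** levels
        levels += 1
    return levels


# Assumed: the resources devoted to AI double every period.
history = [levels_reached(2.0 ** period) for period in range(0, 41, 10)]
print(history)  # → [1, 3, 6, 9, 12]
```

Over 40 periods the resources grow roughly a trillionfold (2^40), while the number of levels climbs by about three every ten periods: exponential input, linear output.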

So when we imagine an "intelligence explosion," we should picture it not as a chain reaction but as a scattered proliferation of new varieties: a Cambrian explosion, not a nuclear explosion. The results of accelerating technology are likely to be not superhuman but extrahuman: outside our experience, but not necessarily "above" it.

5

Another belief about the coming of superhuman AI that is not supported by evidence is that a superintelligence of nearly infinite power will quickly solve all our major unsolved problems.

Many proponents of an intelligence explosion expect it to produce an explosion of progress. I call this mythical belief "thinkism": the mistaken idea that future advances are out of reach only because we lack enough thinking power or intelligence. I would also note that the belief that thinking is a magic cure-all is especially common among people who like to think.

Take curing cancer or prolonging life. These problems cannot be solved by thinking alone. No amount of thinking will reveal how cells age or how telomeres shorten. No superintelligence will be able to figure out how the human body works simply by reading all the world's scientific literature and pondering it. No superhuman AI can produce a working fusion reactor just by thinking about every nuclear fusion experiment ever done. Getting from not knowing how something works to understanding it takes far more than thinking. In the real world it takes piles of experiments, each of which yields mountains of conflicting data that in turn require more experiments before a working hypothesis emerges. Thinking about potential data will not give you the right data.

Thinking is only one part of science, possibly a small part. For example, we simply lack the data needed to get anywhere near solving the problem of death. When working with living organisms, nearly every experiment takes time. Slow cell metabolism cannot be sped up. Results take years, or months, or at the very least days. If we want to know what happens to subatomic particles, we cannot just think about them. We have to build huge, complex, ingenious physical structures to find out. Even if the smartest physicists were 1,000 times smarter than they are now, without a collider they would learn nothing new.

There is no doubt that a superhuman AI could accelerate scientific progress. We can build computer simulations of atoms or cells and run them many times faster, but two problems limit the usefulness of simulations. First, simulations and models can run faster than the processes they study only because they leave something out; that is the essence of a model or simulation. Second, testing, vetting and proving those models also takes time, and it has to proceed at the speed of the processes being simulated. Verifying the truth cannot be sped up.

These simplified versions are useful for singling out the most promising paths and speeding up progress. But in reality nothing is superfluous: everything real has some effect, which is one definition of reality. As you load models and simulations with more and more data, it soon becomes clear that reality runs faster than a 100% simulation of it. That is another definition of reality: the fastest possible version of all its details and degrees of freedom. Even if you could model every molecule in a cell and every cell in a human body, the simulation would not run as fast as the human body does. No matter how much you think about it, you still need time to experiment, whether on a real system or a simulated one.

To be useful, AI has to be embedded in the real world, and the world will set the pace of its innovation. Without running experiments, building prototypes, making mistakes and testing against reality, an intelligence can think but cannot produce results. There will be no instant discoveries in the minute, hour, day or year after a so-called "superhuman AI" appears. Certainly, advances in AI will significantly increase the rate of discovery, not least because AI, unlike humans, will ask questions no human would ask, but even an intelligence extremely powerful compared to ours does not guarantee instant progress. Solving problems takes far more than intelligence.

Intelligence alone cannot solve the problems of cancer and longevity, nor even the problem of intelligence itself. The standard mantra of singularity proponents is that as soon as you build an AI that is "smarter than a human," it will think hard and invent an AI "smarter than itself," which will think harder still and invent an even smarter AI, until everything culminates in an explosion of intelligence to an almost godlike level. We have no evidence that merely thinking about intelligence is enough to create new levels of intelligence. Believing so is a matter of faith. We have plenty of evidence that, in addition to a great deal of knowledge, we need experiments, data, trial and error, unusual lines of questioning and everything else beyond mere cleverness.

In conclusion, I may be wrong about all of this. We are at an early stage. We may discover a universal metric for intelligence; we may discover that it is infinite in every direction. Because we know so little about what intelligence is (let alone consciousness), the probability of some kind of AI singularity is greater than zero. I think all the evidence points to a low probability for such a scenario, but that probability is not zero.

So although I disagree about its probability, I agree with the broader goals of OpenAI and the smart people who worry about superhuman AI: we should build friendly AIs and figure out how to instill in them values that match ours. And although I consider superhuman AI a very remote existential threat (one that is worth considering), I believe its low probability, based on the evidence we have, should not drive our science, policy and development. An asteroid striking Earth would be catastrophic. Its probability is greater than zero (so we should support the B612 Foundation), but we should not let the threat of an asteroid impact drive our decisions about, say, climate change, space travel or even urban planning.

Likewise, the evidence so far suggests that AI will most likely turn out to be not superhuman but extrahuman: hundreds of new types of thinking that differ from ours, none of which will be general-purpose AI and none of which will become a god that instantly solves all our problems. Instead we will have a galaxy of finite intelligences working in unfamiliar dimensions, exceeding us in many of them, and working together with us to solve existing problems and create new ones.

I understand the charm and appeal of a superhuman AI god. It is something like Superman. But like Superman, it is a mythical figure. Superman might exist somewhere in the universe, but the probability is extremely small. Still, myths can be useful, and once invented they do not disappear. The idea of Superman will not die. Nor will the idea of a superhuman AI singularity, now that it has been born. But we should recognize that at present it is a religious idea, not a scientific one. If we examine the evidence we have about intelligence, artificial and natural, we can only conclude that our speculations about a mythical superhuman AI god are just that: myths.

Many isolated islands in Micronesia made their first contact with the outside world during World War II. Alien gods flew across their skies in noisy birds, dropped food and goods on their islands, and never came back. Religious cults sprang up on the islands, praying for the gods to return and drop more cargo. Even now, fifty years later, many are still waiting for the cargo to return. Superhuman AI may turn out to be another cargo cult. A century from now, people may look back at our time as the moment when believers began waiting for a superhuman AI to appear and bring them unimaginable riches. Decade after decade they wait, convinced that it, and its bounty, will arrive very soon.

But non-superhuman AI is already here, and it is real. We keep redefining the term and raising the bar, which keeps it perpetually in the future; but in the broad sense of alien intelligences, a continuous spectrum of varied minds, intelligences, thoughts, logics, learnings and consciousnesses, AI already exists on this planet and continues to spread, deepen, diversify and grow. No previous invention compares to its power to change the world, and by the end of this century AI will touch and transform every aspect of our lives. But the myth of a superhuman AI that will bestow super-abundance on us or drive us into super-slavery (or both at once) will apparently live on: the possibility is too mythical to dismiss.