Programmable chips help Microsoft optimize the performance of its cloud services.

The prototype board for Project Catapult (Source: Clayton Cotterell for Wired)

Microsoft has announced a major worldwide upgrade of its data center equipment, primarily in the data centers that power the Azure cloud service. Most of the new equipment is standard data center hardware, with one exception: field-programmable gate arrays (FPGAs), chips built from reprogrammable arrays of logic elements. FPGAs themselves are not new, but deploying them in data centers is a new idea from Microsoft. According to the company's engineers, the programmable chips will provide hardware acceleration for a variety of software algorithms.

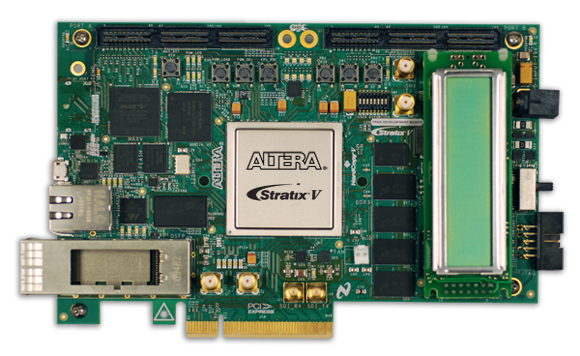

Initially, the company planned to use FPGAs to speed up its Bing search service. It developed and began rolling out the first phase of a project called Project Catapult. As part of this project, Altera PCIe cards carrying FPGAs (Altera is now owned by Intel) were added to some of the servers running Bing. The project's authors expected this to improve the performance of the search ranking algorithm, and the assumption proved correct: the modified servers showed significant performance gains over unmodified ones.

The next proposal was to develop application-specific integrated circuits (ASICs) that would perform the same work as the FPGAs in fixed silicon. Microsoft had already developed such custom chips for its HoloLens headset, which significantly reduced the device's power consumption and improved its performance. However, this approach turned out to be unsuitable for the Bing team, because the service's search algorithms change very often. By the time chips tailored to a particular algorithm were designed, manufactured, and delivered, the algorithms would already have changed, leaving the chips useless (a typical example of hardware lock-in).

Since the pilot project was largely successful, the company decided to use FPGAs in the hardware behind its other services as well, such as Azure and Office 365. Because FPGAs can be reprogrammed, specialized logic for them can be developed in just a couple of weeks without fear of obsolescence. For Azure, the main problem is not algorithms at all, but growing demands on network bandwidth.

Altera Stratix V board. Microsoft uses similar boards in Project Catapult.

A huge number of virtual machines run in the Azure environment, hosted on a limited number of physical servers. The company's data centers use the Hyper-V hypervisor for virtualization. Each virtual machine has one or more network adapters through which it sends and receives network traffic, and the hypervisor manages the physical network hardware connected to the Azure network infrastructure. Forwarding traffic between virtual and physical hardware in both directions, balancing load across the equipment, and managing traffic routing all consume significant computing resources.
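To make the hypervisor's workload concrete, here is a minimal Python sketch of a software virtual switch, assuming a simplified model in which routing is a lookup from destination IP to a local virtual adapter and outbound traffic is balanced across physical uplinks by a per-flow hash. The names (`VirtualSwitch`, `routes`, `uplinks`, `host_cpu_ops`) are hypothetical and do not correspond to Hyper-V's actual virtual switch or any Azure API. What it illustrates is that every packet passes through code running on the host CPU.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Packet:
    src_ip: str
    dst_ip: str
    payload: bytes


@dataclass
class VirtualSwitch:
    """Toy software switch run by the hypervisor (hypothetical model)."""
    routes: dict[str, str] = field(default_factory=dict)  # destination IP -> local VM adapter
    uplinks: list[str] = field(default_factory=list)       # physical ports for outbound traffic
    host_cpu_ops: int = 0                                  # packets processed on the host CPU

    def forward(self, pkt: Packet) -> str:
        # Every packet takes this software path, so each one costs host CPU time.
        self.host_cpu_ops += 1
        if pkt.dst_ip in self.routes:
            return self.routes[pkt.dst_ip]  # traffic for a VM on this host
        # Outbound traffic: pick an uplink with a stable per-flow hash for load balancing.
        flow_hash = zlib.crc32(f"{pkt.src_ip}->{pkt.dst_ip}".encode())
        return self.uplinks[flow_hash % len(self.uplinks)]


vswitch = VirtualSwitch(routes={"10.0.0.4": "vm1-nic0"}, uplinks=["eth0", "eth1"])
print(vswitch.forward(Packet("10.0.0.5", "10.0.0.4", b"hello")))  # vm1-nic0
print(vswitch.host_cpu_ops)  # 1
```

Hashing per flow rather than per packet keeps all packets of one connection on the same uplink and avoids reordering; the cost is that the host still performs this work for every single packet.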

Microsoft engineers proposed adding FPGAs on PCIe cards to the servers. According to the engineers, the FPGA boards would work directly with the Azure network infrastructure, letting servers send and receive traffic directly without routing it through the main system's network interface.

It was decided to connect the virtual machines directly to the FPGA over the PCIe interface, which shortened the path for network traffic. The result: Azure virtual machines now reach network throughput of 25 Gbps with latency of only about 100 microseconds, and this was achieved without involving the servers' CPUs.

The same problem can be addressed with modern network cards, which can also serve virtual machines directly, bypassing the host. However, this approach has limitations: for example, each card can serve only four virtual machines at a time, and such cards lack the flexibility of FPGAs. With FPGAs, Microsoft can be more agile, reprogramming the chips as its needs change. As a result, overall data center performance rises and the load on the hardware falls. With an FPGA, the processing logic is programmed directly into the chip, whereas with conventional network cards the host processor is still involved in executing the instructions.
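One common way to take the host processor out of the data path is a flow table: software decides what to do with the first packet of a flow, then installs that decision as a rule in programmable hardware, which applies it to every later packet of the flow on its own. The Python sketch below models that idea under simplifying assumptions. The names (`OffloadedSwitch`, `flow_table`, `device_ops`) are hypothetical, the dictionary merely stands in for a table on the FPGA, and this is not a description of Microsoft's actual FPGA logic.

```python
import zlib
from dataclasses import dataclass, field

FlowKey = tuple[str, str]  # (source IP, destination IP); real flow keys carry more fields


@dataclass
class OffloadedSwitch:
    """Hypothetical model of host-programmed, hardware-applied forwarding rules."""
    routes: dict[str, str]   # destination IP -> local VM adapter
    uplinks: list[str]       # physical ports for outbound traffic
    flow_table: dict[FlowKey, str] = field(default_factory=dict)  # stands in for the chip's table
    host_cpu_ops: int = 0    # decisions made in software on the host
    device_ops: int = 0      # packets handled by the "device" alone

    def _slow_path(self, key: FlowKey) -> str:
        # The host CPU computes the forwarding decision once per new flow.
        self.host_cpu_ops += 1
        src, dst = key
        if dst in self.routes:
            return self.routes[dst]
        return self.uplinks[zlib.crc32(f"{src}->{dst}".encode()) % len(self.uplinks)]

    def forward(self, src_ip: str, dst_ip: str) -> str:
        key = (src_ip, dst_ip)
        action = self.flow_table.get(key)
        if action is not None:
            self.device_ops += 1   # fast path: rule already on the device, host not involved
            return action
        action = self._slow_path(key)
        self.flow_table[key] = action  # "program" the device with the new rule
        return action


sw = OffloadedSwitch(routes={"10.0.0.4": "vm1-nic0"}, uplinks=["eth0", "eth1"])
for _ in range(1000):
    sw.forward("10.0.0.5", "10.0.0.4")
print(sw.host_cpu_ops, sw.device_ops)  # 1 999
```

For a long-lived flow, the host's share of the work drops from one decision per packet to one per flow. The trade-off is that a hardware table has finite capacity and must be kept in sync with routing policy.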

After a trial run of the system, the company was convinced of its effectiveness and decided to deploy it in all of its data centers that run Azure. This week, the company demonstrated the system's performance at its Ignite conference: an employee showed thousands of FPGAs translating the 3 billion words of the English Wikipedia. The data was processed in just a tenth of a second, with the system reaching 10¹⁸ operations per second.
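A quick back-of-the-envelope check shows what the demonstration figures imply. The sketch below simply divides the stated numbers, assuming the whole tenth of a second ran at the quoted 10¹⁸ operations per second; the function name `implied_rates` is illustrative. Because the number of FPGAs is given only as "thousands", a per-chip figure cannot be derived from these numbers.

```python
def implied_rates(words: float, seconds: float, ops_per_second: float) -> tuple[float, float]:
    """Return (words per second, operations per word) implied by the demo figures."""
    words_per_second = words / seconds
    # Total operations in the run, spread evenly over every word (assumes peak rate throughout).
    ops_per_word = ops_per_second * seconds / words
    return words_per_second, ops_per_word


wps, opw = implied_rates(words=3e9, seconds=0.1, ops_per_second=1e18)
print(f"{wps:.1e} words/s, {opw:.1e} ops/word")  # 3.0e+10 words/s, 3.3e+07 ops/word
```

In other words, the quoted figures correspond to roughly 30 billion words per second and on the order of 33 million operations spent per word.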