How we launched robots in a miniature Chernobyl. Part 1

The birth of the Remote Reality concept

The story of our “crazy” project began three years ago. While we were thinking about where the gaming industry was heading, my friend Lesha said: “Imagine a future in which people anywhere in the world, just for fun, control real robots on a game arena, like avatars.”

At first the idea seemed quite interesting to us and not difficult to implement. We immediately started looking for similar projects and were surprised to find that nobody had done anything like it. That seemed strange, because the idea was literally lying on the surface. We found plenty of traces of amateur prototypes, usually an Arduino-based chassis with a camera, but nobody had carried a project through to completion. Later, after overcoming seemingly endless difficulties and problems, we understood why there were no analogues, but at first the idea seemed extremely simple and quick to implement.

We spent the next week developing the concept. We imagined dozens of kinds of robots with different capabilities and hundreds of game arenas, between which players could instantly move through a "teleport". Anyone who wanted to could use our “solution” to build their own game arena of any scale.

We immediately decided that these ideas fit the concept of an entertainment attraction better than that of a computer game. People love entertainment and want something new, and we knew what to offer them. As in any business, the question of payback came up at once, because at first glance our physical model seems limited by the number of robots. But once we multiplied the number of robots by 24 hours a day and a price of 5-10 dollars per hour, the doubts disappeared. The financial model was no Klondike, but it paid off even at 10% utilization.
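
The payback reasoning above is simple arithmetic. Here it is as a rough Python sketch: the fleet of 20 robots is borrowed from the number planned later in this article, only gross revenue is computed, and costs such as electricity, repairs and staff are deliberately left out, so treat it as an illustration rather than our actual financial model.

```python
# Hypothetical inputs: the article gives "24 hours", "5-10 dollars per hour"
# and "10% load"; the fleet of 20 robots is the number planned later on.
HOURS_PER_DAY = 24


def daily_revenue(robots: int, price_per_hour: float, utilization: float) -> float:
    """Gross revenue per day when each robot is rented for `utilization` of the day."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return robots * HOURS_PER_DAY * price_per_hour * utilization


if __name__ == "__main__":
    for price in (5, 10):
        # 10% load means roughly 2.4 paid hours per robot per day
        print(f"${price}/h at 10% load: ${daily_revenue(20, price, 0.10):.2f} per day")
```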

Very quickly, a name for the new concept came to mind: Remote Reality, by analogy with Virtual Reality and Augmented Reality.

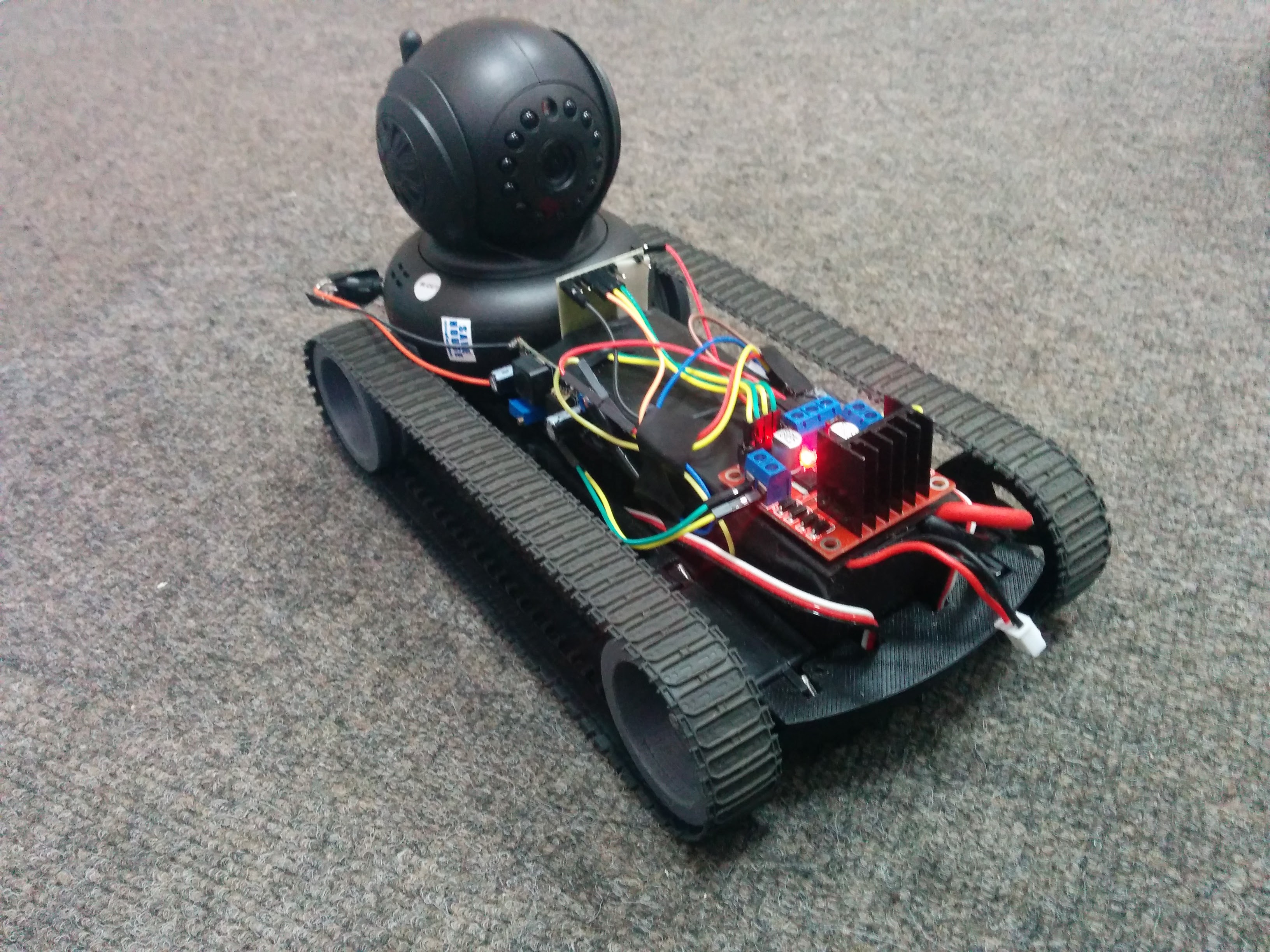

Like the other "experimenters", we started by taking a radio-controlled car, putting a Chinese Wi-Fi camera on it and installing an Arduino board, and our robot "drove". We asked a friend in the USA to connect to the car over the Internet. He was able to drive around our office, and we were delighted. Control and video delays of several seconds did not seem like a problem to us.

From that moment on, we split our work into two areas:

- modeling and building the game arena

- designing and building the robots and the control systems

Gullivers in the city of Pripyat

I’ll start my story with the arena. We understood that people had to play somewhere. The location had to be world famous, “mysterious” and technically simple to build. After going through many options, we suddenly hit on the idea of Chernobyl. The Chernobyl zone met all our requirements, and most importantly, any future breakdowns and damage to the arena could be written off as post-apocalyptic decay.

Having found a 200-square-meter room, we set to work, and the work eventually lasted two years. We painted the streets and the house textures and created 3D models of the buildings, including the interior floors. Then we cut everything out of particleboard and plywood and assembled the buildings from hundreds of different parts.

We tried to recreate all the textures of Pripyat as accurately as possible, "spying" on Google Maps. Of course, the size of the room did not allow us to reproduce everything exactly, and we did not want to lose details, so, for example, we had to move the Chernobyl NPP closer to Pripyat.

It is hard to count how many hundreds of boards, dozens of sheets of plywood and fiberboard, and other "consumables" we used. For the last three months we literally crawled on all fours with brushes and paint, decorating houses and floors. We wanted maximum detail. The city was built at a scale of 1:16, and the nine-story buildings came up to roughly an adult’s chest. Walking through this city, we felt like real giants.
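
Scale-model dimensions are easy to sanity-check. In this sketch the 1:16 scale is ours, while the 2.8 m floor-to-floor height is an assumed typical value, not a measurement; the area conversion also assumes, hypothetically, that the entire 200 m² room were filled with the city.

```python
# Scale factor from the article (1:16). FLOOR_HEIGHT_M is an assumed typical
# floor-to-floor height for a Soviet nine-story block, not a measured value.
SCALE = 16
FLOOR_HEIGHT_M = 2.8


def to_model_length(real_m: float, scale: int = SCALE) -> float:
    """Linear dimensions shrink by the scale factor."""
    return real_m / scale


def to_real_area(model_m2: float, scale: int = SCALE) -> float:
    """Areas grow by the square of the scale factor when going back to full size."""
    return model_m2 * scale ** 2


if __name__ == "__main__":
    building_m = 9 * FLOOR_HEIGHT_M
    print(f"Nine-story block: {building_m:.1f} m real, "
          f"{to_model_length(building_m):.2f} m in the model")
    print(f"200 m^2 of floor corresponds to {to_real_area(200):.0f} m^2 at full size")
```

With these assumptions a nine-story block comes out at about 1.6 m, in the same ballpark as the chest-high figure, and 200 m² of model corresponds to roughly 5 hectares of real city.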

A little bit about us

Now it's probably time to talk about our team. Initially, there were just the two of us, friends and engineers. Thinking about the project, we understood that it would be difficult to find an investor for such an “adventurous” idea, so we decided to do everything with our own money. Many people helped us along the way: some for free, others we hired.

A good example of teamwork was the story of 3D printing. We assembled our own printer and printed the parts ourselves at first, until we concluded that you cannot be an expert in everything. Printing took us a lot of time, some of the parts were large, and an unexpected defect near the end of a print could ruin all our plans. In the end we found a dedicated 3D printing specialist, who later joined our team. Sharing our dream, he helped us make the robot bodies for just the cost of the plastic.

When building the robots, we could not do without a lathe operator, and one of our friends helped us with that. Construction work on the arena often required non-standard and complex solutions, and we were very lucky to meet people who actively helped us with these tasks too.

The project was also very lucky to have its designer and artist; his talent was invaluable.

To save as much as possible on building the game arena, we had to do almost everything ourselves. But besides the large arena, there was also the technical side...

Defeating video delay

You are probably more interested in the engineering side of the project than in our “urban planning”.

Let us return to the moment when, as you remember, we put a camera on the “cart” and were able to drive it. Next, it was time to choose the hardware and technology for our robots. Here the first surprise awaited us: after going through a dozen cameras, we could not get the delay low enough for controlling a robot over the Internet to be comfortable. Everything was complicated by how long it took to order camera samples from China and test them.

We wanted the robot control system to run entirely in the browser, without any “download our wonderful client” and without outdated Flash players. This significantly narrowed the list of suitable technologies and of cameras that support them. We experimented for a long time with streaming video in MJPEG format, but in the end we abandoned the idea. These experiments cost us half a year. We even fully assembled the first five robots and launched an open test for everyone, but...

Live tests showed that the router could not handle the huge MJPEG video streams from several robots over the air, no matter how we tried to optimize the picture resolution. The video stream from a single robot could not be brought below 20-30 Mbit/s, which made stable simultaneous operation of the 20 robots we had planned impossible. We also could not find a ready-made solution for transmitting sound without delay. So we had to search again for a technology suited to our tasks.
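
Why 20-30 Mbit/s per robot is a dead end becomes obvious once you multiply it out. The sketch below uses our per-robot figures and the 20 planned robots; `USABLE_AIRTIME_MBPS` is a hypothetical estimate of real shared Wi-Fi throughput, which in practice is well below a router's advertised link rate.

```python
# Per-robot bitrates are the article's 20-30 Mbit/s MJPEG figures.
# USABLE_AIRTIME_MBPS is a hypothetical estimate of real-world Wi-Fi throughput,
# which is typically far below the router's advertised link rate.
USABLE_AIRTIME_MBPS = 100.0


def required_mbps(robots: int, per_robot_mbps: float) -> float:
    """Total bandwidth needed if every robot streams at the same bitrate."""
    return robots * per_robot_mbps


def max_robots(per_robot_mbps: float, capacity_mbps: float = USABLE_AIRTIME_MBPS) -> int:
    """How many streams of this bitrate fit into the available capacity."""
    return int(capacity_mbps // per_robot_mbps)


if __name__ == "__main__":
    for per_robot in (20.0, 30.0):
        print(f"{per_robot:.0f} Mbit/s per robot: 20 robots need "
              f"{required_mbps(20, per_robot):.0f} Mbit/s, "
              f"only {max_robots(per_robot)} fit into {USABLE_AIRTIME_MBPS:.0f} Mbit/s")
```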

In the end, we settled on WebRTC. It gave us video and audio transmission with a delay of only 0.2 seconds.

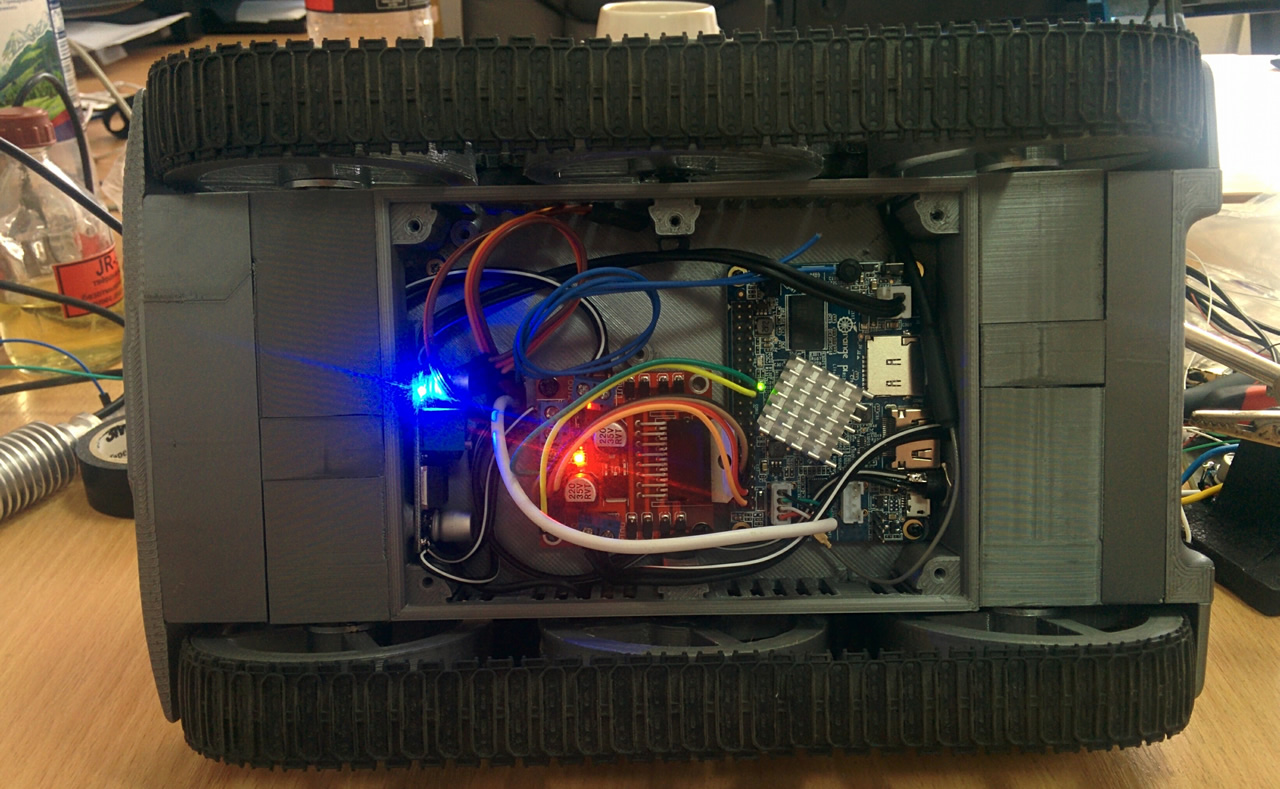

Then it was time to model and assemble the robots. To depend less on external suppliers, we 3D-printed all the parts of our bots. This let us make the robot as compact as possible and place all the electronics and powerful batteries optimally inside.

Power system

The next question was power supply, because we really wanted to change batteries as rarely as possible. After going through many off-the-shelf options, we settled on our own battery pack, assembled from Panasonic 18650B cells. A pack voltage of 17 volts and a capacity of 6800 mAh let our robots drive for 10-12 hours on a single charge.
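
We don't spell out the pack layout here, so below is a hedged back-of-the-envelope check. Assuming about 3.6 V nominal and about 3400 mAh per NCR18650B cell, 17 V and 6800 mAh point to five cells in series and two in parallel (5S2P), which is consistent with the five cells mentioned in the charging paragraph. The helper names are illustrative.

```python
import math

# Assumed cell figures: ~3.6 V nominal (typical Li-ion) and ~3400 mAh
# (the typical capacity usually quoted for the Panasonic NCR18650B).
CELL_NOMINAL_V = 3.6
CELL_CAPACITY_MAH = 3400


def pack_layout(target_v: float, target_mah: float) -> tuple[int, int]:
    """Return (cells in series, cells in parallel) closest to the target pack."""
    series = round(target_v / CELL_NOMINAL_V)
    parallel = math.ceil(target_mah / CELL_CAPACITY_MAH)
    return series, parallel


def pack_energy_wh(series: int, parallel: int) -> float:
    """Nominal pack energy: voltage * capacity, converted from mWh to Wh."""
    return series * CELL_NOMINAL_V * parallel * CELL_CAPACITY_MAH / 1000


def average_power_w(energy_wh: float, runtime_h: float) -> float:
    """Mean power draw that would empty the pack in `runtime_h` hours."""
    return energy_wh / runtime_h


if __name__ == "__main__":
    s, p = pack_layout(17, 6800)
    energy = pack_energy_wh(s, p)
    print(f"{s}S{p}P, {s * p} cells, about {energy:.0f} Wh")
    for hours in (10, 12):
        print(f"{hours} h runtime -> about {average_power_w(energy, hours):.1f} W average")
```

The result is nominal energy; with a 3.2 V per-cell cutoff the usable share is somewhat lower, so the real average draw over 10-12 hours would be a bit below these figures.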

During the experiments we successfully “killed” a hundred cells, because we wanted to use as much of their capacity as possible, the voltage dropped very quickly at the end of discharge, and our simple voltage indicator, built on a voltage divider, did not always give accurate readings. In the end, we raised the minimum allowable cell voltage from 2.5 volts to 3.2 volts and added a chip for precise voltage monitoring, and the Panasonic “deaths” stopped.
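
A minimal sketch of a divider-based voltage monitor with the raised cutoff. The resistor values, the 10-bit ADC and the 3.3 V reference are hypothetical, chosen so that a fully charged 5-cell pack (about 21 V) stays below the ADC reference; the 3.2 V threshold is the one from the text.

```python
# Hypothetical hardware: 120 kOhm / 18 kOhm divider into a 10-bit ADC with a
# 3.3 V reference, and a 5-cell series pack (an assumption, see the charging section).
R_TOP_OHM = 120_000
R_BOTTOM_OHM = 18_000
ADC_MAX = 1023
V_REF = 3.3
CELLS_IN_SERIES = 5
MIN_CELL_V = 3.2  # the article's raised cutoff (previously 2.5 V)


def adc_to_pack_voltage(raw: int) -> float:
    """Undo the divider: V_pack = V_pin * (R_top + R_bottom) / R_bottom."""
    v_pin = raw / ADC_MAX * V_REF
    return v_pin * (R_TOP_OHM + R_BOTTOM_OHM) / R_BOTTOM_OHM


def should_stop(pack_v: float, cells: int = CELLS_IN_SERIES,
                min_cell_v: float = MIN_CELL_V) -> bool:
    """Cut off when the *average* cell voltage reaches the threshold.

    A divider only sees the whole pack, so one weak cell can already be below
    the threshold while the average still looks fine.
    """
    return pack_v / cells <= min_cell_v


if __name__ == "__main__":
    for raw in (840, 640):  # hypothetical ADC readings
        pack_v = adc_to_pack_voltage(raw)
        print(f"raw {raw}: {pack_v:.2f} V pack, stop: {should_stop(pack_v)}")
```

The limitation noted in `should_stop` is inherent to any whole-pack measurement: it reports the average cell, not the weakest one.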

As chargers, we chose iMax B6 units, which are popular among modelers and can charge in cell-balancing mode. We “killed” some of the batteries because of poor calibration of Chinese clones of the iMax B6. We connected five cells and charged them in balancing mode. At the end of the charge, only the total pack voltage was checked, not each cell separately, but in fact one cell was not fully charged, and it "died" first.
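
This failure mode is easy to reproduce on paper: a check on the total pack voltage can pass while one cell is undercharged. The voltages in this sketch are illustrative, not readings from our chargers, and the 4.2 V full-charge target with a 0.05 V tolerance is an assumption.

```python
# Voltages below are illustrative, not measurements from the article.
FULL_CELL_V = 4.2
TOLERANCE_V = 0.05


def pack_looks_full(cells: list[float]) -> bool:
    """What a total-voltage check sees: only the sum of all cells."""
    return sum(cells) >= len(cells) * (FULL_CELL_V - TOLERANCE_V)


def every_cell_full(cells: list[float]) -> bool:
    """A per-cell check: each cell must individually reach the target."""
    return all(v >= FULL_CELL_V - TOLERANCE_V for v in cells)


def weakest_cell(cells: list[float]) -> tuple[int, float]:
    """Index and voltage of the lowest cell, the one that will 'die' first."""
    index = min(range(len(cells)), key=cells.__getitem__)
    return index, cells[index]


if __name__ == "__main__":
    # Four slightly overcharged cells hide one undercharged cell in the total.
    pack = [4.22, 4.22, 4.22, 4.22, 4.00]
    print(f"total {sum(pack):.2f} V, looks full: {pack_looks_full(pack)}")
    print(f"every cell full: {every_cell_full(pack)}, weakest: {weakest_cell(pack)}")
```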

Motors for robots

Many of you have probably wondered: why 17 volts? The answer lies in the motors. Motors were the second part of our “Chinese torment”, after the cameras. We tried many different motors. To our horror, almost all of them had a short service life and failed quickly. After 3-4 months of experiments we managed to find a manufacturer of motors that were “normal” in terms of reliability, but there was still no final solution.

In an ordinary car, the transmission plays a key role in transferring power from the engine to the wheels. We did not have one. By lowering the voltage on the motors, we did reduce the robot's speed, but it also lost power, and our “tanks” could not turn slowly. We soon solved this problem.
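
To see why lowering the voltage cost us power, it helps to look at the standard steady-state model of a brushed DC motor, where stall torque is k_t·V/R and no-load speed is V/k_e. The sketch below compares halving the voltage with a hypothetical 2:1 gearbox at full voltage; the motor constants are made up, and this illustrates the physics rather than how we eventually solved the problem.

```python
from dataclasses import dataclass


@dataclass
class BrushedDCMotor:
    """Steady-state model of a permanent-magnet DC motor (no friction, no inductance)."""
    k_t: float    # torque constant, N*m/A
    k_e: float    # back-EMF constant, V*s/rad (numerically equal to k_t in SI units)
    r_ohm: float  # winding resistance

    def stall_torque(self, volts: float) -> float:
        # At zero speed there is no back-EMF, so I = V / R and torque = k_t * I.
        return self.k_t * volts / self.r_ohm

    def no_load_speed(self, volts: float) -> float:
        # With no load, speed rises until back-EMF k_e * w equals the supply voltage.
        return volts / self.k_e


def at_wheel(motor: BrushedDCMotor, volts: float, gear_ratio: float = 1.0) -> tuple[float, float]:
    """(max torque N*m, top speed rad/s) at the wheel, assuming an ideal lossless gearbox."""
    return motor.stall_torque(volts) * gear_ratio, motor.no_load_speed(volts) / gear_ratio


if __name__ == "__main__":
    motor = BrushedDCMotor(k_t=0.02, k_e=0.02, r_ohm=2.0)  # hypothetical constants
    for label, volts, ratio in (("17 V, direct", 17.0, 1.0),
                                ("8.5 V, direct", 8.5, 1.0),
                                ("17 V, 2:1 gear", 17.0, 2.0)):
        torque, speed = at_wheel(motor, volts, ratio)
        print(f"{label}: {torque:.3f} N*m, {speed:.0f} rad/s")
```

Both options halve the top speed, but the geared version keeps four times the torque of the low-voltage one, which is exactly what a slowly turning robot needs.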

Oops, I've let the word slip: "tanks" ("tanchiki").

Why "tanks"?

Why "tanks" exactly? The answer is simple. If you add an unknown Internet channel delay to the camera delay, then someone in Australia will only be able to comfortably control something relatively slow. That was the first argument in favor of tanks. The second argument, which finally convinced us, was control comfort. People are used to pressing the right “arrow” and expecting the robot to turn right, and that was impossible without tracks, because only tanks turn “in place”. We were also pleased about the expected “super off-road ability”. Having ordered a box of rubber tracks from China, we started printing road wheels for the tracks.
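
The "something relatively slow" argument can be made concrete: the robot keeps moving for the whole time it takes a command to arrive and the resulting video to come back. In this sketch the 0.2 s video figure is the WebRTC delay mentioned earlier, while the network round-trip times and robot speeds are hypothetical.

```python
def blind_distance_m(speed_m_s: float, video_pipeline_s: float, network_rtt_s: float) -> float:
    """Distance the robot covers before the operator sees the result of a command.

    The command travels to the robot (about half an RTT), the video travels back
    (the other half), plus capture/encode/decode time in the video pipeline.
    """
    return speed_m_s * (network_rtt_s + video_pipeline_s)


if __name__ == "__main__":
    video_s = 0.2  # WebRTC delay from the article
    for label, rtt_s in (("nearby player", 0.05), ("player in Australia", 0.3)):  # hypothetical RTTs
        for speed in (0.3, 1.0):  # hypothetical model speeds, m/s
            d = blind_distance_m(speed, video_s, rtt_s)
            print(f"{label}, {speed} m/s: {d:.2f} m blind, about {d * 16:.1f} m at 1:16 real scale")
```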

The very first tests smashed our dreams to pieces: the tracks often came off when the tank hit low obstacles. After studying the basics of tank mechanics and trying different tensioners and auxiliary wheels, we still could not solve the problem. We had to give up the tracks. Since the robots were already printed and assembled, we needed a quick and simple solution, and there was one: good wheels with rubber treads. And how do you turn in place, you ask? We got around that by linking the two axles together with a thin 3D printer belt. In the end we had a wheeled robot with all-wheel drive that could turn in place.
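
Turning on the spot with a wheeled robot works the same way as with tracks: skid steering, where each side is driven independently. A minimal sketch, assuming one motor (or motor group) per side and commands normalized to [-1, 1]; the function names are illustrative and not taken from our firmware.

```python
def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    """Keep a motor command inside the allowed range."""
    return max(low, min(high, value))


def arrow_to_sides(key: str, speed: float = 1.0) -> tuple[float, float]:
    """Map an arrow key to (left side, right side) motor commands in [-1, 1]."""
    commands = {
        "up": (speed, speed),
        "down": (-speed, -speed),
        # Opposite directions on each side rotate the robot on the spot.
        "left": (-speed, speed),
        "right": (speed, -speed),
    }
    return commands.get(key, (0.0, 0.0))  # unknown key: stop


def mix(throttle: float, turn: float) -> tuple[float, float]:
    """Blend forward motion and turning; positive turn means turning right."""
    return clamp(throttle + turn), clamp(throttle - turn)


if __name__ == "__main__":
    print(arrow_to_sides("right"))
    print(mix(0.5, 0.25))
```

Simple clamping means that at full throttle part of the turn command is lost; scaling both sides down proportionally instead would preserve the ratio between them.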

The robot's heart

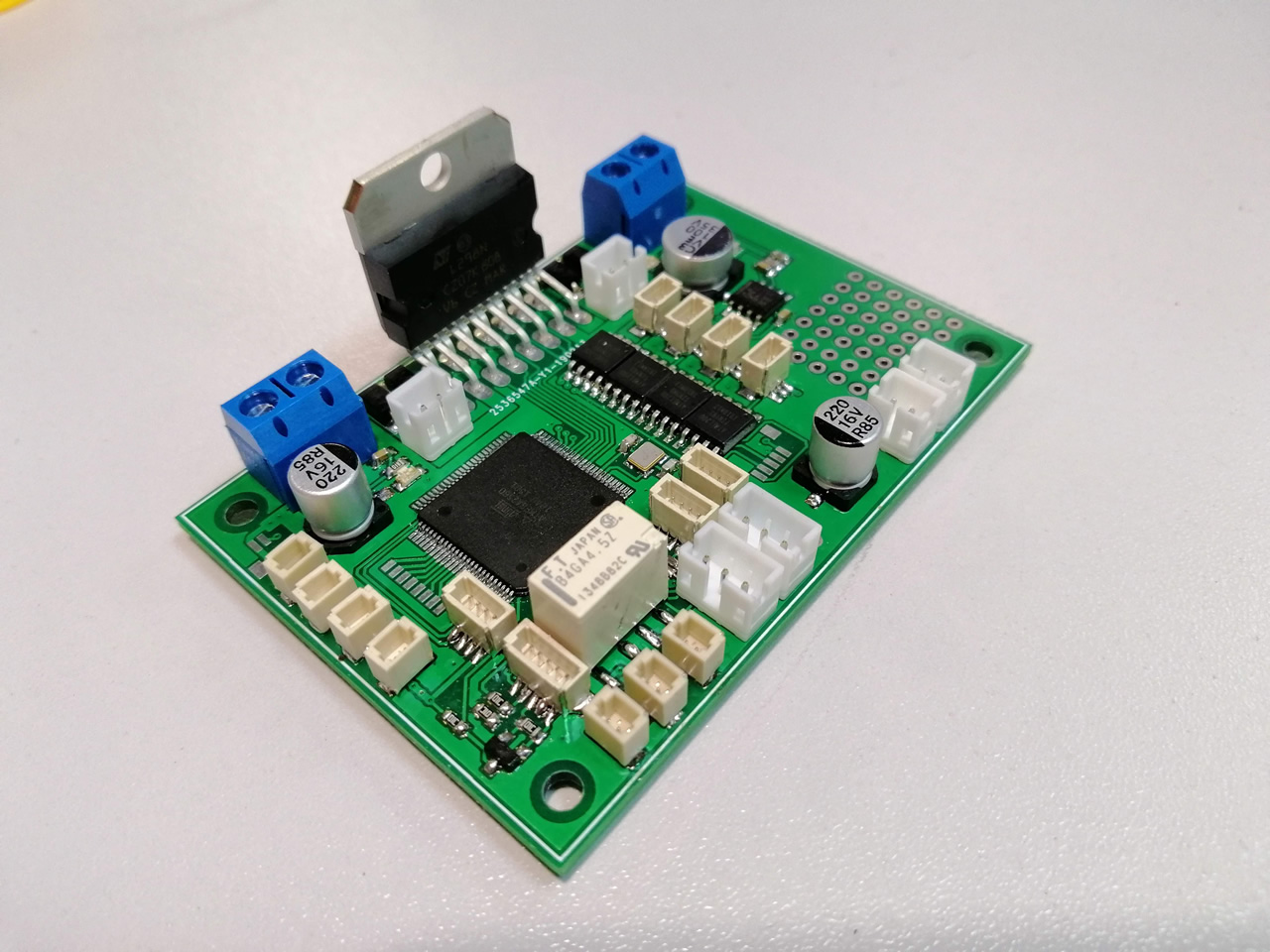

We have already covered most of our robot's components but have said nothing about the most important one.

Our bot was built around a Raspberry Pi single-board computer running Linux, with custom software that lets the robot communicate with the server. The Raspberry Pi works together with our own monitoring and control board. The board includes a microcontroller, a motor driver, chips for processing signals from various sensors, and a module for precise battery voltage monitoring. For ease of assembly, we put every peripheral connection on its own connector.
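
We haven't described how the Raspberry Pi talks to the control board, so the following is only a hypothetical example of a common pattern: small fixed-size binary frames with a start byte and a checksum, sent over a serial link. The frame layout, command id and function names are all assumptions.

```python
import struct

# Hypothetical frame layout (the article does not describe its protocol):
# [0xAA start][command id][left speed int8][right speed int8][XOR checksum]
START_BYTE = 0xAA
CMD_DRIVE = 0x01
BODY_FORMAT = "<Bbb"  # unsigned command id, two signed speeds


def xor_checksum(data: bytes) -> int:
    """XOR of all bytes: cheap to compute on a microcontroller."""
    checksum = 0
    for byte in data:
        checksum ^= byte
    return checksum


def encode_drive(left: int, right: int) -> bytes:
    """Build a drive command; speeds are percentages from -100 to 100."""
    for speed in (left, right):
        if not -100 <= speed <= 100:
            raise ValueError(f"speed out of range: {speed}")
    body = struct.pack(BODY_FORMAT, CMD_DRIVE, left, right)
    return bytes([START_BYTE]) + body + bytes([xor_checksum(body)])


def decode_frame(frame: bytes) -> tuple[int, int, int]:
    """Validate a frame and return (command id, left, right)."""
    if len(frame) != 5 or frame[0] != START_BYTE:
        raise ValueError("malformed frame")
    body, received = frame[1:4], frame[4]
    if xor_checksum(body) != received:
        raise ValueError("checksum mismatch")
    return struct.unpack(BODY_FORMAT, body)


if __name__ == "__main__":
    frame = encode_drive(60, -60)  # turn in place to the right
    print(frame.hex(" "), decode_frame(frame))
```

On the Pi, such a frame could be written to the UART with, for example, pyserial's `serial.Serial(port, baudrate).write(frame)`; the microcontroller would parse it with the same rules.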

As I mentioned earlier, we often had to change components when we ran into unforeseen problems. This time was no exception. Initially, we built the first robots on the Orange Pi to save money. Later we had to replace them with the Raspberry Pi 2 B. But that was not the end: we soon had to replace it again with the Raspberry Pi 3 B+, which has a built-in 5 GHz Wi-Fi module. But more on that later.

Wi-Fi setup

The next problem awaiting us was the Wi-Fi radio channel. We only discovered it when we started testing 10 robots moving at once. Our arena was in an enclosed basement, and the multipath reflections from the reinforced concrete walls were simply terrible. Control commands got through fine, but the video stream lagged badly whenever one of the robots drove into a far corner of the room.

Switching from 2.4 GHz to 5 GHz helped us cope with channel congestion. But the difficulties did not end there. If a robot drove around a corner, the signal dropped below -80 dBm and the lag began. We finally solved the problem by installing a sector antenna with diversity reception and raising the transmitter power to half a watt. Of course, we had to pick a router from the business segment, with a powerful processor.
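
The numbers in this paragraph fit into a simple link budget. Half a watt is about 27 dBm. In the sketch below the -80 dBm threshold and the 0.5 W figure come from the text, while the 0.1 W comparison value, the zero antenna gains and the 102 dB path loss are hypothetical.

```python
import math

# Link-budget sketch. Only the 0.5 W transmit power and the -80 dBm threshold come
# from the article; the other numbers are hypothetical.
RSSI_THRESHOLD_DBM = -80.0


def watts_to_dbm(watts: float) -> float:
    """Power in dBm is 10*log10 of power in milliwatts."""
    return 10 * math.log10(watts * 1000)


def received_dbm(tx_dbm: float, tx_gain_dbi: float, rx_gain_dbi: float,
                 path_loss_db: float) -> float:
    """Simple link budget: transmit power plus antenna gains minus path loss."""
    return tx_dbm + tx_gain_dbi + rx_gain_dbi - path_loss_db


def link_ok(rssi_dbm: float, threshold_dbm: float = RSSI_THRESHOLD_DBM) -> bool:
    """True while the received signal stays at or above the lag threshold."""
    return rssi_dbm >= threshold_dbm


if __name__ == "__main__":
    path_loss_db = 102.0  # hypothetical loss "around the corner" in a concrete basement
    for label, watts in (("0.1 W", 0.1), ("0.5 W", 0.5)):
        rssi = received_dbm(watts_to_dbm(watts), 0.0, 0.0, path_loss_db)
        print(f"{label}: {watts_to_dbm(watts):.1f} dBm out, {rssi:.1f} dBm received, ok={link_ok(rssi)}")
```

Transmit power only strengthens the direction it transmits in, whereas antenna gain, like the sector antenna's, applies to both directions of the link, which matters because the video travels from the robot to the router.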

It is worth mentioning that instead of increasing the power, we first spent a long time trying to set up “seamless” roaming based on Ubiquiti equipment, but alas, the Wi-Fi module we needed “refused” to support it, even though an iPhone worked perfectly, moving between several access points.

Having built our first ten robots and launched the monitoring and control server, in November 2018 we launched the Isotopium Chernobyl project on Kickstarter. We had no idea that tens of thousands of people would soon try our game.

Read about what happened next, and why we almost shut the project down, in our next article: Online game with RC models controlled via the Internet