Texturing, or what you need to know to become a Surface Artist. Part 1. Pixel


What is this series of lessons about?

In this series of articles, I will try to cover as much of the theory of creating textures for the games industry as possible. I will start from the very concept of a “pixel” and end with building complex materials (shaders) in a game engine, using Unreal Engine 4 as the example.

Part 1. Pixel (you are reading it).

Part 2. Masks and textures: here.

Part 3. PBR and materials: here.

Part 4. Models, normals and UV unwrapping: here.

Part 5. Material system: here.

I will try to cover programs such as Windows Paint, Photoshop, Substance Painter, Substance Designer and possibly Quixel. I don’t really see the point of covering Quixel: after reading all the articles, readers should have a complete understanding of how to work with textures, and Quixel will feel intuitive.

I will try to analyze concepts such as PBR, masks and various types of textures in as much detail as possible.

All of this will be covered from the lowest, most basic level, for complete beginners and for those who have never dealt with the subject. The goal is that after reading these articles, the reader has no questions left and understands as fully as possible how it all works. They should then be able to start working confidently with textures and shaders in any software, since they all share one foundation (base, essence).

I am not perfect, and I do not claim to know this topic inside out. I started writing this article to help my friends get into the beautiful world of texturing without my direct help. That way they can open the articles at any time, read them, understand how things work and know what to do. I will be grateful to all of you if you help me fill out this tutorial, so that we can all point the right people to these articles and help them get into this field quickly. I sincerely ask everyone who cares about this topic, and about teaching it, to help me in the comments with corrections or suggestions if I miss something or make a mistake.

I ask everyone who can come up with other examples that would make a section easier to understand to share them in the comments, so that I can add them to the article. What if my examples are not enough to fully understand the basics?

So guys, let's go =)

Part 1. Pixel

What is a pixel?

The term “pixel” is used both for the physical element of a display matrix and for the smallest colored point of an image. Many such points together form the image itself.

The same term is used in both cases simply because, in general, this element works the same way in monitors and in images, with slight differences. So, to begin with, let's look at how a pixel works on a monitor.

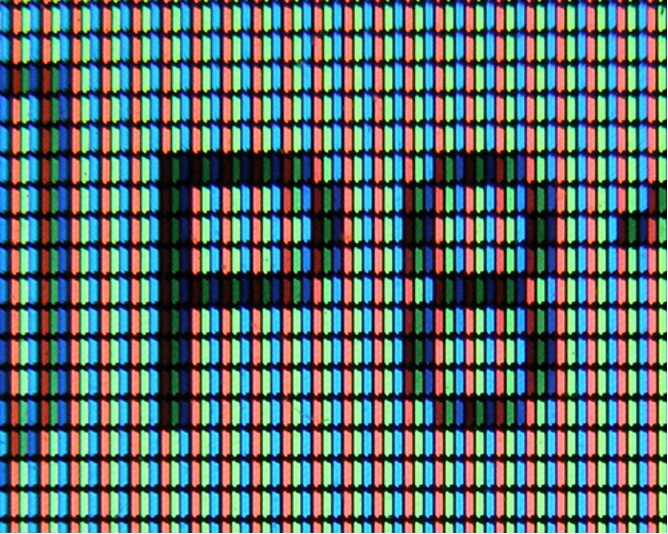

Pixel and Monitors

(The description of how pixels work below is simplified and does not describe the actual physics of LCD monitors.)

In a monitor, a pixel is a physical element made of 3 light-emitting elements of 3 colors: Red, Green and Blue. The intensity (luminosity) of each element determines the color of the pixel. For example, suppose the green and blue elements stop glowing completely and only the red one stays on. The screen then shows a red dot (a red pixel). If you get physically as close to the monitor as possible, you can see that this red dot has black space to its right: the two unlit elements.

Intensity Range and Pixel Color

Let's repeat this once more, because it is important. The color of a pixel is determined by 3 light elements: red, green and blue. The resulting color depends on how brightly each of them glows.

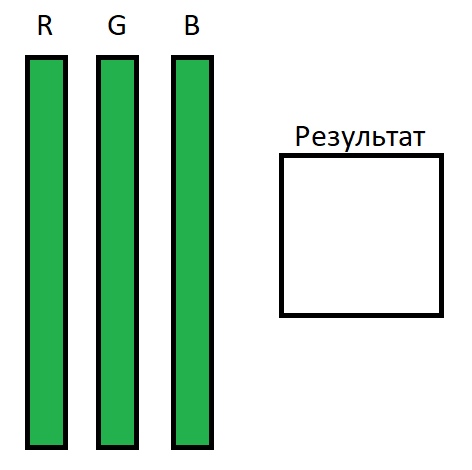

Now imagine this as an intensity scale for each element. The green fill shows the current intensity, and the square on the right shows the approximate color produced by that combination of intensities:

Imagine that the figure shows all the pixel's elements glowing at maximum intensity, which gives us a white pixel on the monitor. Accordingly, if we reduce the intensity of the Green and Blue elements to 0, we get a pure, bright red:

This brings us to a very important point: the intensity range.

The intensity range (let's call it that) is the range of states of an element, from its minimum (no glow at all) to its maximum (full brightness).

This can be expressed in many formats, for example:

- From 0% to 100%. The element can glow at half strength, in other words at 50%.

- From 0 to 6000 candles. If the element's maximum brightness (100%) is 6000 candles, then 75% power equals 4500 candles.

- From 0 to 255. 30% in this range equals 76.5.

- From 0 to 1. The same as 0% to 100%, but with 1 instead of 100. This is convenient for calculations, which we will definitely look at later.
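Here is a minimal Python sketch of converting one intensity between the representations listed above. It assumes the article's abstract 6000-candle maximum, and the function names are only illustrative. The normalized 0 to 1 value acts as the common "hub" between the formats.

```python
# Convert an intensity between the range formats from the list above.
# The 6000 "candle" maximum is the article's abstract example, not a real spec.

def from_percent(p: float) -> float:
    """0-100% -> normalized 0-1."""
    return p / 100.0

def to_candles(x: float, max_candles: float = 6000.0) -> float:
    """Normalized 0-1 -> abstract 'candles' for a given maximum brightness."""
    return x * max_candles

def to_byte_range(x: float) -> float:
    """Normalized 0-1 -> 0-255 scale (not yet rounded to an integer)."""
    return x * 255.0

half = from_percent(50)
print(half)                  # 0.5
print(to_candles(0.75))      # 4500.0
print(to_byte_range(0.30))   # about 76.5
```

Going through 0 to 1 means each new format only needs one conversion function, rather than one for every pair of formats.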

In our lessons, we will use the last version of the range representation, since it is convenient for calculations, as we will see later.

Off-topic

In reality (outside our conventions), monitor brightness (luminance) is measured in candelas per square meter (cd/m², also called nits). "Candela" is Latin for “candle”. Internationally, the unit is abbreviated cd (in Russian, кд). In this tutorial, to stress that these values are only a convention, I will keep using the word “candle”.

Special thanks to Vitter for the correction.


Now add intensity ranges to our scale and get the following picture:

Now we can see that R = 1, G = 0.55-0.60 and B = 0. The result is roughly the orange color that the pixels show on the monitor. Get physically as close to the monitor as possible and look at the pixels in the result square. You will not see the blue element in them, since it is completely turned off (its intensity is 0).

It is important to understand that the brightness level itself can differ greatly from monitor to monitor. It depends on the matrix manufacturer, the assembly and some other parameters.

For example, the maximum pixel brightness of a monitor matrix might be:

- Samsung: 6,000 candles.

- LG: 5,800 candles.

- HP: 12,000 candles.

These are abstract figures that have nothing to do with reality. The point is that each monitor has its own maximum intensity, but the intensity range is always the same: from 0 to 1. So when you set the red element's intensity to 1, it glows as brightly as that monitor can, because 1 = maximum.
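The sketch below shows that the 0 to 1 range stays the same while the maximum differs between monitors. It uses the article's made-up maxima, and the dictionary and function names are hypothetical. Real monitors are rated in cd/m² and apply a non-linear (gamma) response, which this sketch ignores.

```python
# Abstract maximum brightness per monitor, taken from the article's made-up figures.
MONITOR_MAX_CANDLES = {"Samsung": 6000.0, "LG": 5800.0, "HP": 12000.0}

def emitted(intensity: float, monitor: str) -> float:
    """Same normalized intensity, different physical output per monitor (linear model)."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be in the 0-1 range")
    return intensity * MONITOR_MAX_CANDLES[monitor]

for name in MONITOR_MAX_CANDLES:
    # 1.0 always means "as bright as this monitor can go".
    print(name, emitted(1.0, name), emitted(0.5, name))
```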

Now we have a full picture of how color is built on a monitor: millions of pixels adjust the intensity of their elements so that together they produce the desired color. Suppose you are reading this text as black letters on a white background. The letters are drawn by pixels that turn their glow off completely, while the white background consists of pixels that turn all their elements up to maximum intensity.

If you dig deeper, you will find that a pixel has 2 intensity ranges. The first is the intensity range of each element. The second is the overall range that sets the brightness of the whole monitor: for example, monitor brightness is lowered in the dark and raised when the surroundings are very bright.

Screen Resolution and Pixel Sizes

So, having understood how color is built from pixels, we understand how an image is formed on the monitor. But what size is a pixel, and why does size matter?

The smaller the pixels, the more of them fit on a screen of a given size. The number of pixels itself is set by the screen resolution.

For example, a screen with a resolution of 1920x1080 contains 2,073,600 pixels. The screen is 1920 pixels wide and 1080 pixels high, and multiplying these two values gives the area (number) of pixels.

So the physical size of a pixel depends on the monitor's diagonal and its resolution. On a 19-inch monitor with a resolution of 1920x1080, a pixel is smaller than on a 24-inch monitor with the same resolution. And on a 24-inch monitor with a resolution of 2560x1440, the pixels are smaller than on the 24-inch 1920x1080 monitor.
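The pixel-size comparison can be checked with a little arithmetic. This sketch assumes square pixels and that the quoted diagonal is the visible screen area. It works out the diagonal in pixels, divides by the diagonal in inches to get pixels per inch (PPI), and converts that to the side length of one pixel in millimetres.

```python
import math

def pixel_count(width: int, height: int) -> int:
    """Total number of pixels (the 'area' of the screen in pixels)."""
    return width * height

def pixel_pitch_mm(width: int, height: int, diagonal_in: float) -> float:
    """Approximate side length of one square pixel, in millimetres."""
    diagonal_px = math.hypot(width, height)  # diagonal measured in pixels
    ppi = diagonal_px / diagonal_in          # pixels per inch
    return 25.4 / ppi                        # 1 inch = 25.4 mm

print(pixel_count(1920, 1080))  # 2073600
for w, h, d in [(1920, 1080, 19), (1920, 1080, 24), (2560, 1440, 24)]:
    print(f'{d}" {w}x{h}: {pixel_pitch_mm(w, h, d):.3f} mm per pixel')
```

The output follows the same order as the text. The 19-inch 1920x1080 screen has smaller pixels than the 24-inch one, and the 24-inch 2560x1440 screen has the smallest pixels of the two 24-inch monitors.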

Summary

We have an idea of how the color of pixels is formed on a monitor.

We have an idea of pixel size, and we know that it varies from monitor to monitor.

We understand that a 1920x1080 picture looks more detailed and sharper on a phone screen than on a desktop monitor, since the phone's pixels are smaller.

And in general, we understand how an image is formed on a monitor.

Pixel in Images

Once again: the term “pixel” is used both for the physical element of a display matrix and for the smallest colored point of an image. Many such points together form the image.

Formation of color

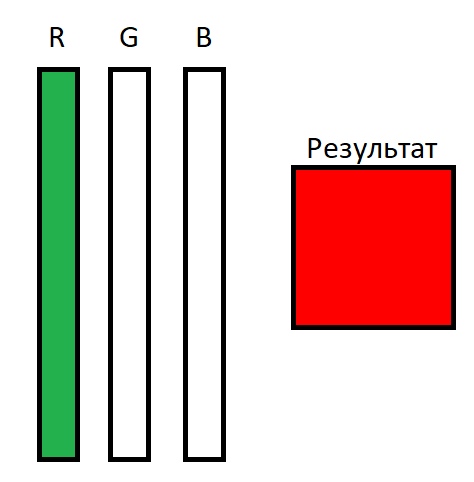

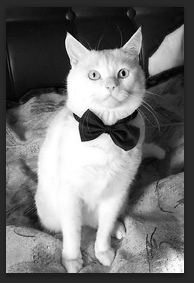

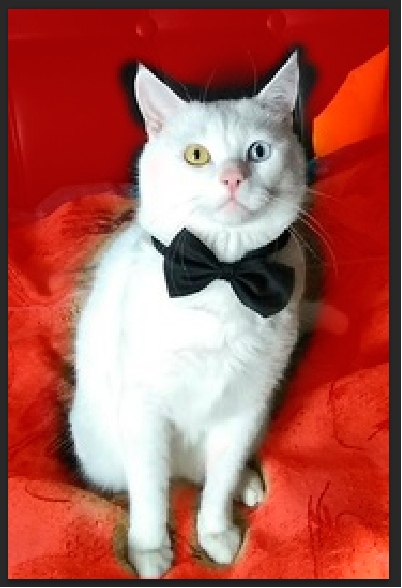

Let's look at an image, for example a picture of my cat:

This picture has a resolution of 178x266 pixels, so it consists of 47,348 pixels. On a 1920x1080 screen, it occupies only about 2.3 percent of the space. Or does it? Does this picture really occupy 47,348 physical pixels on your monitor? And what if you zoom the image out? When you shrink or enlarge an image, the number of pixels it consists of does not change. So the pixels of an image clearly mean something different from the pixels of a monitor. Yes and no.
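Here is a quick check of the numbers for the cat picture. It assumes the picture is displayed at 1:1 scale on a 1920x1080 screen:

```python
image_pixels = 178 * 266    # width x height of the cat picture
screen_pixels = 1920 * 1080  # assumed screen resolution
share = image_pixels / screen_pixels * 100

print(image_pixels)     # 47348
print(f"{share:.2f}%")  # 2.28%
```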

A pixel in an image is the smallest colored point from which the image is composed. The number of pixels in a picture is not tied to the monitor in any way; it depends on who (or what) created the picture. In this example, the pixel count depended on my clumsy hands: I cropped the photo at random and got a picture with that many pixels.

To make it easier to understand what a “pixel” is in an image, you should turn to the software implementation of this object.

A pixel in a computer image is a set of numbers. Roughly speaking, each such block (brick, square), for example one white square on the cat’s ear, takes up 32 bits. When the computer wants to display my cat on your monitor, it reads every pixel of the image (all 47,348 of them in turn) and displays them on the monitor. At 1:1 scale (1 image pixel equals 1 monitor pixel, in other words 100% scale), the image occupies exactly as many monitor pixels as it contains.

Each such pixel consists of 4 values (channels) of 8 bits each, 32 bits in total.

3 values store the intensities of Red, Green and Blue. If you remember how a pixel works in a monitor, it is immediately clear how these values affect the color.

1 value is given to transparency (more on that later).

(In these lessons we will consider only 32-bit images with 8-bit channels. Other formats are special cases that work by analogy.)

Each value (channel) is an integer from 0 to 255, that is, one of 256 possible values. This is exactly what 8 bits can encode (2^8 = 256).
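This minimal sketch shows how one 32-bit pixel can hold four 8-bit channels. The R-G-B-A byte order and the names pack_rgba and unpack_rgba are assumptions for illustration. Real file formats and graphics APIs use various orders, such as RGBA, BGRA or ARGB.

```python
def pack_rgba(r: int, g: int, b: int, a: int) -> int:
    """Pack four 8-bit channels into one 32-bit integer (R in the highest byte)."""
    for value in (r, g, b, a):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be an integer from 0 to 255")
    return (r << 24) | (g << 16) | (b << 8) | a

def unpack_rgba(pixel: int):
    """Split a 32-bit integer back into its four 8-bit channels."""
    return ((pixel >> 24) & 0xFF, (pixel >> 16) & 0xFF,
            (pixel >> 8) & 0xFF, pixel & 0xFF)

white = pack_rgba(255, 255, 255, 255)
print(hex(white))          # 0xffffffff
print(unpack_rgba(white))  # (255, 255, 255, 255)
print(2 ** 8)              # 256 possible values per 8-bit channel
```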

In other words:

If the green and blue channels are 0 and red is 255, the image pixel will be as red as possible.

If the green value is raised to 128 (roughly the middle of the range, about 0.5), the pixel will be orange, as in the monitor pixel example above.
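This small sketch moves between the 0-255 channel values and the 0 to 1 range used in these lessons. Dividing by 255 is the common convention. The helper names are illustrative, and to_byte rounds half up.

```python
def to_unit(value: int) -> float:
    """8-bit channel value (0-255) -> normalized intensity (0-1)."""
    return value / 255

def to_byte(x: float) -> int:
    """Normalized intensity (0-1) -> nearest 8-bit value, rounding half up."""
    return int(x * 255 + 0.5)

red = (255, 0, 0)
orange = (255, 128, 0)
print([round(to_unit(c), 3) for c in red])     # [1.0, 0.0, 0.0]
print([round(to_unit(c), 3) for c in orange])  # [1.0, 0.502, 0.0]
print(to_byte(0.5))                            # 128
```

Because 255 is odd, 128 is not exactly half of it. That is why the text says "about 0.5".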

Or here's another example:

In this image, the Tint, Contrast and Brightness fields do not actually define the color. They are derived values, calculated automatically from the current channel values rather than stored in them, so don't be scared by all those numbers.

So an image pixel has three color channels (three values), each with an intensity range from 0 to 255. By adjusting the channel intensities, you can get all kinds of colors.

From here on we will use Adobe Photoshop, because it has a great way of visualizing how color is composed from the 3 channels (and more, but more on that later).

By convention, the intensity range is visualized as shades of gray, from black to white.

The blackest color = 0.

The whitest color = 1 (or 255, if we use the 0 to 255 scale).

And it looks like this:

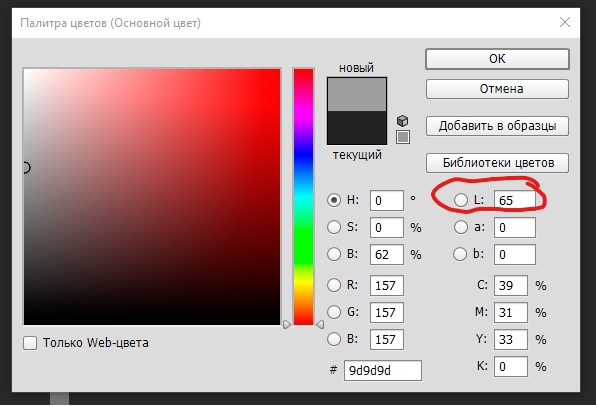

By the way, in Photoshop the intensity can also be set with the Level parameter, which uses a 0-100 range. So 65 can be read as 65% intensity, or 0.65:

Remember how, in the screenshot of the Paint color settings, I asked you not to pay attention to the other parameters? In Photoshop you can likewise ignore the other options. Everything is controlled by the 3 RGB channels, and the remaining values are calculated from them. You can still use those values, though. For example, if you set the intensity with the Level parameter (0 to 100), Photoshop will calculate the corresponding RGB values for you.
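Assume that the 0-100 Level maps linearly onto the 0-255 channel range. This is an assumption; Photoshop's exact rounding may differ. Under that assumption, a gray at level 65 works out as follows. The function name is hypothetical.

```python
def level_to_gray_rgb(level: float):
    """Map a 0-100 level to an RGB gray, assuming a linear mapping (rounded half up)."""
    if not 0 <= level <= 100:
        raise ValueError("level must be between 0 and 100")
    v = int(level / 100 * 255 + 0.5)  # 65 -> 0.65 -> 165.75 -> 166
    return (v, v, v)                  # gray: all three channels equal

print(level_to_gray_rgb(65))  # (166, 166, 166)
```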

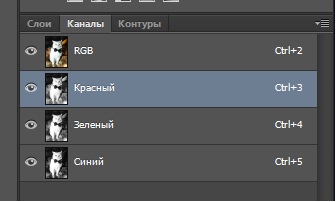

In Photoshop you can switch to each channel separately and see its intensity displayed as shades of gray, from black (0) to white (1):

Red channel:

Green channel:

Blue channel:

And once again, here is how it all looks together:

Now we understand how a pixel's color is built from the sum of its channels and how each channel's intensity is visualized. This means we can tell what color an object has by looking at each channel separately. For example, the orange cover at the top right is bright white in the red channel (intensity 0.8 and higher). In the green channel it is mid-gray (about 0.5), and in the blue channel it is almost black (intensity about 0). Together, these give an orange color.
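The idea of reading each channel as its own grayscale picture can be sketched on a tiny hypothetical 2x2 image. Each pixel is an (R, G, B) triple in 0-255, and each channel is printed as 0 to 1 intensities. The top-left pixel is an orange similar to the cover in the example.

```python
# A hypothetical 2x2 image; each pixel is (R, G, B) in 0-255.
image = [
    [(255, 140, 0), (255, 255, 255)],  # orange, white
    [(0, 0, 0), (30, 60, 200)],        # black, bluish
]

def channel(img, index):
    """Return one channel as a grid of 0-1 intensities (0 = black, 1 = white)."""
    return [[round(px[index] / 255, 2) for px in row] for row in img]

for name, idx in (("Red", 0), ("Green", 1), ("Blue", 2)):
    print(name, channel(image, idx))
```

The orange pixel shows up as 1.0 in the red channel, about 0.55 in the green channel and 0.0 in the blue channel. This is the same bright, mid-gray and black pattern described above.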

Summary

An image pixel forms its color in much the same way as a monitor pixel. In fact, when a picture is shown at 1:1 scale, each image pixel tells one monitor pixel how to glow. When you zoom in or out, however, the software displaying the image has to process it differently.

(This paragraph is my own view of how the process works.) As I understand it, when you zoom in on a picture, the software simply paints a block of monitor pixels (for example, 4 by 4) in the same color, as if it were a single pixel. This creates the impression that the picture is getting closer and becoming pixelated. When you zoom out, 2 or more image pixels start to fall on 1 real monitor pixel. The software then averages the colors of the image pixels competing for that monitor pixel. In both cases, the software applies its own image-processing (resampling) algorithms.
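The zoom behavior described above roughly corresponds to two simple resampling techniques. Nearest-neighbor upscaling repeats each pixel, and box-filter downscaling averages each block. This is a sketch on a grayscale grid of 0 to 1 values. Real editors typically offer other methods as well, such as bilinear or bicubic filtering.

```python
def upscale_nearest(img, factor):
    """Enlarge by repeating each pixel into a factor x factor block (nearest neighbor)."""
    return [[px for px in row for _ in range(factor)]
            for row in img for _ in range(factor)]

def downscale_box(img, factor):
    """Shrink by averaging each factor x factor block into one pixel (box filter).
    Leftover rows/columns that don't fill a whole block are dropped."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(0, h - h % factor, factor):
        row = []
        for x in range(0, w - w % factor, factor):
            block = [img[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

checker = [[0.0, 1.0],
           [1.0, 0.0]]
big = upscale_nearest(checker, 2)  # each pixel becomes a 2x2 block
for r in big:
    print(r)
print(downscale_box(big, 2))       # [[0.0, 1.0], [1.0, 0.0]]
print(downscale_box(checker, 2))   # [[0.5]]: 4 pixels "fight" over 1
```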

Additionally:

The above describes how an image is built without any compression. There are many image compression methods, in which values are truncated, averaged with their neighbors, and so on. That does not interest us right now. Compression methods are simply ways to reduce the memory an image takes up on a computer, and they build on the model of pixels described here.

Images and Masks

And now we are gradually getting closer to the most interesting part: masks.

The first mask, which we have already met but not yet named, is the transparency mask.

Recall that a pixel has 4 channels of 8 bits each. Of these, 3 channels form the color, and the fourth is responsible for transparency.

The transparency mask is the 4th channel of an image pixel. It indicates whether the pixel should be displayed fully, partially transparent or not at all.

This channel is also 8 bits and can take values from 0 to 255, where 0 is fully transparent and 255 is fully opaque.

If your image does not have a transparency channel, it is easy to add one by clicking the new channel button in the Channels panel:

An Alpha channel will appear immediately.

At this point all values in this channel are 0, so it looks completely black.

Next, I marked the zone of 100% visibility by drawing my cat's silhouette in the alpha channel:

Now, if you turn on the display of all 3 color channels plus the Alpha channel, you will see the following:

Photoshop marks completely transparent areas in red. This shows me what happens when a transparent image is exported: the pixels in the red zone will still be written. They will have values in all 4 channels, but because their 4th channel is 0, they will not be displayed. Even so, they still take up space in the image file.
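To see why invisible pixels still cost space, here is the uncompressed size of the cat picture as a raw 32-bit RGBA buffer. This assumes no compression. Formats like PNG compress the data, so real files are usually smaller.

```python
def raw_rgba_bytes(width: int, height: int) -> int:
    """Uncompressed size: every pixel stores 4 channels x 8 bits, even if alpha is 0."""
    return width * height * 4

# A fully transparent pixel still carries RGB values; only alpha says "don't show it".
hidden_pixel = (200, 120, 60, 0)

print(raw_rgba_bytes(178, 266))  # 189392 bytes
```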

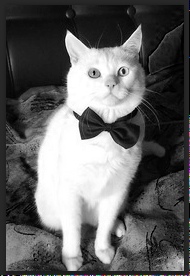

This is how the exported image looks as a PNG with transparency (it was actually a TIFF, but that makes no difference here):

Note that when I was drawing the transparency mask, I gave it smooth transitions. It was not a hard switch between 1 and 0: it was 1 in the center, with edges that fade softly from 1 to 0. This produced semi-transparent pixels, which is why the picture fades smoothly into transparency around the cat.

And so we have smoothly arrived at the next big topic, which I will try to cover soon: masks and the first texturing lesson.

Thank you for your attention. I look forward to your suggestions and corrections =)
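The smooth edges come from how a semi-transparent pixel is mixed with whatever lies behind it. A common formula for this is standard "over" compositing with straight (non-premultiplied) alpha: result = color × α + background × (1 − α), where α = alpha / 255. The sketch below assumes an opaque background, and the function name is illustrative.

```python
def over(fg, bg):
    """Blend an RGBA foreground pixel over an opaque RGB background (straight alpha)."""
    r, g, b, a = fg
    alpha = a / 255  # 0 = fully transparent, 1 = fully opaque
    return tuple(int(c * alpha + d * (1 - alpha) + 0.5)
                 for c, d in zip((r, g, b), bg))

white_bg = (255, 255, 255)
print(over((0, 0, 0, 255), white_bg))  # (0, 0, 0): opaque black stays black
print(over((0, 0, 0, 128), white_bg))  # (127, 127, 127): half-transparent gives gray
print(over((0, 0, 0, 0), white_bg))    # (255, 255, 255): invisible, background shows
```

The soft edge of a mask is simply a run of pixels whose alpha steps down from 255 to 0, so each one lets a little more of the background through.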