iOS Digest No. 8 (June 14 - June 27)

Image source: The Verge

Apple’s self-driving car, iPad support for a mouse, a camera on the Apple Watch, and more news in the new issue of the iOS digest. We'll also look at how SwiftUI fares in terms of performance and how to use the Combine framework if RxSwift chains no longer appeal to you.

Industry news

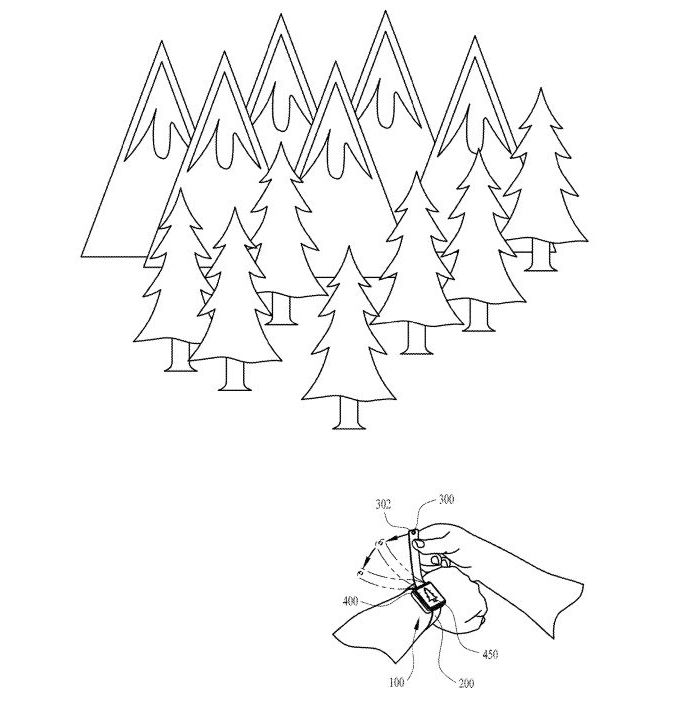

- The Verge reports that Apple is considering building a camera into its watch. We can only guess how serious these plans are, but just in case, the company has filed several patents for a camera integrated into the strap, one of which dates back to 2016. No camera has appeared in any Apple Watch model since then, but it may still arrive.

Reportedly, it will be possible to take pictures using voice control or by holding the watch. Judging by the images, to shoot from the desired angle you just turn the strap (which looks much more convenient than turning your whole hand, as you would have to if the camera were built into the case itself).

- The iPad keeps gaining compatible accessories: in addition to the keyboard and stylus, iPadOS now supports a computer mouse. There was no official announcement, and the feature is disabled by default, but it can be enabled in the Accessibility settings.

- Apple has acquired Drive.ai, a startup developing self-driving cars. It was already known that Apple is working on its own autonomous car, but last year there were reports that the project had been shut down. Apparently not: the work continues.

- Apple has hired one of ARM's top processor designers to work on its own MacBook processors, Bloomberg reports. Judging by the available information, the plan to replace Intel processors has existed for several years, and the goal is to switch to Apple's own ARM-based chips in 2020.

iOS Development News

- Developers keep experimenting with ARKit 3, and several interesting demos have appeared: for example, one that removes people from video in real time. In the future, this might become something like a “Block user” feature for smart glasses.

No practical application yet, but it is quite mesmerizing to watch a body dissolve into particles in real time.

Have you managed to build something similar with ARKit? Share your ideas in the comments.

- Swift keeps growing in complexity, with more functionality added in every release. Swift 5.1 introduces property wrappers, which can be applied to properties of classes and structures. They let you declaratively add functionality and behavior on top of a variable's declared type, making the code safer.

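For example, consider a wrapper that automatically trims spaces and line breaks from a string:

```swift
import Foundation

@propertyWrapper
struct Trimmed {
    private(set) var value: String = ""

    var wrappedValue: String {
        get { value }
        // Strip leading and trailing whitespace and newlines on every assignment
        set { value = newValue.trimmingCharacters(in: .whitespacesAndNewlines) }
    }

    // Released Swift uses init(wrappedValue:); early Swift 5.1 betas spelled it init(initialValue:)
    init(wrappedValue: String) {
        self.wrappedValue = wrappedValue
    }
}
```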

A declaration and its usage might look like this:

```swift
struct Post {
    @Trimmed var title: String
    @Trimmed var body: String
}

// `var`, not `let`: the title is reassigned below
var quine = Post(title: "  Swift Property Wrappers  ", body: "...")
quine.title // "Swift Property Wrappers" (no leading or trailing spaces!)

quine.title = "      @propertyWrapper     "
quine.title // "@propertyWrapper" (still no leading or trailing spaces!)
```

For more details, see the Swift Property Wrappers article.
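As another illustration of the same mechanism, here is a hypothetical `Clamped` wrapper (not taken from the article) that keeps a value inside a range. It shows that a wrapper can take extra arguments in its attribute: `@Clamped(0...100)` passes the range to the second parameter of `init(wrappedValue:_:)`, while the property's initial value becomes `wrappedValue`. The names `Clamped` and `Volume` are illustrative assumptions.

```swift
// Hypothetical wrapper: keeps any Comparable value within a closed range.
@propertyWrapper
struct Clamped<Value: Comparable> {
    private var value: Value
    let range: ClosedRange<Value>

    // `wrappedValue` receives the property's initial value; `range` comes from the attribute.
    init(wrappedValue: Value, _ range: ClosedRange<Value>) {
        self.range = range
        self.value = min(max(wrappedValue, range.lowerBound), range.upperBound)
    }

    var wrappedValue: Value {
        get { value }
        // Every assignment is clamped, so the invariant cannot be bypassed.
        set { value = min(max(newValue, range.lowerBound), range.upperBound) }
    }
}

struct Volume {
    @Clamped(0...100) var level: Int = 50
}

var volume = Volume()
volume.level = 150
print(volume.level) // 100
volume.level = -5
print(volume.level) // 0
```

The range check lives in one place instead of being repeated in every setter, which is the kind of declarative safety described above.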

Meanwhile, GitHub already hosts a collection of wrappers that might be useful.

- There are two articles on using the Combine framework: Getting started with the Combine framework in Swift and Combine framework in action. Pick one or read both!
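For a first taste before reading those articles, here is a minimal sketch of a Combine pipeline, the kind of chain one would otherwise build with RxSwift operators. It assumes iOS 13 or macOS 10.15 (where Combine is available); the subject, the operators chosen, and the values are illustrative and not taken from either article.

```swift
import Combine

// A subject we push values into manually; it never fails (Failure == Never).
let numbers = PassthroughSubject<Int, Never>()
var received: [Int] = []

// Keep the AnyCancellable alive: deallocating it cancels the subscription.
let subscription = numbers
    .filter { $0 % 2 == 0 }   // keep even numbers only
    .map { $0 * 10 }          // transform each remaining value
    .sink { received.append($0) }

for n in 1...4 {
    numbers.send(n)
}
numbers.send(completion: .finished)

print(received) // [20, 40]
```

A `PassthroughSubject` delivers values synchronously to its subscribers, so `received` is already filled when `print` runs.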

- It turns out that the new Xcode 11 previews can be used without SwiftUI, but you have to raise the minimum supported iOS version, which feels a bit hacky and may not be an option for an existing large project. The procedure is described here.

- If, like me, you are interested in client data synchronization, be sure to read the article Apple's New CloudKit-Based Core Data Sync. In it, the developer of the Ensembles sync library reflects on Apple's new CloudKit-based incarnation of Core Data synchronization.

For those interested: in May at Mobius, I gave a talk on the problems of data synchronization on mobile clients during collaborative editing. In addition, in July you can talk in person with one of the leading voices on data synchronization, Martin Kleppmann, at the Hydra 2019 conference, held on July 11-12, 2019 in St. Petersburg. In the meantime, you can read a great interview with him.

- According to a post on Twitter, the animation performance of a SwiftUI interface is no worse than that of primitives drawn with CALayer and Core Graphics.

In that test, SwiftUI could handle 5-10 times more primitives before the frame rate dropped below 60 FPS.
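For scale, staying at 60 FPS means each frame, including every primitive drawn in it, must be rendered within roughly 16.7 ms; the frame rate drops as soon as that budget is exceeded:

$$
t_{\text{frame}} = \frac{1\ \text{s}}{60} = \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms}
$$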