Why is the Internet still online?

The Internet seems to be a strong, independent and indestructible structure. In theory, the network is robust enough to survive a nuclear explosion. In reality, one small router can bring the Internet down. That is because the Internet is a heap of contradictions, vulnerabilities, errors and cat videos. Its foundation, BGP, has a bunch of problems, and it is amazing that it is still breathing. On top of the Internet's own flaws, everyone else keeps breaking it: big Internet providers, corporations, states and DDoS attacks. What can be done about it, and how do you live with it?

Alexey Uchakin (Night_Snake), leader of the network engineering team at IQ Option, knows the answer. His main job is keeping the platform accessible to users. In this transcript of Alexey's talk at Saint HighLoad++ 2019, we'll talk about BGP, DDoS attacks, the Internet kill switch, provider errors, decentralization, and cases when one small router put the Internet to sleep. At the end come a couple of tips on how to survive all this.

The day the Internet broke

I will mention only a few incidents in which Internet connectivity broke. That is enough to get the full picture.

The AS7007 incident. The Internet first broke in April 1997. There was a software bug in one router in autonomous system 7007. At some point the router announced its internal routing table to its neighbors and sent half the network into a black hole.

Pakistan vs. YouTube. In 2008, the brave guys from Pakistan decided to block YouTube. They did it so well that half the world was left without cat videos.

Rostelecom captures VISA, MasterCard and Symantec prefixes. In 2017, Rostelecom mistakenly began announcing prefixes belonging to VISA, MasterCard and Symantec. As a result, financial traffic flowed through channels that the provider controls. The leak did not last long, but it was unpleasant for the financial companies.

Google vs. Japan. In August 2017, Google began announcing the prefixes of the large Japanese providers NTT and KDDI to some of its uplinks. The traffic went to Google as transit, most likely by mistake. Since Google is not a provider and does not carry transit traffic, a significant part of Japan was left without the Internet.

DV LINK captures the prefixes of Google, Apple, Facebook and Microsoft. Also in 2017, the Russian provider DV LINK for some reason began announcing the networks of Google, Apple, Facebook, Microsoft and some other major players.

eNet from the USA grabs the AWS Route53 and MyEtherwallet prefixes. In 2018, an Ohio provider, or one of its customers, announced the networks of Amazon Route53 and the MyEtherwallet crypto wallet. The attack succeeded: despite the self-signed certificate, about which users were warned when they opened the MyEtherwallet site, many wallets were hijacked and some of the cryptocurrency was stolen.

There were more than 14,000 such incidents in 2017 alone! The network is still decentralized, so not everything breaks, and not for everyone. But incidents happen by the thousands, and they are all tied to BGP, the protocol the Internet runs on.

BGP and its problems

BGP, the Border Gateway Protocol, was first described in 1989 by two engineers from IBM and Cisco Systems on three “napkins” - A4 sheets. These “napkins” are still kept at the Cisco Systems headquarters in San Francisco as a relic of the networking world.

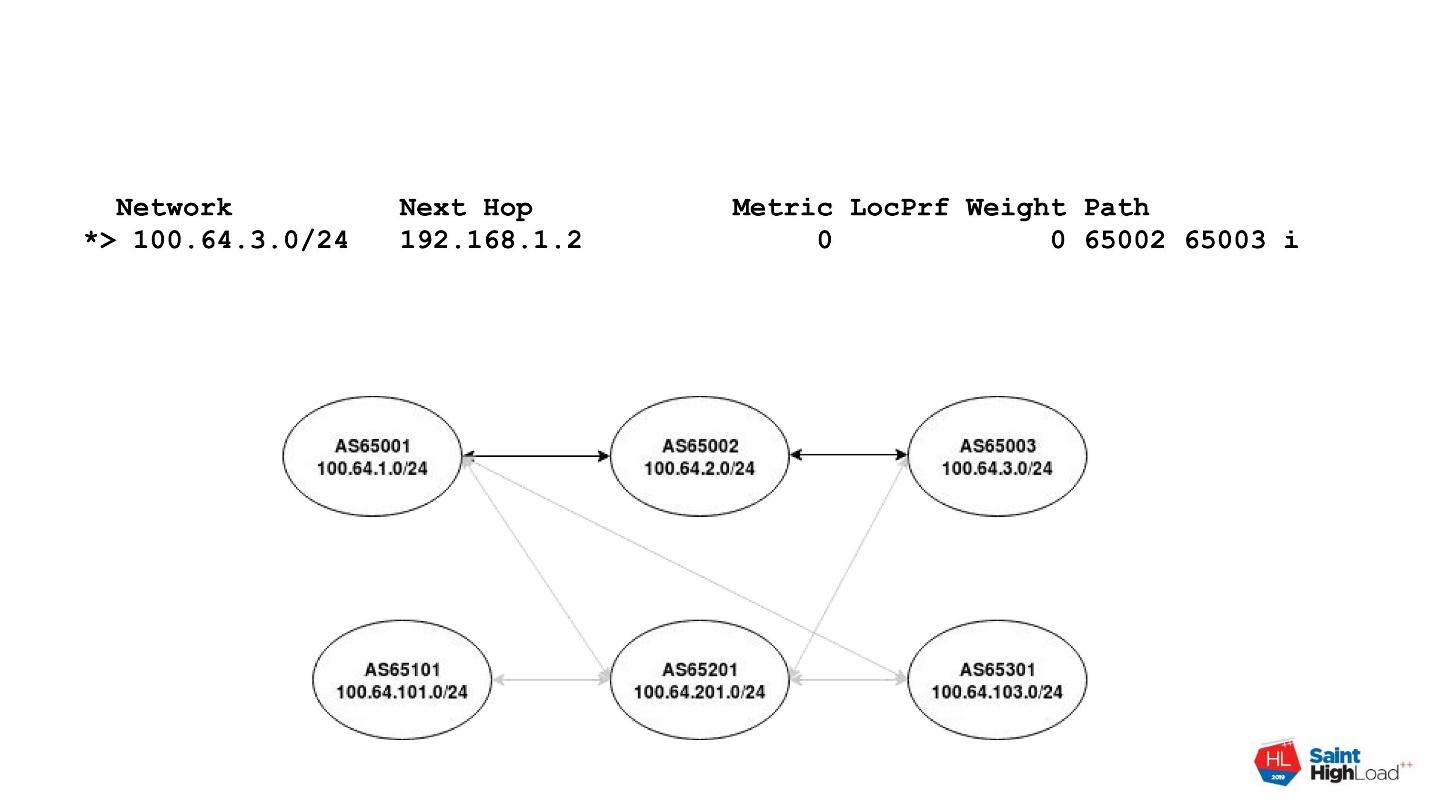

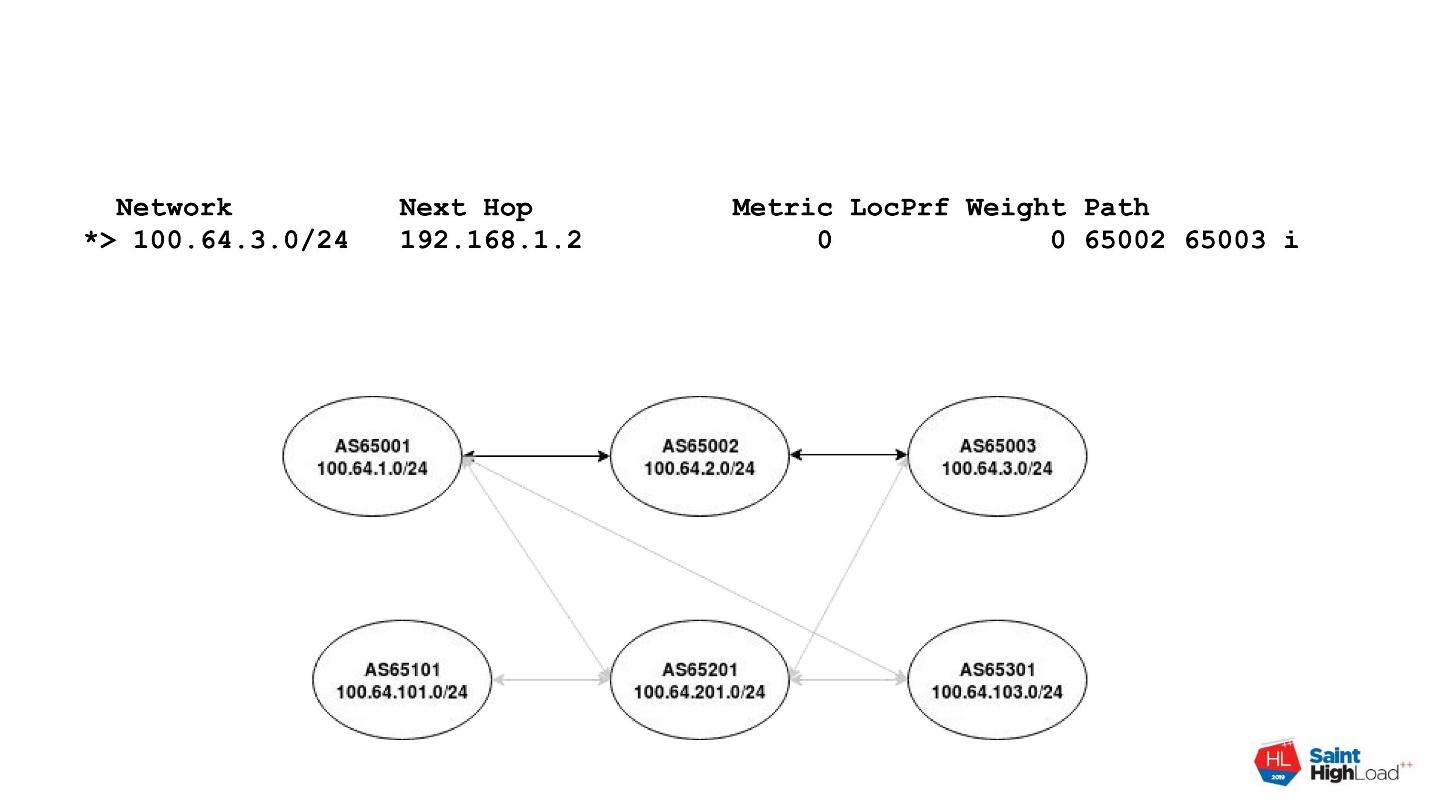

The protocol is built on the interaction of autonomous systems, AS for short. An autonomous system is just an ID to which IP networks are assigned in a public registry. A router with this ID can announce those networks to the world. Accordingly, any route on the Internet can be represented as a vector called the AS Path. The vector consists of the numbers of the autonomous systems that must be traversed to reach the destination network.

For example, take a network of several autonomous systems. You need to get from AS65001 to AS65003. The path is represented by the AS Path in the diagram. It consists of two autonomous systems: 65002 and 65003. For every destination address there is an AS Path vector made up of the numbers of the autonomous systems we have to pass through.
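The example above can be sketched in a few lines of Python. This is only an illustrative model, not a BGP implementation: the function name `propagate` is hypothetical and the AS numbers are the ones from the example. The one real mechanism it shows is that each autonomous system prepends its own number when it passes an announcement on.

```python
def propagate(as_path, via_asn):
    """Return the AS Path a neighbor sees after `via_asn` passes the route on.

    Each AS prepends its own number, so the path reads from the nearest AS
    to the origin AS (the last element).
    """
    return [via_asn] + as_path


# AS65003 originates the route and announces it to AS65002.
seen_by_65002 = propagate([], 65003)
# AS65002 passes the route on to AS65001.
seen_by_65001 = propagate(seen_by_65002, 65002)

print(seen_by_65001)  # [65002, 65003]
```

From the point of view of AS65001, the path to the destination therefore consists of 65002 and 65003, as in the example.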

So what are the problems with BGP?

BGP is a trust protocol

BGP is a trust-based protocol: by default, we trust our neighbor. This is a feature of many protocols designed at the dawn of the Internet. Let’s figure out what “trust” means here.

No neighbor authentication. Formally there is MD5, but MD5 in 2019 is, well...

No filtering. BGP has filters and they are documented, but they are either not used or used incorrectly. I will explain why later.

Setting up a neighbor relationship is very easy. On almost any router, configuring a BGP neighbor takes a couple of lines of config.

No license is required to run BGP. You don't have to pass exams to prove your qualifications. Nobody will take away your license for configuring BGP while drunk.

Two main problems

Prefix hijacks. Prefix hijacking is announcing a network that does not belong to you, as in the MyEtherwallet case. Someone takes some prefixes, makes a deal with a provider or hacks it, and announces those networks through it.

Route leaks. Leaks are a bit trickier. A leak is a change in the AS Path. In the best case, the change leads to higher latency, because traffic has to take a longer route or a lower-capacity link. The worst case is what happened with Google and Japan.

Google itself is neither an operator nor a transit autonomous system. But when it announced the networks of Japanese operators to its provider, the route through Google looked preferable by AS Path. Traffic went there and was dropped, simply because the routing setup inside Google is more complicated than just filters at the border.
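Why a leaked route attracts traffic can be shown with a deliberately simplified sketch. It assumes that the only selection criterion is AS Path length; real BGP best-path selection compares several attributes before that one. The function `best_route`, the route names and the AS numbers are all made up for illustration.

```python
def best_route(candidates):
    """Pick the candidate with the shortest AS Path (first one wins a tie)."""
    return min(candidates, key=lambda route: len(route["as_path"]))


routes = [
    # The normal route to AS65030 through three transit networks.
    {"name": "normal", "as_path": [65010, 65011, 65012, 65030]},
    # A leaked route: AS65020 should not carry transit, but announces the path anyway.
    {"name": "leaked", "as_path": [65020, 65030]},
]

print(best_route(routes)["name"])  # leaked
```

The shorter path wins even though the network announcing it never intended to carry that traffic.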

Why don't filters work?

Nobody cares. This is the main reason: nobody cares. The administrator of a small provider, or of a company that connects to a provider via BGP, takes a MikroTik, configures BGP on it and does not even know that filters can be configured there.

Configuration errors. Someone was debugging something, got the mask wrong or entered the wrong network, and there you go, another incident.

No technical capability. For example, telecom providers have many customers. Done properly, the filters for each customer should be updated automatically, tracking whether the customer has got a new network or has leased one of its networks to someone else. Keeping track of this is hard, and doing it by hand is harder still. So providers just set relaxed filters or no filters at all.

Exceptions. Exceptions are made for favorite and large customers, especially at interconnects between operators. For example, TransTeleCom and Rostelecom each have a bunch of networks and an interconnect between them. If the interconnect goes down, nobody will be happy, so the filters are relaxed or removed entirely.

Outdated or irrelevant information in the IRR. Filters are built from the information recorded in the IRR, the Internet Routing Registry. These are the registries of the regional Internet registrars. The information in them is often outdated, irrelevant, or both.
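As a rough illustration of what such a filter does, here is a minimal Python sketch. It assumes IPv4 only, a hypothetical list of prefixes a customer has registered (for example in an IRR), and a maximum accepted prefix length of /24, which is a common convention but still an assumption here. The helper names `build_filter` and `is_allowed` are invented for this example, and the addresses come from the documentation ranges.

```python
import ipaddress


def build_filter(registered):
    """Parse the prefixes a customer has registered into network objects."""
    return [ipaddress.ip_network(p) for p in registered]


def is_allowed(announced, allowed, max_len=24):
    """Accept a prefix only if it lies inside a registered block
    and is not more specific than max_len."""
    net = ipaddress.ip_network(announced)
    if net.prefixlen > max_len:
        return False
    return any(net.version == a.version and net.subnet_of(a) for a in allowed)


customer_filter = build_filter(["198.51.100.0/24"])

print(is_allowed("198.51.100.0/24", customer_filter))    # True: registered
print(is_allowed("203.0.113.0/24", customer_filter))     # False: someone else's network
print(is_allowed("198.51.100.128/25", customer_filter))  # False: too specific
```

The filter is only as good as the registered list behind it, which is exactly where outdated registry data hurts.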

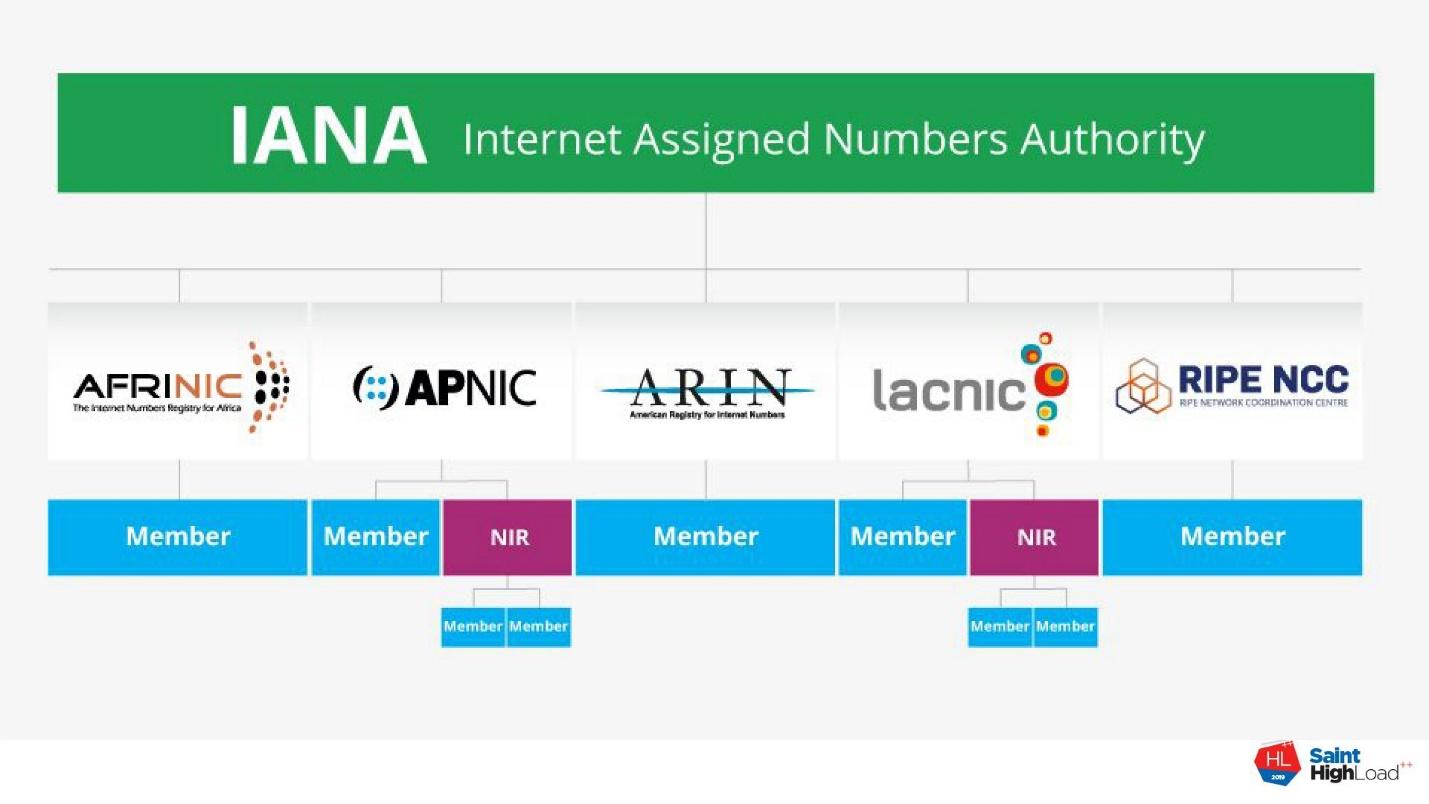

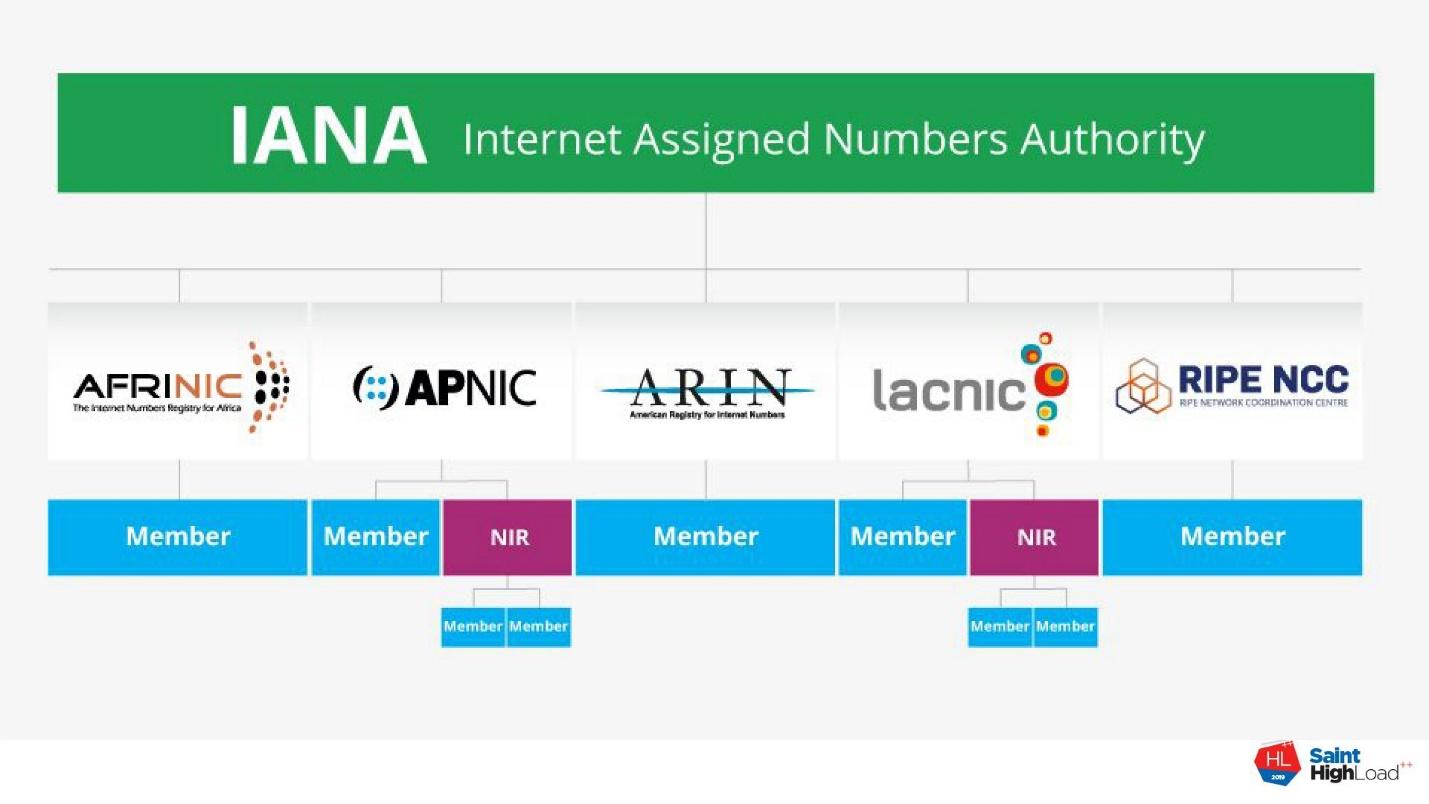

Who are these registrars?

All Internet addresses belong to IANA, the Internet Assigned Numbers Authority. When you buy an IP network from someone, you are not buying addresses but the right to use them. Addresses are an intangible resource, and by common agreement they all belong to IANA.

The system works like this. IANA delegates the management of IP addresses and autonomous system numbers to five regional registrars (RIRs). They issue autonomous system numbers to LIRs, local Internet registrars. LIRs then allocate IP addresses to end users.

The drawback of the system is that each regional registrar maintains its registries in its own way. Each has its own view on what information the registries should contain and who should or should not verify it. The result is the mess we have now.

How else can you deal with these problems?

IRR is of mediocre quality. With IRR everything is clear: things are bad there.

BGP communities. A community is an attribute described in the protocol. For example, we can attach a special community to our announcement so that the neighbor does not pass our networks on to its own neighbors. On a P2P (peering) link we exchange only our own networks. To keep a route from accidentally reaching other networks, we attach a community.

The meaning of a community is not transitive. A community is always a contract between two parties, and that is its drawback. We cannot rely on any community except the one that everybody accepts by default. We cannot be sure that everyone will accept a given community and interpret it correctly. So in the best case, if you have an agreement with your uplink, it will understand what you want from it via the community. But its neighbor may not understand, or an operator will simply strip your tag, and you will not get what you wanted.
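A small sketch of this behavior, under stated assumptions: `NO_EXPORT` is the real well-known community from RFC 1997 (65535:65281), while the route structure and the functions `should_export` and `strip_communities` are hypothetical helpers written for this illustration.

```python
NO_EXPORT = (65535, 65281)  # well-known community defined in RFC 1997


def should_export(route, neighbor_is_external):
    """Honor NO_EXPORT: do not pass the route on to external neighbors."""
    if neighbor_is_external and NO_EXPORT in route["communities"]:
        return False
    return True


def strip_communities(route):
    """Model an operator that resets every community on the routes it receives."""
    return {**route, "communities": set()}


route = {"prefix": "192.0.2.0/24", "communities": {NO_EXPORT}}

print(should_export(route, neighbor_is_external=True))                     # False
print(should_export(strip_communities(route), neighbor_is_external=True))  # True
```

Once the tag is stripped, the next router has no way of knowing what the originator asked for, so the route is exported further.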

RPKI + ROA solves only a small part of the problems. RPKI stands for Resource Public Key Infrastructure, a dedicated framework for signing routing information. Getting LIRs and their customers to maintain an up-to-date address space database is a good idea. But there is a problem with it.

RPKI is also a hierarchical public key system. IANA has a key from which the RIR keys are generated, and from those the LIR keys, with which LIRs sign their address space using ROAs, Route Origin Authorizations:

- I certify that this prefix will be announced on behalf of this autonomous system.

Besides ROAs there are other objects, but more on them some other time. It looks like a good and useful thing. But it does not protect us from leaks at all, and it does not solve all the problems with prefix hijacking. That is why players are in no hurry to deploy it. There are, however, already assurances from large players such as AT&T and large IXs that prefixes with an invalid ROA record will be dropped.

Perhaps they will do so, but for now a huge number of prefixes are not signed at all. On the one hand, it is unclear whether they are announced legitimately. On the other hand, we cannot drop them by default, because we cannot tell whether an unsigned announcement is correct or not.
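The three outcomes discussed above (signed and correct, signed and wrong, not signed at all) correspond to the validation states of route origin validation as described in RFC 6811. Below is a minimal sketch of that check. It works on plain tuples instead of real signed ROA objects, and the function name `validate`, the sample ROA and the AS numbers are assumptions made for the example.

```python
import ipaddress


def validate(prefix, origin_asn, roas):
    """Return 'valid', 'invalid' or 'not-found' for an announcement.

    roas is a list of (prefix, max_length, asn) tuples.
    """
    net = ipaddress.ip_network(prefix)
    covered = False
    for roa_prefix, max_length, asn in roas:
        roa_net = ipaddress.ip_network(roa_prefix)
        if net.version != roa_net.version or not net.subnet_of(roa_net):
            continue  # this ROA says nothing about the announced prefix
        covered = True
        if asn == origin_asn and net.prefixlen <= max_length:
            return "valid"
    return "invalid" if covered else "not-found"


roas = [("192.0.2.0/24", 24, 65001)]

print(validate("192.0.2.0/24", 65001, roas))     # valid
print(validate("192.0.2.0/24", 65002, roas))     # invalid: wrong origin AS
print(validate("198.51.100.0/24", 65002, roas))  # not-found: no ROA covers it
```

Dropping `invalid` announcements is the easy part. The `not-found` case is the one that cannot be dropped by default, because it covers every prefix that simply has not been signed yet.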

What else is there?

BGPSec. This is a cool thing that academics came up with for a network of pink ponies. They said:

- We have RPKI + ROA, a mechanism for certifying address space with signatures. Let's add a separate BGP attribute and call it BGPSec Path. Each router will sign the announcements it sends to its neighbors with its own signature. That way we get a trusted path out of the chain of signed announcements and can verify it.

Good in theory, but in practice there are many problems. BGPSec breaks many existing BGP mechanisms for choosing the next hop and for managing inbound and outbound traffic directly on the router. BGPSec does not work until 95% of all market participants have deployed it, which is a utopia in itself.

BGPSec has huge performance problems. On current hardware, announcements are verified at about 50 prefixes per second. For comparison: the current Internet table of 700,000 prefixes would take about 5 hours to load, during which it would change another 10 times.
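As a back-of-the-envelope check of the figures quoted above (both are approximations from the talk, and the variable names are just for this example):

```python
prefixes = 700_000  # approximate size of the full Internet table
rate = 50           # approximate verification speed, prefixes per second

seconds = prefixes / rate
hours = seconds / 3600

print(f"{seconds:.0f} s = {hours:.1f} h")  # 14000 s = 3.9 h
```

Plain division gives a little under four hours of pure verification time, the same order of magnitude as the roughly five hours cited. Either way, the table would be out of date long before the first pass finished.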

BGP Open Policy (role-based BGP). A fresh proposal based on the Gao-Rexford model, named after two scientists who do research on BGP.

The Gao-Rexford model is as follows. To simplify, in BGP there is a small number of interaction types:

- provider - customer;

- P2P;

- internal interaction, for example iBGP.

Based on the role of the router, certain import/export policies can be assigned by default. The administrator does not need to configure prefix lists. From the role that the routers agree on, and that can be configured, we already get some default filters. For now this is a draft under discussion at the IETF. I hope we will soon see it as an RFC and implemented in hardware.
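The kind of default export policy that follows from roles can be sketched as below. This is the usual "valley-free" reading of the Gao-Rexford model, not the text of the IETF draft: the role names and the function `may_export` are assumptions, and internal (iBGP) sessions are left out for brevity.

```python
CUSTOMER, PEER, PROVIDER = "customer", "peer", "provider"


def may_export(learned_from, send_to):
    """Default export rule derived from the neighbors' roles.

    Routes learned from a customer may go to everyone; routes learned
    from a peer or a provider may go only to customers.
    """
    if learned_from == CUSTOMER:
        return True
    return send_to == CUSTOMER


print(may_export(CUSTOMER, PROVIDER))  # True: normal upstream announcement
print(may_export(PEER, CUSTOMER))      # True: customers get everything
print(may_export(PROVIDER, PROVIDER))  # False: this would be a route leak
print(may_export(PEER, PEER))          # False: no transit between peers
```

With a rule like this applied by default, a leak of the Google and Japan kind would be rejected without anyone maintaining prefix lists by hand.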

Large Internet Service Providers

Consider the example of the provider CenturyLink. It is the third largest US provider, serving 37 states and operating 15 data centers.

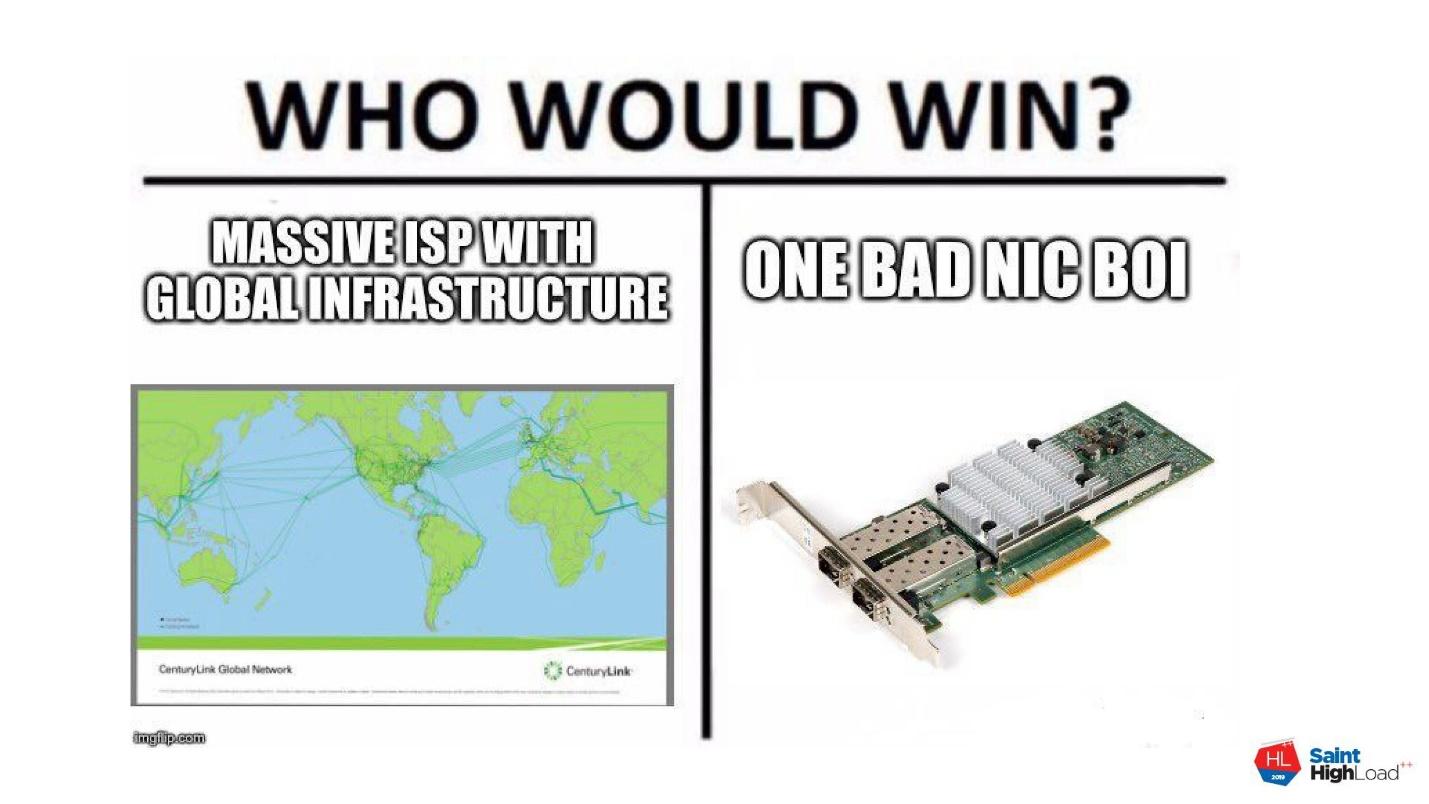

In December 2018, CenturyLink was down across the US for 50 hours. During the incident, ATMs had problems in two states, and 911 did not work for several hours in five states. The Idaho lottery was torn to shreds. The US Federal Communications Commission is currently investigating the incident.

The cause of the tragedy was a single network card in a single data center. The card failed and started sending malformed packets, and all 15 of the provider's data centers went down.

For this provider, the idea of “too big to fail” did not work. The idea does not work at all. You can take any major player and break it with some trifle. In the US, connectivity is still good. CenturyLink customers who had a backup switched to it en masse. Then the alternative operators complained that their links were overloaded.

Maybe the Internet rests on Google, Amazon, Facebook and other corporations? No, they break it too.

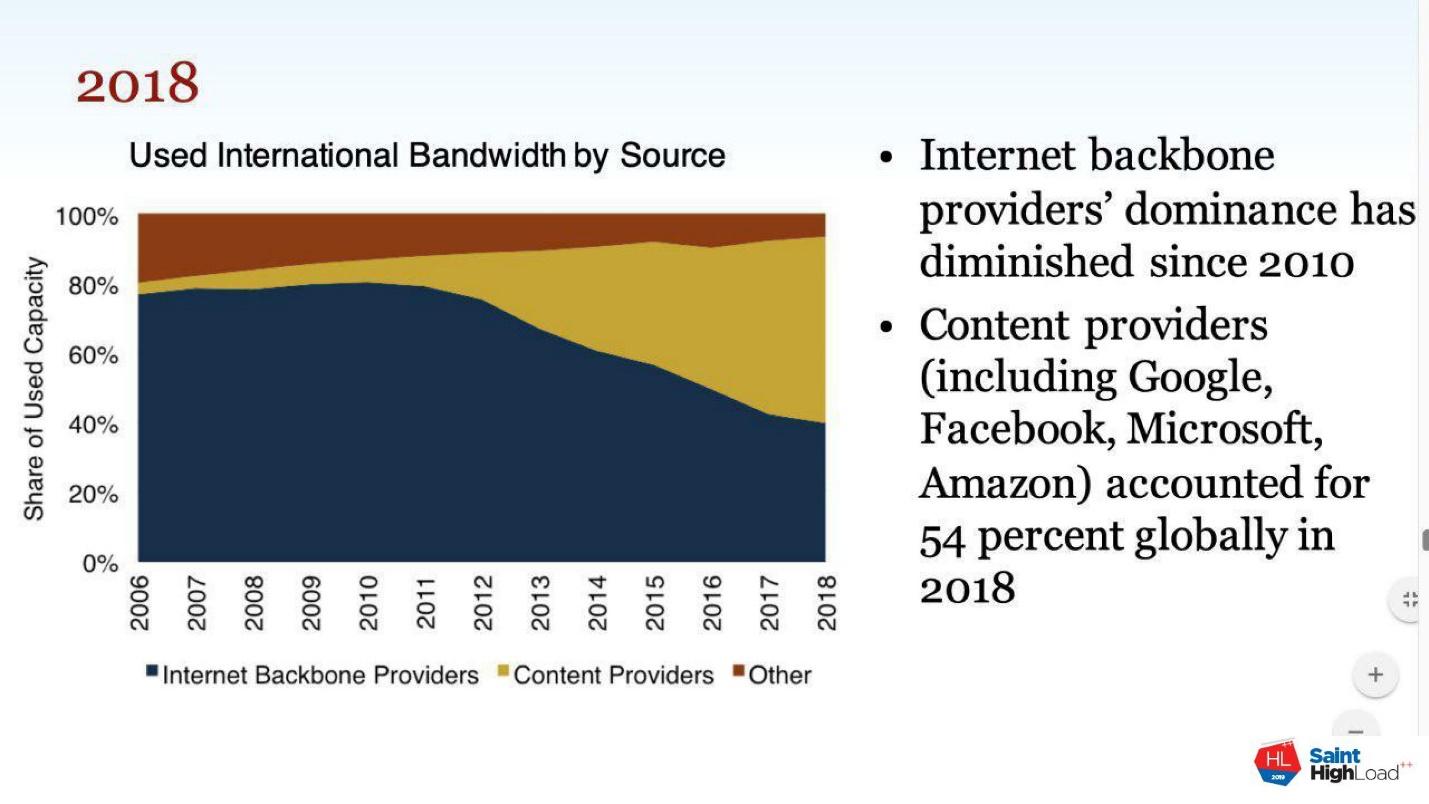

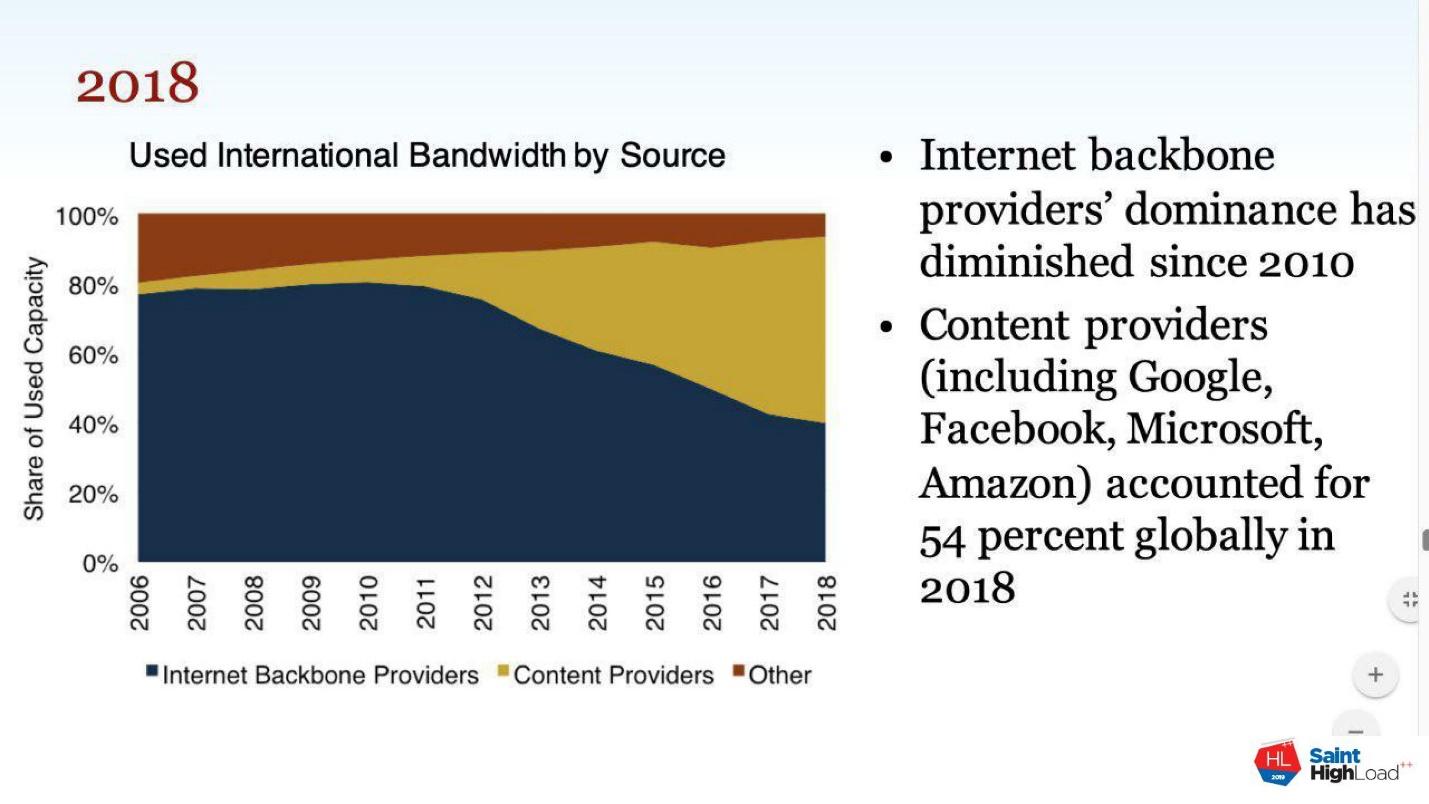

In 2017, at the ENOG13 conference in St. Petersburg, Geoff Huston of APNIC gave a talk called “Death of Transit”. It says that we are used to interaction, money flows and Internet traffic being vertical. Small providers pay for connectivity to larger ones, and those in turn pay for connectivity to global transit.

That is the vertically oriented structure we have now. All would be fine, but the world is changing: large players are laying their own transoceanic cables to build their own backbones.

News about the CDN cable.

In 2018, TeleGeography published a study showing that more than half of the traffic on the Internet is no longer the Internet, but the backbones of major players. This traffic is related to the Internet, but it is no longer the network we were talking about.

Microsoft has its own network, Google has its own, and they barely overlap. Traffic that originates somewhere in the USA travels over Microsoft's channels across the ocean to a CDN somewhere in Europe, then reaches your provider via the CDN or an IX and gets to your router.

The strength of the Internet that was supposed to help it survive a nuclear explosion is being lost. Points of concentration of users and traffic appear. If, say, Google Cloud goes down, there will be many victims at once. We partly felt this when Roskomnadzor blocked AWS. And the CenturyLink example shows that one small part is enough for this.

Previously, not everything broke, and not for everyone. In the future we may get to the point where hitting one major player breaks a lot of things, in many places and for many people.

States are next in line, and this is how it usually goes with them.

Our Roskomnadzor is not even a pioneer here. Internet shutdowns are also practiced in Iran, India and Pakistan. In England there is a bill on the possibility of shutting down the Internet.

Any large state wants a switch to turn off the Internet, either entirely or in parts: Twitter, Telegram, Facebook. They don’t understand that they will never succeed, but they really want to. The switch is used, as a rule, for political purposes: to eliminate political competitors, or because elections are around the corner, or because Russian hackers have broken something again.

I won't take the bread out of the mouths of the folks at Qrator Labs; they cover DDoS much better than I do. They publish an annual report on Internet stability, and here is what they wrote in the report for 2018.

The average duration of DDoS attacks is falling to 2.5 hours. Attackers are also starting to count their money, and if a resource does not go down right away, it is quickly left alone.

The intensity of attacks is growing. In 2018 we saw 1.7 Tb/s on the Akamai network, and this is not the limit.

New attack vectors appear and old ones get stronger. New protocols susceptible to amplification emerge, and new attacks appear on existing protocols, especially TLS and the like.

Most traffic comes from mobile devices. Internet traffic is shifting to mobile clients. Both those who attack and those who defend need to be able to work with this.

Nobody is invulnerable. This is the main point: there is no universal protection that is guaranteed to stop any DDoS.

I hope I have scared you enough. Now let’s think about what to do about it.

If you have free time, the desire and a command of English, take part in working groups: IETF, RIPE WG. These are open mailing lists: subscribe to them, join the discussions, come to the conferences. If you have LIR status, you can vote, for example, on various initiatives in RIPE.

For mere mortals, there is monitoring, so that you know what is broken.

Ordinary ping, and not just a binary works/doesn't-work check. Record the RTT history so you can look at anomalies later.
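What "looking at anomalies later" might mean can be sketched as follows. The approach (flag samples far above the median) and the factor of 3 are assumptions for illustration, not a recommendation from the talk. The function `rtt_anomalies` is hypothetical, and how the RTT samples are collected is left out.

```python
from statistics import median


def rtt_anomalies(history_ms, factor=3.0):
    """Return (index, rtt) pairs that look anomalous.

    A sample is anomalous if the probe was lost (None) or if its RTT
    exceeds `factor` times the median of the answered probes.
    """
    answered = [r for r in history_ms if r is not None]
    if not answered:
        return [(i, None) for i in range(len(history_ms))]
    baseline = median(answered)
    return [(i, r) for i, r in enumerate(history_ms)
            if r is None or r > factor * baseline]


history = [20.1, 19.8, 21.0, 20.5, 95.3, None, 20.2]
print(rtt_anomalies(history))  # [(4, 95.3), (5, None)]
```

A binary up/down check would have reported only the lost probe. The stored history also reveals the latency spike before it.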

Traceroute. A utility for determining the paths data takes in TCP/IP networks. It helps detect anomalies and blocking.

HTTP checks of custom URLs and TLS certificates help detect blocking or DNS spoofing used in an attack, which is almost the same thing. Blocking is often done by spoofing DNS and redirecting traffic to a stub page.

If you have a client application, have your clients check, where possible, how your origin resolves from different places. That way you will spot DNS interception anomalies, a sin that providers sometimes commit.
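Comparing resolution results from different places can be as simple as the sketch below. It assumes that the answers have already been collected somehow (for example, reported by your client application). The function name `find_dns_anomalies`, the location labels and the addresses are invented for the example.

```python
def find_dns_anomalies(expected_ips, answers_by_location):
    """Return {location: unexpected_ips} for every vantage point whose
    DNS answer contains addresses outside the expected set."""
    expected = set(expected_ips)
    anomalies = {}
    for location, ips in answers_by_location.items():
        unexpected = set(ips) - expected
        if unexpected:
            anomalies[location] = unexpected
    return anomalies


expected = ["192.0.2.10", "192.0.2.11"]
answers = {
    "client-moscow": ["192.0.2.10"],
    "client-berlin": ["192.0.2.11", "192.0.2.10"],
    "client-provider-x": ["203.0.113.99"],  # looks like a stub page address
}

print(find_dns_anomalies(expected, answers))  # {'client-provider-x': {'203.0.113.99'}}
```

An unexpected address from a single provider or region is a strong hint of DNS interception or blocking there.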

There is no universal answer to where to check from. Check from where your users come from. If your users are in Russia, check from Russia, but don't limit yourself to it. If your users live in different regions, check from those regions. Better still, check from all over the world.

I came up with three ways to do that. If you know more, write in the comments.

Let's go through each of them.

RIPE Atlas is a small box. For those who know the domestic “Inspector”, it is the same kind of box, just with a different sticker.

RIPE Atlas is a free program. You register, receive a probe by mail and plug it into your network. In return for others using your probe, you earn credits, which you can spend on measurements of your own. You can test in different ways: ping, traceroute, certificate checks. The coverage is quite large, with many nodes. But there are nuances.

The credit system does not let you build production solutions. There are not enough credits for continuous measurements or commercial monitoring. They are enough for a short study or a one-off check. The daily credit allowance from one probe is eaten up by 1-2 checks.

Coverage is uneven. Since the program is free in both directions, coverage is good in Europe, in the European part of Russia and in some other regions. But if you need Indonesia or New Zealand, things are much worse: you may not find even 50 probes per country.

You cannot check HTTP from a probe. This is due to technical nuances. They promise to fix it in a new version, but for now HTTP cannot be checked. Only a certificate can be verified. Some kind of HTTP check can only be run against a special RIPE Atlas device called an Anchor.

The second way is commercial monitoring. Everything is fine with it, you are paying money after all, right? You are promised several dozen or hundreds of monitoring points around the world and beautiful dashboards out of the box. But again, there are problems.

It costs money, in some cases a lot. Ping monitoring, checks from all over the world and lots of HTTP checks can cost several thousand dollars a year. If your finances allow it and you like this solution, go ahead.

Coverage may be insufficient in the region you care about. With the same ping, the most you can specify is an abstract part of the world: Asia, Europe, North America. Few monitoring systems can drill down to a specific country or region.

Weak support for custom tests. If you need something custom, and not just a curl against a URL, that is a problem too.

The third way is your own monitoring. It's a classic: “Let's write our own!”

Your own monitoring turns into developing a software product, and a distributed one at that. You look for an infrastructure provider, figure out how to deploy and monitor it - monitoring needs to be monitored too, right? And it needs support as well. Think ten times before taking this on. It may be easier to pay someone who will do it for you.

Monitoring BGP anomalies is simpler, because ready-made resources exist. BGP anomalies are detected with specialized services such as QRadar and BGPmon. They take a full view table from many operators. Based on what they see from different operators, they can detect anomalies, look for amplifiers, and more. Registration is usually free: you enter your AS number, subscribe to email notifications, and the service alerts you to your problems.

Monitoring DDoS attacks is also simple. As a rule, it is based on NetFlow and logs. There are specialized systems such as FastNetMon and modules for Splunk. As a last resort, there is your DDoS protection provider. You can also send NetFlow to it, and it will notify you of attacks in your direction based on that.
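The idea behind NetFlow-based detection can be illustrated with a toy threshold check. This is not how FastNetMon or any particular product works. It assumes flow records have already been decoded into hypothetical `(destination IP, bytes)` pairs for a fixed time window, and it ignores sampling, protocols and ports.

```python
from collections import defaultdict


def detect_targets(flows, window_s, threshold_bps):
    """Return {dst_ip: bits_per_second} for destinations above the threshold.

    flows is an iterable of (dst_ip, byte_count) pairs seen during
    a window of window_s seconds.
    """
    totals = defaultdict(int)
    for dst_ip, nbytes in flows:
        totals[dst_ip] += nbytes
    rates = {dst: total * 8 / window_s for dst, total in totals.items()}
    return {dst: bps for dst, bps in rates.items() if bps > threshold_bps}


flows = [
    ("203.0.113.10", 4_000_000_000),
    ("203.0.113.10", 3_500_000_000),
    ("203.0.113.20", 50_000_000),
]

# 60-second window, alert above 500 Mb/s towards a single address.
print(detect_targets(flows, window_s=60, threshold_bps=500_000_000))
# {'203.0.113.10': 1000000000.0}
```

A fixed threshold is the crudest possible rule. It is used here only to show where the signal comes from.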

Have no illusions: the Internet will definitely break. Not everything will break, and not for everyone, but 14 thousand incidents in 2017 hint that incidents will happen.

Your job is to notice problems as early as possible, or at least no later than your users do. And don't just notice: always keep a “Plan B” ready. The plan is a strategy for what you will do when everything breaks: backup operators, data centers, CDNs. The plan is a separate checklist against which you verify that everything works. The plan should work without involving network engineers, because there are usually few of them and they want to sleep.

That's all. I wish you high availability and green monitoring.

knows the answer . Its main task is the accessibility of the platform for users. In decoding Alexey's report at Saint HighLoad ++ 2019let's talk about BGP, DDOS attacks, Internet switch, provider errors, decentralization and cases when a small router sent the Internet to sleep. In the end - a couple of tips on how to survive all this.

The day the internet has broken

I will give only a few incidents when connectivity broke on the Internet. This will be enough for the full picture.

"Incident with AS7007 . " The first time the Internet broke down in April 1997. There was an error in the software of one router from the autonomous system 7007. At some point, the router announced to the neighbors its internal routing table and sent half the network to the black hole.

Pakistan vs. YouTube . In 2008, the brave guys from Pakistan decided to block YouTube. They did it so well that half the world was left without seals.

“Capture of VISA, MasterCard and Symantec Prefixes by Rostelecom”. In 2017, Rostelecom mistakenly announced the prefixes VISA, MasterCard, and Symantec. As a result, financial traffic went through channels that the provider controls. The leak did not last long, but financial companies were unpleasant.

Google v. Japan . In August 2017, Google began to announce the prefixes of large Japanese providers NTT and KDDI in part of their uplinks. Traffic went to Google as transit, most likely by mistake. Since Google is not a provider and does not allow transit traffic, a significant part of Japan is left without the Internet.

“DV LINK has captured the prefixes of Google, Apple, Facebook, Microsoft . ” In the same 2017, the Russian provider DV LINK for some reason began to announce the network of Google, Apple, Facebook, Microsoft and some other major players.

"US eNet has grabbed the AWS Route53 and MyEtherwallet prefixes . " In 2018, an Ohio provider or one of its customers announced the Amazon Route53 network and the MyEtherwallet crypto wallet. The attack was successful: even despite the self-signed certificate, a warning about which appeared to the user when they entered the MyEtherwallet website, many wallets hijacked and stole part of the cryptocurrency.

There were more than 14,000 such incidents in 2017 alone! The network is still decentralized, so not all and not all break down. But the incidents happen in the thousands, and they are all connected with the BGP protocol, on which the Internet works.

BGP and its problems

The BGP protocol - Border Gateway Protocol , was first described in 1989 by two engineers from IBM and Cisco Systems on three “napkins” - A4 sheets. These “napkins” still lie at the Cisco Systems headquarters in San Francisco as a relic of the networking world.

The protocol is based on the interaction of autonomous systems - Autonomous Systems or in abbreviated form - AS. An autonomous system is just some ID that IP networks are assigned to in the public registry. A router with this ID can announce these networks to the world. Accordingly, any route on the Internet can be represented as a vector called AS Path . A vector consists of autonomous system numbers that must be completed in order to reach a destination network.

For example, there is a network of a number of autonomous systems. You need to get from the AS65001 system to the AS65003 system. The path from one system is represented by AS Path in the diagram. It consists of two autonomies: 65002 and 65003. For each destination address there is an AS Path vector, which consists of the numbers of autonomous systems that we need to go through.

So what are the problems with BGP?

BGP is a trust protocol

BGP protocol - trust based. This means that by default we trust our neighbor. This is a feature of many protocols that were developed at the dawn of the Internet. Let’s figure out what “trust” means.

No neighbor authentication . Formally, there is MD5, but MD5 in 2019 - well, that’s ...

There is no filtering . BGP has filters and they are described, but they are not used, or are used incorrectly. I will explain why later.

It is very simple to establish a neighborhood . Neighborhood settings in the BGP protocol on almost any router - a couple of lines of config.

BGP management rights are not required . No need to take exams to confirm your qualifications. No one will take away the rights to configure BGP while drunk.

Two main problems

Prefix hijacking - prefix hijacks . Prefix hijacking is the announcement of a network that does not belong to you, as is the case with MyEtherwallet. We took some prefixes, agreed with the provider or hacked it, and through it we announce these networks.

Route leaks - route leaks . Leaks are a bit trickier. Leak is a change in AS Path . In the best case, the change will lead to a greater delay, because you need to go the route longer or less capacious link. In the worst case with Google and Japan.

Google itself is neither an operator nor a transit autonomous system. But when he announced to his provider a network of Japanese operators, then traffic through Google via AS Path was seen as more priority. Traffic went there and dropped just because the routing settings inside Google are more complicated than just filters on the border.

Why don't filters work?

Nobody cares . This is the main reason - everyone doesn’t care. The administrator of a small provider or company that connected to the provider via BGP took MikroTik, configured BGP on it and does not even know that there you can configure filters.

Configuration errors . They debuted something, made a mistake in the mask, put the wrong mesh - and now, again, a mistake.

There is no technical possibility . For example, communication providers have many customers. In a smart way, you should automatically update the filters for each client - make sure that he has a new network, that he has leased his network to someone. Keeping track of this is difficult; with your hands it is even more difficult. Therefore, they simply put relaxed filters or do not put filters at all.

Exceptions. There are exceptions for beloved and large customers. Especially in the case of inter-operator joints. For example, TransTeleCom and Rostelecom have a bunch of networks and a junction between them. If the joint lays down, it will not be good for anyone, so the filters relax or remove completely.

Outdated or irrelevant information in the IRR . Filters are built on the basis of information recorded in the IRR - Internet Routing Registry . These are registries of regional Internet registrars. Often in the registries outdated or irrelevant information, or all together.

Who are these registrars?

All Internet addresses are owned by IANA - Internet Assigned Numbers Authority . When you buy an IP network from someone, you do not buy addresses, but the right to use them. Addresses are an intangible resource, and by common agreement all of them belong to the IANA agency.

The system works like this. IANA delegates the management of IP addresses and autonomous system numbers to five regional registrars. Those issue autonomous systems to LIR - local Internet registrars . Next, LIRs allocate IP addresses to end users.

The disadvantage of the system is that each of the regional registrars maintains its own registries in its own way. Everyone has their own views on what information should be contained in the registries, who should or should not check it. The result is a mess, which is now.

How else can you deal with these problems?

IRR is mediocre quality . With IRR it’s clear - everything is bad there.

BGP-communities . This is some attribute that is described in the protocol. For example, we can attach a special community to our announcement so that a neighbor does not send our networks to his neighbors. When we have a P2P link, we only exchange our networks. So that the route does not accidentally go to other networks, we hang up the community.

Community is not transitive. This is always a contract for two, and this is their drawback. We cannot hang out any community, except for one, which is accepted by default by all. We cannot be sure that this community will be accepted and correctly interpreted by everyone. Therefore, in the best case, if you agree with your uplink, he will understand what you want from him in the community. But his neighbor may not understand, or the operator will simply reset your mark, and you will not achieve what you wanted.

RPKI + ROA solves only a small part of the problems . RPKI is a Resource Public Key Infrastructure - a special framework for signing routing information. It’s a good idea to get LIRs and their clients to maintain an up-to-date address space database. But there is one problem with him.

RPKI is also a hierarchical public key system. Does IANA have a key from which RIR keys are generated, and from them LIR keys? with which they sign their address space using ROAs - Route Origin Authorization:

- I assure you that this prefix will be announced on behalf of this autonomy.

In addition to ROA, there are other objects, but about them somehow later. It seems that the thing is good and useful. But it does not protect us from leaks from the word "completely" and does not solve all the problems with hijacking prefixes. Therefore, players are not in a hurry to implement it. Although there are already assurances from large players such as AT&T and large IXs that prefixes with an invalid ROA record will drop.

Perhaps they will do this, but so far we have a huge number of prefixes that are not signed at all. On the one hand, it is unclear whether they are announced validly. On the other hand, we cannot drop them by default, because we are not sure whether this is correct or not.

What else is there?

BGPSec . This is a cool thing that academics came up with for the pink pony network. They said:

- We have RPKI + ROA - a mechanism for certifying the signature of the address space. Let's get a separate BGP attribute and call it BGPSec Path. Each router will sign with its signature the announcements that it announces to its neighbors. So we get the trusted path from the chain of signed announcements and can verify it.

In theory this is good, but in practice there are many problems. BGPSec breaks many existing BGP mechanisms for choosing the next hop and managing inbound/outbound traffic directly on the router. BGPSec does not work until 95% of all market participants have adopted it, which is a utopia in itself.

BGPSec has huge performance issues. On current hardware, announcements are verified at about 50 prefixes per second. For comparison: the current Internet table of 700,000 prefixes would take about four hours to load (700,000 / 50 = 14,000 seconds), and in that time it would change another 10 times.

BGP Open Policy (Role-based BGP). A fresh proposal based on the Gao-Rexford model, named after two scientists who do BGP research.

The Gao-Rexford model is as follows. To simplify, in BGP there is a small number of interaction types:

- provider to customer;

- peer to peer (P2P);

- internal interaction, for example, iBGP.

Based on the role of the router, certain import/export policies can already be set by default. The administrator does not need to configure prefix lists. From the role that the routers agree on, and which can be configured, we already get some default filters. For now this is a draft under discussion at the IETF. I hope that soon we will see it as an RFC and implemented in hardware.
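
How a role can turn into a default filter is easiest to see on the classic Gao-Rexford export rule: routes learned from a customer may be exported to anyone, while routes learned from a peer or a provider may be exported only to customers. The Python sketch below illustrates that rule only. The `Role` enum and the `may_export` function are hypothetical names, not taken from the draft, and locally originated routes (which may go to everyone) are left out for brevity.

```python
from enum import Enum


class Role(Enum):
    """Role of the neighbor as seen from the local autonomous system."""
    CUSTOMER = "customer"
    PEER = "peer"
    PROVIDER = "provider"


def may_export(learned_from: Role, export_to: Role) -> bool:
    """Gao-Rexford export rule for a route learned from one neighbor."""
    if learned_from is Role.CUSTOMER:
        return True  # customer routes are advertised to everyone
    # Peer and provider routes go only "down", to customers.
    return export_to is Role.CUSTOMER


# A route learned from one provider and re-announced to another provider
# or to a peer is the typical route leak, so the default filter rejects it.
for target in Role:
    print("provider ->", target.value, may_export(Role.PROVIDER, target))
```

With roles agreed per session, a rule like this can be applied without hand-written prefix lists, which is the point made in the text.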

Large Internet Service Providers

Consider the provider CenturyLink as an example. It is the third largest US provider, serving 37 states and operating 15 data centers.

In December 2018, CenturyLink was down across the US for 50 hours. During the incident, ATMs had problems in two states, and 911 did not work for several hours in five states. The lottery in Idaho was disrupted. The US Federal Communications Commission is currently investigating the incident.

The cause of the tragedy was a single network card in one data center. The card failed, started sending malformed packets, and all 15 of the provider's data centers went down.

For this provider, the idea of “too big to fail” did not work. It does not work at all. You can take any major player and bring it down with some trifle. In the US, connectivity is still in good shape. CenturyLink customers who had a backup link switched to it en masse. Then the alternative operators complained that their links were overloaded.

If a hypothetical Kazakhtelecom goes down, the whole country will be left without the Internet.

Corporations

Surely the Internet's connectivity rests on Google, Amazon, Facebook and other corporations? No, they break it too.

In 2017, at the ENOG13 conference in St. Petersburg, Geoff Huston of APNIC presented the talk “Death of Transit”. It says that we are used to interaction, cash flows and Internet traffic being vertical. There are small providers that pay for connectivity to larger ones, and those in turn pay for connectivity to global transit.

Now we have such a vertically oriented structure. Everything would be fine, but the world is changing: large players are laying their own transoceanic cables to build their own backbones.

News about the CDN cable.

In 2018, TeleGeography released a study showing that more than half of Internet traffic no longer travels over the Internet but over the backbones of major players. This traffic is related to the Internet, but it is not the same network that we have been talking about.

The Internet is breaking up into a wide range of loosely coupled networks.

Microsoft has its own network, Google has its own, and they overlap only slightly. Traffic that originated somewhere in the USA travels over Microsoft channels across the ocean to a CDN somewhere in Europe, then reaches your provider via the CDN or an IX and arrives at your router.

Decentralization disappears.

This strength of the Internet, which was supposed to help it survive a nuclear explosion, is being lost. Points of concentration of users and traffic appear. If a hypothetical Google Cloud goes down, there will be many victims at once. We partly felt this when Roskomnadzor blocked AWS. And the CenturyLink example shows that a single small part is enough for this.

Previously, not everything broke, and not for everyone. In the future we may reach the point where affecting one major player breaks a lot of things, in many places and for many people.

States

States are next in line, and this is what usually happens with them.

Here our Roskomnadzor is not a pioneer at all. Similar Internet shutdown practices exist in Iran, India and Pakistan. England has a bill on the possibility of shutting down the Internet.

Any large state wants to get a switch for turning off the Internet, either entirely or in parts: Twitter, Telegram, Facebook. They do not understand that they will never succeed, but they really want to. The kill switch is used, as a rule, for political purposes: to eliminate political competitors, or because elections are around the corner, or because Russian hackers broke something again.

DDoS attacks

I will not take bread from my colleagues at Qrator Labs; they do this much better than I do. They publish an annual report on Internet stability. Here is what they wrote in the report for 2018.

The average duration of DDoS attacks has dropped to 2.5 hours. Attackers are starting to count their money too, and if a resource does not go down immediately, they quickly leave it alone.

The intensity of attacks is growing. In 2018, we saw 1.7 Tbit/s on the Akamai network, and this is not the limit.

New attack vectors appear and old ones get stronger. New protocols appear that are susceptible to amplification, and new attacks appear on existing protocols, especially TLS and the like.

Most traffic comes from mobile devices. Internet traffic is shifting to mobile clients. Both those who attack and those who defend need to be able to work with this.

Nobody is invulnerable. This is the main idea: there is no universal protection that is guaranteed to protect against any DDoS.

The only system that cannot be taken down is one that is not connected to the Internet.

I hope I scared you enough. Let’s now think what to do with it.

What to do?!

If you have free time, the desire and knowledge of English, participate in working groups: IETF, RIPE WG. These are open mailing lists: subscribe to them, take part in discussions, come to conferences. If you have LIR status, you can vote on various initiatives, for example in RIPE.

For mere mortals, there is monitoring: to know what is broken.

Monitoring: what to check?

Plain ping, and not only as a binary check of whether it works or not. Record RTT history so that you can look at anomalies later.
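
One possible way to use recorded RTT history is to compare each new sample with the median of recent samples. The Python sketch below assumes that RTT values in milliseconds are already being collected by some pinger. The `RttHistory` class, the window of 20 samples, the minimum of 5 samples and the factor of 3 are arbitrary illustrative choices, not recommendations from the talk.

```python
from collections import deque
from statistics import median
from typing import Optional


class RttHistory:
    """Keeps recent RTT samples and flags values far above the usual level."""

    def __init__(self, window: int = 20, factor: float = 3.0) -> None:
        self.samples = deque(maxlen=window)  # sliding window of RTTs in ms
        self.factor = factor

    def add(self, rtt_ms: Optional[float]) -> str:
        """Record one sample; None means the ping got no reply."""
        if rtt_ms is None:
            return "loss"
        # Use a baseline only once there is enough history to trust it.
        baseline = median(self.samples) if len(self.samples) >= 5 else None
        self.samples.append(rtt_ms)
        if baseline is not None and rtt_ms > baseline * self.factor:
            return "anomaly"
        return "ok"


history = RttHistory()
for rtt in [10.0, 11.0, 10.5, 9.8, 10.2, 10.1, 95.0, None, 10.3]:
    print(rtt, history.add(rtt))
```

The median is used instead of the mean so that a single spike does not shift the baseline for the samples that follow.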

Traceroute. This is a utility for determining the path data takes in TCP/IP networks. It helps detect anomalies and blocking.

HTTP checks of custom URLs and TLS certificates help detect blocking or DNS spoofing for an attack, which is almost the same thing. Blocking is often done by spoofing DNS and redirecting traffic to a stub page.

If you have an application, check from your clients, where possible, how your origin resolves in different places. This reveals DNS interception anomalies, which providers are sometimes guilty of.
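
Comparing how the same name resolves at different vantage points can be reduced to a set comparison. The Python sketch below assumes the answers have already been gathered (from client applications, probes or your own nodes) into a mapping from vantage point to the IP addresses it received. The function name, the vantage labels and the documentation-range addresses are made up for illustration.

```python
from typing import Dict, Set


def find_dns_anomalies(expected: Set[str],
                       answers: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return, per vantage point, the addresses that are not expected."""
    anomalies = {}
    for vantage, ips in answers.items():
        unexpected = ips - expected
        if unexpected:
            anomalies[vantage] = unexpected
    return anomalies


# Hypothetical data: one vantage point gets an address we never published,
# which is what a stub page or a spoofed answer would look like.
EXPECTED = {"203.0.113.10", "203.0.113.11"}
ANSWERS = {
    "client-moscow": {"203.0.113.10"},
    "client-berlin": {"203.0.113.11", "203.0.113.10"},
    "client-somewhere": {"198.51.100.66"},
}

print(find_dns_anomalies(EXPECTED, ANSWERS))
```

If the origin sits behind a CDN or GeoDNS, the expected set has to include every address that may legitimately be returned, otherwise normal answers will be reported as anomalies.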

Monitoring: where to check?

There is no universal answer. Check from wherever your users come from. If users are in Russia, check from Russia, but do not limit yourself to it. If your users live in different regions, check from those regions. Better still, check from all over the world.

Monitoring: how to check?

I came up with three ways. If you know more - write in the comments.

- RIPE Atlas.

- Commercial monitoring.

- Your own network of virtual machines.

Let's talk about each of them.

RIPE Atlas is such a small box. For those who know the domestic “Inspector” - this is the same box, but with a different sticker.

RIPE Atlas is a free program. You register, receive a probe (a small router) by mail and plug it into your network. When someone else uses your probe, you earn credits. With these credits you can run measurements of your own. You can test in different ways: ping, traceroute, certificate checks. Coverage is quite large, with many nodes. But there are nuances.

The credit system does not allow you to build production solutions. There are not enough credits for ongoing research or commercial monitoring. They are enough for a short study or a one-time check. The daily credit allowance from one probe is used up by 1-2 checks.

Coverage is uneven. Since the program is free in both directions, coverage is good in Europe, in the European part of Russia and in some other regions. But if you need Indonesia or New Zealand, things are much worse: you may not even find 50 probes per country.

You cannot check HTTP from a probe. This is due to technical nuances. They promise to fix it in a new version, but for now HTTP cannot be checked. Only a certificate can be verified. Some kind of HTTP check can only be run against a special RIPE Atlas device called an Anchor.

The second way is commercial monitoring. Everything is fine with it, you are paying money after all. They promise you several tens or hundreds of monitoring points around the world and draw beautiful dashboards out of the box. But, again, there are problems.

It costs money, and in places a lot. Ping monitoring, checks from around the world and a large number of HTTP checks can cost several thousand dollars a year. If your finances allow it and you like this solution, go ahead.

Coverage may be lacking in the region of interest. The same ping can be narrowed down at most to an abstract part of the world: Asia, Europe, North America. Few monitoring systems can drill down to a specific country or region.

Weak support for custom tests. If you need something custom, and not just a curl against a URL, that is a problem too.

The third way is your own monitoring. This is a classic: “Let's write our own!”

Your own monitoring turns into the development of a software product, and a distributed one at that. You look for an infrastructure provider, work out how to deploy it and how to monitor it (monitoring needs to be monitored, right?). Support is required as well. Think ten times before taking this on. It might be easier to pay someone who will do it for you.

Monitoring BGP Anomalies and DDoS Attacks

Here things are simpler in terms of available resources. BGP anomalies are detected using specialized services such as Qrator.Radar and BGPmon. They receive a full-view table from many operators. Based on what they see from different operators, they can detect anomalies, look for amplifiers, and more. Registration is usually free: you enter your AS number, subscribe to email notifications, and the service alerts you to your problems.

Monitoring DDoS attacks is simple too. As a rule, it is based on NetFlow and logs. There are specialized systems such as FastNetMon and modules for Splunk. As a last resort, there is your DDoS protection provider. You can export NetFlow to it as well, and based on that it will notify you of attacks in your direction.
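
In its simplest form, the NetFlow-based approach boils down to summing flow records per destination and comparing the resulting rate with a threshold. The Python sketch below assumes the flow records have already been parsed into (destination IP, byte count) pairs for a fixed time window. The function name, the window and the threshold are illustrative assumptions and do not describe how FastNetMon or any other product works internally.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def detect_targets(flows: Iterable[Tuple[str, int]],
                   window_seconds: float,
                   threshold_bps: float) -> Dict[str, float]:
    """Return destinations whose inbound rate in the window exceeds the threshold."""
    bytes_per_dst = defaultdict(int)
    for dst_ip, byte_count in flows:
        bytes_per_dst[dst_ip] += byte_count
    suspects = {}
    for dst_ip, total_bytes in bytes_per_dst.items():
        bps = total_bytes * 8 / window_seconds  # bytes in window -> bits per second
        if bps > threshold_bps:
            suspects[dst_ip] = bps
    return suspects


# Hypothetical one-second window with a 10 Mbit/s alert threshold.
FLOWS = [
    ("203.0.113.10", 1_000_000),
    ("203.0.113.10", 2_000_000),
    ("203.0.113.20", 1_000),
]
print(detect_targets(FLOWS, window_seconds=1.0, threshold_bps=10_000_000))
```

NetFlow is often sampled, so in a real deployment the byte counts would need to be multiplied by the sampling rate before comparing them with a threshold.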

Conclusions

Have no illusions: the Internet will definitely break. Not everything and not for everyone, but 14 thousand incidents in 2017 hint that there will be incidents.

Your task is to notice problems as early as possible. At the very least, no later than your users do. Noticing is not enough: always keep a “Plan B” in reserve. The plan is a strategy for what you will do when everything breaks: backup operators, DCs, CDNs. The plan is a separate checklist against which you verify that everything works. The plan should work without involving network engineers, because there are usually few of them and they want to sleep.

That's all. I wish you high availability and green monitoring.

Next week in Novosibirsk, sun, high load and a high concentration of developers are expected at HighLoad++ Siberia 2019. A front of talks on monitoring, availability and testing, security and management is forecast for Siberia. Precipitation is expected in the form of written notes, networking, photos and posts on social networks. We recommend postponing all business on June 24 and 25 and booking tickets. We are waiting for you in Siberia!