Exploring a D-Link Switch after a Thunderstorm

This article is a continuation of the article "Configuring Access Level Switches on the Provider’s Network". Here I back up the correctness of that author's approach with screenshots and my own observations. So, first, a photo of the subject.

It is just like the gopher scene in the film DMB ("See the gopher? No. Me neither. But it's there!"): I can't see any cables either, but the links are up. The switch settings were reset to factory defaults with the reset system command.

For clarity, a few more screenshots:

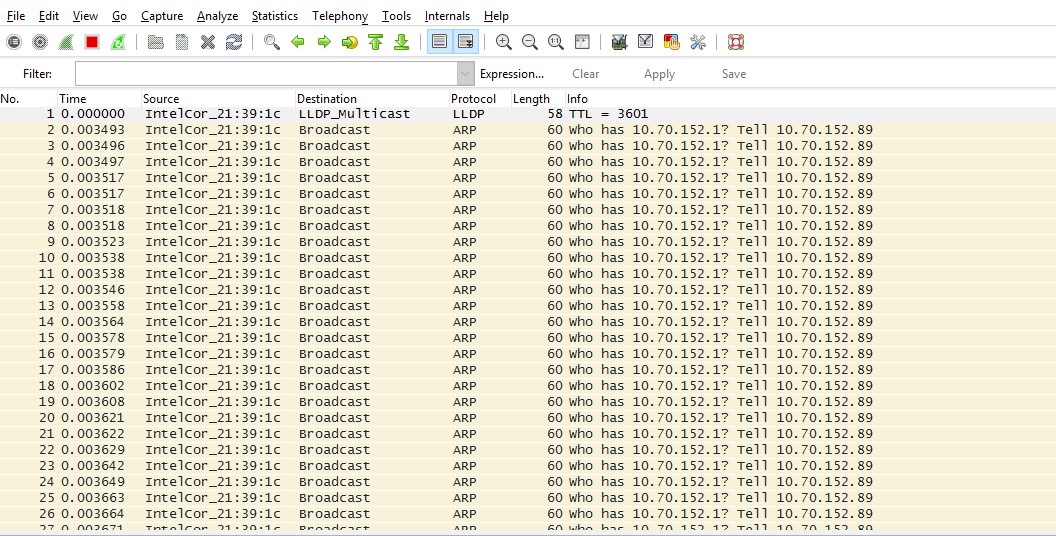

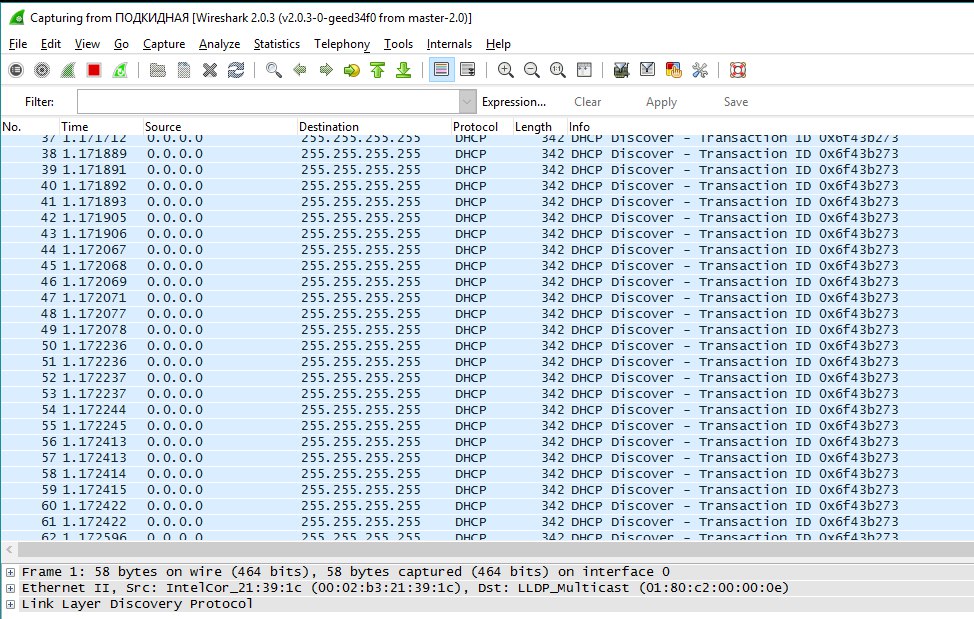

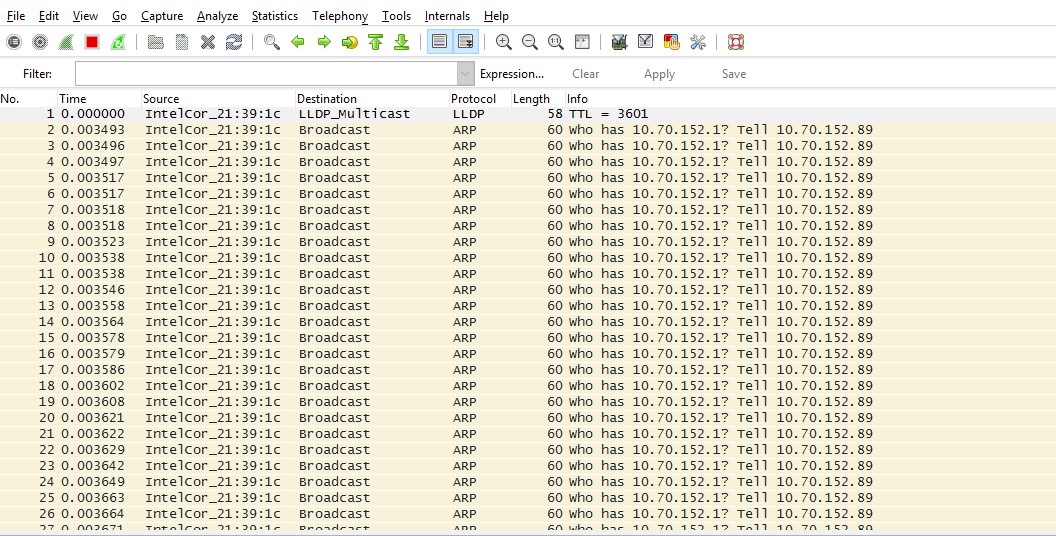

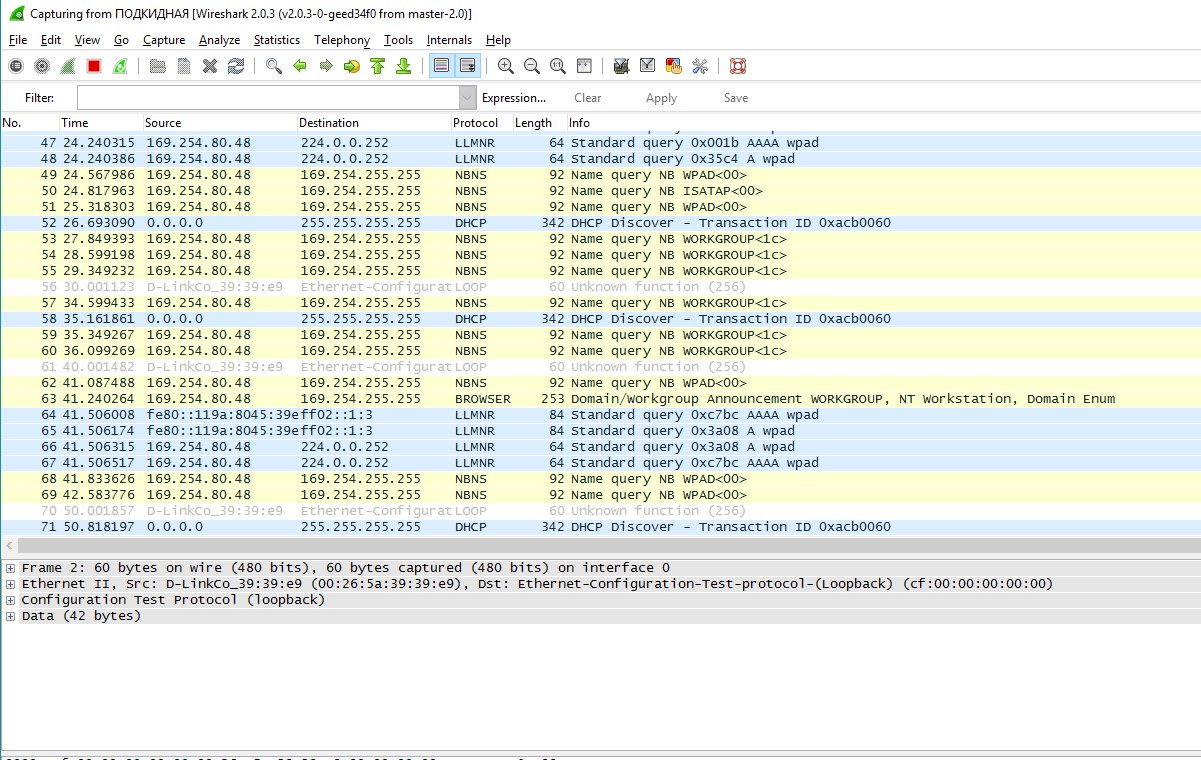

The links are up and the switch is working, but what it is actually doing will be revealed by Wireshark, and the picture is astonishing:

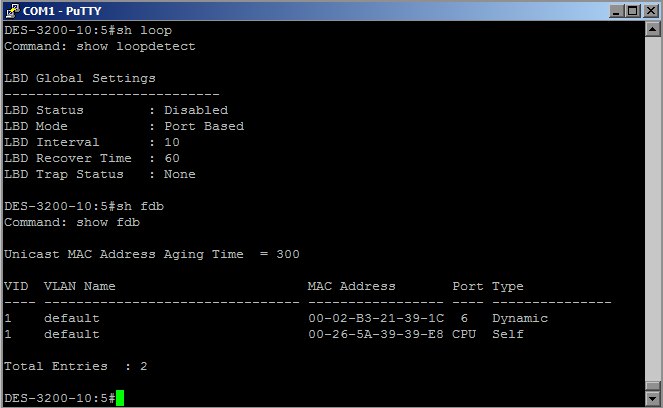

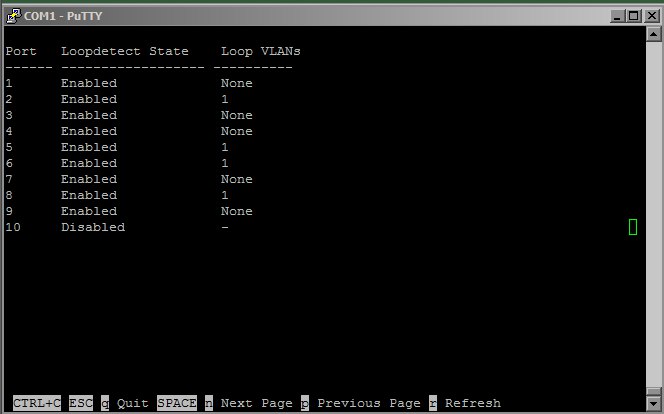

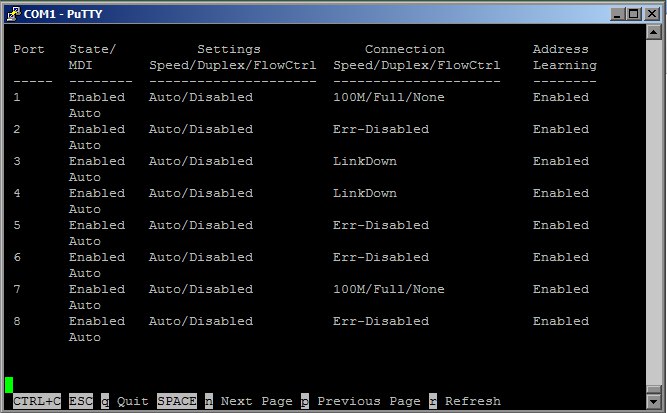

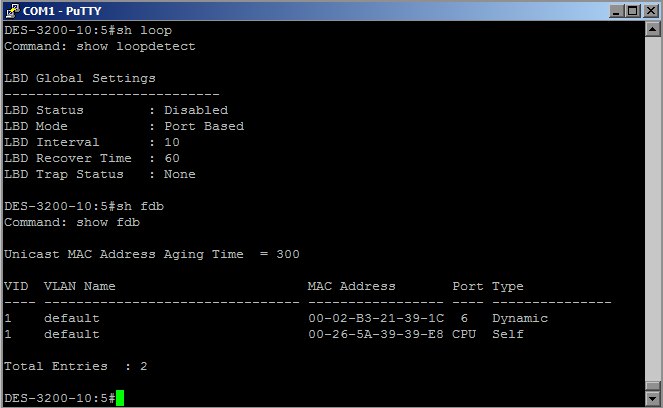

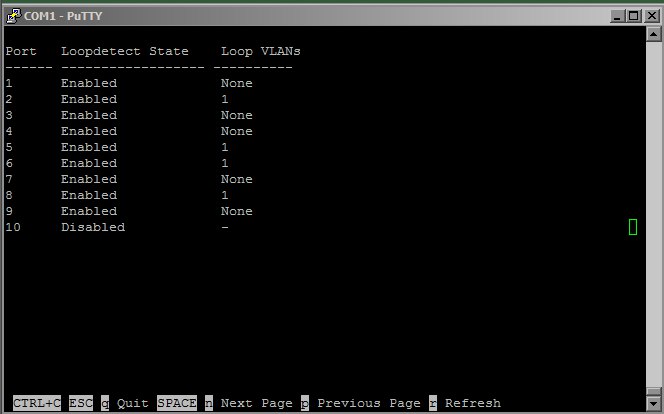

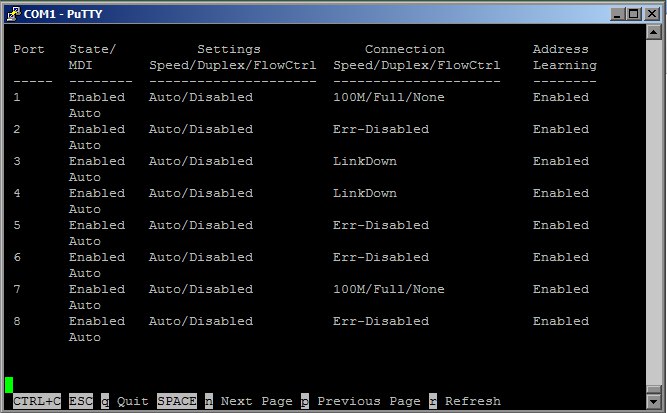

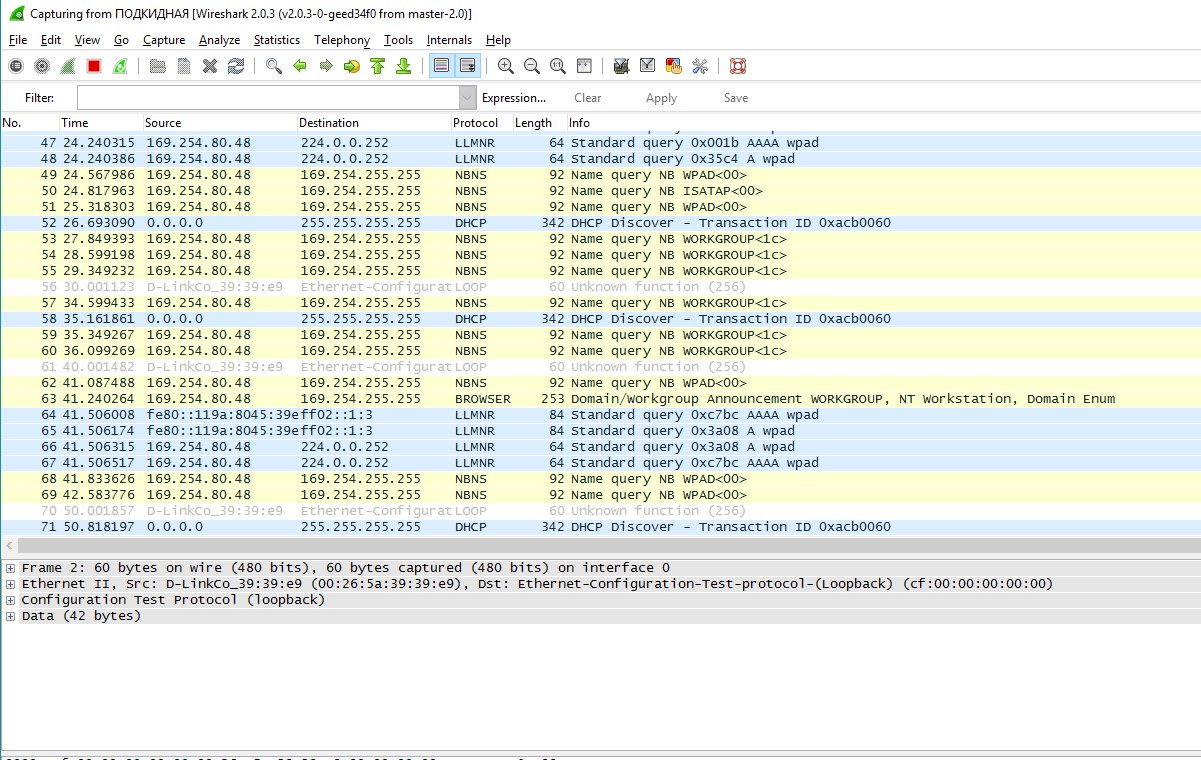

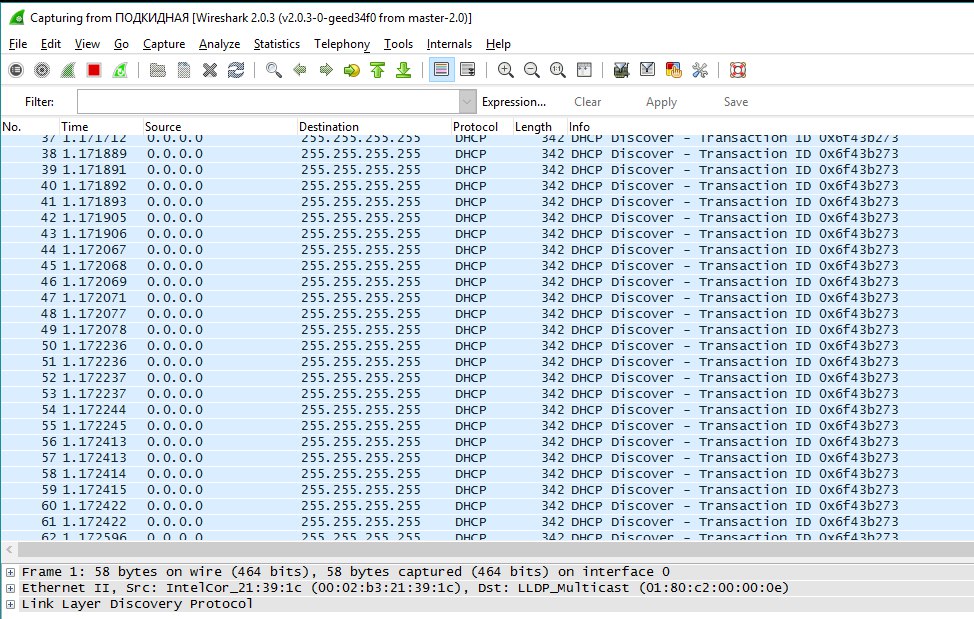

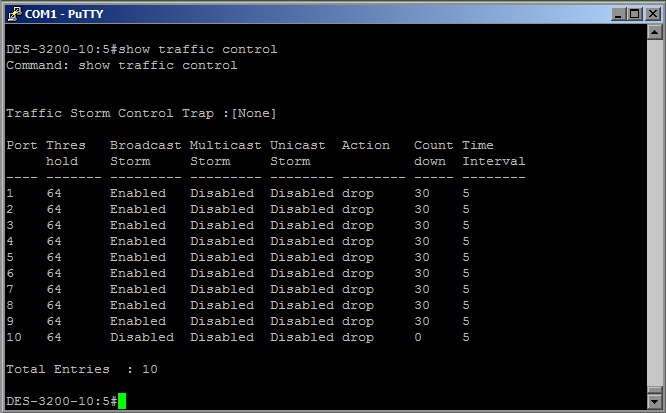

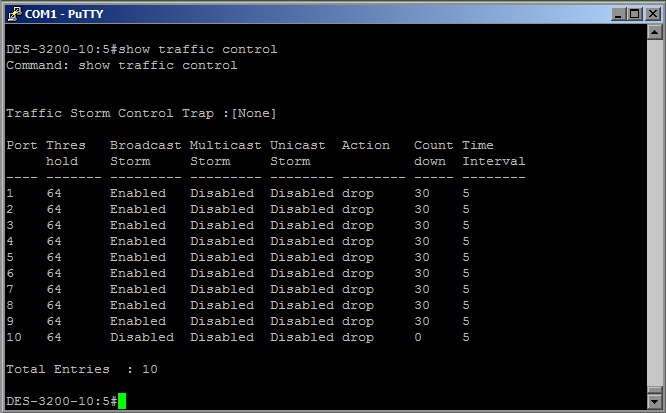

The Wireshark capture shows a large number of ARP packets generated by the switch itself, presumably as a result of activity on the failed ports. Based on this, we enable the Loop Detection function. It serves the same purpose as building a loop-free switch tree with STP, but it works while STP is turned off:

```
enable loopdetect
config loopdetect mode vlan-based
config loopdetect recover_timer 1800
config loopdetect interval 10
config loopdetect ports 1-9 state enable
```

The vlan-based mode allows loop detection on trunk ports: only traffic in the VLAN where the loop is detected is blocked, while the remaining VLANs keep working as usual. The recover_timer of 1800 seconds re-enables a blocked port after 30 minutes, and the interval of 10 seconds sets how often the check runs.

Here is how the situation changed. After the Loop Detection setting is applied, the switch logs a record about the detected loop on the faulty port, and the port itself goes into err-disabled mode, as seen in the screenshots above.
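To make the port-based versus vlan-based difference concrete, here is a simplified Python model of the behaviour described above. It is a sketch under assumptions, not D-Link's firmware logic: we assume a loop is detected when the switch's own probe frame comes back in, and that a blocked port (port-based) or port/VLAN pair (vlan-based) is released once recover_timer seconds have passed. The LoopDetector class, its methods and VLAN 200 are hypothetical.

```python
"""Simplified, hypothetical model of edge loop detection (not D-Link firmware)."""
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Key = Tuple[int, Optional[int]]


@dataclass
class LoopDetector:
    mode: str = "vlan-based"    # "port-based" or "vlan-based"
    recover_timer: int = 1800   # seconds a detected loop stays blocked
    blocked: Dict[Key, int] = field(default_factory=dict)  # key -> unblock time

    def _key(self, port: int, vlan: int) -> Key:
        # vlan-based blocks only the looped VLAN on the port;
        # port-based blocks the whole port regardless of VLAN.
        return (port, vlan) if self.mode == "vlan-based" else (port, None)

    def probe_returned(self, port: int, vlan: int, now: int) -> None:
        """Our own probe came back in on `port`/`vlan`: a loop is present."""
        self.blocked[self._key(port, vlan)] = now + self.recover_timer

    def forwards(self, port: int, vlan: int, now: int) -> bool:
        """Is traffic in `vlan` on `port` forwarded at time `now` (seconds)?"""
        key = self._key(port, vlan)
        until = self.blocked.get(key)
        if until is None:
            return True
        if now >= until:
            del self.blocked[key]  # recovered; the next probe re-checks the loop
            return True
        return False


if __name__ == "__main__":
    lbd = LoopDetector(mode="vlan-based")
    lbd.probe_returned(port=1, vlan=156, now=0)
    print(lbd.forwards(1, 156, now=10))    # False: the looped VLAN is blocked
    print(lbd.forwards(1, 200, now=10))    # True: other VLANs on the trunk still pass
    print(lbd.forwards(1, 156, now=1800))  # True: released after recover_timer
```

With mode="port-based" the same scenario would also block VLAN 200 on port 1, which is exactly what the vlan-based mode avoids on trunk ports.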

The type of traffic captured by Wireshark also changed: now we see a large number of DHCP requests (the connected computer is set to obtain an IP address automatically).

It follows that the broadcast traffic problem remains, since DHCP requests are broadcasts too. This hurts the network: switches flood broadcast packets out of all their ports, the copies multiply, and this leads to a broadcast storm. So we decided to limit broadcast traffic with the following command (packets above the threshold are dropped):

```
config traffic control 1-9 broadcast enable action drop threshold 64 countdown 5 time_interval 30
```
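The drop action can be pictured as a per-port counter of broadcast frames. The sketch below is an assumption-laden model, not the switch's actual metering: it treats threshold 64 as broadcast packets per second per port, counted in fixed one-second windows, and it does not model the countdown and time_interval parameters. The BroadcastLimiter name is hypothetical.

```python
"""Hypothetical model of broadcast storm control with action "drop"."""
from typing import Dict, List


class BroadcastLimiter:
    def __init__(self, threshold_pps: int = 64) -> None:
        self.threshold = threshold_pps
        # port -> [current one-second window, broadcast frames accepted in it]
        self.window: Dict[int, List[int]] = {}

    def accept(self, port: int, timestamp: float) -> bool:
        """Return True to forward a broadcast frame, False to drop it."""
        second = int(timestamp)
        state = self.window.get(port)
        if state is None or state[0] != second:
            state = [second, 0]  # a new second has started: reset the counter
            self.window[port] = state
        if state[1] >= self.threshold:
            return False  # over the threshold for this second: drop
        state[1] += 1
        return True


if __name__ == "__main__":
    limiter = BroadcastLimiter(threshold_pps=64)
    # A storm of 1000 broadcast frames arriving on port 1 within one second.
    forwarded = sum(limiter.accept(1, i / 1000) for i in range(1000))
    print(forwarded)               # 64
    print(limiter.accept(2, 0.5))  # True: other ports have their own budget
    print(limiter.accept(1, 1.0))  # True: port 1 gets a fresh budget next second
```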

In addition, we prevent the switch from passing DHCP responses received on any port except the uplink. Besides restricting traffic, this lets us block rogue DHCP servers on subscriber ports. Here is an example CLI configuration: an ACL that permits DHCP responses (UDP source port 67) from port 10, the uplink, and denies them on ports 1-9:

```
create access_profile ip udp src_port_mask 0xFFFF profile_id 1
config access_profile profile_id 1 add access_id 1 ip udp src_port 67 port 10 permit
config access_profile profile_id 1 add access_id 2 ip udp src_port 67 port 1-9 deny
```
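The ACL is evaluated for every incoming packet. The following sketch mirrors the two rules of profile_id 1 in Python, assuming that rules are checked in access_id order with the first match winning and that packets matching no rule are permitted. Rule, RULES and evaluate are hypothetical names, not part of any D-Link tool.

```python
"""Hypothetical first-match evaluation of the DHCP-filtering ACL."""
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    access_id: int
    udp_src_port: int
    ingress_ports: range
    action: str  # "permit" or "deny"


# Mirrors the two rules configured in profile_id 1.
RULES = [
    Rule(1, 67, range(10, 11), "permit"),  # DHCP replies arriving on the uplink (port 10)
    Rule(2, 67, range(1, 10), "deny"),     # DHCP replies arriving on subscriber ports 1-9
]


def evaluate(ingress_port: int, udp_src_port: Optional[int]) -> str:
    """Return the action for a packet; udp_src_port is None for non-UDP traffic."""
    for rule in sorted(RULES, key=lambda r: r.access_id):
        if udp_src_port == rule.udp_src_port and ingress_port in rule.ingress_ports:
            return rule.action
    return "permit"  # assumption: no matching rule means the packet passes


if __name__ == "__main__":
    print(evaluate(10, 67))  # permit: reply from the provider's DHCP server
    print(evaluate(3, 67))   # deny: a subscriber's own DHCP server
    print(evaluate(3, 68))   # permit: a client's request (source port 68)
```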

What was all this for, and what did we get as a result?

So, after the configuration, traffic on the working port is normal, with only small bursts of broadcast traffic, because the failed ports are periodically re-enabled when the recovery timer expires. In addition, the log gets lines of the form “Port 1 VID 156 LBD recovered. Loop detection restarted”, and, if configured, a trap is sent to the monitoring server.
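On the monitoring side, a small script can pick these recovery messages out of the switch log. This sketch only understands the message format quoted above; the sample log lines are made up, and counting recoveries per port and VLAN is just one possible way to spot ports that keep looping. The function names are hypothetical.

```python
"""Hypothetical parser for the LBD recovery messages quoted above."""
import re
from collections import Counter
from typing import Iterable, Optional, Tuple

# Matches only the recovery message format, e.g.
# "Port 1 VID 156 LBD recovered. Loop detection restarted".
LBD_RECOVERED = re.compile(
    r"Port (?P<port>\d+) VID (?P<vid>\d+) LBD recovered\. Loop detection restarted"
)


def parse_recovery(line: str) -> Optional[Tuple[int, int]]:
    """Return (port, vid) for an LBD recovery line, else None."""
    m = LBD_RECOVERED.search(line)
    if m is None:
        return None
    return int(m.group("port")), int(m.group("vid"))


def recoveries_per_port(lines: Iterable[str]) -> Counter:
    """Count recoveries per (port, vid); frequent ones point at damaged ports."""
    return Counter(p for p in map(parse_recovery, lines) if p is not None)


if __name__ == "__main__":
    # Made-up log excerpt for illustration only.
    log = [
        "Port 1 VID 156 LBD recovered. Loop detection restarted",
        "Port 1 VID 156 LBD recovered. Loop detection restarted",
        "Port 4 VID 156 LBD recovered. Loop detection restarted",
        "Some unrelated message",
    ]
    print(recoveries_per_port(log).most_common())  # [((1, 156), 2), ((4, 156), 1)]
```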

All these measures let us work methodically on eliminating the consequences of a thunderstorm in the city network (that is, replacing the damaged switches). As practice has shown (more than 5 years as a network administrator at a telecom operator), the D-Link DES-3200 series is very fond of thunderstorms.

Thank you for taking the time to read this. Special thanks to my colleagues for their comments and recommendations, and for their interest in this study.

PS

Series of articles: "Constructive admin laziness, or how I automated a config", aimed at reducing the workload of everyone's favorite computer people, the network administrators.