Samsung Launches Free Online Computer Vision Neural Network Course

Still not sure why ReLU works better than sigmoid, how Rprop differs from RMSprop, why signals are normalized, or what a skip connection is? Why does a neural network need a computational graph, and what error is it that gets propagated backwards? Do you have a computer vision project, or are you perhaps building an intergalactic robot to deal with dirty dishes and want it to decide for itself whether to wash them or leave them as they are?

We are launching the open course “Neural Networks and Computer Vision”, aimed at those who are taking their first steps in this area. The course was developed by experts from Samsung Research Russia: the Samsung Research Center and the Samsung Artificial Intelligence Center in Moscow. Strengths of the course:

- the course authors know what they are talking about: Mikhail Romanov and Igor Slinko are engineers at the Samsung Artificial Intelligence Center in Moscow;

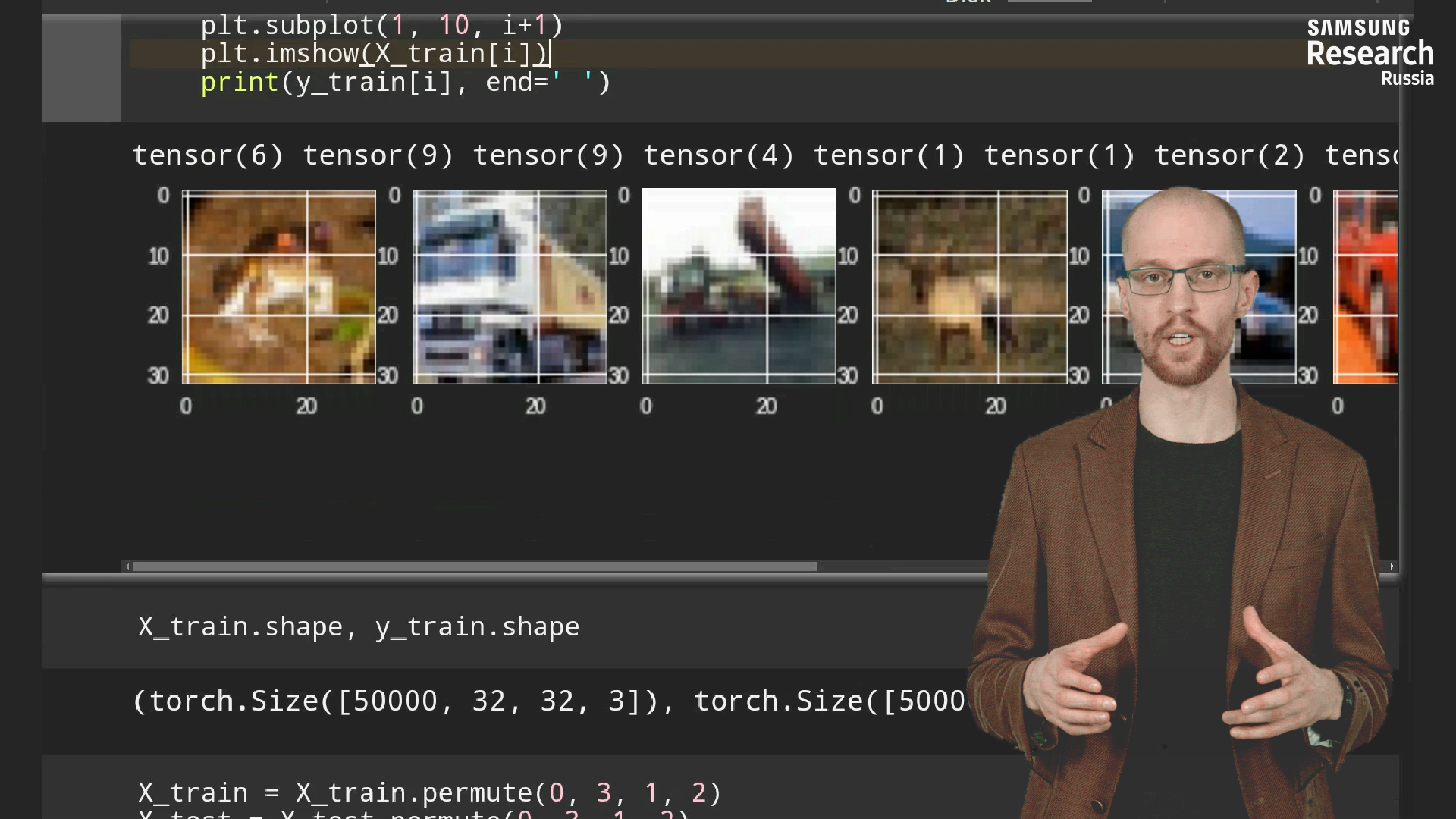

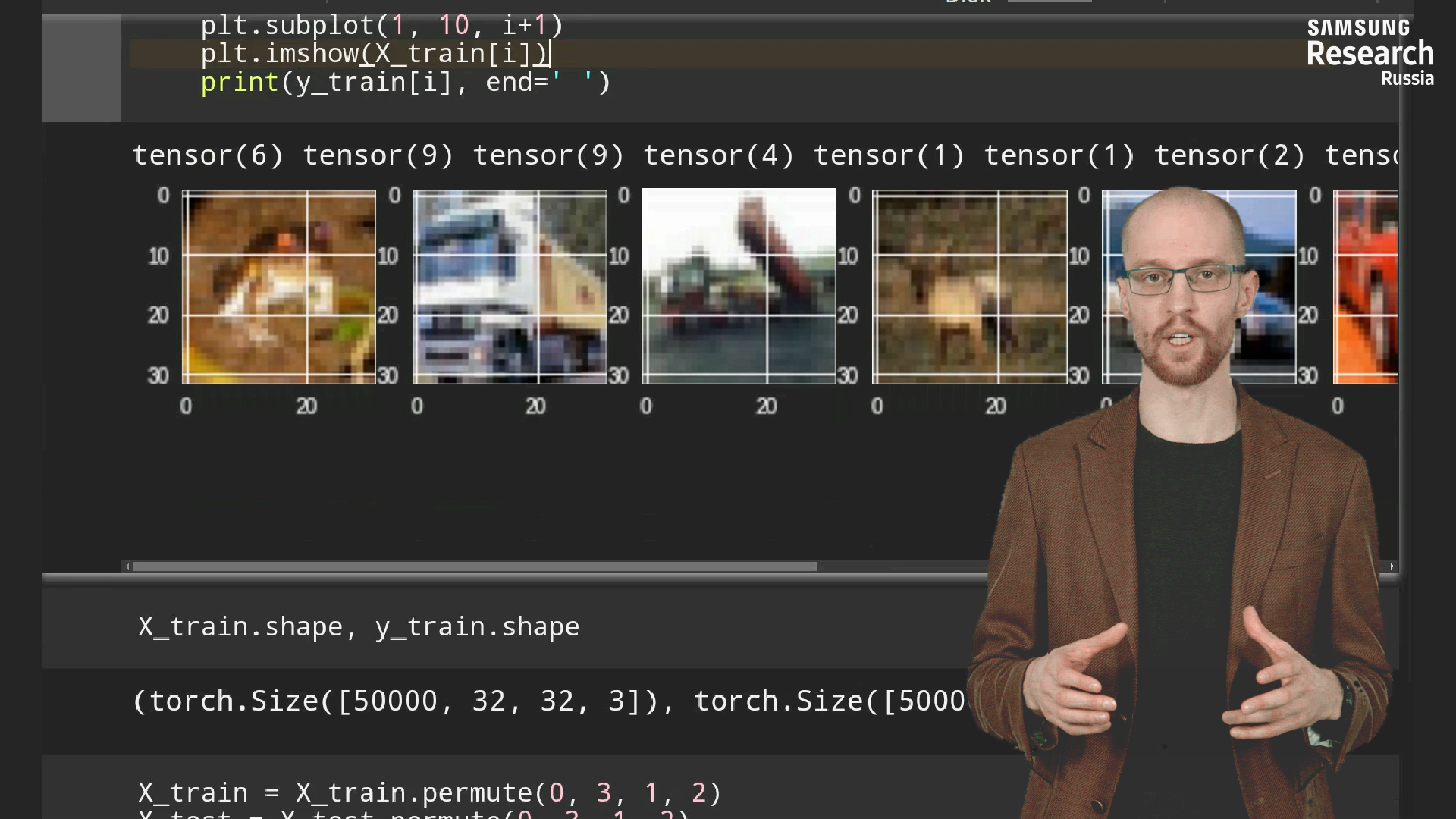

- the course combines theory, with problem sets, and hands-on practice in PyTorch;

- practice starts as soon as the minimum required theory has been covered;

- the best students will be invited for an interview at Samsung Research Russia!

The course opened on June 1 and is the first in a series of free online courses from Samsung on the Stepik platform. A Russian platform was chosen deliberately, to give the Russian-speaking audience more opportunities. The courses will focus primarily on machine learning (ML). This choice is no accident: in May 2018, the Samsung Artificial Intelligence Center opened in Moscow, where leading ML researchers such as Viktor Lempitsky (the most cited Russian scientist in the Computer Science category), Dmitry Vetrov, Anton Konushin, Sergey Nikolenko and many others work.

Over 6 weeks of video lectures and practical exercises, at 3-5 hours a week, you will learn how to solve basic machine vision problems and gain the theoretical grounding needed for further independent study of the field.

The course can be taken in two modes: basic and advanced. In basic mode, it is enough to watch the lectures, answer the lecture questions and complete the workshop exercises. In advanced mode, you will also need to solve theoretical problems that require a fairly broad knowledge of first- and second-year technical university mathematics.

The course systematically introduces the terminology and principles of building neural networks, and covers current tasks, optimization methods, loss functions and the basic neural network architectures. The training concludes with solving an illustrative applied computer vision problem.

Course teachers

Mikhail Romanov

A graduate of the Moscow Institute of Physics and Technology (MIPT) and of the Yandex School of Data Analysis. He received his PhD from the Technical University of Denmark.

Mikhail works at the Samsung Artificial Intelligence Center in Moscow, where he focuses on machine vision tasks for robots, and he loves to teach. He has many ideas and topics for further courses. Asked in the exit questionnaire to rate Mikhail as a teacher on a 5-point scale, one of the graduates of AI Bootcamp 2018, held at the Samsung Artificial Intelligence Center, wrote: “it’s a pity that there is no rating of six!”

Igor Slinko

A graduate of MIPT and of the Yandex School of Data Analysis. Igor works at the Samsung Artificial Intelligence Center in Moscow, where he also focuses on machine vision tasks for robots, and he teaches machine learning at the Higher School of Economics. Last year and this year he was a volunteer lecturer at the Deep Learning workshop of the Summer School social and educational project, organized in partnership with Samsung Research Russia.

Course program

Neural network:

- Mathematical model of neuron

- Boolean operations in the form of neurons

- From neuron to neural network

- Workshop: Basic Work in PyTorch
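
To give a flavour of the "Boolean operations in the form of neurons" topic, here is a minimal sketch in plain Python. It is not taken from the course materials: the function names are hypothetical, and the weights and biases are just one hand-picked choice that happens to work. It shows a single threshold neuron reproducing AND, OR and NOT, and a two-layer combination for XOR, which a single neuron cannot compute.

```python
def neuron(inputs, weights, bias):
    """Threshold neuron: returns 1 when the weighted sum plus bias is positive."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def logical_and(a, b):
    # Fires only when both inputs are 1 (1 + 1 - 1.5 > 0).
    return neuron([a, b], weights=[1.0, 1.0], bias=-1.5)


def logical_or(a, b):
    # Fires when at least one input is 1.
    return neuron([a, b], weights=[1.0, 1.0], bias=-0.5)


def logical_not(a):
    # A negative weight flips the input.
    return neuron([a], weights=[-1.0], bias=0.5)


def logical_xor(a, b):
    # XOR is not linearly separable, so it needs two layers of neurons:
    # (a OR b) AND NOT (a AND b).
    return logical_and(logical_or(a, b), logical_not(logical_and(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, logical_and(a, b), logical_or(a, b), logical_xor(a, b))
```

Stacking neurons like this is the step "from neuron to neural network": the hidden neurons compute intermediate features that make the final decision linearly separable.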

Build the first neural network:

- Recovering a dependency with a neural network

- Neural network components

- Theoretical Problems: Dependency Recovery

- Neural network tuning algorithm

- Theoretical Problems: Computational Graphs and BackProp

Tasks solved using neural networks:

- Binary classification? Binary Cross Entropy!

- Multiclass classification? Softmax!

- Localization, detection, super-resolution

- Theoretical Problems: Loss Functions

- Workshop: Building the First Neural Network

- Workshop: Classification in PyTorch
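
The two loss functions named in this module can be sketched in a few lines. The example below is an illustration in plain Python rather than the course's PyTorch code, and the function names are chosen here for readability. Binary cross-entropy scores a predicted probability against a 0/1 label, while softmax turns raw class scores into probabilities that the multiclass cross-entropy then scores.

```python
import math


def sigmoid(z):
    """Squash a raw score into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(p, y):
    """Loss for one example: p is the predicted probability of class 1, y is 0 or 1."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1.

    Subtracting the maximum score first does not change the result
    but avoids overflow in exp() for large scores.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Multiclass loss: negative log-probability assigned to the correct class."""
    return -math.log(softmax(logits)[target])


if __name__ == "__main__":
    print(binary_cross_entropy(sigmoid(2.0), 1))   # confident and right: small loss
    print(binary_cross_entropy(sigmoid(2.0), 0))   # confident and wrong: large loss
    print(softmax([1.0, 2.0, 3.0]))
    print(cross_entropy([1.0, 2.0, 3.0], target=2))
```

In both cases the loss is the negative log-probability of the correct answer, so it is small for confident correct predictions and grows quickly for confident wrong ones.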

Optimization methods:

- Plain gradient descent

- Gradient Descent Modifications

- Theoretical Problems: Understanding SGD with momentum

- Workshop: Implementing Gradient Descent Using PyTorch

- Workshop: Classification of Handwritten Digits by a Fully Connected Network
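
As a rough illustration of gradient descent with momentum, the sketch below minimizes a one-dimensional quadratic in plain Python. It is an assumption-laden toy rather than the course's implementation: the function name, the test function f(x) = (x - 3)^2 and the hyperparameters are all chosen here for the example, and the gradient is exact rather than stochastic.

```python
def gradient_descent_momentum(grad, x0, lr=0.1, momentum=0.9, steps=200):
    """Minimize a 1-D function given its gradient.

    The velocity is a decaying sum of past gradients; with momentum=0.0
    this reduces to plain gradient descent.
    """
    x, velocity = x0, 0.0
    for _ in range(steps):
        velocity = momentum * velocity - lr * grad(x)
        x = x + velocity
    return x


def grad_f(x):
    # Gradient of f(x) = (x - 3)^2, whose minimum is at x = 3.
    return 2.0 * (x - 3.0)


if __name__ == "__main__":
    print(gradient_descent_momentum(grad_f, x0=0.0))                # with momentum
    print(gradient_descent_momentum(grad_f, x0=0.0, momentum=0.0))  # plain descent
```

Both runs should end up close to 3. On a simple bowl like this one momentum mostly adds overshoot; it tends to pay off on long, narrow valleys where plain gradient descent zigzags.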

Convolutional neural networks:

- Convolution, cascade of convolutions

- History of Architectures: LeNet (1998)

- History of Architectures: AlexNet (2012) and VGG (2014)

- History of Architectures: GoogLeNet and ResNet (2015)

- Workshop: Handwritten digit recognition with a convolutional neural network
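
The convolution operation at the heart of this module can be written out directly. The following is a minimal plain-Python sketch, not course code: the function name and the tiny 3x3 example are made up for illustration, and it handles a single channel with no padding and stride 1. Like the convolution layers in deep learning libraries, it slides the kernel over the image without flipping it, which is strictly speaking cross-correlation.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (both lists of lists) and sum the products.

    No padding, stride 1, so the output shrinks by (kernel size - 1)
    along each dimension.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    image = [
        [1, 2, 3],
        [4, 5, 6],
        [7, 8, 9],
    ]
    # A small diagonal-difference kernel: top-left pixel minus bottom-right pixel.
    kernel = [
        [1, 0],
        [0, -1],
    ]
    print(conv2d(image, kernel))  # [[-4, -4], [-4, -4]]
```

A cascade of convolutions simply applies this operation repeatedly, each layer working on the output of the previous one.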

Regularization, normalization, maximum likelihood method:

- Regularization and neural networks

- Data normalization

- Workshop: Solving a classification problem on the CIFAR dataset

- Maximum likelihood method

- Workshop: Transfer learning, using a Kaggle competition as an example

Student requirements

The course is designed for students who are taking their first steps in machine learning. What do you need?

- Basic knowledge of mathematical statistics.

- Readiness to program in Python.

- If you want to take the course in advanced mode, a good knowledge of calculus, linear algebra, probability theory and statistics.

Challenge accepted? Then head over to the course!