Restoring photos using neural networks

Hi everyone, I work as a research programmer in the computer vision team of Mail.ru Group. For Victory Day this year, we decided to make a project for the restoration of military photographs . What is photo restoration? It consists of three stages:

- we find all image defects: breaks, scuffs, holes;

- paint over the defects found based on the pixel values around them;

- colorize the image.

In this article I will go through each of the stages of restoration in detail and tell you how and where we took data, what networks we learned, what we did, what rakes we stepped on.

Defect search

We want to find all the pixels related to defects in the uploaded photo. First, we need to understand what kind of photographs of the war years people will upload. We turned to the organizers of the Immortal Regiment project, who shared data with us. After analyzing them, we noticed that people often upload portraits, single or group, which have a moderate or large number of defects.

Then it was necessary to collect a training sample. The training sample for the segmentation task is an image and a mask on which all defects are marked. The easiest way is to give photos to the markers to the markers. Of course, people are good at finding defects, but the problem is that markup is a very long process.

It can take from one hour to a whole working day to mark pixels related to defects in one photo, so it is difficult to collect a sample of more than 100 photos in a few weeks. Therefore, we tried to somehow supplement our data and wrote defects on our own: we took a clean photo, applied artificial defects to it and got a mask showing us which particular parts of the image were damaged. The main part of our training sample was 79 manually marked photos, of which 11 were transferred to the test sample.

The most popular approach for the segmentation problem: take Unet with a pre-trained encoder and minimize the amount

What problems arise with this approach in the problem of segmentation of defects?

- Even if it seems to us that there are a lot of defects in the photo, that it is very dirty and very tattered by time, the area occupied by defects is still much smaller than the undamaged part of the image. To solve this problem, you can increase the weight of the positive class in

, and the optimal weight will be the ratio of the number of all pure pixels to the number of pixels belonging to the defects.

- The second problem is that if we use Unet out of the box with a pre-trained encoder, for example Albunet-18, then we lose a lot of positional information. The first layer of Albunet-18 consists of a convolution with a core of 5 and stride equal to two. This allows the network to work quickly. We sacrificed the network’s operating time for the better localization of defects: we removed max pooling after the first layer, reduced stride to 1 and reduced the convolution core to 3.

- If we work with small images, for example, compressing a picture to 256 x 256 or 512 x 512, then small defects will simply disappear due to interpolation. Therefore, you need to work with a large picture. Now in production we are segmenting defects in photographs of 1024 x 1024. Therefore, it was necessary to train the neural network in large crop of large images. And because of this, there are problems with the small size of the batch on one video card.

- During training, we have about 20 pictures placed on one card. Because of this, the estimate of the mean and variance in the BatchNorm layers is inaccurate. In-place BatchNorm helps us solve this problem , which, firstly, saves memory, and secondly, it has a version of Synchronized BatchNorm, which synchronizes statistics between all cards. Now we consider the average and variance not by 20 pictures on one card, but by 80 pictures from 4 cards. This improves network convergence.

In the end, increasing weight

As a result, our network converged on four GeForce 1080Ti in 18 hours. Inference takes 290 ms. It turns out long enough, but it’s a fee for the fact that we are well looking for small defects. Validation

Fragment Restoration

Unet helped us solve this problem again. To the input we gave him the original image and a mask on which we mark clean spaces with units, and those pixels that we want to paint over with zeros. We collected the data as follows: we took from the Internet a large dataset with pictures, for example, OpenImagesV4, and artificially added defects that are similar in shape to those found in real life. And after that they trained the network to repair the missing parts.

How can we modify Unet for this task?

You can use Partial Convolution instead of the usual convolution. Her idea is that when we collapse a region of a picture with a kernel, we do not take into account the pixel values related to defects. This helps make the painting more accurate. An example from an NVIDIA article. In the center picture, they used Unet with the usual convolution, and on the right with Partial Convolution:

We trained the network for 5 days. On the last day, we froze BatchNorm, this helped to make the borders of the painted part of the image less noticeable.

The network processes a picture of 512 x 512 in 50 ms. The validation PSNR is 26.4. However, metrics cannot be trusted unconditionally in this task. Therefore, we first ran several good models on our data, anonymized the results, and then voted for the ones that we liked more. So we chose the final model.

I mentioned that we artificially added defects to clean images. When training, you need to carefully monitor the maximum size of the superimposed defects, because with very large defects that the network has never seen in the learning process, it will wildly fantasize and give an absolutely inapplicable result. So, if you need to paint over large defects, also apply large defects during training.

Here is an example of the algorithm:

Coloring

We segmented the defects and painted them, the third step is the reconstruction of the color. Let me remind you that among the photographs of the "Immortal Regiment" there are a lot of single or group portraits. And we wanted our network to work well with them. We decided to make our own colorization, because none of the services known to us paints portraits quickly and well.

GitHub has a popular repository for coloring photos. On average, he does this job well, but he has several problems. For example, he loves to paint clothes in blue. Therefore, we also rejected it.

So, we decided to make a neural network for colorization. The most obvious idea: take a black and white image and predict three channels, red, green and blue. But, generally speaking, we can simplify our work. We can work not with the RGB representation of the color, but with the YCbCr representation. Component Y is brightness (luma). The downloaded black and white image is the Y channel, we will reuse it. It remained to predict Cb and Cr: Cb is the difference in blue color and brightness, and Cr is the difference in red color and brightness.

Why did we choose the YCbCr view? The human eye is more susceptible to changes in brightness than to color changes. Therefore, we reuse the Y-component (brightness), something that the eye is initially susceptible to, and predict Cb and Cr, in which we can make a little more mistake, since people notice less “false” in colors. This feature began to be actively used at the dawn of color television, when the channel bandwidth was not enough to transmit all the colors in full. The image was transferred to YCbCr, transferred to the Y component unchanged, and Cb and Cr were compressed twice.

How to assemble baseline

You can again take Unet with a pre-trained encoder and minimize the L1 Loss between the real CbCr and the predicted one. We want to color portraits, so in addition to photos from OpenImages, we need to add photos specific to our task.

Where can I get color photographs of people in military uniform? There are people on the Internet who paint old photographs as a hobby or to order. They do this very carefully, trying to fully comply with all the nuances. Coloring the uniform, epaulettes, medals, they turn to archival materials, so the result of their work can be trusted. In total, we used 200 hand-painted photographs. The second useful data source is the site of the Workers 'and Peasants' Red Army. One of its creators was photographed in almost all possible variants of a military uniform during the Great Patriotic War.

In some photographs, he repeated the poses of people from famous archival photographs. It’s especially good that he shot on a white background, this allowed us to augment the data very well, adding various natural objects to the background. We also used ordinary modern portraits of people, complementing them with insignia and other attributes of wartime clothes.

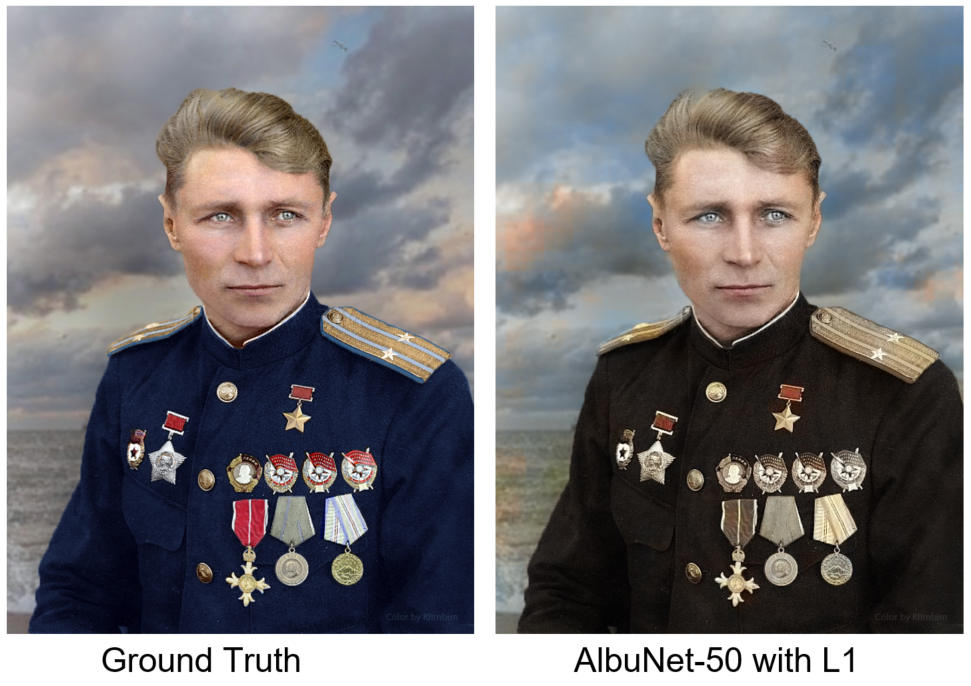

We trained AlbuNet-50 - this is Unet, in which AlbuNet-50 is used as an encoder. The network began to give adequate results: the skin is pink, the eyes are gray-blue, the shoulder straps are yellowish. But the problem is that she painted the pictures with spots. This is due to the fact that from the point of view of the L1 error it is sometimes more profitable not to do anything than to try to predict some color.

We are comparing our result with a photo of Ground Truth - manual colorization of the artist under the nickname Klimbim

How to solve this problem? We need a discriminator: a neural network, to which we will supply images to the input, and it will say how realistic this image looks. Below, one photograph is hand-painted, and the second by a neural network. Which one do you think?

Answer

The left photo is manually painted.

As a discriminator, we use the discriminator from the article Self-Attention GAN . This is a small convolutional network, in the last layers of which the so-called Self-Attention is built-in. It allows you to more "pay attention" to the image details. We also use spectral normalization. The exact explanation and motivation can be found in the article. We trained a network with a combination of L1-loss and the error returned by the discriminator. Now the network paints the details of the image better, and the background is more consistent. Another example: on the left is the result of the network trained only with L1-loss, on the right - with L1-loss and a discriminator error.

On four Geforce 1080Ti, training took two days. The network worked out in 30 ms in the 512 x 512 picture. The validation MSE was 34.4. As in the inpainting problem, metrics can not be fully trusted. Therefore, we selected 6 models that had the best metrics for validation, and blindly voted for the best model.

After rolling out the model in production, we continued the experiments and came to the conclusion that it is better to minimize not per-pixel L1-loss, but perceptual loss. To calculate it, you need to run the network prediction and the source photo through the VGG-16 network, take the attribute maps on the lower layers and compare them according to MSE. This approach paints more areas and helps to get a more colorful picture.

Conclusions and Conclusion

Unet is a cool model. In the first segmentation problem, we encountered a problem in training and working with high-resolution images, so we use In-Place BatchNorm. In the second task (Inpainting), instead of the usual convolution, we used Partial Convolution, this helped to achieve better results. In the colorization problem for Unet, we added a small discriminator network that fined the generator for an unrealistic looking image and used perceptual loss.

The second conclusion is that accessors are important. And not only at the stage of marking pictures before training, but also for validating the final result, because in problems of painting defects or colorization, you still need to validate the result with the help of a person. We give the user three photos: the original one with the removed defects, colorized with the removed defects, and just the colorized photo in case the algorithm for finding and painting defects is wrong.

We took some photos of the Military Album project and processed them with our neural networks. Here are the results obtained:

And here you can see them in the original resolution and at each stage of processing.