Skeletal animation on the GPU

Unity recently introduced ECS. While studying it, I became interested in how animation and ECS could be made to work together. While searching, I came across an interesting technique that the team at Nordeus used in their demo for a talk at Unite Austin 2017.

Unite Austin 2017 - Massive Battle in the Spellsouls Universe.

The talk covers many interesting solutions, but today we will discuss baking skeletal animation into a texture so that it can be played back later.

Why go to all this trouble, you ask?

The Nordeus team drew a large number of animated objects of the same type on screen at once: skeletons, swordsmen. With the traditional approach (SkinnedMeshRenderer plus Animation/Animator), this would mean more draw calls and extra CPU load for computing the animation. To solve these problems, they moved the animation to the GPU, more precisely into the vertex shader.

The approach interested me, and I decided to figure it out in more detail. Since I couldn't find any articles on the topic, I dug into the code. This article, along with my own take on solving the problem, grew out of that study.

So let's cut the elephant into pieces:

- Getting animation keys from clips

- Saving data to texture

- Mesh preparation

- Shader

- Putting it all together

Getting animation keys from animation clips

From the SkinnedMeshRenderer component we get the array of bones and the mesh. The Animation component provides the list of available animations. For each clip, we must save, frame by frame, the transformation matrices of all the mesh's bones. In other words, we record the character's pose at each point in time.

We allocate a two-dimensional array to hold the data. One dimension is the frame rate multiplied by the clip length in seconds, i.e. the number of frames in the clip; the other is the total number of bones in the mesh:

var boneMatrices = new Matrix4x4[Mathf.CeilToInt(frameRate * clip.length), renderer.bones.Length];

In the following example, we step through the clip's frames one by one and save the matrices:

// get the legacy AnimationState for this clip and make sure it is active
var animationState = animation[clip.name];
animationState.enabled = true;
animationState.weight = 1f;
var totalFramesInClip = boneMatrices.GetLength(0);
// iterate over all frames in the clip
for (var frameIndex = 0; frameIndex < totalFramesInClip; ++frameIndex)
{
    // normalize the time: 0 = start of the clip, 1 = end
    var normalizedTime = (float) frameIndex / totalFramesInClip;
    // set the time of the frame being sampled and pose the skeleton
    animationState.normalizedTime = normalizedTime;
    animation.Sample();
    // iterate over all bones
    for (var boneIndex = 0; boneIndex < renderer.bones.Length; boneIndex++)
    {
        // compute the bone's transformation matrix for this frame
        var matrix = renderer.bones[boneIndex].localToWorldMatrix *
                     renderer.sharedMesh.bindposes[boneIndex];
        // store the matrix
        boneMatrices[frameIndex, boneIndex] = matrix;
    }
}
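To see why each sampled bone matrix is multiplied by its bind pose, here is a minimal Python sketch using plain nested lists instead of Unity's Matrix4x4. It assumes the mesh root sits at the world origin, so the bind pose is simply the inverse of the bone's localToWorld matrix at bind time; the helper functions are hypothetical. In the rest pose the product is the identity and the vertex stays where it is; when the bone moves, the vertex moves with it.

```python
# Sketch: why the sampled bone matrix is multiplied by the bind pose.
# Assumption: mesh root at the world origin, so the bind pose is the
# inverse of the bone's localToWorld matrix at bind time.

def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(x, y, z):
    """4x4 translation matrix (column-vector convention, as in Unity)."""
    return [[1, 0, 0, x],
            [0, 1, 0, y],
            [0, 0, 1, z],
            [0, 0, 0, 1]]

def transform_point(m, p):
    """Apply a 4x4 matrix to a 3D point (w = 1)."""
    v = [p[0], p[1], p[2], 1]
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(3)]

# The bone rests at (0, 1, 0); its bind pose is the inverse translation.
bone_at_rest = translation(0, 1, 0)
bind_pose = translation(0, -1, 0)

# Rest pose: the skinning matrix is the identity, the vertex does not move.
skin_rest = matmul(bone_at_rest, bind_pose)

# Sampled frame: the bone moved up by 0.5, so the vertex moves by 0.5 too.
bone_in_frame = translation(0, 1.5, 0)
skin_frame = matmul(bone_in_frame, bind_pose)

vertex = [0.2, 1.0, 0.0]
moved = transform_point(skin_frame, vertex)
```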

The matrices are 4x4, but for these affine bone transforms the last row is always (0, 0, 0, 1). As a small optimization we can therefore skip it, which reduces the amount of data transferred between the CPU and the GPU.

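$$
\begin{pmatrix}
a_{00} & a_{01} & a_{02} & a_{03} \\
a_{10} & a_{11} & a_{12} & a_{13} \\
a_{20} & a_{21} & a_{22} & a_{23} \\
0 & 0 & 0 & 1
\end{pmatrix}
$$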
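A tiny Python sketch of the round trip, with hypothetical helper names: only the first three rows are stored, and the fourth is rebuilt as (0, 0, 0, 1) when reading. This saves a quarter of the data per bone per frame.

```python
# Sketch: store only the first three rows of an affine 4x4 matrix and
# rebuild the implied last row (0, 0, 0, 1) when reading it back.

def pack_rows(m):
    """Return the three rows that are actually stored (12 floats)."""
    if m[3] != [0, 0, 0, 1]:
        raise ValueError("only affine matrices can drop the last row")
    return [list(row) for row in m[:3]]

def unpack_rows(rows):
    """Rebuild the full 4x4 matrix from the three stored rows."""
    return [list(r) for r in rows] + [[0, 0, 0, 1]]

m = [[1.0, 0.0, 0.0, 2.0],
     [0.0, 1.0, 0.0, 3.0],
     [0.0, 0.0, 1.0, 4.0],
     [0, 0, 0, 1]]

stored = pack_rows(m)
restored = unpack_rows(stored)
# 16 floats -> 12 floats per bone per frame
saving = 1 - (len(stored) * 4) / 16
```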

Saving data to texture

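To calculate the texture size, we multiply the total number of frames across all animation clips by the number of bones and by the number of matrix rows stored per bone (we agreed to save only the first 3 rows). Each row is four floats and fits into one RGBA pixel, so the product is the number of pixels required.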

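// each bone stores 3 matrix rows per frame, one row per RGBA pixel
const int MATRIX_ROWS_COUNT = 3;
var dataSize = numberOfBones * numberOfKeyFrames * MATRIX_ROWS_COUNT;
// compute the texture width and height: a square power-of-two texture;
// the square root is rounded up so that size * size >= dataSize
var size = Mathf.NextPowerOfTwo(Mathf.CeilToInt(Mathf.Sqrt(dataSize)));
var texture = new Texture2D(size, size, TextureFormat.RGBAFloat, false)
{
    wrapMode = TextureWrapMode.Clamp,
    filterMode = FilterMode.Point,
    anisoLevel = 0
};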
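The sizing rule can be checked with a small Python sketch. The function names are hypothetical, and `next_power_of_two` imitates Unity's `Mathf.NextPowerOfTwo`. Note that the square root has to be rounded up: truncating it can produce a texture that is too small. For example, 18 pixels would give floor(sqrt(18)) = 4 and a 4x4 texture with only 16 pixels.

```python
import math

MATRIX_ROWS_COUNT = 3  # rows stored per bone per frame, one RGBA pixel each

def next_power_of_two(n):
    """Smallest power of two >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()

def texture_size(number_of_bones, number_of_key_frames):
    """Side of a square power-of-two texture that fits all matrix rows."""
    data_size = number_of_bones * number_of_key_frames * MATRIX_ROWS_COUNT
    # math.isqrt floors the root; round up so that side * side >= data_size
    side = math.isqrt(data_size)
    if side * side < data_size:
        side += 1
    return next_power_of_two(side)
```

For instance, 40 bones and 90 frames need 10,800 pixels, which fit into a 128x128 texture.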

Now we write the data into the texture. For each clip, we save the transformation matrices frame by frame, using the following layout: clips are stored one after another; each clip is a sequence of frames, each frame is a sequence of bones, and each bone holds 3 matrix rows.

Clip0[Frame0[Bone0[row0,row1,row2]...BoneN[row0,row1,row2]]...FrameM[Bone0[row0,row1,row2]...]]...ClipK[...]
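The layout can also be written as a formula for the pixel index. The symbols are introduced here for illustration: $B$ is the number of bones, $F_k$ the number of frames in clip $k$, $f$ the frame, $b$ the bone, and $r \in \{0, 1, 2\}$ the matrix row.

$$
\text{clipOffset}_c = \sum_{k=0}^{c-1} 3 B F_k, \qquad
\text{index}(c, f, b, r) = \text{clipOffset}_c + 3 B f + 3 b + r
$$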

Here is the code that saves it:

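// one Color (RGBA float) per pixel; each pixel holds one matrix row
var textureColor = new Color[texture.width * texture.height];
// pixel index where the current clip starts
var clipOffset = 0;
for (var clipIndex = 0; clipIndex < sampledBoneMatrices.Count; clipIndex++)
{
    var framesCount = sampledBoneMatrices[clipIndex].GetLength(0);
    for (var keyframeIndex = 0; keyframeIndex < framesCount; keyframeIndex++)
    {
        // pixel offset of this frame inside the clip
        var frameOffset = keyframeIndex * numberOfBones * 3;
        for (var boneIndex = 0; boneIndex < numberOfBones; boneIndex++)
        {
            var index = clipOffset + frameOffset + boneIndex * 3;
            var matrix = sampledBoneMatrices[clipIndex][keyframeIndex, boneIndex];
            // store the first three rows; the last row (0, 0, 0, 1) is implied
            textureColor[index + 0] = matrix.GetRow(0);
            textureColor[index + 1] = matrix.GetRow(1);
            textureColor[index + 2] = matrix.GetRow(2);
        }
    }
    // the next clip starts right after all frames of this one
    clipOffset += framesCount * numberOfBones * 3;
}
texture.SetPixels(textureColor);
// upload to the GPU without updating mipmaps; the texture stays readable
texture.Apply(false, false);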
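The same packing order can be sketched in plain Python for experimenting outside Unity. Each "pixel" is a 4-tuple standing in for an RGBA float color, and all names are hypothetical. Note that the clip offset must advance past every frame of a clip before the next clip is written, and that the start offset of each clip is what the CPU later needs to address it.

```python
ROWS = 3  # matrix rows stored per bone

def pack_clips(clips, number_of_bones, texture_side):
    """clips: list of clips; clip[frame][bone] is a 4x4 matrix (list of rows).
    Returns the flat pixel list and the starting pixel index of each clip."""
    pixels = [(0.0, 0.0, 0.0, 0.0)] * (texture_side * texture_side)
    clip_starts = []
    clip_offset = 0
    for clip in clips:
        clip_starts.append(clip_offset)
        for frame_index, frame in enumerate(clip):
            frame_offset = frame_index * number_of_bones * ROWS
            for bone_index in range(number_of_bones):
                index = clip_offset + frame_offset + bone_index * ROWS
                matrix = frame[bone_index]
                for row in range(ROWS):
                    pixels[index + row] = tuple(matrix[row])
        # advance past every frame of this clip
        clip_offset += len(clip) * number_of_bones * ROWS
    return pixels, clip_starts

def translation_x(x):
    """Translation along X; used here only to tag matrices with an id."""
    return [[1, 0, 0, x], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

# two clips (2 frames and 1 frame), two bones; x encodes clip/frame/bone
clips = [
    [[translation_x(100 * c + 10 * f + b) for b in range(2)] for f in range(n)]
    for c, n in enumerate([2, 1])
]
pixels, clip_starts = pack_clips(clips, number_of_bones=2, texture_side=8)
```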

Mesh preparation

We add extra sets of texture coordinates in which we store, for each vertex, the indices of the bones it is bound to and the weight of each bone's influence on that vertex.

Unity's BoneWeight structure allows up to 4 bones per vertex. Below is the code that writes this data into UV channels: bone indices go to UV1, weights to UV2.

var boneWeights = mesh.boneWeights;
var boneIds = new List<Vector4>(mesh.vertexCount);
var boneInfluences = new List<Vector4>(mesh.vertexCount);
for (var i = 0; i < mesh.vertexCount; i++)
{
    var bw = boneWeights[i];
    // up to 4 bone indices per vertex (stored as floats in the UV channel)
    boneIds.Add(new Vector4(bw.boneIndex0, bw.boneIndex1, bw.boneIndex2, bw.boneIndex3));
    // the matching influence weights
    boneInfluences.Add(new Vector4(bw.weight0, bw.weight1, bw.weight2, bw.weight3));
}
// UV1 = bone indices, UV2 = bone weights
mesh.SetUVs(1, boneIds);
mesh.SetUVs(2, boneInfluences);
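A Python sketch of the same split, with a hypothetical dataclass standing in for Unity's BoneWeight. The bone indices end up as floats in a UV channel; 32-bit floats represent integers exactly up to 2^24, so casting them back to int in the shader is safe for any realistic bone count.

```python
from dataclasses import dataclass

@dataclass
class BoneWeight:
    """Hypothetical stand-in for Unity's BoneWeight (up to 4 influences)."""
    boneIndex0: int
    boneIndex1: int
    boneIndex2: int
    boneIndex3: int
    weight0: float
    weight1: float
    weight2: float
    weight3: float

def pack_bone_weights(bone_weights):
    """Split per-vertex BoneWeight data into two vec4 channels (UV1, UV2)."""
    bone_ids, bone_influences = [], []
    for bw in bone_weights:
        bone_ids.append((float(bw.boneIndex0), float(bw.boneIndex1),
                         float(bw.boneIndex2), float(bw.boneIndex3)))
        bone_influences.append((bw.weight0, bw.weight1, bw.weight2, bw.weight3))
    return bone_ids, bone_influences

# one vertex bound to bones 3 and 7 with weights 0.75 / 0.25
ids, influences = pack_bone_weights([BoneWeight(3, 7, 0, 0, 0.75, 0.25, 0.0, 0.0)])
```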

Shader

The shader's main task is to find the transformation matrix of the bone bound to a vertex and multiply the vertex position by that matrix. For this it needs the extra UV sets with bone indices and weights. It also needs the offset of the current frame, which changes over time and is passed in from the CPU.

// frameOffset = clipOffset + frameIndex * bonesCount * 3, computed on the CPU side
// boneIndex: index of the bone the vertex is bound to, read from UV1
int index = frameOffset + boneIndex * 3;

This gives us the index of the matrix's first row; the second and third rows are at +1 and +2. What remains is to convert the one-dimensional index into normalized texture coordinates, which requires the texture size: the column is the index modulo the width, the row is the index divided by the width, and adding 0.5 targets the center of the pixel.

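// convert a linear pixel index into normalized UV coordinates of the pixel center;
// returned as float4 because tex2Dlod expects (u, v, 0, mipLevel)
inline float4 IndexToUV(int index, float2 size)
{
    return float4(((float)((int)(index % size.x)) + 0.5) / size.x,
                  ((float)((int)(index / size.x)) + 0.5) / size.y,
                  0,
                  0);
}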
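The index-to-UV mapping can be checked in Python (function names are hypothetical). The half-pixel offset places each lookup exactly at a texel center, so with point filtering the inverse mapping recovers the original index.

```python
def index_to_uv(index, width, height):
    """Linear pixel index -> normalized UV of the pixel center (mirrors IndexToUV)."""
    x = index % width
    y = index // width
    return ((x + 0.5) / width, (y + 0.5) / height)

def uv_to_index(u, v, width, height):
    """Inverse mapping, as point filtering resolves it: UV -> pixel index."""
    x = int(u * width)
    y = int(v * height)
    return y * width + x
```

For example, in an 8x8 texture index 9 is column 1, row 1, which maps to UV (0.1875, 0.1875).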

Having read the rows, we assemble the matrix, not forgetting the last row, which is always (0, 0, 0, 1).

// _AnimationTexture: the sampler2D holding the baked animation (illustrative name)
float4 row0 = tex2Dlod(_AnimationTexture, IndexToUV(index + 0, animationTextureSize));
float4 row1 = tex2Dlod(_AnimationTexture, IndexToUV(index + 1, animationTextureSize));
float4 row2 = tex2Dlod(_AnimationTexture, IndexToUV(index + 2, animationTextureSize));
// the omitted last row is restored
float4 row3 = float4(0, 0, 0, 1);
return float4x4(row0, row1, row2, row3);

Several bones can influence the same vertex. The resulting matrix is the sum of the matrices of all influencing bones, each multiplied by its weight.

// linear blend skinning: bones (from UV1) holds up to 4 bone indices,
// boneInfluences (from UV2) the matching weights
float4x4 m0 = CreateMatrix(frameOffset, bones.x) * boneInfluences.x;
float4x4 m1 = CreateMatrix(frameOffset, bones.y) * boneInfluences.y;
float4x4 m2 = CreateMatrix(frameOffset, bones.z) * boneInfluences.z;
float4x4 m3 = CreateMatrix(frameOffset, bones.w) * boneInfluences.w;
return m0 + m1 + m2 + m3;
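A Python sketch of the weighted blend and its application to a vertex (helper names are hypothetical). When the weights sum to 1, the blended matrix keeps its last row at (0, 0, 0, 1), so it is still a valid affine transform. Here a vertex influenced half by a bone moved +2 along X and half by a bone that stayed put moves by +1.

```python
def scale_matrix(m, w):
    """Multiply every element of a 4x4 matrix by a scalar weight."""
    return [[w * v for v in row] for row in m]

def add_matrices(a, b):
    """Element-wise sum of two 4x4 matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def blend_matrices(matrices, weights):
    """Linear blend skinning: weighted sum of up to four bone matrices."""
    result = [[0.0] * 4 for _ in range(4)]
    for m, w in zip(matrices, weights):
        result = add_matrices(result, scale_matrix(m, w))
    return result

def transform_point(m, p):
    """Apply a 4x4 matrix to a 3D point (w = 1)."""
    v = [p[0], p[1], p[2], 1.0]
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(3)]

def translation(x, y, z):
    """4x4 translation matrix."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]

skin = blend_matrices([translation(2.0, 0.0, 0.0), translation(0.0, 0.0, 0.0)],
                      [0.5, 0.5])
moved = transform_point(skin, [1.0, 0.0, 0.0])
```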

Once we have the matrix, we multiply the vertex position by it. This moves every vertex into the character's pose for the current frame; changing the frame over time animates the character.

Putting it all together

To draw the objects we use Graphics.DrawMeshInstancedIndirect, passing it the prepared mesh and material. The material must also receive the animation texture, the texture size, and an array with the current frame offset for each object. In addition, we pass each object's position and rotation. How to apply the position and rotation on the shader side is described in [article].

In the Update method, we advance the time elapsed since the start of the animation by Time.deltaTime.

To compute the frame index, we normalize the time by dividing it by the clip length. The frame index within the clip is then the normalized time multiplied by the number of frames. The offset in the texture is the start offset of the current clip plus the current frame index multiplied by the amount of data stored per frame (bonesCount * 3 pixels).

var offset = clipStart + frameIndex * bonesCount * 3.0f;

That's about it: after passing all the data to the shader, we call Graphics.DrawMeshInstancedIndirect with the prepared mesh and material.
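The CPU-side offset computation, sketched in Python. The looping behavior (keeping only the fractional part of the normalized time), the clamp to the last frame, and all names are assumptions for illustration, not necessarily what the project does.

```python
import math

ROWS = 3  # matrix rows stored per bone

def frame_offset(elapsed, clip_length, frames_in_clip, clip_start, bones_count,
                 loop=True):
    """Pixel offset of the current frame in the animation texture."""
    normalized = elapsed / clip_length
    if loop:
        # assumption: a looping clip, so only the fractional part matters
        normalized -= math.floor(normalized)
    else:
        # assumption: a one-shot clip holds its last frame
        normalized = min(normalized, 1.0)
    frame_index = min(int(normalized * frames_in_clip), frames_in_clip - 1)
    return clip_start + frame_index * bones_count * ROWS
```

For a 2-second, 60-frame clip of a 20-bone mesh starting at pixel 1200, an elapsed time of 2.5 s wraps to frame 15, i.e. offset 1200 + 15 * 60 = 2100.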

Conclusions

Testing this technique on a machine with a 1050 graphics card showed roughly a twofold performance increase.

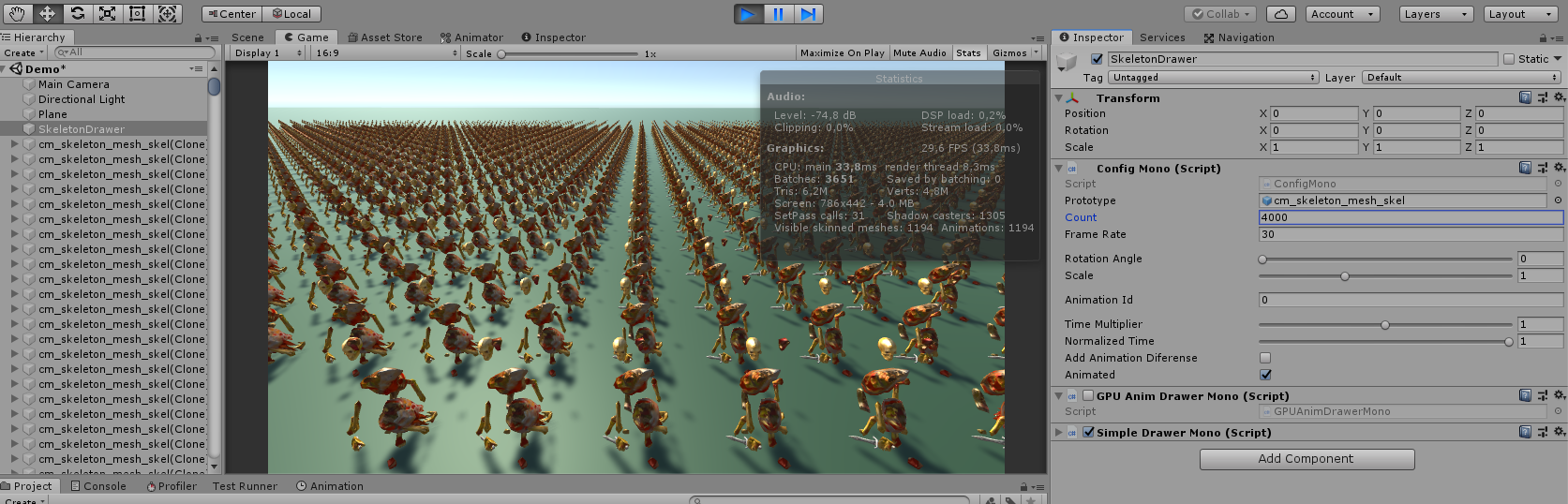

Animation of 4000 objects of the same type on the CPU

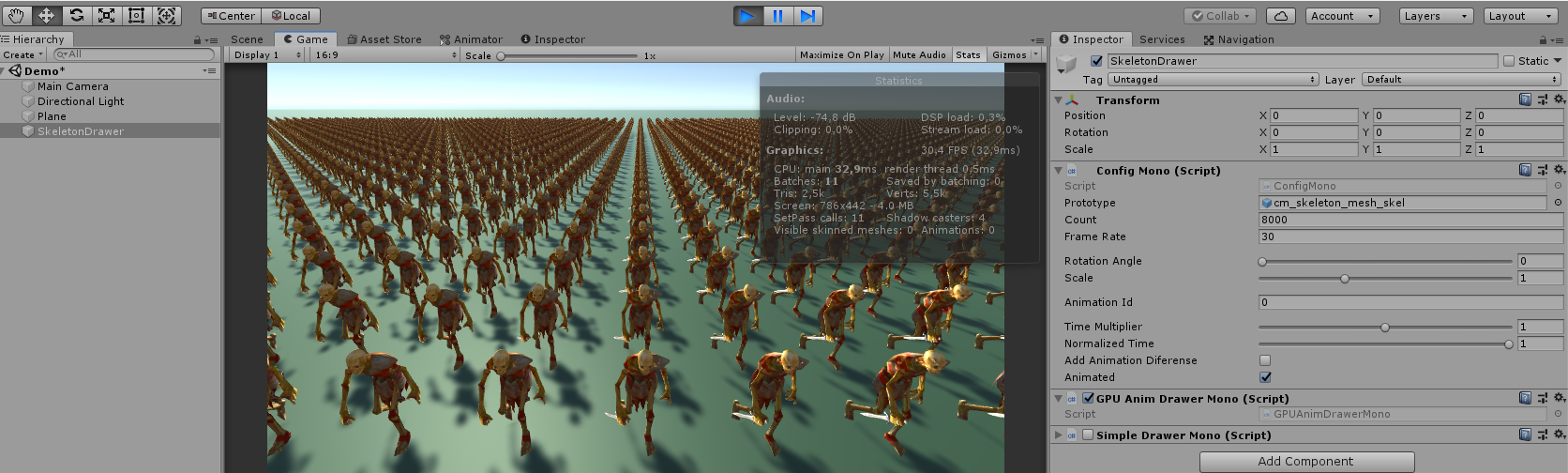

Animation of 8000 objects of the same type on the GPU

However, testing the same scene on a 15-inch MacBook Pro with integrated graphics shows the opposite result: the GPU version loses badly (by about 2-3x), which is not surprising.

GPU animation is one more tool you can use in your application. But like any tool, it should be used wisely and only where it fits.


[GitHub project code]

Thanks for your attention.

PS: I am new to Unity and don't know all the subtleties, so the article may contain inaccuracies. I hope to fix them with your help and understand the topic better.