From the epicenter of Google Cloud Next '19: CEO field notes

If you want to know where the wind is blowing, head to the very heart of the hurricane.

That was roughly the feeling of attending Google Cloud Next '19, where for three days developers, product managers, data specialists and other bright minds shared the hottest news in application development and architecture, security, cost management, data analysis, hybrid cloud, ML and AI, serverless computing and more: 30,000 attendees, tracks covering industries and technical areas, and almost non-stop talks. What a buzz!

Here is some of the most important Google Cloud news for 2019-2020, drawn from 122 announcements that cannot be missed and based on notes by Vlad Flax, CEO of OWOX, who attended the conference.

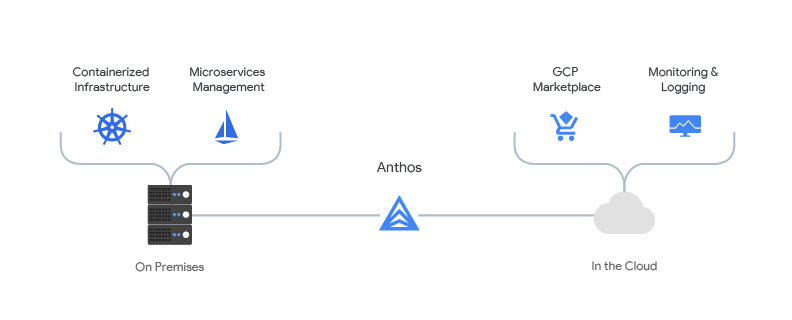

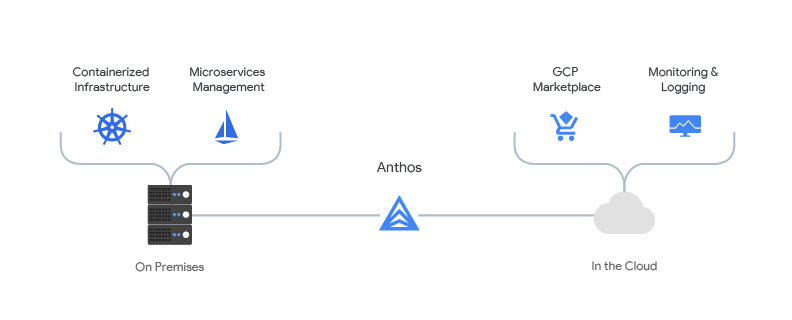

Products: Anthos (formerly Cloud Services Platform)

The product is still in closed beta, but developers are already eager to try Anthos, which lets them build and run hybrid applications regardless of the environment. On premises or in the cloud, you get the same level of security and ease of access.

Thanks to containers and microservices, your virtual machines, servers and services can run anywhere in the world (and possibly on the Moon).

Anthos includes:

- GKE On-Prem, the key element, which handles local orchestration of the container infrastructure

- Istio, for microservice management

- GCP, for cloud capabilities

Although all the technical components are open source, the combination still comes at a price: from $10,000 per month. The Anthos video presentation is available here.

Products: serverless but still slightly cloudy Cloud Run (BETA)

Another treat for developers, which finally answers the question of how to run applications effortlessly without the dreaded choice between containers and serverless.

Cloud Run, still in beta, is aimed at web application developers. It tackles both the scaling, maintenance and server configuration burden of running your own infrastructure and the limits that serverless platforms place on procedures, libraries and languages (essentially, crossing a bulldog with a rhino).

The idea is to take the best of both approaches: you deploy a stateless HTTP container, written in any language and packaged with all the libraries and dependencies it needs, to a fully managed cloud environment where server capacity scales automatically as traffic changes. Sounds complicated?

In practice, it shortens time-to-value during development: no more deliberating over, choosing and pricing the server side, and container development is easy to manage and adapt with Cloud Code. Build, deploy, get results.
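To make the "stateless HTTP container" idea concrete, here is a minimal sketch of the kind of service Cloud Run is meant to host, written in Python with only the standard library. It assumes the container contract in which the platform passes the listening port through the `PORT` environment variable (with 8080 as a local fallback here). The handler keeps no state between requests, so any number of copies can be started or stopped as traffic changes. The names `HelloHandler`, `listen_port` and `serve` are illustrative, not part of any Google API.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET with the same response; nothing is stored between requests."""

    def do_GET(self):
        body = b"Hello from a stateless container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def listen_port() -> int:
    # Assumption: the platform injects the port via the PORT env var; 8080 is a local fallback.
    return int(os.environ.get("PORT", "8080"))


def serve() -> None:
    # Bind to all interfaces so traffic routed into the container can reach the server.
    HTTPServer(("0.0.0.0", listen_port()), HelloHandler).serve_forever()
```

Packaged into a container image whose entry point calls `serve()`, the same code runs unchanged on a laptop or in the cloud; how many copies run at once is left entirely to the platform.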

API Management: Hybridization

Apigee hybrid (beta) is a new deployment option for the Apigee API management platform that lets you manage APIs across hybrid and multi-cloud environments: in your own data center or in a public cloud.

The release also includes many updates that expand access to core Google Cloud functions, as well as the security alerting APIs.

Once again, this makes life easier for businesses that want to take the shortest and safest path everywhere.

Data management: anywhere, on anything

Data management in Google Cloud follows one principle: no matter how much data you have, managing, moving and accessing it should be simple. Accordingly, this year the following service improvements were announced:

- Cloud SQL for Microsoft SQL Server (early access)

- Cloud SQL for PostgreSQL now supports version 11

- Multi-region replication for Cloud Bigtable

Many security and access features were updated as well. For example, management of the Cloud Filestore file system has been improved, unified coordination of database access is now far more convenient, and full access using AWS V4 signing keys will become available once beta testing ends.
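The "V4 keys" above refer to Version 4 request signing, the HMAC-based scheme that Cloud Storage accepts for interoperability with Amazon S3 tooling. As a hedged illustration of how such a signature is built, the sketch below derives a signing key by chaining HMAC-SHA256 over the date, region and service, then signs a string with it. The defaults follow the AWS naming (`AWS4` prefix, `aws4_request` terminator); Cloud Storage's own variant is assumed to use `GOOG4`-prefixed names instead, so check the official signing documentation before relying on exact strings. The function names are hypothetical.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret: str, date: str, region: str, service: str,
                       prefix: str = "AWS4", terminator: str = "aws4_request") -> bytes:
    """Chain HMAC-SHA256 over the credential scope: date -> region -> service -> terminator."""
    k_date = _hmac_sha256((prefix + secret).encode("utf-8"), date)  # date as YYYYMMDD
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, terminator)


def sign(signing_key: bytes, string_to_sign: str) -> str:
    """Return the hex-encoded HMAC-SHA256 signature of the string to sign."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
```

Because the derived key is scoped to a single date, region and service, the raw secret never travels with the request, and a leaked derived key is only useful within that narrow scope.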

For business, this is good news again: managing your data from anywhere in the world becomes faster and simpler, and the operational, software and hardware base of Google Cloud keeps growing.

You won't need to do anything superhuman to start using these services. After all, another Google commandment is to simplify everything for non-technical users while maintaining proper security and privacy.

Networking

Depending on the type of organization, you can choose one of four schemes that deliver the fastest traffic path to internal or external IP addresses, at anything from local to global scope, for your Google Cloud services, BigQuery or third-party SaaS services via VPN or Cloud Interconnect. By the way, is 100 Gbps a lot or a little for Interconnect? A connection of that capacity will delight any data giant!

In addition, Traffic Director is already in beta: a fully managed traffic control plane for service meshes, backed by an SLA and designed to handle the hundreds of products and services at your disposal.

Whatever other application you might dream up, chances are Google has already released a beta of it.

Security and Identity

More than 17 updates are designed to protect you from accidental data loss, vandalism, phishing and more, with additional protection for individual perimeters and detection of malware, crypto mining and DDoS attacks based on Google's proprietary models.

Who knows more about security flaws than a company that collects data professionally?

Analytics: the most important and interesting

Cloud Data Fusion (beta) is a fully managed, cloud-native data integration service designed to ingest and integrate data from multiple sources into BigQuery. All ETL processes and data structures, whatever their complexity and type, are presented in a single interface with strong protection, convenient real-time data visualization and integration with other services.

And all of this is "for businesses of all sizes" and "without technical knowledge".

Even without deep technical expertise, thanks to standardized procedures, Data Fusion builds and maintains your data as a data lake, an ideal "universal soup" for analytics.

But in OWOX's experience, a data lake still leaves a lot of work to do before you have an analytical structure that serves the business's day-to-day needs.

BigQuery Data Transfer Service now supports more than 100 SaaS applications, automating scheduled data collection without a single line of code.

But if you need custom solutions, you will still have to sweat.

Google also makes it easy to migrate data of any size from platforms such as Teradata, Amazon Redshift and Amazon S3.

Cloud Dataflow SQL (in public alpha) and Dataflow Flexible Resource Scheduling (FlexRS, in beta) let you build scheduled pipelines using familiar standard SQL, for both batch and stream processing. The system itself "decides" which method to apply in each case.

But there is still no substitute for collecting data with an understanding of the specifics of marketing data.

Although Google positions many of its products as not requiring "technical" specialists, updates, solutions and services for data specialists are the air the corporation breathes.

The next three updates (all in beta) are aimed squarely at these hard-core technologies.

Cloud Dataproc autoscaling relieves specialists of the pain of manually adding and removing nodes in Hadoop and Spark clusters on Google Cloud Platform. Dataproc Kerberos TLC enables Hadoop secure mode on Dataproc through Kerberos support in the API.

Presto fans, meanwhile, can use the Dataproc Presto job type through the native Dataproc API and write simple queries against disparate sources such as Cloud Storage and Hive metastores.
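To give a feel for what autoscaling does, here is a simplified sketch of memory-based scaling in the spirit of Dataproc autoscaling: the gap between pending and available YARN memory is converted into whole workers, damped by scale-up and scale-down factors, and clamped to the cluster's minimum and maximum size. The formula, function name and parameters are illustrative assumptions, not the service's exact algorithm.

```python
import math


def worker_delta(pending_mb: float, available_mb: float, mb_per_worker: float,
                 current: int, min_workers: int, max_workers: int,
                 scale_up_factor: float = 1.0, scale_down_factor: float = 1.0) -> int:
    """Return workers to add (positive) or remove (negative) for one scaling decision."""
    if mb_per_worker <= 0:
        raise ValueError("mb_per_worker must be positive")
    # Memory shortfall (positive) or surplus (negative), expressed in workers.
    raw = (pending_mb - available_mb) / mb_per_worker
    if raw > 0:
        delta = math.ceil(raw * scale_up_factor)  # round up so pending work fits
    else:
        delta = int(raw * scale_down_factor)  # truncate toward zero: remove conservatively
    # Keep the cluster within its configured bounds.
    target = max(min_workers, min(max_workers, current + delta))
    return target - current
```

For example, `worker_delta(16384, 0, 8192, 2, 2, 10)` asks for two more workers, while a large memory surplus never shrinks the cluster below `min_workers`. Rounding up on scale-up and toward zero on scale-down biases the sketch toward keeping jobs running rather than saving every last node.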

Meet the beta of BigQuery BI Engine, which promises fast presentation of big data analysis with controlled access to data held in RAM, sub-second query responses, high stability, a simplified BI architecture and "smart" performance tuning.

Despite years of development, Google still offers many "simplified" models, leaving niches for companies such as OWOX BI that are not afraid to build more precise solutions for business needs. Also, the cost of BigQuery BI Engine is still somewhat off-putting compared to Amazon Kinesis and ClickHouse.

Connected Sheets is a new type of spreadsheet designed for data experts working with BigQuery. It is a genuinely convenient tool that specialists all over the world have dreamed of: previously you would at least have had to configure a separate API and fiddle with queries, but now everything fits neatly into a single interface.

The full BigQuery ML library is now available, with new model types invoked through SQL queries.

The TensorFlow model import alpha now lets you call TensorFlow models directly from BigQuery to build classification and prediction models right inside the data warehouse. You can also train, configure and run a DNN model directly from the standard BigQuery SQL interface. Overall, this technology received a lot of attention.
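As a hedged illustration of what "models invoked using SQL queries" looks like, the Python helpers below only assemble BigQuery ML statements as strings: `CREATE MODEL` with a `model_type` option for training (for example `logistic_reg` or `dnn_classifier`), `model_type = 'tensorflow'` with a `model_path` for importing a saved TensorFlow model, and `ML.PREDICT` for scoring. The dataset, table, bucket and helper names are hypothetical; check the BigQuery ML reference for the options each model type supports.

```python
def create_model_sql(model: str, model_type: str, label: str, training_table: str) -> str:
    """Train a model with BigQuery ML directly from a SELECT over the training data."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', input_label_cols = ['{label}']) AS\n"
        f"SELECT * FROM `{training_table}`"
    )


def import_tensorflow_model_sql(model: str, gcs_path: str) -> str:
    """Register a TensorFlow SavedModel stored in Cloud Storage as a BigQuery ML model."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = 'tensorflow', model_path = '{gcs_path}')"
    )


def predict_sql(model: str, input_table: str) -> str:
    """Score new rows with ML.PREDICT over a subquery of input rows."""
    return f"SELECT * FROM ML.PREDICT(MODEL `{model}`, (SELECT * FROM `{input_table}`))"
```

For instance, `create_model_sql("my_dataset.churn_model", "logistic_reg", "churned", "my_dataset.sessions")` yields a training statement that could be pasted into the BigQuery console or passed to a client such as `google.cloud.bigquery.Client().query(...)`; everything stays in SQL, with no separate ML infrastructure.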

AI & ML: towards the target customer

Google has prepared more than 14 updates that simplify building and configuring AI applications with Google Cloud services. Among them are 4 newly developed APIs covering a wide range of tasks: from auto-tagging videos with object tracking and automatic annotation of streaming video, to Russian and Japanese language support for natural language processing on Google's ML infrastructure.

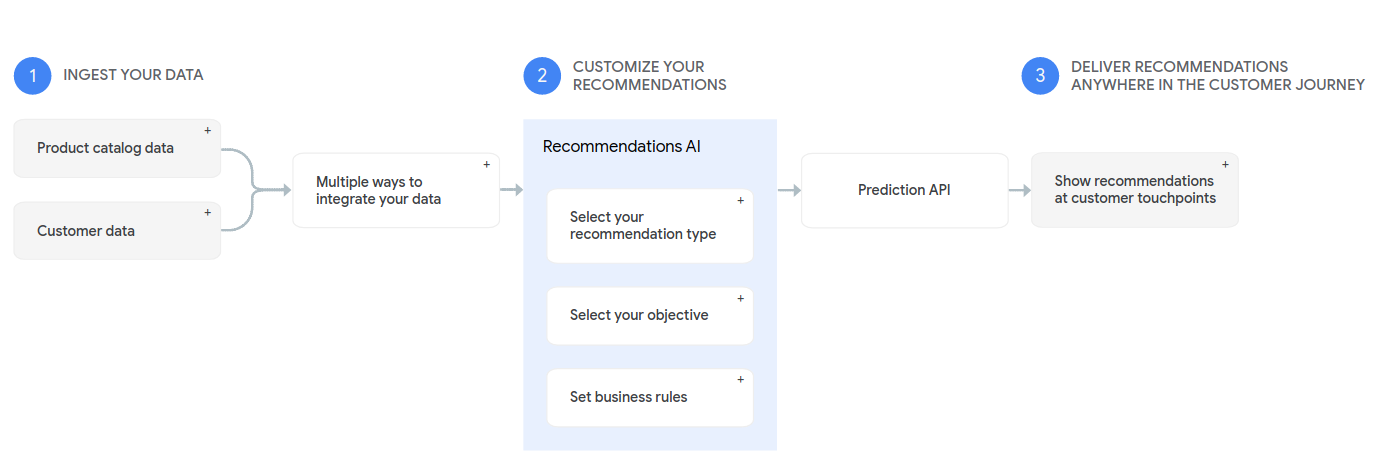

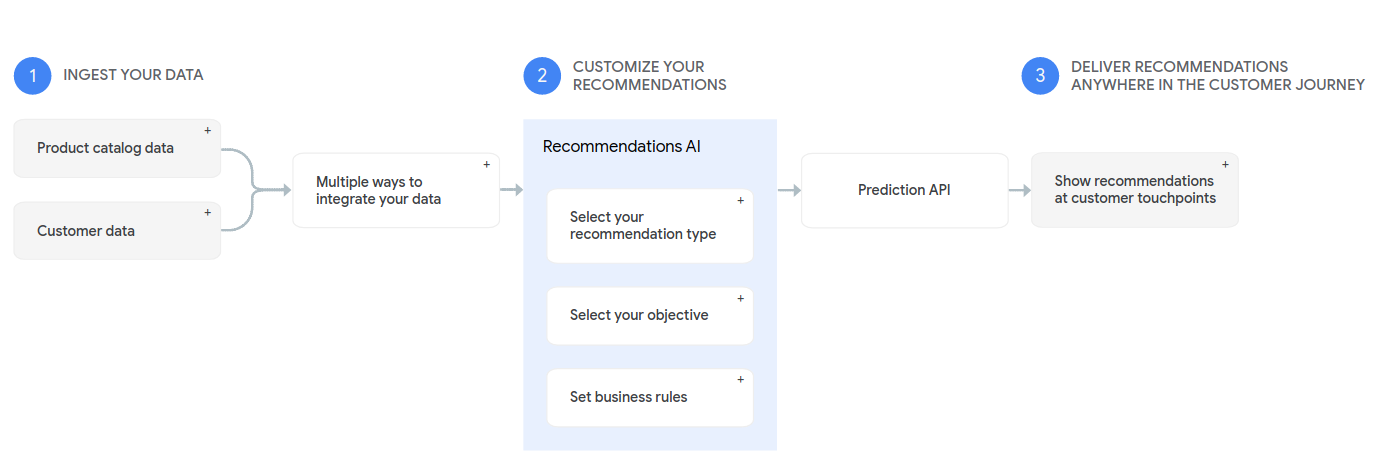

But the beta of Recommendations AI is the most appealing.

This upcoming service promises to be a personalized adviser for your customers at any business scale, while remaining fully GDPR-compliant.

Here are the results Google promises with Recommendations AI:

- +90% click-through rate on recommendations

- +40% conversions from recommendations

- +50% profit from recommendations

- +5% total profit from visits

Here is how it will work:

What could go wrong? If a business does not know its customers, it will not be able to make the right recommendation at any step. And the strength of recommendations multiplied by marketing pressure can produce exactly the opposite result.

Still, any tool needs to be tested before it is judged, and we look forward to trying it out!

Main findings

The "no technical specialists needed" trend and the "for technical specialists only" trend have merged, and it is now important for a business to stay as close as possible to all relevant technologies so it can pick the ones that match its goals.

This is evidenced by the number of C-level executives from the corporate world who attended the conference, because the requirements, pains and needs of these leaders are the driving force of advanced business and technology worldwide.

Above all, Google Cloud invests in making complex tasks simple to implement and in simplifying the lives of its main users, large corporations and enterprises, recognizing the non-trivial and non-linear nature of modern data processing challenges. More and more, it is turning programming and data work into a virtuoso, non-technical art performed by the Google Cloud product orchestra.